Unleash the Potential of Your Logs with Logs Pipelines

With Logs Pipelines, you can transform logs to suit your querying and aggregation needs before they get stored in the database.

Once you start sending logs to SigNoz, you can search their text and create basic reports based on standard fields. For example, you can find logs whose text contains a particular user's ID, or plot the count of error logs by service name.

However, the text in your logs typically contains a lot of other valuable information, and querying or aggregating on that information while it is still buried in raw text can be inefficient or outright impossible.

Logs pipelines enable you to unleash the full potential of your logs by pre-processing them to suit your needs before they get stored. This unlocks valuable log-based queries and dashboards that wouldn't be possible otherwise.

You can also use log pre-processing to achieve other goals, such as cleaning sensitive information from your logs or normalizing field names across services.
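To make the last two goals concrete, here is a minimal Python sketch of what such a pre-processing step does conceptually. It is not how SigNoz implements pipelines, which are configured in the UI. The email pattern, the `FIELD_ALIASES` mapping, the `preprocess` helper, and the sample record are all hypothetical and chosen only for illustration.

```python
import re

# Hypothetical pattern and field aliases, chosen only for illustration.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
FIELD_ALIASES = {"userId": "user_id", "uid": "user_id"}


def preprocess(record: dict) -> dict:
    """Return a copy of the record with emails masked and field names normalized."""
    cleaned = {}
    for key, value in record.items():
        if isinstance(value, str):
            # Replace anything that looks like an email address.
            value = EMAIL_PATTERN.sub("[REDACTED]", value)
        # Map service-specific field names onto one shared name.
        cleaned[FIELD_ALIASES.get(key, key)] = value
    return cleaned


# Made-up log record, not taken from a real system.
record = {"body": "login failed for jane@example.com", "userId": "42"}
print(preprocess(record))
```

Because this happens before storage, every query and dashboard sees the cleaned, consistently named fields.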

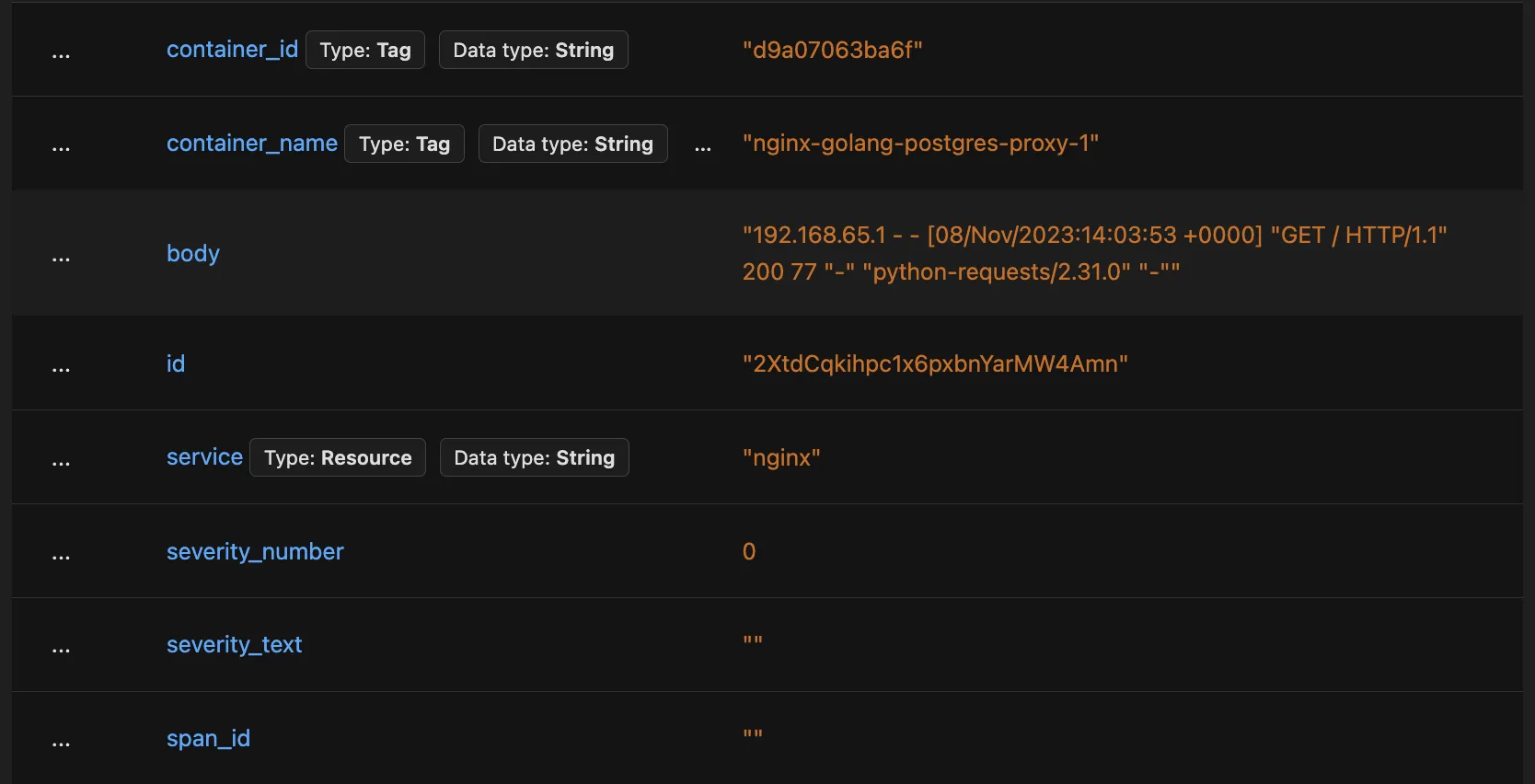

A raw Nginx log

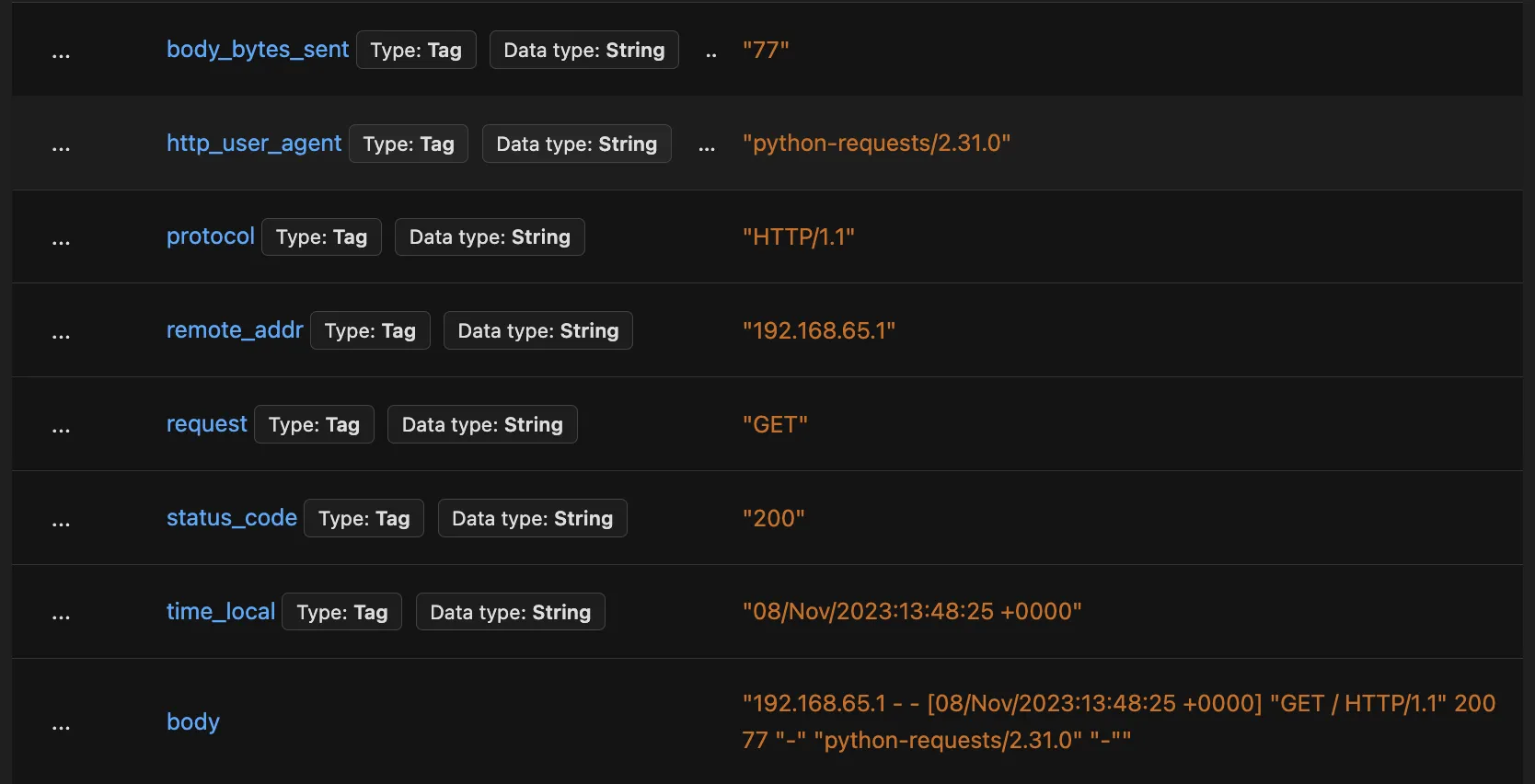

A parsed Nginx log

A report of requests by User Agent, made possible by a pipeline that extracts User Agent from Nginx text logs
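The raw-to-parsed transformation can be sketched in Python as follows. This only illustrates the idea and is not SigNoz's implementation. It assumes the logs use Nginx's `combined` access log format; the regex, the `parse_nginx_line` helper, and the sample lines are hypothetical and written for this example.

```python
import re
from collections import Counter

# Regex for Nginx's "combined" access log format (an assumption about the input).
NGINX_COMBINED = re.compile(
    r'(?P<remote_addr>\S+) \S+ (?P<remote_user>\S+) \[(?P<time_local>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<body_bytes_sent>\d+) '
    r'"(?P<http_referer>[^"]*)" "(?P<http_user_agent>[^"]*)"'
)


def parse_nginx_line(line: str) -> dict:
    """Extract named fields from one access log line; return {} if it does not match."""
    match = NGINX_COMBINED.match(line)
    return match.groupdict() if match else {}


# Made-up sample lines, not taken from a real server.
sample_lines = [
    '203.0.113.7 - - [10/Oct/2023:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 612 "-" "curl/8.1.2"',
    '203.0.113.9 - - [10/Oct/2023:13:55:40 +0000] "GET /api HTTP/1.1" 500 0 "-" "Mozilla/5.0"',
    '203.0.113.7 - - [10/Oct/2023:13:56:02 +0000] "POST /api HTTP/1.1" 201 87 "-" "curl/8.1.2"',
]

# Once the user agent is its own field, counting requests per user agent is trivial.
requests_by_user_agent = Counter(
    parse_nginx_line(line).get("http_user_agent", "unknown") for line in sample_lines
)
print(requests_by_user_agent)
```

Without the extracted field, the same report would require a text match over every raw log line at query time.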

While you can achieve these goals by changing your application code or the configuration of your OpenTelemetry collectors, logs pipelines let you do it in the SigNoz UI without having to ship changes or redeploy your applications and collectors.