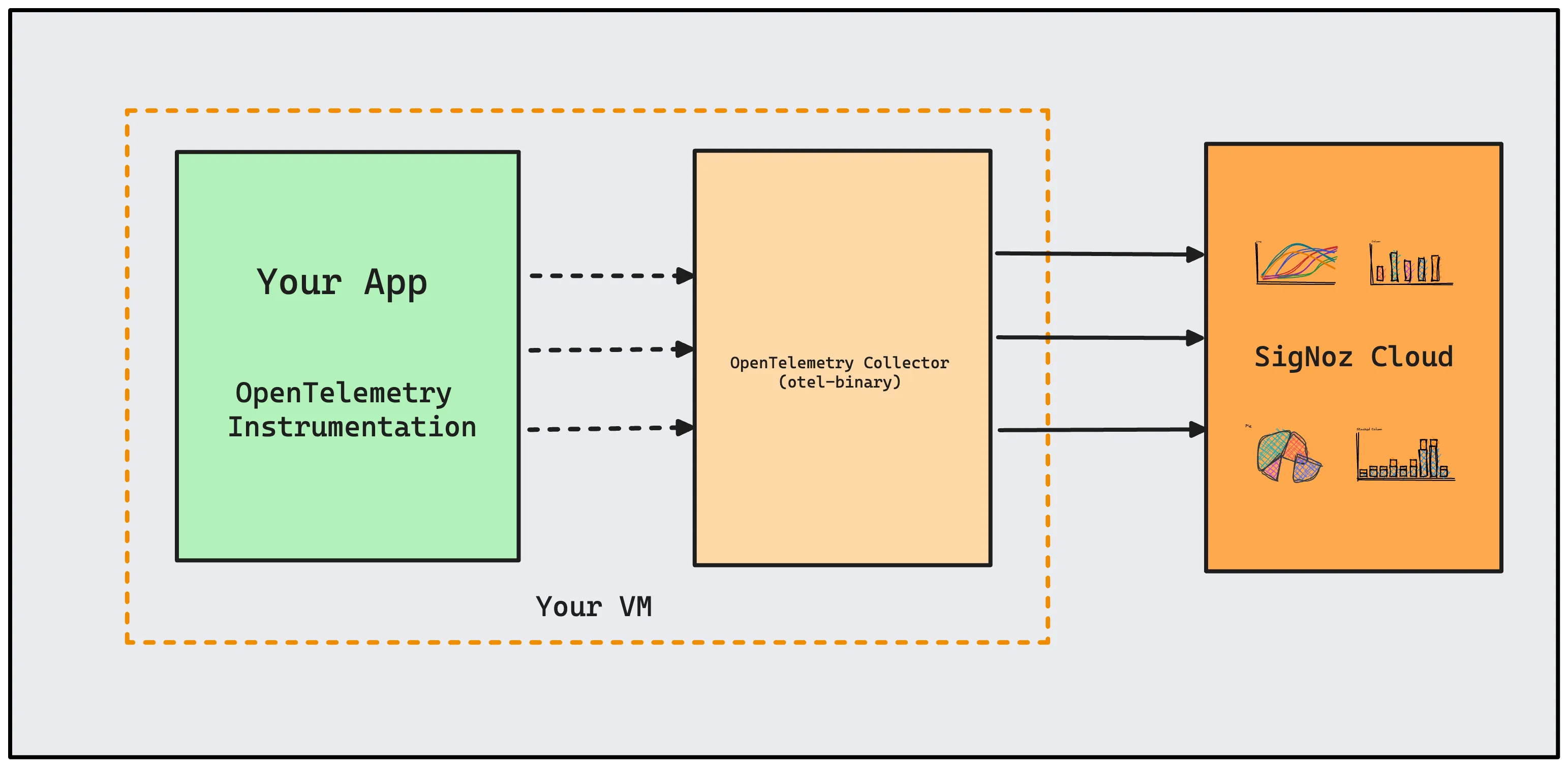

This tutorial shows how to deploy the OpenTelemetry Collector binary as an agent that collects telemetry data (traces, metrics, and logs) generated by applications, most likely running in the same virtual machine (VM).

The agent can also collect data from other VMs in the same cluster, data center, or region. In that scenario, however, the binary is not recommended; use a container or deployment instead, since those can be scaled easily.

In this guide, you will also learn how to set up a hostmetrics receiver to collect metrics from the VM and view them in SigNoz.

Setup Otel Collector as agent

OpenTelemetry-instrumented applications in a VM can send data to the OTel Collector binary agent running in the same VM. The agent can then be configured to send that data to SigNoz Cloud.

Here are the steps to set up OpenTelemetry binary as an agent.

- Download the otel-collector tar.gz for your architecture (Linux, amd64)

wget https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v0.143.1/otelcol-contrib_0.143.1_linux_amd64.tar.gz

- Extract the otel-collector tar.gz to the otelcol-contrib folder

mkdir otelcol-contrib && tar xvzf otelcol-contrib_0.143.1_linux_amd64.tar.gz -C otelcol-contrib

- Create config.yaml in the otelcol-contrib folder and paste the YAML configuration below into it.

receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318
  hostmetrics:
    collection_interval: 60s
    scrapers:
      cpu: {}
      disk: {}
      load: {}
      filesystem: {}
      memory: {}
      network: {}
      paging: {}
      process:
        mute_process_name_error: true
        mute_process_exe_error: true
        mute_process_io_error: true
      processes: {}
  prometheus:
    config:
      global:
        scrape_interval: 60s
      scrape_configs:
        - job_name: otel-collector-binary
          static_configs:
            - targets:
              # - localhost:8888 # Uncomment to scrape Collector's own metrics
processors:
  batch:
    send_batch_size: 1000
    timeout: 10s
  # Ref: https://github.com/open-telemetry/opentelemetry-collector-contrib/blob/main/processor/resourcedetectionprocessor/README.md
  resourcedetection:
    detectors: [env, system] # Before system detector, include ec2 for AWS, gcp for GCP and azure for Azure.
    # Using OTEL_RESOURCE_ATTRIBUTES envvar, env detector adds custom labels.
    timeout: 2s
    system:
      hostname_sources: [os] # alternatively, use [dns,os] for setting FQDN as host.name and os as fallback
extensions:
  health_check: {}
  zpages: {}
exporters:
  otlp:
    endpoint: "ingest.<region>.signoz.cloud:443"
    tls:
      insecure: false
    headers:
      "signoz-ingestion-key": "<your-ingestion-key>"
  debug:
    verbosity: normal
service:
  telemetry:
    metrics:
      level: basic
  extensions: [health_check, zpages]
  pipelines:
    metrics:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
    metrics/internal:
      receivers: [prometheus, hostmetrics]
      processors: [resourcedetection, batch]
      exporters: [otlp]
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
    logs:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]

Replace <region> with your SigNoz Cloud region and <your-ingestion-key> with your SigNoz ingestion key.

- Once the configuration is in place, run the collector service from the otelcol-contrib folder with the following command:

./otelcol-contrib --config ./config.yaml

Run in background

To run the OTel Collector process in the background:

./otelcol-contrib --config ./config.yaml &> otelcol-output.log & echo "$!" > otel-pid

The above command sends the collector's output to the otelcol-output.log file and writes the process ID of the background collector process to the otel-pid file.

To view the logs of the background process:

tail -f -n 50 otelcol-output.log

This prints the last 50 lines of otelcol-output.log and, because of -f, keeps following the file as new lines are written.

To stop the collector when it is running in the background, use the following command:

kill "$(< otel-pid)"
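The background-run commands above follow a common PID-file pattern. As a minimal sketch of that pattern, the hypothetical helpers below (start_bg, is_running, and stop_bg are illustrative names, not part of the collector) wrap the same steps and add a liveness check before the process is killed.

```shell
#!/bin/sh
# Sketch only: start_bg, is_running, and stop_bg are hypothetical helpers.

start_bg() {
  # $1 = PID file, $2 = log file, remaining arguments = command to run
  pid_file="$1"
  log_file="$2"
  shift 2
  "$@" >"$log_file" 2>&1 &
  echo "$!" >"$pid_file"
}

is_running() {
  # kill -0 sends no signal; it only checks that the process exists
  [ -f "$1" ] && kill -0 "$(cat "$1")" 2>/dev/null
}

stop_bg() {
  # Kill the recorded process if it is still alive, then remove the PID file
  if is_running "$1"; then
    kill "$(cat "$1")"
  fi
  rm -f "$1"
}

# Example with the collector (run from the otelcol-contrib folder):
#   start_bg otel-pid otelcol-output.log ./otelcol-contrib --config ./config.yaml
#   is_running otel-pid && echo "collector is running"
#   stop_bg otel-pid
```

Checking the PID with kill -0 before stopping avoids an error when the collector has already exited and the otel-pid file is stale.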

- Download the otel-collector tar.gz for your architecture (Linux, arm64)

wget https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v0.143.1/otelcol-contrib_0.143.1_linux_arm64.tar.gz

- Extract the otel-collector tar.gz to the otelcol-contrib folder

mkdir otelcol-contrib && tar xvzf otelcol-contrib_0.143.1_linux_arm64.tar.gz -C otelcol-contrib

- Create config.yaml in the otelcol-contrib folder and paste the YAML configuration below into it.

receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318
  hostmetrics:
    collection_interval: 60s
    scrapers:
      cpu: {}
      disk: {}
      load: {}
      filesystem: {}
      memory: {}
      network: {}
      paging: {}
      process:
        mute_process_name_error: true
        mute_process_exe_error: true
        mute_process_io_error: true
      processes: {}
  prometheus:
    config:
      global:
        scrape_interval: 60s
      scrape_configs:
        - job_name: otel-collector-binary
          static_configs:
            - targets:
              # - localhost:8888 # Uncomment to scrape Collector's own metrics
processors:
  batch:
    send_batch_size: 1000
    timeout: 10s
  # Ref: https://github.com/open-telemetry/opentelemetry-collector-contrib/blob/main/processor/resourcedetectionprocessor/README.md
  resourcedetection:
    detectors: [env, system] # Before system detector, include ec2 for AWS, gcp for GCP and azure for Azure.
    # Using OTEL_RESOURCE_ATTRIBUTES envvar, env detector adds custom labels.
    timeout: 2s
    system:
      hostname_sources: [os] # alternatively, use [dns,os] for setting FQDN as host.name and os as fallback
extensions:
  health_check: {}
  zpages: {}
exporters:
  otlp:
    endpoint: "ingest.<region>.signoz.cloud:443"
    tls:
      insecure: false
    headers:
      "signoz-ingestion-key": "<your-ingestion-key>"
  debug:
    verbosity: normal
service:
  telemetry:
    metrics:
      level: basic
  extensions: [health_check, zpages]
  pipelines:
    metrics:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
    metrics/internal:
      receivers: [prometheus, hostmetrics]
      processors: [resourcedetection, batch]
      exporters: [otlp]
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
    logs:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]

Replace <region> with your SigNoz Cloud region and <your-ingestion-key> with your SigNoz ingestion key.

- Once the configuration is in place, run the collector service from the otelcol-contrib folder with the following command:

./otelcol-contrib --config ./config.yaml

Run in background

To run the OTel Collector process in the background:

./otelcol-contrib --config ./config.yaml &> otelcol-output.log & echo "$!" > otel-pid

The above command sends the collector's output to the otelcol-output.log file and writes the process ID of the background collector process to the otel-pid file.

To view the logs of the background process:

tail -f -n 50 otelcol-output.log

This prints the last 50 lines of otelcol-output.log and, because of -f, keeps following the file as new lines are written.

To stop the collector when it is running in the background, use the following command:

kill "$(< otel-pid)"

- Download the otel-collector tar.gz for your architecture (macOS, amd64)

wget https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v0.143.1/otelcol-contrib_0.143.1_darwin_amd64.tar.gz

- Extract the otel-collector tar.gz to the otelcol-contrib folder

mkdir otelcol-contrib && tar xvzf otelcol-contrib_0.143.1_darwin_amd64.tar.gz -C otelcol-contrib

- Create config.yaml in the otelcol-contrib folder and paste the YAML configuration below into it.

receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318
  hostmetrics:
    collection_interval: 60s
    scrapers:
      cpu: {}
      disk: {}
      load: {}
      filesystem: {}
      memory: {}
      network: {}
      paging: {}
      processes: {}
  prometheus:
    config:
      global:
        scrape_interval: 60s
      scrape_configs:
        - job_name: otel-collector-binary
          static_configs:
            - targets:
              # - localhost:8888 # Uncomment to scrape Collector's own metrics
processors:
  batch:
    send_batch_size: 1000
    timeout: 10s
  # Ref: https://github.com/open-telemetry/opentelemetry-collector-contrib/blob/main/processor/resourcedetectionprocessor/README.md
  resourcedetection:
    detectors: [env, system] # Before system detector, include ec2 for AWS, gcp for GCP and azure for Azure.
    # Using OTEL_RESOURCE_ATTRIBUTES envvar, env detector adds custom labels.
    timeout: 2s
    system:
      hostname_sources: [os] # alternatively, use [dns,os] for setting FQDN as host.name and os as fallback
extensions:
  health_check: {}
  zpages: {}
exporters:
  otlp:
    endpoint: "ingest.<region>.signoz.cloud:443"
    tls:
      insecure: false
    headers:
      "signoz-ingestion-key": "<your-ingestion-key>"
  debug:
    verbosity: normal
service:
  telemetry:
    metrics:
      level: basic
  extensions: [health_check, zpages]
  pipelines:
    metrics:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
    metrics/internal:
      receivers: [prometheus, hostmetrics]
      processors: [resourcedetection, batch]
      exporters: [otlp]
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
    logs:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]

Replace <region> with your SigNoz Cloud region and <your-ingestion-key> with your SigNoz ingestion key.

- Once the configuration is in place, run the collector service from the otelcol-contrib folder with the following command:

./otelcol-contrib --config ./config.yaml

Run in background

To run the OTel Collector process in the background:

./otelcol-contrib --config ./config.yaml &> otelcol-output.log & echo "$\!" > otel-pid

The above command sends the collector's output to the otelcol-output.log file and writes the process ID of the background collector process to the otel-pid file.

To view the logs of the background process:

tail -f -n 50 otelcol-output.log

This prints the last 50 lines of otelcol-output.log and, because of -f, keeps following the file as new lines are written.

To stop the collector when it is running in the background, use the following command:

kill "$(< otel-pid)"

- Download the otel-collector tar.gz for your architecture (macOS, arm64)

wget https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v0.143.1/otelcol-contrib_0.143.1_darwin_arm64.tar.gz

- Extract the otel-collector tar.gz to the otelcol-contrib folder

mkdir otelcol-contrib && tar xvzf otelcol-contrib_0.143.1_darwin_arm64.tar.gz -C otelcol-contrib

- Create config.yaml in the otelcol-contrib folder and paste the YAML configuration below into it.

receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318
  hostmetrics:
    collection_interval: 60s
    scrapers:
      cpu: {}
      disk: {}
      load: {}
      filesystem: {}
      memory: {}
      network: {}
      paging: {}
      processes: {}
  prometheus:
    config:
      global:
        scrape_interval: 60s
      scrape_configs:
        - job_name: otel-collector-binary
          static_configs:
            - targets:
              # - localhost:8888 # Uncomment to scrape Collector's own metrics
processors:
  batch:
    send_batch_size: 1000
    timeout: 10s
  # Ref: https://github.com/open-telemetry/opentelemetry-collector-contrib/blob/main/processor/resourcedetectionprocessor/README.md
  resourcedetection:
    detectors: [env, system] # Before system detector, include ec2 for AWS, gcp for GCP and azure for Azure.
    # Using OTEL_RESOURCE_ATTRIBUTES envvar, env detector adds custom labels.
    timeout: 2s
    system:
      hostname_sources: [os] # alternatively, use [dns,os] for setting FQDN as host.name and os as fallback
extensions:
  health_check: {}
  zpages: {}
exporters:
  otlp:
    endpoint: "ingest.<region>.signoz.cloud:443"
    tls:
      insecure: false
    headers:
      "signoz-ingestion-key": "<your-ingestion-key>"
  debug:
    verbosity: normal
service:
  telemetry:
    metrics:
      level: basic
  extensions: [health_check, zpages]
  pipelines:
    metrics:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
    metrics/internal:
      receivers: [prometheus, hostmetrics]
      processors: [resourcedetection, batch]
      exporters: [otlp]
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
    logs:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]

Replace <region> with your SigNoz Cloud region and <your-ingestion-key> with your SigNoz ingestion key.

- Once the configuration is in place, run the collector service from the otelcol-contrib folder with the following command:

./otelcol-contrib --config ./config.yaml

Run in background

To run the OTel Collector process in the background:

./otelcol-contrib --config ./config.yaml &> otelcol-output.log & echo "$\!" > otel-pid

The above command sends the collector's output to the otelcol-output.log file and writes the process ID of the background collector process to the otel-pid file.

To view the logs of the background process:

tail -f -n 50 otelcol-output.log

This prints the last 50 lines of otelcol-output.log and, because of -f, keeps following the file as new lines are written.

To stop the collector when it is running in the background, use the following command:

kill "$(< otel-pid)"

- Download the otel-collector tar.gz for your architecture (Windows, amd64) from the command line or from the releases page.

wget https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v0.143.1/otelcol-contrib_0.143.1_windows_amd64.tar.gz

- Extract the otel-collector tar.gz to the otelcol-contrib folder

mkdir otelcol-contrib

tar xvzf otelcol-contrib_0.143.1_windows_amd64.tar.gz -C otelcol-contrib

Upon successful extraction, change directory using

cd otelcol-contrib

To verify the successful setup of Otel Collector, run the following command

.\otelcol-contrib.exe --version

Expected output: otelcol-contrib version

- Create config.yaml in the otelcol-contrib folder and paste the YAML configuration below into it.

receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318
  hostmetrics:
    collection_interval: 60s
    scrapers:
      cpu: {}
      disk: {}
      load: {}
      filesystem: {}
      memory: {}
      network: {}
      paging: {}
      process:
        mute_process_name_error: true
        mute_process_exe_error: true
        mute_process_io_error: true
        mute_process_user_error: true
      processes: {}
  prometheus:
    config:
      global:
        scrape_interval: 60s
      scrape_configs:
        - job_name: otel-collector-binary
          static_configs:
            - targets:
              # - localhost:8888 # Uncomment to scrape Collector's own metrics
processors:
  batch:
    send_batch_size: 1000
    timeout: 10s
  # Ref: https://github.com/open-telemetry/opentelemetry-collector-contrib/blob/main/processor/resourcedetectionprocessor/README.md
  resourcedetection:
    detectors: [env, system] # Before system detector, include ec2 for AWS, gcp for GCP and azure for Azure.
    # Using OTEL_RESOURCE_ATTRIBUTES envvar, env detector adds custom labels.
    timeout: 2s
    system:
      hostname_sources: [os] # alternatively, use [dns,os] for setting FQDN as host.name and os as fallback
extensions:
  health_check: {}
  zpages: {}
exporters:
  otlp:
    endpoint: "ingest.<region>.signoz.cloud:443"
    tls:
      insecure: false
    headers:
      "signoz-ingestion-key": "<your-ingestion-key>"
  debug:
    verbosity: normal
service:
  telemetry:
    metrics:
      level: basic
  extensions: [health_check, zpages]
  pipelines:
    metrics:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
    metrics/internal:
      receivers: [prometheus, hostmetrics]
      processors: [resourcedetection, batch]
      exporters: [otlp]
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
    logs:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]

Replace <region> with your SigNoz Cloud region and <your-ingestion-key> with your SigNoz ingestion key.

- Once the configuration is in place, run the collector service from the otelcol-contrib directory with the following command:

.\otelcol-contrib.exe --config .\config.yaml

Test Sending Traces

OpenTelemetry collector binary should be able to forward all types of telemetry data received: traces, metrics, and logs, to SigNoz OTLP endpoint via gRPC.

Let's send sample traces to the otelcol using telemetrygen.

To install telemetrygen binary:

go install github.com/open-telemetry/opentelemetry-collector-contrib/cmd/telemetrygen@latest

To send trace data using telemetrygen, execute the command below:

telemetrygen traces --traces 1 --otlp-endpoint localhost:4317 --otlp-insecure

Output should look like this:

...
2023-03-15T11:04:38.967+0545 INFO channelz/funcs.go:340 [core][Channel #1] Channel Connectivity change to READY {"system": "grpc", "grpc_log": true}
2023-03-15T11:04:38.968+0545 INFO traces/traces.go:124 generation of traces isn't being throttled
2023-03-15T11:04:38.968+0545 INFO traces/worker.go:90 traces generated {"worker": 0, "traces": 1}
2023-03-15T11:04:38.969+0545 INFO traces/traces.go:87 stop the batch span processor
2023-03-15T11:04:38.983+0545 INFO channelz/funcs.go:340 [core][Channel #1] Channel Connectivity change to SHUTDOWN {"system": "grpc", "grpc_log": true}
2023-03-15T11:04:38.984+0545 INFO channelz/funcs.go:340 [core][Channel #1 SubChannel #2] Subchannel Connectivity change to SHUTDOWN {"system": "grpc", "grpc_log": true}
2023-03-15T11:04:38.984+0545 INFO channelz/funcs.go:340 [core][Channel #1 SubChannel #2] Subchannel deleted {"system": "grpc", "grpc_log": true}
2023-03-15T11:04:38.984+0545 INFO channelz/funcs.go:340 [core][Channel #1] Channel deleted {"system": "grpc", "grpc_log": true}
2023-03-15T11:04:38.984+0545 INFO traces/traces.go:79 stopping the exporter

If the SigNoz endpoint in the configuration is set correctly and accessible, you should be able to see the traces sent via OpenTelemetry collector in VM from telemetrygen in the SigNoz UI.

Prerequisites

- SigNoz application up and running

- SigNoz endpoint accessible from the VM

- Ports 4317, 4318, 8888, 1777, and 13133 available on the VM
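Before starting the collector, it can help to confirm that nothing else is already listening on these ports. The following is a minimal sketch, assuming bash (it relies on bash's /dev/tcp feature). The names port_in_use and check_ports are hypothetical helpers, and the port descriptions in the comment reflect the collector's usual defaults rather than anything stated in this guide.

```shell
#!/usr/bin/env bash
# Sketch only: port_in_use and check_ports are hypothetical helpers.

port_in_use() {
  # Succeeds if a TCP connection to 127.0.0.1:$1 can be opened,
  # which means some process is already listening on that port.
  (exec 3<>/dev/tcp/127.0.0.1/$1) 2>/dev/null
}

check_ports() {
  # Print one line per port: "<port> in use" or "<port> free"
  for port in "$@"; do
    if port_in_use "$port"; then
      echo "$port in use"
    else
      echo "$port free"
    fi
  done
}

# Usual defaults (assumption): 4317 OTLP gRPC, 4318 OTLP HTTP,
# 8888 collector's own metrics, 1777 pprof extension, 13133 health_check extension
check_ports 4317 4318 8888 1777 13133
```

A port reported as "in use" before the collector starts would need to be freed, or the matching endpoint in config.yaml changed.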

Installation

You can obtain the OpenTelemetry Collector binary from the assets of each release: open-telemetry/opentelemetry-collector-releases/releases. There are two ways to install from the release assets: the deb package, which runs as a systemd service, and the tar.gz archive, which provides a plain binary.

Systemd

Using the deb file, the OpenTelemetry Collector is installed as a systemd service, with a default configuration prepopulated at the /etc/otelcol-contrib path. This method is preferable if you want the OpenTelemetry Collector to always be running in the background.

To download the deb file of release version 0.143.1, use the command that matches your architecture (amd64 or arm64):


wget https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v0.143.1/otelcol-contrib_0.143.1_linux_amd64.deb

wget https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v0.143.1/otelcol-contrib_0.143.1_linux_arm64.deb

For a different OpenTelemetry Collector version, replace 0.143.1 with that version.

To install otelcol as a systemd service using dpkg, run the command that matches the downloaded file:

sudo dpkg -i otelcol-contrib_0.143.1_linux_amd64.deb

sudo dpkg -i otelcol-contrib_0.143.1_linux_arm64.deb

Plain Binary

Using the tar.gz release asset, we can extract the OpenTelemetry collector binary and default configuration at our desired path. You can run the binary directly with flags by using tools like screen or tmux.

- Download otel-collector tar.gz for your architecture

wget https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v0.143.1/otelcol-contrib_0.143.1_linux_amd64.tar.gz

- Extract otel-collector tar.gz to the otelcol-contrib folder

mkdir otelcol-contrib && tar xvzf otelcol-contrib_0.143.1_linux_amd64.tar.gz -C otelcol-contrib

Download otel-collector tar.gz for your architecture

wget https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v0.143.1/otelcol-contrib_0.143.1_linux_arm64.tar.gz

Extract otel-collector tar.gz to the otelcol-contrib folder

mkdir otelcol-contrib && tar xvzf otelcol-contrib_0.143.1_linux_arm64.tar.gz -C otelcol-contrib

Download otel-collector tar.gz for your architecture

wget https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v0.143.1/otelcol-contrib_0.143.1_darwin_amd64.tar.gz

Extract otel-collector tar.gz to the otelcol-contrib folder

mkdir otelcol-contrib && tar xvzf otelcol-contrib_0.143.1_darwin_amd64.tar.gz -C otelcol-contrib

Download otel-collector tar.gz for your architecture

wget https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v0.143.1/otelcol-contrib_0.143.1_darwin_arm64.tar.gz

Extract otel-collector tar.gz to the otelcol-contrib folder

mkdir otelcol-contrib && tar xvzf otelcol-contrib_0.143.1_darwin_arm64.tar.gz -C otelcol-contrib
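The four download commands above differ only in the operating system and architecture parts of the asset name. As a rough sketch, that choice can be derived from uname. The asset_name function is a hypothetical helper, VERSION is set to the release used in this guide, and Windows is not covered.

```shell
#!/bin/sh
# Sketch only: asset_name is a hypothetical helper, not part of the collector.

VERSION="0.143.1"

asset_name() {
  # $1 = output of `uname -s`, $2 = output of `uname -m`
  case "$1" in
    Linux)  os="linux" ;;
    Darwin) os="darwin" ;;
    *) echo "unsupported OS: $1" >&2; return 1 ;;
  esac
  case "$2" in
    x86_64|amd64)  arch="amd64" ;;
    aarch64|arm64) arch="arm64" ;;
    *) echo "unsupported architecture: $2" >&2; return 1 ;;
  esac
  echo "otelcol-contrib_${VERSION}_${os}_${arch}.tar.gz"
}

# Print the download URL for the current machine, if it is supported
if asset="$(asset_name "$(uname -s)" "$(uname -m)")"; then
  echo "https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v${VERSION}/${asset}"
fi
```

The printed URL can then be passed to wget, and the asset name to tar, in place of the hard-coded file names.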

OpenTelemetry Collector Configuration

Let's download the standalone configuration for the otelcol binary running in the VM:

wget https://raw.githubusercontent.com/SigNoz/benchmark/main/docker/standalone/config.yaml

Replace <IP of machine hosting SigNoz> with the address of your SigNoz instance in the configuration section shown below:

exporters:
  otlp:
    endpoint: '<IP of machine hosting SigNoz>:4317'
    tls:
      insecure: true

In the configuration above, we enable three receivers: OTLP, hostmetrics and prometheus.

The OTLP receiver is configured to receive all types of telemetry data: traces, metrics, and logs. This data is forwarded to SigNoz via the OTLP gRPC endpoint.

The hostmetrics receiver is configured to collect various metrics of the virtual machine, including metrics related to CPU, memory, disk, file system, network, and others.

The prometheus receiver is configured to collect the internal metrics of the otelcol. You can update it as needed to include additional scrape targets accessible from the VM or to remove existing targets.

OpenTelemetry Collector Usage

Copy the configuration file to the config path for your installation method, then follow the matching instructions to start, restart, and view the logs of the otelcol binary.

Systemd

To copy the updated config.yaml file:

sudo cp config.yaml /etc/otelcol-contrib/config.yaml

To restart otelcol with updated config:

sudo systemctl restart otelcol-contrib.service

To check the status of otelcol:

sudo systemctl status otelcol-contrib.service

To view logs of otelcol:

sudo journalctl -u otelcol-contrib.service

To stop otelcol:

sudo systemctl stop otelcol-contrib.service

Plain Binary

It is recommended to run the otelcol binary inside a terminal multiplexer such as tmux or screen, since plain binary usage is ephemeral.

To copy the updated config.yaml file:

cp config.yaml ./otelcol-contrib/config.yaml

To change into the otelcol-contrib folder:

cd otelcol-contrib

Once the configuration is in place, run the collector service from the otelcol-contrib folder with the following command:

./otelcol-contrib --config ./config.yaml

Run in background

To run the OTel Collector process in the background:

./otelcol-contrib --config ./config.yaml &> otelcol-output.log & echo "$!" > otel-pid

The above command sends the collector's output to the otelcol-output.log file and writes the process ID of the background collector process to the otel-pid file.


On macOS, run the collector from the otelcol-contrib folder with the same command:

./otelcol-contrib --config ./config.yaml

Run in background

To run the collector in the background on macOS, note the escaped ! in the command:

./otelcol-contrib --config ./config.yaml &> otelcol-output.log & echo "$\!" > otel-pid

As before, the output goes to the otelcol-output.log file and the process ID is written to the otel-pid file.


To view the last 50 lines of otelcol logs:

tail -f -n 50 otelcol-output.log

To stop otelcol:

kill "$(< otel-pid)"

Test Sending Traces

OpenTelemetry collector binary should be able to forward all types of telemetry data received: traces, metrics, and logs, to SigNoz OTLP endpoint via gRPC.

Let's send sample traces to the otelcol using telemetrygen.

To install telemetrygen binary:

go install github.com/open-telemetry/opentelemetry-collector-contrib/cmd/telemetrygen@latest

To send trace data using telemetrygen, execute the command below:

telemetrygen traces --traces 1 --otlp-endpoint localhost:4317 --otlp-insecure

The output should look like this:

...
2023-03-15T11:04:38.967+0545 INFO channelz/funcs.go:340 [core][Channel #1] Channel Connectivity change to READY {"system": "grpc", "grpc_log": true}
2023-03-15T11:04:38.968+0545 INFO traces/traces.go:124 generation of traces isn't being throttled
2023-03-15T11:04:38.968+0545 INFO traces/worker.go:90 traces generated {"worker": 0, "traces": 1}
2023-03-15T11:04:38.969+0545 INFO traces/traces.go:87 stop the batch span processor
2023-03-15T11:04:38.983+0545 INFO channelz/funcs.go:340 [core][Channel #1] Channel Connectivity change to SHUTDOWN {"system": "grpc", "grpc_log": true}
2023-03-15T11:04:38.984+0545 INFO channelz/funcs.go:340 [core][Channel #1 SubChannel #2] Subchannel Connectivity change to SHUTDOWN {"system": "grpc", "grpc_log": true}
2023-03-15T11:04:38.984+0545 INFO channelz/funcs.go:340 [core][Channel #1 SubChannel #2] Subchannel deleted {"system": "grpc", "grpc_log": true}
2023-03-15T11:04:38.984+0545 INFO channelz/funcs.go:340 [core][Channel #1] Channel deleted {"system": "grpc", "grpc_log": true}
2023-03-15T11:04:38.984+0545 INFO traces/traces.go:79 stopping the exporter

If the SigNoz endpoint in the configuration is set correctly and accessible, you should be able to see the traces sent via the OpenTelemetry collector in the VM from telemetrygen in the SigNoz UI.