A Comprehensive Guide to Model Monitoring in ML Production

In machine learning, keeping deployed models reliable and accurate matters as much as building them in the first place. Consider a self-driving car that gradually begins to misread road signs as traffic patterns or weather conditions change, or an e-commerce recommendation system that fails to keep up with changing user preferences and starts making irrelevant suggestions. Like these systems, your machine learning models require regular maintenance to function properly.

This guide delves into the intricacies of model monitoring, equipping you with the knowledge to maintain high-performing ML systems in production environments.

What is Model Monitoring in Machine Learning?

Monitoring machine learning models is like regularly checking the oil and tyre pressure in your car to ensure it runs smoothly and safely over time. The process includes monitoring different metrics and signals to guarantee that models uphold their accuracy, dependability, and efficiency as time progresses. Let us now look at some of the key components of a model monitoring system.

Data Quality Check

You need to ensure that the input data remains consistent and valid, and detect anomalies and inconsistencies that could impact model performance. This involves checking for missing values, outliers, duplicates, and sudden changes in data distribution. For example, a sudden influx of duplicate user records in a recommendation system could skew the model's predictions. Automated checks and validation rules help you identify and rectify these anomalies before they reach the model.

Performance Metrics Tracking

Monitor the key performance indicators (KPIs) to assess model effectiveness. KPIs include metrics such as accuracy, precision, and recall. Precision measures the proportion of true positives among all positive predictions, while recall measures the proportion of true positives among all actual positives. Taken together, precision and recall give a fuller picture of model performance than accuracy alone, particularly when the classes are imbalanced.
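As a minimal sketch of these two definitions, the snippet below computes precision and recall directly from counts of true positives, false positives, and false negatives. The function name and the sample labels are illustrative assumptions, not part of any library.

```python
def precision_recall(y_true, y_pred, positive=1):
    """Compute precision and recall for one positive class from two label lists."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Illustrative labels: 4 actual positives, 3 predicted positives, 2 of them correct
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 1, 1, 0, 0, 0]

precision, recall = precision_recall(y_true, y_pred)
print(f"precision={precision:.2f}, recall={recall:.2f}")
```

Here two of the three positive predictions are correct (precision 2/3), while only two of the four actual positives are found (recall 1/2), which shows how the two metrics can diverge.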

Drift Detection

Data drift occurs when the statistical properties of the input data change over time, while concept drift refers to changes in the relationship between the input data and the target variable. Take a spam detection model as an example: it may experience drift if the characteristics of spam emails change over time. To mitigate the effects of drift, retrain the model regularly on recent, representative data.

Resource Utilization Monitoring

Track computational resources, such as CPU and memory usage, as well as latency, to ensure the model runs efficiently. For example, if a recommendation system experiences increased latency during peak usage times, resource utilization data can help you identify the bottleneck. Possible remedies include scaling up the infrastructure or optimizing the model's code.
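One way to start tracking latency is to time each prediction call and summarize the measurements. The sketch below uses only the standard library; `timed_predict`, `summarize_latency`, and `dummy_predict` are hypothetical helper names, and the nearest-rank 95th percentile is just one reasonable choice of summary.

```python
import math
import statistics
import time


def timed_predict(predict_fn, batch):
    """Call predict_fn(batch) and return (result, elapsed milliseconds)."""
    start = time.perf_counter()
    result = predict_fn(batch)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return result, elapsed_ms


def summarize_latency(latencies_ms):
    """Summarize a non-empty list of latencies: mean, nearest-rank p95, and max."""
    ordered = sorted(latencies_ms)
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)
    return {
        "mean_ms": statistics.mean(ordered),
        "p95_ms": ordered[p95_index],
        "max_ms": ordered[-1],
    }


def dummy_predict(batch):
    # Hypothetical stand-in for a real model's predict call
    return [0 for _ in batch]


latencies = []
for _ in range(20):
    _, elapsed = timed_predict(dummy_predict, list(range(1000)))
    latencies.append(elapsed)

print(summarize_latency(latencies))
```

In a production setup these numbers would typically be exported to a metrics backend rather than printed, so that peak-hour latency can be compared against CPU and memory usage.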

Model monitoring differs from general system monitoring as it specifically focuses on ML model behaviour and performance rather than just infrastructure health. This distinction is a must-know for maintaining the integrity and reliability of ML systems in production.

| | Model Monitoring | Generic Monitoring |
|---|---|---|
| Focus | ML model behavior and performance | Infrastructure health and performance |
| Key Metrics | Prediction accuracy; drift detection; latency in prediction; feature importance changes; model explainability | CPU usage; memory usage; network traffic; disk I/O; uptime/downtime |
| Examples | Monitoring a fraud detection model; tracking the distribution of input features over time | Monitoring CPU and memory usage of a web server; tracking network traffic to identify bottlenecks |
| Tools | SigNoz with custom metrics; Prometheus with custom metrics; Seldon Core; Evidently AI; WhyLogs | SigNoz; Prometheus; Grafana; Nagios; New Relic |

Example: Implementing Basic Model Monitoring

Prerequisites

To run the following examples, you need Python installed on your system. Once Python is installed, open a terminal or command prompt and install the required libraries with pip:

pip install numpy scipy scikit-learn pandas matplotlib nltk

We have also used the logging library in our example. It is part of the Python standard library, so it needs no installation; the official Python documentation covers it in more detail.

Code Example

Here’s a basic example of setting up model monitoring in a Python script using logging and simple performance tracking:

import logging
import sys

import numpy as np
from sklearn.metrics import accuracy_score

# Configure logging to write INFO-level (and higher) messages to the console
logging.basicConfig(
    stream=sys.stdout,
    level=logging.INFO,
    format='%(asctime)s - %(name)s - %(levelname)s - %(message)s',
)
logger = logging.getLogger(__name__)


# Simulated model class definition with a predict method
class Model:
    def predict(self, X):
        # Randomly generate a 0/1 prediction for each input row
        return np.random.choice([0, 1], size=len(X))


# Input data validation function
def validate_input_data(X):
    # Check the type first: np.isnan is only meaningful on a numeric array
    if not isinstance(X, np.ndarray):
        logger.error('Input data is not a NumPy array')
        raise ValueError('Input data must be a NumPy array')
    if np.any(np.isnan(X)):
        logger.warning('Input data contains NaN values')


# Instantiate the simulated model
model = Model()

# Generate simulated test data
X_test = np.random.rand(100, 10)  # 100 samples, each with 10 features
y_test = np.random.choice([0, 1], size=100)  # 100 random true labels (0 or 1)

# Validate the input data
validate_input_data(X_test)

# Use the model to predict the labels for the test data
predictions = model.predict(X_test)

# Calculate the accuracy of the model's predictions
accuracy = accuracy_score(y_test, predictions)

# Log the accuracy (the logger already writes to the console, so no print is needed)
logger.info(f'Model accuracy: {accuracy:.2f}')

Explanation:

- First, we set up logging to capture and report model performance.

- Then we create a mock model and a random dataset for testing purposes.

- Next, we validate the input data: a non-NumPy input raises an error, and any NaN values are logged as a warning.

- Finally, we use the model to make predictions and log their accuracy.

In our code, we have logged accuracy metrics which helps in understanding how well the model is performing and if the model needs any adjustments or retraining.

Sample Output:

The above example shows how to set up a basic monitoring framework that logs accuracy and checks data for quality issues. It is just a foundational step towards more complex monitoring in production environments. In the run described here, the output indicates that the model's predictions are 48% accurate, which means that the model correctly predicted 48% of the labels in the test data. Because the model makes random predictions, an accuracy close to 50% is expected in a binary classification problem, and the exact figure varies from run to run.

Why is Model Monitoring Critical for ML Performance?

Model monitoring plays a crucial role in maintaining the effectiveness of ML systems. Here's why it's essential:

- Ensures model accuracy: Regular monitoring detects performance degradation early, so you can adjust or retrain the model before reliability suffers. For example, continuously tracking accuracy and other performance metrics signals when a model's predictions are no longer as accurate as they were at initial deployment.

- Addresses data drift: Identifies shifts in input data distributions that can impact model predictions, ensuring the model remains relevant and accurate.

- Maintains compliance: Maintaining compliance helps you to ensure that your models adhere to regulatory requirements and ethical AI practices. It also safeguards the models against biases and unfair outcomes. Maintaining compliance includes monitoring for discriminatory patterns or ensuring the model's decisions remain fair and justifiable.

- Detects concept drift: Identifies changes in the relationship between the input features and the target variable. Unlike data drift, where the statistical properties of the inputs themselves change, concept drift means the same inputs now map to different outcomes, so a model can degrade even when the input distributions look stable. For example, if a model was trained on data where certain features had specific relationships with the target, significant changes in those relationships in new data indicate concept drift. Let’s take a code-based example of drift detection for better clarity.

Example: Detecting Data Drift

To understand the importance of monitoring for data drift, consider the following example where we use statistical tests to detect changes in the input data distribution:

import numpy as np
from scipy.stats import ks_2samp

# Generate simulated training data: 1000 samples, each with 10 features
X_train = np.random.rand(1000, 10)

# Generate simulated test data, scaled by 1.1 to introduce slight drift
X_test = np.random.rand(1000, 10) * 1.1


# Function to detect data drift using the Kolmogorov-Smirnov (KS) test
def detect_data_drift(X_train, X_test):
    p_values = []  # List to store the p-value for each feature
    for i in range(X_train.shape[1]):
        # Perform the KS test for feature i in the training and test datasets
        _, p_value = ks_2samp(X_train[:, i], X_test[:, i])
        p_values.append(p_value)
    # Return the p-values as a NumPy array
    return np.array(p_values)


# Detect data drift by comparing the training and test data distributions
p_values = detect_data_drift(X_train, X_test)

# Data drift is flagged if any feature's p-value is less than 0.05
drift_detected = np.any(p_values < 0.05)

# Print the result of the data drift detection
if drift_detected:
    print('Data drift detected')
else:
    print('No data drift detected')

Explanation:

- First, we import the necessary libraries: numpy for numerical operations and ks_2samp from scipy.stats for the Kolmogorov-Smirnov test.

- X_train represents training data, while X_test represents new incoming data. A slight drift is introduced in X_test by multiplying it by 1.1.

- The detect_data_drift function performs the Kolmogorov-Smirnov test for each feature to compare distributions between X_train and X_test.

- The detect_data_drift function is called, and the returned p-values are compared against 0.05. Each p-value indicates whether there is a significant difference between the distributions of that feature in X_train and X_test.

Sample Output:

Data drift detected

This example demonstrates how statistical tests can be used to monitor data drift, which is important for maintaining model performance. By identifying the features where data drift occurs, you can take necessary actions such as retraining the model or adjusting data preprocessing steps to ensure the model remains effective and accurate over time.

In this example, the distributions of each feature in the training and test datasets are compared using the Kolmogorov-Smirnov (KS) test. If the p-value for a feature is less than 0.05, the distribution of that feature has changed significantly between the training and test datasets. This suggests data drift, which could impact model performance. If the p-value is greater than or equal to 0.05, the test found no significant change in the distribution of that feature. Keep in mind that testing many features at the 0.05 level makes an occasional false alarm likely, so with a large feature set consider a stricter threshold or a multiple-comparison correction.

Note

The Kolmogorov-Smirnov (KS) test is a non-parametric test which is used to compare the distributions of two independent samples. This test evaluates the null hypothesis that the two samples are drawn from the same distribution.
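To make the note above more concrete, the KS statistic is the largest vertical gap between the empirical cumulative distribution functions (ECDFs) of the two samples. The following is a minimal standard-library sketch of that statistic only; it does not compute the p-value, and `ks_statistic` is a hypothetical helper name, not the SciPy API.

```python
from bisect import bisect_right


def ks_statistic(sample_a, sample_b):
    """Largest absolute gap between the two empirical CDFs (two-sample KS statistic)."""
    a = sorted(sample_a)
    b = sorted(sample_b)
    max_gap = 0.0
    # The largest gap always occurs at one of the observed values
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)  # fraction of sample_a <= x
        cdf_b = bisect_right(b, x) / len(b)  # fraction of sample_b <= x
        max_gap = max(max_gap, abs(cdf_a - cdf_b))
    return max_gap


reference = [0.1, 0.2, 0.3, 0.4, 0.5]
shifted = [0.4, 0.5, 0.6, 0.7, 0.8]

print(f"Same sample:    {ks_statistic(reference, reference):.2f}")
print(f"Shifted sample: {ks_statistic(reference, shifted):.2f}")
```

A statistic near 0 means the two ECDFs almost coincide, while a value near 1 means the samples barely overlap; the test turns this statistic and the sample sizes into a p-value.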

How to Implement Effective Model Monitoring Strategies

To set up robust model monitoring, follow these steps:

Establish Baseline Metrics: Set performance thresholds based on the initial model validation. This covers measurements like accuracy, precision, recall, and F1 score. Baseline measurements serve as a reference point for detecting deviations in model performance.

Example:

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score


def calculate_baseline_metrics(y_true, y_pred):
    """
    Calculate baseline metrics for model evaluation.

    y_true (list or array): True labels.
    y_pred (list or array): Predicted labels.
    Returns a dictionary containing accuracy, precision, recall, f1_score
    """
    metrics = {
        'accuracy': accuracy_score(y_true, y_pred),
        'precision': precision_score(y_true, y_pred, average='weighted'),
        'recall': recall_score(y_true, y_pred, average='weighted'),
        'f1_score': f1_score(y_true, y_pred, average='weighted'),
    }
    return metrics


# Sample data for true labels and predictions
y_true = [0, 1, 2, 2, 1, 0]  # True labels
y_pred = [0, 0, 2, 2, 1, 1]  # Predicted labels

# Calculate baseline metrics
baseline_metrics = calculate_baseline_metrics(y_true, y_pred)

# Print the calculated metrics
print(baseline_metrics)

Explanation:

- The example imports accuracy_score, precision_score, recall_score, and f1_score from sklearn.metrics.

- The calculate_baseline_metrics function takes y_true (true labels) and y_pred (predicted labels) as inputs. It calculates four evaluation metrics: accuracy, precision, recall, and F1 score. Finally, it returns these metrics in a dictionary.

Sample Output (values rounded to three decimal places):

{ 'accuracy': 0.667, 'precision': 0.667, 'recall': 0.667, 'f1_score': 0.667 }

For this sample, classes 0 and 1 each have a precision and recall of 0.5 and class 2 has 1.0, so with equal class sizes every weighted metric comes to 2/3.

Implement Data Quality Checks: Set up automated tests to ensure data integrity and consistency. Ensuring that the input data fulfils the requirements is critical for model performance.

Example:

import pandas as pd
from pandas.api.types import is_numeric_dtype


def check_data_quality(data):
    # data.isnull() flags missing cells; .values converts the result to a NumPy array
    assert not data.isnull().values.any(), "Data contains null values"
    # data.dtypes returns a Series with the data type of each column;
    # is_numeric_dtype accepts any integer or float width
    assert all(is_numeric_dtype(dtype) for dtype in data.dtypes), "Data types are not consistent"
    print("Data quality checks passed")


# Assuming df is your DataFrame
df = pd.DataFrame({
    'feature1': [1, 2, 3],
    'feature2': [4.0, 5.0, 6.0]
})

check_data_quality(df)

Sample Output:

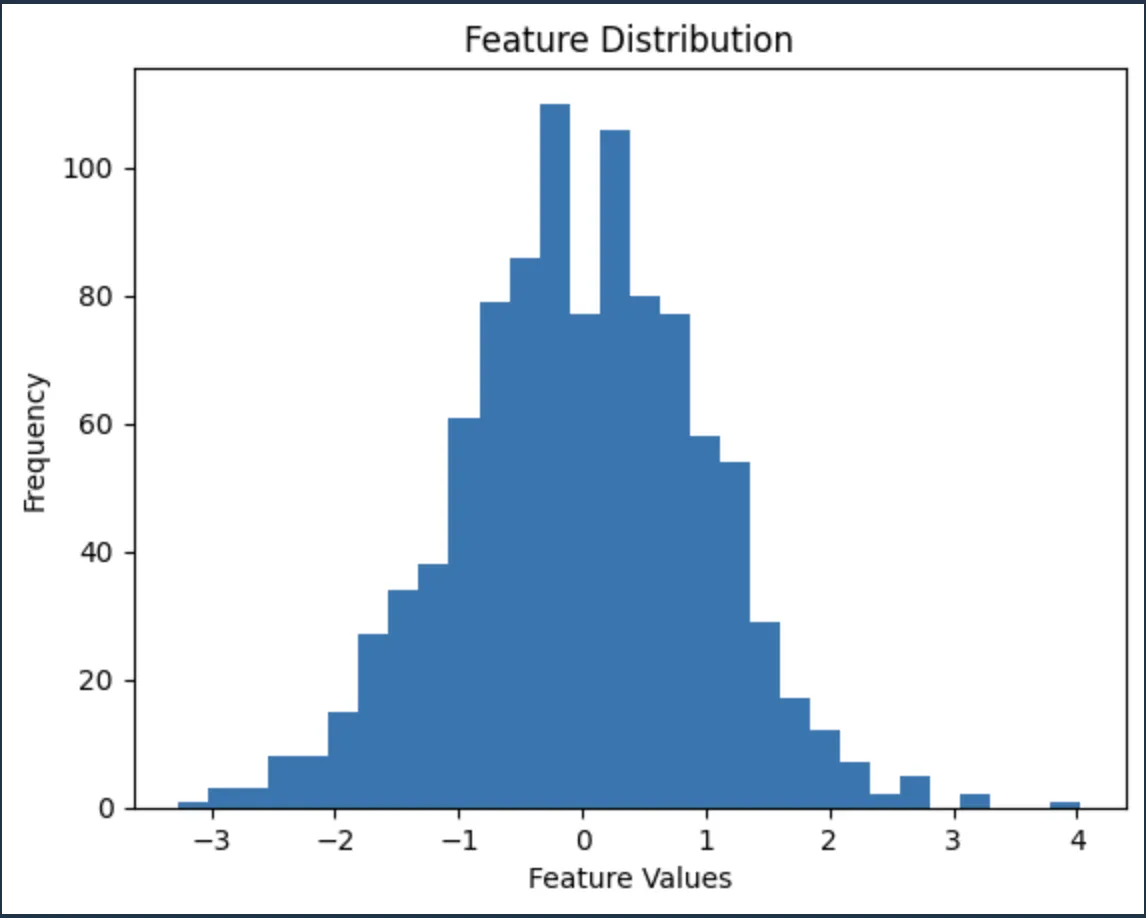

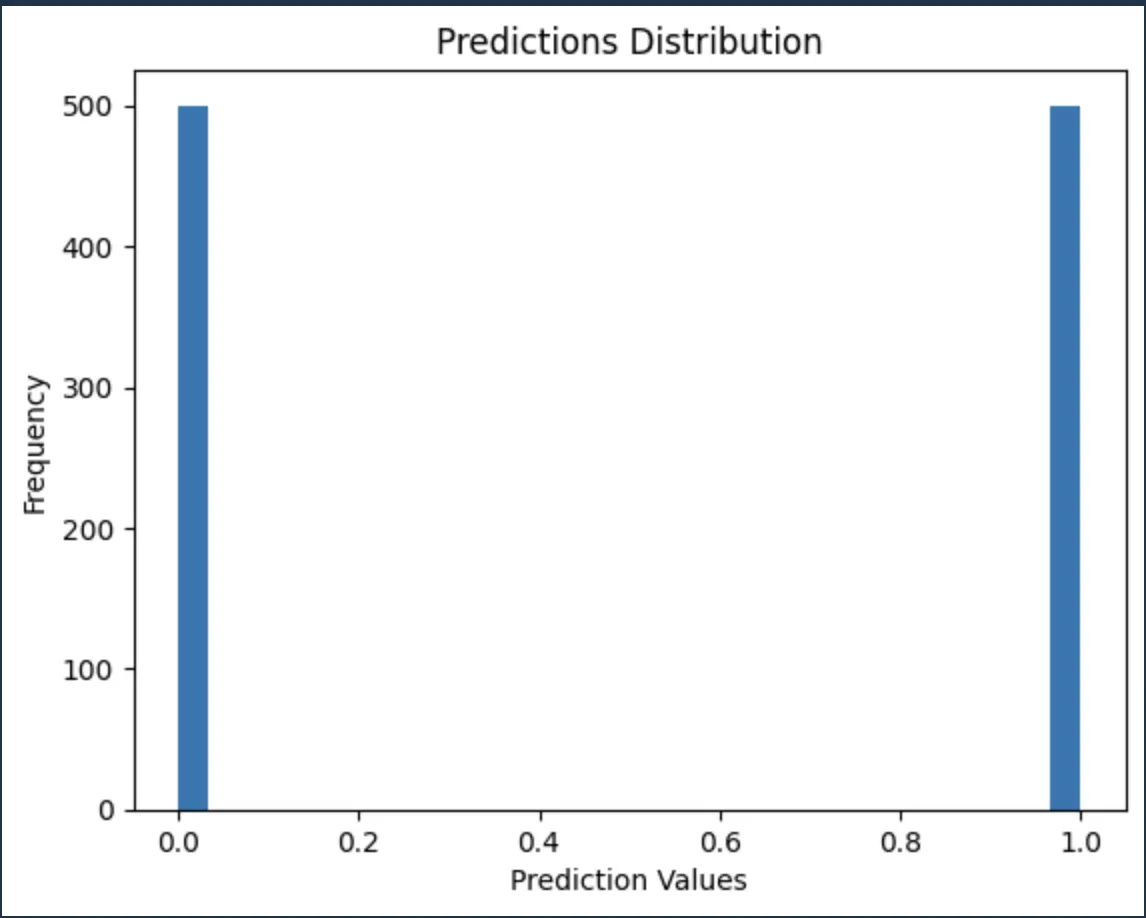

Data quality checks passed

Monitor Model Inputs and Outputs: Track feature distributions and prediction trends to identify anomalies. Monitoring both inputs and outputs aids in detecting issues like data drift and model performance decline.

Example:

import numpy as np
import matplotlib.pyplot as plt


def monitor_feature_distribution(data):
    """
    Plot the distribution of a feature.

    data (array-like): The feature data to plot
    """
    # Plot histogram of the data with 30 bins
    plt.hist(data, bins=30)
    plt.title("Feature Distribution")
    plt.xlabel("Feature Values")
    plt.ylabel("Frequency")
    plt.show()


# Generate sample feature data
feature_data = np.random.randn(1000)  # Normally distributed data

# Monitor the feature distribution
monitor_feature_distribution(feature_data)


def monitor_predictions(predictions):
    """
    Plot the distribution of predictions.

    predictions (array-like): The predictions to plot
    """
    # Plot histogram of the predictions with 30 bins
    plt.hist(predictions, bins=30)
    plt.title("Predictions Distribution")
    plt.xlabel("Prediction Values")
    plt.ylabel("Frequency")
    plt.show()


# Generate sample predictions data
predictions = np.random.randint(0, 2, size=1000)  # Binary predictions

# Monitor the predictions distribution
monitor_predictions(predictions)

Explanation:

- The example imports numpy for generating sample data and matplotlib.pyplot for plotting histograms.

- The monitor_feature_distribution(data) function plots a histogram of the provided feature data, and monitor_predictions(predictions) does the same for the model's predictions.

- feature_data holds normally distributed data with 1000 samples.

- predictions holds binary predictions (0 or 1) with 1000 samples.

- monitor_feature_distribution is called with the generated feature data to plot its distribution.

- monitor_predictions is called with the generated predictions to plot their distribution.

Sample Output:

Two histograms are displayed, one for each call:

- Histogram of feature_data

- Histogram of predictions

Set Up Alerting Mechanisms: Generate automated warnings for performance degradation or anomalies. Alerts ensure that you are instantly aware of significant changes in model performance. You can use Python’s email.mime for this purpose. For more details, please refer to the official Python documentation.
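A threshold-based alert could be sketched as follows. This version builds the message with `email.message.EmailMessage` from the standard library (a newer interface than `email.mime`, swapped in here for brevity) and deliberately stops short of sending it. The function names, metric values, thresholds, and addresses are all illustrative assumptions.

```python
from email.message import EmailMessage


def find_breaches(metrics, thresholds):
    """Return (name, value, minimum) for every metric that fell below its minimum."""
    return [
        (name, metrics[name], minimum)
        for name, minimum in thresholds.items()
        if name in metrics and metrics[name] < minimum
    ]


def build_alert_email(breaches, sender, recipient):
    """Build (but do not send) an email summarizing the threshold breaches."""
    message = EmailMessage()
    message["Subject"] = f"Model alert: {len(breaches)} metric(s) below threshold"
    message["From"] = sender
    message["To"] = recipient
    lines = [f"{name}: {value:.3f} (minimum {minimum:.3f})" for name, value, minimum in breaches]
    message.set_content("\n".join(lines))
    return message


# Illustrative values; in practice these come from your monitoring job
current_metrics = {"accuracy": 0.81, "recall": 0.92}
minimum_thresholds = {"accuracy": 0.85, "recall": 0.90}

breaches = find_breaches(current_metrics, minimum_thresholds)
if breaches:
    alert = build_alert_email(breaches, "monitor@example.com", "ml-team@example.com")
    print(alert["Subject"])
    print(alert.get_content())
    # Sending requires an SMTP server you control, for example:
    # import smtplib
    # with smtplib.SMTP("localhost") as server:
    #     server.send_message(alert)
```

Keeping the breach detection separate from message delivery makes it straightforward to route the same breaches to chat or paging tools instead of email.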

Common Challenges in ML Model Monitoring

Implementing effective model monitoring comes with its share of challenges:

High-dimensional data: Fully monitoring complex models with a large number of features is challenging. When the number of features is very large, it becomes difficult to identify which ones matter most, and tracking every feature and every interaction produces more signals than a team can act on. Avoiding this information overload may require more advanced approaches.

Sample Solution: To concentrate on the most important components, apply dimensionality reduction strategies such as Principal Component Analysis (PCA). PCA helps by transforming the data into a set of principal components, retaining the most significant information while reducing the number of features.

from sklearn.decomposition import PCA
import numpy as np

# Assuming X is your high-dimensional dataset
X = np.random.rand(100, 50)  # High-dimensional data example

pca = PCA(n_components=2)
X_reduced = pca.fit_transform(X)
print(X_reduced)

Explanation:

By applying PCA, we reduce the dimensionality of the dataset from 50 features to 2 principal components. This reduction helps in focusing on the most important components and thus lowers the risk of information overload.

Sample Output:

[[-0.09784562 -0.05306694]
 [-0.15915395 0.08565201]
 [ 0.03284712 -0.22509111]
 ...
 [ 0.09221238 -0.14241958]
 [ 0.12828239 -0.11862985]
 [ 0.18943509 -0.04983527]]

Delayed feedback: The lack of instant access to ground truth labels in some applications makes it challenging to evaluate model performance in real time. Ground truth labels are the actual correct outputs used to train and validate the model. In real-time applications, obtaining these labels immediately may not be possible, which can lead to delays in performance evaluation.

Proxy Metrics are alternative metrics that are used to estimate performance when ground truth labels are not available. They can provide a quick, though less accurate, indication of model performance. Delayed Batch Evaluations involve collecting a batch of predictions and their corresponding delayed ground truth labels to evaluate the model's performance after some time.

Example Solution: Let’s implement proxy metrics or use delayed batch evaluations to approximate real-time performance.

import numpy as np


# Example of evaluating predictions once delayed feedback arrives
def calculate_proxy_metric(predictions, true_labels):
    # Convert to arrays so that == compares the labels element by element
    predictions = np.asarray(predictions)
    true_labels = np.asarray(true_labels)
    proxy_metric = float(np.mean(predictions == true_labels))
    return proxy_metric


# Simulated delayed feedback
delayed_true_labels = [0, 1, 0, 1, 1]
predictions = [0, 1, 1, 1, 0]

proxy_metric = calculate_proxy_metric(predictions, delayed_true_labels)
print(f'Proxy Metric: {proxy_metric}')

Explanation: We simulate delayed feedback by comparing the model's stored predictions with true labels that arrive later; the metric is the fraction of predictions that match. Strictly speaking this is a delayed batch evaluation rather than a label-free proxy, and although it does not provide real-time evaluation, it approximates the model's performance and helps to maintain oversight.

Sample Output:

Proxy Metric: 0.6
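In practice, delayed labels usually arrive for only some requests, so predictions need to be matched to labels by an identifier before scoring. A minimal sketch, assuming predictions and labels are logged in dictionaries keyed by a hypothetical request ID:

```python
def evaluate_delayed_batch(prediction_log, label_log):
    """Score only the predictions whose ground truth label has arrived.

    Returns (accuracy, number of labeled predictions); accuracy is None
    when no labels have arrived yet.
    """
    matched = [
        (prediction, label_log[request_id])
        for request_id, prediction in prediction_log.items()
        if request_id in label_log
    ]
    if not matched:
        return None, 0
    correct = sum(1 for prediction, label in matched if prediction == label)
    return correct / len(matched), len(matched)


# Illustrative logs: four predictions were served, three labels have arrived so far
prediction_log = {"req-1": 1, "req-2": 0, "req-3": 1, "req-4": 0}
label_log = {"req-1": 1, "req-2": 1, "req-4": 0}

accuracy, n_labeled = evaluate_delayed_batch(prediction_log, label_log)
coverage = n_labeled / len(prediction_log)
print(f"Accuracy on labeled subset: {accuracy:.2f} ({n_labeled} labels, coverage {coverage:.0%})")
```

Reporting coverage next to accuracy helps show how representative the labeled subset is of all the predictions served.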

Scalability issues: As the number of models in production grows, monitoring systems must scale accordingly. This requires robust infrastructure and efficient algorithms to handle the increased load.

Key Metrics for Model Monitoring

Focus on these key variables to assess model health and guarantee consistent performance:

Predictive Performance: Monitor standard performance indicators to determine how well the model predicts. These are accuracy, F1-score, AUC-ROC, precision, and recall. The F1-score is a measure of the accuracy of a model. It considers both precision and recall. The F1-score is the harmonic mean of precision and recall thus it provides a balance between the two metrics. AUC-ROC stands for the Area Under the Receiver Operating Characteristic Curve. The ROC curve is a graphical representation of a model's diagnostic ability, plotting the true positive rate (recall) against the false positive rate (1-specificity).

Example:

from sklearn.metrics import accuracy_score, f1_score, roc_auc_score
import numpy as np


def calculate_performance_metrics(y_true, y_pred, y_prob):
    """
    Calculates the performance metrics for the model.

    y_true (array-like): True labels.
    y_pred (array-like): Predicted labels.
    y_prob (array-like): Predicted probabilities for the positive class.
    Returns a dictionary containing accuracy, F1-score, and AUC-ROC.
    """
    metrics = {
        'accuracy': accuracy_score(y_true, y_pred),
        'f1_score': f1_score(y_true, y_pred, average='weighted'),
        'auc_roc': roc_auc_score(y_true, y_prob)
    }
    return metrics


# Generate sample data for demonstration
np.random.seed(0)
y_true = np.random.randint(0, 2, size=100)  # True labels
y_pred = np.random.randint(0, 2, size=100)  # Predicted labels
y_prob = np.random.rand(100)  # Predicted probabilities for the positive class

# Calculate performance metrics
performance_metrics = calculate_performance_metrics(y_true, y_pred, y_prob)
print(performance_metrics)

Explanation:

- The functions accuracy_score, f1_score, and roc_auc_score are imported from sklearn.metrics.

- The calculate_performance_metrics function takes three arguments: y_true (true labels), y_pred (predicted labels), and y_prob (predicted probabilities for the positive class). Inside the function, a dictionary metrics is created to store the computed metrics: accuracy is calculated using accuracy_score, f1_score is calculated using f1_score with a weighted average to account for class imbalance, and auc_roc is calculated using roc_auc_score.

- The function calculate_performance_metrics is called with the sample data, and the resulting metrics are printed.

Sample Output:

{ 'accuracy': 0.44, 'f1_score': 0.457209104928158, 'auc_roc': 0.5654444444444444 }
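To make the F1-score and AUC-ROC definitions above concrete, here is a small standard-library sketch. It computes F1 as the harmonic mean of precision and recall, and AUC-ROC through its pairwise interpretation: the probability that a randomly chosen positive example is scored higher than a randomly chosen negative one. Both helper names are hypothetical, and the pairwise loop is meant for illustration rather than large datasets.

```python
def f1_from(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def auc_by_pairs(y_true, y_score):
    """AUC-ROC as the fraction of (positive, negative) pairs ranked correctly.

    Ties count as half a correct pair. Assumes both classes are present.
    """
    positives = [s for t, s in zip(y_true, y_score) if t == 1]
    negatives = [s for t, s in zip(y_true, y_score) if t == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


# The harmonic mean sits closer to the lower of the two values
print(f"F1 for precision=0.5, recall=1.0: {f1_from(0.5, 1.0):.3f}")

# Illustrative labels and scores
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(f"AUC-ROC: {auc_by_pairs(labels, scores):.2f}")
```

In the sample data, three of the four positive/negative pairs are ordered correctly, so the AUC-ROC is 0.75.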

Business Impact Metrics: Assess the impact of your model on business-specific KPIs such as conversion rates, client retention, and revenue. These measurements let you understand your model's real-world effectiveness.

Example:

import numpy as np


def calculate_conversion_rate(predictions, actuals):
    # Convert to arrays so that == and & work element by element
    predictions = np.asarray(predictions)
    actuals = np.asarray(actuals)
    conversions = np.sum((predictions == 1) & (actuals == 1))
    total_positives = np.sum(actuals == 1)
    conversion_rate = conversions / total_positives if total_positives > 0 else 0
    return conversion_rate


# Assuming predictions and actuals are your model's predictions and actual outcomes
predictions = [1, 0, 1, 1]
actuals = [1, 1, 1, 0]

conversion_rate = calculate_conversion_rate(predictions, actuals)
print(f'Conversion Rate: {conversion_rate:.2%}')

Sample Output:

Conversion Rate: 66.67%

Monitoring Unstructured Data Models

Monitoring models that work with unstructured data, such as text and images, requires specialized methodologies adapted to the characteristics and challenges of each data source. The techniques discussed so far are geared mainly toward structured data. Structured data is highly organized and easy to search, such as database tables with rows and columns (e.g., customer information in a database). Unstructured data, on the other hand, has no predefined format or structure and is therefore more challenging to analyze (e.g., emails, social media posts, images).

NLP Models

Natural Language Processing (NLP) models work with text data to understand, interpret, and generate human language. These models often use various metrics to evaluate their performance and quality. Some of the most common metrics include perplexity, BLEU scores, and bespoke domain-specific metrics.

- Perplexity measures how well a probability model predicts a sample. A lower perplexity indicates better performance.

- BLEU Scores (Bilingual Evaluation Understudy) assess the quality of generated text by comparing it to one or more reference texts.

- Bespoke Domain-Specific Metrics are custom metrics designed for specific applications, tailored to the unique requirements of the domain.

Example:

from nltk.translate.bleu_score import sentence_bleu, SmoothingFunction

# Sample ground truth (reference) and model output (candidate)

reference = [['this', 'is', 'a', 'test']]

candidate = ['this', 'is', 'an', 'experiment']

# Calculate BLEU score with smoothing

smoothing_function = SmoothingFunction().method1

bleu_score = sentence_bleu(reference, candidate, smoothing_function=smoothing_function)

print(f'BLEU score: {bleu_score:.2f}')

Sample Output:

BLEU score: 0.17

Explanation: The BLEU score measures the n-gram overlap between model-generated text and reference text, which serves as an indicator of output quality. A BLEU score of 1.00 signifies a perfect match. Here, the BLEU score of 0.17 indicates that the candidate sentence shares only limited overlap with the reference: two of its four words match, and no three- or four-word sequences do.
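Perplexity, the first metric in the list above, can be sketched in the same spirit. Assuming you already have the probability the model assigned to each observed token, perplexity is the exponential of the average negative log-probability. The probabilities below are made up for illustration.

```python
import math


def perplexity(token_probabilities):
    """exp(average negative log-probability) over a non-empty token sequence."""
    n = len(token_probabilities)
    average_nll = -sum(math.log(p) for p in token_probabilities) / n
    return math.exp(average_nll)


# Hypothetical per-token probabilities from two models on the same sentence
confident_model = [0.5, 0.5, 0.5, 0.5]
uncertain_model = [0.1, 0.1, 0.1, 0.1]

print(f"Confident model perplexity: {perplexity(confident_model):.2f}")
print(f"Uncertain model perplexity: {perplexity(uncertain_model):.2f}")
```

A model that assigns probability 0.5 to every token has a perplexity of about 2, as if it were choosing between two equally likely options at each step, while one that assigns 0.1 has a perplexity of about 10.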

Computer Vision Models

Computer vision models rely heavily on measures such as object detection accuracy, segmentation quality, and classification confidence. These metrics help to guarantee that the model correctly identifies and processes visual input.

Example:

from sklearn.metrics import accuracy_score

# True labels for the test images (1 for cat, 0 for dog)
ground_truth = [1, 0, 1, 1]

# Example predictions from the model for the same test images
predictions = [1, 0, 1, 0]

# Calculate and print accuracy
accuracy = accuracy_score(ground_truth, predictions)
print(f'Accuracy: {accuracy:.2f}')

Sample Output:

Accuracy: 0.75

Explanation: Accuracy is a straightforward metric that indicates the proportion of correct predictions made by the model, providing a clear measure of performance. So, if the accuracy is 0.75, it means the model correctly classified 75% of the test images.
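For object detection, a common building block of accuracy metrics is intersection over union (IoU), which measures how well a predicted bounding box overlaps the ground truth box. A minimal sketch, assuming axis-aligned boxes given as (x_min, y_min, x_max, y_max); the function name and coordinates are illustrative.

```python
def box_iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle, if any
    inter_x_min = max(box_a[0], box_b[0])
    inter_y_min = max(box_a[1], box_b[1])
    inter_x_max = min(box_a[2], box_b[2])
    inter_y_max = min(box_a[3], box_b[3])

    # Width and height are clamped at zero when the boxes do not overlap
    inter_width = max(0.0, inter_x_max - inter_x_min)
    inter_height = max(0.0, inter_y_max - inter_y_min)
    intersection = inter_width * inter_height

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


ground_truth_box = (0, 0, 2, 2)
predicted_box = (1, 1, 3, 3)
print(f"IoU: {box_iou(ground_truth_box, predicted_box):.3f}")
```

Tracking the distribution of IoU values over time, for the subset of images with labeled boxes, can reveal gradual localization degradation that a single accuracy number hides.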

Proxy Metrics

When ground truth is missing, use proxy measurements to indirectly assess performance. Proxy metrics may contain user engagement data, feedback ratings, or other related indicators.

Example:

import numpy as np

# Sample user engagement data in seconds

view_durations = [120, 90, 150, 200]

# Calculate average view duration

average_duration = np.mean(view_durations)

print(f'Average view duration: {average_duration:.2f} seconds')

Sample Output:

Average view duration: 140.00 seconds

Explanation: Average view duration can serve as a proxy metric for content relevance and engagement, indirectly reflecting the model's effectiveness in recommending or generating engaging content.

Balancing Privacy Concerns

When working with sensitive unstructured data, it is important to find a balance between effective monitoring and privacy considerations. Implement anonymization techniques and follow data protection standards.

Example:

# Example of anonymizing sensitive data
def anonymize_data(data):
    # Replace every value with a fixed placeholder, keeping only the keys
    anonymized_data = {key: 'REDACTED' for key in data.keys()}
    return anonymized_data


# Sample sensitive data
user_data = {'name': 'John Doe', 'email': 'john.doe@example.com'}

# Anonymize data
anonymized_user_data = anonymize_data(user_data)
print(anonymized_user_data)

Sample Output:

{'name': 'REDACTED', 'email': 'REDACTED'}

Explanation: Anonymising sensitive data protects user privacy while enabling effective model performance monitoring and analysis.
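Full redaction removes the ability to group monitoring records by user. One alternative, sketched below under the assumption that a secret key is stored securely outside the monitoring data, is to replace sensitive values with a keyed hash so the same user always maps to the same token. This is pseudonymization rather than full anonymization, and the function name, field names, and key are illustrative.

```python
import hashlib
import hmac


def pseudonymize(record, sensitive_fields, secret_key):
    """Replace sensitive values with a keyed SHA-256 token; other fields are unchanged."""
    result = dict(record)
    for field in sensitive_fields:
        if field in result:
            digest = hmac.new(
                secret_key,
                str(result[field]).encode("utf-8"),
                hashlib.sha256,
            ).hexdigest()
            result[field] = digest[:16]  # shortened token for readability
    return result


# Illustrative key; in practice load it from a secrets manager, never from source code
SECRET_KEY = b"replace-with-a-real-secret"

user_record = {"name": "John Doe", "email": "john.doe@example.com", "prediction": 0.87}
safe_record = pseudonymize(user_record, ["name", "email"], SECRET_KEY)
print(safe_record)
```

Because the token is stable for a given key, you can still count distinct users or track per-user prediction trends without storing the raw identifiers.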

Adopting these specialized methodologies allows you to properly monitor NLP and computer vision models, ensuring their excellent performance and dependability in production scenarios.

Advanced Techniques in Model Monitoring

Elevate your monitoring capabilities with these advanced strategies:

A/B Testing

A/B testing compares a new model version to an old one in production. This strategy helps determine whether the new model performs better before fully implementing it.

Example:

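# Sample logic for A/B testing
import random


def model_a(input_data):
    # Existing model logic goes here (placeholder result for illustration)
    return 'prediction from model A'


def model_b(input_data):
    # New model logic goes here (placeholder result for illustration)
    return 'prediction from model B'


def ab_test(input_data):
    # Send roughly half of the requests to each model
    if random.random() > 0.5:
        return model_a(input_data)
    else:
        return model_b(input_data)


# Route requests to the A/B testing function
sample_input_data = [0.2, 0.7, 0.1]  # Illustrative input
result = ab_test(sample_input_data)
print(result)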

Explanation: By randomly routing user requests to either the existing or new models, you can compare their performance and make educated model deployment decisions.
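Once both variants have served enough traffic, their outcomes need to be compared with a statistical test rather than by eye. The sketch below uses a two-proportion z-test, one common choice for comparing success rates; the function name and the counts are made up for illustration.

```python
import math


def two_proportion_z_test(successes_a, total_a, successes_b, total_b):
    """Two-sided z-test for a difference in success rates between variants A and B.

    Returns (z, p_value). Uses the pooled standard error and a normal approximation,
    so it assumes reasonably large samples.
    """
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    pooled = (successes_a + successes_b) / (total_a + total_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if standard_error == 0:
        return 0.0, 1.0
    z = (rate_b - rate_a) / standard_error
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return z, p_value


# Illustrative counts: correct predictions out of requests served by each model
z, p_value = two_proportion_z_test(successes_a=480, total_a=1000, successes_b=520, total_b=1000)
print(f"z = {z:.2f}, p-value = {p_value:.3f}")
```

With these counts, the four-point difference does not reach significance at the 0.05 level, which suggests collecting more traffic before switching models.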

Ensemble Monitoring

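Combining several models into a single ensemble can make predictions more robust, and it also gives monitoring an extra signal: when the member models start to disagree with one another, that inconsistency can point to problematic inputs or a degrading model. Ensembles can therefore help detect inconsistencies and increase overall dependability.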

Example:

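from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data for illustration only; replace with your own dataset
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Sample models (illustrative stand-ins; any scikit-learn classifiers will do)
model1 = LogisticRegression(max_iter=1000)  # First model
model2 = DecisionTreeClassifier(random_state=0)  # Second model
model3 = RandomForestClassifier(n_estimators=50, random_state=0)  # Third model

# Create an ensemble model that predicts by majority vote
ensemble = VotingClassifier(
    estimators=[('model1', model1), ('model2', model2), ('model3', model3)],
    voting='hard',
)

# Fit ensemble model
ensemble.fit(X_train, y_train)

# Predict with ensemble model
predictions = ensemble.predict(X_test)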

Explanation: An ensemble model integrates predictions from various models to improve accuracy and robustness, resulting in a more dependable monitoring system.

Continuous Learning

Continuous learning enables models to update automatically based on monitoring data. This strategy enables models to adapt to new data while maintaining peak performance.

Example:

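# Sketch of continuous learning: retrain when accuracy drops below a threshold
from sklearn.metrics import accuracy_score


def update_model(model, X_new, y_new):
    # Retrain the model with new labeled data
    model.fit(X_new, y_new)
    return model


def monitor_model_performance(model, X_eval, y_eval):
    # Evaluate the current model on recent labeled data
    return {'accuracy': accuracy_score(y_eval, model.predict(X_eval))}


# Assumed to exist already: a fitted model, recent evaluation data (X_eval, y_eval),
# and new training data (X_new, y_new)
threshold = 0.8  # Illustrative accuracy threshold

# Monitor performance and trigger updates
performance_metrics = monitor_model_performance(model, X_eval, y_eval)
if performance_metrics['accuracy'] < threshold:
    model = update_model(model, X_new, y_new)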

Explanation: By continuously retraining the model with new data, you can ensure it stays up-to-date and maintains high performance in changing environments.

ML Monitoring Best Practices

1. Start Monitoring Early

- Begin monitoring during the development phase, not just after deployment.

- Track metrics and logs from the experimentation stage onwards.

- This approach helps establish baselines and identify potential issues early.

2. Define Clear Metrics and KPIs

- Establish specific, measurable metrics that align with business objectives.

- Include both model-specific metrics (e.g., accuracy, F1-score) and operational metrics (e.g., latency, resource utilization).

- Ensure all stakeholders agree on the definitions and importance of each metric.

3. Implement Comprehensive Monitoring

- Monitor at both functional and operational levels:

  - Functional: Input data, model performance, and output predictions

  - Operational: System performance, pipelines, and costs

- Use a combination of tools to cover all aspects (e.g., Prometheus, Grafana, Evidently AI).

4. Set Up Automated Alerts

- Configure alerting systems to notify relevant team members of significant changes or issues.

- Define thresholds for each metric that trigger alerts when crossed.

- Ensure alerts are actionable and provide enough context for quick diagnosis.
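The alerting points above can be sketched as a small threshold check. This is a minimal illustration rather than a production alerting system: the `Threshold` class, the `check_thresholds` helper, the metric names, and the limit values are all hypothetical, and in practice the messages would be routed to whatever alerting tool your team already uses.

```python
from dataclasses import dataclass


@dataclass
class Threshold:
    """Alert rule for one metric (names and limits are illustrative)."""
    metric: str
    limit: float
    direction: str  # "below": alert if value < limit; "above": alert if value > limit


def check_thresholds(metrics, thresholds):
    """Return one human-readable message per breached or missing metric."""
    alerts = []
    for rule in thresholds:
        value = metrics.get(rule.metric)
        if value is None:
            # A metric that stopped reporting is itself worth an alert
            alerts.append(f"{rule.metric}: no value reported")
            continue
        if rule.direction == "below":
            breached = value < rule.limit
        else:
            breached = value > rule.limit
        if breached:
            alerts.append(
                f"{rule.metric}={value:.3f} is {rule.direction} the limit {rule.limit:.3f}"
            )
    return alerts


rules = [
    Threshold("accuracy", 0.90, "below"),
    Threshold("p95_latency_ms", 250.0, "above"),
]
current = {"accuracy": 0.87, "p95_latency_ms": 180.0}
alerts = check_thresholds(current, rules)
for message in alerts:
    print(message)
```

Including the metric name, the observed value, and the limit in each message is one simple way to give the person on call enough context to start diagnosing.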

5. Create a Troubleshooting Framework

- Develop a systematic approach to investigate and resolve issues.

- Document common problems and their solutions for quick reference.

- Establish a clear escalation path for complex issues.

6. Plan for Model Updates

- Anticipate the need for model updates due to data drift or performance degradation.

- Implement a streamlined process for model retraining and deployment.

- Use A/B testing to validate new model versions before full deployment.
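One common way to read an A/B comparison between the current model and a candidate is a two-proportion z-test on a success rate such as accuracy or click-through. The sketch below uses only the standard library; the function name, the counts, and the choice of this particular test are assumptions for illustration, and other designs (for example sequential or Bayesian tests) may suit your setting better.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for the difference between two success rates.

    Returns (z, p_value); a positive z means variant B has the higher rate.
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # Both variants succeeded (or failed) on every trial: no evidence of a difference
        return 0.0, 1.0
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: correct predictions out of 1000 requests per variant
z, p_value = two_proportion_z_test(800, 1000, 900, 1000)
print(f"z={z:.2f}, p={p_value:.2g}")
```

A small p-value together with a positive z suggests the candidate is genuinely better on this metric, but the significance level and minimum sample size should be agreed on before the experiment starts.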

7. Monitor Data Quality and Drift

- Regularly check for changes in input data distribution.

- Implement data validation checks to ensure data integrity.

- Use statistical tests to detect data drift and assess its impact on model performance.
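As one example of a statistical drift test, the two-sample Kolmogorov-Smirnov (KS) test compares the empirical distribution of a numeric feature in a reference window against a recent window. The sketch below is a pure-Python illustration that uses the large-sample critical value instead of an exact p-value; the function names and sample data are hypothetical, and a library routine such as `scipy.stats.ks_2samp` would be the more usual choice in practice.

```python
import math


def ks_statistic(reference, current):
    """Largest gap between the empirical CDFs of the two samples."""
    ref = sorted(reference)
    cur = sorted(current)
    n, m = len(ref), len(cur)
    i = j = 0
    d_max = 0.0
    while i < n and j < m:
        x = min(ref[i], cur[j])
        # Step past every observation equal to x in both samples
        while i < n and ref[i] == x:
            i += 1
        while j < m and cur[j] == x:
            j += 1
        d_max = max(d_max, abs(i / n - j / m))
    return d_max


def drift_detected(reference, current, alpha=0.05):
    """Flag drift when the KS statistic exceeds the asymptotic critical value."""
    n, m = len(reference), len(current)
    c_alpha = math.sqrt(-0.5 * math.log(alpha / 2))
    critical = c_alpha * math.sqrt((n + m) / (n * m))
    d = ks_statistic(reference, current)
    return d > critical, d, critical


# Hypothetical feature values: a reference window and a shifted recent window
reference = list(range(200))
shifted = [x + 50 for x in reference]

drifted, d, critical = drift_detected(reference, shifted)
print(f"drift={drifted}, D={d:.3f}, critical={critical:.3f}")
```

A detected shift does not by itself mean the model is performing worse, so it is worth pairing a check like this with the performance metrics tracked elsewhere.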

8. Use Proxy Metrics When Necessary

- When ground truth labels are unavailable, utilize proxy metrics to gauge model performance.

- Monitor prediction distributions and business impact metrics as alternatives.

- Validate proxy metrics periodically to ensure they remain relevant.

9. Implement Version Control

- Track all changes to the model, including hyperparameters, features, and training data.

- Maintain a clear history of model versions in production.

- Ensure the ability to roll back to previous versions if needed.
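To make the version-control points concrete, here is a minimal in-memory sketch of a version record and a registry that supports rollback. Every name in it (`ModelVersion`, `ModelRegistry`, `fingerprint`) is hypothetical and stands in for whatever model registry or experiment tracker you actually use; it is meant only to show which information is worth recording.

```python
import hashlib
import json
from dataclasses import dataclass


def fingerprint(rows):
    """Stable hash of a JSON-serializable training set, used to detect data changes."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class ModelVersion:
    version: str
    hyperparameters: dict
    features: list
    training_data_hash: str


class ModelRegistry:
    """In-memory stand-in for a real registry; keeps every registered version."""

    def __init__(self):
        self._versions = []
        self._active = -1  # index of the version currently serving traffic

    def register(self, model_version):
        self._versions.append(model_version)
        self._active = len(self._versions) - 1

    def active(self):
        return self._versions[self._active]

    def rollback(self):
        """Make the previous version active again without deleting any history."""
        if self._active <= 0:
            raise ValueError("no earlier version to roll back to")
        self._active -= 1
        return self.active()

    def history(self):
        return [v.version for v in self._versions]


registry = ModelRegistry()
data_v1 = [{"age": 31, "label": 1}, {"age": 45, "label": 0}]
registry.register(ModelVersion("v1", {"max_depth": 3}, ["age"], fingerprint(data_v1)))
registry.register(ModelVersion("v2", {"max_depth": 5}, ["age"], fingerprint(data_v1)))

restored = registry.rollback()
print(restored.version, registry.history())
```

Keeping the full history while moving only the active pointer means a rollback never destroys the record of what was deployed.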

10. Foster Cross-functional Collaboration

- Encourage communication between data scientists, engineers, and business stakeholders.

- Ensure all team members understand their roles in the monitoring process.

- Conduct regular reviews to align monitoring efforts with business objectives.

11. Continuously Optimize Monitoring Systems

- Regularly review and update monitoring practices to improve efficiency.

- Stay informed about new tools and techniques in ML monitoring.

- Solicit feedback from team members to identify areas for improvement.

12. Maintain Compliance and Ethics

- Ensure monitoring practices comply with relevant regulations (e.g., GDPR, CCPA).

- Monitor for bias and fairness issues in model predictions.

- Implement auditing processes to track model decisions and their impacts.
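Monitoring for bias often starts by computing the same metric separately for each subgroup and watching the gap between groups. The sketch below does this for accuracy; the helper names, the group labels, and the data are hypothetical, and an accuracy gap is only one of several possible fairness signals.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Compute accuracy separately for each subgroup label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two subgroups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Hypothetical labels, predictions, and subgroup membership
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

per_group = accuracy_by_group(y_true, y_pred, groups)
gap = max_accuracy_gap(per_group)
print(per_group, gap)  # {'a': 0.75, 'b': 0.5} 0.25
```

A gap like this could feed the same alerting thresholds used for other metrics, with the acceptable limit agreed on with the relevant stakeholders.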

By following these best practices, you can create a robust monitoring system that helps maintain the performance and reliability of your machine learning models in production, ultimately driving sustained business value.

Key Takeaways

- Crucial for ML Performance: Model monitoring is a must for ensuring the correctness and dependability of machine learning models in production settings.

- Comprehensive Strategies: An effective monitoring strategy covers data quality checks, performance metrics, and operational metrics.

- Timely Issue Detection: Regular audits and automated alerts are critical for detecting and resolving issues quickly, which keeps model performance consistent.

- Tailored Procedures: Monitoring procedures should be tailored to individual model types and data domains so that they address each one's particular challenges and requirements.

FAQs

What is the difference between model monitoring and model observability?

Model monitoring tracks predefined metrics and performance indicators, whereas model observability provides a more comprehensive picture of the model's behaviour, including internal states and decision-making processes.

How often should I monitor my ML models in production?

The frequency depends on your application. Some applications only need to be checked once a day or once a week, while others require real-time monitoring. Take into account factors such as model complexity, data volume, and business impact.

Can model monitoring help prevent bias and fairness issues?

Yes. By tracking performance across subgroups and spotting differences in model results between them, model monitoring can help detect bias. Consistent monitoring lets you identify and address fairness issues quickly.

What are the key indicators of model drift, and how can they be detected?

Key indicators include changes in feature distributions, shifts in prediction patterns, and degradation in performance metrics. Detect drift through statistical testing, distribution comparisons, and performance tracking over time.