How to Create or Append to Log Files - Python Logging

Maintaining and troubleshooting Python applications requires effective logging. Implementing a dependable logging system can greatly reduce the amount of work needed for debugging and monitoring, regardless of the script size or application being developed. This article will cover how to use Python's robust logging module to create new log files or append to existing ones. With the help of this article, you can set up your logging system to either continue logging to an already existing log file or generate a new one if one doesn't already exist.

Understanding Python Logging Basics

The integrated logging module in Python is an efficient tool that offers a more organized and effective approach to tracking events inside your program than just print statements. Appropriate logging is essential for tracking the health and behaviour of your application over time, as well as for debugging.

Key Components of Python Logging

The logging module comprises four main components that work together to facilitate logging:

- Loggers: These are the points of entry for logging in your application. Each logger captures log messages, which can subsequently be routed to one of several outputs depending on the handlers that have been set.

- Handlers: Handlers specify the destination of log messages. Some of the most common handlers are StreamHandler (for console output) and FileHandler (for logging into a file). You can set up numerous handlers for a single logger to send logs to different destinations.

- Formatters: Formatters control the layout and content of log messages. They govern how timestamps, log levels, and messages are shown in the logs.

- Levels: Logging levels (such as DEBUG, INFO, WARNING, ERROR, and CRITICAL) help you manage your logs. Setting a specific log level allows you to filter out less important messages and focus on what matters.
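As a rough illustration of how the four components fit together, the sketch below attaches two handlers with different levels to one logger. The logger name and the in-memory streams are assumptions made for the demo; they stand in for real destinations such as the console or a file.

```python
import io
import logging

# Hypothetical logger name, chosen only for this illustration
logger = logging.getLogger("components_demo")
logger.setLevel(logging.DEBUG)   # the logger itself lets every level through
logger.propagate = False         # keep demo records away from the root logger

# Two in-memory streams stand in for two different destinations
verbose_stream = io.StringIO()
important_stream = io.StringIO()

verbose_handler = logging.StreamHandler(verbose_stream)
verbose_handler.setLevel(logging.DEBUG)

important_handler = logging.StreamHandler(important_stream)
important_handler.setLevel(logging.WARNING)   # drops DEBUG and INFO records

formatter = logging.Formatter("%(levelname)s - %(message)s")
for handler in (verbose_handler, important_handler):
    handler.setFormatter(formatter)
    logger.addHandler(handler)

logger.debug("cache miss")
logger.warning("disk almost full")

print(verbose_stream.getvalue())     # contains both records
print(important_stream.getvalue())   # contains only the WARNING record
```

The same record travels through one logger, but each handler applies its own level and formatter before writing.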

To learn more about logging in Python with its best practices and examples, you can check out our other articles:

- Python Logging - From Setup to Monitoring with Best Practices

- Python Logging Best Practices - Expert Tips with Practical Examples

Why Use Logging Over Print Statements?

Logging gives you a lot of benefits when it comes to tracking, monitoring, and debugging. Some of them are:

- Flexibility: You can quickly set up the format and location of your log messages by using logging.

- Granularity: You can fine-tune the kinds of messages that are logged by utilizing logging levels.

- Performance: Messages below the configured level are discarded with very little overhead, so detailed diagnostics can stay in the code without the cost of unconditional print statements.

- Consistency: Logging offers a consistent method of information capture, simplifying the management and analysis of your application's behaviour over time.

Example Scenario: Let's say you are a developer assigned to debug a complicated problem in a real-world setting. You would have to rely on several print statements without a well-configured logging system, which could overlook important information. Logging provides an organized method to record specific context—such as the file, function, and line number—and enables you to identify and fix problems more rapidly.
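The context mentioned in this scenario can be captured by the formatter itself. The following is a minimal sketch, assuming a hypothetical logger name and function; it uses the standard `%(filename)s`, `%(funcName)s`, and `%(lineno)d` record attributes.

```python
import io
import logging

stream = io.StringIO()  # in-memory target so the example leaves no files behind
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter(
    "%(levelname)s %(filename)s:%(funcName)s:%(lineno)d - %(message)s"
))

logger = logging.getLogger("context_demo")  # hypothetical name
logger.setLevel(logging.DEBUG)
logger.propagate = False
logger.addHandler(handler)

def process_order(order_id):
    # The formatter records where this call was made from
    logger.warning("order %s has no items", order_id)

process_order(42)
print(stream.getvalue())
```

Each line then carries the source file, the function name, and the line number of the logging call, which a plain print statement would not provide.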

How to Create a Log File if It Doesn't Exist?

To route log messages to a file in Python, use the FileHandler class found in the logging module. Let us go through the process of configuring basic logging to a new file so that your logs are effectively stored for later use.

Basic Setup for Logging to a File

Let us begin with a simple example of how to create a new log file and log some messages:

import logging

# Create a logger object

logger = logging.getLogger('my_logger')

logger.setLevel(logging.DEBUG)

# Create a file handler to log messages to a file

handler = logging.FileHandler('app.log')

handler.setLevel(logging.DEBUG)

# Define the log message format

formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')

handler.setFormatter(formatter)

# Attach the handler to the logger

logger.addHandler(handler)

# Log some messages

logger.debug('This is a debug message')

logger.info('This is an info message')

Output:

2024-09-03 00:19:01,488 - my_logger - DEBUG - This is a debug message

2024-09-03 00:19:01,488 - my_logger - INFO - This is an info message

Explanation:

- FileHandler: The FileHandler object writes log messages to a file. If the specified file (app.log) doesn't exist, Python will automatically create it. If the file already exists, it will be opened in append mode by default, ensuring that new log entries are added to the end of the file.

- Logger: The getLogger('my_logger') call creates or retrieves a logger named my_logger, allowing you to organize logs by different components or modules of your application.

- Formatter: The Formatter object specifies how log messages should be formatted, making it easier to read and analyze logs.
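For short scripts, a similar setup can be expressed with `logging.basicConfig`, which configures the root logger in one call. This is a sketch rather than a replacement for the explicit setup above; the temporary directory is an assumption so that the example does not touch real log files, and `force=True` requires Python 3.8 or later.

```python
import logging
import os
import tempfile

# Temporary directory so the sketch does not touch real log files
log_path = os.path.join(tempfile.mkdtemp(), "app.log")

logging.basicConfig(
    filename=log_path,
    filemode="a",          # append; the file is created if it is missing
    level=logging.DEBUG,
    format="%(asctime)s - %(name)s - %(levelname)s - %(message)s",
    force=True,            # replace handlers already attached to the root logger
)

logging.info("Configured with basicConfig")
logging.shutdown()         # flush and close the file handler

with open(log_path) as f:
    contents = f.read()
print(contents)
```

The explicit logger, handler, and formatter objects remain the better choice once an application needs more than one destination or per-module configuration.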

Configuring Log File Creation

To gain more control over how your log files are created and managed, you can configure several aspects of the FileHandler:

Set the File Mode: By default, the FileHandler opens the log file in append mode ('a'). If you want to overwrite the file each time the script runs, use write mode ('w'):

handler = logging.FileHandler('app.log', mode='w')

- Append Mode ('a'): New log entries are added to the end of the existing file.

- Write Mode ('w'): The file is overwritten each time the handler is created, erasing previous logs.

Specify Log File Paths: Absolute paths are an excellent approach to ensure that your log files are created in the correct location, especially in production environments. This method helps to avoid problems with relative paths, which are resolved against the current working directory and may therefore differ depending on where your script is executed.

import os
log_directory = '/var/log/myapp'
os.makedirs(log_directory, exist_ok=True)
handler = logging.FileHandler(os.path.join(log_directory, 'app.log'))

- Absolute Paths: Using os.path.join, you can create a log file at a specific location, ensuring that logs are stored consistently across different environments.

- Directory Creation: The os.makedirs(log_directory, exist_ok=True) line ensures that the directory exists before attempting to create the log file.

Implement Error Handling: When working with file systems, it is essential to handle potential problems, such as permission issues that may prevent the log file from being created.

import sys
try:
    handler = logging.FileHandler('app.log')
except PermissionError:
    print("Error: Unable to create log file due to permission issues.")
    sys.exit(1)

- Permission Handling: By catching PermissionError, you can provide a clear error message and take appropriate action, such as exiting the program or logging to an alternative destination.

Customize Log File Naming: To avoid overwriting log files or to create unique logs for different sessions, you can dynamically generate log file names using timestamps or other identifiers:

from datetime import datetime
log_filename = f"app_{datetime.now().strftime('%Y%m%d_%H%M%S')}.log"
handler = logging.FileHandler(log_filename)

- Dynamic Naming: This approach gives each run started in a different second its own log file, making it easier to organize and review logs from different runs or sessions.
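The directory creation and error handling steps can be combined into one helper that falls back to the console instead of exiting. This is a sketch under stated assumptions: `build_handler` is a hypothetical helper name, and catching `OSError` (the parent class of `PermissionError`) is a design choice that also covers missing or invalid paths.

```python
import logging
import os
import sys
import tempfile

def build_handler(log_directory, filename="app.log"):
    """Return a FileHandler, or a stderr StreamHandler if the file cannot be opened."""
    try:
        os.makedirs(log_directory, exist_ok=True)
        return logging.FileHandler(os.path.join(log_directory, filename))
    except OSError as exc:  # PermissionError is a subclass of OSError
        print(f"Falling back to stderr: {exc}", file=sys.stderr)
        return logging.StreamHandler(sys.stderr)

# A writable temporary directory yields a FileHandler
good_handler = build_handler(os.path.join(tempfile.mkdtemp(), "logs"))

# A path whose parent is a regular file cannot be created, which triggers the fallback
blocker = tempfile.NamedTemporaryFile(delete=False)
blocker.close()
fallback_handler = build_handler(os.path.join(blocker.name, "logs"))

print(type(good_handler).__name__, type(fallback_handler).__name__)
good_handler.close()
```

Whether to fall back or to stop the program depends on how critical the log file is; for audit logs, exiting as in the snippet above may be the safer choice.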

Appending to Existing Log Files

When it comes to logging, it's important to avoid losing important data. By default, Python's FileHandler opens log files in append mode ('a'). This ensures that new log messages are appended to the end of an existing file without overwriting its content. The following example appends logs to an existing file:

Example: Appending Logs

import logging

# Create a logger

logger = logging.getLogger('my_logger')

logger.setLevel(logging.DEBUG)

# Create a file handler that appends to an existing file (in my case it is app.log)

handler = logging.FileHandler('app.log', mode='a')

handler.setLevel(logging.DEBUG)

# Define the logging format

formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')

handler.setFormatter(formatter)

# Attach the handler to the logger

logger.addHandler(handler)

# Append new log messages

logger.info('This message will be appended to the existing log file')

Sample Output:

2024-09-03 00:19:01,488 - my_logger - DEBUG - This is a debug message

2024-09-03 00:19:01,488 - my_logger - INFO - This is an info message

2024-09-03 00:20:16,755 - my_logger - INFO - This message will be appended to the existing log file

Explanation:

- FileHandler: The FileHandler('app.log', mode='a') call opens the file in append mode, preserving previous logs and adding new messages at the end.

- Logger: When configured with the file handler, the logger will continue to append logs whenever your application generates a new message.
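The difference between the two modes can be checked with a short experiment. The sketch below simulates three separate runs against the same file in a temporary directory; `write_once` and `line_count` are hypothetical helpers written for this demo.

```python
import logging
import os
import tempfile

log_path = os.path.join(tempfile.mkdtemp(), "app.log")

def write_once(message, mode):
    """Open the file with the given mode, log one message, and close the handler."""
    handler = logging.FileHandler(log_path, mode=mode)
    handler.setFormatter(logging.Formatter("%(levelname)s - %(message)s"))
    logger = logging.getLogger("append_demo")  # hypothetical name
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.addHandler(handler)
    logger.info(message)
    logger.removeHandler(handler)  # avoid duplicate handlers on the next call
    handler.close()

def line_count():
    with open(log_path) as f:
        return len(f.readlines())

write_once("first run", mode="a")
write_once("second run", mode="a")
lines_after_append = line_count()      # both entries are kept

write_once("third run", mode="w")
lines_after_overwrite = line_count()   # earlier entries are gone

print(lines_after_append, lines_after_overwrite)
```

Removing and closing the handler after each simulated run matters: adding a new handler to the same logger without removing the old one would write every message more than once.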

Implementing Log Rotation

As applications run over time, log files can grow large, making them difficult to maintain and analyze. Log rotation is a strategy for managing log file sizes that automatically archives old logs and creates new ones. Python's RotatingFileHandler and TimedRotatingFileHandler, both found in the logging.handlers module, simplify this operation.

Rotating Log Files Based on Size

The RotatingFileHandler rotates log files when they reach a certain size. It archives the old log and starts a new one, ensuring that no single log file becomes too large:

from logging.handlers import RotatingFileHandler

# Create a rotating file handler

handler = RotatingFileHandler('app.log', maxBytes=1024*1024, backupCount=5)

maxBytes: The maximum file size before rotation occurs (in bytes).backupCount: The number of backup files to keep. Older logs are deleted as new ones are created.- In the above code, with

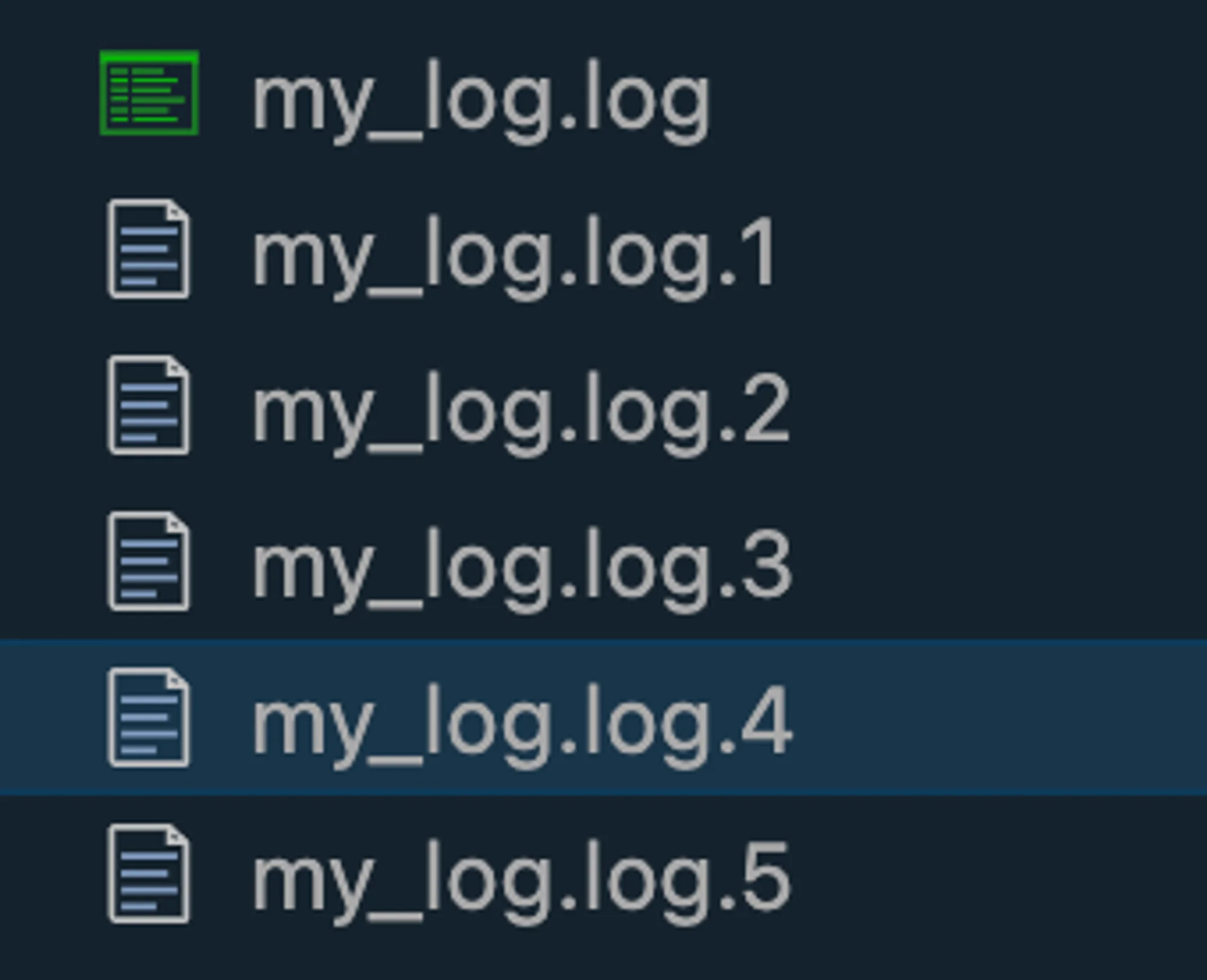

backupCount=5, theRotatingFileHandlerwill maintain 5 backup copies of the log file (app.log). Each file will not exceed the specified size (1024*1024bytes = 1 MB). When the current log reaches 1 MB, the handler will rename it (e.g.,app.log.1), and a newapp.logwill start. After creatingapp.log.1, subsequent log rotations will create filesapp.log.2,app.log.3, and so on, up toapp.log.5. Once this limit is reached, the handler will start overwriting the oldest file (i.e.,app.log.1).
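The numbering of backups can be observed with a deliberately tiny size limit. This is a minimal sketch, assuming a temporary directory and a hypothetical logger name; `maxBytes=50` is chosen only so that each record forces a rollover.

```python
import logging
import os
import tempfile
from logging.handlers import RotatingFileHandler

log_dir = tempfile.mkdtemp()
log_path = os.path.join(log_dir, "app.log")

# Tiny limit so that each new record triggers a rotation
handler = RotatingFileHandler(log_path, maxBytes=50, backupCount=2)
handler.setFormatter(logging.Formatter("%(message)s"))

logger = logging.getLogger("rotation_demo")  # hypothetical name
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)

for i in range(5):
    logger.info("message %d %s", i, "x" * 40)  # each line is about 50 bytes

handler.close()

# Map each file name to the first nine characters of its content
contents = {}
for name in sorted(os.listdir(log_dir)):
    with open(os.path.join(log_dir, name)) as f:
        contents[name] = f.read().strip()[:9]
print(contents)
```

After the loop, app.log holds the newest record (message 4), app.log.1 the one before it (message 3), and app.log.2 the oldest surviving one (message 2); messages 0 and 1 have been rotated out.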

Example:

import logging

from logging.handlers import RotatingFileHandler

# Create a logger

logger = logging.getLogger('my_logger')

logger.setLevel(logging.DEBUG)

# Setup rotating file handler (10KB file size limit, keep 5 backups for testing)

handler = RotatingFileHandler('my_log.log', maxBytes=10*1024, backupCount=5)

formatter = logging.Formatter('%(asctime)s - %(levelname)s - %(message)s')

handler.setFormatter(formatter)

logger.addHandler(handler)

# Generate large log messages in a loop to test rotation

for i in range(10000):
    logger.debug(f"This is a very large log message {i} " + "X" * 500) # 500 X's added to increase size

It will generate files like my_log.log, my_log.log.1, my_log.log.2, and so on, up to my_log.log.5.

Rotating Log Files Based on Time

The TimedRotatingFileHandler rotates logs at specific time intervals, such as every day, week, or at midnight:

from logging.handlers import TimedRotatingFileHandler

# Create a time-based rotating handler

handler = TimedRotatingFileHandler('app.log', when='midnight', backupCount=7)

- when: Specifies the time interval for rotation (e.g., 'midnight', or 'W0' for weekly rotation on Monday).

- backupCount: The number of backup files to keep, with older logs automatically deleted.
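A fuller setup for daily rotation might look like the sketch below. The temporary directory and logger name are assumptions for the demo; `when`, `interval`, `backupCount`, and `encoding` are standard constructor parameters, and `suffix` is the strftime pattern the handler appends to rotated file names.

```python
import logging
import os
import tempfile
from logging.handlers import TimedRotatingFileHandler

log_path = os.path.join(tempfile.mkdtemp(), "app.log")

handler = TimedRotatingFileHandler(
    log_path,
    when="midnight",   # roll over once per day at midnight
    interval=1,
    backupCount=7,     # keep one week of rotated files
    encoding="utf-8",
)
handler.setFormatter(logging.Formatter("%(asctime)s - %(levelname)s - %(message)s"))

logger = logging.getLogger("timed_demo")  # hypothetical name
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)

logger.info("Service started")

# Rotated files are named app.log.<date> using this strftime pattern
print(handler.suffix)
handler.close()
```

Note that rotation is only checked when a record is emitted, so a process that logs nothing around midnight rotates at its next log call.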

Advanced Logging Techniques for File Management

Python's logging module is incredibly adaptable, allowing you to create various techniques to meet specific needs.

1. Custom File Handlers

If your application requires specific logging behaviour, you can create your own file handler by subclassing logging.FileHandler. This allows you to customize how the handler is initialized and how it emits records.

Example:

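import logging

class CustomFileHandler(logging.FileHandler):
    def __init__(self, filename, mode='a', encoding=None, delay=False):
        super().__init__(filename, mode, encoding, delay)
        # Custom initialization: announce which file this handler writes to
        print(f"CustomFileHandler initialized for file: {filename}")

    def emit(self, record):
        # Customize log emission behavior: prefix the message before it is written
        # (this modifies the record, so other handlers on the logger see the prefix too)
        record.msg = f"Custom Log: {record.msg}"
        super().emit(record)
        # Post-emission action: confirm the write on the console
        print(f"Log written: {record.getMessage()}")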

- __init__(): This method initializes the file handler. By calling super().__init__(), it ensures that the base class (FileHandler) sets up the basic functionality, such as opening the file and setting the mode (append or write). You can extend this method to add custom setup, such as initializing specific resources.

- emit(): This method processes log records before they are written to the file. By overriding it, you can modify log messages, add extra context, or trigger other actions. In this example, the message is prefixed with "Custom Log", and after the log is written, a message is printed to the console.

Use Case: Suppose you are building a web application that writes logs for each incoming HTTP request. You may want to add more context to logs, such as tagging them based on the request type (GET, POST) or user roles. You may also want to trigger specific actions, like notifying an external monitoring service when an error is logged. These are some of the scenarios where a custom file handler becomes useful.

Example:

# Example usage of CustomFileHandler

import logging

logger = logging.getLogger('my_custom_logger')

logger.setLevel(logging.DEBUG)

# Initialize the custom file handler

handler = CustomFileHandler('custom_log.log')

logger.addHandler(handler)

# Write log messages

logger.info("This is an informational message")

logger.error("An error occurred!")

Output:

CustomFileHandler initialized for file: custom_log.log

Log written: Custom Log: This is an informational message

Log written: Custom Log: An error occurred!

In this case:

- The CustomFileHandler processes each log, adding "Custom Log" to the beginning of each message.

- The handler then writes the modified log entry to custom_log.log and performs any additional post-emission actions (e.g., printing a confirmation).

2. Thread-Safe Logging

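In multi-threaded applications, log messages from separate threads may become intermingled and confusing. The standard library handlers already serialize calls to emit() with an internal lock, so individual records are not corrupted. An explicit lock becomes useful when a custom handler performs several steps that must stay together. In that case, wrap the work in a lock so that records are processed one at a time, even while numerous threads are active.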

Example:

import threading
import logging

class ThreadSafeHandler(logging.StreamHandler):
    def __init__(self):
        super().__init__()  # StreamHandler writes to sys.stderr by default
        # Keep the lock in its own attribute; self.lock is already used internally by Handler
        self._emit_lock = threading.Lock()

    def emit(self, record):
        # Acquire the lock before writing to the log
        with self._emit_lock:
            # Perform thread-safe logging operations here
            super().emit(record)  # Emit the log record after acquiring the lock
        # The lock is released automatically when exiting the 'with' block

- threading.Lock: It is a synchronization primitive that allows only one thread to access a critical section of code at a time. When one thread acquires the lock, other threads attempting to acquire the same lock will be blocked until the lock is released.

- with self._emit_lock: When the with block is entered, the lock is acquired. Once the block is exited, the lock is automatically released, even if an exception is raised within the block.

- emit() method: The emit() method is responsible for writing the log record to the desired output (file, console, etc.). We override emit() to wrap the logging operation in a lock, ensuring that only one thread can log at any given moment. By calling super().emit(record), the log record is processed and written using the standard logging behavior of the base logging.StreamHandler class.

Two details matter here. The handler subclasses StreamHandler rather than logging.Handler, because the base Handler.emit() only raises NotImplementedError. The lock is also stored as _emit_lock rather than lock: assigning a non-reentrant threading.Lock to self.lock would replace the handler's own internal lock, which is already held when emit() is called, and the first log call would deadlock.

Use Case: Consider a web server handling thousands of concurrent requests. Each request might generate log entries, including user information, request details, and any errors encountered during processing. Without thread-safe logging, multiple threads could attempt to write to the same log file at the same time, leading to interleaved log messages that are difficult to interpret.

Example:

import logging

import threading

import time

# Logger setup

logger = logging.getLogger('thread_safe_logger')

logger.setLevel(logging.DEBUG)

# Use the custom ThreadSafeHandler

handler = ThreadSafeHandler()

logger.addHandler(handler)

# Function for logging in multiple threads

def log_from_thread(thread_name):
    for i in range(5):
        logger.info(f"{thread_name} logging message {i}")
        time.sleep(0.1) # Simulate some processing time

# Create multiple threads
threads = []
for i in range(3):
    thread = threading.Thread(target=log_from_thread, args=(f"Thread-{i+1}",))
    threads.append(thread)
    thread.start()

# Join threads to ensure all logging completes
for thread in threads:
    thread.join()

Explanation:

- Three threads (Thread-1, Thread-2, Thread-3) are logging messages simultaneously.

- Each thread calls the log_from_thread() function, which logs messages five times in a loop.

- With the help of ThreadSafeHandler, the log messages from all threads are written in an orderly and sequential fashion, without overlap or corruption, ensuring clear and consistent logging.

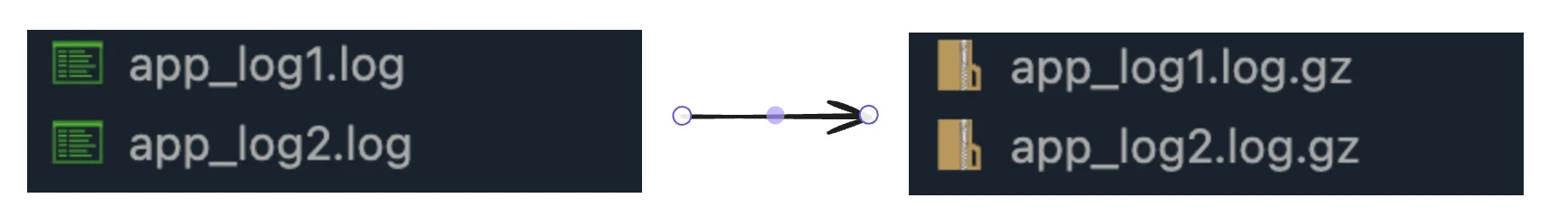

3. Log File Compression

Log files can be compressed automatically after rotation to save disk space and improve log management efficiency. Here's a basic approach for compressing log files with gzip:

Example

import gzip

import os

def compress_log(log_file):
    with open(log_file, 'rb') as f_in:
        with gzip.open(f"{log_file}.gz", 'wb') as f_out:
            f_out.writelines(f_in)
    os.remove(log_file)

Use Case: Automatic compression is especially useful in environments where large volumes of logs are generated, such as in big data processing or cloud services, where storage efficiency is key.
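To make compression happen automatically at rotation time, the rotating handlers expose two hooks, `namer` and `rotator`. The sketch below is one possible wiring, assuming a temporary directory, a hypothetical logger name, and a small `maxBytes` so that several rotations occur; `gzip_namer` and `gzip_rotator` are illustrative helper names.

```python
import gzip
import logging
import os
import shutil
import tempfile
from logging.handlers import RotatingFileHandler

def gzip_namer(default_name):
    """Name rotated files app.log.1.gz instead of app.log.1."""
    return default_name + ".gz"

def gzip_rotator(source, dest):
    """Compress the file being rotated out, then remove the uncompressed copy."""
    with open(source, "rb") as f_in, gzip.open(dest, "wb") as f_out:
        shutil.copyfileobj(f_in, f_out)
    os.remove(source)

log_dir = tempfile.mkdtemp()
handler = RotatingFileHandler(os.path.join(log_dir, "app.log"), maxBytes=200, backupCount=3)
handler.namer = gzip_namer
handler.rotator = gzip_rotator
handler.setFormatter(logging.Formatter("%(message)s"))

logger = logging.getLogger("compress_demo")  # hypothetical name
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)

for i in range(20):
    logger.info("entry %d %s", i, "x" * 40)
handler.close()

rotated = sorted(os.listdir(log_dir))
print(rotated)

# Backups remain readable through the gzip module
with gzip.open(os.path.join(log_dir, "app.log.1.gz"), "rt") as f:
    newest_backup = f.read()
```

With this wiring only the active app.log stays uncompressed; the handler still enforces backupCount on the compressed files because the namer is applied consistently to every backup name.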

Suppose we have two files named app_log1.log and app_log2.log and we want to compress both. We can do so by:

import gzip

import os

def compress_log(log_file):
    """Compress a single log file using gzip."""
    with open(log_file, 'rb') as f_in:
        # Create a compressed version of the log file with .gz extension
        with gzip.open(f"{log_file}.gz", 'wb') as f_out:
            f_out.writelines(f_in) # Write the contents to the compressed file
    os.remove(log_file) # Delete the original log file after compression
    print(f"Compressed {log_file} -> {log_file}.gz")

def compress_multiple_logs(log_files):
    """Compress multiple log files."""
    for log_file in log_files:
        compress_log(log_file)

# List of log files to compress
log_files = ['app_log1.log', 'app_log2.log']

# Compress all the log files in the list
compress_multiple_logs(log_files)

Output:

Compressed app_log1.log -> app_log1.log.gz

Compressed app_log2.log -> app_log2.log.gz

Monitoring and Analyzing Log Files with SigNoz

While Python's logging module is excellent for creating and storing logs, analyzing and extracting meaningful insights from them can be time-consuming. This is where SigNoz comes into the picture—a robust open-source observability platform that simplifies log management, allowing you to monitor and evaluate the performance of your application more effectively.

Benefits of Using SigNoz for Python Applications

SigNoz offers a range of features that make it an excellent choice for managing logs generated by Python applications:

- Centralized Log Management: Collect and store logs from multiple sources in a single, consistent location.

- Real-Time Log Analysis: Search, filter, and analyze logs as they are generated, providing immediate insight into application behaviour.

- Visualization and Dashboards: Create customized dashboards to view log data, identify patterns, and track critical metrics.

- Alerting: Create alerts to notify you when specified log patterns or thresholds are hit, allowing for proactive issue resolution.

- Integration with Traces and Metrics: Combine log data with application traces and metrics for complete observability, allowing you to diagnose and resolve issues more quickly.

Integrating SigNoz with Python Logging

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

For detailed implementation steps, refer to SigNoz's guide on logging in Python with OpenTelemetry here. This guide will provide specific instructions tailored to integrate Python logging with SigNoz's observability platform using OpenTelemetry.

Best Practices for Python Logging to File

To get the most out of Python's logging capabilities, you can follow some of the recommended practices that ensure your logs are both useful and manageable.

Structure Log Messages Consistently: Use a consistent format for log messages to make them easier to parse and analyze, particularly when working with log management tools like SigNoz.

logger.info("User %s logged in from %s", username, ip_address)

Log Exceptions with Tracebacks: When an exception occurs, always log the entire traceback to help diagnose the problem. Calling logger.exception() inside an except block logs the message at ERROR level and appends the traceback automatically, which provides useful context and makes debugging much easier.

try:
    result = 1 / 0  # Some risky operation
except Exception as e:
    logger.exception("An error occurred: %s", e)

Use Context Managers for Temporary Configurations: If you need to temporarily change the logging level or settings, consider using a context manager. This allows you to make modifications that are automatically undone when the context is exited. The logging module does not ship such a helper, so temporary_level below stands for a small context manager you write yourself that sets the level on entry and restores the previous level on exit.

with temporary_level(logger, logging.DEBUG):
    logger.debug("This is temporarily a debug message")

Balance Logging Verbosity and Performance: Logging is a great tool, but it can have an impact on performance if not managed properly. Follow these suggestions to keep a healthy balance:

- Set Appropriate Log Levels: DEBUG is reserved for detailed diagnostic information, whereas INFO, WARNING, ERROR, and CRITICAL are used for progressively more important events.

- Don't Log Sensitive Data: Maintain privacy and security by not logging sensitive information (such as passwords and personal data).

- Consider Lazy Logging for Costly Operations: Use lazy evaluation for logs that require expensive procedures, so that they are only evaluated when the log level is enabled. Passing values as arguments (logger.debug("%s", value)) instead of pre-formatting the string already defers the formatting until a record is actually emitted.
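Two of these practices, the temporary level change and lazy evaluation, can be sketched together. `temporary_level` and `expensive_summary` are hypothetical names introduced for this illustration and are not part of the logging module; `Logger.isEnabledFor` and `Logger.setLevel` are standard.

```python
import logging
from contextlib import contextmanager

@contextmanager
def temporary_level(logger, level):
    """Set the logger to `level` inside the with-block, then restore the old level."""
    previous = logger.level
    logger.setLevel(level)
    try:
        yield logger
    finally:
        logger.setLevel(previous)

logger = logging.getLogger("practices_demo")  # hypothetical name
logger.setLevel(logging.WARNING)
logger.propagate = False
logger.addHandler(logging.NullHandler())      # keep the demo silent

calls = []

def expensive_summary():
    """Stand-in for a costly computation that is only needed for debugging."""
    calls.append(1)
    return "summary"

# Skipped: DEBUG is disabled, so the expensive call never runs
if logger.isEnabledFor(logging.DEBUG):
    logger.debug("State: %s", expensive_summary())

# Inside the block DEBUG is enabled, so the call runs once
with temporary_level(logger, logging.DEBUG):
    if logger.isEnabledFor(logging.DEBUG):
        logger.debug("State: %s", expensive_summary())

print(len(calls), logger.level == logging.WARNING)
```

The try/finally inside the context manager restores the previous level even if the code in the with-block raises.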

Key Takeaways

- Python's logging module has powerful facilities for creating and maintaining log files, allowing you to log messages at varying severity levels and store them efficiently.

- FileHandler is required for writing logs to files, with the ability to append to existing logs or generate new ones, ensuring that your logs are always kept securely.

- Implementing log rotation, either size-based with RotatingFileHandler or time-based with TimedRotatingFileHandler, is critical for managing log file sizes and organizing historical data.

- Using solutions like SigNoz can help you take log management to the next level by providing centralized log management, real-time analysis, and seamless integration with metrics and traces for complete observability.

- Following recommended practices, such as properly constructing log messages, logging exceptions with tracebacks, and balancing verbosity with performance, guarantees that your logging strategy is both effective and maintainable.

FAQs

How do I ensure my Python script creates a new log file if it doesn't exist?

You can use FileHandler with the default mode ('a'). It will create a new file if it doesn't exist or append to an existing one:

handler = logging.FileHandler('app.log')

Can I append to an existing log file without overwriting its contents?

Yes, the default mode for FileHandler is append ('a'). It will automatically append to an existing file without overwriting it:

handler = logging.FileHandler('existing_log.log') # 'a' mode is default

What's the best way to implement log rotation in Python?

You can use RotatingFileHandler for size-based rotation or TimedRotatingFileHandler for time-based rotation:

handler = RotatingFileHandler('app.log', maxBytes=1024*1024, backupCount=5)

# or

handler = TimedRotatingFileHandler('app.log', when='midnight', backupCount=7)

How can I handle logging in multi-threaded or multi-process Python applications?

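The standard handlers already serialize writes within a single process, so ordinary multi-threaded logging works without extra code. If a custom handler performs additional steps that must not interleave, you can add your own lock. For example:

import logging
import threading

class ThreadSafeHandler(logging.StreamHandler):
    def __init__(self):
        super().__init__()
        # Separate attribute so the handler's own internal lock (self.lock) is left untouched
        self._emit_lock = threading.Lock()

    def emit(self, record):
        with self._emit_lock:
            # Perform thread-safe logging operations here
            super().emit(record)

For multi-process applications, writing to a single file from several processes is not supported by the standard file handlers. A common approach is to send records through a queue (QueueHandler and QueueListener from logging.handlers) or a socket to one process that owns the file.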
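A queue-based setup is another option for concurrent logging. The sketch below uses `QueueHandler` and `QueueListener` with threads and a `queue.Queue`; for separate processes a `multiprocessing.Queue` would typically take its place, which is an assumption not exercised here. The logger name, file location, and `worker` function are hypothetical.

```python
import logging
import os
import queue
import tempfile
import threading
from logging.handlers import QueueHandler, QueueListener

log_path = os.path.join(tempfile.mkdtemp(), "queued.log")

# Only the listener thread touches the file
log_queue = queue.Queue()
file_handler = logging.FileHandler(log_path)
file_handler.setFormatter(logging.Formatter("%(threadName)s - %(message)s"))
listener = QueueListener(log_queue, file_handler)
listener.start()

# Worker threads only put records on the queue
logger = logging.getLogger("queue_demo")  # hypothetical name
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(QueueHandler(log_queue))

def worker(n):
    for i in range(3):
        logger.info("worker %d message %d", n, i)

threads = [threading.Thread(target=worker, args=(n,)) for n in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()

listener.stop()        # processes everything still queued, then stops the thread
file_handler.close()

with open(log_path) as f:
    lines = f.read().splitlines()
print(len(lines))
```

Because a single listener writes to the file, records cannot interleave, and the logging call in each worker returns as soon as the record is queued.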