Optimising OpenTelemetry Pipelines to Cut Observability Costs and Data Noise

Fat bills from observability vendors and piles of low-insight telemetry data have become a very common problem.

This often leaves teams having to explain the lack of clear ROI, despite the growing costs. If you’re using OpenTelemetry to record your observability data, there are some practical methods you can apply to keep those costs from piling up.

The intention of this blog is to share some ideas and inspiration on how you can optimise your OTel Collector to reduce observability costs and dial down telemetry data noise, without losing the signals that matter.

What is Observability Data Noise?

Observability is the practice of getting X-ray vision into the logs, metrics and traces generated by your application. When things break, as they inevitably do, finding the right information in the shortest amount of time is crucial. Most observability backends also charge based on the amount of data ingested, which is another reason to be smart about reducing telemetry noise. So unnecessary telemetry data not only buries critical signals, it also inflates costs. This unnecessary telemetry data is collectively termed “noise”.

Here is a quick checklist for deciding whether a piece of collected telemetry, call it ‘X’, is noise:

- Can you troubleshoot effectively without ‘X’? If yes, it might be noise.

- Is ‘X’ valuable, but extremely common? If yes, it is not noise, but techniques like sampling can cut its volume.

- Would you ever search, alert, or dashboard based on ‘X’? If the answer is no, it's likely noise.

Now let’s understand how rising observability costs feed the growing frustration over a lack of return on investment (ROI).

Rising Observability Costs and No ROI

Picture this. You are the head of DevOps for your org, tasked with setting up observability for your growing infrastructure. You come across the auto-instrumentation offered by OpenTelemetry and decide to go ahead with it.

So, you take the fastest route:

- Auto-instrument your services with OpenTelemetry.

- Pipe all telemetry (logs, metrics and traces) straight into vendor X.

Everything works like a charm. You can see alerts and dashboards painting a beautiful picture. But this honeymoon is short-lived.

Soon, the org is hit with a fat bill from the vendor ‘X’, and engineers are complaining that they can't find the right signal when incidents happen. You are paying more, but getting less value, and leadership starts questioning the ROI. This is the classic observability trap, where collecting everything feels right at first, but soon turns into a costly mess of observability noise.

If you have fallen into this trap, or are likely to, we have some solid tips that could save you!

Reducing Observability Data Noise

Let’s look at some solid and proven techniques that have been adopted widely to reduce the clutter in the observability pipeline.

Filtering

Filtering is the process of completely removing specific types of data based on a set of rules. It is the first step in cutting observability noise: drop the logs, spans and metrics that you are certain you don’t need.

OpenTelemetry provides a filterprocessor that allows dropping spans, span events, metrics, datapoints, and logs in the collector. This processor uses the OpenTelemetry Transformation Language (OTTL) to match conditions against incoming telemetry and drop data as needed. When multiple conditions are listed, they are ORed: telemetry that matches any one of them is dropped.

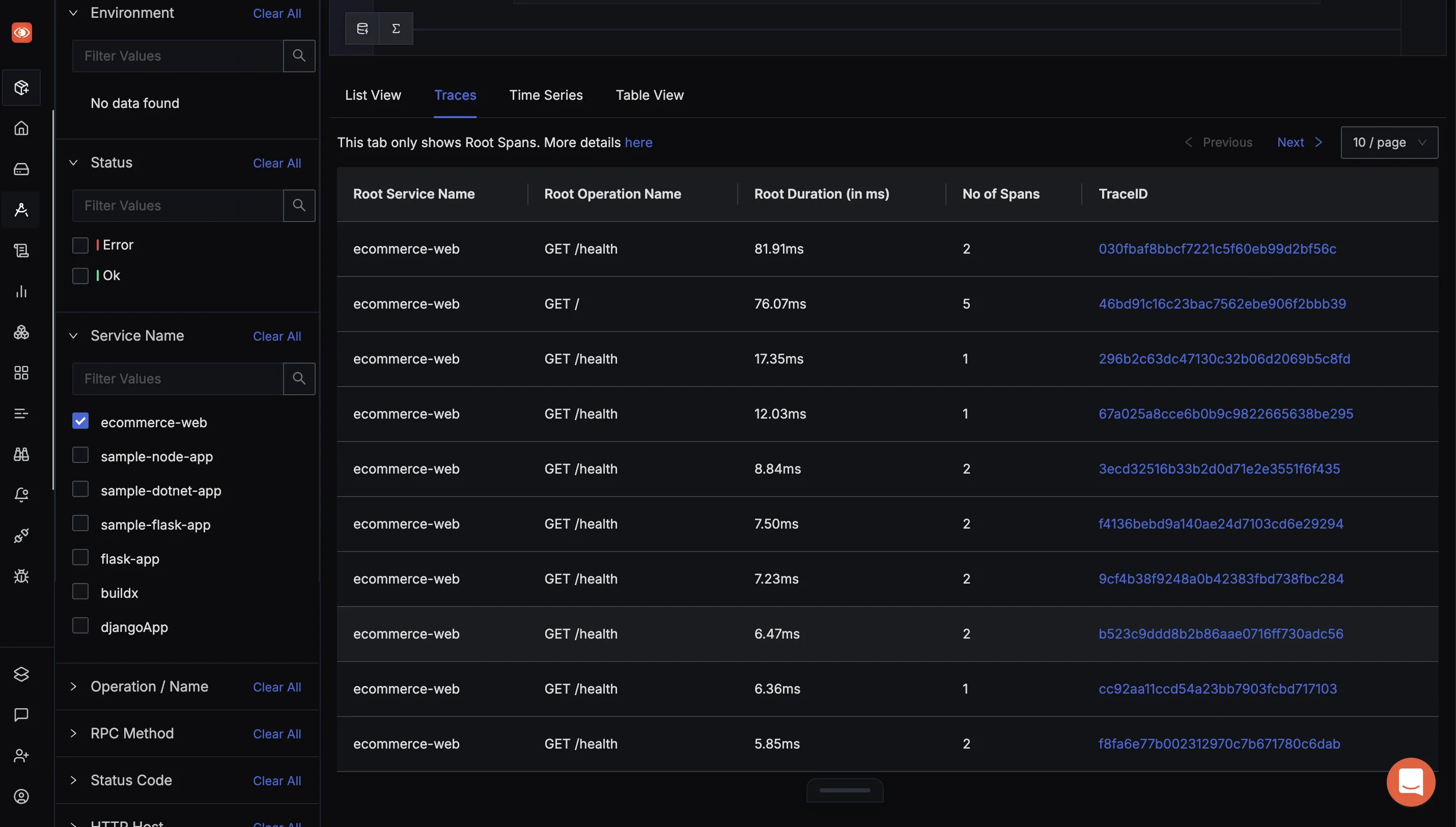

Two of the most common candidates for filtering are the telemetry generated by periodic health checks and by synthetic monitoring (if you use it).

Let’s look at an example.

Microservice architectures typically run periodic health checks to see whether a given service is up. When instrumented, these frequent checks generate lots of traces and logs that add no value. Hence, it makes sense to reduce noise and lower costs by filtering out the traces for these health checks early in the telemetry pipeline.

Let’s add a filter to drop the health-check HTTP calls in our OTel collector.

    processors:
      # other processors, if any
      filter/healthcheck:
        traces:
          span:
            # Drop every span whose HTTP target is the health-check endpoint.
            - 'attributes["http.target"] == "/health"'

Don’t forget to add this to the list of processors in the pipeline.

    service:
      pipelines:
        traces:
          receivers: [otlp]
          processors: [filter/healthcheck]
          exporters: [debug, otlp]

After restarting the collector, we will no longer see traces from the health check calls. You can explore many more filter examples in the OTel GitHub docs for filterprocessor.
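Because the conditions in a filter are ORed, a single processor can cover several noisy endpoints at once. The sketch below is a minimal illustration rather than a drop-in config: the `/ready` path and the `synthetic` attribute are hypothetical names chosen for the example, so substitute whatever your services and monitoring tools actually emit.

```yaml
processors:
  filter/noise:
    # Log condition errors and carry on, instead of failing on them.
    error_mode: ignore
    traces:
      span:
        # A span is dropped if ANY one of these conditions is true.
        - 'attributes["http.target"] == "/health"'
        - 'attributes["http.target"] == "/ready"'   # hypothetical readiness path
        - 'attributes["synthetic"] == "true"'       # hypothetical marker set by a synthetic-monitoring tool
```

As with the health-check filter, `filter/noise` only takes effect once it is listed under `processors` in the traces pipeline.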

Dropping Logs based on Severity Level

In high-throughput systems, logs at levels like DEBUG or INFO can generate massive volumes of data. While these logs are invaluable during development or load testing, they usually provide limited value in production and are best left out there unless absolutely necessary. Filtering out lower-severity logs according to environment and need goes a long way towards reducing the cost of log ingestion.

The table below is a rough guide that you can follow.

| Environment | Recommended Log Level | Rationale |
|---|---|---|
| Development | DEBUG or TRACE | Full verbosity helps developers understand internal behavior during feature dev. |
| Testing / QA | INFO | Ensures visibility into application behavior without flooding the logs. |
| Staging | INFO (with scoped DEBUG) | Mirrors production closely; enable DEBUG selectively to troubleshoot issues. |
| Production | INFO or WARN or ERROR | Focuses on actionable issues, reducing log volume and observability costs. |

Always use your own judgement and take your situation into consideration before deciding to drop logs of a particular level.

Let’s see what this looks like in our OTel collector. This example drops the DEBUG and INFO logs in the telemetry pipeline.

    processors:
      filter/drop-lowsevlogs:
        logs:
          log_record:
            # severity_text must match exactly what your services emit.
            - 'severity_text == "DEBUG"'
            - 'severity_text == "INFO"'

Make sure to plug the filter in the processor list of pipelines as well.

    service:
      pipelines:
        logs:
          receivers: [otlp]
          processors: [filter/drop-lowsevlogs]
          exporters: [debug, otlp]

After restarting the collector, logs at the INFO and DEBUG levels no longer reach the backend.
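Matching on `severity_text` only works when the string is exactly what your services emit, so a value such as `info` or `Info` would slip past the filter. A sketch of an alternative, assuming your log sources populate the numeric severity field, is to compare `severity_number` against a threshold. One caveat to verify against your own data: records that carry no severity number at all also fall below the threshold and would be dropped.

```yaml
processors:
  filter/drop-lowsevlogs:
    logs:
      log_record:
        # Drop every record below WARN, i.e. TRACE, DEBUG and INFO.
        - 'severity_number < SEVERITY_NUMBER_WARN'
```

This keeps the rule to a single condition and makes it straightforward to raise or lower the threshold per environment.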

Sampling

Sampling differs from filtering in its goal: instead of removing whole categories of data, it keeps a representative subset, reducing the overall data volume that the pipeline must process. Sampling, like filtering, should happen early in the pipeline to avoid wasting time processing telemetry that isn’t going to be exported.

Sampling works because a small set of telemetry data is often highly representative of a larger set. Additionally, the more data you generate, the smaller the fraction you need for a representative sample. For high-volume systems, it is quite common for a sampling rate of 1% or lower to accurately represent the other 99% of data.

Sampling is a good fit for an operation that is valuable to monitor, but also extremely common. For example, the GET request for the home page of a website.

Sampling strategies are usually classified by when the sampling decision is made: as early as possible (head sampling), or after considering all or most of the spans in a trace (tail sampling).

Let’s set up tail-based sampling in our OTel collector for the GET requests for the home page of a website.

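    processors:
      tail_sampling:
        decision_wait: 10s
        num_traces: 100
        expected_new_traces_per_sec: 10
        decision_cache:
          sampled_cache_size: 100
          non_sampled_cache_size: 10000
        policies:
          # Keep roughly 10% of homepage traces (the percentage is illustrative).
          # A string_attribute policy on its own would keep every matching trace.
          # Traces that match no policy are dropped, so add further policies
          # for anything else you want to keep.
          - name: homepage-sampling
            type: and
            and:
              and_sub_policy:
                - name: homepage-route
                  type: string_attribute
                  string_attribute: {key: http.target, values: [/home]}
                - name: homepage-ratio
                  type: probabilistic
                  probabilistic: {sampling_percentage: 10}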

Make sure to add the sampler in the processor list of pipelines as well.

    service:
      pipelines:
        traces:
          receivers: [otlp]
          processors: [tail_sampling]
          exporters: [debug, otlp]

After restarting the collector, traces generated by GET requests to the homepage are subject to tail sampling.
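When you don't need to inspect a whole trace before deciding, a simpler option is the collector's `probabilistic_sampler` processor, which decides from the trace ID without waiting for the trace to complete. The sketch below is a minimal example, assuming a plain OTLP pipeline; the 1% rate is illustrative and echoes the figure mentioned earlier, not a recommendation for your workload.

```yaml
processors:
  probabilistic_sampler:
    # Keep roughly 1 in 100 traces; tune this to your traffic volume.
    sampling_percentage: 1

service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [probabilistic_sampler]
      exporters: [otlp]
```

The trade-off is that this sampler cannot favour interesting traces, such as those with errors, because the decision is made before the outcome of the request is known. That is the case tail sampling is designed for.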

Protecting Observability Data Costs From Exponential Rise

Systems commonly experience unanticipated telemetry spikes: sudden application errors (where you miss that one edge case!), traffic increases, or large-scale log generation during a release. These surges can use up your daily data limit within a short period, leaving the system unable to ingest further data for the rest of the day. This can cause critical gaps in monitoring, lead to missed alerts, and disrupt the ability to troubleshoot effectively.

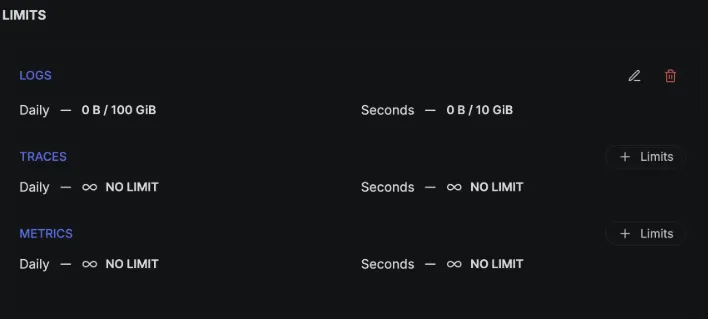

Most observability backends today offer some kind of ingestion guardrails and data safety features that help control telemetry ingestion volume and reduce costs. For example, SigNoz has an Ingest Guard feature that lets you set data ingestion limits based on the type of signal, engineering team, environment, and so on. It also offers per-second limits, which you can configure to absorb sudden data spikes so that unexpected surges don’t exhaust daily quotas too quickly.

Features in other observability backends, such as Splunk’s Ingest Actions and Datadog’s Logging Without Limits, also help guard against these surges to a reasonable extent.

Key Takeaways

- Filter out low-value telemetry like health checks and synthetic traffic early in the pipeline to reduce noise and cut down ingestion costs.

- Adjust log levels by environment; keep DEBUG and INFO in dev, but focus on WARN and ERROR in production to avoid paying for unnecessary logs.

- Use smart sampling to retain representative traces and logs without overwhelming your backend, especially for high-volume or repetitive operations.

- Set up ingestion guardrails like SigNoz Ingest Guard or Datadog Logging Without Limits to handle sudden data surges and protect yourself from surprise bills.

By applying these strategies, you can build a leaner, more cost-efficient observability setup that focuses on the signals that matter, cuts through the noise, and delivers insights without blowing up your budget.