Top 5 ClickHouse Alternatives for High-Performance Analytics

ClickHouse has gained a reputation for delivering ultra-fast analytical queries at scale, thanks to its columnar storage format, vectorized execution engine, and efficient compression. These features make it a powerhouse for aggregations over massive datasets, real-time analytics pipelines, and event tracking. However, certain limitations—such as more complex query scenarios, joining multiple large tables, or the need for managed cloud offerings—often prompt teams to explore other high-performance databases.

In this article, we look at what ClickHouse is, what it does well, and where it struggles, then explore robust alternatives such as Google BigQuery, Snowflake, Amazon Redshift, Databricks, and StarRocks. For each one, we cover its architecture, typical workloads, concurrency and scalability considerations, and the real-world scenarios in which it shines or falters.

What is ClickHouse?

ClickHouse is an open-source, columnar database management system (DBMS) designed for real-time analytics and Online Analytical Processing (OLAP) workloads. Originally developed at Yandex, a Russian technology company, ClickHouse was built to handle massive datasets and deliver blazing-fast query performance.

It is widely used for applications that require rapid data analysis, such as business intelligence dashboards, log processing, network monitoring, and real-time analytics pipelines.

Why Does ClickHouse Matter?

ClickHouse matters because it can process and analyze massive volumes of data with low latency, which makes it a popular choice for businesses that prioritize speed and scalability. ClickHouse offers:

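- Columnar Storage: One of the major features of ClickHouse is its columnar storage architecture. Unlike traditional row-based databases, which store data row by row, ClickHouse organizes data column by column. A query reads only the columns it references, and similar values stored together compress well, which sharply reduces disk I/O for analytical scans.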

- Vectorized Query Execution: ClickHouse further accelerates performance with its vectorized query execution engine. This approach processes data in batches instead of handling one record at a time, leveraging modern CPU architectures to execute operations in parallel.

- Real-Time Data Ingestion: ClickHouse is designed to handle real-time data ingestion, making it suitable for applications that require continuous data updates, such as monitoring systems, ad-tracking platforms, and IoT devices.
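The toy Python sketch below makes the first two ideas concrete. It is not ClickHouse's actual storage code. It contrasts a row layout with a column layout: the columnar version touches only the one column the query needs and walks it in fixed-size batches, which is the idea a vectorized engine applies using CPU SIMD instructions. The event data, column names, and batch size are illustrative assumptions.

```python
from array import array

# Three hypothetical events: (user_id, event_type, duration_ms)
rows = [
    (1, "click", 120),
    (2, "view", 45),
    (3, "click", 300),
]

# Column-oriented layout: one contiguous array (or list) per column.
columns = {
    "user_id": array("q", [r[0] for r in rows]),
    "event": [r[1] for r in rows],
    "duration_ms": array("q", [r[2] for r in rows]),
}

def total_duration_rows(rows):
    """Row layout: every full record is visited to read a single field."""
    return sum(record[2] for record in rows)

def total_duration_columnar(columns, batch_size=2):
    """Column layout: read only duration_ms, processing it batch by batch."""
    col = columns["duration_ms"]
    total = 0
    for start in range(0, len(col), batch_size):
        batch = col[start:start + batch_size]  # a slice of one column
        total += sum(batch)                    # one aggregate call per batch
    return total

print(total_duration_rows(rows))         # 465
print(total_duration_columnar(columns))  # 465
```

Both functions return the same answer. On a real table with dozens of columns, only the columnar path avoids reading the data it does not need.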

Common Limitations of ClickHouse

ClickHouse comes with certain limitations that developers and businesses should consider before adopting it:

- Limited Transaction Support (ACID Compliance): ClickHouse is designed as an OLAP (Online Analytical Processing) database, prioritizing fast reads and analytical queries over transactional integrity. Unlike traditional relational databases such as PostgreSQL or MySQL, ClickHouse does not offer full ACID compliance (Atomicity, Consistency, Isolation, Durability).

- Complex Joins and Query Limitations: One of ClickHouse’s key strengths is its ability to process analytical queries quickly, especially when dealing with large datasets. However, its performance can drop significantly when queries involve multi-table joins or nested subqueries.

- Operational Complexity and Cluster Management: Setting up and managing a ClickHouse cluster can be challenging, especially for teams without prior experience in distributed databases.
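Part of the join limitation comes from how hash joins work. ClickHouse's default join strategy is hash-based. It builds an in-memory hash table from the right-hand table and then streams the left-hand table through it, which is why ClickHouse's documentation has long advised putting the smaller table on the right. The sketch below is a simplified, single-threaded Python model of that build/probe technique, not ClickHouse's implementation. The `events` and `users` tables are hypothetical.

```python
from collections import defaultdict

def hash_join(left, right, left_key, right_key):
    """Inner-join two lists of dicts with a build/probe hash join."""
    # Build phase: the whole right-hand table goes into an in-memory hash
    # table, so memory use grows with the size of the right side.
    table = defaultdict(list)
    for row in right:
        table[row[right_key]].append(row)

    # Probe phase: stream the left side and look up each key.
    result = []
    for row in left:
        for match in table.get(row[left_key], []):
            result.append({**row, **match})
    return result

events = [
    {"user_id": 1, "event": "click"},
    {"user_id": 2, "event": "view"},
    {"user_id": 1, "event": "view"},
]
users = [{"id": 1, "country": "DE"}, {"id": 3, "country": "US"}]

joined = hash_join(events, users, left_key="user_id", right_key="id")
print(len(joined))  # 2
```

If `users` held billions of rows, the build phase alone would need to fit them in memory. That is the scenario where multi-table joins start to hurt.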

Why Consider Alternatives to ClickHouse?

ClickHouse is widely recognized for its exceptional performance in analytical workloads and real-time data processing, but there are scenarios where alternative solutions may better suit specific needs. Organizations dealing with complex data pipelines, stringent compliance requirements, or a need for seamless cloud integration often explore other options. Here is why you might consider an alternative:

- Simplified Solutions: Many teams prioritize simplicity over granular control. ClickHouse’s self-managed deployment model, while powerful, demands significant expertise in cluster configuration, performance tuning, and fault tolerance. This can pose a challenge for organizations with limited DevOps resources or smaller teams.

- Complex Query Workloads: Although ClickHouse is optimized for fast analytical queries, its handling of multi-table joins, advanced window functions, and nested queries can introduce performance bottlenecks.

- Seamless Integration with Existing Data Ecosystems: Modern data infrastructures often rely on interconnected tools for ETL (Extract, Transform, Load), data visualization, and reporting. While ClickHouse supports many integrations, setting up and maintaining these connections is not always straightforward.

Importance of Choosing the Right Database

- Performance: Performance defines how quickly a database can process queries, retrieve results, and handle large-scale data workloads. High-performance databases are optimized for speed, enabling real-time analytics and insights.

- Total Cost of Ownership (TCO): TCO is a critical factor, especially for startups and mid-sized businesses. Database prices can vary widely, from open-source solutions with minimal upfront costs to fully managed cloud services with pay-as-you-go pricing.

- Scalability: As businesses grow, so do their data volumes and processing requirements. A database must meet current needs and effortlessly scale as the workload increases.

Now, let’s look at the top five ClickHouse alternatives for high-performance analytics.

1. Google BigQuery

Google BigQuery is a fully managed, serverless data warehouse designed for high-performance analytics. Built on Google Cloud Platform (GCP), it eliminates the need for database administration, infrastructure provisioning, and maintenance, allowing developers to focus entirely on analyzing data.

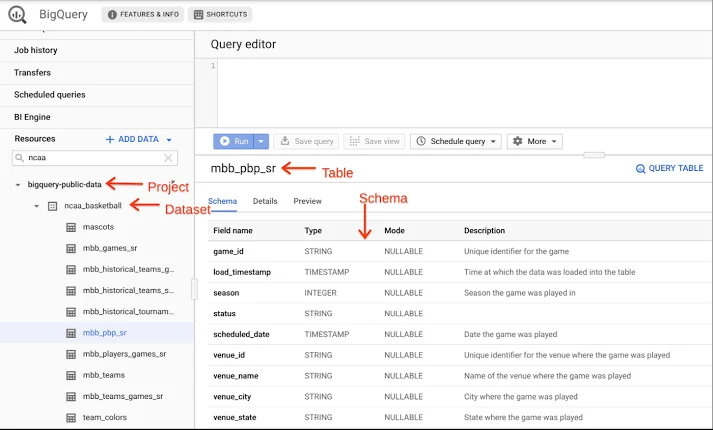

Google BigQuery showing the overview section

Key Features of Google BigQuery

- Pay-Per-Query Pricing Model: With on-demand pricing, BigQuery bills you by the volume of data each query scans rather than charging a fixed infrastructure cost. Capacity-based (slot) pricing is also available for predictable workloads.

- Machine Learning Integration with BigQuery ML: BigQuery ML lets developers train and deploy machine learning models directly with SQL queries, without exporting data or managing separate ML infrastructure. This lowers the barrier for SQL-fluent analysts.

- Federated Queries: BigQuery can analyze data stored outside its own storage, including:
  - Cloud Storage files (CSV, JSON, Avro, Parquet)
  - Google Sheets
  - Cloud SQL and Spanner databases (through federated queries)
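On-demand billing is driven by bytes scanned, so estimating a query's cost is simple arithmetic. The sketch below assumes you already know how many bytes a query will scan. The google-cloud-bigquery client can report this without running the query, through a dry run (`QueryJobConfig(dry_run=True)` and the job's `total_bytes_processed`). The per-TiB price and free-tier allowance are deliberately left as inputs rather than hardcoded, since they vary by region and change over time.

```python
TIB = 2 ** 40  # BigQuery on-demand pricing is quoted per TiB (binary terabytes)

def estimate_on_demand_cost(bytes_scanned: int,
                            price_per_tib: float,
                            free_tib_remaining: float = 0.0) -> float:
    """Estimate on-demand query cost from bytes scanned.

    price_per_tib and free_tib_remaining are inputs, not real prices:
    look them up for your region before relying on the result.
    """
    if bytes_scanned < 0:
        raise ValueError("bytes_scanned must be non-negative")
    tib_scanned = bytes_scanned / TIB
    billable_tib = max(0.0, tib_scanned - free_tib_remaining)
    return billable_tib * price_per_tib

# Hypothetical month: 5 TiB scanned, 1 TiB of free tier left, $5 per TiB.
print(estimate_on_demand_cost(5 * TIB, price_per_tib=5.0, free_tib_remaining=1.0))  # 20.0
```

BigQuery stores data by column, so selecting fewer columns and filtering on partitioned columns both reduce bytes scanned, and therefore cost.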

Performance Comparison of Google BigQuery with ClickHouse

| Factors | Google BigQuery | ClickHouse |
|---|---|---|
| Streaming Ingestion | BigQuery supports near-real-time ingestion through its streaming APIs. Streamed rows typically become available for analysis within seconds. | ClickHouse ingests data through batch inserts or streaming integrations such as its Kafka and RabbitMQ table engines. It is designed for high-throughput ingestion and can handle millions of rows per second. |
| Concurrency Metrics | BigQuery dynamically scales to handle thousands of concurrent queries by allocating compute slots on demand. It maintains sub-second p95 latency for smaller queries and 2–5 seconds for complex queries. | ClickHouse supports hundreds to thousands of concurrent queries, leveraging vectorized query execution to maintain sub-50ms p95 latency for analytical workloads. |
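ClickHouse's throughput depends heavily on batching. Its documentation recommends large, infrequent INSERTs over many small ones, because each INSERT into a MergeTree table creates a new data part on disk that background merges must later combine. A minimal, library-agnostic way to respect that from Python is to group rows before sending them. The 50,000-row default and the commented-out `insert_into_clickhouse` call are assumptions, not tuned values or a specific client API.

```python
from itertools import islice
from typing import Iterable, Iterator, List, Tuple

def batched_rows(rows: Iterable[Tuple], batch_size: int = 50_000) -> Iterator[List[Tuple]]:
    """Yield consecutive lists of at most batch_size rows from any iterable."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    it = iter(rows)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch

# Usage sketch: insert_into_clickhouse is a hypothetical stand-in for
# whatever client call you use (one INSERT per batch, not per row).
# for batch in batched_rows(event_stream):
#     insert_into_clickhouse(batch)
```

On Python 3.12 and later, `itertools.batched` does the same job, yielding tuples instead of lists.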

Use Cases for Choosing Google BigQuery

Ad-hoc Analytics with Variable Workloads

BigQuery is particularly well-suited for ad-hoc analytics, where query demands can be unpredictable and vary significantly over time.

- Example Use Case: A marketing team needs to analyze user behavior data from a recent campaign. Using BigQuery, they can run SQL queries on petabyte-scale datasets without setting up infrastructure. When the campaign ends, they stop querying and incur no further query charges (stored data is still billed separately).

Machine Learning Workload

BigQuery seamlessly integrates with machine learning tools, making it an excellent choice for building and deploying ML models directly within the data warehouse.

- Example Use Case: A retail company uses BigQuery ML to predict sales trends based on historical data. They build and test a regression model in SQL, deploy it for production predictions, and integrate the results with their dashboards for real-time insights.

GCP-Native Environments

BigQuery is deeply integrated into the Google Cloud Platform (GCP) ecosystem, making it the ideal choice for organizations already leveraging GCP services.

- Example Use Case: A financial services company builds its data warehouse entirely on GCP. They ingest data from Google Cloud Storage into BigQuery, process transformations using Dataflow, and visualize insights through Looker. This end-to-end workflow minimizes compatibility issues and reduces development time.

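Google BigQuery Configuration Example (a jobs.insert request body; dataset.events is a placeholder)

```json
{
  "configuration": {
    "query": {
      "query": "SELECT COUNT(*) FROM dataset.events",
      "useLegacySql": false,
      "useQueryCache": true,
      "priority": "INTERACTIVE"
    },
    "jobTimeoutMs": "600000"
  }
}
```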

BigQuery Query Example:

```sql
-- BigQuery (assumes `timestamp` is a TIMESTAMP column)
SELECT TIMESTAMP_TRUNC(timestamp, MONTH) AS month,
       COUNT(*) AS events
FROM dataset.events
GROUP BY month
```

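BigQuery Data Ingestion Example:

```python
# BigQuery streaming insert (google-cloud-bigquery)
from google.cloud import bigquery

def stream_data(rows):
    """Stream a list of JSON-serializable dicts into a BigQuery table."""
    client = bigquery.Client()  # uses Application Default Credentials
    # "dataset.events" is a placeholder table ID
    errors = client.insert_rows_json("dataset.events", rows)
    return errors  # an empty list means every row was accepted
```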

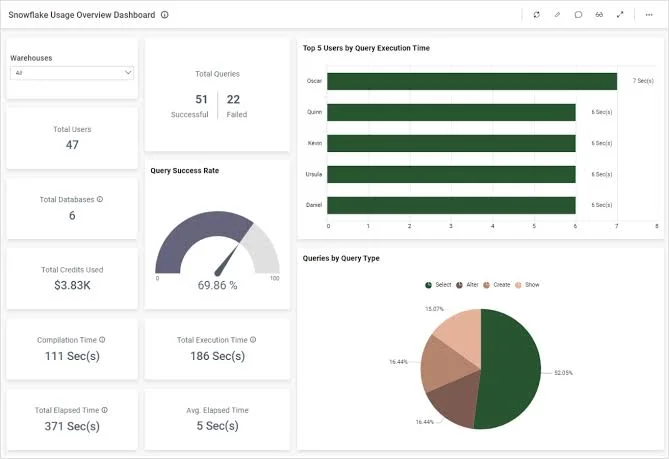

2. Snowflake

Snowflake is a cloud-based data platform that simplifies data storage, processing, and analysis. It offers a fully managed service that eliminates the need for hardware provisioning, software configuration, and manual optimization.

Key Features of Snowflake

- Secure Data Sharing: Snowflake’s Secure Data Sharing feature simplifies collaboration across teams and organizations without the need for complex ETL (extract, transform, and load) pipelines or data duplication.

- Multi-Cloud Support: Snowflake runs on AWS, Microsoft Azure, and Google Cloud. This gives teams flexibility in where they deploy and options for cross-cloud redundancy.

- Elastic Scalability: Warehouses can be resized without downtime, and multi-cluster warehouses can automatically add or remove clusters as demand changes.

Performance Comparisons Against ClickHouse

| Factors | Snowflake | ClickHouse |
|---|---|---|
| Concurrency & Auto-Scaling | Snowflake leverages a multi-cluster architecture that automatically scales computing resources based on query load. | ClickHouse typically requires manual configuration for scaling, which can add complexity for teams unfamiliar with cluster management. |
| Aggregation & Complex Queries | Snowflake is purpose-built for complex analytical queries, especially when dealing with structured and semi-structured data. | ClickHouse, on the other hand, is optimized for high-speed analytical processing but is better suited for simpler aggregation queries. |
| Concurrency Metrics | Snowflake supports hundreds of concurrent queries through multi-cluster virtual warehouses that scale linearly. It delivers consistent p99 latency under 2 seconds by dynamically adding clusters during load spikes. | ClickHouse supports hundreds to thousands of concurrent queries, leveraging vectorized query execution to maintain sub-50ms p95 latency for analytical workloads. |

Use Cases for Choosing Snowflake

- Multi-Tenant Data Platforms

Snowflake is ideal for organizations that frequently share data with partners or internal teams. Its secure data-sharing features make it easy to grant access without duplicating data.

- Example Use Case: A SaaS provider uses Snowflake to create isolated workspaces for multiple clients, enabling each client to securely access their own datasets while leveraging shared infrastructure.

- Compliance and Governance

Snowflake offers built-in governance features for data security, lineage tracking, and auditing, ensuring compliance with regulatory standards.

- Example Use Case: A healthcare provider uses Snowflake to store patient records, enforcing strict access controls and audit trails to comply with HIPAA (Health Insurance Portability and Accountability Act) regulations.

Snowflake Warehouse Configuration

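```sql
-- Snowflake warehouse configuration (analytics_wh is a placeholder name)
CREATE WAREHOUSE IF NOT EXISTS analytics_wh
  WAREHOUSE_SIZE = 'X-LARGE'
  AUTO_SUSPEND = 300            -- suspend after 300 seconds of inactivity
  AUTO_RESUME = TRUE            -- resume automatically when a query arrives
  SCALING_POLICY = 'STANDARD';  -- used by multi-cluster warehouses (Enterprise Edition)
```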
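The rough credit estimate below shows what the auto-suspend setting means for cost over one resume/suspend cycle. It assumes Snowflake's per-second billing with a 60-second minimum each time a warehouse resumes. It also assumes standard-warehouse rates of 1, 2, 4, 8, and 16 credits per hour for X-Small through X-Large, so verify these against current Snowflake pricing for your account and warehouse type. As a simplification, the warehouse is treated as idle for exactly the auto-suspend interval after the last query.

```python
# Standard-warehouse credit rates per hour (verify against current Snowflake pricing).
CREDITS_PER_HOUR = {"X-SMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8, "X-LARGE": 16}

def credits_for_cycle(size: str, busy_seconds: float, auto_suspend_s: int = 300) -> float:
    """Credits for one resume -> queries -> idle -> suspend cycle.

    Billed time is the busy time plus the idle tail before auto-suspend,
    with a 60-second minimum charged each time the warehouse resumes.
    """
    billed_seconds = max(60.0, busy_seconds + auto_suspend_s)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600

print(credits_for_cycle("X-LARGE", busy_seconds=600))  # 4.0
```

Take an X-Large warehouse with a 300-second auto-suspend. Ten minutes of queries plus the idle tail bills 900 seconds, or 16 × 900 / 3600 = 4 credits. Lowering the auto-suspend interval shortens that idle tail, but the warehouse loses its local cache sooner.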

Snowflake Query Example:

```sql
-- Snowflake
SELECT DATE_TRUNC('MONTH', timestamp) AS month,
       COUNT(*) AS events
FROM events
GROUP BY month
```

Snowflake Data Ingestion Example:

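```python
# Snowflake data ingestion (using snowflake-connector-python)
import snowflake.connector

def insert_data(rows):
    """Insert (timestamp, event) tuples into the events table."""
    conn = snowflake.connector.connect(
        user="USER", password="PASSWORD", account="ACCOUNT",
        warehouse="WAREHOUSE", database="DATABASE", schema="SCHEMA",  # placeholders
    )
    try:
        cursor = conn.cursor()
        # The connector's default paramstyle is pyformat, so %s placeholders work
        cursor.executemany("INSERT INTO events VALUES (%s, %s)", rows)
        cursor.close()
    finally:
        conn.close()
```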

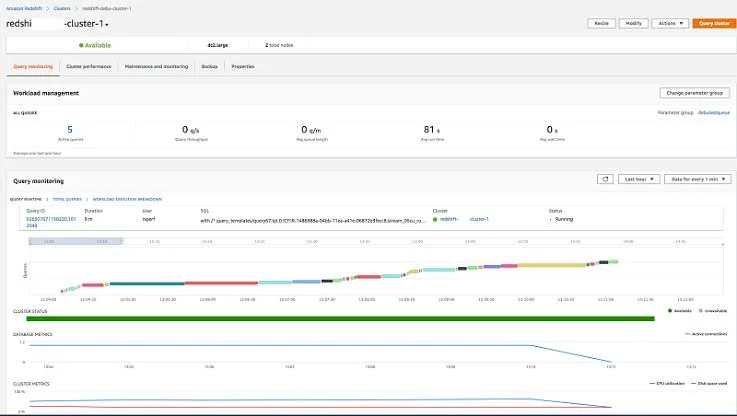

3. Amazon Redshift

Amazon Redshift is a fully managed data warehouse service provided by Amazon Web Services (AWS). It is designed to handle large-scale data analytics and business intelligence (BI) workloads efficiently. Redshift is optimized for online analytical processing (OLAP), enabling organizations to analyze massive datasets and extract insights quickly.

Key Features of Amazon Redshift

- Columnar Storage for High Performance: Amazon Redshift employs a column-oriented architecture instead of row-based storage.

- Massively Parallel Processing (MPP): Redshift uses an MPP architecture that distributes tasks across multiple nodes in a cluster. This speeds up complex queries and lets it scale to petabytes of data.

- Distribution Styles and Sort Keys: Distribution styles (AUTO, EVEN, KEY, and ALL) control how a table’s rows are spread across nodes, so rows that are joined together can sit on the same node. Sort keys control the on-disk order of rows, letting Redshift skip blocks whose value ranges fall outside a query’s filter.
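Redshift's KEY distribution style exists for co-location. If two tables are distributed on the same join column, matching rows end up on the same slice, and the join needs no network shuffle. The toy model below illustrates that with an arbitrary stand-in hash (`zlib.crc32`) and hypothetical `orders` and `customers` tables. Redshift's real hashing and slice assignment are internal and differ.

```python
import zlib
from collections import defaultdict

def key_distribute(rows, key, num_slices):
    """KEY style: hash the distribution column to pick a slice, so every
    row with the same key value lands on the same slice."""
    slices = defaultdict(list)
    for row in rows:
        h = zlib.crc32(str(row[key]).encode())  # stand-in hash, not Redshift's
        slices[h % num_slices].append(row)
    return slices

def even_distribute(rows, num_slices):
    """EVEN style: round-robin rows across slices, ignoring their values."""
    slices = defaultdict(list)
    for i, row in enumerate(rows):
        slices[i % num_slices].append(row)
    return slices

orders = [{"customer_id": c, "amount": a} for c, a in [(1, 10), (2, 5), (1, 7), (3, 2)]]
customers = [{"customer_id": c} for c in (1, 2, 3)]

order_slices = key_distribute(orders, "customer_id", num_slices=4)
customer_slices = key_distribute(customers, "customer_id", num_slices=4)

# Each order's customer lives on the same slice, so the join is local.
for slice_id, slice_orders in order_slices.items():
    local_ids = {c["customer_id"] for c in customer_slices[slice_id]}
    assert all(o["customer_id"] in local_ids for o in slice_orders)
```

EVEN distribution balances rows perfectly but gives up that co-location. This is why KEY suits large tables that are frequently joined on the same column.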

Performance Comparisons Against ClickHouse

| Factors | Amazon Redshift | ClickHouse |
|---|---|---|
| Concurrency Scaling | Amazon Redshift’s concurrency scaling allows clusters to handle bursts of queries by automatically provisioning additional compute resources when needed. | ClickHouse scales horizontally by adding nodes to a cluster, distributing data shards, and replicating tables. |
| Performance Tuning | On top of columnar storage and parallel query execution, Redshift performance is tuned mainly through distribution styles and sort keys; automatic table optimization can choose them for you. | ClickHouse is a columnar database designed for lightning-fast analytical queries. Tuning centers on its sparse primary index (by default, the table’s ORDER BY key) and materialized views. |
| Concurrency Metrics | With manual workload management (WLM), Redshift allows at most 50 concurrent query slots across all user-defined queues. Automatic WLM manages concurrency dynamically, and Concurrency Scaling extends capacity to hundreds of queries. It maintains p95 latency between 1 and 3 seconds under high-concurrency scenarios. | ClickHouse supports hundreds to thousands of concurrent queries, leveraging vectorized query execution to maintain sub-50ms p95 latency for analytical workloads. |

Use Cases for Choosing Amazon Redshift

- AWS-Centric Workloads

Redshift seamlessly integrates with AWS services, making it an ideal choice for organizations with existing AWS ecosystems.

- Example Use Case: A retail company using AWS S3 for storing customer data can leverage Redshift Spectrum to directly query data in S3 without needing to load it into the warehouse. This reduces ETL (Extract, Transform, Load) overhead and speeds up data analysis.

- Operational Analytics

Redshift is particularly effective for operational analytics where high volumes of transactional data require near real-time reporting and insights.

- Example Use Case: An e-commerce platform uses Redshift to monitor customer orders and inventory levels, updating dashboards dynamically for supply chain management.

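Amazon Redshift Configuration Example (input for aws redshift create-cluster --cli-input-json)

```json
{
  "ClusterIdentifier": "redshift-cluster-1",
  "NodeType": "dc2.large",
  "ClusterType": "multi-node",
  "NumberOfNodes": 3,
  "MasterUsername": "admin",
  "MasterUserPassword": "<your-password>"
}
```

The master password must be 8 to 64 characters and include at least one uppercase letter, one lowercase letter, and one number.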

Amazon Redshift Query Example:

```sql
-- Redshift (column name quoted to avoid a clash with the TIMESTAMP type keyword)
SELECT DATE_TRUNC('month', "timestamp") AS month,
       COUNT(*) AS events
FROM events
GROUP BY month
```

Amazon Redshift Data Ingestion Example:

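```python
# Redshift data ingestion (using psycopg2)
import psycopg2

def insert_data(rows):
    """Insert (timestamp, event) tuples; fine for small batches.
    For bulk loads, Redshift recommends COPY from Amazon S3 instead."""
    conn = psycopg2.connect(
        dbname="dbname", user="user", password="password",
        host="host", port=5439,  # 5439 is Redshift's default port
    )
    try:
        with conn.cursor() as cursor:
            cursor.executemany("INSERT INTO events VALUES (%s, %s)", rows)
        conn.commit()
    finally:
        conn.close()
```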

4. Databricks

Databricks is a cloud-based analytics platform built on Apache Spark, designed to process massive datasets for batch and streaming workloads. It combines data engineering, data science, and machine learning (ML) in a unified workspace, making it an attractive choice for teams handling advanced analytics and AI projects.

Key Features of Databricks

- Apache Spark Integration: Databricks is built on Apache Spark, a distributed computing framework widely known for its ability to process large datasets in parallel.

- Delta Lake for Reliable Data Pipelines: Delta Lake is an open-source storage layer that adds ACID (Atomicity, Consistency, Isolation, Durability) transactions to data lakes.

- Collaborative Notebooks for Data Exploration: Databricks provides interactive, collaborative notebooks where teams can write code, visualize data, and share results in real time.
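Delta Lake's ACID guarantees rest on an ordered transaction log (the `_delta_log` directory of JSON commits). The log records which data files were added or removed in each table version. The toy model below shows two core ideas. Replaying the log builds a snapshot, which also enables time travel to older versions, and commits use optimistic concurrency. This is a simplification, and `ToyDeltaLog` is a hypothetical class, not the Delta Lake API. Real Delta Lake writes commits as files, checkpoints the log, and rejects a concurrent commit only when it actually conflicts.

```python
from typing import List, Set, Tuple

Action = Tuple[str, str]  # ("add" or "remove", data file path)

class ToyDeltaLog:
    """Simplified model of a Delta-style transaction log (not the Delta API)."""

    def __init__(self) -> None:
        self.commits: List[List[Action]] = []

    @property
    def latest_version(self) -> int:
        return len(self.commits) - 1  # -1 means no commits yet

    def commit(self, read_version: int, actions: List[Action]) -> int:
        """Optimistic concurrency: reject the commit if another writer has
        committed since this writer read read_version."""
        if read_version != self.latest_version:
            raise RuntimeError("concurrent commit detected; re-read and retry")
        self.commits.append(list(actions))
        return self.latest_version

    def snapshot(self, version: int) -> Set[str]:
        """Replay commits 0..version to get the live data files (time travel)."""
        files: Set[str] = set()
        for actions in self.commits[: version + 1]:
            for op, path in actions:
                if op == "add":
                    files.add(path)
                elif op == "remove":
                    files.discard(path)
        return files

log = ToyDeltaLog()
log.commit(-1, [("add", "part-0.parquet")])
log.commit(0, [("add", "part-1.parquet")])
# An update rewrites part-0 as part-2 atomically in a single commit.
log.commit(1, [("remove", "part-0.parquet"), ("add", "part-2.parquet")])
print(sorted(log.snapshot(2)))  # ['part-1.parquet', 'part-2.parquet']
print(sorted(log.snapshot(0)))  # ['part-0.parquet']
```

A reader either sees all of a commit's actions or none of them, which is what makes the remove-plus-add update atomic.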

Performance Comparisons Against ClickHouse

| Factors | Databricks | ClickHouse |
|---|---|---|
| Large-Scale ETL Jobs | Databricks is built on Apache Spark, a distributed computing engine designed for scalability and parallel processing. This makes it highly effective for running large-scale ETL workflows. | ClickHouse is optimized for OLAP (Online Analytical Processing) workloads, which focus primarily on read-heavy analytics rather than data transformation. |
| Ad-Hoc SQL Queries | Databricks SQL (formerly SQL Analytics) lets users query data using ANSI SQL. While this makes it approachable for SQL developers, its query performance can sometimes lag behind ClickHouse, especially for pure analytical workloads. | ClickHouse was specifically designed for OLAP-style queries. Its columnar storage format and vectorized execution engine make it exceptionally fast for aggregations, filtering, and joins where the right-hand table fits in memory. |
| Concurrency Metrics | Databricks supports hundreds of concurrent queries through distributed Spark clusters and excels in big data analytics and machine learning workloads. | ClickHouse supports hundreds to thousands of concurrent queries, leveraging vectorized query execution to maintain sub-50ms p95 latency for analytical workloads. |

Use Cases for Choosing Databricks

- Hybrid Machine Learning: Databricks excels at combining machine learning pipelines with analytical processing in a single platform.

Example Use Case: A financial institution uses Databricks to detect fraud in real time. It ingests transactional data, performs feature engineering, and trains machine learning models directly on the platform. The models continuously monitor transactions and flag suspicious patterns for investigation.

- Data Lakehouse Architecture: Databricks supports a lakehouse architecture, a hybrid approach combining the scalability of data lakes with the structure and performance of data warehouses.

Example Use Case: A media streaming service implements Databricks as a central lakehouse platform to store and analyze large volumes of user activity logs, content metadata, and recommendation models. With Delta Lake, they can update data incrementally while preserving historical records for compliance and analysis.

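Databricks Cluster Configuration Example (Clusters API request body):

```json
{
  "cluster_name": "my-cluster",
  "spark_version": "13.3.x-scala2.12",
  "node_type_id": "i3.xlarge",
  "num_workers": 4
}
```

spark_version must name a currently supported Databricks Runtime (13.3.x-scala2.12 corresponds to 13.3 LTS), and node_type_id must be available in your cloud; i3.xlarge is an AWS instance type.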

Databricks Query Example (SQL on Delta):

```sql
-- Databricks (Delta SQL)
SELECT DATE_TRUNC('month', timestamp) AS month,
       COUNT(*) AS events
FROM events
GROUP BY month
```

Databricks Data Ingestion Example:

```python
# Databricks data ingestion (using PySpark)
from pyspark.sql import SparkSession

def insert_data(data):
    # In a Databricks notebook, getOrCreate() returns the existing `spark` session
    spark = SparkSession.builder.appName("databricks_insert").getOrCreate()
    df = spark.createDataFrame(data, ["timestamp", "event"])
    df.write.format("delta").mode("append").save("/mnt/delta/events")
```

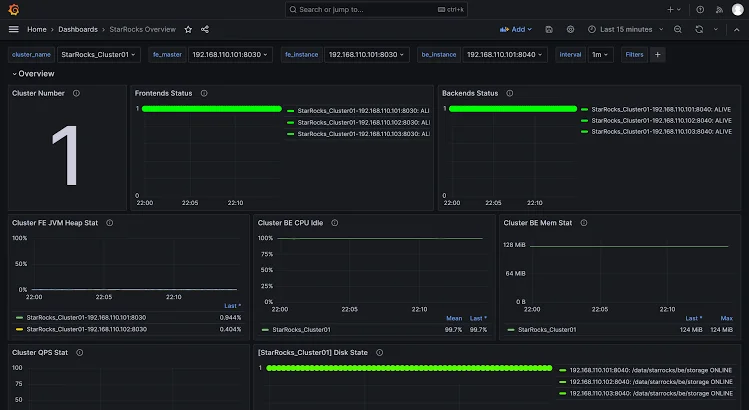

5. StarRocks

StarRocks is an emerging analytical database designed to deliver high-performance, real-time analytics. Built with a vectorized execution engine, it shares similarities with ClickHouse but offers distinct advantages in areas like query performance, real-time updates, and handling complex joins.

Key Features of StarRocks

- Vectorized Query Engine: StarRocks utilizes a vectorized execution engine to process data in batches instead of row by row. This significantly improves performance, particularly for analytical queries involving aggregates, joins, and filters. The vectorized approach minimizes CPU overhead, enabling faster data processing.

- Real-Time Data Updates: Unlike many analytical databases that struggle with real-time updates, StarRocks supports streaming data ingestion and incremental updates. This makes it ideal for use cases where data needs to be analyzed as soon as it arrives—such as dashboards, monitoring systems, and recommendation engines.

- High Concurrency Support: StarRocks is built to handle thousands of concurrent queries without degrading performance. It achieves this through multi-threaded query execution and efficient resource management, making it a great fit for scenarios involving multiple users accessing the system simultaneously.
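Incremental updates change what a query sees: a new row for an existing key replaces the old one instead of being appended next to it. StarRocks exposes this behavior through its Primary Key table type. The sketch below models only those upsert-and-delete semantics in plain Python, using a hypothetical positions table keyed by ticker symbol. It says nothing about how StarRocks stores or indexes the data internally.

```python
from typing import Dict, Iterable, Tuple

Change = Tuple[str, str, dict]  # (op, primary_key, row)

def apply_changes(table: Dict[str, dict], changes: Iterable[Change]) -> Dict[str, dict]:
    """Return a new table with changes applied in arrival order.

    'upsert' replaces any existing row with the same primary key
    (or inserts it); 'delete' removes the key if present.
    """
    result = dict(table)
    for op, key, row in changes:
        if op == "upsert":
            result[key] = row
        elif op == "delete":
            result.pop(key, None)
        else:
            raise ValueError(f"unknown op: {op!r}")
    return result

positions = {"AAPL": {"qty": 100}, "MSFT": {"qty": 50}}
stream = [
    ("upsert", "AAPL", {"qty": 120}),  # replaces the old AAPL row
    ("upsert", "NVDA", {"qty": 10}),   # new key, inserted
    ("delete", "MSFT", {}),            # position closed
]
print(apply_changes(positions, stream))
# {'AAPL': {'qty': 120}, 'NVDA': {'qty': 10}}
```

In an append-only store, the same stream would leave two AAPL rows and a stale MSFT row, and every query would have to deduplicate at read time.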

How StarRocks Addresses ClickHouse Limitations

- Efficient Joins and Complex Queries: StarRocks uses a cost-based optimizer and several distributed join strategies (broadcast, shuffle, bucket shuffle, and colocate joins) to reduce join overhead. This makes it more suitable for workloads involving complex queries and relational datasets.

- Real-Time Updates with Low Latency: Unlike ClickHouse, which often relies on batch processing, StarRocks supports real-time data ingestion. This enables immediate availability of data for queries, making it a better choice for streaming analytics or event-driven architectures.

Use Cases for Choosing StarRocks

- High Concurrency, Real-Time Dashboards: StarRocks is optimized for high concurrency, making it an ideal choice for real-time dashboards that demand instantaneous insights.

- Example Use Case: A financial trading platform needs to provide its users with real-time market data and analytics. With StarRocks, the platform can handle thousands of concurrent queries, ensuring that users receive up-to-the-second information to inform their trading decisions.

Other Noteworthy Alternatives

| Other Alternatives | Advantages | Disadvantages |
|---|---|---|
| PostgreSQL / TimescaleDB | PostgreSQL is widely known for its familiar SQL syntax and strong ACID compliance, making it a reliable choice for transactional and analytical workloads. The TimescaleDB extension adds time-series capabilities, simplifying the management of time-stamped data. | PostgreSQL is powerful, but its performance can decline with very large datasets (billions of rows) unless carefully optimized or horizontally sharded, which can add operational complexity. |
| SingleStore | SingleStore offers a hybrid storage model that combines an in-memory rowstore for fast transactions with a disk-based columnstore for analytics. | The cost of managed services can be high compared to self-hosted solutions, especially for businesses with unpredictable workloads or limited budgets. |
| Apache Druid | Apache Druid excels at real-time data ingestion and indexing, enabling sub-second query latencies for interactive dashboards and streaming analytics. | Its architecture requires careful configuration and tuning, making it complex to operate and less suitable for teams without prior experience managing distributed systems. |
| Apache Cassandra | Apache Cassandra is designed for high availability and linear scalability, which makes it ideal for write-heavy applications that require fault tolerance and distributed storage across multiple regions. | Cassandra is optimized for OLTP (Online Transaction Processing) workloads and lacks advanced OLAP (Online Analytical Processing) features, which limits its ability to perform complex analytical queries efficiently. |

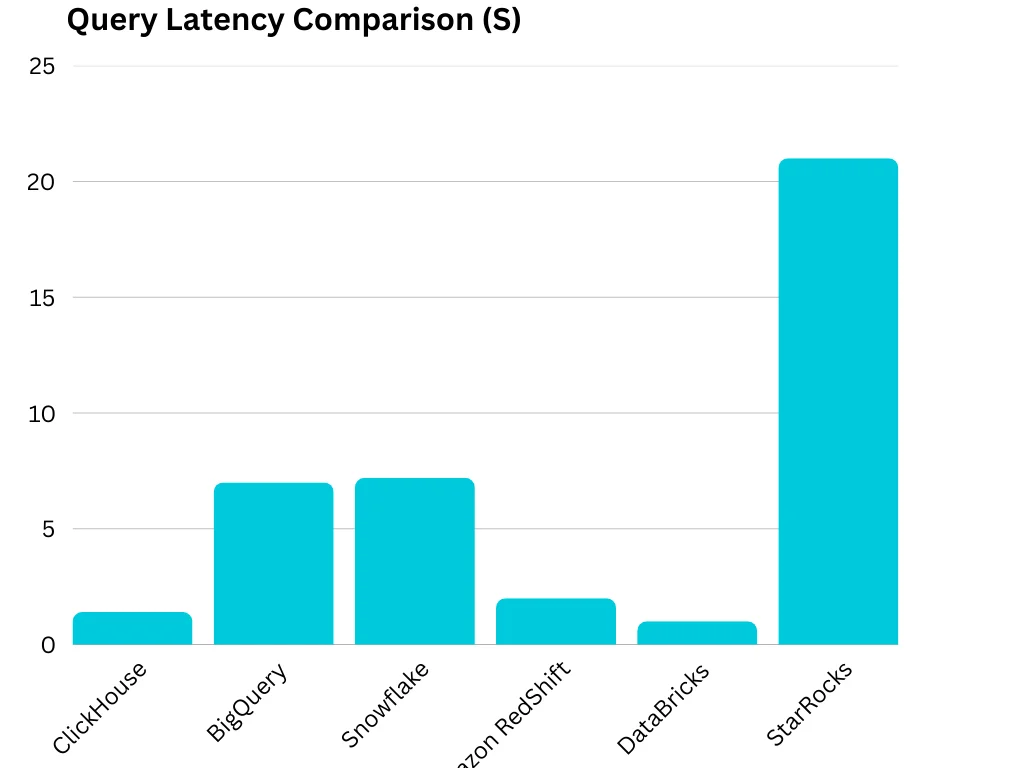

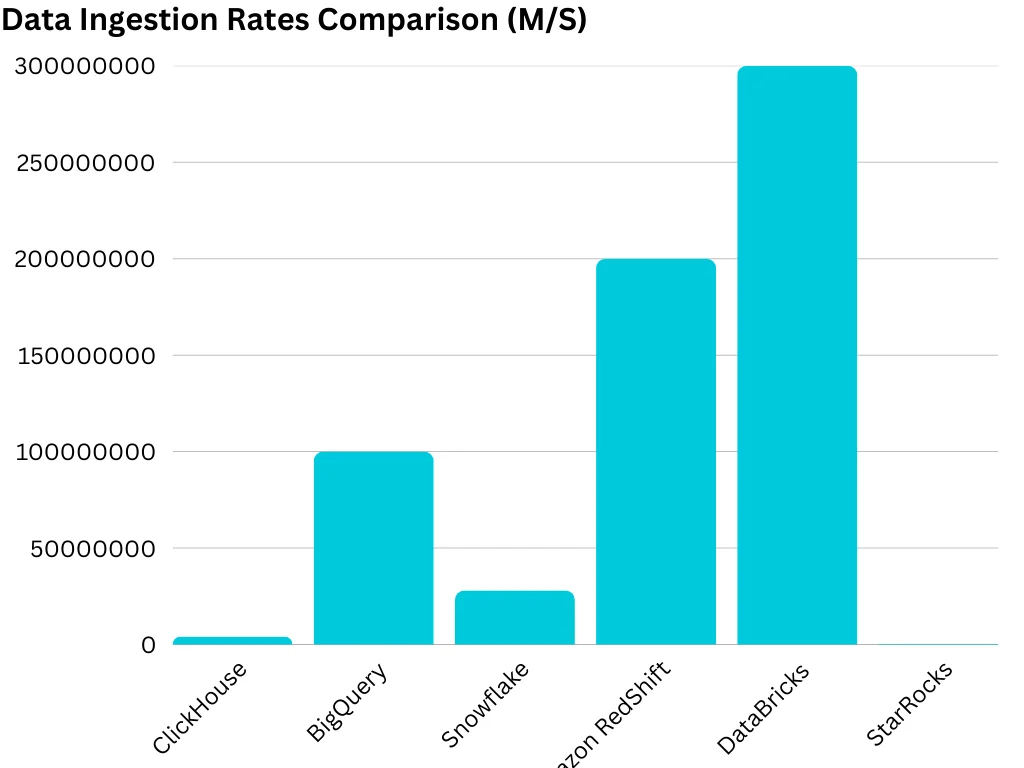

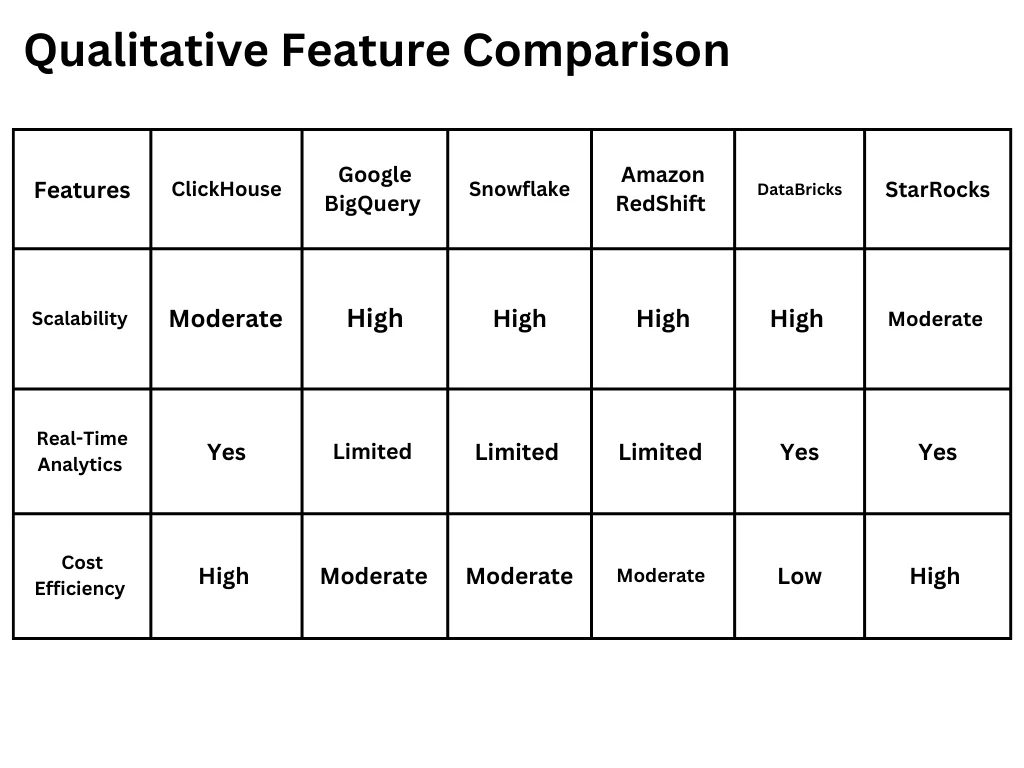

Performance Comparisons of All 5 Alternatives against ClickHouse

| Features | ClickHouse | Google BigQuery | Snowflake | Amazon Redshift | Databricks | StarRocks |
|---|---|---|---|---|---|---|
| Query Latency (s) | 1.4 | 7 | 7200 (120 minutes) | 2 | 1 | 21 |
| Data Ingestion Rate | 4 million rows/s | 100 billion rows / 30 s | 28 billion rows/s | 200 million rows/s | 300 million rows/s | 356,000 rows / 52 s |
| Scalability | Moderate | High | High | High | High | Moderate |
| Real-Time Analytics | Yes | Limited | Limited | Limited | Yes | Yes |
| Cost Efficiency | High | Moderate | Moderate | Moderate | Low | High |

Query Latency Comparison

This graph measures how quickly each alternative processes queries, which reflects response times for analytical workloads and real-time data analysis.

Data Ingestion Rates Comparison

This graph shows the rate at which each alternative can process and load data from various sources into its storage or analytics platform.

Qualitative Feature Comparison

This table shows a high-level evaluation of database platforms based on features suitable for specific workloads.

Factors to Consider When Choosing a ClickHouse Alternative

- Scalability and Performance Requirements: The ability of a database to handle increasing workloads without performance degradation is critical.

- Data Volume and Query Complexity: Your choice should align with how data is structured and queried. Multi-table joins, star-schema queries, and advanced aggregations often perform better in databases like Snowflake or StarRocks, which handle complex joins efficiently.

- Integration with Existing Infrastructure: Compatibility with your current tools and platforms can simplify setup and operation. If your infrastructure is cloud-native, services like BigQuery (Google Cloud) or Redshift (AWS) offer seamless integration. On-premises solutions may be better suited for compliance-sensitive environments.

- Cost Considerations and Pricing Models: Budget constraints often drive database selection, so it’s important to assess both upfront and ongoing costs. Pay-as-you-go services like BigQuery are cost-effective for variable workloads, while provisioned clusters (e.g., Redshift) may suit predictable usage patterns.
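The choice between pay-as-you-go and provisioned pricing reduces to a break-even calculation. In the sketch below every price is a hypothetical input, since real rates vary by vendor, region, and contract. The usage-based bill scales with TiB scanned. The provisioned bill is an hourly rate times roughly 730 hours per month. The break-even volume is where the two bills meet.

```python
HOURS_PER_MONTH = 730.0  # 8,760 hours a year / 12

def on_demand_monthly(tib_scanned: float, price_per_tib: float) -> float:
    """Usage-based bill: pay only for data scanned."""
    return tib_scanned * price_per_tib

def provisioned_monthly(hourly_rate: float, hours: float = HOURS_PER_MONTH) -> float:
    """Provisioned bill: pay for capacity whether or not it is used."""
    return hourly_rate * hours

def break_even_tib(price_per_tib: float, hourly_rate: float,
                   hours: float = HOURS_PER_MONTH) -> float:
    """Monthly scan volume above which provisioned capacity is cheaper."""
    return provisioned_monthly(hourly_rate, hours) / price_per_tib

print(break_even_tib(price_per_tib=5.0, hourly_rate=2.0))  # 292.0
```

Suppose on-demand costs a hypothetical $5 per TiB scanned and a provisioned cluster costs $2 per hour (about $1,460 a month). The break-even point is then 292 TiB scanned per month, and below that volume on-demand is cheaper. The model deliberately ignores storage, auto-suspend, and whether the provisioned capacity can actually absorb the workload.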

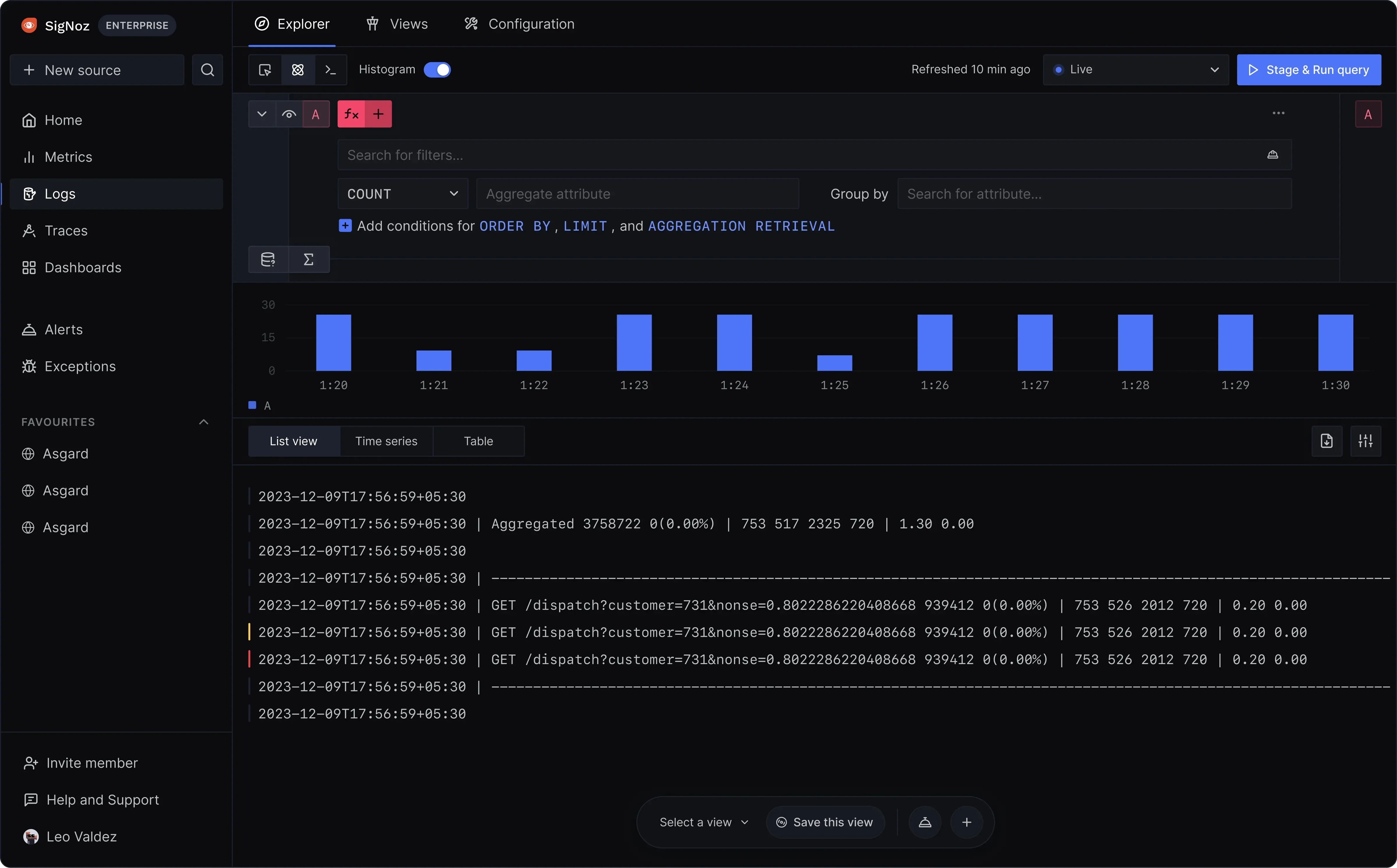

Implementing High-Performance Observability with SigNoz

SigNoz is an open-source observability platform for monitoring, debugging, and optimizing applications and infrastructure, including analytics databases. Built on OpenTelemetry, it offers distributed tracing, metrics, and log management in a single interface, which enables developers to analyze system performance effectively.

How SigNoz Complements High-Performance Analytics Databases

Modern analytics databases like ClickHouse, Snowflake, BigQuery, and Redshift are designed for speed and scalability, but they often face challenges such as query bottlenecks, unpredictable workloads, and scaling inefficiencies. SigNoz addresses these challenges by providing end-to-end observability, ensuring developers and database administrators (DBAs) can monitor, debug, and optimize database performance effectively.

- Real-Time Query Performance Monitoring: SigNoz helps track and analyze query performance in real time, allowing teams to pinpoint slow queries, find candidates for query-plan optimization, and track query trends over time.

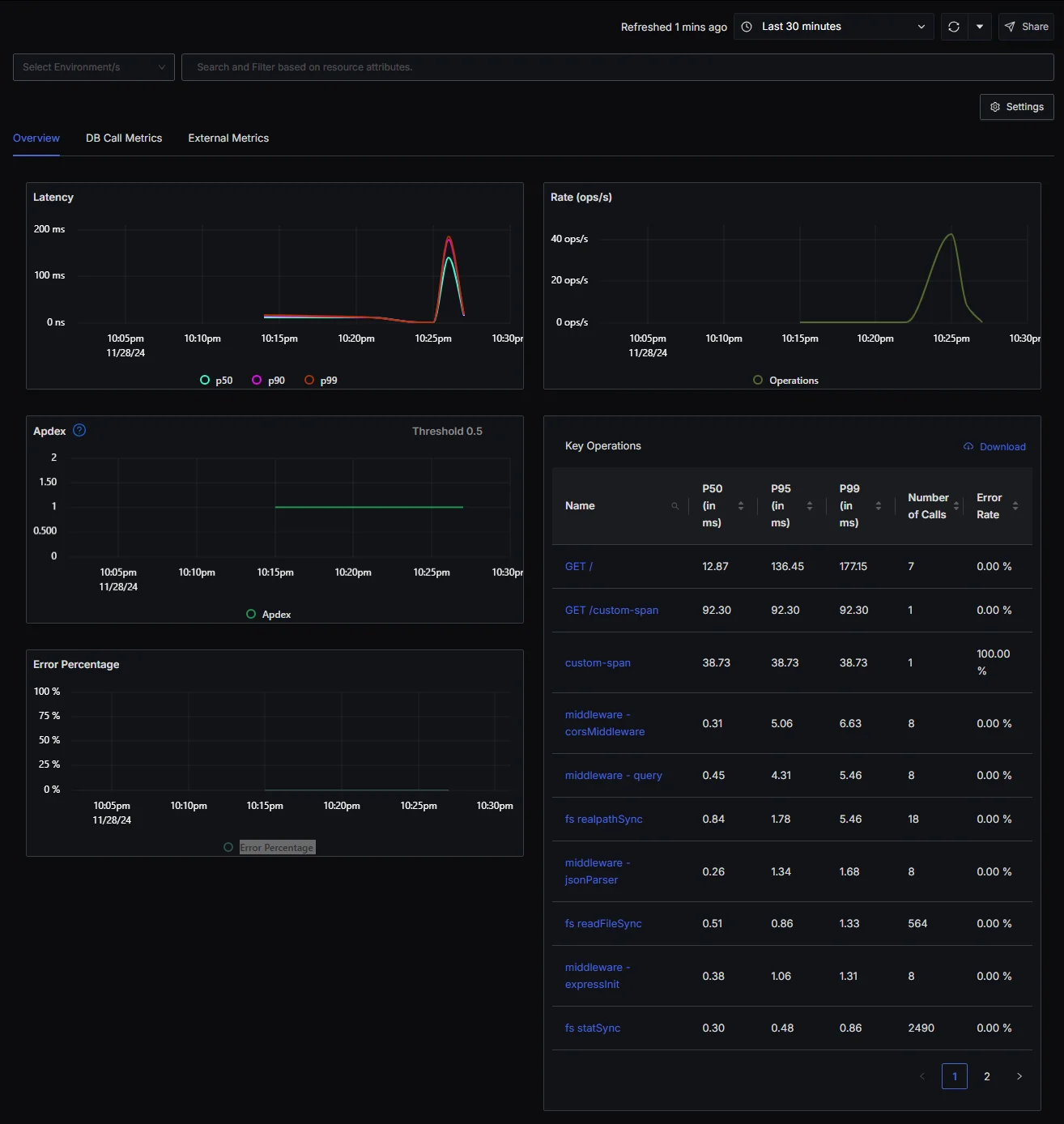

Real-time querying capabilities

- Microservices Observability: For distributed analytics platforms that rely on microservices, SigNoz offers distributed tracing, bottleneck identification, and latency tracking.

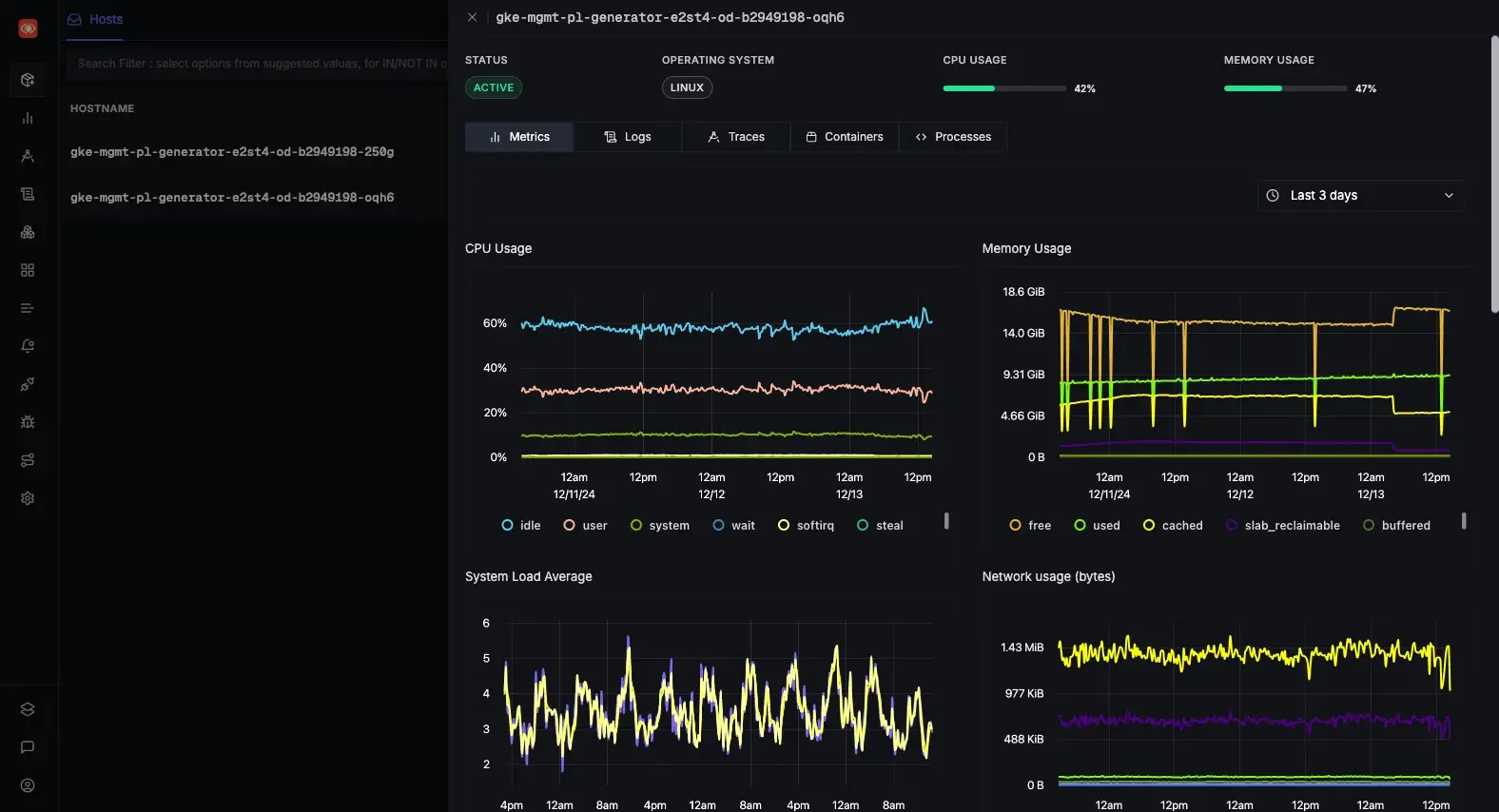

- Infrastructure Health Monitoring: SigNoz also monitors the infrastructure hosting analytics databases to prevent hardware-related performance issues.
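Latency figures such as the p95 values in the comparison tables earlier are percentiles over many query timings. The standard-library sketch below shows the calculation an observability dashboard performs when it flags slow queries. It computes a nearest-rank percentile over recorded latencies and lists the queries at or above it. The query IDs and timings are hypothetical, and this is not SigNoz's implementation.

```python
import math
from typing import List, Tuple

def percentile(values: List[float], p: float) -> float:
    """Nearest-rank percentile for 0 < p <= 100."""
    if not values:
        raise ValueError("need at least one sample")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]

def flag_slow_queries(samples: List[Tuple[str, float]], p: float = 95.0):
    """Return (p-th percentile latency, IDs of queries at or above it)."""
    threshold = percentile([ms for _, ms in samples], p)
    slow = [query_id for query_id, ms in samples if ms >= threshold]
    return threshold, slow

# Hypothetical latencies in ms for 20 queries: q1 = 1 ms ... q20 = 20 ms.
samples = [(f"q{i}", float(i)) for i in range(1, 21)]
print(flag_slow_queries(samples))  # (19.0, ['q19', 'q20'])
```

A p95 target is useful because the mean can hide tail latency. A few very slow queries barely move the average but show up immediately in the percentile.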

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

Key Takeaways

- No One-Size-Fits-All: ClickHouse delivers exceptional performance for analytical workloads, but it may not fit every use case. Alternatives provide advantages like simpler cluster management, better query optimization, and flexible pricing models.

- Performance and Scalability Matter: Evaluate your workload’s size, query complexity, and scalability needs when choosing a database.

- Community and Ecosystem Support: Popular solutions often come with extensive documentation, libraries, and third-party integrations.

- Observability is Critical: Monitoring performance is key to maximizing database efficiency. Tools like SigNoz enable real-time insights, helping teams track query latencies, detect performance bottlenecks, and optimize resource utilization seamlessly.

FAQs

What Makes ClickHouse Popular for Analytics?

ClickHouse is widely known for its columnar storage format and vectorized query execution, which enable ultra-fast analytical processing. It also supports high-speed data ingestion and materialized views to simplify real-time aggregations.

How Do ClickHouse Alternatives Compare in Terms of Query Performance?

Alternatives like Snowflake and BigQuery offer excellent scalability and ease of use, while StarRocks and SingleStore provide better optimization for joins and real-time ingestion. Performance depends on your data size, query complexity, and workload patterns.

Can I Migrate from ClickHouse to These Alternatives Easily?

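Generally, yes. All of these alternatives support SQL, and many offer migration tools or documented migration paths. However, SQL dialects differ: ClickHouse-specific functions such as toStartOfMonth and its table engines have no direct equivalents elsewhere. Expect to adjust schema design, queries, and performance tuning for the new database.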

How Does Pricing Compare Among ClickHouse and Its Alternatives?

ClickHouse is open-source and cost-effective for self-hosted setups but requires operational overhead. Cloud solutions like BigQuery and Snowflake use pay-as-you-go pricing models, which simplify management but can become expensive with high query volumes.

Are There Any Observability Best Practices for High-Performance Databases?

Yes, you need to monitor query performance, latency, and resource usage (CPU, memory, disk I/O). Tools like SigNoz help track bottlenecks with distributed tracing, metrics dashboards, and alerts for proactive database monitoring.