Trace-based alerts

A Trace-based alert in SigNoz allows you to define conditions based on trace data, triggering alerts when these conditions are met. Here's a breakdown of the various sections and options available when configuring a Trace-based alert:

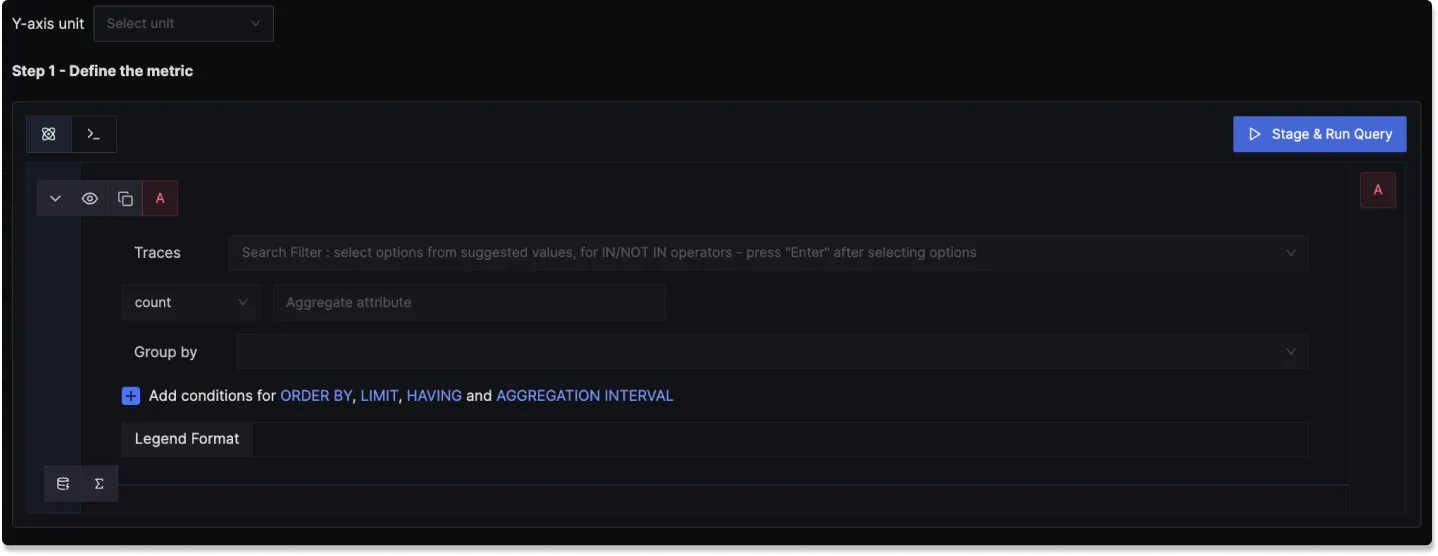

Step 1: Define the Trace Metric

In this step, you use the Traces Query Builder to define the metric that the alert evaluates. The fields available in the Traces Query Builder include:

Traces: A field to filter the trace data to monitor.

Aggregate Attribute: Lets you choose how the trace data is aggregated, for example with a function like "Count".

Group by: Lets you group trace data by different span/trace attributes, like "serviceName", "Status" or other custom attributes.

Legend Format: An optional field to define the format for the legend in the visual representation of the alert.

Having: Applies conditions that filter the results further, based on the aggregate value.

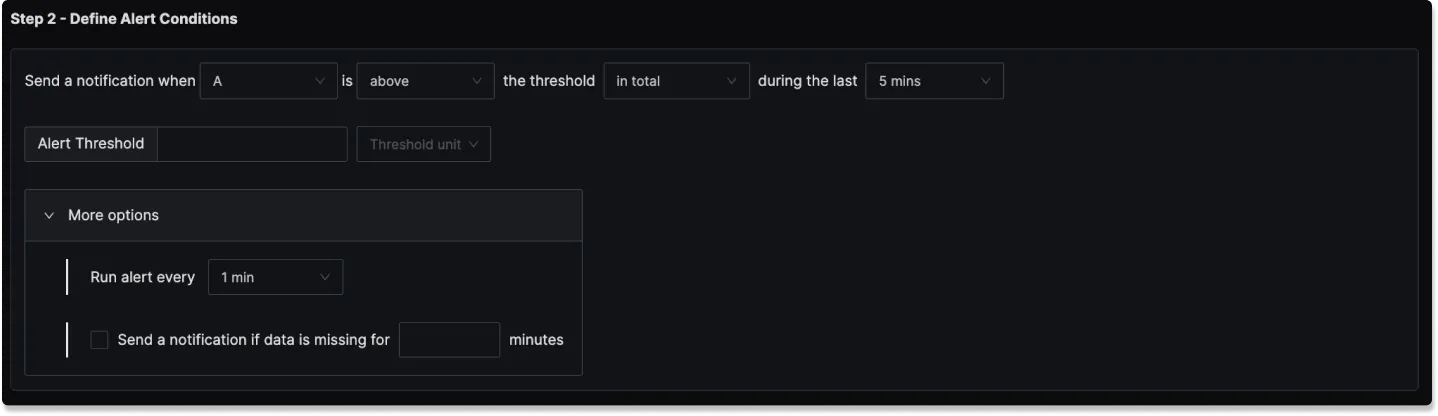
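To make the roles of these fields concrete, here is a minimal Python sketch of how a filter, a Count aggregation, a Group by, and a Having condition combine. It is an illustration of the concepts only, not how SigNoz executes queries. The span records, the `status` field and its values, and the `run_query` helper are all hypothetical.

```python
from collections import defaultdict

# Hypothetical span records; only "serviceName" comes from the text above.
spans = [
    {"serviceName": "frontend", "status": "error"},
    {"serviceName": "frontend", "status": "error"},
    {"serviceName": "frontend", "status": "ok"},
    {"serviceName": "checkout", "status": "error"},
    {"serviceName": "cart", "status": "ok"},
]


def run_query(spans, where, group_by, having):
    """Filter spans, count them per group, then keep groups that pass `having`."""
    counts = defaultdict(int)
    for span in spans:
        if where(span):                    # Traces filter
            counts[span[group_by]] += 1    # Count, split by the Group by key
    # Having: filter on the aggregate value, not on individual spans
    return {key: n for key, n in counts.items() if having(n)}


result = run_query(
    spans,
    where=lambda s: s["status"] == "error",
    group_by="serviceName",
    having=lambda n: n >= 2,
)
print(result)  # {'frontend': 2}
```

Note that Having runs after aggregation: "checkout" has one error span, so it passes the filter but is dropped because its count is below 2.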

Step 2: Define Alert Conditions

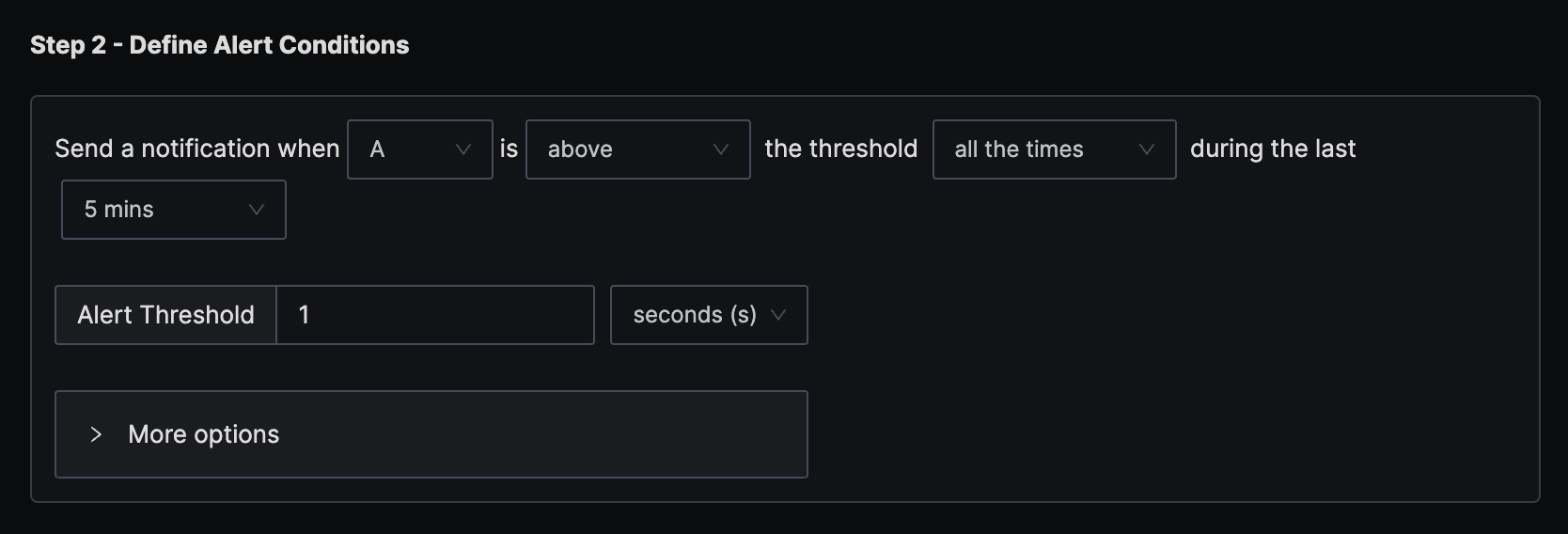

In this step, you set specific conditions for triggering the alert and determine the frequency of checking these conditions:

Send a notification when [A] is [above/below] the threshold in total during the last [X] mins: A template for the alert condition. It sets which query result ([A]) is compared with the threshold, whether the alert fires above or below it, and the time window ([X] mins) over which the condition is evaluated.

Alert Threshold: A field to specify the threshold value for the alert condition.

More Options:

Run alert every [X mins]: This option determines the frequency at which the alert condition is checked and notifications are sent.

Send a notification if data is missing for [X] mins: A field to specify if a notification should be sent when data is missing for a certain period.

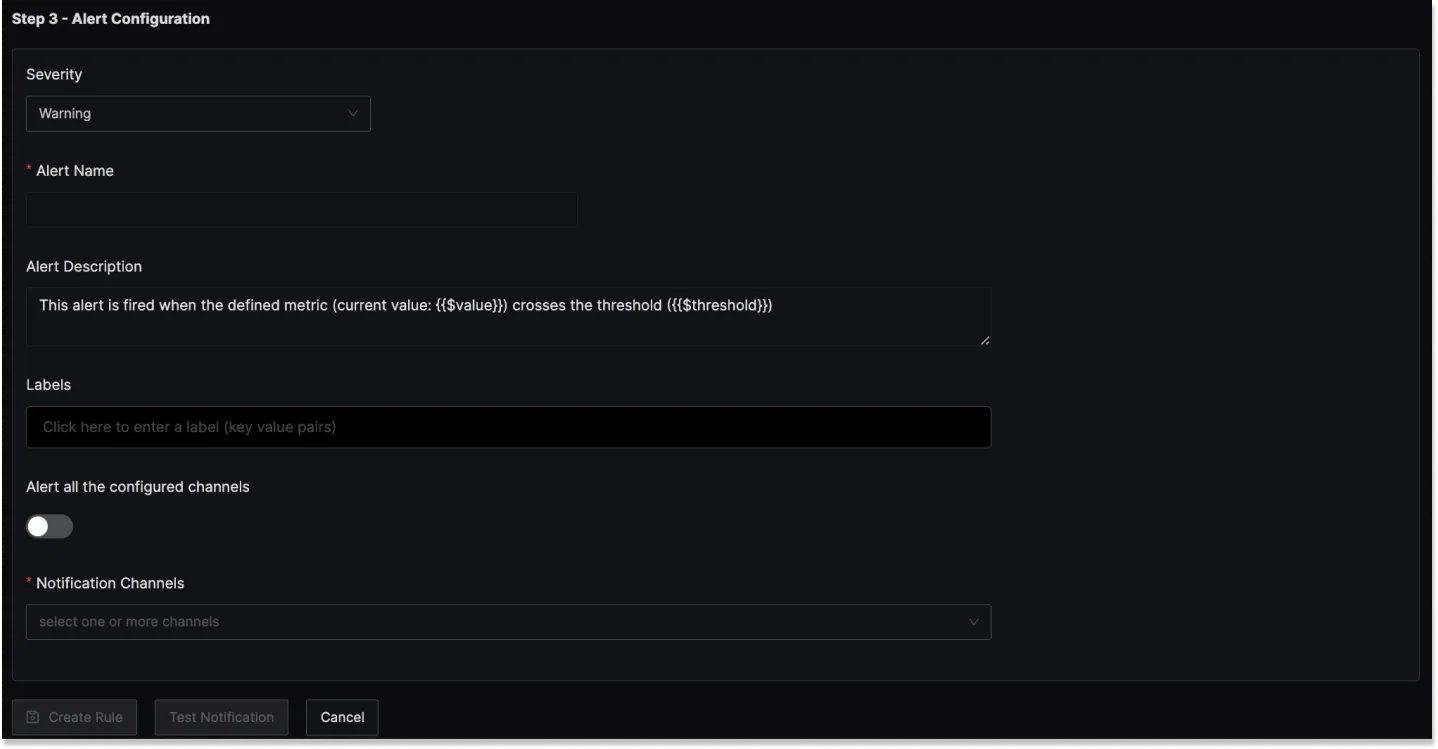
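The condition template can be read as a small decision function over the values that query A produced during the evaluation window. The sketch below assumes that "in total" compares the sum of the values with the threshold and that "all the time" requires every value to satisfy the comparison. These semantics and the `should_fire` helper are assumptions for illustration, not the SigNoz implementation.

```python
def should_fire(values, threshold, op="above", match="in total"):
    """Decide whether an alert fires for one evaluation window.

    values: per-interval results of query A inside the window.
    """
    if op == "above":
        compare = lambda v: v > threshold
    elif op == "below":
        compare = lambda v: v < threshold
    else:
        raise ValueError(f"unsupported operator: {op}")

    if not values:
        # No data in the window: left to the separate missing-data option.
        return False
    if match == "in total":
        return compare(sum(values))
    if match == "all the time":
        return all(compare(v) for v in values)
    raise ValueError(f"unsupported match type: {match}")


# Hypothetical values for each of the last 5 minutes.
window = [30, 45, 50, 20, 40]
print(should_fire(window, threshold=100, match="in total"))     # True: the sum is 185
print(should_fire(window, threshold=25, match="all the time"))  # False: 20 is not above 25
```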

Step 3: Alert Configuration

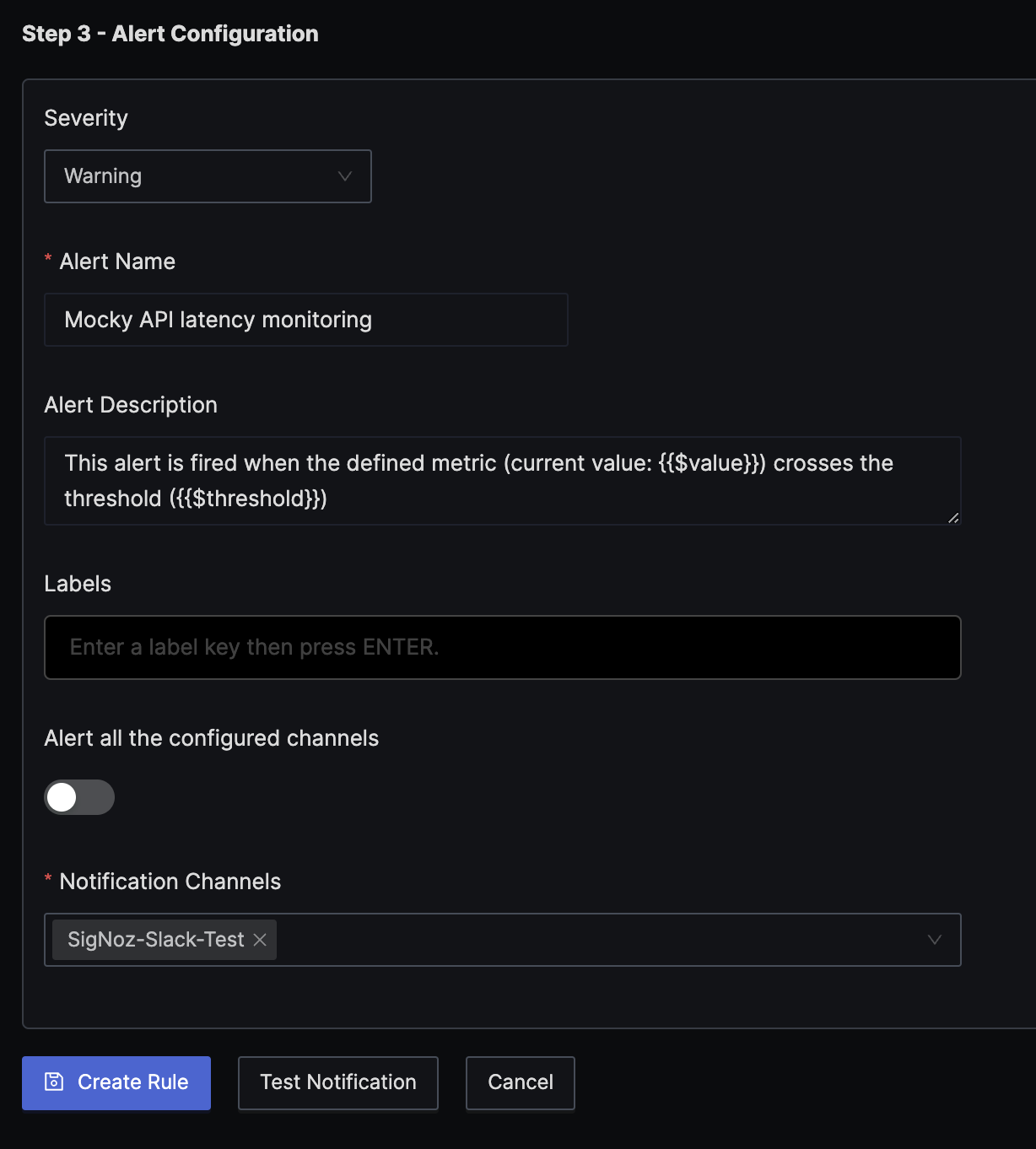

In this step, you set the alert's metadata, including severity, name, and description:

Severity

Set the severity level for the alert (e.g., "Warning" or "Critical").

Alert Name

A field to name the alert for easy identification.

Alert Description

Add a detailed description for the alert, explaining its purpose and trigger conditions.

You can incorporate result attributes in the alert descriptions to make the alerts more informative:

Syntax: Use $<attribute-name> to insert an attribute value. You can reference any attribute used in the Group by clause.

Example: If your query groups by the attribute service.name, use $service.name in the alert description to insert its value.
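The substitution described above can be pictured with a small Python sketch. This shows the idea only and is not the templating code SigNoz uses. The `render_description` helper, the placeholder pattern, and the choice to leave unknown placeholders untouched are all assumptions.

```python
import re

# A placeholder is "$" followed by a name that may contain dots, e.g. $service.name.
# A trailing dot (end of a sentence) is not treated as part of the name.
PLACEHOLDER = re.compile(r"\$([A-Za-z_]\w*(?:\.\w+)*)")


def render_description(template, labels):
    """Replace $name placeholders with the matching group-by label values."""
    def substitute(match):
        name = match.group(1)
        # Leave unknown placeholders as written rather than guessing a value.
        return str(labels.get(name, match.group(0)))

    return PLACEHOLDER.sub(substitute, template)


text = render_description(
    "High latency on $service.name (region: $region).",
    {"service.name": "checkout"},
)
print(text)  # High latency on checkout (region: $region).
```

Because `region` is not among the group-by labels in this example, its placeholder is left in place, which makes a missing attribute easy to spot in the rendered message.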

Slack alert format

Advanced Slack formatting is supported when you use Slack as a notification channel.

Test Notification

A button to test the alert to ensure that it works as expected.

Examples

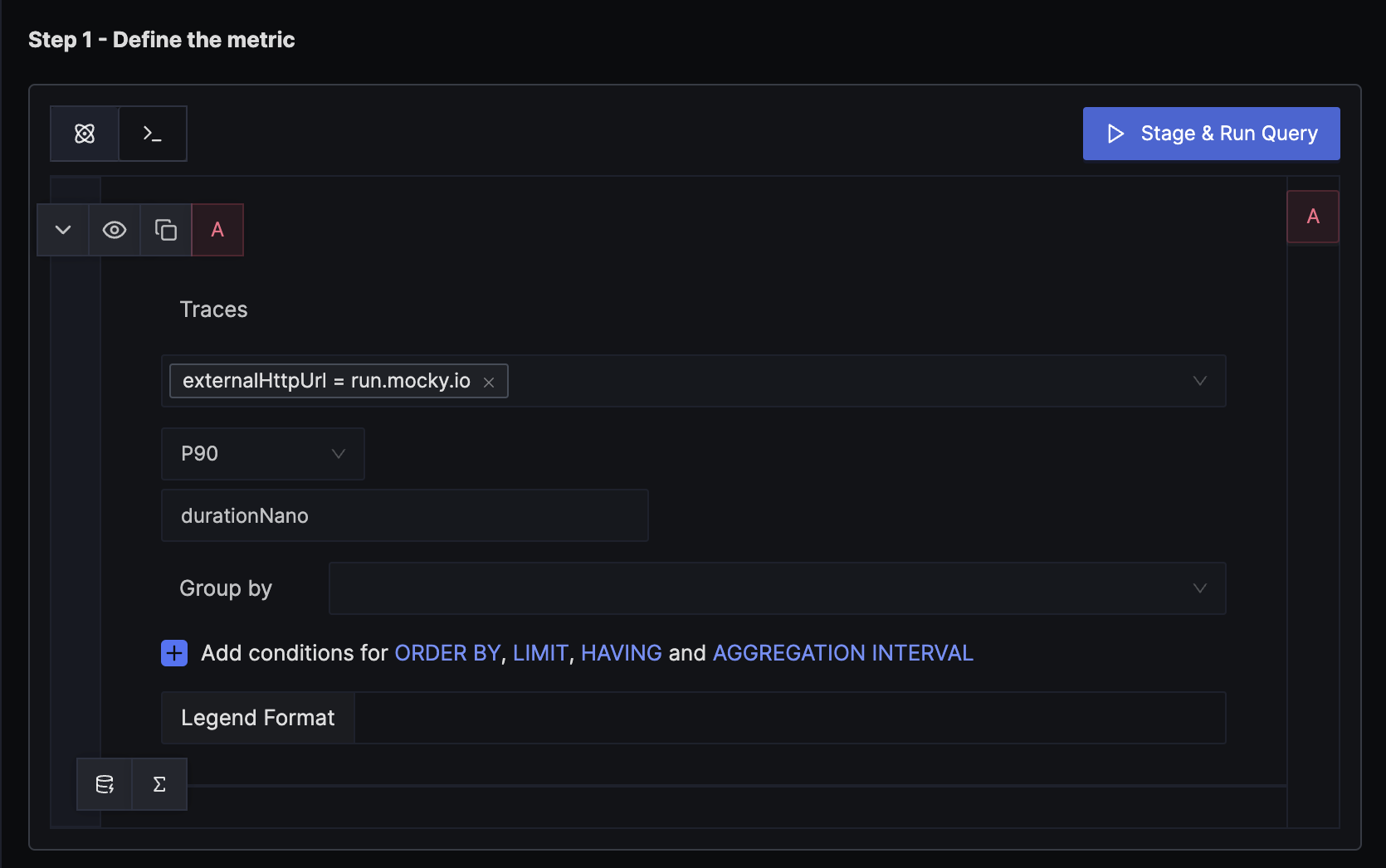

1. Alert when external API latency (P90) is over 1 second for last 5 mins


Step 1: Write a Query Builder query to define the alert metric

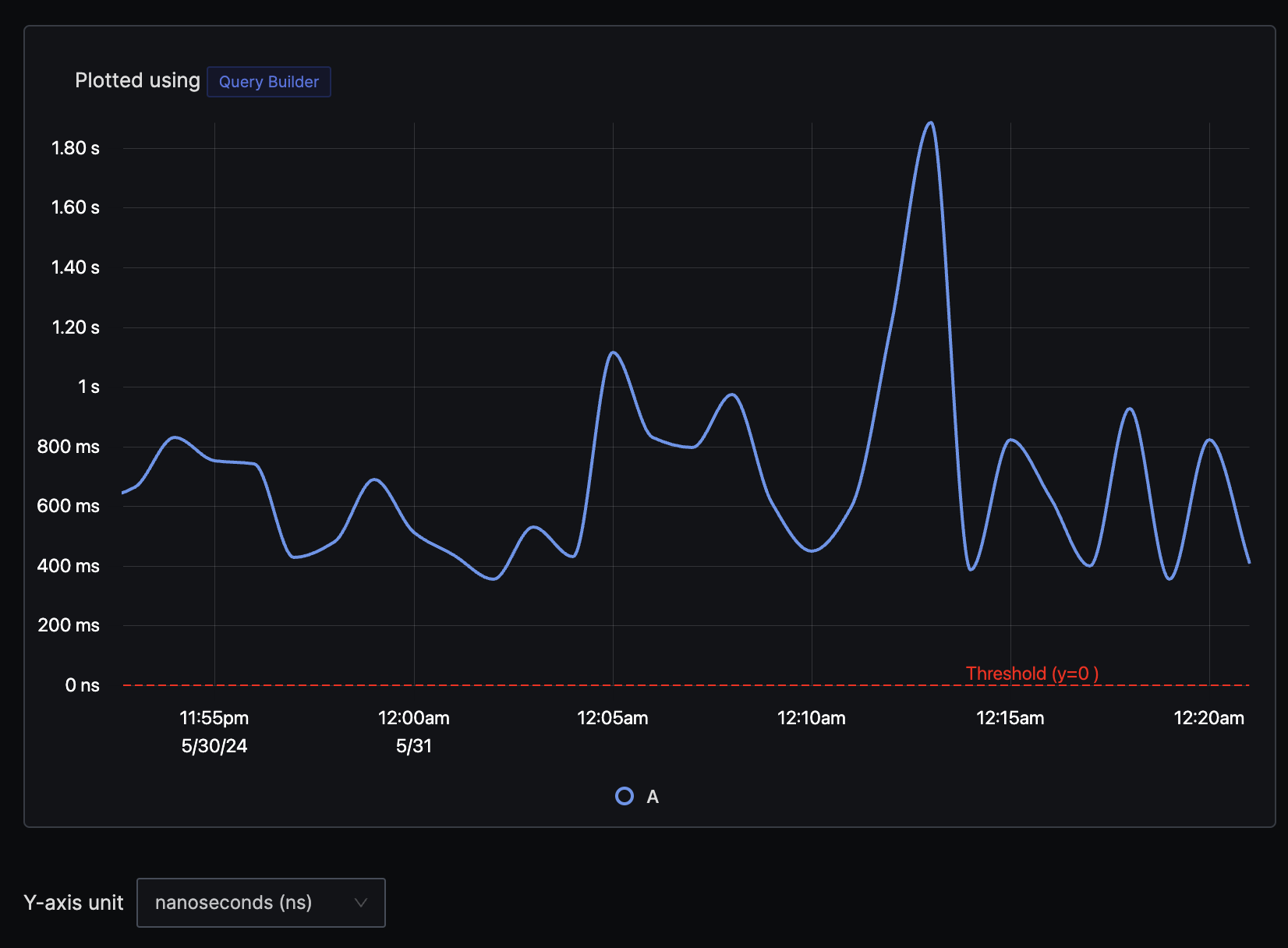

Use the externalHttpUrl attribute to filter for a specific external API endpoint, then set the aggregate attribute to durationNano with the P90 aggregation operation. This plots a chart of the 90th percentile latency. You can also choose Avg or any other aggregate operation, depending on your needs.

Remember to set the y-axis unit to nanoseconds, because the aggregate key is durationNano.
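Because durationNano is measured in nanoseconds, a 1-second threshold corresponds to 1,000,000,000 ns. The Python sketch below works through this with a nearest-rank P90 over hypothetical duration samples. The sample values and the `percentile_nearest_rank` helper are assumptions, and the backend may estimate percentiles with a different method.

```python
import math

NANOS_PER_SECOND = 1_000_000_000


def percentile_nearest_rank(values, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


# Hypothetical durationNano samples for one minute of calls to an external API.
durations_ns = [
    120_000_000, 250_000_000, 300_000_000, 410_000_000, 480_000_000,
    520_000_000, 700_000_000, 900_000_000, 1_200_000_000, 2_500_000_000,
]

p90_ns = percentile_nearest_rank(durations_ns, 90)
threshold_ns = 1 * NANOS_PER_SECOND  # the 1-second threshold, in nanoseconds

print(p90_ns / NANOS_PER_SECOND)  # 1.2 (seconds)
print(p90_ns > threshold_ns)      # True: this minute is above the threshold
```

Comparing the raw P90 against a threshold of `1` instead of `1_000_000_000` would make almost every minute look like a breach, which is why the unit needs to match the aggregate key.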

Step 2: Set alert conditions

The condition triggers a notification if the per-minute external API latency (P90) stays above the 1-second threshold all the time during the last five minutes.

Step 3: Set alert configuration

Finally, set the alert severity to Warning, then add a name and a notification channel.

Last updated: June 6, 2024