Overview

This guide walks you through migrating metrics from Prometheus, Mimir, or Grafana Cloud to SigNoz. You will:

- Inventory your current metric sources

- Set up the OpenTelemetry Collector

- Configure receivers for your existing exporters

- Validate metrics are flowing correctly

SigNoz supports Prometheus metrics natively, so migration typically involves pointing your existing exporters to the OpenTelemetry Collector or using the Collector to scrape your targets.

Prerequisites

Before starting, ensure you have:

- A SigNoz account (Cloud) or a running SigNoz instance (Self-Hosted)

- Access to your Prometheus/Mimir configuration (`prometheus.yml` or Grafana Agent config)

- Administrative access to deploy the OpenTelemetry Collector

Step 1: Assess Your Current Metrics

Before migrating, list what you are currently collecting.

List Your Metric Jobs

Run this PromQL query in Grafana (against your Prometheus/Mimir datasource) to see active jobs:

```promql
count({__name__=~".+"}) by (job)
```

This will give you a list of jobs (e.g., node_exporter, kubernetes-pods, myapp) and the number of series for each.

Categorize Your Sources

Group your metrics by how they are collected:

| Source Type | Description | Migration Path |
| --- | --- | --- |
| Prometheus Exporters | node_exporter, postgres_exporter, etc. | Use Prometheus Receiver |
| Application Metrics | /metrics endpoint on your app | Use Prometheus Receiver |
| Grafana Agent | Using Grafana Agent for collection | Migrate to OTel Collector |

Step 2: Set Up the OpenTelemetry Collector

Most migration paths require the OpenTelemetry Collector.

- Install the OpenTelemetry Collector in your environment.

- Configure the OTLP exporter to send metrics to SigNoz (SigNoz Cloud or your self-hosted instance).
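A minimal `otel-collector-config.yaml` for this step might look like the sketch below. It assumes SigNoz Cloud and uses the ingestion endpoint and `signoz-ingestion-key` header described under Troubleshooting. `<region>` and `<your-ingestion-key>` are placeholders for your own values. For a self-hosted instance, point `endpoint` at your own SigNoz OTLP endpoint and adjust the TLS settings to match.

```yaml
receivers:
  # Accepts OTLP data from SDKs or other Collectors
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318

processors:
  # Groups data points into batches before export
  batch: {}

exporters:
  # Sends metrics to SigNoz Cloud over OTLP/gRPC (placeholder values)
  otlp:
    endpoint: "ingest.<region>.signoz.cloud:443"
    tls:
      insecure: false
    headers:
      signoz-ingestion-key: "<your-ingestion-key>"

service:
  pipelines:
    metrics:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
```

Later steps add more receivers, such as `prometheus`, to `service.pipelines.metrics.receivers`. The exporter section stays the same.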

Step 3: Migrate Each Metric Source

Work through each source type from your inventory.

From Prometheus Exporters

If you have existing Prometheus exporters running (like node_exporter), you can configure the OpenTelemetry Collector to scrape them just like Prometheus did.

- Copy Scrape Configs: Take the `scrape_configs` section from your `prometheus.yml`.

- Add to Collector: Paste them into the `prometheus` receiver in `otel-collector-config.yaml`.

```yaml
receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: 'node-exporter'
          scrape_interval: 60s
          static_configs:
            - targets: ['localhost:9100']
```

- Enable in Pipeline:

```yaml
service:
  pipelines:
    metrics:
      # Ensure 'prometheus' is in this list
      receivers: [otlp, prometheus]
      processors: [batch]
      exporters: [otlp]
```

For more details on configuring the Prometheus receiver, see Prometheus Metrics in SigNoz.
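Scrape configs that use service discovery and relabeling generally copy over as-is, with one catch. The Collector treats `$` as the start of an environment-variable reference, so every literal `$` in a Prometheus config must be escaped as `$$`. This includes `$1` in a relabel `replacement`. The sketch below assumes your `prometheus.yml` has a typical annotation-based `kubernetes-pods` job. It also assumes the Collector runs inside the cluster with RBAC permission to list pods. Adjust the annotations and regexes to match your existing job.

```yaml
receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: 'kubernetes-pods'
          kubernetes_sd_configs:
            - role: pod
          relabel_configs:
            # Keep only pods annotated with prometheus.io/scrape: "true"
            - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
              action: keep
              regex: 'true'
            # Rewrite the target address to use the port from the prometheus.io/port annotation.
            # In prometheus.yml this replacement is '$1:$2'; the Collector needs '$$' per literal '$'.
            - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
              action: replace
              regex: '([^:]+)(?::\d+)?;(\d+)'
              replacement: '$$1:$$2'
              target_label: __address__
```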

Replace Prometheus Exporters with OpenTelemetry Receivers

While the Prometheus Receiver lets you keep using your existing Prometheus exporters, we recommend migrating to native OpenTelemetry receivers wherever one exists for the system or service. A native receiver runs inside the Collector and queries the system directly, so you no longer need to deploy and maintain a separate exporter process.

Here are some common Prometheus exporters and their OpenTelemetry equivalents:

| Prometheus Exporter | OpenTelemetry Receiver | Description |
|---|---|---|
| node_exporter | hostmetrics | Collects system metrics (CPU, memory, disk, network) |
| mysqld_exporter | mysql | Collects MySQL server metrics |
| redis_exporter | redis | Collects Redis metrics |
| mongodb_exporter | mongodb | Collects MongoDB metrics |
| postgres_exporter | postgresql | Collects PostgreSQL metrics |
| kafka_exporter | kafkametrics | Collects Kafka metrics |
| nginx_exporter | nginx | Collects NGINX metrics |

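Because the Collector forwards everything to SigNoz over OTLP, you can use any receiver listed in the OpenTelemetry Registry, provided it is included in your Collector distribution (most receivers ship in the contrib distribution). To configure a new metric receiver, follow these instructions.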
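The sketch below illustrates swapping an exporter for a native receiver by replacing `node_exporter` with the `hostmetrics` receiver. The 60-second interval mirrors the `node-exporter` scrape job shown earlier. The list of scrapers is an assumption, so enable only the ones you need. If the Collector runs in a container, it also needs the host filesystem mounted and the receiver's `root_path` option set, so that it reports host metrics rather than container metrics.

```yaml
receivers:
  hostmetrics:
    # Mirrors the 60s scrape_interval of the node-exporter job
    collection_interval: 60s
    scrapers:
      cpu: {}
      memory: {}
      load: {}
      disk: {}
      filesystem: {}
      network: {}

service:
  pipelines:
    metrics:
      # hostmetrics replaces the prometheus scrape of node_exporter
      receivers: [otlp, hostmetrics]
      processors: [batch]
      exporters: [otlp]
```

Metric names change with this switch and follow OpenTelemetry conventions (for example, `system.cpu.time` instead of `node_cpu_seconds_total`). Update any dashboards and alerts that reference the old names.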

From Application Metrics

If your applications expose a /metrics endpoint (common in Go, Java, Python apps using Prometheus client libraries):

- Add Scrape Job: Add a job to the `prometheus` receiver to scrape your app.

```yaml
receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: 'my-app'
          scrape_interval: 30s
          static_configs:
            - targets: ['app-host:8080']
```
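Standard Prometheus scrape-config fields carry over if your app serves metrics on a path other than `/metrics`, runs on several hosts, or needs extra labels. In this sketch, the hostnames, the `/internal/metrics` path and the `environment` label are hypothetical placeholders.

```yaml
receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: 'my-app'
          scrape_interval: 30s
          # Defaults to /metrics; override only if your app uses another path
          metrics_path: /internal/metrics
          static_configs:
            - targets: ['app-host-1:8080', 'app-host-2:8080']
              # Static labels attached to every series scraped from these targets
              labels:
                environment: 'production'
```

As with the exporter example, make sure `prometheus` is listed in `service.pipelines.metrics.receivers`.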

From Grafana Agent

Grafana Agent is based on the Prometheus and OpenTelemetry Collector codebases. Migrating to the official OpenTelemetry Collector is usually straightforward.

- Identify Receivers: Check your Grafana Agent config for `integrations` or `metrics` sections.

- Map to OTel:
  - `node_exporter` integration -> `hostmetrics` receiver, or `prometheus` receiver scraping `node_exporter`.
  - `prometheus.scrape` -> `prometheus` receiver.
  - `otlp` -> `otlp` receiver.
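In Grafana Agent static mode, scrape jobs live under `metrics.configs[].scrape_configs` and use the standard Prometheus schema. They therefore usually move into the Collector's `prometheus` receiver unchanged. The sketch below shows one job before and after. The `remote_write` block has no counterpart in the Collector config, because the Collector sends data through its OTLP exporter instead. The config name and the remote-write URL are placeholders.

```yaml
# Before: Grafana Agent (static mode) config
metrics:
  configs:
    - name: default
      scrape_configs:
        - job_name: 'node-exporter'
          static_configs:
            - targets: ['localhost:9100']
      remote_write:
        - url: <your-remote-write-url>
---
# After: OpenTelemetry Collector config
receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: 'node-exporter'
          static_configs:
            - targets: ['localhost:9100']
# No remote_write here: metrics leave through the otlp exporter
# listed in service.pipelines.metrics.exporters.
```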

Refer to OpenTelemetry Metrics Receivers for more details.

Finding More Metric Sources

For a complete list of all supported metric collection methods, see Send Metrics to SigNoz.

Switching to Native OTel Metrics

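For new applications, we recommend using OpenTelemetry SDKs directly instead of Prometheus client libraries. SDKs push metrics to the Collector over OTLP, which removes the need for scraping. They can also attach exemplars that link individual measurements to the trace that was active when they were recorded.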

See Instrumentation Guides for your language.

Validate

Compare your SigNoz metrics against your original inventory.

Check Metrics Are Arriving

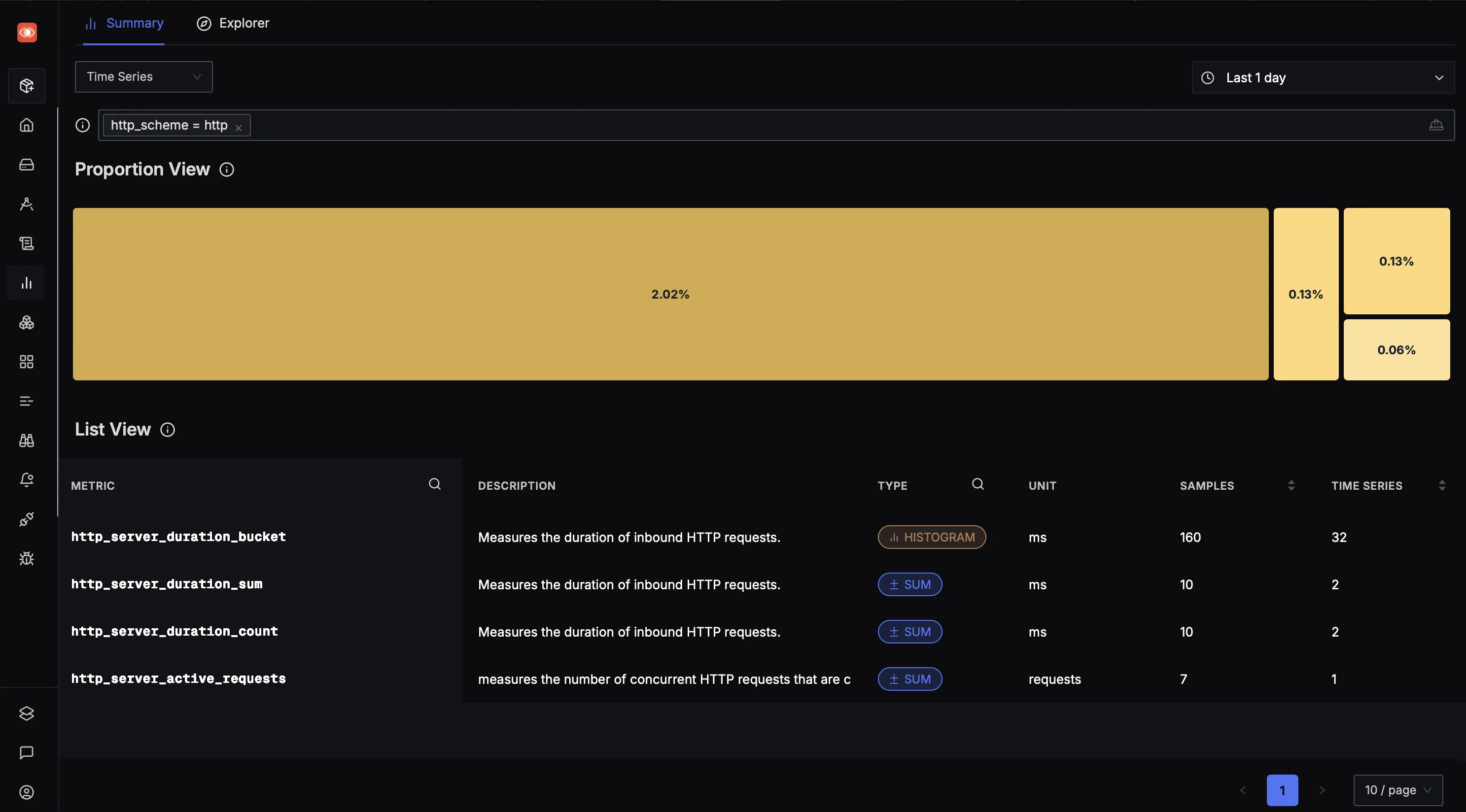

- In SigNoz, navigate to Metrics in the left sidebar.

- Use the List View to browse available metrics.

- Click a metric name to see its attributes and values.

Troubleshooting

Metrics not appearing in SigNoz

- Check Collector status: Verify the OpenTelemetry Collector is running.

- Verify endpoint: Confirm `https://ingest.<region>.signoz.cloud:443` matches your account region.

- Check ingestion key: Ensure the `signoz-ingestion-key` header is set correctly.

- Check scrape targets: Look at Collector logs to see if Prometheus scrapes are failing (connection refused, timeout).
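To confirm that the Collector is receiving metrics at all, you can temporarily add the `debug` exporter next to the SigNoz exporter. It prints each batch to the Collector's own log. This sketch assumes a recent Collector release (older releases ship an equivalent `logging` exporter instead). It also assumes the `prometheus` receiver and `batch` processor are defined elsewhere in the same file, and it reuses placeholder endpoint and key values. Remove the `debug` exporter once data shows up in SigNoz, since `detailed` verbosity is noisy.

```yaml
exporters:
  otlp:
    endpoint: "ingest.<region>.signoz.cloud:443"
    tls:
      insecure: false
    headers:
      signoz-ingestion-key: "<your-ingestion-key>"
  # Prints every received data point to the Collector's log output
  debug:
    verbosity: detailed

service:
  pipelines:
    metrics:
      receivers: [otlp, prometheus]
      processors: [batch]
      # Fan out to both SigNoz and the local log
      exporters: [otlp, debug]
```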

Missing attributes on metrics

If metrics appear but lack expected labels:

- Check relabel configs: Ensure you aren't dropping labels in your scrape config.

- Verify resource attributes: Ensure `service.name` and other resource attributes are set.
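The `prometheus` receiver derives `service.name` from each scrape job's `job_name` and `service.instance.id` from the target's instance. Renaming a job therefore changes the service name you see in SigNoz. To add an attribute that every metric should carry, one option is the contrib `resource` processor. The `deployment.environment` key and its value below are illustrative only.

```yaml
processors:
  # Adds the attribute, or overwrites it if already present, on all data passing through
  resource:
    attributes:
      - key: deployment.environment
        value: production
        action: upsert
  batch: {}

service:
  pipelines:
    metrics:
      receivers: [otlp, prometheus]
      # Processors run in the order listed
      processors: [resource, batch]
      exporters: [otlp]
```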

Metric values don't match

- Align time ranges: Ensure both queries cover the exact same period.

- Match aggregations: Use the same function in both queries. PromQL `rate()` and `irate()` can produce different results for the same data.
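Differing scrape intervals can also cause mismatches. The same `rate()` query can return different values when samples are spaced 15s apart instead of 60s apart. If your Prometheus used a global interval, you can set the same default in the Collector's `prometheus` receiver. The 15s and 10s values here are assumptions, so copy whatever your `prometheus.yml` declares.

```yaml
receivers:
  prometheus:
    config:
      # Same shape as the global section of prometheus.yml
      global:
        scrape_interval: 15s
        scrape_timeout: 10s
      scrape_configs:
        - job_name: 'node-exporter'
          # No per-job scrape_interval, so the global value applies
          static_configs:
            - targets: ['localhost:9100']
```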

Next Steps

Once your metrics are flowing to SigNoz:

- Migrate your dashboards to visualize metrics in SigNoz

- Set up alerts based on your metrics

- Migrate traces for end-to-end observability

- Migrate logs to complete your observability stack