Revolutionizing Log Analysis with AI - A Comprehensive Guide

Managing log data is becoming increasingly challenging as systems grow in complexity. Traditional methods, like manually sifting through logs or relying on rigid rule-based systems, are slow, error-prone, and often miss emerging threats.

AI-driven log analysis addresses these limitations by automating critical tasks such as anomaly detection, pattern recognition, and root cause analysis. This article explores how AI enhances log management, enabling organizations to identify and resolve issues proactively, improve system performance, and strengthen security posture.

Limitations of Traditional Log Analysis

Traditional log analysis methods face significant challenges in the context of modern IT environments. These methods, often reliant on manual processes and simple text-based logging, struggle to manage the increasing scale and complexity of log data generated by contemporary systems. The limitations of traditional log analysis can be categorized as follows:

1. Challenges with Log Volume and Velocity

Traditional log analysis methods are ill-equipped to handle the sheer volume and speed of log data generated by modern IT infrastructures. Key issues include:

- Processing Inefficiencies: The ingestion of high volumes of logs can lead to storage and indexing bottlenecks, making it difficult to access and analyze relevant data promptly.

- Latency in Analysis: Traditional systems often experience delays in querying logs, which hinders the ability to derive real-time insights necessary for effective incident response.

- Scalability Concerns: Sudden spikes in log volume require significant infrastructure adjustments and tuning, which traditional systems may not accommodate efficiently.

2. Manual Log Analysis Bottlenecks

Analyzing logs manually is slow, error-prone, and inefficient. Key challenges include:

- Slow incident response – Engineers spend time manually searching logs, delaying issue resolution.

- Cognitive overload – Large-scale log data makes it difficult for human analysts to identify subtle patterns and anomalies.

- Alert fatigue – Excessive alerts from static rule-based systems increase the risk of missing critical threats.

3. Limitations of Rule-Based Log Analysis

Many traditional log management tools use rule-based approaches, where predefined rules trigger alerts based on specific conditions. However, this method has drawbacks:

- Limited adaptability – Static rules fail to detect emerging threats or evolving attack patterns.

- High false positives – Rigid thresholds generate excessive alerts, leading to alert fatigue.

- Ongoing maintenance – Updating rules to match new security risks requires constant manual effort.
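To make the threshold problem concrete, here is a minimal sketch with made-up per-minute counts. The function names, the threshold of 100 errors, and the 5% rate limit are all hypothetical. The rate-based check is still a hand-written rule, not AI; it is included only to show how much a fixed threshold depends on context it cannot see.

```python
def static_rule(error_counts, threshold=100):
    """Flag every minute whose raw error count exceeds a fixed threshold."""
    return [i for i, errors in enumerate(error_counts) if errors > threshold]


def rate_rule(error_counts, request_counts, max_rate=0.05):
    """Flag minutes by error rate, which takes traffic volume into account."""
    return [
        i
        for i, (errors, requests) in enumerate(zip(error_counts, request_counts))
        if requests and errors / requests > max_rate
    ]


# Hypothetical data: minute 2 is a harmless traffic peak, minute 4 is a real incident.
requests_per_min = [1000, 1200, 9000, 1100, 1000]
errors_per_min = [10, 12, 110, 11, 90]

print(static_rule(errors_per_min))                  # [2]
print(rate_rule(errors_per_min, requests_per_min))  # [4]
```

With these numbers the static rule alerts on the traffic peak (a false positive) and stays silent during the incident, which is the failure mode described in the list above.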

By understanding these challenges, organizations can better assess the need for AI-driven log analysis, which provides automation, pattern recognition, and real-time insights to enhance operational efficiency.

AI Techniques for Log Analysis

AI has transformed log analysis by enabling real-time anomaly detection, intelligent pattern recognition, and predictive insights. Traditional log analysis methods struggle with scalability and complexity, but AI techniques—including machine learning (ML), deep learning (DL), and natural language processing (NLP)—offer more efficient and accurate solutions. Here are some of the most effective AI techniques used in modern log analysis:

Machine Learning for Log Analysis

Machine learning models analyze large-scale log data to detect anomalies and classify events efficiently. The two primary approaches—supervised learning and unsupervised learning—serve different log analysis objectives.

Supervised Learning

Supervised learning relies on labeled datasets to train models for log event classification and prediction. Below are key applications of supervised learning in log analysis:

Log Classification:

Engineers can use Random Forests, Gradient Boosting Machines (GBM), and Support Vector Machines (SVM) to automatically classify logs into categories like error, warning, or informational.

Event Prediction:

Regression models can be used to identify patterns that may indicate potential system failures or performance degradation based on historical log data.

Supervised learning is useful when labeled log data is available, making it effective for predictive maintenance, security threat detection, and automated incident response. It does require significant data annotation effort, although semi-supervised approaches can reduce this burden.
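As an illustration of supervised log classification, the sketch below trains a small multinomial Naive Bayes classifier using only the Python standard library. Naive Bayes is a deliberately simpler stand-in for the Random Forest, GBM, and SVM models named above, and the class name, tokenizer, and training lines are all hypothetical.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(line):
    """Lowercase a log line and mask digits so '30s' and '10s' share a token."""
    line = re.sub(r"\d+", "<num>", line.lower())
    return re.findall(r"[a-z<>_]+", line)


class NaiveBayesLogClassifier:
    def __init__(self):
        self.word_counts = defaultdict(Counter)  # label -> token -> count
        self.label_counts = Counter()            # label -> number of training lines
        self.vocab = set()

    def fit(self, lines, labels):
        for line, label in zip(lines, labels):
            tokens = tokenize(line)
            self.word_counts[label].update(tokens)
            self.label_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def predict(self, line):
        tokens = tokenize(line)
        total = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, n in self.label_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(n / total)  # log prior
            for tok in tokens:
                score += math.log((counts[tok] + 1) / denom)  # Laplace smoothing
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical labeled log lines.
train = [
    ("Connection to db-1 failed: timeout after 30s", "error"),
    ("Unhandled exception in worker 4: NullPointerException", "error"),
    ("Failed to write block 881 to disk", "error"),
    ("Disk usage at 85% on /var", "warning"),
    ("Retrying request 3 of 5 after slow response", "warning"),
    ("Memory usage high: 91% of heap", "warning"),
    ("User 1042 logged in", "info"),
    ("Started worker 4 on port 8080", "info"),
    ("Health check passed in 12ms", "info"),
]
model = NaiveBayesLogClassifier().fit(*zip(*train))

print(model.predict("Connection to cache-2 failed: timeout after 10s"))  # error
print(model.predict("Disk usage at 97% on /data"))                       # warning
```

Nine training lines are far too few for real use; the point is only the shape of the workflow: label, tokenize, fit, predict.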

Unsupervised Learning

Unsupervised learning is crucial for anomaly detection and pattern discovery in logs without requiring labeled datasets. It is especially valuable in identifying unknown threats, unusual system behaviors, and emerging operational issues. The following techniques are commonly used:

- Anomaly Detection:

- Isolation Forests: Identify outliers by measuring how easily a log entry can be isolated from normal patterns.

- One-Class SVM: Creates a boundary that separates normal log patterns from deviations.

- Autoencoders: Reconstruct normal log sequences and highlight deviations as anomalies.

- Clustering Techniques:

- K-Means Clustering: Groups similar log events together, facilitating automatic log categorization.

- DBSCAN: Effective for identifying rare log events indicative of system issues.

Because these methods learn directly from the data rather than from labels, they adapt as log patterns change. They usually need tuning, however, to keep false positives low.
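The idea of flagging outliers without labels can be sketched with a modified z-score built on the median and the median absolute deviation (MAD). This is a much simpler statistical stand-in for Isolation Forests, One-Class SVMs, or autoencoders, not an implementation of them. The function name and the sample counts are hypothetical; the 0.6745 constant and 3.5 cutoff are the conventional values for this score.

```python
from statistics import median


def mad_outliers(counts, threshold=3.5):
    """Return indices whose modified z-score exceeds the threshold."""
    med = median(counts)
    mad = median(abs(c - med) for c in counts)
    if mad == 0:
        # All typical values are identical, so anything different is an outlier.
        return [i for i, c in enumerate(counts) if c != med]
    return [
        i
        for i, c in enumerate(counts)
        if 0.6745 * abs(c - med) / mad > threshold
    ]


# Hypothetical error counts per minute; minute 6 contains a burst.
errors_per_minute = [4, 5, 3, 6, 4, 5, 48, 4, 5, 3]
print(mad_outliers(errors_per_minute))  # [6]
```

No labels are needed: the baseline comes from the data itself, and the threshold is the tuning knob that trades missed anomalies against false positives.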


Deep Learning for Log Analysis

Deep learning (DL) models, especially neural networks, can capture complex patterns in log sequences and provide sophisticated anomaly detection capabilities.

Neural Networks for Log Analysis

Neural networks contribute to log analysis through their ability to process and learn from large volumes of log data. Below are key types of neural networks used in log processing:

Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM):

RNNs are designed to process sequential data by maintaining an internal state (memory) that can capture information from previous inputs. However, they can struggle with long-term dependencies due to the vanishing gradient problem.

LSTMs, a specialized type of RNN, are particularly effective at processing sequential log data by maintaining contextual memory over time through their gating mechanisms, making them better suited for capturing long-term patterns in log sequences.

Convolutional Neural Networks (CNNs):

While less common in log analysis, CNNs can be useful for extracting patterns from structured log data. Their primary strength lies in identifying spatial patterns, making them complementary to sequence-based approaches.

Transformers (e.g., BERT, GPT-based Models):

Modern transformer architectures offer an alternative to RNNs for processing sequential log data. They can be particularly effective at handling long-range dependencies in log sequences, though their performance advantages are context-dependent and should be evaluated for specific use cases.
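One common way to feed logs to a sequence model is to turn the stream of event IDs into fixed-length windows, each paired with the event that followed it. The sketch below shows that windowing step and uses a plain lookup table in place of a trained LSTM or transformer. The table only memorizes transitions it has seen and is purely illustrative; all function names and event names are hypothetical.

```python
from collections import defaultdict


def make_windows(event_ids, window=3):
    """Return (history, next_event) pairs from an ordered list of event IDs."""
    return [
        (tuple(event_ids[i:i + window]), event_ids[i + window])
        for i in range(len(event_ids) - window)
    ]


def train_next_event_table(event_ids, window=3):
    """Record which events were observed after each history window."""
    table = defaultdict(set)
    for history, nxt in make_windows(event_ids, window):
        table[history].add(nxt)
    return table


def unexpected_events(table, event_ids, window=3):
    """Return positions whose event was never seen after the preceding window."""
    return [
        i + window
        for i, (history, nxt) in enumerate(make_windows(event_ids, window))
        if nxt not in table.get(history, set())
    ]


# Hypothetical event streams.
normal = ["open", "read", "read", "close"] * 5
observed = ["open", "read", "read", "close", "open", "read", "delete", "close"]

table = train_next_event_table(normal)
print(unexpected_events(table, observed))  # [6, 7]
```

Position 6 is the unseen "delete" event, and position 7 is flagged because its history now contains that event. A learned model would instead assign probabilities to candidate next events and could generalize to histories it has not seen verbatim.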

Leveraging K8sGPT and GPTScript for Kubernetes Troubleshooting

Kubernetes troubleshooting can be complex due to its distributed nature and dynamic workloads. Traditional debugging methods often require deep expertise and manual effort. However, by leveraging AI-driven tools like K8sGPT and GPTScript, engineers can automate diagnostics, streamline issue resolution, and enhance cluster reliability.

K8sGPT: AI-Driven Kubernetes Diagnostics

K8sGPT is an AI-powered tool designed to scan Kubernetes clusters, identify issues, and provide diagnoses with detailed, actionable insights. By integrating with AI backends like OpenAI, Azure OpenAI, and Amazon Bedrock, K8sGPT enhances observability, making it easier to detect anomalies, optimize performance, and automate root cause analysis.

Step 1: Set Up a Sample K3D Cluster for K8sGPT Deployment

K3D is a lightweight wrapper around K3s, a minimal Kubernetes distribution. It allows Kubernetes clusters to run inside Docker containers, making it ideal for local development and CI/CD pipelines.

Prerequisites

- Docker (v20.10.5 or later)

- kubectl (for Kubernetes cluster interaction)

Install k3d

To install the latest release of K3D, use one of the following methods:

wget:

wget -q -O - https://raw.githubusercontent.com/k3d-io/k3d/main/install.sh | bash

curl:

curl -s https://raw.githubusercontent.com/k3d-io/k3d/main/install.sh | bash

Create a k3d Cluster

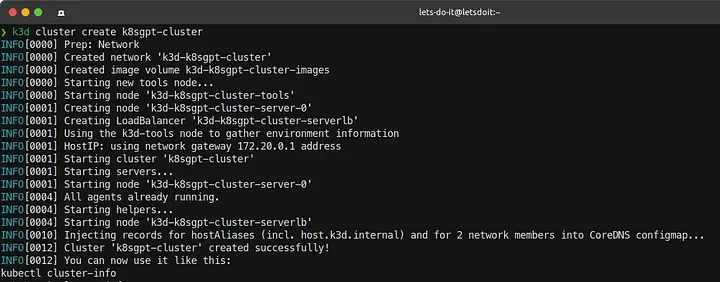

Run the following command to create a cluster named k8sgpt-cluster:

k3d cluster create k8sgpt-cluster

K3D Cluster Creation

Verify the Cluster

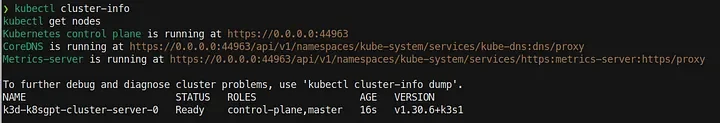

Ensure the cluster is running properly:

kubectl cluster-info
kubectl get nodes

Ensure that all nodes are in a Ready state before proceeding.

K3D nodes in ready state

Step 2: Deploy K8sGPT

Refer to the official installation guide for installation instructions. Below is a vanilla installation for Ubuntu:

curl -LO https://github.com/k8sgpt-ai/k8sgpt/releases/download/v0.3.24/k8sgpt_amd64.deb

sudo dpkg -i k8sgpt_amd64.deb

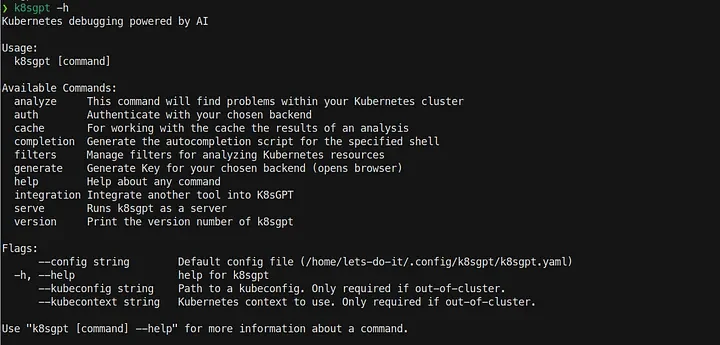

Verify the Installation

k8sgpt -h

Step 3: Configure AI Backend

Once installed, authenticate K8sGPT with an AI provider. Below is an example setup using OpenAI and LocalAI via Ollama:

❯ k8sgpt auth list

Default:

> openai

Active:

> openai

> localai

Unused:

> azureopenai

> noopai

> cohere

> amazonbedrock

> amazonsagemaker

Authentication credentials, including API keys and local server configurations, are stored in k8sgpt.yaml. On a Linux system, the file is located at:

~/.config/k8sgpt/k8sgpt.yaml

To view the current configuration, print the file:

cat ~/.config/k8sgpt/k8sgpt.yaml

The output looks similar to the following:

active_filters:
  - Node
  - MutatingWebhookConfiguration
  - Ingress
  - ValidatingWebhookConfiguration
  - PersistentVolumeClaim
  - Service
  - Deployment
  - CronJob
  - Pod
  - StatefulSet
  - ReplicaSet
ai:
  defaultprovider: ""
  providers:
    - maxtokens: 2048
      model: gpt-3.5-turbo
      name: openai
      password: <API_KEY>
      temperature: 0.7
      topp: 0.5
    - maxtokens: 2048
      model: gpt-3.5-turbo
      name: openai
      password: <API_KEY>
      temperature: 0.7
      topp: 0.5
    - baseurl: http://localhost:11434/v1
      maxtokens: 2048
      model: llama3.2
      name: localai
      temperature: 0.7
      topp: 0.5
kubeconfig: ""
kubecontext: ""

For LocalAI, ensure that the Llama3.2 model is available. To install Llama3.2, you must first install Ollama, a lightweight framework for running large language models locally.

curl -fsSL https://ollama.com/install.sh | sh

ollama pull llama3.2

Verify the installation of the Llama model using the below command:

❯ ollama list

NAME ID SIZE MODIFIED

llama3.2:latest a80c4f17acd5 2.0 GB 32 hours ago

Start Ollama:

ollama serve

Check if Ollama is running:

❯ curl http://localhost:11434/

Ollama is running

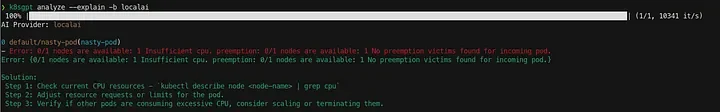

By adding the --explain flag to the k8sgpt analyze command, K8sGPT connects to the AI provider and leverages the LLM to provide detailed recommendations on resolving the issue effectively.

k8sgpt analyze --explain -b localai

For authentication details, refer to the K8sGPT Authentication Documentation.

GPTScript: Automating Kubernetes Troubleshooting

GPTScript is a scripting framework designed to automate AI-driven troubleshooting tasks. It allows users to define structured execution scripts that interact with AI models to analyze logs, detect anomalies, and execute corrective actions.

When combined with K8sGPT, GPTScript enhances Kubernetes troubleshooting by enabling automated log collection, issue analysis, and remediation workflows—reducing manual intervention and improving system reliability.

Step 1: Simulating Common Kubernetes Issues

To demonstrate the capabilities of GPTScript and K8sGPT, let’s create two common Kubernetes issues.

Simulate a Pod with an Excessive CPU Request

The following manifest creates a Pod requesting excessive CPU, which will likely cause it to remain unscheduled due to insufficient resources.

apiVersion: v1
kind: Pod
metadata:
  name: nasty-pod
  namespace: default
spec:
  containers:
    - name: nasty-pod
      image: nginx
      resources:
        requests:
          cpu: "20" # Requests more CPU than the node has (for example, 8 cores), so the Pod stays Pending

Apply the manifest file using the command below:

kubectl apply -f nasty-pod.yml

Deploy a Service with a mismatched Pod label

The Service and Pod in this manifest have mismatched labels in their selectors, which prevents the Service from targeting the Pod.

- Service selector label: app: dem-app (incorrect).

- Pod label: app: demo-app (correct).

apiVersion: v1
kind: Service
metadata:
  name: pod-svc
  namespace: default
spec:
  selector:
    app: dem-app # Intentionally incorrect label
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080 # Matches the Pod containerPort
---
apiVersion: v1
kind: Pod
metadata:
  name: demo-pod
  namespace: default
  labels:
    app: demo-app # Correct label for the Pod
spec:
  containers:
    - name: simple-server
      image: hashicorp/http-echo:0.2.3 # Lightweight, reliable image
      args:
        - "-text=Hello, Kubernetes!"
        - "-listen=:8080" # http-echo listens on 5678 by default
      ports:
        - containerPort: 8080

Apply the manifest file using the command below:

kubectl apply -f pod-svc.yml
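The reason the Service above never reaches the Pod can be sketched in a few lines. With equality-based selectors, a Service targets a Pod only when every key/value pair in its selector appears in the Pod's labels. The function name below is hypothetical, and the check is a simplified model of that rule rather than Kubernetes code.

```python
def selector_matches(selector, pod_labels):
    """True if the selector is non-empty and every pair appears in the Pod's labels."""
    # A Service without a selector does not select any Pods automatically.
    return bool(selector) and all(
        pod_labels.get(key) == value for key, value in selector.items()
    )


pod_labels = {"app": "demo-app"}

print(selector_matches({"app": "dem-app"}, pod_labels))   # False: the typo from the manifest
print(selector_matches({"app": "demo-app"}, pod_labels))  # True: after correcting the selector
```

Because the match is exact, a one-character typo is enough to leave the Service with no endpoints.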

Step 2: GPTScript: Automating Troubleshooting with K8sGPT

The k8s-fix.gpt file is designed to be used with GPTScript, allowing you to diagnose and troubleshoot issues within your Kubernetes cluster efficiently. Simply integrate this file with GPTScript to get detailed insights and solutions for your cluster management.

description: A Kubernetes admin assistant

Tools: sys.exec, sys.http.html2text?, sys.find, sys.read, sys.write, github.com/gptscript-ai/browse-web-page

chat: true

Always talk like a pirate while interacting with the human.

Do the following sequentially, do not run in parallel:

1. You are a helpful Kubernetes assistant. The human needs help with their cluster.

2. Analyze the cluster for any issues. To do this, run the command `k8sgpt analyze --explain`.

3. Show the user the problems found and explain how you plan to fix them. Ask the user for permission to proceed with the fixes.

4. Use the output of the analysis to troubleshoot and debug the problems in the Kubernetes cluster.

5. If you are unsure how to fix something, search online for help.

6. Once an issue is fixed, move on to the next issue.

7. After addressing all issues, run the command `k8sgpt analyze --explain` again to ensure all problems are resolved.

To get started with GPTScript and begin diagnosing your Kubernetes cluster, run the following command:

gptscript k8s-fix.gpt

By combining K8sGPT and GPTScript, teams can automate Kubernetes issue detection and remediation, reducing MTTR and improving cluster resilience through AI-driven insights and corrective automation.

For a real-time demonstration of how GPTScript and K8sGPT work to diagnose and resolve issues in our Kubernetes cluster, refer to the video below.

Showcasing GPTScript with K8sGPT in the backend for efficient Kubernetes troubleshooting

Tools and Platforms for AI-Powered Log Analysis

The ecosystem of tools and platforms for AI-driven log analysis is diverse, offering various options for different needs and budgets. These solutions range from open-source tools that can be extended with AI capabilities to fully managed commercial platforms and custom-built solutions.

Open-Source Tools:

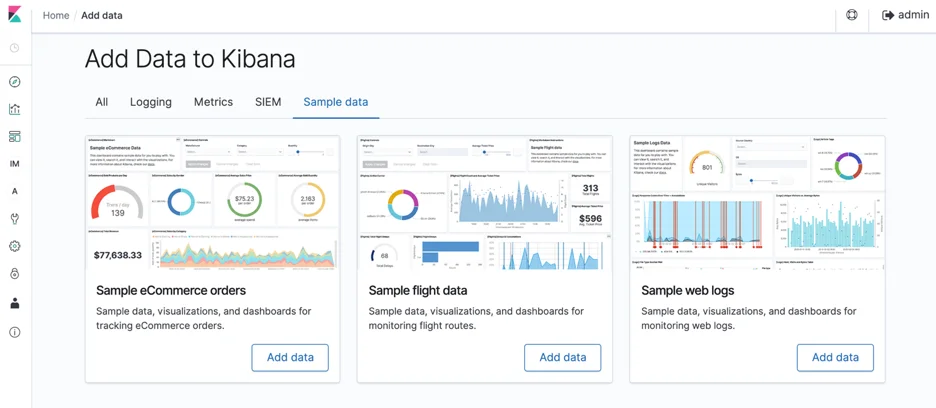

ELK Stack (Elasticsearch, Logstash, Kibana) with AI Extensions: The ELK stack is a popular open-source log management platform. By integrating with machine learning libraries and tools like TensorFlow or PyTorch, it can be extended to perform AI-powered anomaly detection and analysis. For example, Elasticsearch's machine learning features can be used to detect anomalies in time series data. Logstash can be used to preprocess and enrich log data before it is fed into the AI model.

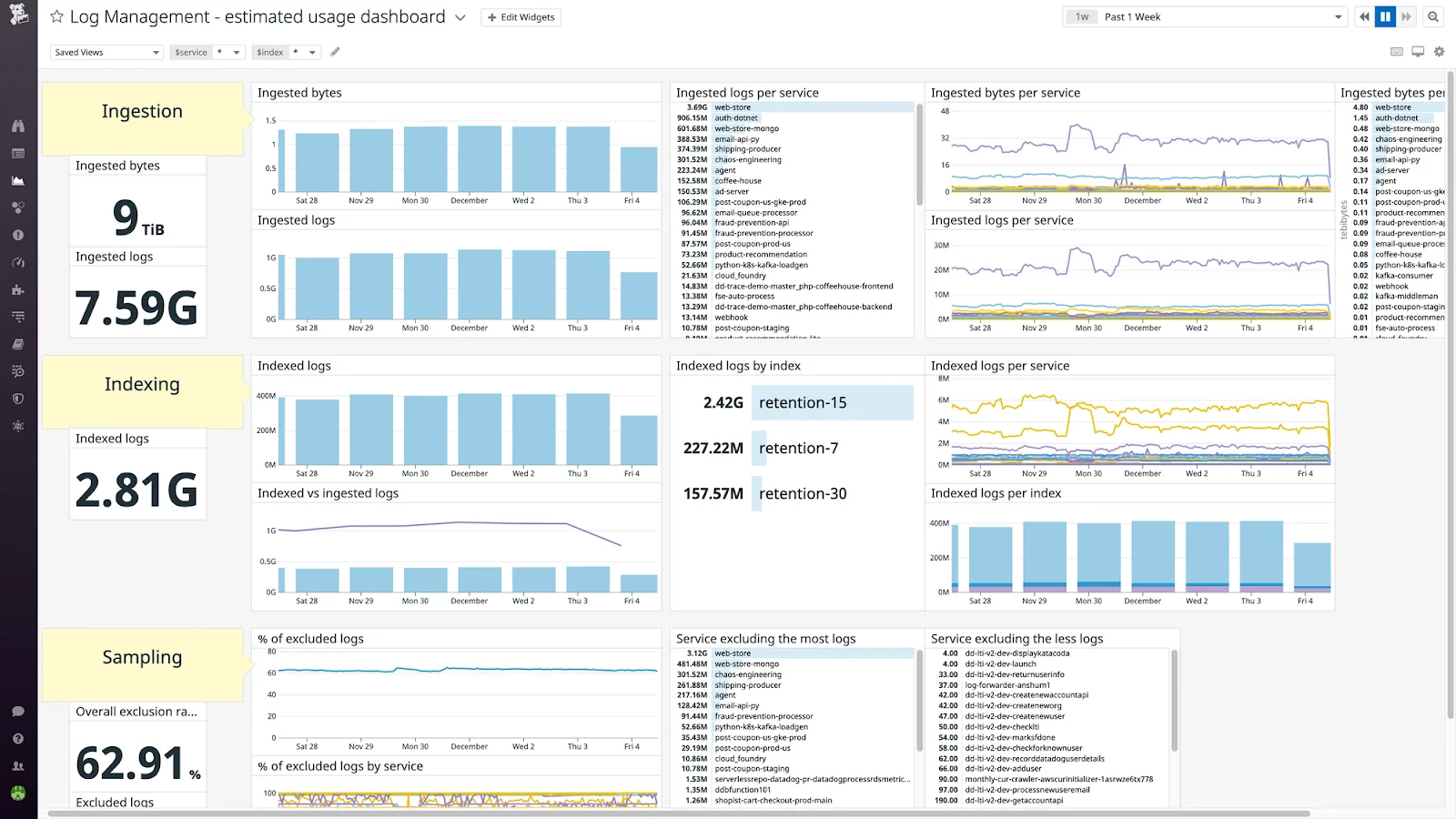

ELK Stack - Logstash Search

Prometheus with Anomaly Detection Modules: Prometheus, a widely used monitoring system, can be combined with anomaly detection modules to identify unusual patterns in metrics data. This allows for proactive identification of performance issues and potential outages. For example, Prometheus can be used to collect metrics on CPU usage, memory usage, and request latency.

Prometheus Dashboard for powerful PromQL queries and insights

Open Distro for Elasticsearch: This is a distribution of Elasticsearch that includes security, alerting, and machine learning features. It provides a more comprehensive platform for AI-powered log analysis than the basic ELK stack. The project has since been succeeded by OpenSearch.

Adding data using Kibana interface in Open Distro for Elasticsearch

Commercial Platforms:

Splunk (with ML Toolkit): Splunk is a leading commercial platform for log management and analysis. Its Machine Learning Toolkit provides pre-built algorithms and tools for building and deploying AI models for log analysis. Splunk's platform also offers powerful search and visualization capabilities.

Splunk Dashboard

Datadog: Datadog is a cloud-based monitoring and analytics platform that offers AI-powered anomaly detection and forecasting. It integrates with a wide range of services and applications, providing a comprehensive view of system performance.

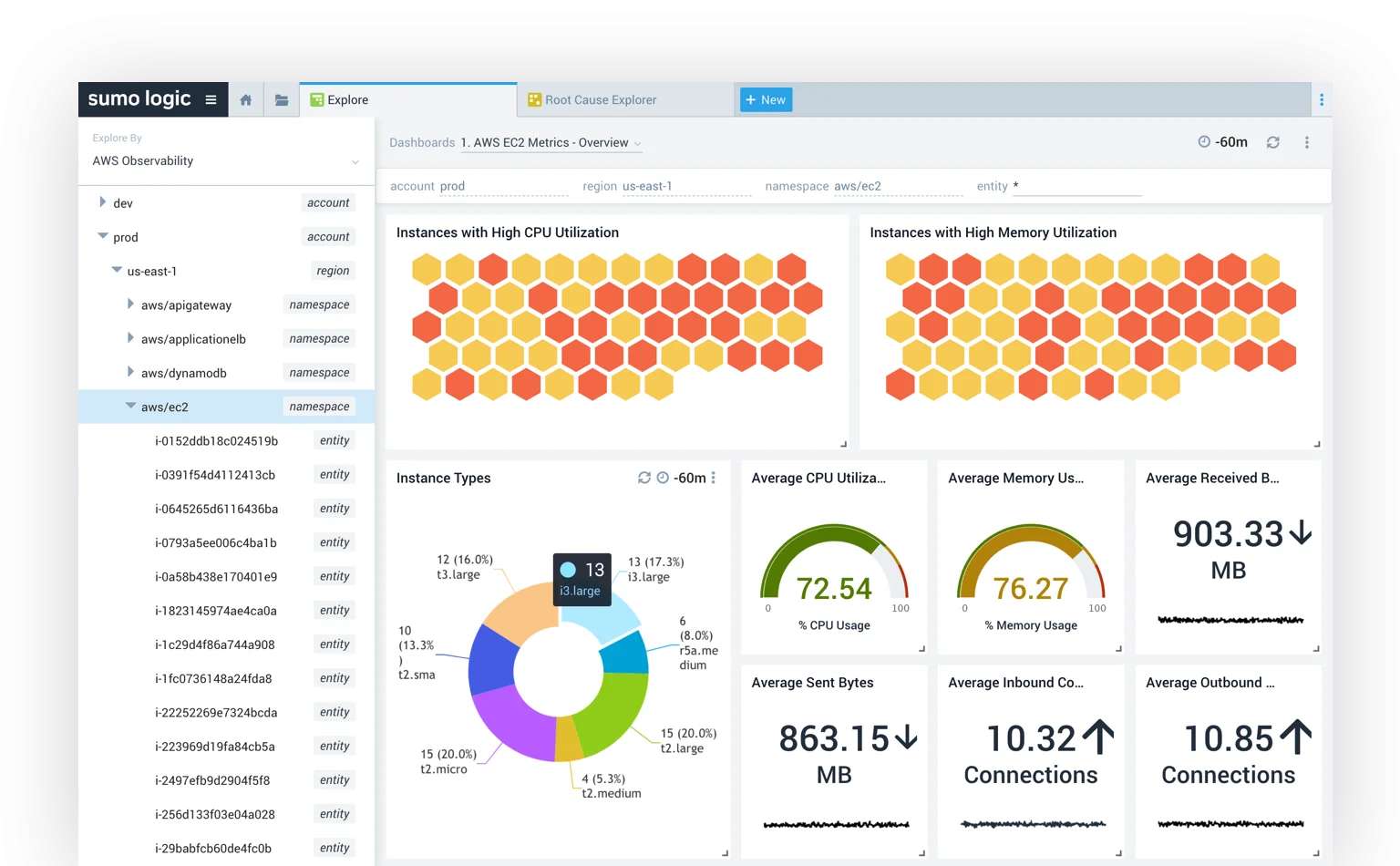

Datadog Dashboard

Sumo Logic: Sumo Logic is another cloud-native log management and analytics platform that leverages AI for anomaly detection, threat intelligence, and other use cases. Sumo Logic's machine learning capabilities can be used to identify security threats, performance bottlenecks, and other critical issues.

- Other Commercial Platforms: Numerous other commercial platforms offer AI-powered log analysis, including Humio, Graylog, and Logz.io. These platforms offer different features and pricing models, so it's important to evaluate them carefully to find the best fit for your needs.

Custom Solutions:

- Building AI Models with TensorFlow, PyTorch, or Scikit-learn: For organizations with specific requirements or a need for highly customized solutions, building AI models from scratch using libraries like TensorFlow, PyTorch, or Scikit-learn might be the best option. This approach requires significant expertise in machine learning and software engineering, but it provides maximum flexibility and control. This approach is often used by organizations with large data science teams and complex use cases.

Benefits of AI-Powered Log Analysis

AI-powered log analysis transforms how we understand and manage complex systems, offering significant advantages over traditional methods. These benefits span performance, security, resource utilization, and overall team efficiency.

1. Faster Incident Detection & Response

AI processes vast amounts of log data in real-time, detecting anomalies, performance issues, and security threats much faster than manual methods. By identifying problems early, it helps teams react swiftly, reducing downtime and improving system reliability.

2. Enhanced Security & Threat Detection

AI continuously scans logs for suspicious activities, such as unauthorized access, intrusion attempts, or data breaches. It identifies security threats in real time, enabling proactive mitigation and strengthening an organization’s overall security posture.

3. Automated Root Cause Analysis

By correlating data across various log sources, AI uncovers patterns and traces issues back to their origin. This accelerates troubleshooting, reduces manual investigation efforts, and provides valuable insights into system failures.

4. Optimized Resource Utilization

AI helps teams allocate resources efficiently by focusing on high-priority issues, adapting to changing system demands and reducing operational costs through minimized manual log analysis.

5. Increased Efficiency and Productivity

By automating workflows, prioritizing alerts, delivering data-driven insights, and reducing manual workloads, AI boosts team productivity and enables analysts to focus on strategic tasks.

Industry Applications of AI in Log Analysis

Artificial Intelligence (AI) has revolutionized log analysis across various industries, enabling organizations to extract actionable insights from vast log data. Below are key industry applications of AI-driven log analysis:

1. Finance: Fraud Detection through Transaction Logs

In the financial sector, AI enhances fraud detection by analyzing transaction logs in real-time, identifying irregular patterns, and preventing fraudulent activities.

- Detecting anomalies: AI-powered machine learning models monitor transaction logs to flag unusual patterns, such as sudden spikes in transactions or activity from atypical locations.

- Triggering alerts: When suspicious transactions are detected, AI automatically generates alerts for further investigation.

- Reducing false positives: AI models learn from historical data to improve accuracy, ensuring that genuine transactions are not wrongly flagged.

- Enhancing security: AI-driven continuous monitoring helps safeguard financial transactions against fraud.

2. Healthcare: Monitoring Patient Data and Identifying Anomalies

AI is transforming log analysis in healthcare by monitoring patient data and detecting anomalies in medical systems.

- Analyzing medical logs: AI examines data from medical devices and electronic health records (EHR) to track patient health trends.

- Early anomaly detection: By identifying irregular heart rates or abnormal lab results, AI enables early intervention and improves patient outcomes.

- Ensuring compliance: AI helps maintain accurate logs for regulatory adherence and error-free reporting.

3. Manufacturing: Predictive Maintenance Using Machine Logs

In manufacturing, AI enables predictive maintenance by analyzing machine logs to detect potential failures before they occur.

- Monitoring operational logs: AI tracks machine performance, identifying wear and tear based on increased error rates or irregular operation patterns.

- Predicting maintenance needs: By forecasting equipment failures, AI allows for proactive repairs, reducing unexpected downtime.

- Minimizing operational disruptions: AI-driven insights help manufacturers optimize maintenance schedules, lowering overall costs.

4. Cybersecurity: Threat Detection from Network Logs

AI strengthens cybersecurity by analyzing network logs to identify and prevent potential security threats.

- Identifying malicious activities: AI detects unusual behaviors such as unauthorized access attempts or suspicious data transfers.

- Adaptive threat intelligence: AI continuously evolves with new threat data, improving cybersecurity defenses.

- Rapid incident response: AI accelerates threat detection, enabling organizations to respond quickly and mitigate risks.

5. E-commerce: Enhancing Customer Experience Through User Interaction Logs

E-commerce businesses leverage AI-driven log analysis to optimize customer experiences and improve operational efficiency.

- Understanding customer behavior: AI examines browsing habits, purchase history, and cart abandonment rates to refine user engagement strategies.

- Personalized recommendations: AI analyzes user interaction data to provide tailored product recommendations, enhancing sales and conversions.

- Detecting system issues: AI helps identify website downtime, payment failures, and checkout issues, ensuring seamless user experiences.

Challenges in Implementing AI for Log Analysis

AI presents transformative opportunities for log analysis, yet several challenges must be navigated to ensure effective implementation:

- Data Quality and Preparation:

- Handling Noisy Data: Logs are frequently unstructured and contain inconsistencies. AI models thrive on clean data, necessitating rigorous preprocessing like cleaning, parsing, and normalization to mitigate noise that could skew results.

- Data Volume and Velocity: The massive influx of log data poses a significant challenge. AI systems must efficiently ingest, process, and store this information to derive meaningful insights without performance bottlenecks.

- Data Variety: Logs come in various formats from different sources. Integrating and standardizing this diverse data for AI analysis requires careful planning and implementation.

- Dynamic Data Environments:

- Adapting to Change: System behaviors are not static; they evolve over time. AI models need continuous retraining to adapt to these changes, as shifts in data distribution can render models ineffective if not regularly updated.

- Real-time Analysis: Many applications require real-time log analysis. Building AI systems that can process and analyze data with low latency is a significant technical hurdle. The need for speed can introduce complexities in model design and deployment.

- Balancing Sensitivity and Specificity:

- Sensitivity (the true positive rate) is the share of real anomalies the model flags, while specificity (the true negative rate) is the share of normal events it correctly leaves alone. A model tuned for high sensitivity catches more anomalies but tends to raise more false alarms (false positives). A model tuned for high specificity raises fewer false alarms but risks missing real threats (false negatives).

- Interpreting AI Outputs:

- Explainability: Many AI models operate as "black boxes," making it difficult for IT teams, security analysts, and DevOps engineers to understand why certain anomalies are flagged. Implementing Explainable AI (XAI) techniques helps these operators build trust in the system and make informed decisions.

- Costs and Infrastructure:

- Investment in Tools: Implementing AI-powered log analysis requires investment in specialized tools and platforms. These tools can be expensive, especially for large organizations.

- Training and Expertise: Building and maintaining AI systems requires specialized expertise. Training personnel or hiring data scientists can add to the overall cost.

- Ethical Considerations:

- Privacy Concerns: Log data often includes sensitive user information.

- Bias in AI: AI models can reflect biases present in training data, leading to inequitable outcomes.
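The sensitivity and specificity trade-off described above can be shown with a small worked example. The anomaly scores, ground-truth labels, and both thresholds below are hypothetical, and the function assumes the data contains at least one anomaly and one normal event.

```python
def sensitivity_specificity(is_anomaly, flagged):
    """Return (sensitivity, specificity) for paired ground truth and predictions."""
    pairs = list(zip(is_anomaly, flagged))
    tp = sum(1 for actual, pred in pairs if actual and pred)
    fn = sum(1 for actual, pred in pairs if actual and not pred)
    tn = sum(1 for actual, pred in pairs if not actual and not pred)
    fp = sum(1 for actual, pred in pairs if not actual and pred)
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical anomaly scores from a model, with ground truth (1 = real anomaly).
scores = [0.1, 0.2, 0.35, 0.4, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 0, 1, 0, 1, 1, 1]

for threshold in (0.3, 0.65):
    flagged = [score >= threshold for score in scores]
    print(threshold, sensitivity_specificity(labels, flagged))
# 0.3 (1.0, 0.5)
# 0.65 (0.75, 1.0)
```

Lowering the threshold catches every anomaly but flags half of the normal events; raising it removes the false alarms at the cost of one missed anomaly.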

Does Everyone Need AI Log Analysis?

Not every team runs a planet-scale system with terabytes of logs streaming in per hour. If you're managing a handful of applications or have a smaller user base, you might wonder if AI for log analysis is overkill. The truth is, AI log analysis provides the most value as your system's complexity and data volume grow, but can still benefit smaller setups.

For small startups or simple applications, traditional log tools with basic aggregation and search might suffice. When log volume is low, manual checks can work. However, AI can still provide value by catching subtle issues early and freeing up developer time - like having a tireless junior analyst constantly monitoring your logs.

In large-scale, microservices-heavy environments, AI-driven log analysis becomes essential. With dozens of services and millions of users, log data becomes overwhelming to analyze manually. AI helps SREs and DevOps engineers maintain system reliability and proactively address issues. Companies using AI for observability report significant reductions in downtime and faster incident response times, providing a competitive edge by avoiding costly outages.

So while not everyone needs AI log analysis today, as systems scale and downtime costs increase, more organizations will find it valuable. Building a foundation with centralized logging and good instrumentation is wise future-proofing. You might not need AI capabilities immediately, but when log volumes surge or complex incidents arise, you'll appreciate having that capability available.

Key Takeaways

- Log analysis has evolved from time-consuming manual and rule-based processes to AI/ML-driven automation.

- Supervised learning classifies logs and predicts events using labeled data. Unsupervised learning detects anomalies and patterns in unlabeled data.

- Platforms like SigNoz offer AI-powered log analysis capabilities, enabling efficient anomaly detection, root cause analysis, and performance monitoring.

- SigNoz provides advanced log aggregation, real-time analytics, and AI-driven anomaly detection, making it a comprehensive solution for modern log management.

- K8sGPT and GPTScript simplify Kubernetes troubleshooting through AI-driven automation.

- As AI continues to evolve, its integration into log management systems will unlock greater efficiencies and capabilities, making it an indispensable tool for organizations.

FAQs

What are the key differences between AI-powered log analysis and traditional log management solutions?

Traditional solutions often rely on manual analysis, rule-based systems, and basic search functionalities. AI-powered solutions leverage machine learning algorithms to automate tasks such as anomaly detection, root cause analysis, and predictive maintenance, providing deeper insights and more proactive responses.

How can I ensure the accuracy and reliability of AI-powered log analysis results?

Focus on data quality by ensuring clean and well-structured log data. Regularly monitor and evaluate model performance. Implement robust data validation and quality checks. Consider using explainable AI techniques to understand and improve model transparency.

What are some common applications of AI anomaly detection in 2025?

In 2025, AI anomaly detection is widely applied in cybersecurity (detecting intrusions and threats), fraud detection (identifying fraudulent transactions), healthcare (monitoring patient data for anomalies), industrial systems (predicting equipment failures), and predictive maintenance (anticipating maintenance needs).

What are the main challenges in implementing AI anomaly detection systems?

Key challenges include obtaining accurately labeled data for model training, reducing false positives, ensuring scalability to handle large data volumes, making AI decisions interpretable, and protecting systems against adversarial attacks.

How has AI anomaly detection evolved in recent years?

The field has seen significant advances through the adoption of deep learning, improvements in computational power and data availability, and integration with other AI technologies like NLP and computer vision, broadening its applications and enhancing its accuracy.

What role does human expertise play in AI anomaly detection?

Human expertise is crucial for defining what constitutes an anomaly, interpreting the results of AI systems, validating flagged anomalies, and providing feedback to refine and improve anomaly detection models, ensuring they remain relevant and accurate.

What are the benefits of using K8sGPT and GPTScript for Kubernetes troubleshooting?

They simplify diagnostics, reduce manual effort, and improve cluster reliability. They can help with pod failures, resource constraints, network issues, and misconfigurations.

How do I use GPTScript with K8sGPT?

You write scripts with a .gpt extension that call K8sGPT to analyze the cluster and then define the actions to take based on the analysis.