Implementing Alerts as Code - Best Practices for DevOps

Modern DevOps practices thrive on automation, consistency, and reliability. One key aspect of achieving this is "Alerts as Code" (AaC), a practice that treats alert definitions as version-controlled, reusable configurations. Alerts as Code (AaC) is transforming how DevOps teams manage and respond to incidents. By implementing AaC, you can significantly reduce manual errors, improve collaboration, and enhance your incident response capabilities.

This guide walks you through understanding, implementing, and excelling at Alerts as Code, equipping your team to improve monitoring processes and incident response.

What is Alerts as Code?

Alerts as Code is a methodology that applies software development principles to alert management. It involves defining, managing, and versioning alert configurations using code, ensuring that alerting mechanisms are integrated into the software development lifecycle. This approach enables consistency, version control, and automation in the configuration and management of alerts.

Key Components of Alerts as Code

- Alert Configurations: Define the rules or conditions that trigger alerts, such as thresholds for CPU usage or error rates.

- Thresholds: Specify the acceptable limits for metrics, ensuring alerts are triggered only when necessary.

- Notification Channels: Determine how alerts are delivered, such as via email, Slack, PagerDuty, or custom integrations.

AaC is closely related to Infrastructure as Code (IaC) and Monitoring as Code (MaC). While IaC focuses on provisioning and managing infrastructure, and MaC deals with defining monitoring configurations, AaC specifically addresses the alerting aspect of your observability stack.

Key Benefits of Alerts as Code

- Consistency: Ensure uniform alert configurations across environments.

- Example: Imagine deploying a new service in production, but QA missed critical alert thresholds. With AaC, you ensure uniform alert configurations across dev, staging, and production, avoiding such gaps.

- Version control: Track changes and roll back when needed

- Example: A team accidentally lowers an alert threshold, flooding your ops team with false positives. With AaC, you can track changes in Git and quickly roll back to the previous, stable configuration.

- Automation: Integrate alert management into CI/CD pipelines

- Example: A new microservice is added to your architecture. By integrating AaC into your CI/CD pipeline, you can automatically deploy alerts for the service alongside its code, ensuring instant monitoring.

- Collaboration: Improve teamwork between development and operations

- Example: A developer adds a feature that could cause memory spikes. By managing alerts as code, they can work directly with ops to define memory usage alerts in the same repository, improving communication.

Why Adopt Alerts as Code?

Alerts as Code isn’t just a trend—it’s a game-changer for modern DevOps workflows. Here’s why adopting it can elevate your alerting process:

Improved Collaboration Between Development and Operations Teams

When developers and operations teams work with the same version-controlled alert configurations, they can collaborate seamlessly. For example, a developer pushing a new feature can define alert thresholds directly in the same repo, ensuring shared responsibility for monitoring.

Reduced Manual Errors and Configuration Drift

Manual changes in alert settings across environments often lead to inconsistencies and missed alerts. With Alerts as Code, these configurations are automated and version-controlled, eliminating the risk of misaligned settings during deployments.

Enhanced Scalability and Reusability Across Projects

Managing alerts for a single service is easy, but scaling that to dozens of microservices is not. That’s where reusable alert templates shine. Alerts as Code allows you to define modular, reusable configurations that can be applied consistently across projects.

Faster Incident Response and Reduced Mean Time to Resolve (MTTR)

Codified alerts come with detailed context, such as runbooks and incident response playbooks, right in the alert definition. This ensures your team can act swiftly and precisely, cutting down MTTR during incidents.

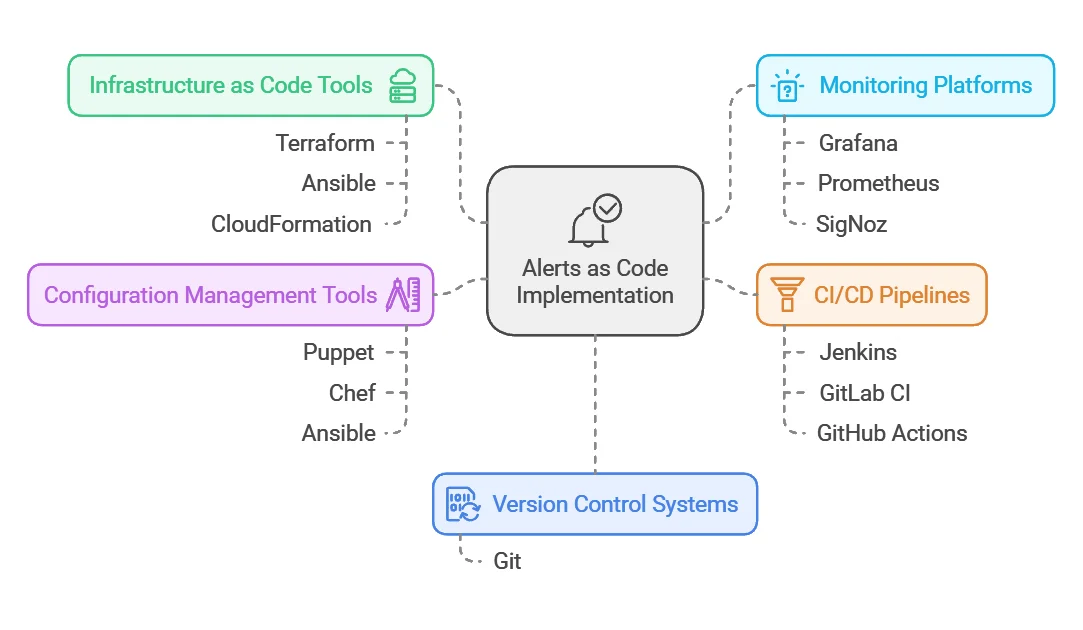

Essential Tools for Implementing Alerts as Code

To successfully implement Alerts as Code, you'll need a combination of tools that support this approach:

- Version control systems: Git is the most popular choice for managing alert configuration files.

- Infrastructure as Code tools: Terraform, Ansible, or CloudFormation can help provision and manage the infrastructure that supports your alerts.

- Monitoring platforms with API support: Tools like Grafana, Prometheus, or SigNoz offer APIs that allow programmatic management of alerts.

- CI/CD pipelines: Jenkins, GitLab CI, or GitHub Actions can automate the deployment of alert configurations.

- Configuration management tools: Puppet, Chef, or Ansible can help manage alert configurations across different environments.

Best Practices for Alerts as Code Implementation

To get the most out of Alerts as Code, follow these best practices:

- Define Clear Naming Conventions and Structure

Establish a consistent naming scheme for your alert configurations. This makes it easier to understand the purpose of each alert at a glance. For example:

alerts:

- name: high_cpu_usage

description: "CPU usage exceeds 90% for 5 minutes"

severity: warning

query: "avg(cpu_usage_percent) > 90"

for: 5m

Use intuitive names like high_cpu_usage and group alerts logically (e.g., by service or environment) to maintain clarity.

- Use Templates and Modules

Create reusable alert patterns to maintain consistency and reduce duplication. For instance, you might have a template for standard resource utilization alerts:

resource_alert_template:

- name: "${resource}_high_usage"

description: "${resource} usage exceeds ${threshold}% for ${duration}"

severity: ${severity}

query: "avg(${resource}_usage_percent) > ${threshold}"

for: ${duration}

Templates allow you to define common alert structures once and customize them with parameters for different services.

- Implement Code Review Processes

Treat alert configurations like any other code. Implement pull requests and code reviews to ensure quality and catch potential issues before they're deployed. Code reviews catch potential errors, improving alert quality and reducing false positives.

- Regularly Test and Validate Alerts

Set up automated testing for your alert configurations. This can include:

- Syntax validation

- Threshold testing

- Integration tests with your monitoring system

Creating Effective Alert Configurations

When defining alerts, consider the following practices:

Base thresholds on SLOs: Align your alert thresholds with your service level objectives to ensure meaningful alerting.

Implement multi-stage alerting: Use different severity levels for escalating issues. For example:

alerts: - name: api_error_rate warning: threshold: 1% duration: 5m critical: threshold: 5% duration: 1mUse dynamic thresholds: Implement adaptive thresholding to account for normal fluctuations in system behavior.

Include context and runbooks: Provide detailed information and troubleshooting steps in your alert definitions to speed up resolution.

Integrating Alerts as Code with Existing DevOps Workflows

To seamlessly incorporate Alerts as Code into your DevOps practices:

- Add alert configurations to CI/CD pipelines: Automatically deploy and update alerts alongside your application code. This ensures alert configurations are deployed automatically whenever code changes, keeping monitoring aligned with application updates.

- Implement automated testing: Create tests that validate your alert configurations before deployment. Automated tests catch syntax issues and logical errors, ensuring reliable alerts in production.

- Use feature flags: Gradually roll out new alert rules to minimize disruption. Feature flags allow you to test new alerts in a subset of environments before full deployment.

- Establish feedback loops: Regularly review alert effectiveness and iterate on your configurations. Regularly reviewing and refining alerts ensures they remain actionable and effective over time.

Challenges and Solutions in Alerts as Code Adoption

While implementing Alerts as Code offers numerous benefits, it also comes with challenges:

- Resistance to change: Educate team members on the benefits of AaC and provide training to ease the transition.

- Learning curve: Start with simple alerts and gradually increase complexity as your team becomes more comfortable with the approach.

- Tool fragmentation: Choose a monitoring platform that supports AaC natively or invest in integration tools to bridge gaps between systems.

- Complexity at scale: Use modular design patterns and leverage automation to manage large-scale alert configurations effectively.

Measuring Success: KPIs for Alerts as Code

To gauge the effectiveness of your Alerts as Code implementation, track these key performance indicators:

- Reduction in false positive alerts: Measure the decrease in non-actionable alerts.

- Improved alert response times: Track the time from alert trigger to resolution.

- Increased coverage: Monitor the percentage of critical systems and services with defined alerts.

- Time saved: Quantify the reduction in time spent on alert configuration and management.

Implementing Alerts as Code with SigNoz

SigNoz is a modern, open-source observability platform that helps monitor your applications and infrastructure by providing powerful metrics, logs, and trace visualizations. It offers seamless integrations with industry-standard monitoring and observability tools, making it a great choice for managing distributed systems and microservices.

With SigNoz, you can collect metrics, logs, and traces from your applications, visualize them in customizable dashboards, and set up powerful alerting systems to keep track of critical issues in real time. It supports multiple data sources, including Prometheus, OpenTelemetry, and more, allowing you to aggregate and monitor all your observability data in a single place.

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

Alert as Code using Prometheus Alert Manager and SigNoz Cloud

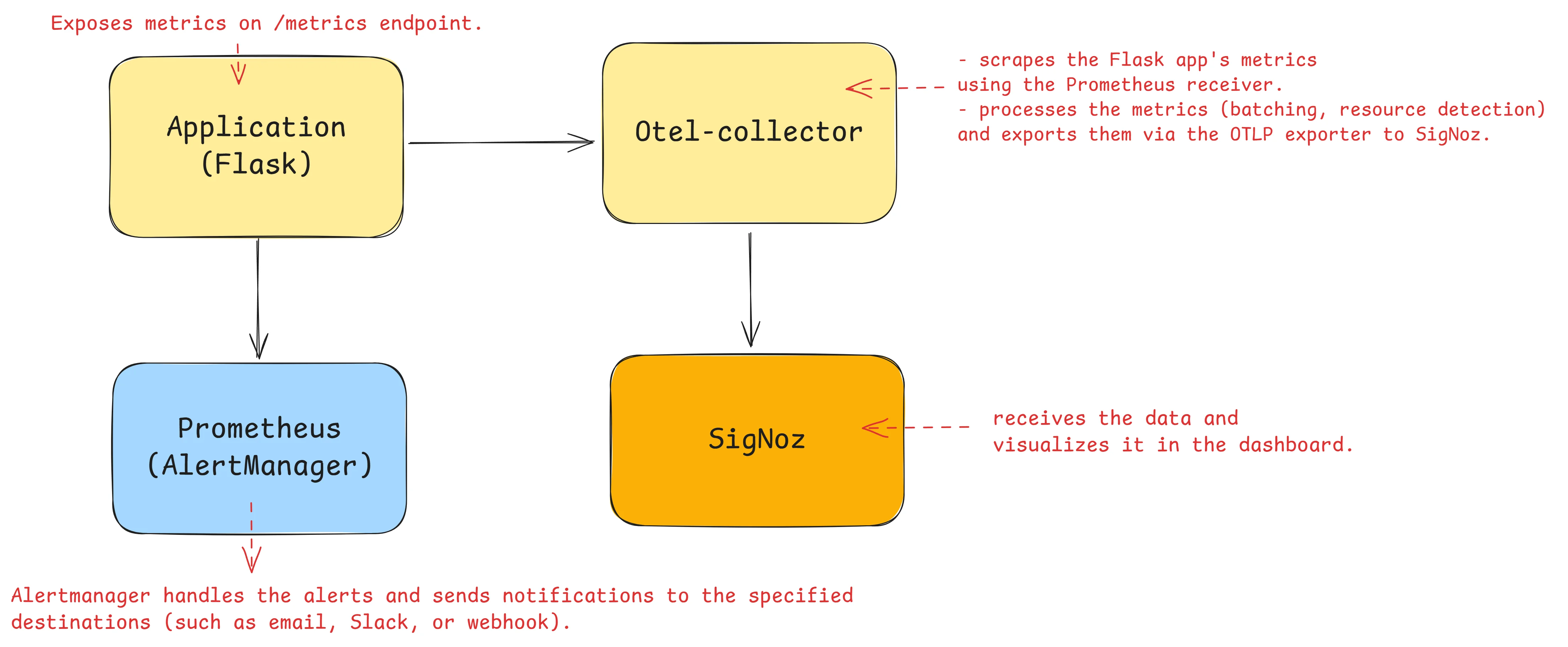

This section guides you through the process of setting up complete monitoring and alerting that integrates Flask, Prometheus, OpenTelemetry Collector, SigNoz, and Prometheus Alertmanager.

Architecture Overview

The diagram highlights how metrics and traces flow between components:

- Flask Application: Generates metrics and traces.

- Prometheus: Scrapes metrics from Flask and forwards them to SigNoz.

- OpenTelemetry Collector: Sends traces to SigNoz Cloud for visualization and analytics.

- Prometheus Alertmanager: Sends alerts triggered by conditions defined in your alerting rules.

Achieving Alerts as Code

The concept of Alerts as Code means defining and managing alerting configurations in code files (like YAML), allowing for better control, consistency, and automation. Here's how this setup enables Alerts as Code:

- Declarative Configuration:

- Alerting rules (

alerts.yml), Prometheus settings (prometheus.yml), and OpenTelemetry configurations are written in declarative YAML files. - These files describe the desired state of the system, making them easy to read, modify, and share.

- Alerting rules (

- Version Control:

- Store all configuration files in a Git repository.

- This ensures every change to your alerting setup is tracked, auditable, and reversible. For example, you can roll back to a previous configuration if a new alerting rule causes unexpected issues.

- Reusability Across Environments:

- The same configuration files can be reused across environments (e.g., development, staging, production) with minor modifications (like endpoint URLs).

- This ensures that all environments follow the same alerting standards.

- Automation in CI/CD Pipelines:

- Integrate the configuration files into your CI/CD pipelines.

- For instance, whenever changes are merged into the repository, the updated configurations can be deployed automatically to Prometheus, OpenTelemetry Collector, and Alertmanager.

- Scalability and Consistency:

- Managing alerts as code ensures consistent alert definitions across all systems.

- As your infrastructure scales, you can easily update or extend your alerting rules by modifying the configuration files.

Prerequisite

- Python installed

- Prometheus installed and running

- OpenTelemetry Collector installed. Download the latest version from the OpenTelemetry GitHub releases.

- SigNoz Cloud account

Step 1: Flask App with Prometheus Metrics

First, a Flask app needs to be set up to expose metrics for Prometheus to scrape:

Install Flask and the Prometheus client:

pip install Flask prometheus_clientCreate a file named

flask_app.pywith the following content:from flask import Flask from flask import request from prometheus_client import Counter, generate_latest, REGISTRY from prometheus_client.exposition import basic_auth_handler app = Flask(__name__) # Define a counter metric http_requests_total = Counter('http_requests_total', 'Total HTTP Requests', ['method', 'endpoint']) # Increment the counter on each request @app.before_request def before_request(): http_requests_total.labels(method=request.method, endpoint=request.path).inc() # Expose metrics at /metrics endpoint @app.route('/metrics') def metrics(): return generate_latest(REGISTRY) if __name__ == "__main__": app.run(debug=True)Run the Flask app:

python flask_app.py

The Flask app will now be running on http://localhost:5000/ and exposing metrics at http://localhost:5000/metrics.

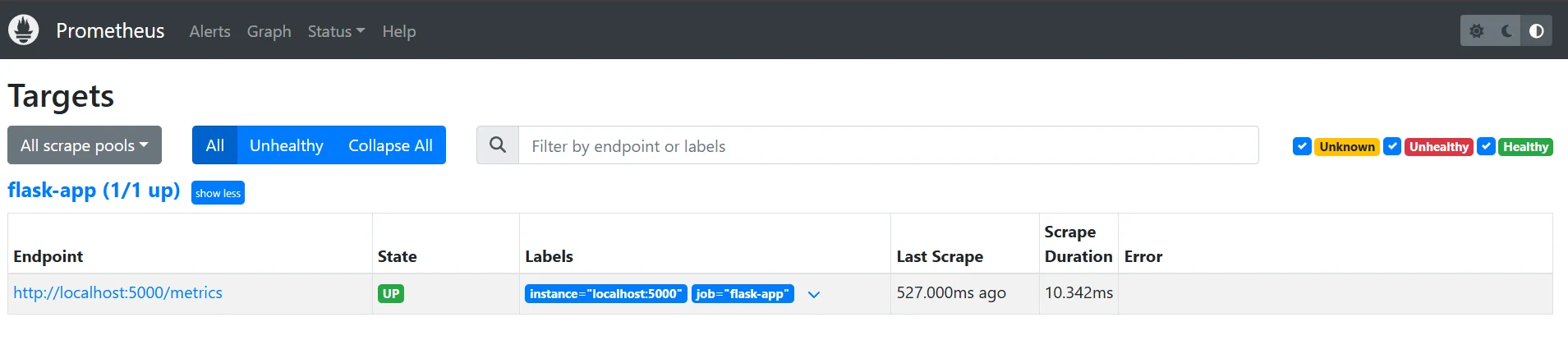

Step 2: Set Up Prometheus to Scrape Metrics

Next, Prometheus needs to be configured to scrape metrics from the Flask app:

Create a

prometheus.ymlconfiguration file to scrape metrics from the Flask app:global: scrape_interval: 10s scrape_configs: - job_name: 'flask-app' static_configs: - targets: ['localhost:5000'] # Flask app metrics endpointStart Prometheus with the configuration:

prometheus --config.file=prometheus.ymlPrometheus will now scrape the Flask app’s metrics every 10 seconds.

Prometheus Scraping

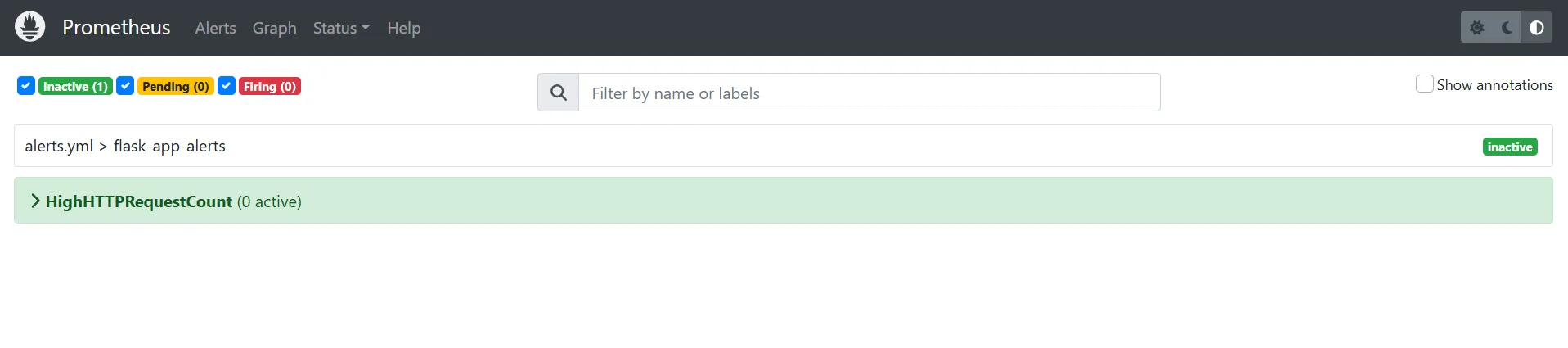

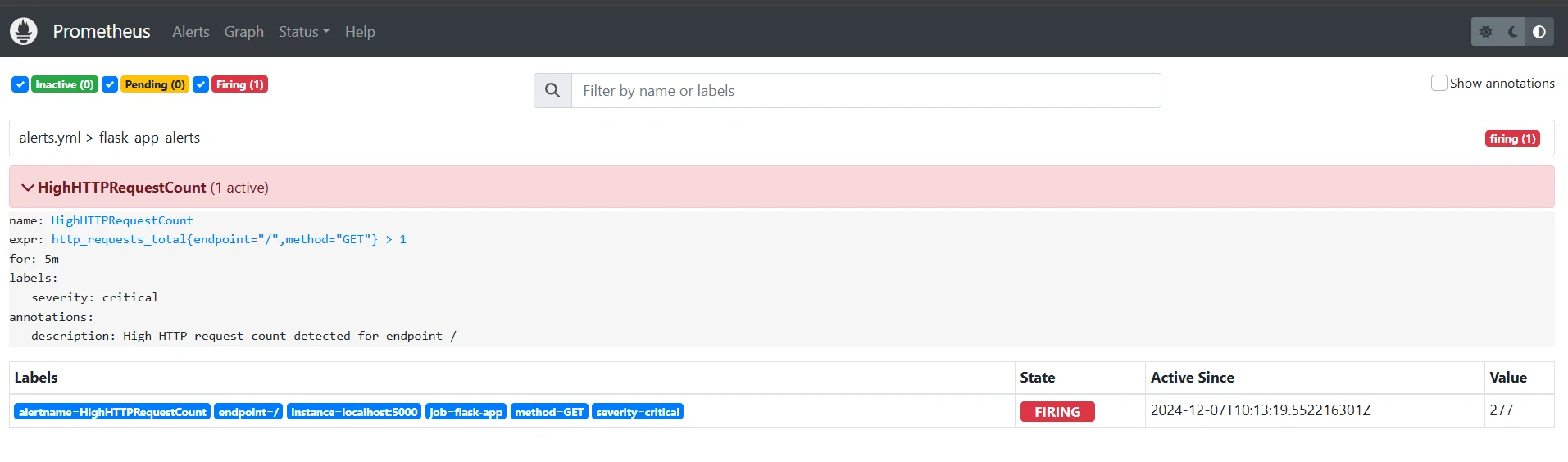

Step 3: Configuring Alert as Code with Prometheus Alert Manager

Next, update the Prometheus config file to include alert configuration.

Create an alert file named

alerts.ymlwith alert condition and threshold.groups: - name: flask-app-alerts rules: - alert: HighHTTPRequestCount expr: http_requests_total{method="GET", endpoint="/"} > 250 for: 5m # Trigger alert if condition holds for 5 minutes labels: severity: critical annotations: description: "High HTTP request count detected for endpoint /"In the Prometheus configuration file add the reference to the alert rule:

rule_files: - "alerts.yml"Restart the Prometheus server, and check the alerts section to see it the alert is listed.

Prometheus Alerts

Step 3: Configure OpenTelemetry Collector

The OpenTelemetry Collector will be used to process the Prometheus metrics and forward them to SigNoz.

Create an

otel-collector-config.yamlconfiguration file for the OpenTelemetry Collector:receivers: prometheus: config: scrape_configs: - job_name: 'flask-app' static_configs: - targets: ['localhost:5000'] processors: batch: send_batch_size: 1000 timeout: 10s resourcedetection: detectors: [env, system] timeout: 2s exporters: otlp: endpoint: "ingest.in.signoz.cloud:443" # SigNoz endpoint tls: insecure: false headers: "signoz-ingestion-key": "<your_signoz_ingestion_key>" service: pipelines: metrics: receivers: [prometheus] processors: [batch, resourcedetection] exporters: [otlp]Run the OpenTelemetry Collector:

./otelcol-contrib --config otel-collector-config.yaml

Now, the OpenTelemetry Collector will scrape Prometheus metrics, process them, and send them to SigNoz.

Step 4: Visualize Metrics in SigNoz

- Log in to your SigNoz Cloud account.

- Navigate to the Metrics dashboard to view the Flask app metrics (from OpenTelemetry Collector).

- Create custom dashboards to visualize metrics like request counts, latency, and more.

Say you receive an alert that the HTTP request count exceeded the set threshold.

You can visualize the metric in the dashboard and see if there was a sudden spike, or there has been a constant increase.

Best Practices for Integrating SigNoz Alerts into Your IaC Workflow

Integrating SigNoz alerts into an Infrastructure as Code (IaC) workflow helps automate the monitoring and alerting process across your infrastructure. Here are some best practices to follow:

- Define Alerting Rules in Code: Use tools like Terraform or Ansible to manage SigNoz alert configurations within IaC scripts.

- Parameterize Alert Thresholds: Make alert thresholds configurable based on environment needs (e.g., dev vs. production).

- Automate Notification Channels: Configure notification channels (Slack, email, PagerDuty) directly through IaC tools.

- Version Control and Review: Store alert configurations in version-controlled repositories for collaboration and code review.

- Environment-Specific Configurations: Tailor alert thresholds for different environments (production, staging, dev).

- Automate SigNoz Deployment: Use Helm or Kubernetes Operators to automate SigNoz deployment and alert rule management.

Future Trends in Alerts as Code

As Alerts as Code continues to evolve, keep an eye on these emerging trends:

- Machine learning integration: Expect to see more AI-powered anomaly detection and dynamic thresholding.

- AIOps integration: Alerts as Code will likely become a key component of broader AIOps platforms.

- Expanded observability: AaC will extend to cover more aspects of observability, including logs and traces.

- Standardization: Look for industry-wide efforts to standardize Alerts as Code practices and formats.

Key Takeaways

Implementing Alerts as Code can significantly improve your DevOps practices by:

- Enhancing reliability and consistency in your monitoring setup

- Fostering better collaboration between development and operations teams

- Enabling faster incident response and reduced MTTR

- Providing a scalable and maintainable approach to alert management

FAQs

What's the difference between Monitoring as Code and Alerts as Code?

Monitoring as Code focuses on defining and managing the overall monitoring configuration, including what metrics to collect and how to visualize them. Alerts as Code specifically deals with defining the conditions under which alerts should be triggered and how they should be handled.

How do I get started with Alerts as Code in my organization?

Begin by selecting a monitoring platform that supports AaC, such as SigNoz. Start with a small set of critical alerts, define them in code, and integrate them into your version control system. Gradually expand your AaC implementation as your team becomes more comfortable with the approach.

Can Alerts as Code work with my existing monitoring tools?

Many modern monitoring tools support some form of Alerts as Code. Check your current tools for API support or integration capabilities. If your existing tools don't support AaC directly, consider using a platform like SigNoz that offers native AaC support.

What are the security considerations for implementing Alerts as Code?

When implementing Alerts as Code, consider:

- Access control: Limit who can modify alert configurations

- Secrets management: Securely store and manage any sensitive information used in alert definitions

- Audit trails: Maintain logs of changes to alert configurations

- Testing: Implement security testing for your alert configurations to prevent potential vulnerabilities

By addressing these considerations, you can ensure that your Alerts as Code implementation enhances rather than compromises your overall security posture.