What Is Apdex Score? A Complete Technical Guide

A single performance metric like average latency often hides outliers: a few slow requests (e.g., taking 5 seconds) get drowned out by thousands of fast requests (e.g., taking 0.1 seconds), so the average still looks good (e.g., 0.15 seconds) even though some users are extremely unhappy. The Apdex (Application Performance Index) score bridges this gap by translating response times into a standardized satisfaction signal, enabling engineering and business teams to align on the quality of the user experience.

This guide explains what an Apdex score is, how the Apdex formula works, how to choose the right threshold for your services, how to measure it using OpenTelemetry, and which pitfalls to watch for in production.

What is Apdex?

Apdex is a standardized way to summarize application latency data into a single score that reflects how satisfied users are with performance, using a predefined response-time target to map requests onto a scale between poor and excellent experience.

What is an Apdex Score?

Apdex score is a numeric value between 0 and 1 that represents the level of user satisfaction based on how application response times compare to a defined performance threshold over a given time window.

While traditional metrics like "Average Response Time" measure the system, Apdex measures the experience. It forces you to define a target response time (the threshold) and then evaluates each request against it.

The result is a normalized score on a 0 to 1 scale:

1.0 (Perfect): All users are satisfied.

0.0 (Terrible): All users are frustrated.

How is the Apdex Score Calculated?

To calculate Apdex, you must first define a threshold, denoted as T. This is the maximum response time that a user considers "satisfactory." Based on this threshold T, every request is categorized into one of three zones.

The Three Zones of Satisfaction

Satisfied (0 to T): The user is fully productive. The system responded quickly enough that the user’s workflow was not impeded.

Tolerating (T to 4T): The user noticed the delay. The response was slow (up to 4 times the threshold), but not slow enough to cause the user to abandon the task. The experience was imperfect but acceptable.

Frustrated (> 4T or Error): The response was unacceptably slow (more than 4 times the threshold), or the request returned a server-side error (for example, an HTTP 5xx). The user likely abandoned the task or lost focus.

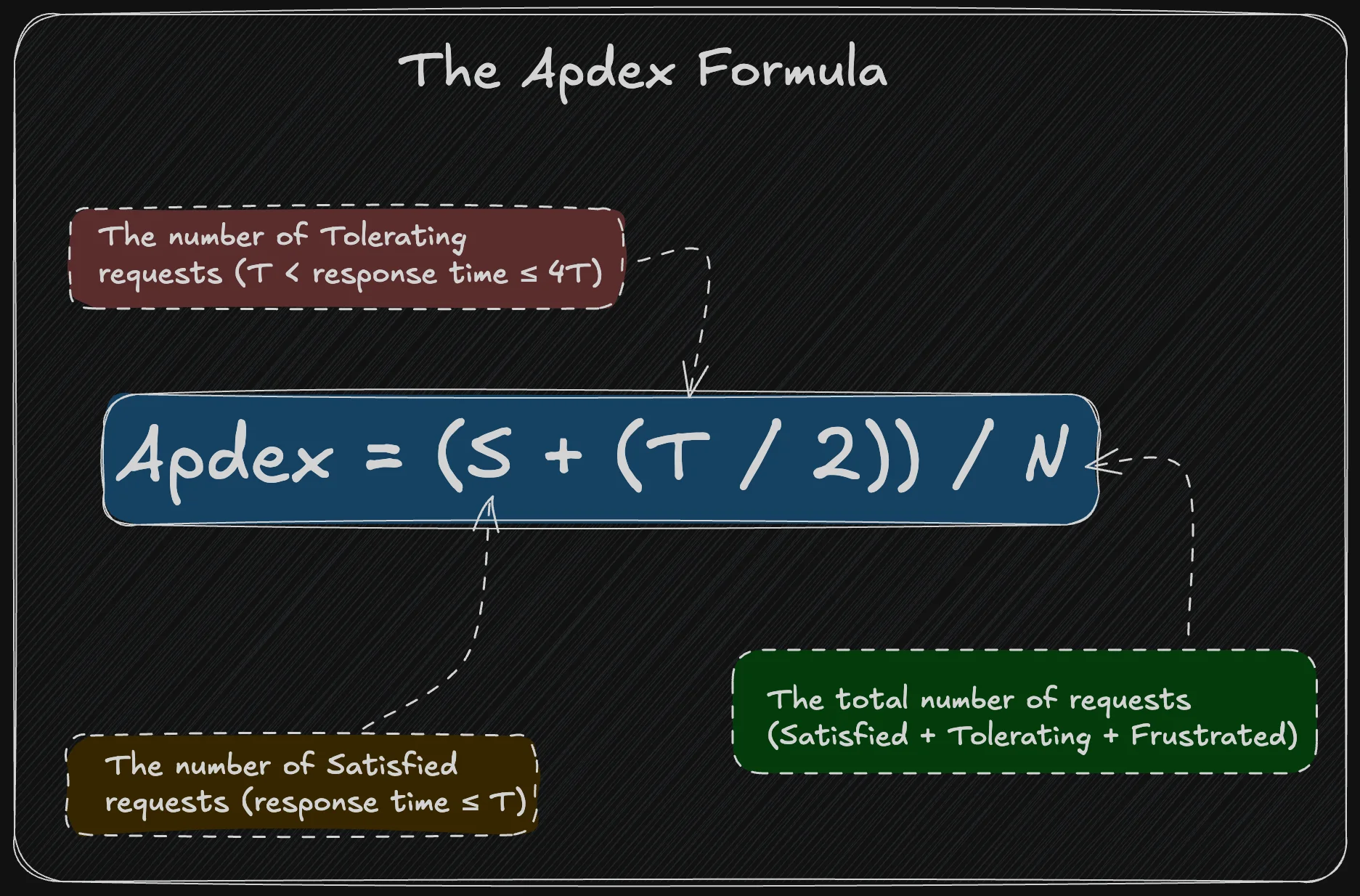
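The three zones can be sketched as a small classification function. This is a minimal illustration, not any vendor's implementation: the names `Zone` and `classify_request` are hypothetical, and treating a request that takes exactly T as Satisfied (and exactly 4T as Tolerating) is an assumption about the boundaries.

```python
from enum import Enum


class Zone(Enum):
    SATISFIED = "satisfied"
    TOLERATING = "tolerating"
    FRUSTRATED = "frustrated"


def classify_request(duration_s: float, threshold_s: float, is_error: bool = False) -> Zone:
    """Place one request into an Apdex zone for threshold T (in seconds)."""
    # Errors are frustrated regardless of how fast they returned.
    if is_error or duration_s > 4 * threshold_s:
        return Zone.FRUSTRATED
    # Assumption: the boundary value T itself counts as satisfied.
    if duration_s <= threshold_s:
        return Zone.SATISFIED
    return Zone.TOLERATING


print(classify_request(0.3, 0.5).value)                 # satisfied
print(classify_request(1.2, 0.5).value)                 # tolerating
print(classify_request(0.1, 0.5, is_error=True).value)  # frustrated
```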

Apdex Formula

Once the monitoring tool collects the counts for these three zones, the Apdex score is calculated using this formula:

Apdex Score = (Satisfied Count + (Tolerating Count / 2)) / Total Requests

Why does this formula matter?

Satisfied requests count as 1 point.

Tolerating requests count as 0.5 points.

Frustrated requests count as 0 points.

This weighting system partially penalises slowness and completely penalises failure. It provides a more nuanced view than a simple pass/fail metric.

Example Calculation

Imagine you have a web service with a threshold T = 500ms. Over a 5-minute window, you receive 100 requests:

70 requests were completed in less than 500ms (Satisfied).

20 requests were between 500ms and 2 seconds (Tolerating).

10 requests took longer than 2 seconds or failed (Frustrated).

Apdex Score = (70 + (20 / 2)) / 100

Apdex Score = 0.80

An Apdex score of 0.80 tells you that while the majority of users are happy, there is significant room for improvement.
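The same arithmetic can be checked with a few lines of Python. This is only a sketch of the calculation from zone counts; the function name `apdex_score` is hypothetical.

```python
def apdex_score(satisfied: int, tolerating: int, frustrated: int) -> float:
    """Compute Apdex from the number of requests in each zone."""
    total = satisfied + tolerating + frustrated
    if total == 0:
        raise ValueError("Apdex is undefined for a window with no requests")
    # Satisfied requests count fully, tolerating count half, frustrated count zero.
    return (satisfied + tolerating / 2) / total


# Counts from the example above: T = 500ms, 100 requests in the window.
score = apdex_score(satisfied=70, tolerating=20, frustrated=10)
print(f"{score:.2f}")  # 0.80
```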

How to Choose the Right Threshold (T)?

The most critical part of using Apdex is selecting the correct threshold T. If you set T too high (e.g., 5 seconds), you will get a score close to 1.0 even if your app is lagging. If you set it too low (e.g., 10ms), you will get a score close to 0.0 even if the app feels instant to humans.

There is no universal standard for T because it depends on context. A background report generation can take 2 seconds and be "Satisfied," while a type-ahead search bar must respond in 100ms.

Here is a practical approach to choosing T:

Start with User Expectations: What is the maximum time a user waits before they feel the system is "working" versus "waiting"? For web apps, 500ms is a common starting point for backend processing.

Use Historical Data: Look at your current P50 (median) latency. A good rule of thumb is to set T close to your baseline P50 or P75 latency during normal operation. This calibrates the score to your system's "normal" behaviour.

Differentiate by Service: Do not use a global T for your entire architecture. Your checkout API needs a stricter threshold than your legacy reporting service.
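The historical-data step above can be sketched with the standard library. This assumes you have already exported a sample of request latencies (in seconds) from normal operation; `suggest_threshold` and the sample values are hypothetical, and the result is a starting point to sanity-check against user expectations, not a definitive T.

```python
import statistics


def suggest_threshold(latencies_s, percentile=50):
    """Return the given percentile (1-99) of baseline latencies as a candidate T."""
    if not 1 <= percentile <= 99:
        raise ValueError("percentile must be between 1 and 99")
    if len(latencies_s) < 2:
        raise ValueError("need at least two latency samples")
    # quantiles(n=100) returns 99 cut points; index p - 1 is the p-th percentile.
    cut_points = statistics.quantiles(latencies_s, n=100, method="inclusive")
    return cut_points[percentile - 1]


# Hypothetical baseline sample for one service, in seconds.
baseline = [0.12, 0.15, 0.18, 0.20, 0.22, 0.25, 0.30, 0.45, 0.60, 1.10]
print(f"P50 candidate T: {suggest_threshold(baseline, 50):.3f}s")
print(f"P75 candidate T: {suggest_threshold(baseline, 75):.3f}s")
```

Running this per service, rather than once over all traffic, keeps the thresholds differentiated as recommended above.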

What is a Good Apdex Score?

Because Apdex is a normalized score, you can define general health ranges. While these are not strict laws, they are commonly accepted industry conventions:

| Apdex Score Range | Rating | User Experience Description |
|---|---|---|
| 0.94 – 1.00 | Excellent | Users are fully productive. |
| 0.85 – 0.93 | Good | Users are generally satisfied, with occasional sluggishness. |
| 0.70 – 0.84 | Fair | Many users notice delays; optimization is recommended. |
| 0.50 – 0.69 | Poor | The application is painfully slow for a large portion of users. |
| < 0.50 | Unacceptable | The application is effectively unusable. |
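If you want to attach these labels to scores in an alert or report, the table translates into a simple lookup. This is a sketch: `apdex_rating` is a hypothetical helper, and using each row's lower bound as the cut-off (so a value like 0.935 is rated Good) is an assumption about how to handle scores that fall between the printed ranges.

```python
def apdex_rating(score: float) -> str:
    """Map an Apdex score (0.0 to 1.0) to the rating labels in the table above."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("Apdex score must be between 0 and 1")
    # Each check uses the lower bound of the corresponding row.
    if score >= 0.94:
        return "Excellent"
    if score >= 0.85:
        return "Good"
    if score >= 0.70:
        return "Fair"
    if score >= 0.50:
        return "Poor"
    return "Unacceptable"


print(apdex_rating(0.80))  # Fair
```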

How to Measure Apdex with OpenTelemetry?

Apdex is a metric derived from latency data. You do not need to write code to calculate Apdex manually. Instead, you instrument your application to send latency data (traces or metrics), and your observability backend calculates the score.

At SigNoz, we use OpenTelemetry, the industry-standard instrumentation framework, to collect request latency data from the application. This data is sent to the observability backend, which calculates Apdex against a defined response-time threshold, provided the backend supports Apdex computation. In SigNoz, Apdex is available out of the box as part of the APM experience and is derived directly from trace latency data.

Prerequisites

Before running the Apdex demo, you need a working observability backend that can receive OpenTelemetry data and derive latency-based metrics.

SigNoz Cloud: Apdex is computed from request latency data received and processed by SigNoz.

Ingestion Region & API keys: Required so that the demo application can export telemetry used to derive Apdex score.

Python 3: To run the demo HTTP service that generates real request latency.

Latest stable OpenTelemetry Python packages:

pip3 install flask opentelemetry-sdk opentelemetry-exporter-otlp opentelemetry-instrumentation-flask

Step 1: Instrument your application

Here is a simple Python Flask application instrumented with OpenTelemetry. It sends trace data to an OTLP endpoint (like SigNoz Cloud).

from flask import Flask
import time
import random

from opentelemetry import trace
from opentelemetry.sdk.resources import Resource
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.export import BatchSpanProcessor
from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter
from opentelemetry.instrumentation.flask import FlaskInstrumentor

# Define the service name
resource = Resource.create({
    "service.name": "apdex-demo-service"
})

# Configure the provider and exporter
provider = TracerProvider(resource=resource)
trace.set_tracer_provider(provider)

# Replace <REGION> and <INGESTION_KEY> with your details
otlp_exporter = OTLPSpanExporter(
    endpoint="https://ingest.<REGION>.signoz.cloud/v1/traces",
    headers={"signoz-ingestion-key": "<INGESTION_KEY>"}
)
provider.add_span_processor(BatchSpanProcessor(otlp_exporter))

app = Flask(__name__)

# Automatically instrument Flask to capture request duration
FlaskInstrumentor().instrument_app(app)

@app.route("/work")
def work():
    # Simulate varying latency
    delay = random.choice([0.1, 0.3, 0.8, 1.5])
    time.sleep(delay)
    return f"Response time: {delay}s\n"

if __name__ == "__main__":
    app.run(port=8080)

This code doesn’t calculate Apdex. It exports request spans, whose start and end timestamps give you the request duration.

Remember to replace <REGION> with your region and <INGESTION_KEY> with your ingestion key.

Step 2: Start the Flask app

python3 fileName.py

Step 3: Generate test traffic

Run the following command in a separate terminal. Once you have enough traffic, press Ctrl+C to stop it.

while true; do curl http://localhost:8080/work; done

Step 4: Configure the Threshold in SigNoz

Once your application is sending data, you can view the Apdex score in SigNoz.

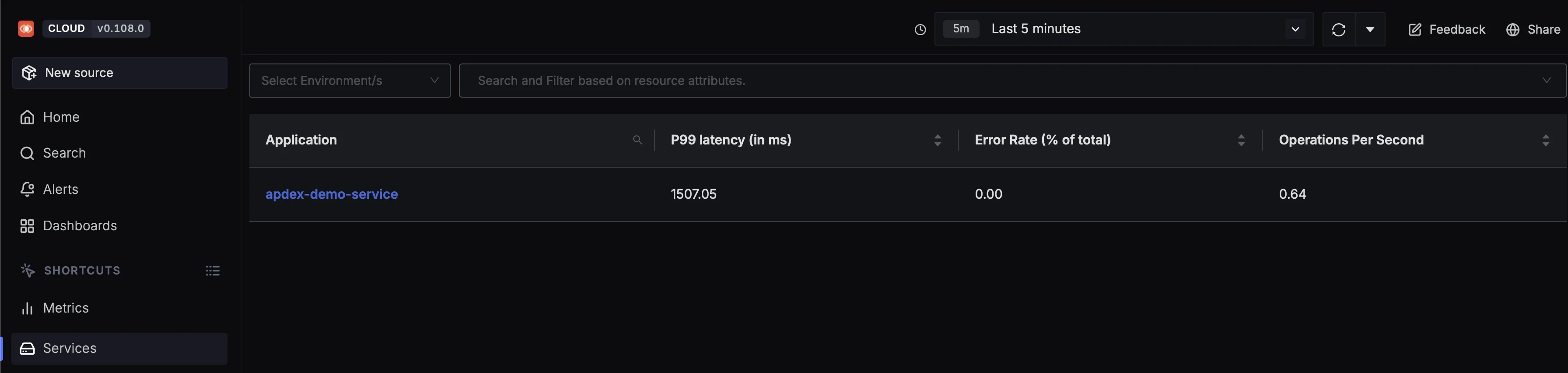

Navigate to the

Servicestab in SigNoz.

SigNoz Cloud service overview displaying latency, error rate, and throughput for the Apdex demo service, which are used to derive user experience metrics like Apdex Select your service (e.g.,

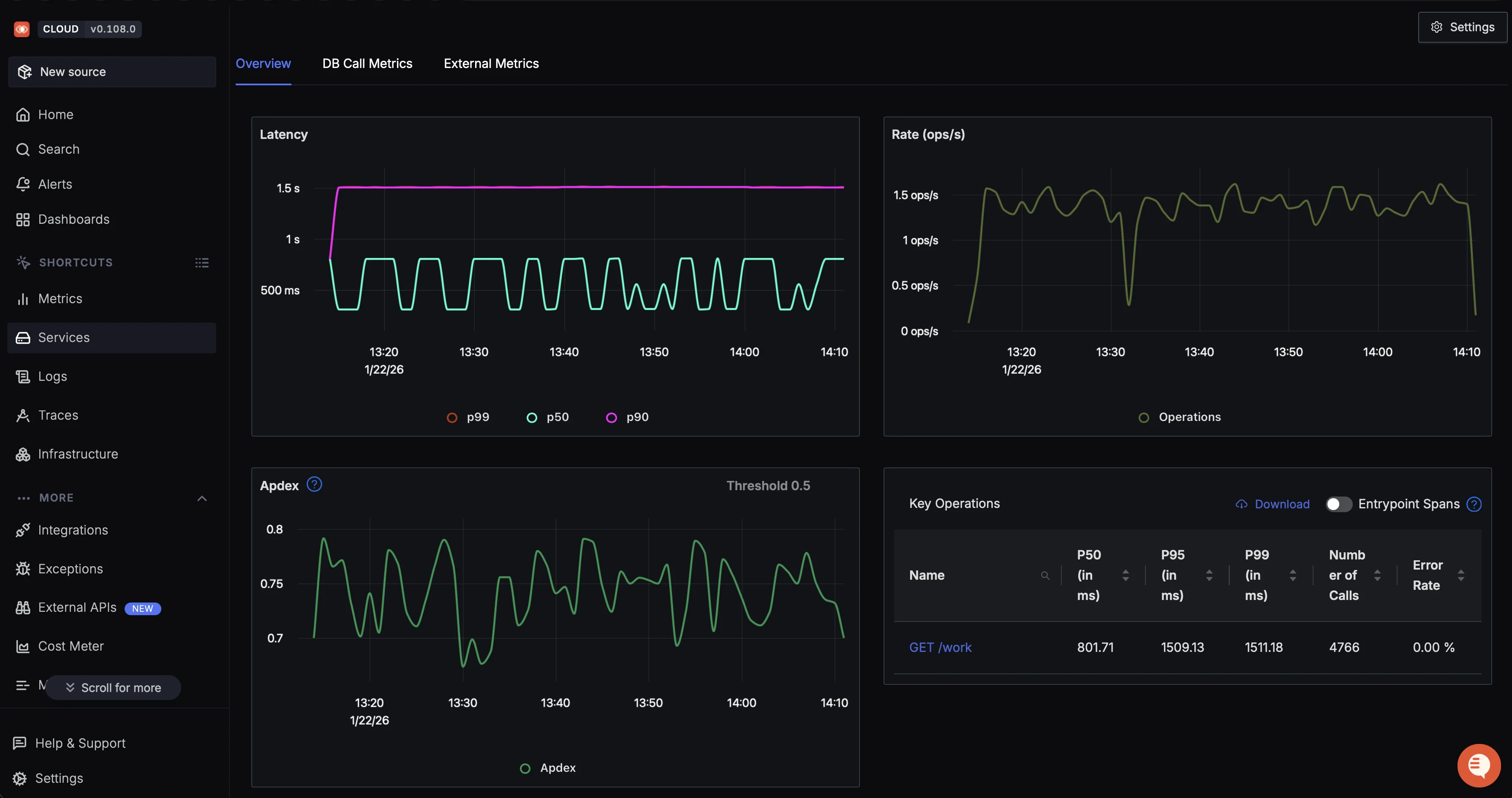

apdex-demo-service).SigNoz provides an out-of-the-box dashboard with common, important key metrics to monitor. Apdex score is also one of them and is displayed on the dashboard.

SigNoz Cloud service dashboard illustrating latency trends, throughput, and the resulting Apdex score To improve accuracy, look for the

Apdex settings(top right) and update the T threshold to match your specific requirement (e.g., 300ms).

SigNoz will effectively re-bucket your traffic into Satisfied, Tolerating, and Frustrated categories based on this new T and update the score in real time.
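To build intuition for what re-bucketing does, here is a rough sketch that scores the demo's four simulated delays under two different thresholds. It is not SigNoz's implementation: `apdex_from_durations` is a hypothetical helper, it ignores errors, and it assumes each delay occurs equally often.

```python
def apdex_from_durations(durations_s, threshold_s):
    """Compute Apdex from raw request durations (seconds) for a given T."""
    if not durations_s:
        raise ValueError("need at least one request duration")
    satisfied = sum(1 for d in durations_s if d <= threshold_s)
    tolerating = sum(1 for d in durations_s if threshold_s < d <= 4 * threshold_s)
    # Anything slower than 4T is frustrated and contributes nothing.
    return (satisfied + tolerating / 2) / len(durations_s)


# The delays used by the /work endpoint in the demo app.
durations = [0.1, 0.3, 0.8, 1.5]
for t in (0.5, 0.3):
    print(f"T={t}s -> Apdex {apdex_from_durations(durations, t):.3f}")
# T=0.5s -> Apdex 0.750
# T=0.3s -> Apdex 0.625
```

Lowering T from 500ms to 300ms moves the 1.5-second request from Tolerating to Frustrated, which is why the score drops even though the traffic is unchanged.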

Apdex Score vs Other Related Metrics

Apdex Score vs Average Latency

Average latency reports the mean response time across a set of requests, which makes it useful for understanding overall system speed, but is poor at reflecting the user experience. A small number of very slow requests can be masked by many fast ones, resulting in a healthy-looking average while users remain frustrated. Apdex converts response times into satisfaction categories based on a threshold and penalizes slow outliers, making it a user-experience metric rather than a purely statistical one.

Apdex Score vs Percentile Latency (P95 / P99)

Percentile latency focuses on tail behaviour by showing how slow the worst-performing requests are, which is excellent for diagnosing performance bottlenecks and saturation issues. However, percentiles are hard to communicate outside engineering teams and do not directly answer whether users are satisfied overall. Apdex builds on the idea of tail latency but summarizes its impact into a single normalized score, trading diagnostic precision for clarity and comparability.

Apdex Score vs Error Rate

Error rate measures how many requests fail, not how slow successful requests are. A system can have a near-zero error rate and still deliver a poor user experience due to high response times. Apdex treats errors as frustrated requests, but it also accounts for slow successes, which error rate alone completely ignores. This makes Apdex broader in scope, though less specific when isolating failure causes.

Apdex Score vs Throughput

Throughput shows how much load a system is handling, such as requests per second, and is useful for capacity planning and scaling decisions. It says nothing about how users perceive performance. Apdex is orthogonal to throughput: a system can handle high traffic and still have a low Apdex if responses are slow, or low traffic and still have a high Apdex if responses are consistently fast.

Summary Table

| Metric | What it measures | User-centric | Sensitivity to slow requests | Ease of interpretation |
|---|---|---|---|---|
| Apdex score | User satisfaction based on response time | Yes | High | High |
| Average latency | Mean response time | No | Low | Medium |
| P95 / P99 latency | Tail response time | Indirect | Very high | Low |
| Error rate | Failed requests | Partial | None | Medium |
| Throughput | Request volume | No | None | Medium |
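To see how the latency-based metrics in this table can tell different stories about the same traffic, the sketch below computes mean latency, P95, and Apdex over one made-up window. The data and the `summarize_latency` helper are hypothetical, and errors are left out for brevity.

```python
import statistics


def summarize_latency(durations_s, threshold_s):
    """Return mean, P95, and Apdex for the same list of request durations."""
    satisfied = sum(1 for d in durations_s if d <= threshold_s)
    tolerating = sum(1 for d in durations_s if threshold_s < d <= 4 * threshold_s)
    return {
        "mean_s": statistics.mean(durations_s),
        # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
        "p95_s": statistics.quantiles(durations_s, n=100)[94],
        "apdex": (satisfied + tolerating / 2) / len(durations_s),
    }


# Hypothetical window: 90 fast requests and 10 very slow ones, with T = 0.5 s.
durations = [0.1] * 90 + [3.0] * 10
summary = summarize_latency(durations, threshold_s=0.5)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

In this window the mean (about 0.39 s) sits below the threshold, the P95 (3 s) shows how bad the tail is, and the Apdex (0.90) reflects that one request in ten was frustrated.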

Common Pitfalls

Apdex is useful, but it has some limitations:

Low Throughput Instability: If an endpoint receives only a few requests per minute, a single slow request can tank the score. For example, in a window with just two requests, one frustrated request drops the score from 1.0 to 0.5. Apdex is statistically more stable with higher traffic volumes.

Errors are "Frustrated": Apdex treats a 500 error the same as a very slow request. If you have a high error rate but fast response times, your Apdex will drop. You should always check the Error Rate alongside Apdex to understand why the score is low.

Non-Interactive Workloads: Apdex assumes a user is waiting. It is meaningless for async worker queues or batch jobs where "response time" doesn't directly map to human frustration.
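The low-throughput pitfall is easy to quantify. The sketch below (with a hypothetical helper name) shows the score for a window in which every request is satisfied except a single frustrated one, at different traffic volumes.

```python
def score_with_one_frustrated(total_requests: int) -> float:
    """Apdex for a window where all requests are satisfied except one frustrated request."""
    if total_requests < 1:
        raise ValueError("need at least one request")
    return (total_requests - 1) / total_requests


for n in (2, 10, 100, 1000):
    print(f"{n:>4} requests -> Apdex {score_with_one_frustrated(n):.3f}")
#    2 requests -> Apdex 0.500
#   10 requests -> Apdex 0.900
#  100 requests -> Apdex 0.990
# 1000 requests -> Apdex 0.999
```

For low-traffic endpoints, a longer evaluation window is one way to get enough requests for the score to be meaningful.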

FAQs

Q1. What does Apdex stand for?

Apdex stands for Application Performance Index, a standardized way to quantify user satisfaction based on response time.

Q2. How often should Apdex be measured?

Apdex is usually measured continuously over rolling time windows, such as 1 or 5 minutes, to reflect real-time changes in user experience.

Q3. Can Apdex be calculated manually?

Yes, in theory, if you have request durations and counts. In practice, Apdex is almost always calculated automatically by monitoring or observability tools.

Q4. Is Apdex still relevant today?

Yes, especially as a high-level experience indicator, but it is most effective when combined with modern metrics like percentiles, traces, and real user monitoring rather than used alone.
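As a companion to Q2 and Q3, here is a minimal sketch of a manual, per-window calculation. It assumes each request is available as a `(timestamp_s, duration_s, is_error)` tuple, which is a made-up input format, and it uses fixed one-minute buckets rather than a true rolling window to keep the code short.

```python
from collections import defaultdict


def apdex_per_window(requests, threshold_s, window_s=60):
    """Group requests into fixed windows and return {window_start: apdex}."""
    counts = defaultdict(lambda: [0, 0, 0])  # satisfied, tolerating, total
    for timestamp_s, duration_s, is_error in requests:
        window_start = int(timestamp_s // window_s) * window_s
        bucket = counts[window_start]
        bucket[2] += 1
        # Errors and anything slower than 4T are frustrated: counted in total only.
        if is_error or duration_s > 4 * threshold_s:
            continue
        if duration_s <= threshold_s:
            bucket[0] += 1
        else:
            bucket[1] += 1
    return {
        start: (satisfied + tolerating / 2) / total
        for start, (satisfied, tolerating, total) in sorted(counts.items())
    }


# Hypothetical requests: (timestamp in seconds, duration in seconds, is_error).
sample = [(0, 0.2, False), (30, 0.9, False), (61, 0.1, False), (90, 0.1, True)]
print(apdex_per_window(sample, threshold_s=0.5))  # {0: 0.75, 60: 0.5}
```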
