How to Grep Docker Logs - A Quick Guide

In containerized environments, efficient log analysis is crucial for maintaining application health and performance. One powerful tool for this purpose is grep, a command-line utility that allows users to search for specific strings within text. Combining Docker logs with grep can streamline troubleshooting and debugging processes by pinpointing relevant log entries quickly.

Understanding Docker Logs and Grep

Docker logs provide crucial insights into container behavior — they are a window into an application's runtime environment. Grep, a powerful text-searching tool, allows you to sift through these logs with precision. When combined, Docker logs and grep form a formidable duo for debugging and monitoring containerized applications.

Why use grep with Docker logs?

It's simple: you can quickly pinpoint specific events, errors, or patterns within the sea of log data. This combination proves invaluable when troubleshooting issues in complex microservices architectures or monitoring application health.

Common use cases include:

- Tracking error messages across multiple containers

- Monitoring specific user activities

- Identifying performance bottlenecks

- Auditing security-related events

Let’s discuss one of the common use cases where a developer needs to track down frequent error messages in a highly active container.

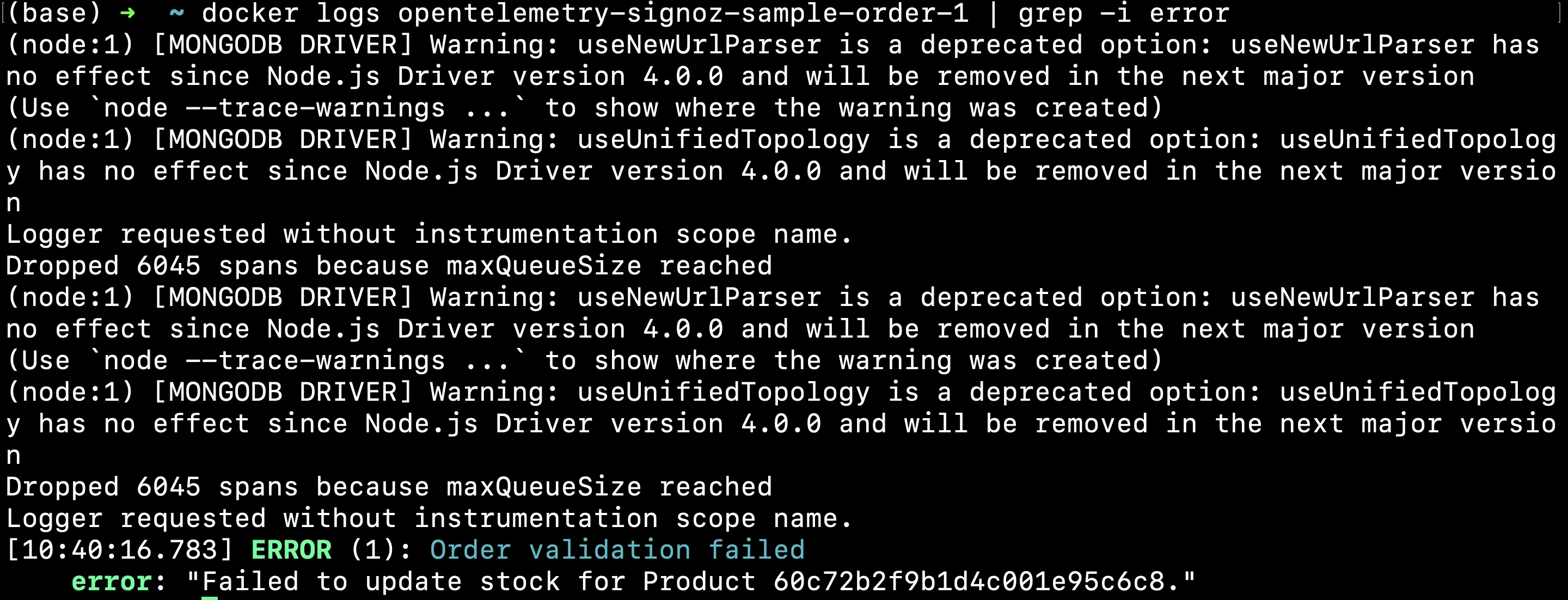

To search for "error" in a container named "opentelemetry-signoz-sample-order-1":

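docker logs opentelemetry-signoz-sample-order-1 2>&1 | grep -i "error"

This command filters the logs to show only entries containing "error" in any letter case, helping the developer quickly focus on potential issues. The 2>&1 redirect, explained in the next section, makes sure lines the container wrote to stderr are filtered too.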

Basic Syntax for Grepping Docker Logs

The fundamental command structure for grepping Docker logs is:

docker logs <container> 2>&1 | grep <pattern>

Here's why each part matters:

- `docker logs <container>`: Retrieves logs from the specified container.

- `2>&1`: Redirects stderr to stdout, ensuring you capture all output.

- `|`: Pipes the log output to grep.

- `grep <pattern>`: Searches for the specified pattern in the logs.
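To see why the `2>&1` redirect matters, the sketch below swaps `docker logs` for a hypothetical stand-in function, `fake_logs`, that writes one line to stdout and one to stderr, the same way a container's two streams arrive from `docker logs`. The function name and log lines are made up for illustration, so it runs without a container.

```shell
# Hypothetical stand-in for `docker logs <container>`:
# one line goes to stdout, one to stderr.
fake_logs() {
  echo "INFO order created"
  echo "ERROR payment failed" >&2
}

# Without 2>&1 only stdout enters the pipe. stderr is discarded here so it
# does not clutter the terminal; normally it would print unfiltered.
without_redirect=$(fake_logs 2>/dev/null | grep -c "ERROR" || true)

# With 2>&1 both streams enter the pipe, so grep sees the ERROR line.
with_redirect=$(fake_logs 2>&1 | grep -c "ERROR" || true)

echo "matches without 2>&1: $without_redirect"
echo "matches with 2>&1: $with_redirect"
```

If an application logs its errors to stderr, leaving out the redirect means grep never sees them.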

Common Grep Options for Docker Logs

Grep offers several options to refine your searches:

-i: Performs case-insensitive matching

The -i flag makes the search case-insensitive, allowing you to find all instances of a specified pattern regardless of case. This is particularly useful when you're unsure of the case used in the logs or when the case might vary. Let’s say a developer is notified about recurring errors in a specific container and needs to identify these errors to begin troubleshooting quickly.

docker logs [container_name] 2>&1 | grep -i [pattern]

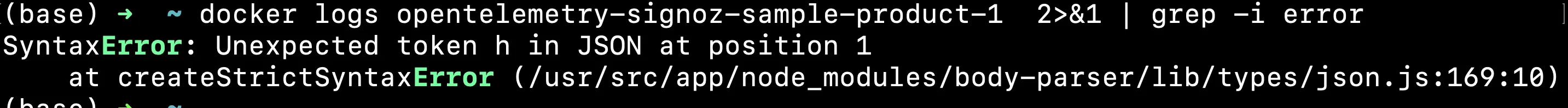

docker logs opentelemetry-signoz-sample-product-1 2>&1 | grep -i error

The command docker logs [container_name] 2>&1 | grep -i [pattern] is used to search through a Docker container's logs for a specific pattern, ignoring case differences.

- `docker logs [container_name]`: Fetches logs from the specified Docker container.

- `2>&1`: Redirects all output (standard and error) to one stream for consistent handling.

- `|`: Pipes the combined output to the next command.

- `grep -i [pattern]`: Filters the output, showing only lines that contain the specified pattern in a case-insensitive manner.

This command is particularly useful for quickly finding errors or specific events in container logs.

You should see a similar output:

This command filters the logs to display only those containing the term "error," allowing the developer to focus on potentially critical issues without distraction.
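The effect of `-i` is easy to check on a few made-up lines. This is a minimal sketch, assuming hypothetical log content fed through a `sample_logs` function in place of `docker logs [container_name] 2>&1`; the `-c` flag is used only to print the number of matching lines.

```shell
# Hypothetical log lines standing in for `docker logs <container> 2>&1`.
sample_logs() {
  printf '%s\n' \
    "INFO  server started" \
    "ERROR database unreachable" \
    "WARN  retrying after Error: timeout" \
    "INFO  request completed"
}

# Case-sensitive search: the lowercase pattern matches neither ERROR nor Error.
case_sensitive=$(sample_logs | grep -c "error" || true)

# Case-insensitive search: both spellings match.
case_insensitive=$(sample_logs | grep -ci "error" || true)

echo "grep error:    $case_sensitive matching lines"
echo "grep -i error: $case_insensitive matching lines"
```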

-v: Inverts the match, showing lines that don't contain the pattern

The -v option inverts the match, showing all lines that do not contain the specified pattern. This is useful when you want to exclude certain messages from the logs, such as routine operations or successful executions.

docker logs [container_name] 2>&1 | grep -v [pattern]

- `|`: Takes the output from the previous command and passes it to the next command.

- `grep -v [pattern]`: Filters the logs. The `-v` option inverts the match, so grep shows only the log lines that do not contain the pattern.

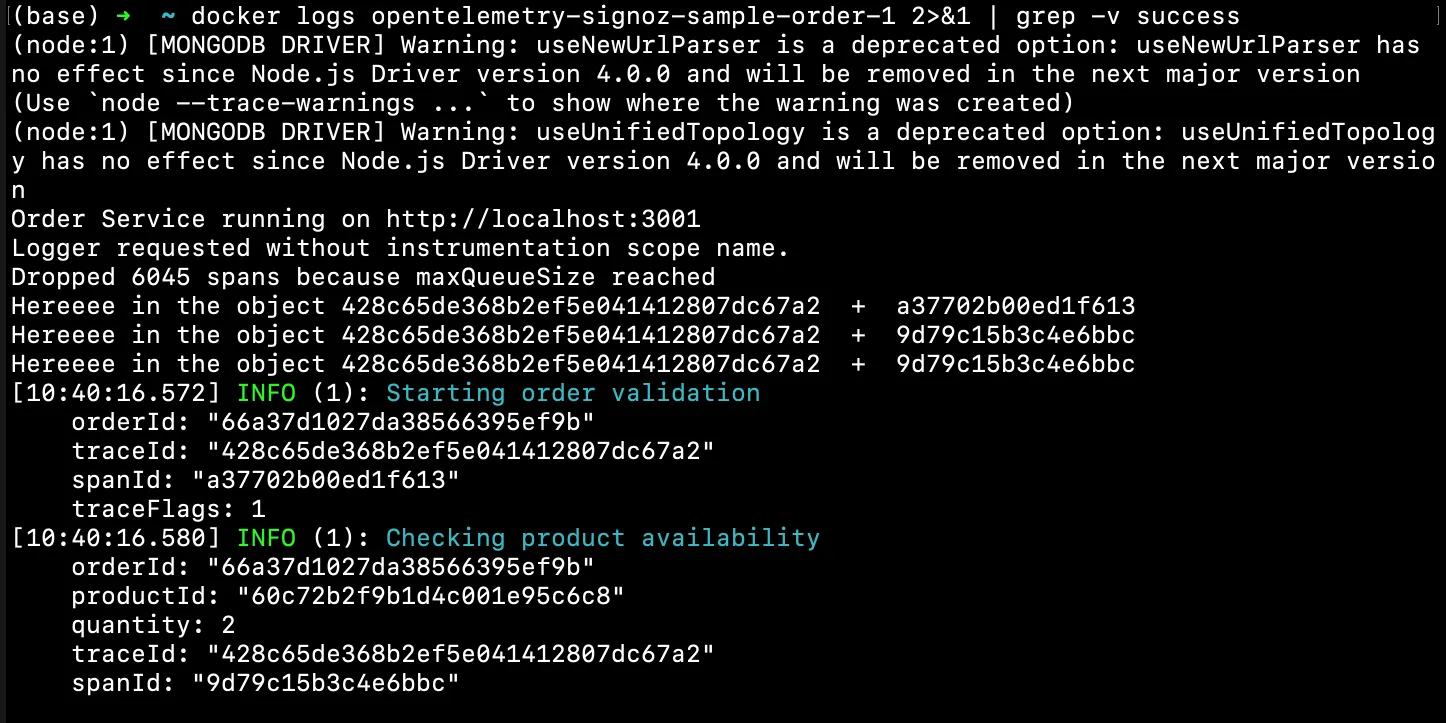

Let’s say someone from Ops wants to check the logs for all events except those marked as "success". Excluding the entries that confirm successful operations leaves only the lines that might indicate issues.

docker logs opentelemetry-signoz-sample-order-1 2>&1 | grep -v success

You should see a similar output:

grep -v output
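As a rough illustration of inverted matching, the sketch below runs `grep -v` over hypothetical request-log lines (the `sample_logs` function and its content are invented for the example). It also shows that several patterns can be excluded in one pass by repeating `-e`.

```shell
# Hypothetical log lines standing in for `docker logs <container> 2>&1`.
sample_logs() {
  printf '%s\n' \
    "GET /orders 200 success" \
    "GET /health 200 healthcheck" \
    "POST /orders 500 inventory service unavailable" \
    "POST /pay 502 upstream timeout" \
    "GET /orders/7 200 success"
}

# Drop every line that contains "success".
without_success=$(sample_logs | grep -v "success")

# Several -e patterns can be excluded at once: a line is dropped
# if it matches any of them.
problems_only=$(sample_logs | grep -v -e "success" -e "healthcheck")

echo "$without_success"
echo "---"
echo "$problems_only"
```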

-n: Displays line numbers alongside matches

The -n flag adds line numbers to the grep output. This is particularly useful for pinpointing the exact location of a log entry within a large log file, facilitating quicker debugging.

docker logs [container_name] 2>&1 | grep -n [pattern]

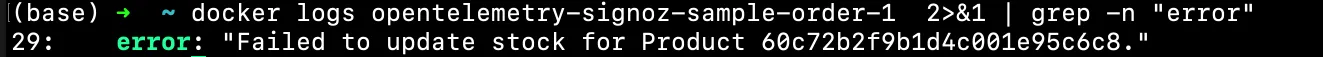

Suppose you want to know exactly where "error" appears in the logs. The following command prints each matching entry together with its line number in the log output.

docker logs opentelemetry-signoz-sample-order-1 2>&1 | grep -n "error"

You should see a similar output:

-C: Shows context lines before and after matches

The -C option shows a specified number of lines of "context" around each matching line. This can be critical for understanding the circumstances or events leading up to and following a logged event.

docker logs [container_name] 2>&1 | grep -C [num] [pattern]

- `-C [num]`: Tells grep to display `[num]` lines of leading and trailing context around each line that matches the pattern. Replace `[num]` with the number of lines to include above and below the match.

- `[pattern]`: The text or regular expression you are searching for.

No file argument is given, so grep reads from standard input (stdin), which here is the piped output of docker logs.

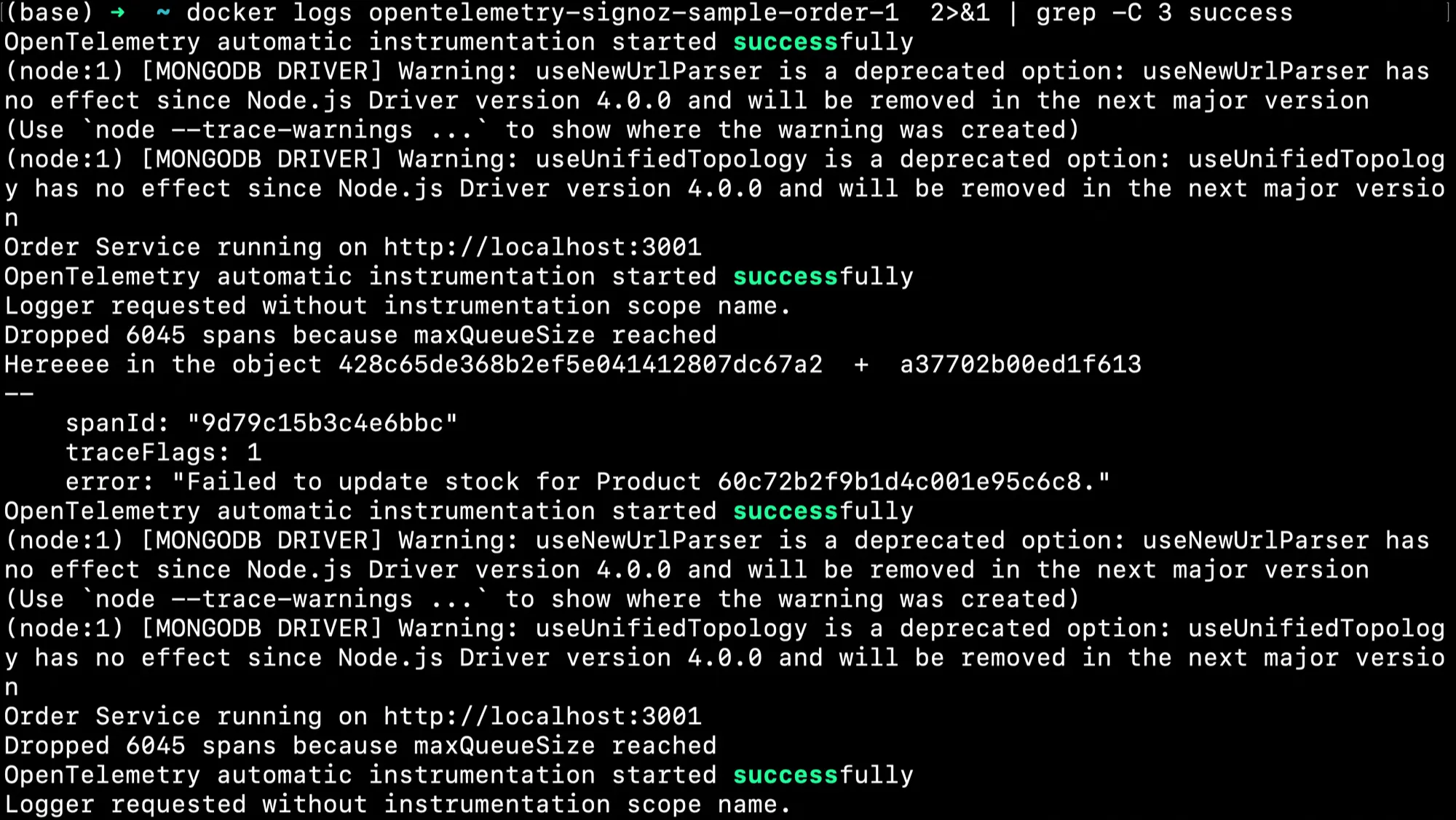

docker logs opentelemetry-signoz-sample-order-1 2>&1 | grep -C 3 success

This command retrieves logs from the Docker container named opentelemetry-signoz-sample-order-1, then uses grep to search for the term "success". The -C 3 option with grep specifies that for every line where "success" is found, the command should also display the three lines before and three lines after the matching line.

You should see a similar output:

These options can be combined for more powerful searches. For instance, -in performs a case-insensitive search with line numbers.
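Here is a small sketch of combined options, again on hypothetical log lines rather than a real container. It shows `-in` on its own and then with `-C 1` added, which makes the difference between matched lines and context lines visible in the output.

```shell
# Hypothetical log lines standing in for `docker logs <container> 2>&1`.
sample_logs() {
  printf '%s\n' \
    "INFO  starting checkout" \
    "INFO  cart loaded" \
    "WARN  slow response from payments" \
    "Error: payment declined" \
    "INFO  rolling back order" \
    "INFO  notifying user" \
    "INFO  checkout finished"
}

# -i ignores case, -n prefixes each match with its line number.
numbered=$(sample_logs | grep -in "error")

# Adding -C 1 prints one line of context on each side of the match.
# Context lines use "-" after the line number, matches use ":".
with_context=$(sample_logs | grep -in -C 1 "error")

echo "$numbered"
echo "---"
echo "$with_context"
```

The first command prints `4:Error: payment declined`. The second prints lines 3 to 5, with the match marked by `:` and its neighbors by `-`.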

Advanced Techniques for Grepping Docker Logs

This section covers several techniques that go beyond single grep options: time-based filtering, piping to other commands, chaining grep commands, and parsing fields with awk.

- Time-Based Filtering:

When a service shows unexpected behavior, such as a sudden performance drop or a critical error, you can limit troubleshooting to a specific timeframe by using the --since and --until options with your docker logs command:

docker logs --since <YYYY-MM-DDTHH:MM:SS> --until <YYYY-MM-DDTHH:MM:SS> <container_name_or_id>

The --since option specifies the start time for log retrieval and the --until option specifies the end time for log retrieval, creating a precise time window for log analysis.

Both options also accept relative durations such as 42m (42 minutes ago). For absolute timestamps, Docker accepts the format YYYY-MM-DDTHH:MM:SS, where:

- YYYY: Year (e.g., 2024)

- MM: Month (01-12)

- DD: Day (01-31)

- T: Separator between date and time

- HH: Hour (00-23)

- MM: Minute (00-59)

- SS: Second (00-59)

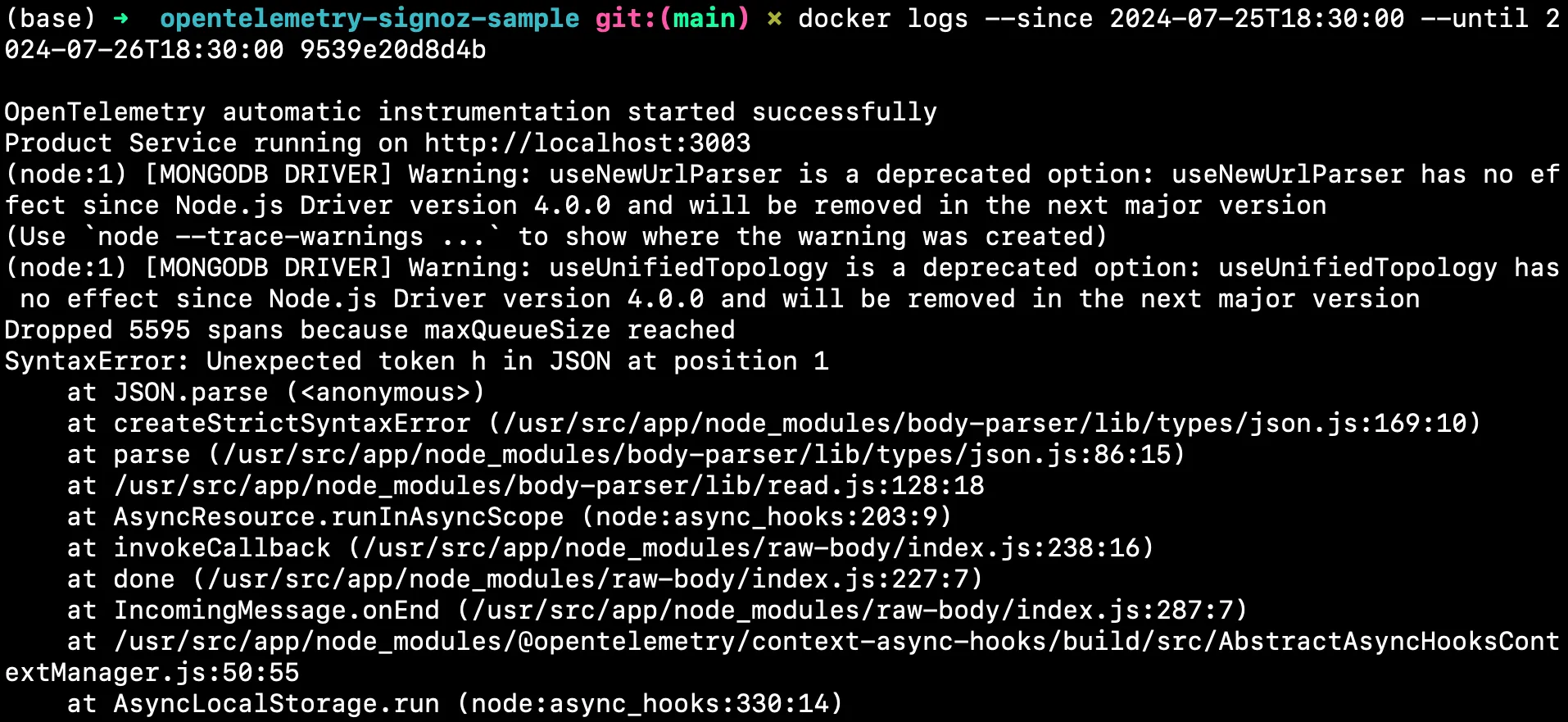

For example, if the opentelemetry-signoz-sample-product container experienced a failure that started on July 25, 2024, at 18:30:00, and persisted for 24 hours, the command will be as follows:

docker logs --since 2024-07-25T18:30:00 --until 2024-07-26T18:30:00 opentelemetry-signoz-sample-product

You should see a similar output:

This approach allows you to isolate the logs within a specific time window, making it easier to perform a detailed analysis without the distraction of irrelevant data. It's particularly useful for debugging transient issues that occurred at known times or reviewing system behavior during scheduled events (e.g., a new release deployment).
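Time-based filtering and grep work well together. The helper below is a minimal sketch: `logs_between` is a hypothetical function name, and the argument order is an assumption made for this example. It fetches one time window and keeps only the lines matching a pattern.

```shell
# Hypothetical helper: fetch a container's logs for a fixed time window and
# keep only the lines matching a pattern (case-insensitive).
# Usage: logs_between <since> <until> <container> <pattern>
logs_between() {
  docker logs --since "$1" --until "$2" "$3" 2>&1 | grep -i -- "$4"
}

# Example call (needs a running Docker daemon and an existing container):
# logs_between 2024-07-25T18:30:00 2024-07-26T18:30:00 opentelemetry-signoz-sample-product "error"
```

Narrowing the window first means grep scans only the relevant slice of the log, which helps on containers with a long history.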

- Piping to other Commands

Combining grep with other command-line utilities like wc (word count) allows for counting occurrences, which can be instrumental in quantifying the frequency of errors or other log events.

docker logs <container_name> 2>&1 | grep <keyword> | wc -l

- `<container_name>`: The name of the Docker container you want to fetch logs from.

- `2>&1`: Redirects standard error (stderr) to standard output (stdout) so both are processed together.

- `grep <keyword>`: Filters the logs, outputting only lines that contain the specified `<keyword>`.

- `wc -l`: Counts the lines output by grep, which is the number of log lines that contain the `<keyword>`.

For example:

docker logs opentelemetry-signoz-sample-product-1 2>&1 | grep success | wc -l

This command filters logs to find lines containing "success" and then counts them with wc -l. Swapping the keyword for "error" gives the volume of error lines instead, and tracking that count over time helps assess the stability and health of the application.

You should see a similar output:
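One detail worth checking is what exactly gets counted. The sketch below uses hypothetical log lines to compare three counts. `grep | wc -l` and `grep -c` both count matching lines, while `grep -o` (available in GNU and BSD grep) prints each occurrence on its own line, so a line containing the keyword twice is counted twice.

```shell
# Hypothetical log lines; the third one contains the keyword twice.
sample_logs() {
  printf '%s\n' \
    "INFO  login success user=alice" \
    "ERROR login failed user=bob" \
    "INFO  retry success, retry success" \
    "INFO  logout user=alice"
}

# Matching lines, counted with wc -l (tr strips the padding some wc versions add).
lines_wc=$(sample_logs | grep "success" | wc -l | tr -d ' ')

# Same count using grep's built-in -c flag.
lines_c=$(sample_logs | grep -c "success")

# Individual occurrences: -o emits one output line per match.
occurrences=$(sample_logs | grep -o "success" | wc -l | tr -d ' ')

echo "lines (wc -l): $lines_wc"
echo "lines (grep -c): $lines_c"
echo "occurrences (grep -o): $occurrences"
```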

- Multiple Grep Commands

Using multiple grep commands in sequence allows for refining the search by including or excluding specific patterns. This method is beneficial when needing to filter logs with multiple criteria.

docker logs [container_name] 2>&1 | grep [pattern1] | grep [pattern2]

- `| grep [pattern1]`: Pipes the output from docker logs to grep, which keeps only the lines that contain `[pattern1]`. Replace it with the text or expression you're interested in, such as "success".

- `| grep [pattern2]`: Filters the remaining lines again, so only lines matching both patterns are shown. Add `-v` to this second grep to exclude lines that match `[pattern2]` instead.

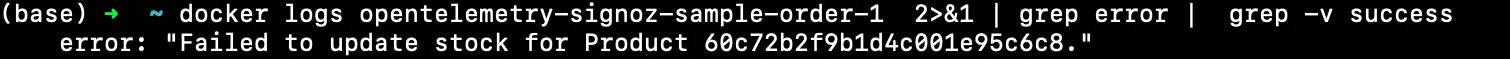

docker logs opentelemetry-signoz-sample-product-1 2>&1 | grep error | grep -v "success"

This command chain first filters log entries containing "error" and then excludes any of these that also contain "success". It's useful for isolating true errors from logs that might contain both keywords, such as logs describing successful error handling or recovery.

You should see a similar output:
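The sketch below runs a grep chain over hypothetical log lines, so the include-then-exclude behavior can be checked without a container. For contrast it also shows `grep -E` with `|`, which matches lines containing either pattern rather than both.

```shell
# Hypothetical log lines standing in for `docker logs <container> 2>&1`.
sample_logs() {
  printf '%s\n' \
    "ERROR payment failed for order 12" \
    "INFO  error recovery success for order 12" \
    "INFO  order 13 success" \
    "ERROR timeout talking to inventory" \
    "WARN  cache miss"
}

# Chain: keep lines mentioning "error" (any case), then drop those that
# also mention "success".
true_errors=$(sample_logs | grep -i "error" | grep -v "success")

# Alternation: keep lines containing ERROR or WARN (case-sensitive).
errors_or_warnings=$(sample_logs | grep -E "ERROR|WARN")

echo "$true_errors"
echo "---"
echo "$errors_or_warnings"
```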

- Using awk for Complex Parsing

awk is a powerful text-processing tool that can be used in conjunction with grep to extract and manipulate fields from log entries, enabling more complex queries and data extraction.

docker logs [container_name] 2>&1 | grep [pattern] | awk '{print $field1, $field2, ...}'

- `| grep [pattern]`: Pipes the output from docker logs to grep, which filters the logs to only include lines containing the specified `[pattern]`.

- `| awk '{print $field1, $field2, ...}'`: Pipes the filtered output from grep to awk, which extracts and manipulates fields from each log entry. The `print $field1, $field2, ...` command inside awk specifies which fields (columns) to output. Replace `$field1, $field2, ...` with the specific field numbers you want to extract. Fields in awk are typically separated by whitespace or a specific delimiter, and each `$fieldN` represents one field number (e.g., `$1` for the first field, `$2` for the second, and so on).

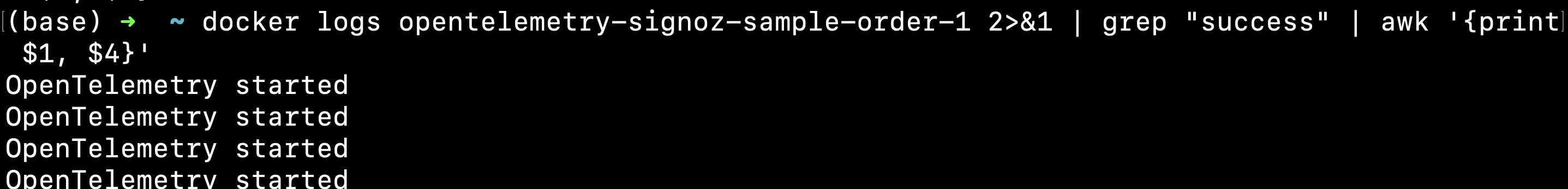

docker logs opentelemetry-signoz-sample-order-1 2>&1 | grep "success" | awk '{print $1, $4}'

After filtering the logs for entries containing "success", this command uses awk to extract and print the first and fourth fields from these lines. This technique is particularly valuable for logs with structured formats, where specific information (like timestamps or status codes) needs to be isolated for further analysis.

You should see a similar output:

grep with awk
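Field extraction depends entirely on the log layout, so the sketch below assumes a hypothetical whitespace-separated format: timestamp, level, service, status, order ID. It prints the first and fifth fields, and also shows that awk can do the matching itself, which saves one process in the pipeline.

```shell
# Hypothetical log lines: timestamp, level, service, status, order id.
sample_logs() {
  printf '%s\n' \
    "2024-07-25T18:30:01 INFO order-service success order=17" \
    "2024-07-25T18:30:02 ERROR order-service failure order=18" \
    "2024-07-25T18:30:05 INFO order-service success order=19"
}

# grep selects the lines, awk prints the timestamp ($1) and order id ($5).
via_grep=$(sample_logs | grep "success" | awk '{print $1, $5}')

# Equivalent: an awk pattern does the filtering and the printing.
via_awk=$(sample_logs | awk '/success/ {print $1, $5}')

echo "$via_grep"
```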

Handling Multi-line Log Entries

Multi-line log entries, common in complex applications, present unique challenges for analysis. Their crucial details are spread across several lines, so a single-line grep search returns only the matching line and misses the rest of the entry.

For logs in JSON format, tools like `jq` become essential: they extract and filter specific data fields, making the analysis more manageable and focused. Both techniques support faster troubleshooting in environments where quick diagnostics are required to keep applications reliable.

Here are practical strategies and scenarios for effectively managing and analyzing these types of logs:

- Use Grep's Context Options

Using grep's context options allows you to view not just the line of interest but also the surrounding lines, which are often key to understanding the event fully.

docker logs [container_name] 2>&1 | grep -A [num] "[pattern]"

- `docker logs [container_name]`: Retrieves the logs from the specified Docker container.

- `2>&1`: Combines standard output (stdout) and standard error (stderr) into one stream.

- `| grep -A [num] "[pattern]"`: Pipes the logs to grep, which filters lines containing the specified `[pattern]`. The `-A [num]` option tells grep to also display `[num]` lines of trailing context after each match, providing more insight into what follows the pattern.

For example:

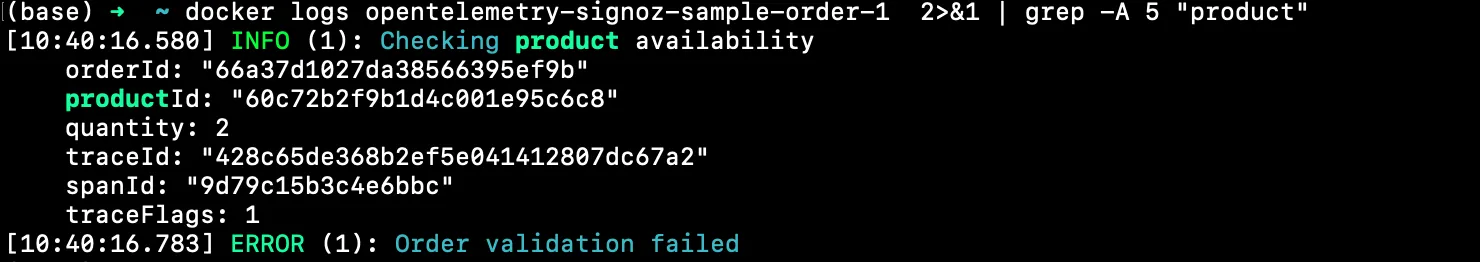

docker logs opentelemetry-signoz-sample-order-1 2>&1 | grep -A 5 "product"

This shows the line which contains the word product and 5 lines after it.

You will see the below output:
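A stack trace is a typical multi-line entry. The sketch below uses a hypothetical trace to show `-A` pulling in the lines that follow the match, and `-B` (leading context) adding the line that came before it.

```shell
# Hypothetical log with a multi-line stack trace after the ERROR line.
sample_logs() {
  printf '%s\n' \
    'INFO  handling request /product/42' \
    'ERROR Unhandled exception in product lookup' \
    'Traceback (most recent call last):' \
    '  File app.py, line 10, in lookup' \
    'KeyError: 42' \
    'INFO  handling request /product/7'
}

# The match plus the 3 lines after it: the whole trace.
with_after=$(sample_logs | grep -A 3 "ERROR")

# Add 1 line of leading context to see which request triggered it.
with_before_and_after=$(sample_logs | grep -B 1 -A 3 "ERROR")

echo "$with_before_and_after"
```

Picking the right `-A` value takes some trial and error, because trace lengths vary between entries.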

- Parse JSON logs with jq and grep:

JSON-formatted logs are increasingly common, especially in services that use modern logging standards. The jq tool is perfect for parsing JSON and extracting specific fields before using grep for further filtering. In a situation where a system continuously logs detailed messages in a structured JSON format, a DevOps engineer may need to quickly identify error messages to diagnose issues post-deployment. Using jq to extract the 'message' field simplifies the logs into a more readable format, and grep then filters out only those entries that contain errors. This approach ensures that the engineer can swiftly pinpoint issues without manually combing through dense JSON structures.

docker logs [container_name] 2>&1 | jq -r '[field]' | grep [pattern]

- `docker logs [container_name]`: Fetches the logs from a specific Docker container.

- `2>&1`: Redirects all output into a single stream for consistent processing.

- `| jq -r '[field]'`: Pipes the logs to jq, a command-line JSON processor, which extracts the specified `[field]` from each JSON-formatted log entry. The `-r` flag ensures that the output is raw, i.e., without quotes, making it cleaner for further processing.

- `| grep [pattern]`: Filters the output of jq to include only those lines that match the specified `[pattern]`.

docker logs opentelemetry-signoz-sample-order-1 2>&1 | jq -r '.message' | grep error

This extracts the 'message' field from JSON logs and searches for errors.

You will see the below output:
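Container output often mixes JSON entries with plain-text lines such as startup banners, and jq typically stops with a parse error at the first line that is not valid JSON. The sketch below shows one possible workaround, assuming hypothetical log content and a `message` field: `-R` makes jq read each line as a raw string, and `fromjson?` parses it while skipping lines that fail to parse. The block checks that jq is installed before using it.

```shell
# Hypothetical log stream: two JSON entries and one plain-text line.
sample_logs() {
  printf '%s\n' \
    '{"level":"info","message":"order created"}' \
    'starting server on port 8080' \
    '{"level":"error","message":"payment gateway error"}'
}

if command -v jq >/dev/null 2>&1; then
  # -R reads each line as a raw string; fromjson? parses it and silently
  # skips lines that are not valid JSON; "// empty" drops missing fields.
  messages=$(sample_logs | jq -R -r 'fromjson? | .message // empty' | grep -i "error" || true)
else
  messages="jq is not installed"
fi

echo "$messages"
```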

Best Practices and Optimization

To maximize the effectiveness of grepping Docker logs:

- Use specific search patterns to reduce false positives.

- Create aliases for common log search commands.

- Consider using `--tail` to limit the amount of log data processed: `docker logs --tail 1000 <container-name> 2>&1 | grep error`

- For large log files, use `zgrep` on compressed log archives.

- Implement log rotation to manage log file sizes, improve grep performance, and prevent logs from consuming excessive disk space.

- Be mindful of the potential performance impact when grepping large log files on production systems.

- Ensure proper permissions and security measures are in place when accessing and analyzing logs.
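The alias and `--tail` suggestions can be combined in a small shell function. This is a sketch only: `dlgrep` is a hypothetical name, and the default of 1000 lines is an arbitrary choice. A function is used instead of an alias because an alias cannot place arguments in the middle of a pipeline.

```shell
# Hypothetical helper: search the last N lines of a container's logs.
# Usage: dlgrep <container> <pattern> [tail_lines]   (tail_lines defaults to 1000)
dlgrep() {
  docker logs --tail "${3:-1000}" "$1" 2>&1 | grep -i -- "$2"
}

# Example calls (need a running Docker daemon and an existing container):
# dlgrep opentelemetry-signoz-sample-order-1 error
# dlgrep opentelemetry-signoz-sample-order-1 timeout 200
```

Adding the function to a shell startup file such as `~/.bashrc` makes it available in every session.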

Tips for Efficient Log Grepping

- Using Appropriate Log Levels: Ensure your logs use appropriate log levels (DEBUG, INFO, WARN, ERROR) to make grepping more effective.

- Structuring Log Messages for Easier Grepping: Format your log messages consistently to facilitate easier searching and parsing.

- Combining Grep with Other Command-Line Tools: Enhance your log analysis by combining `grep` with tools like `awk` and `sed`:

docker logs [container-id] | grep 'ERROR' | awk '{print $1, $5}'
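For a `sed` counterpart, the sketch below strips the leading timestamp from hypothetical log lines so that identical messages can be grouped, then ranks them by frequency with `sort` and `uniq -c`. The log format (timestamp as the first space-separated field) is an assumption made for this example.

```shell
# Hypothetical log lines with a timestamp as the first field.
sample_logs() {
  printf '%s\n' \
    "2024-07-25T18:30:01 ERROR timeout" \
    "2024-07-25T18:30:02 INFO ok" \
    "2024-07-25T18:30:03 ERROR timeout" \
    "2024-07-25T18:30:04 ERROR disk full"
}

# grep keeps ERROR lines, sed removes the first field (the timestamp),
# sort + uniq -c count identical messages, sort -rn puts the most
# frequent message first.
ranked=$(sample_logs | grep "ERROR" | sed 's/^[^ ]* //' | sort | uniq -c | sort -rn)
top_error=$(printf '%s\n' "$ranked" | head -n 1)

echo "$ranked"
```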

Enhancing Log Analysis with SigNoz

While grep is powerful for command-line log analysis, SigNoz provides a more comprehensive solution for managing and analyzing logs in complex, distributed systems.

- Centralized Log Management

- Advanced Search and Filtering

- Log Correlation with Metrics and Traces

- Real-time Log Tailing

- Log-based Alerting

- Log Visualizations and Dashboards

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

Read how to send Docker Logs to SigNoz.

Conclusion

- Using `grep` with Docker logs simplifies identifying and resolving issues in containerized environments, enhancing productivity and system reliability.

- The combination of Docker and `grep` allows for detailed log analysis, crucial for monitoring application health and identifying performance bottlenecks effectively.

- Advanced techniques like time-based filtering and multiline searching enable tailored analysis, focusing on specific incidents or periods.

- Each command and technique serves real-world troubleshooting needs, from isolating error messages to analyzing user activities and providing actionable insights.

- Efficient practices such as log rotation and the use of command-line tools keep log analysis fast and log management sustainable.

- Integrating advanced tools and adopting sophisticated grep strategies foster ongoing enhancement of monitoring and debugging processes, supporting high service standards.

FAQs

How can I check Docker logs?

Use the docker logs [container-id] command to check logs for a specific container.

How to see the last Docker logs?

Use docker logs --tail [number] [container-id] to see the last few lines of logs.

How do I view Docker Compose logs?

Use docker compose logs (or docker-compose logs with the older standalone Compose binary) to view logs for all services in a Docker Compose setup.

Where are Docker logs stored on Mac?

Docker logs on Mac are stored within the Docker virtual machine, accessible via the Docker Desktop UI or command line.

How do I list all Docker containers?

Use docker ps -a to list all containers.

How to check Docker running status?

Use docker ps to check the status of running containers.

How to use Docker service logs?

Use docker service logs [service-id] to view logs for a specific Docker service.