How to Write to a File Using the Logging Python Module - A Step-by-Step Guide

Python's logging module is an important tool for recording events, debugging problems, and monitoring applications. Unlike basic print statements, the logging module provides a reliable and flexible method for capturing and storing log data. In this article, you'll learn how to configure file-based logging, apply advanced approaches, and improve your logging procedures. Whether you're new to Python or an experienced developer, this guide will help you improve your logging approach in Python applications.

Understanding Python's Logging Module

The logging module is a built-in Python library that provides a systematic approach for creating log messages in your applications. It provides numerous benefits over using print statements. Some of them are:

- Configurability: The logging module allows you to simply change log levels, customize output formats, and route log messages to various destinations, such as files or other systems.

- Persistence: Logs can be saved to files, allowing you to keep crucial event data for later analysis, auditing, or debugging.

- Separation of Concerns: By isolating logging code from essential application logic, you keep your code cleaner and can easily manage or adjust logging behaviour without affecting the program's operation.

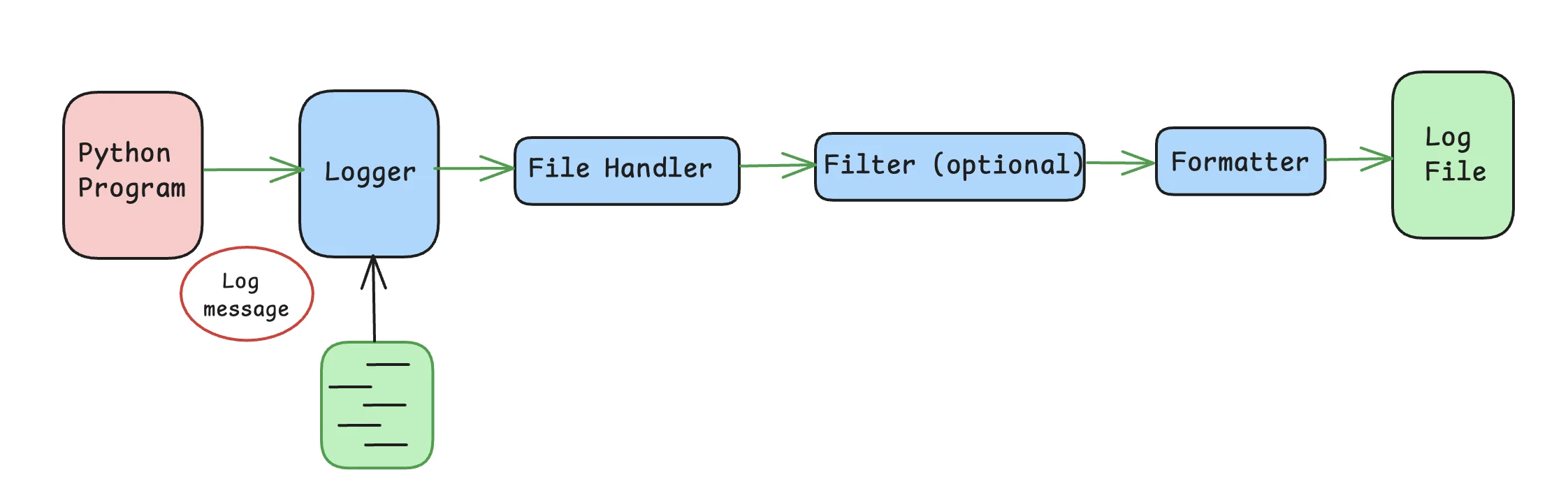

The logging module comprises four main components that work together to facilitate logging:

- Loggers: These are the points of entry for logging in your application. Each logger captures log messages, which can subsequently be routed to one of several outputs depending on the handlers that have been set.

- Handlers: Handlers specify the destination of log messages. Some of the most common handlers are StreamHandler (for console output) and FileHandler (for logging into a file). You can set up numerous handlers for a single logger to send logs to different destinations.

- Formatters: Formatters control the layout and content of log messages. They govern how timestamps, log levels, and messages are shown in the logs.

- Levels: Logging levels (such as DEBUG, INFO, WARNING, ERROR, and CRITICAL) help you to manage the logs in a better way. Setting a specified log level allows you to filter out less important messages and focus on what matters.
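To see how the four components fit together, here is a minimal sketch. The logger name demo.components and the in-memory stream are assumptions made for illustration; in a real application the handler would usually point at a file or the console.

```python
import io
import logging

# An in-memory stream stands in for a real destination (illustrative only).
stream = io.StringIO()

# Logger: the entry point the application calls.
logger = logging.getLogger("demo.components")
logger.setLevel(logging.INFO)   # Level: drop anything below INFO
logger.propagate = False        # keep the demo isolated from the root logger

# Handler: decides where records go.
handler = logging.StreamHandler(stream)

# Formatter: decides how each record is rendered.
handler.setFormatter(logging.Formatter("%(levelname)s | %(name)s | %(message)s"))
logger.addHandler(handler)

logger.debug("hidden: below the INFO threshold")
logger.info("visible: at the INFO threshold")

output = stream.getvalue()
print(output, end="")
```

Only the INFO message reaches the stream, because the logger's level filters out the DEBUG call before any handler sees it.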

To learn about logging in Python, different levels of logging, formatters, etc. in more detail, you can refer to this article Python Logging - From Setup to Monitoring with Best Practices. You can also check out Python Logging Best Practices - Expert Tips with Practical Examples.

Quick Start: Writing Logs to a File

Let us start the logging with a basic example of writing logs to a file using Python's logging module:

import logging

# Configure the logger
logging.basicConfig(
    filename='app.log',  # Specify the log file name
    level=logging.INFO,  # Set the logging level to INFO
    format='%(asctime)s - %(levelname)s - %(message)s'  # Define the log message format
)

# Write an informational log message
logging.info('This is an informational message')

This simple Python script performs the following actions:

- Imports the logging module: Provides access to the logging functions.

- Configures the logger using basicConfig():

  - filename='app.log': Specifies that logs should be written to the file app.log.
  - level=logging.INFO: Sets the logging level to INFO, meaning all messages with a severity of INFO or higher will be logged.
  - format='%(asctime)s - %(levelname)s - %(message)s': Defines the log message format to include the timestamp, log level, and message content.

- Writes a log message: The logging.info() function generates an informational log entry.

After running this script, you'll find a file named app.log in your current working directory containing a log entry similar to this:

2024-08-23 10:30:45,123 - INFO - This is an informational message

Advanced File Logging Techniques

As your application expands, you may require more advanced logging configurations to efficiently manage log files and guarantee that relevant data is captured without exhausting your storage.

So, let us now look at some advanced techniques of logging:

Using RotatingFileHandler for log rotation

RotatingFileHandler writes log records to a set of files and rotates them when the current file reaches a certain size. Rotation means that once the current log file reaches the specified size (maxBytes), it is closed and renamed with a numeric suffix (e.g., app.log.1), and a new log file is created. On each rotation, existing backups are shifted up by one (app.log.1 becomes app.log.2), and the oldest backup is deleted once there are more than backupCount of them.

import logging
from logging.handlers import RotatingFileHandler

# Create a logger object
logger = logging.getLogger('my_app')
logger.setLevel(logging.INFO)  # Set the logger's level to INFO

# Set up a rotating file handler
handler = RotatingFileHandler(
    'app.log',        # Log file name
    maxBytes=100000,  # Maximum size of a log file in bytes before rotation
    backupCount=3     # Number of backup files to keep
)

# Optional: Set a formatter for the log messages
formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')
handler.setFormatter(formatter)

# Add the handler to the logger
logger.addHandler(handler)

# Write a log message
logger.info('This message will be written to the rotating log file')

- maxBytes=100000: The log file rotates after it reaches 100,000 bytes (approximately 100KB).
- backupCount=3: Up to 3 backup files are kept, named app.log.1, app.log.2, and app.log.3.

Sample Output (in app.log; the timestamp will differ):

2024-08-23 10:30:45,123 - my_app - INFO - This message will be written to the rotating log file
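To watch rotation happen, you can shrink maxBytes so that a handful of messages is enough to trigger it. The sketch below is an illustration under assumed values: the temporary directory, the file name rotating.log, and the 200-byte limit are all chosen for the demo and are far too small for real use.

```python
import logging
import os
import tempfile
from logging.handlers import RotatingFileHandler

log_dir = tempfile.mkdtemp()                       # throwaway directory for the demo
log_path = os.path.join(log_dir, "rotating.log")

logger = logging.getLogger("rotation_demo")
logger.setLevel(logging.INFO)
logger.propagate = False

# Tiny maxBytes so rotation happens after a few messages.
handler = RotatingFileHandler(log_path, maxBytes=200, backupCount=2)
handler.setFormatter(logging.Formatter("%(levelname)s - %(message)s"))
logger.addHandler(handler)

for i in range(50):
    logger.info("message number %d", i)

handler.close()
files = sorted(os.listdir(log_dir))
print(files)  # the active file plus at most backupCount backups
```

Although 50 messages cause several rotations, only the active file and two backups remain, because backupCount=2 makes the handler delete the oldest backup each time.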

Implementing TimedRotatingFileHandler

For applications where logs need to be rotated based on time rather than size, TimedRotatingFileHandler is an ideal choice. It rotates log files at specific intervals, such as midnight or hourly.

import logging
from logging.handlers import TimedRotatingFileHandler

# Create a logger object
logger = logging.getLogger('my_app')
logger.setLevel(logging.INFO)

# Set up a timed rotating file handler
handler = TimedRotatingFileHandler(
    'app.log',        # Log file name
    when='midnight',  # Rotate the log file at midnight
    interval=1        # Rotate every day
)

# Add the handler to the logger
logger.addHandler(handler)

- when='midnight': The log file is rotated at midnight every day.
- interval=1: The log file is rotated once every day.
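Time-based rotation is hard to observe without waiting for midnight, so the following sketch forces a rollover by calling doRollover() directly. That call is normally made by the handler itself; invoking it by hand, the temporary directory, and the backupCount=7 retention value are assumptions made for this demonstration.

```python
import logging
import os
import tempfile
from logging.handlers import TimedRotatingFileHandler

log_dir = tempfile.mkdtemp()                    # throwaway directory for the demo
log_path = os.path.join(log_dir, "timed.log")

logger = logging.getLogger("timed_demo")
logger.setLevel(logging.INFO)
logger.propagate = False

handler = TimedRotatingFileHandler(
    log_path,
    when="midnight",
    interval=1,
    backupCount=7,   # keep about a week of daily files; 0 (the default) keeps them all
)
logger.addHandler(handler)

logger.info("written before the rollover")
handler.doRollover()   # normally triggered automatically; forced here for the demo
logger.info("written after the rollover")
handler.close()

files = sorted(os.listdir(log_dir))
print(files)  # timed.log plus one file carrying a date suffix
```

The rotated file receives a date suffix (for when='midnight' it has the form YYYY-MM-DD), which makes it easy to find the log for a particular day.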

Configuring Multiple Handlers

In some circumstances, you may need to route log messages to several destinations, such as a file and the console. Python's logging module lets you attach numerous handlers to a single logger. Here is an example for more clarity:

import logging

# Create a logger object
logger = logging.getLogger('my_app')
logger.setLevel(logging.INFO)  # Without this, the default WARNING threshold would drop INFO messages

# Set up a file handler to write logs to a file
file_handler = logging.FileHandler('app.log')

# Set up a console handler to output logs to the console
console_handler = logging.StreamHandler()

# Add both handlers to the logger
logger.addHandler(file_handler)
logger.addHandler(console_handler)

# Write a log message
logger.info('This message will be written to both the file and the console')

- FileHandler('app.log'): Directs log messages to a file named app.log.
- StreamHandler(): Outputs log messages to the console.

Sample Output (identical on the console and in app.log, since no formatter is set):

This message will be written to both the file and the console
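Each handler can carry its own formatter, so the console can stay terse while the file keeps full detail. The following is a minimal sketch of that idea; the logger name, the temporary file location, and the StringIO object standing in for the console are assumptions made so the result can be inspected.

```python
import io
import logging
import os
import tempfile

log_path = os.path.join(tempfile.mkdtemp(), "app.log")
console = io.StringIO()   # stands in for the console so the result can be inspected

logger = logging.getLogger("multi_format_demo")
logger.setLevel(logging.INFO)
logger.propagate = False

# Detailed format for the file.
file_handler = logging.FileHandler(log_path)
file_handler.setFormatter(
    logging.Formatter("%(asctime)s - %(name)s - %(levelname)s - %(message)s")
)

# Short format for the console.
console_handler = logging.StreamHandler(console)
console_handler.setFormatter(logging.Formatter("%(levelname)s: %(message)s"))

logger.addHandler(file_handler)
logger.addHandler(console_handler)

logger.info("Cache warmed up")
file_handler.close()

with open(log_path) as f:
    file_line = f.read().strip()
console_line = console.getvalue().strip()
print(console_line)
print(file_line)
```

The same record is rendered twice, once per handler, which is why the two destinations can differ in layout without any extra logging calls.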

Handling Exceptions and Variables in Log Files

Effective logging goes beyond capturing simple messages. Logging exceptions and variable states provides context and helps with troubleshooting:

import logging

# Configure the logger
logging.basicConfig(
    filename='app.log',
    level=logging.INFO,  # INFO so that both the error and the info message below are recorded
    format='%(asctime)s - %(levelname)s - %(message)s'
)

# Example: Handling an exception and logging it
try:
    result = 10 / 0
except Exception as e:
    logging.error(f"An error occurred: {e}", exc_info=True)  # Logs the error with traceback

# Example: Logging a variable's state
user_id = 12345
logging.info(f"Processing data for user {user_id}")

- exc_info=True: This parameter ensures that the full traceback of the exception is included in the log, providing detailed information about where the error occurred.

- Variable Logging: Using string formatting (e.g., f"Processing data for user {user_id}") allows you to dynamically incorporate variable values in your log messages, making them more useful and contextual.

Sample Output (in app.log; timestamps will differ):

2024-08-27 10:35:12,345 - ERROR - An error occurred: division by zero
Traceback (most recent call last):
  File "<ipython-input-9-ee43e62a9cd0>", line 12, in <cell line: 11>
    result = 10 / 0
ZeroDivisionError: division by zero
2024-08-27 10:35:12,346 - INFO - Processing data for user 12345
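Inside an except block, logger.exception() is a shorthand for logging at ERROR level with the traceback attached. The sketch below shows it together with %-style arguments for variable values; the helper safe_divide and the in-memory stream are hypothetical and exist only for illustration.

```python
import io
import logging

stream = io.StringIO()   # in-memory destination so the output can be inspected
logger = logging.getLogger("exception_demo")
logger.setLevel(logging.INFO)
logger.propagate = False
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s - %(message)s"))
logger.addHandler(handler)

def safe_divide(numerator, denominator):
    """Hypothetical helper: returns None and logs the traceback on failure."""
    try:
        return numerator / denominator
    except ZeroDivisionError:
        # logger.exception() logs at ERROR level and appends the traceback.
        logger.exception("Could not divide %s by %s", numerator, denominator)
        return None

result = safe_divide(10, 0)
output = stream.getvalue()
print(output)
```

Catching the specific ZeroDivisionError rather than a bare Exception keeps unrelated failures from being silently absorbed by the handler.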

Configure Logging for Multiple Modules

Larger applications, particularly those with several modules, require a centralized logging configuration. This strategy ensures that logs are handled consistently throughout all areas of the program. Python's logging module can help you to achieve this through centralized configuration using dictConfig().

Here's an example of configuring centralized logging:

import logging.config

# Centralized logging configuration using dictConfig
logging.config.dictConfig({
    'version': 1,                       # Configuration schema version
    'disable_existing_loggers': False,  # Ensure existing loggers are not disabled
    'handlers': {                       # Handlers define where and how logs are output
        'file': {
            'class': 'logging.FileHandler',  # Handler class for writing logs to a file
            'filename': 'app.log',           # Log file name
            'formatter': 'simple'            # Refer to the formatter defined below
        }
    },
    'formatters': {                     # Formatters define the structure of the log messages
        'simple': {
            'format': '%(asctime)s - %(name)s - %(levelname)s - %(message)s'  # Log format
        }
    },
    'root': {                           # The root logger configuration
        'level': 'INFO',                # Set the logging level to INFO
        'handlers': ['file']            # Attach the file handler to the root logger
    }
})

# Example usage
logger = logging.getLogger()  # Get the root logger

# Log an informational message
logger.info('This log comes from the root logger')

Explanation:

- Centralized Configuration: The dictConfig() function defines a comprehensive logging configuration that can be applied across all modules in the application.

- Handlers: The example uses a FileHandler to direct log output to app.log. You can add more handlers as needed (e.g., for logging into the console or sending logs over the network).

- Formatters: The log format includes the module name (%(name)s), which helps identify where the log message originated, making debugging easier.

- Usage in Modules: Each module can retrieve a logger using logging.getLogger(__name__). This ensures that the log messages are tagged with the correct module name.

Sample Output:

2024-08-27 10:42:52,006 - root - INFO - This log comes from the root logger
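To illustrate the "Usage in Modules" point, the sketch below configures the root logger once and then logs through two named loggers. The dotted names shop.orders and shop.payments are hypothetical stand-ins for what logging.getLogger(__name__) would return in separate modules, and the temporary file path is chosen only for the demo.

```python
import logging
import logging.config
import os
import tempfile

log_path = os.path.join(tempfile.mkdtemp(), "app.log")

logging.config.dictConfig({
    "version": 1,
    "disable_existing_loggers": False,
    "formatters": {
        "simple": {"format": "%(name)s - %(levelname)s - %(message)s"},
    },
    "handlers": {
        "file": {
            "class": "logging.FileHandler",
            "filename": log_path,
            "formatter": "simple",
        },
    },
    "root": {"level": "INFO", "handlers": ["file"]},
})

# In a real project each of these would live in its own module as
# logging.getLogger(__name__); the dotted names below are stand-ins.
orders_logger = logging.getLogger("shop.orders")
payments_logger = logging.getLogger("shop.payments")

orders_logger.info("Order 42 created")
payments_logger.warning("Payment retry scheduled")

logging.shutdown()   # flush and close handlers so the file can be read back
with open(log_path) as f:
    lines = f.read().splitlines()
print(lines)
```

Neither named logger has a handler of its own. Their records propagate up to the root logger, which is why a single configuration covers every module.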

Optimizing Logging Performance

While logging is necessary for monitoring and debugging, it can impact your application's performance if handled incorrectly. So, here are some strategies to improve log performance:

Use Appropriate Log Levels:

- Use DEBUG for detailed development logs, which can be turned off in production to reduce overhead. To disable DEBUG logging in production while keeping it enabled in development, you can configure the logging level dynamically based on the environment (can be managed through configuration settings).

- Reserve INFO for general tracking and ERROR for critical issues that require immediate attention.
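One way to switch levels per environment is to read the level name from an environment variable. The sketch below assumes a variable called LOG_LEVEL, which is a naming choice for this example and not something the logging module looks for on its own.

```python
import logging
import os

def level_from_env(default="INFO"):
    """Read the level name from LOG_LEVEL (a hypothetical variable name)."""
    name = os.environ.get("LOG_LEVEL", default).upper()
    level = logging.getLevelName(name)   # returns an int for known level names
    # Unknown names come back as a string, so fall back to INFO in that case.
    return level if isinstance(level, int) else logging.INFO

logger = logging.getLogger("env_level_demo")
logger.setLevel(level_from_env())
```

With this in place, running the application with LOG_LEVEL=DEBUG in development and leaving it unset in production changes verbosity without touching the code.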

Implement Lazy Logging:

- Lazy logging is a technique that delays the creation and formatting of log messages until it is certain that the message will be logged. This approach is particularly useful when log messages involve expensive computations or operations that might not always be necessary.

- Lazy logging reduces the overhead associated with logging by avoiding unnecessary computations for log messages. This is crucial in high-performance applications where even minor delays can accumulate.

- Expensive operations such as database queries or complex calculations are only executed if the corresponding log level is enabled. This prevents wasting resources on operations that might be irrelevant if the log level is set higher (e.g., INFO or WARNING instead of DEBUG).

Example:

# Example of lazy logging to avoid unnecessary computation
if logger.isEnabledFor(logging.DEBUG):
    logger.debug(f"Complex calculation result: {expensive_function()}")

- Explanation: The isEnabledFor(logging.DEBUG) check ensures that expensive_function() is only called if DEBUG logging is enabled. This prevents unnecessary computation when DEBUG logs are not required.
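An alternative to the explicit isEnabledFor() check is to rely on the fact that %-style arguments are only merged into the message when a record is actually handled. The sketch below wraps the expensive call in a small Lazy class, which is a hypothetical helper written for this example and not part of the logging module, and counts how often the function runs.

```python
import logging

calls = 0

def expensive_function():
    """Stand-in for a costly computation; counts how often it runs."""
    global calls
    calls += 1
    return sum(i * i for i in range(1000))

class Lazy:
    """Hypothetical helper: defers calling func until the record is formatted."""
    def __init__(self, func):
        self.func = func

    def __str__(self):
        return str(self.func())

logger = logging.getLogger("lazy_demo")
logger.setLevel(logging.INFO)              # DEBUG is disabled
logger.addHandler(logging.NullHandler())

# The record is dropped at the level check, so __str__ is never invoked
# and expensive_function() does not run.
logger.debug("Complex calculation result: %s", Lazy(expensive_function))
calls_after_lazy = calls

# By contrast, an f-string is evaluated before logger.debug() is even called.
logger.debug(f"Complex calculation result: {expensive_function()}")
calls_after_fstring = calls

print(calls_after_lazy, calls_after_fstring)
```

The counter stays at zero after the first call and increases only for the f-string version, which shows where the cost of eager formatting comes from.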

Consider Asynchronous Logging:

Asynchronous logging can help you reduce the performance impact of logging by handling log messages in a separate thread or process. This approach minimizes the delay caused by I/O operations, so logging does not interfere with the primary application flow.

It allows the main application to continue running smoothly by passing the log processing on to a background thread or process. By offloading logging tasks, your application can handle more operations per second, which is crucial for high-performance or high-throughput systems.

In the standard library, logging.handlers.QueueHandler and logging.handlers.QueueListener provide this pattern: the application thread only places records on a queue, and a listener thread performs the file I/O.

A related concern is several processes writing to the same log file. The third-party concurrent-log-handler package addresses this with ConcurrentRotatingFileHandler, which uses file locking so that multiple processes can safely write to and rotate a shared file. Note that, by default, this handler still writes synchronously. You can install it using the command: pip install concurrent-log-handler. Example:

import logging
from concurrent_log_handler import ConcurrentRotatingFileHandler

# Create a logger
logger = logging.getLogger('my_logger')
logger.setLevel(logging.DEBUG)

# Set up a rotating file handler that is safe to share between processes
handler = ConcurrentRotatingFileHandler('app.log', maxBytes=10485760, backupCount=5)
formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')
handler.setFormatter(formatter)

# Add the handler to the logger
logger.addHandler(handler)

# Example log entry
logger.info('This is an informational message')
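For logging that is offloaded to a background thread using only the standard library, QueueHandler and QueueListener can be combined as in the following sketch. The logger name, the temporary file path, and the unbounded queue size are assumptions made for the example.

```python
import logging
import logging.handlers
import os
import queue
import tempfile

log_path = os.path.join(tempfile.mkdtemp(), "app.log")

log_queue = queue.Queue(-1)                        # unbounded queue
queue_handler = logging.handlers.QueueHandler(log_queue)

file_handler = logging.FileHandler(log_path)
file_handler.setFormatter(
    logging.Formatter("%(name)s - %(levelname)s - %(message)s")
)

# The listener runs in a background thread and performs the slow file I/O.
listener = logging.handlers.QueueListener(log_queue, file_handler)
listener.start()

logger = logging.getLogger("queue_demo")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(queue_handler)                   # the caller only enqueues records

logger.info("Handled by the background listener")

listener.stop()                                    # processes queued records, then stops
file_handler.close()

with open(log_path) as f:
    contents = f.read()
print(contents, end="")
```

Calling listener.stop() at shutdown matters: it lets the background thread process whatever is still queued before the program exits.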

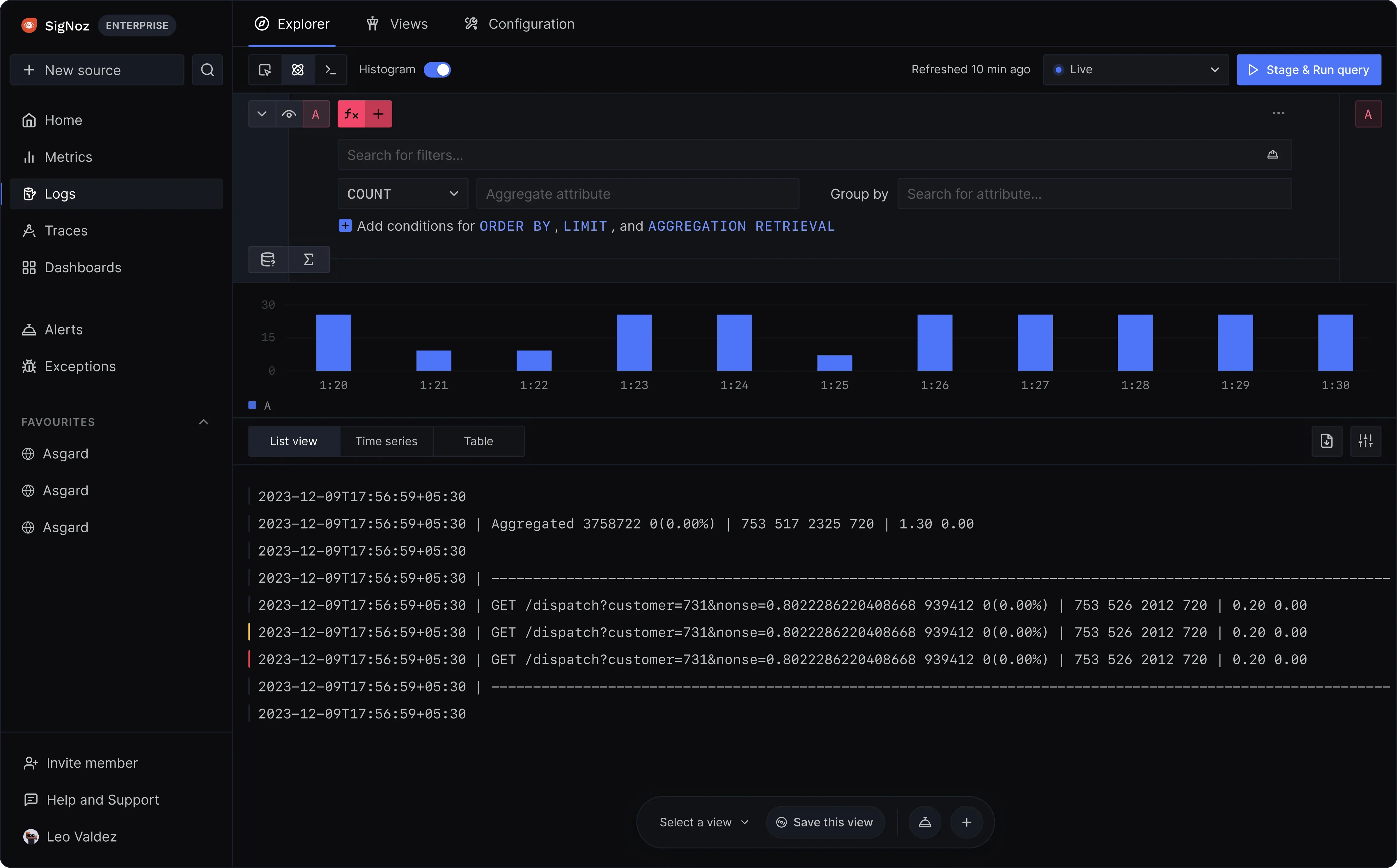

Enhancing Observability with SigNoz

Traditional file-based logging may not give sufficient visibility in modern, distributed applications. Here's where observability platforms like SigNoz come in. SigNoz is an open-source Application Performance Monitoring (APM) tool that improves application observability by combining logs, traces, and metrics.

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

For detailed implementation steps, refer to SigNoz's guide on logging in Python with OpenTelemetry. The guide provides specific instructions for integrating Python logging with SigNoz's observability platform using OpenTelemetry.

Here's how SigNoz can support your logging strategy:

- Centralized Log Management: SigNoz collects logs from many services and displays them on a centralized dashboard. This makes it easier to evaluate logs from various portions of your program without having to manually search through log files.

- Trace Correlation: SigNoz allows you to connect logs and distributed traces. This means you can track a given request as it moves through several services and correlate those traces with logs to get a complete picture of the request's lifecycle.

- Performance Metrics: In addition to logs, SigNoz gathers performance measures (such as CPU utilization and response times). By tracking these metrics alongside your logs, you can gain a more complete picture of your application's health and performance.

Best Practices for File-Based Logging in Python

You should adhere to certain best practices to guarantee that your file-based logging is effective, secure, and managed. These methods improve log quality and make debugging and monitoring your applications easier.

- Use appropriate log levels based on message severity (e.g., DEBUG, INFO, WARNING, ERROR, or CRITICAL). Properly categorizing log messages prevents you from missing key issues or becoming overwhelmed by less essential ones.

- Log messages with consistent structure are easier to read, search, and analyze. Including essential information in your log messages, such as user IDs, IP addresses, or transaction IDs, can significantly boost their utility.

Example:

logger.info("User %s logged in from IP %s", user_id, ip_address)

Passing variables as arguments with %s placeholders lets the logging module defer string formatting until the message is actually emitted.

- Large log files can consume disk space and become difficult to manage. Log rotation will automatically archive old log files and produce new ones, which helps to manage your log directory and prevents disk space difficulties.

- Logs frequently contain sensitive information, such as user data, authentication tokens, and system configurations. It is critical to encrypt your log files to prevent unauthorized access and to avoid logging sensitive information entirely.

You can use proper file permissions to restrict log file access.

Do not log sensitive data, such as passwords or personally identifiable information (PII). If necessary, mask or encrypt this information before logging.

Example:

import re

# Example of masking sensitive data: keep the first two characters of the address
masked_email = re.sub(r'(\w{2})\w+(@\w+)', r'\1***\2', user_email)
logger.info("User email: %s", masked_email)
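Masking at every call site is easy to forget, so one option is to apply it centrally with a logging.Filter attached to the handler. The sketch below is an illustration only: MaskEmailFilter and its regular expression are hypothetical, and the pattern is deliberately simple, so it will not cover every valid email format.

```python
import io
import logging
import re

# Simplified pattern: two leading word characters, the rest of the local part,
# then the domain. Real-world addresses may need a more careful expression.
EMAIL_PATTERN = re.compile(r"(\w{2})\w*(@[\w.-]+)")

class MaskEmailFilter(logging.Filter):
    """Hypothetical filter that masks email addresses in the final message."""

    def filter(self, record):
        message = record.getMessage()                  # merge msg and args first
        record.msg = EMAIL_PATTERN.sub(r"\1***\2", message)
        record.args = None                             # args are already merged
        return True                                    # keep the record

stream = io.StringIO()   # in-memory destination so the output can be inspected
handler = logging.StreamHandler(stream)
handler.addFilter(MaskEmailFilter())

logger = logging.getLogger("mask_demo")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)

logger.info("User email: %s", "johndoe@example.com")
output = stream.getvalue()
print(output, end="")
```

Because the filter sits on the handler, every record written through it is masked, including messages from code that never calls the masking function explicitly.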

Key Takeaways

- Python's logging module offers a reliable and adaptable mechanism for implementing file-based logging.

- Using advanced handlers such as RotatingFileHandler is critical for controlling log file growth. These handlers automatically archive old logs and keep the logging environment clean, balancing log retention with disk space utilization.

- Properly designed logging, particularly exception and variable logging, greatly improves debugging capabilities. Clear and consistent log messages make it easier to identify and handle problems.

- Centralized logging setups ensure that all modules within an application adhere to the same logging protocols.

- Logging does not need to slow down your application. By using speed optimization techniques like lazy logging and asynchronous handlers, you can ensure that logging operations do not interfere with the core application processes.

- Tools like SigNoz supplement file-based logging by offering a more comprehensive observability solution.

FAQs

How do I append logs to an existing file instead of overwriting it?

By default, the FileHandler in Python's logging module opens files in append mode ('a'), so new log entries are added to the end of an existing file. The same is true for basicConfig(), whose filemode parameter defaults to 'a'. If your logs are being overwritten, check that the handler was not created with mode='w'. To make the append behaviour explicit, pass mode='a' when creating a FileHandler:

handler = logging.FileHandler('app.log', mode='a')

This ensures that new log entries are added to the end of the file without deleting previous logs.
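The difference between the two modes can be checked with a short experiment. In the sketch below, write_once and line_count are hypothetical helpers written for this demonstration, and the log file lives in a temporary directory.

```python
import logging
import os
import tempfile

log_path = os.path.join(tempfile.mkdtemp(), "app.log")

def write_once(message, mode):
    """Open the log file with the given mode, write one record, and close it."""
    handler = logging.FileHandler(log_path, mode=mode)
    logger = logging.getLogger("mode_demo")
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.addHandler(handler)
    logger.info(message)
    logger.removeHandler(handler)
    handler.close()

def line_count():
    """Return the number of lines currently in the log file."""
    with open(log_path) as f:
        return len(f.read().splitlines())

write_once("first run", mode="a")
write_once("second run", mode="a")
lines_after_append = line_count()      # both records are kept

write_once("third run", mode="w")
lines_after_truncate = line_count()    # the file was truncated on open

print(lines_after_append, lines_after_truncate)
```

Opening with mode='w' discards the earlier entries as soon as the handler opens the file, which is useful for per-run logs but risky for long-lived ones.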

Can I log into multiple files simultaneously?

Yes, you can log to multiple files by creating multiple FileHandlers and adding them to your logger:

handler1 = logging.FileHandler('app.log')

handler2 = logging.FileHandler('errors.log')

logger.addHandler(handler1)

logger.addHandler(handler2)

Example:

import logging

# Create a logger

logger = logging.getLogger('my_logger')

logger.setLevel(logging.DEBUG)

# Create handlers for different log files

handler1 = logging.FileHandler('app.log')

handler2 = logging.FileHandler('errors.log')

# Add handlers to the logger

logger.addHandler(handler1)

logger.addHandler(handler2)

# Log messages

logger.debug('This is a debug message')

logger.error('This is an error message')

Sample Output (both in app.log and errors.log):

This is a debug message

This is an error message
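If errors.log should contain only errors, you can set a level on that handler so it ignores less severe records. The following is a minimal sketch of that variation; the logger name and the temporary directory are assumptions made for the demo.

```python
import logging
import os
import tempfile

log_dir = tempfile.mkdtemp()
app_path = os.path.join(log_dir, "app.log")
errors_path = os.path.join(log_dir, "errors.log")

logger = logging.getLogger("split_demo")
logger.setLevel(logging.DEBUG)
logger.propagate = False

all_handler = logging.FileHandler(app_path)        # receives everything
error_handler = logging.FileHandler(errors_path)
error_handler.setLevel(logging.ERROR)              # receives ERROR and CRITICAL only

logger.addHandler(all_handler)
logger.addHandler(error_handler)

logger.debug("This is a debug message")
logger.error("This is an error message")

all_handler.close()
error_handler.close()

with open(app_path) as f:
    app_lines = f.read().splitlines()
with open(errors_path) as f:
    error_lines = f.read().splitlines()
print(app_lines)
print(error_lines)
```

The logger's own level decides which records are created at all, while each handler's level decides which of those records it writes.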

What's the difference between DEBUG and INFO log levels?

The DEBUG log level is the most verbose and is used to store comprehensive debugging information, which is typically useful during development. The INFO level is less verbose and is used to track the application's overall progress or state in a production environment.

How can I format log messages with timestamps?

To format log messages to contain timestamps, use a formatter with a timestamp in the format string:

formatter = logging.Formatter('%(asctime)s - %(levelname)s - %(message)s')

handler.setFormatter(formatter)
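The timestamp layout itself can be changed with the datefmt argument, which accepts a strftime-style pattern. The sketch below builds a log record by hand so the formatter can be tried without any handler; the record's field values are placeholders chosen for the example.

```python
import logging

formatter = logging.Formatter(
    fmt="%(asctime)s - %(levelname)s - %(message)s",
    datefmt="%Y-%m-%d %H:%M:%S",  # strftime-style pattern; omits milliseconds
)

# Build a record by hand so the formatter can be tried without any handler.
record = logging.LogRecord(
    name="datefmt_demo",
    level=logging.INFO,
    pathname="demo.py",
    lineno=1,
    msg="Service started",
    args=None,
    exc_info=None,
)

formatted = formatter.format(record)
print(formatted)
```

Without datefmt, the default timestamp also includes milliseconds after a comma, as in the sample outputs earlier in this article.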

How can I rotate log files based on size or time?

To rotate log files based on size, use the RotatingFileHandler. For time-based rotation, use the TimedRotatingFileHandler:

from logging.handlers import RotatingFileHandler, TimedRotatingFileHandler

# Size-based rotation
handler = RotatingFileHandler('app.log', maxBytes=2000000, backupCount=5)

# Time-based rotation
handler = TimedRotatingFileHandler('app.log', when='midnight', interval=1)

What are some common pitfalls to avoid in file-based logging?

Common pitfalls include:

- Overuse of DEBUG logs in production. This might lead to performance difficulties and excessive log files.

- Logging sensitive information. Logs may include important information that must be protected.

- Forgetting to implement log rotation, which might result in excessively large log files that use up disk space.

- Hardcoding paths in FileHandler. Replace them with dynamic paths based on environment variables or configuration files.

Can I use Python logging with third-party monitoring tools?

Yes, Python's logging module is compatible with third-party monitoring systems such as SigNoz. This is usually accomplished by configuring custom handlers that deliver log data to these services. You can also install and self-host SigNoz yourself since it is open-source.

How do I log exceptions along with the stack trace?

To log exceptions together with the stack trace, use the exc_info=True parameter in the logger.error method. Inside an except block, logger.exception() is a shorthand that does the same thing.

try:
    result = 10 / 0
except Exception:
    logger.error("An error occurred", exc_info=True)