The Developer's Guide to Java Profiling [Tools & Best Practices Included]

Java applications can slow down for many reasons, such as memory leaks, inefficient algorithms, database bottlenecks, or thread contention. Often, these performance issues remain hidden until real production traffic hits and users begin to complain about slowness. Even experienced developers might spend days diagnosing the root cause of a slowdown without a systematic approach.

Profiling provides a solution. Instead of guessing where a problem lies, profiling reveals exactly what is happening inside the running application. This guide focuses on practical aspects of Java profiling: which tools are effective, how to interpret profiling data without getting overwhelmed, and what fixes make a real difference in production environments.

What is Java Application Profiling?

Java application profiling is the process of measuring and analyzing a program's behavior and performance as it runs. In simpler terms, profiling tells you what your application actually does at runtime (and where it spends time or uses memory), rather than what the code appears to do.

Profiling provides insights that can lead to improved responsiveness, reduced resource usage, and a better user experience. It is especially useful for identifying performance bottlenecks in large-scale applications, pinpointing memory leaks, analyzing thread contention issues, and understanding I/O bottlenecks.

How Profiling Differs from Monitoring and Tracing

It's important to distinguish profiling from infrastructure monitoring and distributed tracing:

Infrastructure monitoring might tell you that your server's CPU usage is at 90%. Profiling goes deeper and tells you which specific Java method or operation is consuming that CPU time.

Distributed tracing might show that a certain request took 5 seconds as it traveled through various microservices. Profiling can reveal why it took 5 seconds by pinpointing the exact lines of code or database calls that consumed most of the time.

In essence, monitoring and tracing expose high-level symptoms at the system or service level, whereas profiling drills down to the code-level root causes.

Common Performance Problems

Performance issues in Java applications tend to fall into a few common categories:

CPU bottlenecks: One or a few methods consume an inordinate amount of processing time. Examples include a nested loop that inadvertently grows into O(n²) or O(n³) complexity, or heavy computation that isn't optimized.

Memory issues: These manifest as high memory usage or OutOfMemoryError crashes. Causes include objects accumulating faster than garbage collection can reclaim them, static collections that grow indefinitely (memory leaks), or improper heap usage causing frequent garbage collection pauses.

Concurrency problems: Issues in multi-threaded code can degrade performance significantly. Common problems are threads waiting on locks (leading to thread contention), deadlocks, or thread pools misconfigured for the workload.

I/O delays: Input/Output operations (database queries, file reads/writes, network calls) are much slower than in-memory operations. Performance problems arise from things like database queries that are not optimized (missing indexes or using N+1 query patterns), or performing blocking network calls in a tight loop.

Remember that performance bugs are as critical as functional bugs—they may not throw exceptions, but they hurt the user experience and, in the worst cases, can bring down systems. Performance issues often take longer to surface (usually under high load or large data volumes) and can be harder to reproduce, which is why a proactive approach with profiling is important.

Essential Java Profiling Tools for 2025

There are many Java profiling tools available, but a handful of tried-and-true tools cover most needs. This section outlines the most essential tools and when to use them.

Built-in JVM Tools

Before exploring external profilers, it’s wise to start with the tools that come bundled with the JDK. They cost nothing extra and are readily available in any Java environment:

Java Flight Recorder (JFR) – Now included for free in OpenJDK (since Java 11). It runs with very low overhead (often less than 2%) even in production. JFR can record CPU samples, memory allocations, garbage collection events, thread states, and more.

For example, you can start a recording when the application launches, or attach to a JVM that is already running:

    # Record the first 60 seconds after the application starts
    java -XX:StartFlightRecording=duration=60s,filename=myapp.jfr MyApplication

    # Alternatively, attach to an already running process by PID
    jcmd <pid> JFR.start duration=60s filename=recording.jfr

On JDK 11 and later, the separate -XX:+FlightRecorder flag is not needed to enable JFR.

JDK command-line tools – The JDK provides several command-line utilities that can be invaluable for quick profiling and troubleshooting:

    jconsole                   # GUI to monitor JVM performance
    jmap -histo <pid>          # Prints heap object usage histogram
    jstack <pid>               # Dumps all thread stacks (find deadlocks)
    jcmd <pid> GC.heap_info    # Heap usage summary

These tools work anywhere you have a JVM running and require no setup. For instance, if you suspect a memory leak, jmap -histo can immediately show which object classes are occupying the most heap space. If your application seems hung, jstack can show if threads are stuck waiting on locks. Because they run on demand, you can use them in production as a first line of investigation with low risk, keeping in mind that commands such as jmap -histo briefly pause the application, which is noticeable on large heaps.

Open-Source Profilers

There are several open-source profilers available that provide more advanced features or nicer interfaces on top of what the JDK offers:

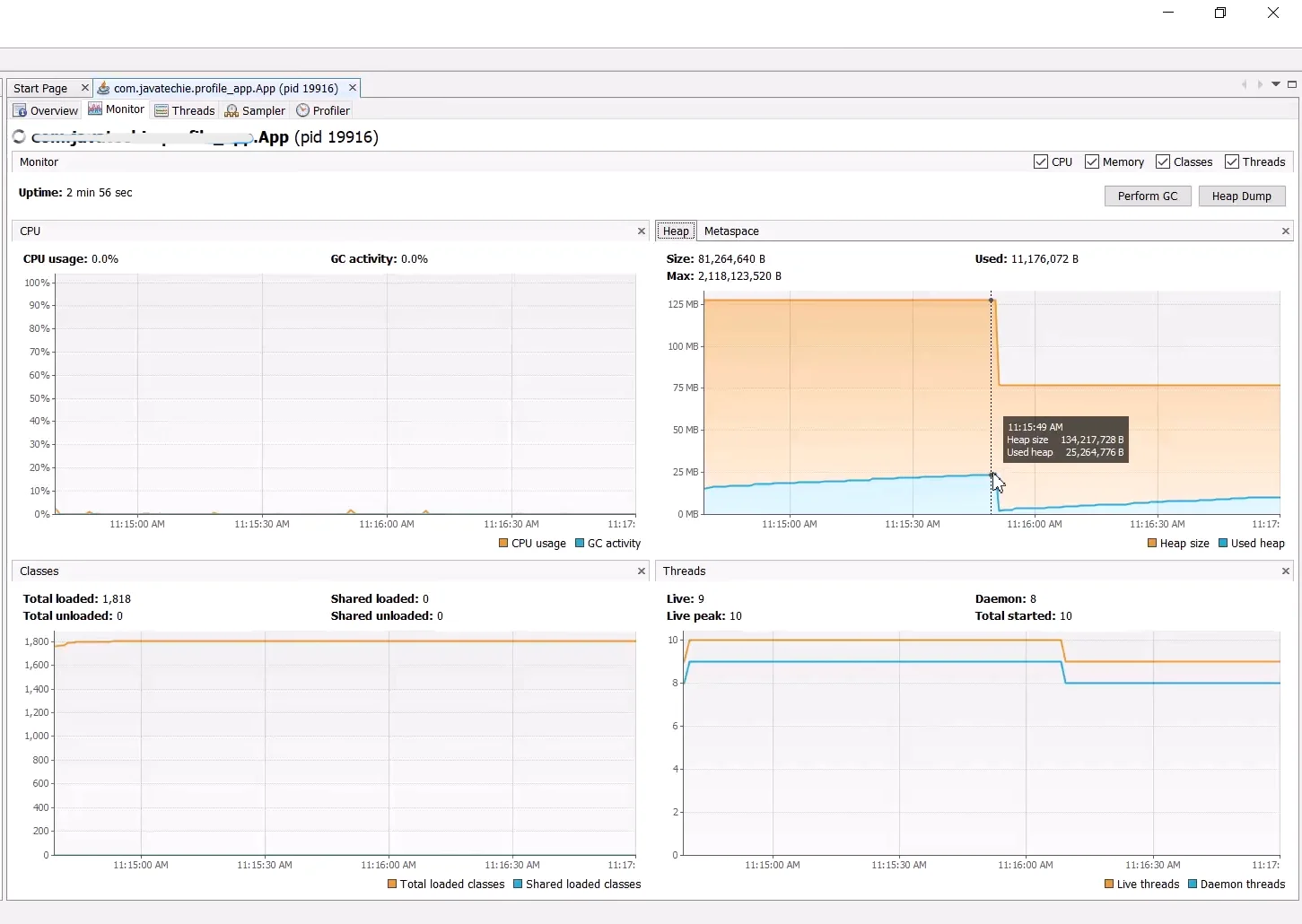

VisualVM – Free profiling tool with an easy GUI for profiling local applications. Connect to a running JVM, hit "Profile", and see CPU usage, memory usage, thread activity, and more. Excellent for development and testing environments.

Async Profiler – Low-overhead Java profiler specializing in CPU and memory profiling using sampling. Often used to generate flame graphs for CPU usage.

    ./profiler.sh -e cpu -d 30 -f flamegraph.html <pid>

This runs a 30-second CPU profile and outputs an HTML flame graph. (In async-profiler 3.x releases, the launcher is named asprof instead of profiler.sh.) Its minimal overhead makes it a good fit for production.

Eclipse Memory Analyzer (MAT) – Tool for analyzing heap dumps. When you suspect a memory leak, take a heap dump and open it in MAT. It has a "Leak Suspects" report that automatically points out likely memory leaks.

Commercial Profilers

There are also commercial profiling tools which come with additional features, integration, and support. Two of the most popular ones are:

- JProfiler and YourKit – Comprehensive profilers with intuitive UIs, CPU call graphs, memory allocation hotspots, and IDE integration. Teams that regularly diagnose performance issues often find the cost justified by time saved. However, free tools are usually sufficient for occasional profiling.

The Four Pillars of Java Application Profiling

When profiling a Java application, it helps to break down the task into four main aspects (pillars), each targeting a specific type of performance issue. These are CPU profiling, Memory profiling, Thread profiling, and I/O profiling. We’ll discuss each in detail, including what to look for and how to address issues uncovered.

1. CPU Profiling: Finding Processing Bottlenecks

CPU profiling focuses on which methods or operations consume the most CPU time. This is the first place to investigate when the application is slow or CPU usage is high.

Reading CPU Profile Results

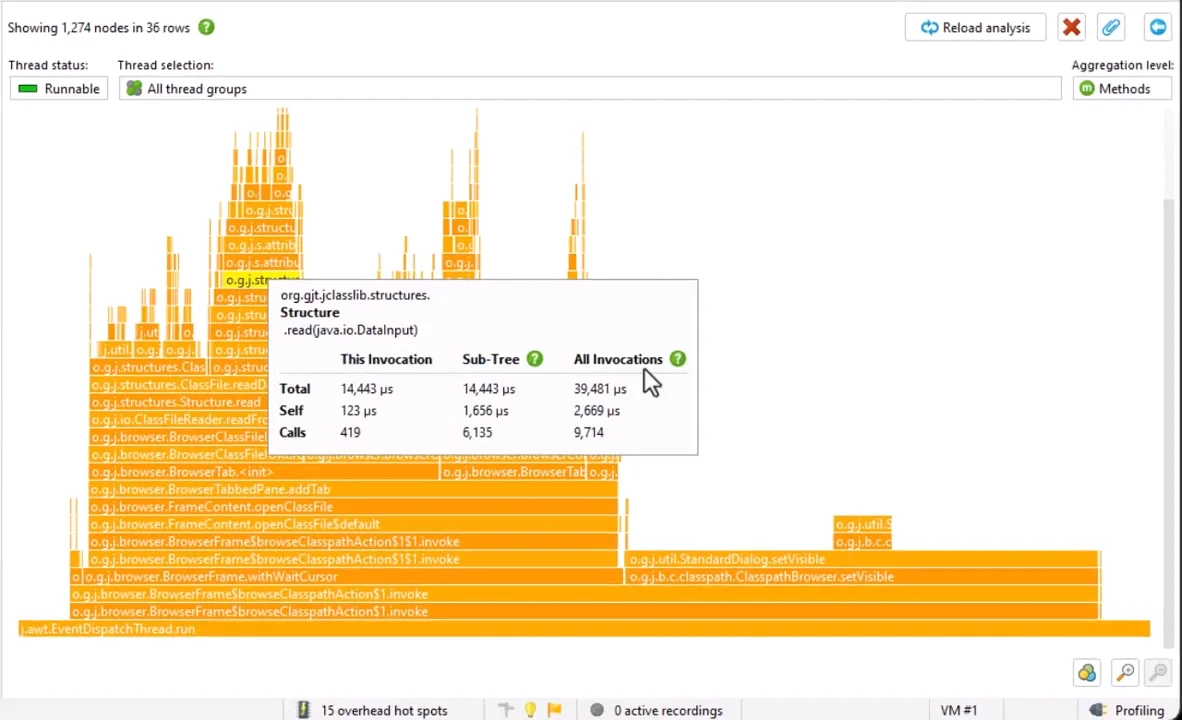

The most intuitive output is the flame graph. It visualizes the call stack vertically and time spent in each method horizontally. Wide blocks indicate hotspots consuming large fractions of CPU time.

When interpreting a flame graph or profile report, look for:

Wide blocks or high percentages: These indicate hotspots that consume a large fraction of CPU time. If one function is, say, 40% of the total execution time, that’s an area to investigate for potential optimization.

Repeated patterns: If you see the same sequence of calls repeated many times in the profile, it might indicate an unnecessary repetition in the code (for example, doing an expensive operation inside a loop instead of once).

Deep call stacks: A very deep stack in a profile might suggest that a lot of layers (or recursion) are involved in a computation. Sometimes simplifying the call stack (inlining logic or reducing recursion) can help performance.

Methods that shouldn’t be hot: Sometimes profiling reveals a surprise – a method that you thought was trivial is actually consuming a lot of time. This often leads to discovering a bug or an inefficiency (e.g., a library method being misused in a way that makes it expensive).

CPU Profiling Fixes

Once a CPU hotspot is identified, how do we go about fixing it? The approach depends on what the hotspot is:

Optimize the algorithm: If profiling shows a method is slow because it's doing too much work, see if the logic can be improved. For example, replace nested loops with a more efficient algorithm, or use a data structure that provides faster lookups. A classic case is replacing an O(n²) nested search with HashSet or HashMap lookups, which brings the total work down to roughly O(n).

    // Inefficient O(n * m) complexity:
    for (Order order : orders) {
        for (Product product : allProducts) {
            if (order.containsProduct(product.getId())) {
                process(order, product);
            }
        }
    }

    // Optimized using HashMap for O(1) lookups:
    Map<String, Product> productById = allProducts.stream()
        .collect(Collectors.toMap(Product::getId, p -> p));

    for (Order order : orders) {
        for (String productId : order.getProductIds()) {
            Product product = productById.get(productId);
            if (product != null) {
                process(order, product);
            }
        }
    }

Cache results: Profiling might show a method that calculates something expensive is being called repeatedly with the same inputs. In such cases, caching can help. By storing the result of an expensive computation the first time and reusing it on subsequent calls, you trade memory for CPU time.
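As a rough illustration of the caching idea, the sketch below memoizes an expensive lookup with ConcurrentHashMap.computeIfAbsent. The class PriceCalculator and its computePrice method are hypothetical names invented for this example, and the "expensive" work is only a stand-in.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical example: PriceCalculator and computePrice are illustrative names.
class PriceCalculator {
    private final Map<String, Long> cache = new ConcurrentHashMap<>();
    final AtomicInteger expensiveCalls = new AtomicInteger();

    // Stand-in for an expensive, deterministic computation.
    private long computePrice(String productId) {
        expensiveCalls.incrementAndGet();
        long result = 0;
        for (char c : productId.toCharArray()) {
            result = result * 31 + c;
        }
        return result;
    }

    // Computes the value once per key; later calls reuse the cached result.
    long priceFor(String productId) {
        return cache.computeIfAbsent(productId, this::computePrice);
    }

    public static void main(String[] args) {
        PriceCalculator calc = new PriceCalculator();
        for (int i = 0; i < 1_000; i++) {
            calc.priceFor("sku-42");
        }
        System.out.println("Expensive calls: " + calc.expensiveCalls.get()); // prints 1
    }
}
```

Note that this cache is unbounded. For a large or open-ended key space it should be given a size limit or expiry, otherwise the cache itself can turn into a memory leak.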

Leverage JIT optimizations: The Java Just-In-Time (JIT) compiler will optimize hot code at runtime. One nuance in profiling is that if you profile too early, you might catch methods before the JIT optimizes them. A best practice is to let the application “warm up” (execute for some time) before profiling, so that the hottest code paths are JIT-compiled.

2. Memory Profiling: Optimizing Heap Usage

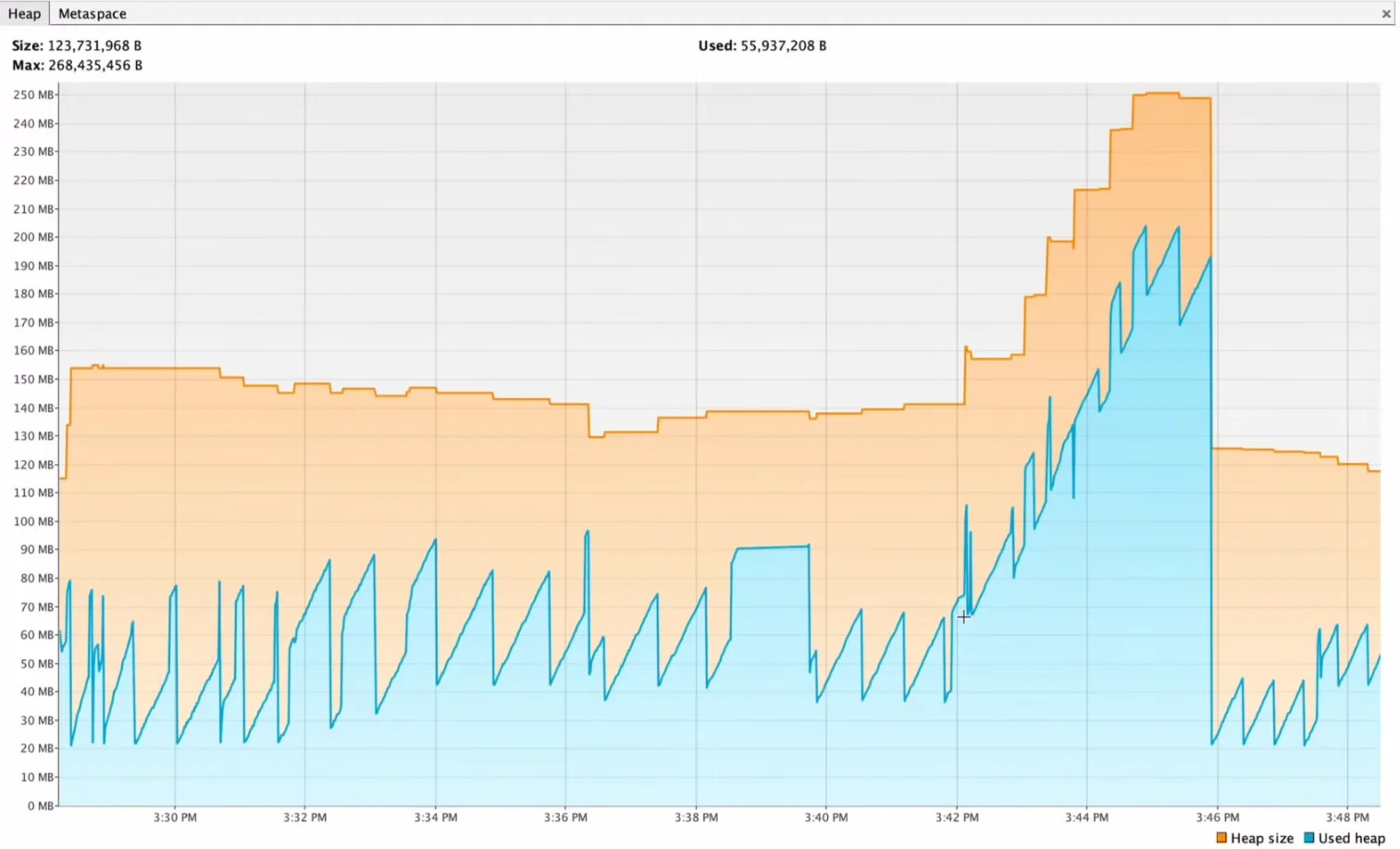

Memory profiling deals with how your application uses the heap (the memory allocated for objects). The goal is to identify memory leaks, reduce unnecessary allocations, and ensure garbage collection is efficient. Memory issues typically show up as either an OutOfMemoryError, or as gradually worsening performance due to frequent garbage collection (if the heap is constantly filling up).

Finding Memory Leaks

A memory leak in Java doesn’t mean the same as in C (where memory is lost and cannot be freed due to missing free() calls). In Java, a “leak” usually means you still have references to objects that you don’t need, so the garbage collector can’t reclaim that memory. Over time, these unneeded objects pile up.

To catch leaks:

- Enable heap dump on OOM: -XX:+HeapDumpOnOutOfMemoryError

- Trigger heap dumps manually: jcmd <pid> GC.heap_dump filename.hprof

- Use Eclipse MAT's "Leak Suspects" report

- Look for collections that keep growing

Once you identify a memory leak, the fix usually involves removing references to objects that should be garbage-collected.
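One common form of this fix is to put a bound on a cache or collection that used to grow forever. The following is a minimal sketch, assuming an LRU eviction policy is acceptable for the data; BoundedCache is an illustrative name, and the class is not thread-safe as written.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// A minimal LRU cache sketch; BoundedCache is an illustrative name.
// Not thread-safe: wrap with Collections.synchronizedMap if shared across threads.
class BoundedCache<K, V> extends LinkedHashMap<K, V> {
    private final int maxEntries;

    BoundedCache(int maxEntries) {
        super(16, 0.75f, true); // accessOrder = true gives least-recently-used ordering
        this.maxEntries = maxEntries;
    }

    // Called by LinkedHashMap after each insertion; returning true evicts the eldest entry.
    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > maxEntries;
    }

    public static void main(String[] args) {
        Map<Integer, byte[]> cache = new BoundedCache<>(100);
        for (int i = 0; i < 10_000; i++) {
            cache.put(i, new byte[1024]);
        }
        System.out.println("Entries retained: " + cache.size()); // prints 100
    }
}
```

With an unbounded HashMap the same loop would keep all 10,000 arrays reachable. Here the evicted entries lose their last reference and become eligible for garbage collection.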

Reducing Garbage Creation

Even when you don’t leak memory, creating a lot of objects can hurt performance because the JVM has to allocate and later garbage-collect those objects. Memory profiling can show the allocation rate (how quickly your program is creating new objects). If the allocation rate is extremely high, it means the GC will be working hard to collect short-lived objects.

Ways to reduce allocations:

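- Reuse objects: Consider object pooling for objects that are expensive to create (connections, large buffers). Pooling cheap, short-lived objects rarely pays off on modern JVMs.

- Avoid object creation in loops: Move creation outside when possible

- Use primitive types: Prefer primitive arrays such as int[] over List<Integer>, which boxes every element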

Here’s a simple example of avoiding unnecessary string garbage:

    // Creating unnecessary garbage:
    for (Item item : items) {
        log.debug("Processing item " + item.getId() + " for user " + user.getName());
    }

    // Better: use placeholders
    for (Item item : items) {
        log.debug("Processing item {} for user {}", item.getId(), user.getName());
    }

In the above, many short-lived String objects would be created in the first version (and then immediately discarded if debug logging is off). The second version avoids that by deferring string construction until it’s certain the message will be used (the logging framework handles it).

3. Thread Profiling: Resolving Concurrency Issues

Thread profiling examines how an application uses threads – crucial for understanding performance in a concurrent environment. If your application is multi-threaded (which most server applications are, by virtue of handling multiple requests, using thread pools, etc.), you need to ensure threads are running efficiently and not stepping on each other’s toes.

Symptoms of issues include:

- Application freezes under load (deadlocks or contention)

- Low CPU but low throughput (threads idle or waiting)

Identifying Issues

When suspecting a thread issue, the first step is to take a thread dump (using jstack or VisualVM’s thread viewer). A thread dump will list all threads, their states, and the locks they hold or are waiting for. Key things to look for:

- BLOCKED threads: Waiting to acquire locks

- WAITING threads: Waiting on conditions

- Deadlock detection: Thread dumps explicitly highlight deadlocks
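The same information can be read from inside the JVM through the standard java.lang.management API. The sketch below tallies threads by state and checks for deadlocks; ThreadSnapshot is an illustrative class name, and the output depends on whatever threads happen to be running.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;
import java.util.EnumMap;
import java.util.Map;

// Illustrative helper; mirrors what you would tally by hand from a thread dump.
class ThreadSnapshot {
    // Counts live threads by state (RUNNABLE, BLOCKED, WAITING, ...).
    static Map<Thread.State, Integer> countByState() {
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        Map<Thread.State, Integer> counts = new EnumMap<>(Thread.State.class);
        for (ThreadInfo info : threads.dumpAllThreads(false, false)) {
            if (info != null) {
                counts.merge(info.getThreadState(), 1, Integer::sum);
            }
        }
        return counts;
    }

    // Returns how many threads are currently part of a deadlock (0 if none).
    static int deadlockedThreadCount() {
        long[] ids = ManagementFactory.getThreadMXBean().findDeadlockedThreads();
        return ids == null ? 0 : ids.length;
    }

    public static void main(String[] args) {
        System.out.println("Threads by state: " + countByState());
        System.out.println("Deadlocked threads: " + deadlockedThreadCount());
    }
}
```

A steadily growing BLOCKED count across several snapshots is a stronger contention signal than any single dump.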

Resolving Contention

Reduce lock scope: Minimize work inside synchronized blocks:

    // Improved: limit lock scope
    public void updateStats(Stats s) {
        Result temp = heavyComputation(s);   // expensive work, outside the lock
        synchronized (this) {
            globalStats.merge(temp);         // quick operation under the lock
        }
    }

By reducing what's inside the synchronized block, other threads don't have to wait as long.

Use finer-grained locks or lock-free structures: Sometimes a single lock is protecting too much (e.g., a global lock for all data). Using multiple locks for independent parts of data can allow more concurrency. Or use concurrent data structures from java.util.concurrent which handle synchronization internally and more efficiently (like ConcurrentHashMap, ConcurrentLinkedQueue, etc., which avoid locking the whole structure for many operations by using internal locks or non-blocking algorithms).
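As a small sketch of that idea, the counter below replaces a single synchronized map with a ConcurrentHashMap of LongAdder values, so threads updating different keys (or even the same key) rarely block each other. HitCounter and the "/checkout" key are illustrative names.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.LongAdder;

// Illustrative per-endpoint hit counter without a global lock.
class HitCounter {
    private final Map<String, LongAdder> hits = new ConcurrentHashMap<>();

    // computeIfAbsent creates the adder once; increment() is lock-free.
    void record(String endpoint) {
        hits.computeIfAbsent(endpoint, k -> new LongAdder()).increment();
    }

    long count(String endpoint) {
        LongAdder adder = hits.get(endpoint);
        return adder == null ? 0 : adder.sum();
    }

    // Runs `threads` workers that each record `perThread` hits, then returns the total.
    static long runDemo(int threads, int perThread) {
        HitCounter counter = new HitCounter();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            pool.submit(() -> {
                for (int i = 0; i < perThread; i++) {
                    counter.record("/checkout");
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(30, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return counter.count("/checkout");
    }

    public static void main(String[] args) {
        System.out.println("Total hits: " + runDemo(4, 10_000)); // prints 40000
    }
}
```

Whether this beats a plain synchronized block depends on the workload, so it is worth confirming with a before-and-after profile.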

Thread pool tuning: Thread pools are a common way to manage concurrency. If the pool is too small, tasks queue up waiting for a free thread while CPU cores sit idle. If it is too large, threads compete for the same cores and you pay for extra context switching and memory. A useful starting point is about one thread per CPU core for CPU-bound work, and more for I/O-bound work, where threads spend most of their time waiting:

- CPU-bound tasks: threads ≈ CPU cores

- I/O-bound tasks: threads ≈ cores × (1 + wait time/compute time)
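The sizing rule above can be turned into a small helper. This is only a sketch: PoolSizing is an illustrative name, and the wait and compute times are assumed to come from your own profiling data rather than from any API.

```java
// Illustrative helper for the sizing heuristic: threads ≈ cores × (1 + wait / compute).
class PoolSizing {
    static int ioBoundThreads(int cores, double waitMillis, double computeMillis) {
        if (cores <= 0 || waitMillis < 0 || computeMillis <= 0) {
            throw new IllegalArgumentException("cores and computeMillis must be positive, waitMillis non-negative");
        }
        return (int) Math.round(cores * (1 + waitMillis / computeMillis));
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        // Assumed measurements: each task waits 90 ms on I/O and computes for 10 ms.
        System.out.println("Cores: " + cores);
        System.out.println("Suggested I/O-bound pool size: " + ioBoundThreads(cores, 90, 10));
    }
}
```

For example, with 8 cores, 90 ms of waiting, and 10 ms of computation per task, the formula gives 8 × (1 + 9) = 80 threads. Treat the result as a starting point to validate under load, not a guarantee.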

4. I/O Profiling: Optimizing External Operations

I/O operations are often the slowest part of a Java application's execution profile, simply because reading from disk or the network is orders of magnitude slower than CPU or memory access. Profiling I/O means looking at how your application interacts with databases, external services, and the file system, and finding inefficiencies.

Signs of I/O issues include:

- High response times even when CPU is mostly idle (the app is waiting on I/O).

- Database CPU is high or queries are slow (could indicate inefficient SQL).

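- Many threads stuck in network or database calls. In thread dumps, a thread blocked in a socket read is often reported as RUNNABLE with a socket read frame at the top of its stack, while threads queued for a pooled connection show up as WAITING or TIMED_WAITING.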

- Large spikes in latency correlated with certain operations (maybe when a big file is read or written).

Database Performance

For applications using a database, the database is a common source of latency:

- Enable query logging to find slow queries (in dev or with caution in production). Tools like p6spy or datasource-proxy can log all SQL statements executed and how long they took. By reviewing the logs, you might find one particular query that is called far too often or takes too long.

- Look for N+1 query patterns (common in ORMs)

- Check connection pool sizing
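To make the N+1 pattern concrete, here is a sketch that counts round trips against an in-memory stand-in for a database. FakeCustomerDb and its methods are hypothetical and exist only for this illustration; in a real application the same comparison would come from query logs.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory stand-in for a database; each method call counts as one query.
class FakeCustomerDb {
    private final Map<Integer, String> names = new HashMap<>();
    int queryCount = 0;

    FakeCustomerDb(int customers) {
        for (int id = 1; id <= customers; id++) {
            names.put(id, "customer-" + id);
        }
    }

    // Models: SELECT id FROM customer
    List<Integer> findAllIds() {
        queryCount++;
        return new ArrayList<>(names.keySet());
    }

    // Models: SELECT name FROM customer WHERE id = ?
    String findName(int id) {
        queryCount++;
        return names.get(id);
    }

    // Models: SELECT id, name FROM customer WHERE id IN (...)
    Map<Integer, String> findNames(List<Integer> ids) {
        queryCount++;
        Map<Integer, String> result = new HashMap<>();
        for (int id : ids) {
            result.put(id, names.get(id));
        }
        return result;
    }
}

class NPlusOneDemo {
    // N+1 pattern: one query for the ids, then one query per id.
    static int queriesPerRow(int customers) {
        FakeCustomerDb db = new FakeCustomerDb(customers);
        for (int id : db.findAllIds()) {
            db.findName(id);
        }
        return db.queryCount;
    }

    // Batched: one query for the ids, one query for all names.
    static int queriesBatched(int customers) {
        FakeCustomerDb db = new FakeCustomerDb(customers);
        db.findNames(db.findAllIds());
        return db.queryCount;
    }

    public static void main(String[] args) {
        System.out.println("N+1 queries:     " + queriesPerRow(100));  // prints 101
        System.out.println("Batched queries: " + queriesBatched(100)); // prints 2
    }
}
```

With an ORM, the batched version usually corresponds to a join fetch or a batch-size setting rather than hand-written SQL.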

Network and File I/O

If your application calls external HTTP services (REST APIs, microservice calls, etc.), profiling might show those calls as hotspots:

- Use timeouts on network calls. A very common mistake is not setting a timeout, meaning if the remote service hangs, your thread could hang indefinitely.

- Use asynchronous or non-blocking I/O if appropriate (for example, using CompletableFuture with an async HTTP client) so that waiting on a slow network call doesn’t block an OS thread that could do other work.

- Just as with the database, look for repetitive patterns. Are you calling the same service repeatedly for data you could cache? Are you making calls in series that could be made in parallel?
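The timeout and async points above might look like this with the JDK's built-in java.net.http client (Java 11+). This is a sketch under assumptions: TimeoutExample is an illustrative name, the 2 and 5 second values are arbitrary, and the URL in main is a placeholder that is never actually called.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.util.concurrent.CompletableFuture;

// Illustrative configuration; timeout values are assumptions, not recommendations.
class TimeoutExample {
    // Fail fast if the connection cannot be established.
    static HttpClient newClient() {
        return HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(2))
                .build();
    }

    // Bound the total time spent waiting for a response to this request.
    static HttpRequest newRequest(String url) {
        return HttpRequest.newBuilder(URI.create(url))
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
    }

    // Non-blocking call: the caller's thread is free while the request is in flight.
    static CompletableFuture<String> fetchAsync(HttpClient client, HttpRequest request) {
        return client.sendAsync(request, HttpResponse.BodyHandlers.ofString())
                .thenApply(HttpResponse::body);
    }

    public static void main(String[] args) {
        HttpClient client = newClient();
        HttpRequest request = newRequest("https://example.com/api/items"); // placeholder URL
        System.out.println("Connect timeout: " + client.connectTimeout());
        System.out.println("Request timeout: " + request.timeout());
        // fetchAsync(client, request) would send the request without blocking this thread.
    }
}
```

Several fetchAsync calls can be combined with CompletableFuture.allOf to run independent requests in parallel instead of in series.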

Modern Profiling Challenges (Cloud & Microservices)

Java applications today often run in containers (Docker/Kubernetes) and are part of microservice ecosystems. This adds some complexity to profiling, but there are tools and practices to handle it.

Microservices and Distributed Tracing

In microservices, traditional profiling in one JVM won't tell the whole story. Use distributed tracing (OpenTelemetry, Jaeger, Zipkin) to trace requests end-to-end across services. This helps identify which service is the bottleneck before drilling down with profiling.

For example, a trace might reveal that a request spends 50 ms in Service A, 20 ms in Service B, and 500 ms in Service C. That immediately tells you Service C is the problem. Then you can profile Service C specifically to see why it’s slow (maybe a database call inside C).

Without tracing, you might profile Service A (where the issue was noticed) and find nothing, not realizing Service A was waiting on Service C.

Container Considerations

When running Java in containers:

- The JVM needs to know it's in a container with limited resources. Modern JVM versions (Java 10+, and Java 8 from update 191) detect cgroup limits automatically. It is still worth sizing the heap explicitly with options like -XX:MaxRAMPercentage, which sets the maximum heap as a percentage of the container's memory limit, so that heap plus non-heap memory (metaspace, thread stacks, direct buffers) stays under that limit.

- Always use resource requests/limits in Kubernetes that match your profiling configuration. If you profile an app with 4 CPU cores and 8 GB RAM on your machine but deploy it with a 1 CPU, 1 GB limit, the performance characteristics will be very different.

- Be aware of port/file sharing requirements for profiling tools
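A quick way to confirm what the JVM actually sees inside a container is to print the values it derived from the environment. The sketch below uses only java.lang.Runtime; JvmResources is an illustrative name.

```java
// Prints the CPU count and maximum heap the JVM has detected.
class JvmResources {
    static int cpus() {
        return Runtime.getRuntime().availableProcessors();
    }

    static long maxHeapMiB() {
        return Runtime.getRuntime().maxMemory() / (1024 * 1024);
    }

    public static void main(String[] args) {
        System.out.println("Available processors: " + cpus());
        System.out.println("Max heap (MiB):       " + maxHeapMiB());
    }
}
```

Running this once on your workstation and once inside the deployed container makes mismatches between the profiling environment and production easy to spot.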

Continuous Profiling in Production

A newer trend is continuous profiling, which means always running a lightweight profiler in production and collecting data over time. Services like Google Cloud Profiler, or open-source tools like Uber's JVM Profiler or Async Profiler in continuous mode, allow you to capture profiles (e.g., CPU usage) continuously and then examine them historically.

The advantage is you can catch issues that only happen at certain times (e.g., a CPU spike at midnight) because you have a record of profiling data from that period. It also lets you observe trends, like certain methods creeping up in CPU usage after a new release, indicating a performance regression.

For example, you might run Java Flight Recorder in a continuous mode:

java -XX:StartFlightRecording=maxage=1h,settings=profile.jfc,name=ContinuousRecording,dumponexit=true -jar MyApp.jar

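This keeps a rolling window of the last hour of profiling data. If something goes wrong, you can dump the recording to analyze it, for example with jcmd <pid> JFR.dump name=ContinuousRecording filename=incident.jfr (the file name here is arbitrary). To minimize overhead, continuous profiling typically uses longer sampling intervals and records only high-level data; JFR's default settings are lighter than the profile settings used above. If a specific alert triggers (say latency goes above X), you might temporarily increase the profiling detail.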

Best Practices for Effective Profiling

To wrap up, here are some best practices to integrate profiling into your development and maintenance process:

Profile Early in the Development Cycle

Don't wait until your application is in production and users report issues to think about performance. It's much easier to catch and fix performance problems during development or testing:

Include a basic performance test as part of your CI pipeline. It could be as simple as starting the application, hitting a few key endpoints with a tool like Apache Bench or JMeter, and collecting a CPU/memory profile for 30 seconds. This can catch obvious regressions (e.g., a new change causing CPU to double for a specific action).

When developing a new feature, consider writing a benchmark test for its core algorithm using JMH (Java Microbenchmark Harness) if it's computationally heavy. This way you can verify the algorithm's efficiency and compare different implementation approaches objectively.

Run VisualVM or JProfiler on your app locally every so often, even if nothing seems wrong, just to see if there are any unexpected hot spots or growing memory usage. You might discover a minor issue (like an inefficient loop) that isn't yet a problem but could worsen at scale.

Set Realistic Performance Goals

Have concrete, realistic goals for performance, based on requirements or user expectations:

For example: "P99 (99th percentile) response time for checkout should be < 500 ms under 1000 concurrent users" or "The service should handle 100 requests per second with < 70% CPU usage on 4 cores."

These goals help determine if you need to profile and optimize further. If your current performance is far from the goal, you know you have work to do. Conversely, if you're already meeting targets with room to spare, you might focus optimization efforts elsewhere.

Having goals helps in making trade-off decisions. You might not need to hyper-optimize a piece of code if it already meets the goal thresholds, allowing you to prioritize other features or improvements.

Make Performance Visible

Integrate performance monitoring into your application dashboards so that you notice issues quickly:

Track key metrics like request latency (p50, p95, p99), throughput, error rates, as well as resource metrics like CPU, memory, and GC pause times. Tools like SigNoz (open-source), Prometheus/Grafana, or commercial APMs can help here.

Set up alerts for abnormal conditions (e.g., CPU stays above 90% for 5 minutes, or error rate > 1%). When an alert fires, it's an indicator to possibly profile to see what's happening.

Share performance findings with your team. If you fixed a performance issue, explain what it was (e.g., "We found a bottleneck in the OrderService where it was making N+1 DB calls. We batched those calls and brought the response time down from 3s to 300ms."). This builds a performance-aware culture and helps others learn from that experience.

Know Your Tools (Before a Crisis)

Become familiar with the profiling tools before you have an emergency:

Practice taking thread dumps and heap dumps on a test application so you know the commands by heart and can interpret the output confidently.

Try out JFR and open the recording in Mission Control to see what kind of data you get and how to navigate the interface effectively.

Generate a flame graph using Async Profiler on a simple app, just so you know end-to-end how to do it when needed and can quickly spot patterns in the visualization.

If using an APM like SigNoz, explore the dashboards and tracing so you know where to look when something is off and how to correlate different types of data.

This way, when an issue arises at 3 AM, you're not trying to learn a new tool under pressure – you already have an idea of what to do and can focus on solving the problem rather than fighting with unfamiliar interfaces.

Use the Right Tool for the Problem

There's no one-size-fits-all profiler. Use a combination of tools depending on the situation:

If an issue is reported in production but hard to reproduce, start with lightweight tools (JFR or Async Profiler) that you can safely run in production without significantly impacting performance.

If the issue is easily reproduced in a test or staging environment, you can afford to use heavier tools (like JProfiler/YourKit or instrumentation mode) to get more detailed information about method-level performance.

Use thread dumps for locking issues, heap dumps for memory leaks, and CPU sampling for general slowness. Each tool type excels at revealing different kinds of problems.

Don't forget logs – sometimes adding logging around a suspected slow operation can confirm if it's being called too often or taking too long. Logging complements profiling by providing context about when and why certain code paths are executed.

Continuously Reassess

After making optimizations, always re-profile to ensure the changes had the intended effect and didn't introduce new issues. Performance tuning can sometimes have unintended side effects, so verify the results under the same conditions. Also, as dependencies or usage patterns change (new versions of a library, or users start using a feature much more), the performance landscape can change. Periodic profiling (even when things seem fine) can catch issues early.

Profiling with SigNoz (APM Integration)

While profiling tools show you code-level details, an Application Performance Monitoring tool like SigNoz provides observability at the system and application level. SigNoz (a unified observability tool with logs, metrics, and traces in a single pane) can work hand-in-hand with profiling:

- Performance dashboards: Identify which service/endpoint has issues

- Distributed tracing: Shows request timeline across services

- Database tracking: Lists slow queries automatically

- Correlation: Links logs, errors, and performance data

Workflow example:

- SigNoz alerts high latency on the /checkout endpoint

- Trace shows time spent in PaymentService

- Profile PaymentService to find exact slow method

- Fix issue and verify improvement in SigNoz

Key Takeaways

Use built-in tools first: JDK tools (JFR, jstack, jmap) are free and powerful.

Target the relevant area: Match profiling approach to the problem (CPU, memory, threads, or I/O).

Production profiling requires care: Use low-overhead sampling profilers, keep durations short.

Most issues have obvious causes once found: Focus on the low-hanging fruit first, since it usually yields the biggest improvements.

Modern architectures need modern tools: Combine distributed tracing and APM with traditional profilers.

Integrate profiling into routine practice: Include in code reviews, testing, and monitoring. Catching regressions early is much easier than fixing them in production.

Frequently Asked Questions

What is the difference between sampling and instrumentation profiling?

Sampling periodically captures what each thread is doing (low overhead, typically a few percent). Instrumentation hooks every method, which gives exact call counts but can slow the application by 10x or more. Use sampling in production, instrumentation only in controlled environments.
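To show the sampling idea in miniature, the toy sketch below periodically reads one thread's stack and counts which method is on top. It is not how production profilers are implemented (they avoid the safepoint bias that Thread.getStackTrace has); ToySampler and spin are illustrative names.

```java
import java.util.HashMap;
import java.util.Map;

// Toy sampling profiler: counts the top stack frame of a target thread.
class ToySampler {
    private static volatile boolean stop = false;
    private static volatile long sink = 0;

    // Busy work that the sampler should observe on top of the stack.
    static void spin() {
        long x = 0;
        while (!stop) {
            x = x * 31 + 7;
        }
        sink = x; // publish the result so the loop is not optimized away
    }

    // Takes `samples` snapshots of the target's top frame, `intervalMillis` apart.
    static Map<String, Integer> sample(Thread target, int samples, long intervalMillis)
            throws InterruptedException {
        Map<String, Integer> hits = new HashMap<>();
        for (int i = 0; i < samples; i++) {
            StackTraceElement[] stack = target.getStackTrace();
            if (stack.length > 0) {
                hits.merge(stack[0].getMethodName(), 1, Integer::sum);
            }
            Thread.sleep(intervalMillis);
        }
        return hits;
    }

    // Starts a busy worker, samples it 20 times at 10 ms intervals, then stops it.
    static Map<String, Integer> runDemo() {
        stop = false;
        Thread worker = new Thread(ToySampler::spin, "worker");
        worker.setDaemon(true);
        worker.start();
        try {
            return sample(worker, 20, 10);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return Map.of();
        } finally {
            stop = true;
        }
    }

    public static void main(String[] args) {
        System.out.println("Top-frame sample counts: " + runDemo());
    }
}
```

The cost is proportional to the number of samples, not to the number of method calls, which is why sampling scales to production workloads while instrumentation does not.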

How often should I profile?

- During development of complex features

- In CI/CD performance tests

- Before major releases

- Continuously in production (if using low-overhead tools)

- When issues are reported

Which tool should I start with?

Beginners: Start with VisualVM (graphical, easy). For production: Use JFR or Async Profiler. For memory analysis: Eclipse MAT.

What metrics should I focus on?

- Slow application: Response times, CPU usage, method execution times

- Memory issues: Heap usage, GC frequency, object counts

- Hanging: Thread states, blocked/waiting threads

- High CPU: CPU profiles, flame graphs

- Database-heavy: Query counts, query times

We hope this guide has helped demystify Java application profiling and provided a clear strategy to diagnose and fix performance issues. For more questions or to discuss specific challenges, join our Slack community or subscribe to our SigNoz newsletter for regular performance optimization tips.