Log Parsing 101 - A Beginner's Guide to Structured Data

Logs are an invaluable source of information about system operations, user activities, and application performance. Log parsing is the process of analyzing these logs to extract meaningful data, enabling organizations to monitor, troubleshoot, and optimize their systems effectively. This guide explores the fundamentals of log parsing, common log formats, how log parsers work, popular tools, and best practices to help you get more out of your log data.

Understanding Log Parsing

Log parsing is the systematic process of extracting meaningful information from log files generated by various applications, systems, and devices. These logs contain records of events that have occurred within the systems, such as user activities, application processes, system errors, and security incidents. The key steps in log parsing are:

- Reading log files: Log files come from sources like web servers, application servers, operating systems, network devices, and security systems. They can be in formats such as plain text, JSON, XML, CSV, or syslog. The parser should accurately read these formats.

- Extracting relevant information: After reading the logs, extract key data like timestamps, log levels (INFO, ERROR), event types, user IDs, IP addresses, and messages, filtering out extraneous information.

- Transforming data into a structured format: Convert extracted data into structured formats like JSON, CSV, or database entries for easier querying, visualisation, and analysis.

- Analyzing log data: Analyze structured log data to identify patterns, detect anomalies, and gain actionable insights. For instance, a spike in error logs may indicate deployment issues, while repeated failed logins could signal a security threat.

The main aspects of log parsing include handling common log formats such as plaintext, JSON, XML, CSV, and Windows Event Logs.

Common Log Formats

Logs can come in various formats, depending on the source system or application generating them. Understanding these common log formats is essential for effective log parsing and analysis. Here are some of the most frequently encountered log formats:

Plaintext: Plaintext logs are simple text files where each log entry is a line of text. They are the most basic form of logs and are easy to read and write. They are often used for web server logs and application logs. Example:

2024-07-23 15:43:21 INFO User login successful - UserID: 7890, SessionID: abc123

Here, 2024-07-23 15:43:21 is the timestamp, INFO is the log level, and "User login successful - UserID: 7890, SessionID: abc123" is the message describing the event.

JSON (JavaScript Object Notation): JSON logs are structured logs that use key-value pairs, making them easily parsable and readable. JSON logs are commonly used in modern web applications, microservices, and APIs due to their structured nature and compatibility with various data processing tools. Example:

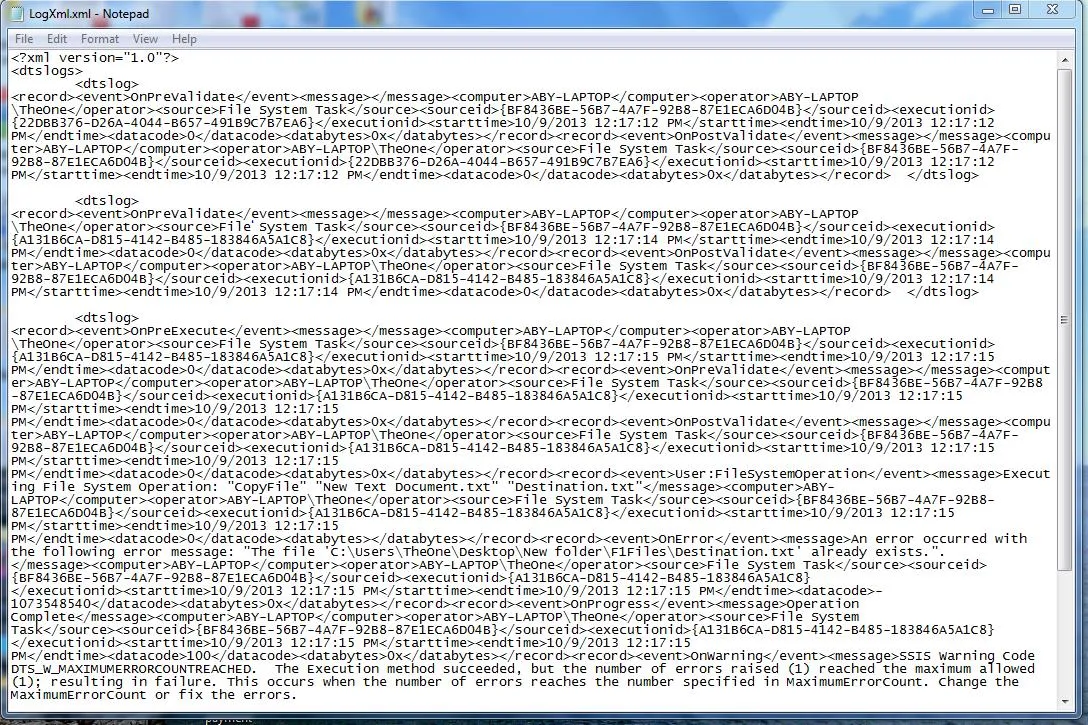

Logs formatted as JSON

XML (Extensible Markup Language): XML logs use a markup language that is both human and machine-readable. Although XML is more verbose than JSON, it is still frequently used to describe structured data. Web services (SOAP), configuration files, and enterprise applications all frequently use XML logs. Example:

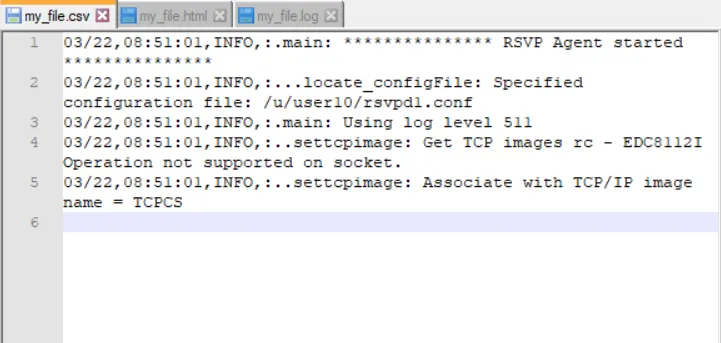

XML logs view

CSV (Comma-Separated Values): CSV logs use a simple text format where each log entry is a line and values are separated by commas. CSV logs are commonly used for exporting and importing data between applications, especially in spreadsheets and databases. Example:

CSV logs

Windows Event Log: Windows Event Log is a proprietary logging format used by the Microsoft Windows operating system. It records system, security, and application events related to the operating system, application software, and hardware. To learn more about Windows logs, see Windows Event Logs.

Understanding these common log formats and their appropriate use cases is crucial for effective log management and analysis. By choosing the right format and leveraging the appropriate tools, organisations can streamline their log parsing and gain valuable insights from their log data. The choice of log format depends on several factors, including the complexity of the data, ease of parsing, readability, and the tools available.

How a Log Parser Works

A log parser works through several key steps: log data ingestion (collecting logs from various sources), preprocessing (cleaning and normalising the data), parsing (the main step, which reads log entries and extracts meaningful information), and data transformation, followed by enrichment, storage, and analysis.

Log Data Ingestion

The first step in log parsing is log data ingestion, which involves collecting logs from various sources. These sources can include:

- Web servers (e.g., Apache, Nginx)

- Application servers (e.g., Tomcat, Node.js)

- Operating systems (e.g., Linux syslog, Windows Event Logs)

- Network devices (e.g., routers, switches)

- Security systems (e.g., firewalls, IDS/IPS)

The log parser must support multiple log formats and protocols to ingest data from diverse sources. Common methods for data ingestion include reading log files from disk, receiving logs over network protocols (e.g., Syslog), or using APIs to pull log data.
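
As a rough illustration of the simplest ingestion method, reading a log file from disk, the sketch below streams a file line by line instead of loading it all into memory. The function name read_log_lines is hypothetical, and the sketch assumes one log entry per line in UTF-8 text.

```python
from pathlib import Path
from typing import Iterator


def read_log_lines(path: Path, encoding: str = "utf-8") -> Iterator[str]:
    """Yield the non-empty lines of a log file, one at a time."""
    # errors="replace" keeps ingestion going when a line holds invalid bytes.
    with path.open("r", encoding=encoding, errors="replace") as handle:
        for raw_line in handle:
            line = raw_line.rstrip("\r\n")
            if line:  # skip blank lines
                yield line
```

Because the function is a generator, a caller can loop over a large file with `for line in read_log_lines(Path("app.log"))` and pass each line to the next stage as it arrives.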

Preprocessing

Before the actual parsing, logs often require preprocessing to clean and normalise the data. Preprocessing steps can include:

- Filtering: Removing irrelevant or duplicate log entries.

- Normalization: Converting log entries into a consistent format (e.g., unified timestamp format).

- Decoding: Converting encoded data (e.g., base64-encoded strings) into readable text.

Preprocessing ensures that the log data is in a suitable state for parsing, reducing the risk of errors and improving parsing efficiency.
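
The three preprocessing steps above can be sketched with the Python standard library. This is only an illustration: the helper names are hypothetical, the list of timestamp layouts is an assumption, and timestamps without a zone are assumed to already be in UTC.

```python
import base64
from datetime import datetime, timezone

# Assumed input layouts; real logs may need a different list.
KNOWN_LAYOUTS = ("%Y-%m-%d %H:%M:%S", "%Y-%m-%dT%H:%M:%S", "%d/%b/%Y:%H:%M:%S")


def drop_duplicates(lines):
    """Filtering: yield each distinct line once, keeping the original order."""
    seen = set()
    for line in lines:
        if line not in seen:
            seen.add(line)
            yield line


def normalize_timestamp(value: str) -> str:
    """Normalization: convert a known layout to ISO 8601 with a UTC offset."""
    for layout in KNOWN_LAYOUTS:
        try:
            parsed = datetime.strptime(value, layout)
        except ValueError:
            continue  # try the next layout
        # Assumption: naive timestamps are already in UTC.
        return parsed.replace(tzinfo=timezone.utc).isoformat()
    raise ValueError(f"unrecognised timestamp: {value!r}")


def decode_base64_field(value: str) -> str:
    """Decoding: turn a base64-encoded field into readable text."""
    return base64.b64decode(value).decode("utf-8")
```

For example, `normalize_timestamp("2024-07-23 15:43:21")` returns `"2024-07-23T15:43:21+00:00"`.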

Parsing

The core functionality of a log parser is to read log entries and extract meaningful information. This involves several techniques:

Log Parsing Techniques

Regular Expressions (Regex): Regular expressions are used to identify patterns within log entries. They are powerful and flexible, allowing the parser to match complex patterns and extract specific fields. Example: Consider the log entry:

2024-07-25 12:34:56,789 INFO [UserService] User 123 logged in from IP 192.168.1.1

Using the regex pattern:

(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) (?P<log_level>INFO|ERROR|DEBUG) \[(?P<source>[^\]]+)\] (?P<message>.*?) from IP (?P<ip>\d+\.\d+\.\d+\.\d+)

The named groups timestamp, log_level, source, message, and ip will capture:

- Timestamp: 2024-07-25 12:34:56,789

- Log level: INFO

- Source: UserService

- Message: User 123 logged in

- IP Address: 192.168.1.1
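
The regex example above can be tried with Python's re module, which supports the same (?P<name>...) named-group syntax. The names LOG_PATTERN and parse_line are hypothetical and only serve this sketch.

```python
import re

# The same pattern as in the text, split across lines for readability.
LOG_PATTERN = re.compile(
    r"(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) "
    r"(?P<log_level>INFO|ERROR|DEBUG) "
    r"\[(?P<source>[^\]]+)\] "
    r"(?P<message>.*?) from IP (?P<ip>\d+\.\d+\.\d+\.\d+)"
)


def parse_line(line: str):
    """Return a dict of named groups, or None if the line does not match."""
    match = LOG_PATTERN.match(line)
    return match.groupdict() if match else None


entry = parse_line(
    "2024-07-25 12:34:56,789 INFO [UserService] "
    "User 123 logged in from IP 192.168.1.1"
)
print(entry["message"])  # prints: User 123 logged in
```

Returning None for lines that do not match lets the caller decide whether to skip them or record them as parse failures.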

Tokenisation: Tokenisation involves splitting log entries into smaller units (tokens) based on predefined delimiters (e.g., spaces, commas). Each token is then assigned to a specific field. Example: Consider the log entry:

2024-07-25,12:34:56,INFO,UserService,User 123 logged in,192.168.1.1

Splitting on commas yields tokens that can then be mapped to fields:

- Date: 2024-07-25

- Time: 12:34:56

- Log level: INFO

- Source: UserService

- Message: User 123 logged in

- IP Address: 192.168.1.1
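
A minimal tokenisation sketch for the comma-delimited entry above follows. It uses Python's csv module rather than a plain str.split(","), so a quoted message that contains a comma stays in one token. The field order in FIELDS and the function name tokenise are assumptions made for this example.

```python
import csv

# Assumed field order for the comma-delimited entry shown above.
FIELDS = ("date", "time", "level", "source", "message", "ip")


def tokenise(line: str) -> dict:
    """Split one delimited log line into tokens and map them to field names."""
    tokens = next(csv.reader([line]))
    if len(tokens) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} tokens, got {len(tokens)}")
    return dict(zip(FIELDS, tokens))


record = tokenise(
    "2024-07-25,12:34:56,INFO,UserService,User 123 logged in,192.168.1.1"
)
print(record["source"])  # prints: UserService
```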

Structured Log Parsing: For logs in structured formats like JSON or XML, parsers can directly map the log fields to the corresponding data structures.

Example: Consider the JSON log entry:

{ "timestamp": "2024-07-25T12:34:56.789Z", "level": "INFO", "source": "UserService", "message": "User 123 logged in", "ip": "192.168.1.1" }

Using a JSON parser, you can access each field directly by its key.
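
As a short sketch of structured parsing, Python's built-in json module turns the entry above into a dictionary, and the timestamp can then be converted to a datetime object. The variable names are illustrative only.

```python
import json
from datetime import datetime

raw = (
    '{ "timestamp": "2024-07-25T12:34:56.789Z", "level": "INFO", '
    '"source": "UserService", "message": "User 123 logged in", '
    '"ip": "192.168.1.1" }'
)

entry = json.loads(raw)  # a plain dict keyed by field name

# Older Python versions reject a trailing "Z" in fromisoformat,
# so it is swapped for an explicit UTC offset first.
when = datetime.fromisoformat(entry["timestamp"].replace("Z", "+00:00"))

print(entry["level"], entry["ip"])  # prints: INFO 192.168.1.1
```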

Unstructured Log Parsing: For unstructured logs, the parser can use advanced techniques like machine learning to identify and extract relevant information.

Example: Consider the unstructured log entry:

User 123 logged in on 2024-07-25 at 12:34:56 from IP 192.168.1.1

Using machine learning models trained on similar log patterns, the parser can identify and extract:

- User ID: 123

- Action: logged in

- Date: 2024-07-25

- Time: 12:34:56

- IP Address: 192.168.1.1

Data Transformation

After parsing, the extracted log data is transformed into a structured format suitable for analysis. Common structured formats include JSON, CSV, and database entries.

Example:

```python
log_data = {
    'timestamp': '2024-07-10T14:32:00Z',
    'level': 'INFO',
    'message': 'User login successful',
    'user_id': '12345'
}
```
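
To make the transformation step concrete, the sketch below serialises the same dictionary to two of the structured formats mentioned above, a JSON line and a CSV row, using only the standard library. The variable names are illustrative.

```python
import csv
import io
import json

log_data = {
    "timestamp": "2024-07-10T14:32:00Z",
    "level": "INFO",
    "message": "User login successful",
    "user_id": "12345",
}

# JSON: one object per line is a common layout for log pipelines.
json_line = json.dumps(log_data)

# CSV: a header row followed by one row per log entry.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=list(log_data))
writer.writeheader()
writer.writerow(log_data)
csv_text = buffer.getvalue()

print(json_line)
print(csv_text)
```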

Enrichment

Enrichment involves adding additional context to the parsed log data. This can include:

- Geolocation: Adding geographical information based on IP addresses.

- User Details: Adding user information from external databases.

- Threat Intelligence: Adding threat intelligence data for security logs.
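
Enrichment can be sketched as a function that returns a copy of a parsed entry with extra fields attached. Everything external here is a stand-in: USER_DIRECTORY and BLOCKLISTED_IPS are hypothetical in-memory tables in place of a real user database and threat feed. Geolocation is not shown because it needs an external IP database, so the sketch only flags whether the address is private.

```python
import ipaddress

# Hypothetical lookup tables standing in for external data sources.
USER_DIRECTORY = {"12345": {"name": "Example User", "team": "payments"}}
BLOCKLISTED_IPS = {"203.0.113.7"}


def enrich(entry: dict) -> dict:
    """Return a copy of a parsed log entry with extra context attached."""
    enriched = dict(entry)
    # User details: None when the user ID is missing or unknown.
    enriched["user"] = USER_DIRECTORY.get(entry.get("user_id"))
    ip = entry.get("ip")
    if ip:
        # Network context derived from the address itself.
        enriched["ip_is_private"] = ipaddress.ip_address(ip).is_private
        # Threat intelligence: membership in the (hypothetical) blocklist.
        enriched["ip_blocklisted"] = ip in BLOCKLISTED_IPS
    return enriched
```

Returning a copy leaves the parsed entry untouched, which makes it easier to compare the data before and after enrichment.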

Storage

The structured and enriched log data is then stored in a log management system, database, or data warehouse for further analysis. This storage solution must be scalable and capable of handling large volumes of log data.

Analysis and Visualisation

The final step involves analysing the structured log data to gain insights. This can be done using various tools and techniques:

- Querying: Using SQL-like queries to filter and aggregate log data.

- Visualisation: Creating dashboards and visualisations to identify patterns and trends.

- Alerting: Setting up alerts based on specific conditions (e.g., error rate thresholds).
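
As a small illustration of querying and alerting, the sketch below loads a few sample entries into an in-memory SQLite database, aggregates them by log level, and checks the error rate against a threshold. The sample entries, the table layout, and the 25% threshold are assumptions made for the example.

```python
import sqlite3

# Sample entries invented for this sketch.
entries = [
    ("2024-07-25T12:34:56Z", "INFO", "User 123 logged in"),
    ("2024-07-25T12:35:10Z", "ERROR", "Database timeout"),
    ("2024-07-25T12:35:42Z", "ERROR", "Database timeout"),
    ("2024-07-25T12:36:01Z", "INFO", "User 456 logged in"),
]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE logs (timestamp TEXT, level TEXT, message TEXT)")
conn.executemany("INSERT INTO logs VALUES (?, ?, ?)", entries)

# Querying: count entries per log level.
counts = dict(conn.execute("SELECT level, COUNT(*) FROM logs GROUP BY level"))

# Alerting: compare the error rate with a hypothetical threshold.
ERROR_RATE_THRESHOLD = 0.25
error_rate = counts.get("ERROR", 0) / sum(counts.values())
alert = error_rate > ERROR_RATE_THRESHOLD

print(counts, error_rate, alert)
```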

Popular Log Parsing Tools

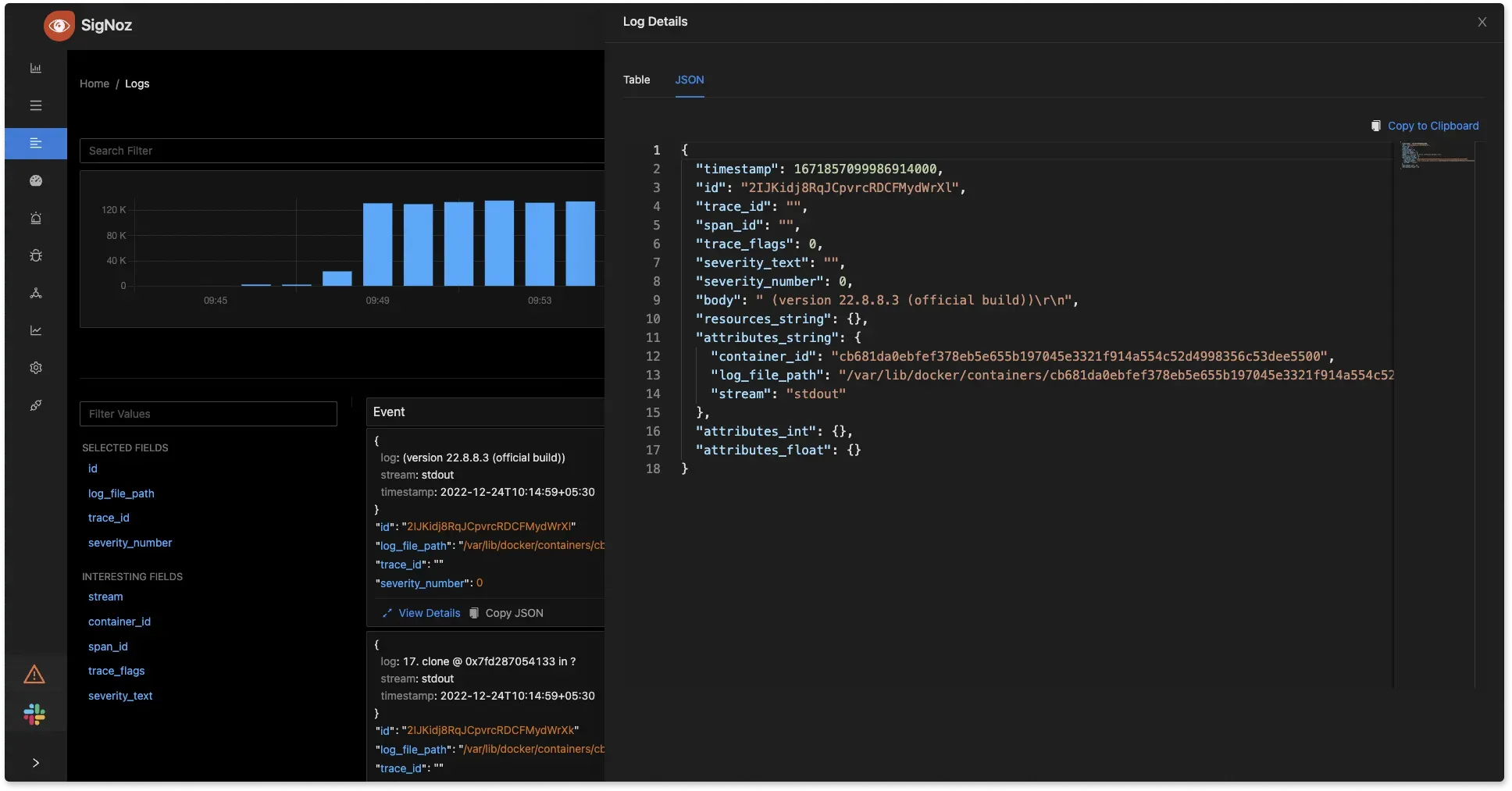

SigNoz

An open-source observability platform that helps you monitor and troubleshoot your applications. It provides comprehensive insights by integrating metrics, traces, and logs into a single platform. SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

Logstash

Logstash is a free and open-source utility for gathering, processing, and archiving logs for later use. It integrates easily with Kibana and Elasticsearch and is a component of the Elastic Stack.

Fluentd

An open-source data collector that unifies data collection and consumption. It's known for its flexibility and support for a wide range of output destinations.

Graylog

Graylog is a log management solution that gives you real-time search, analysis, and visualisation capabilities over your log data. It is designed for high performance and scalability.

Splunk

A leading platform for searching, monitoring, and analysing machine-generated big data via a web-style interface. It can handle a vast amount of data and is widely used in enterprise environments.

Advanced Log Parsing Techniques

As the complexity of modern applications and systems grows, so does the volume and intricacy of the log data they generate. Handling this data efficiently and drawing significant insights from it calls for more advanced log parsing methods. These techniques go beyond basic log analysis to handle diverse log formats, enrich data, and detect anomalies.

By incorporating tools like machine learning and real-time processing, organisations can transform raw log data into actionable intelligence. This section explores various advanced log parsing techniques that enhance the ability to monitor, troubleshoot, and optimise systems, providing a deeper understanding of their behaviour and performance.

- Machine Learning: Use machine learning techniques to categorise log data, identify abnormalities, and forecast future problems. This can aid in proactive monitoring and the detection of patterns that manual analysis could miss.

- Visualisation: Use dashboards and visual tools to represent log data graphically. Visualisation aids in identifying trends, spotting anomalies, and understanding log data at a glance.

- Indexing and Search Optimisation: Optimise log storage and indexing mechanisms to speed up searches and queries. Efficient indexing techniques reduce the time required to retrieve relevant log entries from large datasets.

- Custom Log Parsing Scripts: Develop custom scripts to parse and process logs tailored to specific application requirements. Custom scripts can handle unique log formats and perform specialised analysis tasks.
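
A custom script for spotting anomalies does not have to start with machine learning. The sketch below is a much simpler statistical baseline, not an ML model: it flags time buckets whose error count sits well above the average, measured in standard deviations. The function name find_spikes, the threshold of 2.0, and the sample counts are assumptions made for illustration.

```python
from statistics import mean, pstdev


def find_spikes(counts, threshold=2.0):
    """Return indexes of buckets whose count is far above the average.

    A bucket is flagged when it lies more than `threshold` standard
    deviations above the mean of all buckets.
    """
    average = mean(counts)
    spread = pstdev(counts)
    if spread == 0:
        return []  # all buckets are identical, nothing stands out
    return [
        index
        for index, count in enumerate(counts)
        if (count - average) / spread > threshold
    ]


# Invented error counts per minute; the seventh bucket is the outlier.
errors_per_minute = [3, 4, 2, 5, 3, 4, 40, 3]
print(find_spikes(errors_per_minute))  # prints: [6]
```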

Features of Log Parser

A log parser offers several key features that enhance the management and analysis of log data. It provides flexible parsing rules to handle various log formats, enabling customization according to specific needs. Key features of log parsers include:

- Universal Data Access: Log parsers can handle a wide variety of log formats and sources, ensuring compatibility with different systems and applications. Whether logs are generated by web servers, databases, network devices, or applications, these parsers can ingest and process them. This flexibility allows organisations to centralise their log management and streamline the analysis process.

- Data Extraction: Log parsers can extract relevant data from unstructured log entries, converting it into a structured format for easier analysis. This involves identifying and capturing specific fields, like timestamps, from raw log data. Effective data extraction is crucial for generating actionable insights and performing detailed troubleshooting.

- Data Transformation: Log Parser tools can process and transform log data by applying filters, aggregations, and other modifications. Data transformation helps make the log data more meaningful and easier to analyse.

- Data Output: The data parsed can be output in various formats, like CSV, JSON, and XML, which makes it easier to integrate with various tools.

- Integration Capabilities: These tools can integrate with other systems and applications, such as monitoring and alerting platforms. This enhances the overall log management and observability framework.

Log parsers offer a wide range of features that cater to various needs, depending on your specific use case. These tools can be customized and extended to suit different environments, ensuring you have the right capabilities to efficiently manage and analyze your log data.

Best Practices for Log Parsing

- Standardise Log Formats: Use consistent log formats across all applications and systems to ensure compatibility and ease of parsing.

- Include Timestamps: Ensure all logs include accurate and consistent timestamps to facilitate chronological analysis and debugging.

- Use structured logging: Adopt structured logging formats like JSON to make it easier to parse and analyse log data programmatically.

- Centralise Log Storage: Store logs in a central location to streamline access, analysis, and retention management.

- Implement Log Rotation: Use log rotation techniques to manage log file sizes and prevent storage issues.

- Filter and Reduce Noise: Apply filters to exclude irrelevant log entries and focus on meaningful data to improve analysis efficiency.

- Secure Log Data: Ensure logs are protected from unauthorised access and tampering through encryption and access controls.

- Monitor Log Parsing Performance: Regularly assess the performance of your log parsing setup to ensure it scales with growing log volumes and complexity.
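
Two of these practices, structured logging and log rotation, can be sketched with Python's standard logging module. The names JsonFormatter and build_logger, the field names, and the size limits are assumptions for this example, not a recommended configuration.

```python
import json
import logging
from logging.handlers import RotatingFileHandler


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            # formatTime uses local time unless the converter is changed.
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "source": record.name,
            "message": record.getMessage(),
        }
        return json.dumps(payload)


def build_logger(path: str) -> logging.Logger:
    """Create a logger that writes JSON lines to a size-rotated file."""
    logger = logging.getLogger("UserService")
    logger.setLevel(logging.INFO)
    # Assumed limits: rotate at about 1 MB and keep five old files.
    handler = RotatingFileHandler(path, maxBytes=1_000_000, backupCount=5)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    return logger
```

Calling `build_logger("app.log").info("User %s logged in", 123)` would append one JSON object per event, which a JSON parser can then read back without any regex.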

Takeaways

- Log parsing is a vital process for extracting actionable insights from log files generated by systems and applications. By systematically analysing logs, organisations can monitor system operations, troubleshoot issues, and optimise performance.

- Key steps in log parsing include reading logs, extracting relevant information, and transforming it into a structured format.

- Common log formats include plaintext, JSON, XML, CSV, and Windows Event Log, each with its own use cases.

- Log parsers work by ingesting data, preprocessing it, parsing with techniques like regex, and transforming it into a structured format.

- Advanced parsing techniques use machine learning, visualisations, and custom scripts to handle complex log data and detect anomalies.

- A log parser offers several key features that enhance the management and analysis of log data. It provides flexible parsing rules to handle various log formats, enabling customisation according to specific needs.

FAQ

What Does Log Parsing Mean?

Log parsing is the systematic process of extracting meaningful information from log files generated by various applications, systems, and devices. These logs contain records of events such as user activities, application processes, system errors, and security incidents.

What is the Log Parser Tool?

A log parser tool is a software application or library that automates the process of log parsing. It reads log files from various sources, extracts relevant information using predefined rules or patterns, and transforms the data into a structured format. Popular log parser tools include Logstash, Fluentd, and Splunk.

What is Parsing in SIEM?

In Security Information and Event Management (SIEM), parsing refers to the process of analyzing and extracting relevant information from security logs and event data. SIEM systems use parsing to normalize and structure log data from various sources, making it easier to detect security incidents, analyze trends, and generate alerts.

What is Log Parser in Linux?

A log parser in Linux is a tool or utility designed to read, analyze, and extract meaningful information from log files generated by various system components and applications.

What Do You Mean by Parsing?

Parsing is the process of analyzing a sequence of symbols (such as text) to identify its structure and extract meaningful information. In the context of log files, parsing involves reading log entries and breaking them down into their constituent parts (e.g., timestamps, log levels, messages) for further analysis.

Why is it Called Parsing?

"Parsing" comes from the Latin word "pars," meaning "part." Parsing involves disassembling symbols into their component pieces to comprehend their structure and meaning. This concept is widely used in computer science, linguistics, and data analysis.

Why is a Parser Used?

A parser is used to analyze and interpret structured data or text, transforming it into a format that is easier to process and understand.

What is a Parsing Tool?

A parsing tool is a software application or library designed to process and analyze data or text according to predefined rules or patterns. It reads raw input (such as log files, code, or data) and breaks it down into structured, meaningful components. Examples of parsing tools include SigNoz, Splunk, Logstash, and Fluentd.

What is the Fastest Log Parser?

The speed of a log parser can depend on various factors such as the volume of logs, the complexity of parsing rules, and the system's hardware.

What is Parsing in Security?

In the context of security, parsing refers to the process of analyzing and interpreting security logs and event data.

What is the Process of Parsing?

The process of parsing involves several steps to analyze and interpret data, transforming it from an unstructured or semi-structured format into a structured format for easier processing and analysis. The main steps are:

- Tokenization

- Syntax Analysis

- Semantic Analysis

- Transformation

- Error Handling

- Output Generation

What Does Parsing Mean in Data?

Parsing in data refers to the process of analyzing and interpreting a sequence of symbols or text to identify its structure and extract meaningful information. This involves breaking down the data into its component parts, such as fields and values, according to predefined rules or patterns.

Why Do I Get a Parsing Error?

Parsing errors occur when there is a problem with interpreting the structure or format of the data being analyzed. Common causes include:

- Syntax Errors

- Unexpected Characters

- Incomplete Data

- Incorrect Configuration

- Corrupted Files
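
One common way to cope with such errors is to catch them per entry, so that a single bad line does not stop the whole run. The sketch below assumes JSON logs with one entry per line. The function name parse_json_lines is hypothetical.

```python
import json


def parse_json_lines(lines):
    """Parse JSON log lines, collecting failures instead of stopping."""
    parsed, rejected = [], []
    for number, line in enumerate(lines, start=1):
        try:
            parsed.append(json.loads(line))
        except json.JSONDecodeError as error:
            # Keep the line number and reason so the entry can be inspected.
            rejected.append((number, error.msg))
    return parsed, rejected


good, bad = parse_json_lines([
    '{"level": "INFO"}',
    '{"level": "ERROR"',  # incomplete data: missing closing brace
    "not json at all",    # unexpected characters
])
print(len(good), [number for number, _ in bad])  # prints: 1 [2, 3]
```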

What Does Parse Mean in FF Logs?

In Final Fantasy (FF) logs, "parse" refers to the process of recording and analyzing combat performance data, typically from raids or other group activities. Players use parsing tools to capture detailed logs of their actions and those of their teammates during encounters.

What is Log Parser in Python?

A log parser in Python is a script or application that uses Python to read, analyze, and extract meaningful information from log files. It can process logs in various formats (e.g., text, JSON, CSV) using regular expressions, built-in string methods, standard-library modules such as re, json, and csv, or third-party libraries like pandas. Log parsers in Python automate the task of sifting through large amounts of log data to identify patterns, errors, and insights, making it easier to monitor and troubleshoot applications.