Managed Prometheus - Simplifying Cloud-Native Monitoring

Cloud-native applications have revolutionized business operations, but they've also introduced new challenges in monitoring and observability. Traditional monitoring tools often struggle to keep pace with the dynamic nature of containerized and microservices architectures.

Managed Prometheus, a cloud-based solution, provides a streamlined approach to monitoring these complex environments. By handling the technical complexities, Managed Prometheus lets you focus on understanding your systems and ensuring optimal performance.

In this article, we’ll explore the benefits of Managed Prometheus and how it's transforming the landscape of cloud-native monitoring.

What is Managed Prometheus and Why Does It Matter?

Managed Prometheus is a cloud-based service designed to simplify monitoring for cloud-native applications. It is based on Prometheus, the popular open-source monitoring tool, but removes the need to manage the underlying infrastructure yourself. Instead of setting up and maintaining Prometheus, you rely on a provider to run it for you, which makes large-scale applications easier to monitor. Managed services also typically include built-in high availability, automatic scaling, and integration with other cloud-native services, so you can maintain an end-to-end monitoring solution without manual setup and maintenance.

Key Differences Between Self-Hosted Prometheus and Managed Services

Some of the differences between using self-hosted Prometheus and managed services include:

Infrastructure management

With self-hosted Prometheus, you're responsible for managing the entire infrastructure—provisioning servers, configuring storage, ensuring network connectivity, and handling system updates. This requires significant time, resources, and expertise, especially as your system grows.

Managed Prometheus, on the other hand, offloads these responsibilities to the cloud provider. The service automatically provisions resources, scales with demand, and keeps systems up to date. This reduces the operational overhead and allows your team to focus on improving application performance and tackling business-critical tasks instead of managing infrastructure.

High availability

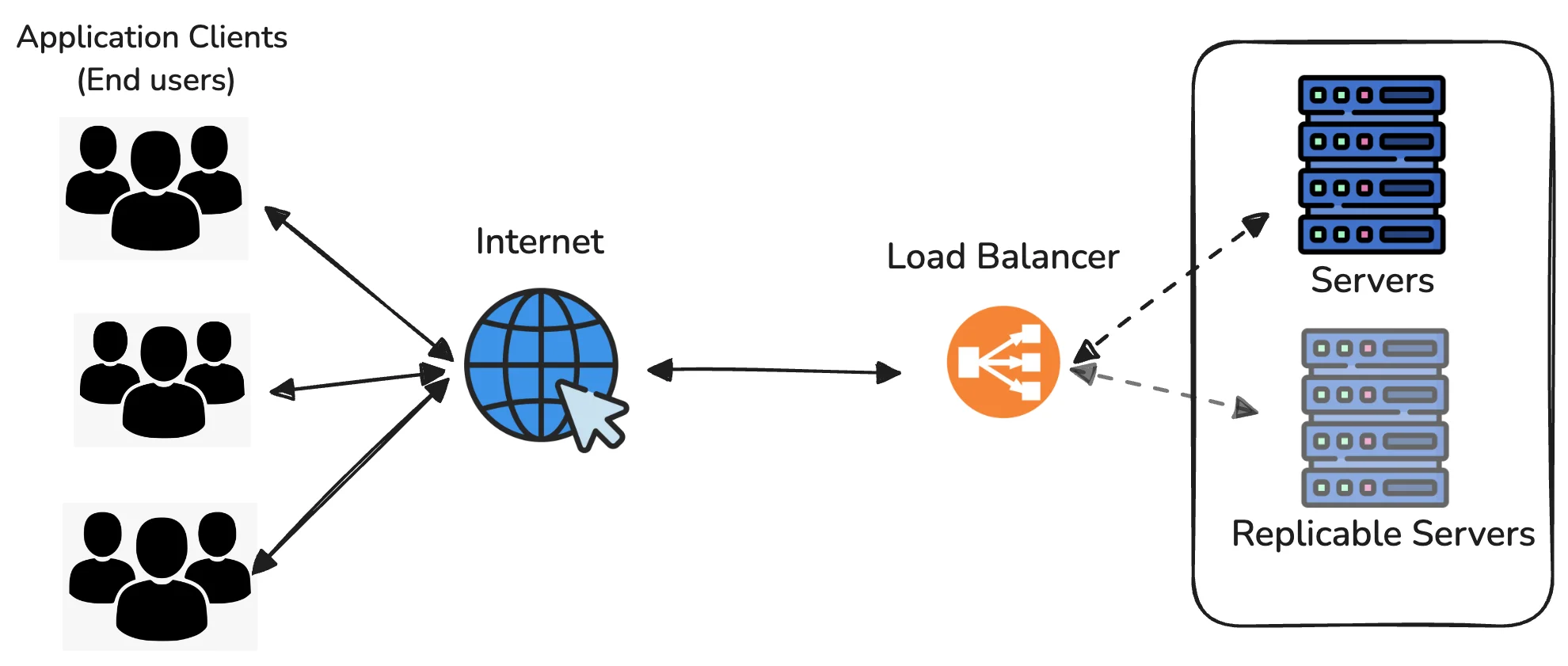

In a self-hosted setup, ensuring high availability involves configuring complex redundancy, replication, and failover mechanisms. You need to manage multiple Prometheus instances and load balancers to avoid downtime, which adds to the complexity.

Managed Prometheus simplifies this by providing built-in redundancy and failover capabilities. These features ensure uninterrupted monitoring even in the face of infrastructure failures, allowing your system to stay operational with minimal intervention.
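One way to picture the redundancy described above: two replicas scrape the same targets, and a deduplication step merges their samples so that a gap in one replica is filled from the other. The sketch below is a simplified illustration, not how any particular managed service implements it. The function name `merge_replicas`, the sample layout, and the assumption that both replicas scrape at identical timestamps are all made up for this example.

```python
from typing import Dict, List, Tuple

Sample = Tuple[int, float]  # (unix timestamp in seconds, value)


def merge_replicas(primary: List[Sample], secondary: List[Sample]) -> List[Sample]:
    """Merge samples from two replicas scraping the same series.

    Hypothetical helper: when both replicas have a sample for a timestamp,
    the primary wins; timestamps seen only by the secondary fill the gaps.
    """
    merged: Dict[int, float] = dict(secondary)
    merged.update(dict(primary))  # primary overrides secondary on conflicts
    return sorted(merged.items())


# Replica A missed the scrape at t=30 (for example, during a restart).
replica_a = [(0, 10.0), (15, 12.0), (45, 17.0)]
replica_b = [(0, 10.0), (15, 12.0), (30, 15.0), (45, 17.0)]

print(merge_replicas(replica_a, replica_b))
```

In a self-hosted setup you would have to build or operate something equivalent yourself; with a managed service this step is part of what the provider runs for you.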

High Availability Setup

Long-term storage

By default, Prometheus keeps data locally for a limited time (15 days unless you configure a different retention period). To store metrics long-term, you need to set up and manage external storage systems, adding complexity and operational burden.

Managed Prometheus typically includes long-term storage as part of the service. It handles data retention automatically, allowing you to analyze historical trends, such as performance and resource usage, without worrying about managing or losing older metrics.

Integration

Self-hosted Prometheus often requires custom configurations and extra effort to integrate with other services like logging, alerting, or visualization tools. Building a complete monitoring solution requires manual setup for each integration.

Managed Prometheus simplifies this process by offering native integration with cloud ecosystems like AWS, Google Cloud, and Microsoft Azure, as well as with tools like Grafana for visualization and Alertmanager for notifications. This out-of-the-box compatibility streamlines your monitoring stack, allowing you to easily build a fully integrated observability system.

Benefits of using a Managed Service for Prometheus

- Reduced Operational Overhead: Managed Prometheus eliminates the need for managing infrastructure, updates, and scaling. Cloud providers handle these tasks, allowing your team to focus on application performance rather than system maintenance.

- Improved Scalability and Reliability: Managed services automatically scale with your application, ensuring reliable monitoring even as your system grows. High availability features like redundancy and failover are built-in, providing continuous monitoring without manual intervention.

- Enhanced Security and Compliance: Managed Prometheus includes built-in security features such as encryption and access control, often meeting regulatory standards like GDPR. This reduces the complexity and risks of managing security yourself in a self-hosted setup.

- Faster Time-to-Value: With Managed Prometheus, setup is quick and requires minimal effort. This allows teams to start monitoring their applications in minutes, leading to faster insights, early issue detection, and quicker decision-making.

The Evolution of Cloud-Native Monitoring

Traditional monitoring tools were designed for stable, monolithic applications running on dedicated servers. However, with the rise of cloud-native architectures—comprising microservices, containers, and serverless functions—monitoring has become more complex. These environments are dynamic and distributed, introducing several key challenges:

- Ephemeral resources: In cloud-native environments, resources like containers and serverless functions are ephemeral, meaning they can be created and destroyed rapidly. This makes traditional monitoring approaches, which expect long-running services, less effective, as the monitoring system must quickly adapt to the changing environment.

- Service discovery: Cloud-native applications often consist of many microservices that are deployed, scaled, and updated independently. As services come and go, it becomes a challenge to discover and track all these services in real-time. Effective monitoring requires a system that can automatically detect these services as they are created, modified, or removed.

- High cardinality: Microservices architectures generate a large number of unique time series, a condition known as high cardinality. Each container, service, or function may produce multiple metrics, each with its own combination of label values, leading to an explosion in the amount of data being collected. Traditional monitoring tools can struggle to handle this volume efficiently, especially at scale.

- Resource utilization: Monitoring at scale requires efficient use of computing and storage resources. Collecting and storing high volumes of metrics can quickly become resource-intensive, leading to concerns about resource utilization. A monitoring solution must be designed to manage these resources effectively, without causing a significant performance hit.
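To make the cardinality challenge concrete, the arithmetic below estimates an upper bound on the number of time series a single metric can produce: the product of the number of distinct values of each label. The label names and counts are hypothetical, chosen only to illustrate the growth.

```python
from math import prod
from typing import Dict


def max_series(label_values: Dict[str, int]) -> int:
    """Upper bound on time series for one metric: product of distinct values per label."""
    return prod(label_values.values())


# Hypothetical label counts for a single metric such as http_requests_total.
labels = {"service": 50, "method": 5, "status_code": 10}
print(max_series(labels))  # 2500

# Adding one per-pod label multiplies the bound again.
labels["pod"] = 200
print(max_series(labels))  # 500000
```

In practice not every combination occurs, so the real count is usually lower, but the multiplication explains why one extra label on a busy metric can dominate storage and memory use.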

Prometheus as a Solution

Prometheus emerged as a popular tool for monitoring cloud-native environments, addressing many of these challenges:

- Pull-based Metrics Collection: Prometheus actively queries services for metrics, making it suitable for dynamic environments where services frequently start and stop.

- PromQL: The Prometheus Query Language allows extensive data analysis, filtering, and aggregation of metrics, helping detect trends and troubleshoot performance issues.

- Service Discovery: Prometheus automatically discovers and monitors new services in dynamic environments, reducing manual configuration and ensuring real-time tracking of services.

- Time-Series Database: Prometheus uses an efficient time-series database optimized for handling continuously updated metrics, making it well suited to the large volumes of time-stamped data that cloud-native environments produce.
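The pull model in the list above can be sketched in a few lines. This is an in-process simulation, not real Prometheus code: each target is modeled as a Python function instead of an HTTP endpoint, and the names `scrape_all`, `healthy`, and `broken` are invented for the example. The one behavior taken from Prometheus itself is the synthetic `up` series, which is 1 when a scrape succeeds and 0 when it fails.

```python
import time
from typing import Callable, Dict, List, Tuple

# A target is modeled as a function that returns {metric_name: value}.
# In a real deployment this would be an HTTP GET against the target's metrics endpoint.
Target = Callable[[], Dict[str, float]]


def scrape_all(targets: Dict[str, Target]) -> List[Tuple[str, str, float, float]]:
    """Scrape every known target once and return (metric, instance, value, timestamp) rows."""
    rows = []
    now = time.time()
    for instance, fetch in targets.items():
        try:
            metrics = fetch()
            up = 1.0
        except Exception:
            metrics = {}
            up = 0.0
        # Prometheus records a synthetic `up` series per target: 1 on success, 0 on failure.
        rows.append(("up", instance, up, now))
        for name, value in metrics.items():
            rows.append((name, instance, value, now))
    return rows


def healthy() -> Dict[str, float]:
    return {"http_requests_total": 1027.0}


def broken() -> Dict[str, float]:
    raise ConnectionError("target unreachable")


for row in scrape_all({"app-1:8080": healthy, "app-2:8080": broken}):
    print(row[:3])
```

Because the monitoring side initiates every scrape, a target that disappears shows up as `up == 0` (or simply drops out of the target list once service discovery removes it) without the application having to report its own failure.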

Challenges Associated with Self-Hosted Prometheus

As organizations expanded their cloud-native infrastructures and the number of services increased, the original setup of Prometheus, while powerful, started to present some new challenges. The growth in scale and complexity of modern applications required organizations to rethink how they manage and maintain their monitoring systems. Key challenges included:

Scaling

One of the primary challenges was ensuring that Prometheus could scale effectively as the volume of metrics increased. In larger environments, where microservices, containers, and instances multiply rapidly, the sheer amount of data Prometheus needed to collect, process, and store began to strain the system.

High availability

High availability is essential for mission-critical applications, where downtime or gaps in monitoring can lead to serious issues, including missing important alerts or performance problems. In a self-hosted Prometheus setup, achieving high availability is difficult. Prometheus was not natively designed to handle redundancy and failover out of the box, which means teams need to manually configure multiple Prometheus instances to avoid single points of failure.

Long-term storage

By default, Prometheus is designed for short-term data storage. It stores recent metrics data efficiently, which is fine for real-time monitoring, but many organizations require long-term storage for historical analysis and data retention. This is important for identifying trends over weeks or months, understanding how performance changes over time, and meeting regulatory or compliance requirements for data retention.

Operational complexity

Running and maintaining Prometheus at scale introduces significant operational complexity. A self-hosted Prometheus environment requires ongoing maintenance, including regular software upgrades, patching, scaling adjustments, and managing storage capacity. As deployments grow, the number of Prometheus instances increases, adding more complexity to the system. Keeping Prometheus up-to-date and optimized becomes resource-intensive, and any mistakes during upgrades or configuration changes can lead to service disruptions.

These challenges paved the way for managed Prometheus services, which address scaling and maintenance issues while preserving the benefits of Prometheus.

Key Features of Managed Prometheus Services

Managed Prometheus services provide a range of features designed to streamline and enhance cloud-native monitoring. These features address common challenges associated with self-hosted Prometheus setups and offer significant benefits for organizations. Here’s a closer look at the key features:

- Automated setup and configuration: Managed Prometheus services simplify deployment through automated setup and configuration, so you can start monitoring quickly without manual setup. The service provider handles the configuration of Prometheus instances, applying industry best practices to ensure optimal performance and reliability from the start. This automation reduces the time and effort required to deploy monitoring solutions, allowing teams to focus on other critical tasks.

- Scalability and high availability: Managed Prometheus services automatically scale to accommodate increasing volumes of metrics data. Whether your application grows or the number of services expands, the service adjusts resources to handle the load. These services also come with built-in redundancy and failover mechanisms, so monitoring remains uninterrupted even if there are infrastructure issues, and data collection and alerting continue with far less risk of downtime or data loss.

- Built-in security and compliance: Managed Prometheus services offer security and compliance capabilities to safeguard your monitoring data. They often include mechanisms like encryption, authentication, and access control to protect data from unauthorized access. Many also adhere to regulatory and industry requirements, including GDPR and SOC 2. This indicates that the service meets stringent data management and privacy standards, which simplifies your compliance processes and lowers the chance of data breaches or legal concerns.

- Integration with cloud ecosystems: Managed Prometheus services are designed to work seamlessly with other cloud-based tools and services within your cloud provider’s ecosystem. This connectivity enables easy integration with additional monitoring, logging, and analytics tools, as well as with cloud-native infrastructure components like Kubernetes, serverless functions, and more. It provides a more cohesive view of your application’s performance and simplifies the management of your cloud-native environment.

Data Collection and Storage

Understanding how managed Prometheus handles data is crucial:

- Prometheus data model: Managed Prometheus uses a time-series data model, meaning data is organized and stored based on time. Each time series is identified by a metric name (like http_requests_total) and a set of key-value pairs called labels (such as method="GET"). This structure allows Prometheus to efficiently track and analyze how metrics change over time, providing a detailed view of performance and behavior.

- Metric ingestion: Prometheus collects metrics using several methods. The primary method is pull-based scraping, where Prometheus actively queries your services at regular intervals to gather metric data. This approach is effective in dynamic environments because Prometheus can adapt to changes in the monitored services. Additionally, Prometheus supports the Pushgateway for services that cannot be scraped directly, such as short-lived batch jobs, which push their metrics to the gateway for Prometheus to scrape.

- Long-term storage: Managed Prometheus services offer solutions for long-term storage of metrics. While Prometheus itself is optimized for short-term data retention, managed services often provide extended retention options. This might include tiered storage, where data is stored in different layers based on age and access frequency. For example, recent data might be kept in high-performance storage for quick access, while older data is moved to more cost-effective storage solutions. This approach helps manage large volumes of historical data efficiently.

- Query performance: To support quick and efficient data analysis, managed Prometheus services are optimized for query performance. The system is designed to retrieve and analyze large datasets rapidly, allowing users to run complex queries and generate insights without delays. This optimization helps you get timely answers to questions about your metrics, such as trends, anomalies, or performance bottlenecks, even as the amount of data grows.
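The data model in the list above can be illustrated with a short sketch. It renders samples in the style of the Prometheus text exposition format and shows that a series is identified by its metric name together with its full label set. The helper names `format_sample` and `series_key` are made up for this example, and the formatting is simplified: escaping of special characters in label values is not handled.

```python
from typing import Dict


def format_sample(name: str, labels: Dict[str, str], value: float) -> str:
    """Render one sample in the style of the Prometheus text exposition format.

    Simplified: label values are not escaped.
    """
    if labels:
        pairs = ",".join(f'{k}="{v}"' for k, v in sorted(labels.items()))
        return f"{name}{{{pairs}}} {value}"
    return f"{name} {value}"


def series_key(name: str, labels: Dict[str, str]) -> tuple:
    """A series is identified by its metric name plus its full label set."""
    return (name, tuple(sorted(labels.items())))


print(format_sample("http_requests_total", {"method": "GET", "status": "200"}, 1027))
print(format_sample("http_requests_total", {"method": "POST", "status": "200"}, 3))

# Same metric name, different label values: two distinct time series.
a = series_key("http_requests_total", {"method": "GET"})
b = series_key("http_requests_total", {"method": "POST"})
print(a != b)
```

Each distinct key gets its own stream of timestamped values, which is why the choice of labels has such a direct effect on storage and query cost.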

Different managed Prometheus services might handle data storage and retention in various ways. For example, Amazon Managed Service for Prometheus uses a purpose-built time-series database tailored for handling large volumes of metric data efficiently. In contrast, other services might use object storage solutions for long-term retention, providing flexibility in how data is stored and accessed.
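As a rough illustration of the tiered storage idea mentioned above, the sketch below picks a storage tier from the age of the data. The tier names and the 15-day and 90-day boundaries are hypothetical; each provider decides whether and how it tiers data.

```python
from datetime import timedelta

# Hypothetical tier boundaries; real services choose their own (if they tier at all).
TIERS = [
    (timedelta(days=15), "hot"),   # recent data on fast storage
    (timedelta(days=90), "warm"),  # older data on cheaper storage
]
DEFAULT_TIER = "cold"  # everything older goes to the cheapest storage


def tier_for(age: timedelta) -> str:
    """Return the first tier whose age limit covers the given data age."""
    for limit, tier in TIERS:
        if age <= limit:
            return tier
    return DEFAULT_TIER


for days in (1, 30, 365):
    print(days, tier_for(timedelta(days=days)))
```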

Top Managed Prometheus Offerings in the Market

Several cloud providers and specialized services offer managed Prometheus solutions:

Amazon Managed Service for Prometheus (AMP):

- Fully Managed and Prometheus-Compatible: AMP is a fully managed, Prometheus-compatible service, meaning it supports Prometheus metrics collection and querying without extensive setup.

- Seamless Integration with AWS Services: It integrates smoothly with Amazon EKS (Elastic Kubernetes Service) and other AWS services, making it easy to monitor applications running in AWS environments.

- PromQL API: Provides a PromQL-compatible API for querying metrics, allowing you to use the same query language as in self-hosted Prometheus setups.

![AWS Managed Prometheus ~ Image Credits [AWS]](/img/guides/2024/10/managed-prometheus-image.webp)

AWS Managed Prometheus ~ Image Credits [AWS]

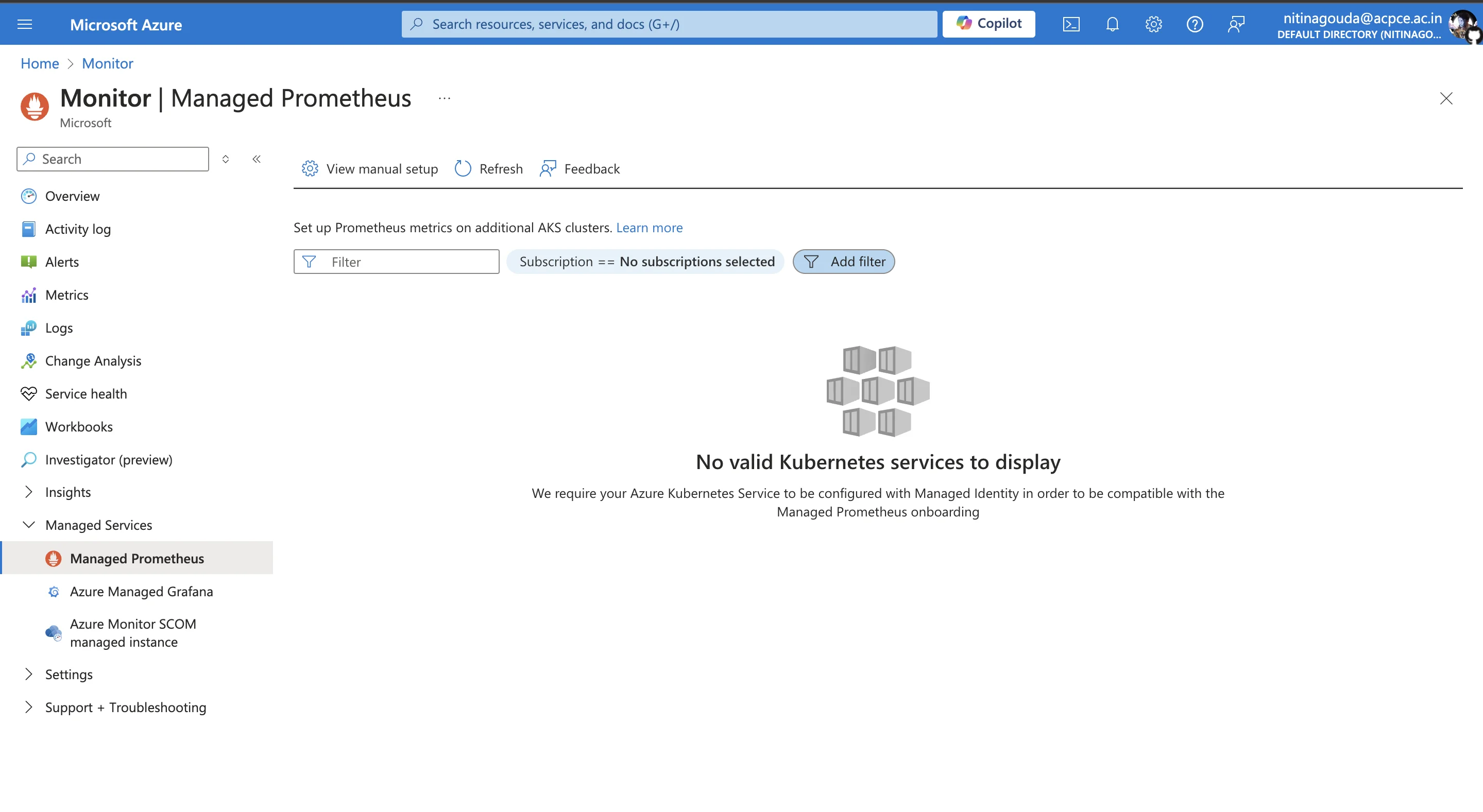

Azure Monitor Managed Service for Prometheus:

- Part of Azure Monitor for Containers: This service is integrated into Azure Monitor, specifically designed for containerized applications running in the Azure ecosystem.

- Prometheus-Compatible Endpoint: It offers an endpoint compatible with Prometheus, enabling you to collect and monitor metrics from your applications seamlessly.

- Integration with Azure Kubernetes Service (AKS): The service integrates with Azure Kubernetes Service, simplifying the monitoring of Kubernetes clusters and workloads in Azure.

Azure Managed Prometheus

Google Cloud Managed Service for Prometheus:

- Integrated with Google Kubernetes Engine (GKE): This managed service is designed to work well with Google Kubernetes Engine, providing a robust monitoring solution for applications running in Google Cloud.

- Long-Term Storage and PromQL-Compatible API: It offers long-term storage options and supports the PromQL query language, allowing for detailed analysis and historical data retention.

- Seamless Integration with Google Cloud Operations Suite: Provides easy integration with other Google Cloud tools and services, enhancing overall observability and management.

![Google Cloud Managed Service for Prometheus ~ Image Credits ~ [GCP]](/img/guides/2024/10/managed-prometheus-image 1.webp)

Google Cloud Managed Service for Prometheus ~ Image Credits ~ [GCP]

Grafana Cloud:

- Managed Prometheus in a Broader Observability Platform: Grafana Cloud offers managed Prometheus as part of its comprehensive observability platform, which includes features beyond just metrics collection.

- Additional Features: Includes advanced features like alerting, dashboarding, and data visualization, which help in gaining deeper insights into your metrics.

- Support for Multi-Cloud and Hybrid Environments: Grafana Cloud supports a variety of environments, including multi-cloud and hybrid setups, making it a versatile choice for organizations with diverse infrastructure.

![Grafana Cloud ~ Image Credits ~ [Grafana]](/img/guides/2024/10/managed-prometheus-image 2.webp)

Grafana Cloud ~ Image Credits ~ [Grafana]

Choosing the Right Managed Prometheus Service

When selecting a managed Prometheus service, consider the following factors:

- Compatibility: Ensure the managed Prometheus service is compatible with your current infrastructure, tools, and applications. This includes checking whether it supports the metrics you’re already collecting and integrates well with your existing systems, such as cloud providers, container orchestration platforms (like Kubernetes), and other monitoring tools. Seamless compatibility will reduce the complexity of migrating to the service.

- Scalability: It's essential to choose a service that can grow with your needs. Evaluate its ability to handle increasing volumes of metrics as your application scales. Some services may limit the amount of data they can process or store, while others provide automatic scaling to handle higher loads. Ensure the service can scale both horizontally (across multiple instances) and vertically (within a single instance) to meet your long-term requirements.

- Cost: Managed Prometheus services often have different pricing models, so it’s important to understand the costs involved. Pay attention to how pricing is calculated. It is typically based on factors like the rate of data ingestion (how much data is being collected), storage retention (how long data is stored), and the number of queries performed. Make sure you choose a service that matches your budget and has a clear price structure with no hidden fees.

- Features: Beyond basic monitoring, many managed Prometheus services offer additional features that can enhance your observability stack. Look for capabilities like alerting, advanced visualization tools (for creating customized dashboards), and integrations with other services such as logging platforms or cloud-native monitoring solutions. The appropriate set of features can help you streamline your monitoring operations and gain deeper insights into your system's performance.

- Support: The quality of support can be a critical factor, especially if you're new to Prometheus or have complex monitoring needs. Consider the availability and responsiveness of vendor support, the quality of their documentation, and whether they offer additional resources like training or a strong user community. Services with good support can help resolve issues quickly and provide guidance on best practices for setting up and optimizing your monitoring environment.
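For the cost factor above, a back-of-the-envelope estimate of ingestion volume is often enough to compare pricing models. The sketch below assumes a provider that bills per million ingested samples; the price used is a placeholder, not a real quote, and the function names are invented for this example.

```python
def monthly_samples(active_series: int, scrape_interval_s: int, days: int = 30) -> int:
    """Samples ingested per month: one sample per series per scrape."""
    scrapes_per_month = days * 24 * 3600 // scrape_interval_s
    return active_series * scrapes_per_month


def ingestion_cost(samples: int, price_per_million: float) -> float:
    """Cost for a provider that bills per million ingested samples (hypothetical rate)."""
    return samples / 1_000_000 * price_per_million


samples_30s = monthly_samples(active_series=100_000, scrape_interval_s=30)
samples_60s = monthly_samples(active_series=100_000, scrape_interval_s=60)
print(samples_30s)  # 8640000000
print(samples_60s)  # 4320000000

# Placeholder price of 0.10 per million samples; check your provider's actual pricing.
print(f"{ingestion_cost(samples_30s, 0.10):.2f}")
```

The two inputs you control are the number of active series and the scrape interval, so doubling the interval from 30 to 60 seconds halves the ingested volume in this model.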

Implementing Managed Prometheus in Your Stack

To get started with a managed Prometheus service:

- Choose a provider: The first step is to select a managed Prometheus service provider that fits your organization’s needs. Managed Prometheus providers handle the underlying infrastructure, allowing you to focus on monitoring your applications without worrying about maintenance, scaling, or upgrades. When choosing a provider, consider:

- Scalability: Ensure the provider can scale to support your growing data needs.

- Integration: Check if the service integrates easily with your current tech stack, such as Kubernetes or other cloud services.

- Cost: Evaluate pricing based on your expected data volume and retention period.

- Support: Look for a provider that offers strong customer support and documentation.

- Set up the service: Once you've selected a provider, follow their setup instructions. This typically involves creating a Prometheus instance via the provider's user interface or API, specifying storage and retention settings, and setting up any necessary authentication or authorization. Providers usually offer step-by-step tutorials to guide you through the setup process. Follow these closely to ensure proper configuration.

- Configure data sources: To start collecting metrics, you'll need to configure Prometheus to scrape data from your applications and infrastructure. Use Prometheus exporters (or agents) to collect metrics from various systems (e.g., Node Exporter for system metrics or application-specific exporters). Many managed services offer integrations with popular systems, reducing the need for manual configuration.

- Migrate existing data: If you're transitioning from a self-hosted Prometheus setup, migrating historical data can ensure continuity. Some managed services provide tools for data migration. You can also use Prometheus’s remote write capability to forward new samples from your existing instance to the new service; note that remote write sends data as it is collected rather than backfilling older data. Start with a limited migration to test the new setup and verify data integrity before moving all your data.

- Set up dashboards and alerts: After collecting metrics, you’ll want to visualize them and set up alerts. Most managed Prometheus services integrate with Grafana for dashboards, where you can create custom views of your data. Set alerting rules based on critical metrics to notify your team of potential issues. Start with pre-built dashboards offered by your service provider and customize them as you become more familiar with your monitoring needs.
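When you define alerting rules in the last step, it helps to understand why alerts usually require a condition to hold for some time before firing. The sketch below is a simplified model of that behavior, loosely inspired by the pending and firing states of Prometheus alerting rules; it counts evaluations instead of measuring elapsed time, and the function name and parameters are assumptions for illustration.

```python
from typing import List


def alert_states(values: List[float], threshold: float, for_evaluations: int) -> List[str]:
    """Return the alert state after each evaluation: inactive, pending, or firing.

    The alert fires only after the value has exceeded the threshold for more
    than `for_evaluations` consecutive evaluations.
    """
    states = []
    breaches = 0
    for value in values:
        breaches = breaches + 1 if value > threshold else 0
        if breaches == 0:
            states.append("inactive")
        elif breaches <= for_evaluations:
            states.append("pending")
        else:
            states.append("firing")
    return states


# Hypothetical CPU utilization readings, one per evaluation.
cpu = [0.20, 0.90, 0.95, 0.97, 0.30]
print(alert_states(cpu, threshold=0.80, for_evaluations=2))
```

The pending phase filters out short spikes, which keeps a brief burst of load from paging the on-call team.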

Best Practices for Migration

To successfully migrate your existing Prometheus setup, follow these best practices:

- Before migrating everything, begin with a pilot project to test the managed service. Monitor its performance, scalability, and compatibility with your existing infrastructure. This will help you identify any issues early without impacting your entire system.

- Prometheus federation allows you to run managed and self-hosted instances in parallel. This enables a smoother transition, giving you time to gradually migrate workloads. You can collect metrics from both environments, ensuring consistency and avoiding data loss during the switch.

- Compare the data from your managed service with the metrics from your self-hosted Prometheus instance. Pay attention to any discrepancies and fix them before the full migration. This ensures the accuracy and completeness of your monitoring setup in the new environment.

- After completing the migration, make sure to update all internal documentation, playbooks, and runbooks. This includes changes to procedures, new alerting rules, and how to troubleshoot the managed Prometheus service. Having updated guides ensures your team can effectively manage the new setup without confusion.
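The comparison step above can be partly automated. The following sketch takes the latest value of each series from both environments and reports series that are missing on one side or that differ by more than a relative tolerance. How you obtain the two dictionaries (for example, by running the same query against both backends) is left out, and the function name and the 1% default tolerance are assumptions.

```python
from typing import Dict, List, Tuple

SeriesValues = Dict[str, float]  # series identifier -> latest value


def compare_backends(
    self_hosted: SeriesValues, managed: SeriesValues, rel_tolerance: float = 0.01
) -> List[Tuple[str, str]]:
    """Report series that are missing on one side or differ by more than rel_tolerance."""
    problems = []
    for series in sorted(set(self_hosted) | set(managed)):
        if series not in managed:
            problems.append((series, "missing in managed"))
        elif series not in self_hosted:
            problems.append((series, "missing in self-hosted"))
        else:
            old, new = self_hosted[series], managed[series]
            scale = max(abs(old), abs(new), 1e-12)  # avoid division by zero
            if abs(old - new) / scale > rel_tolerance:
                problems.append((series, f"value mismatch: {old} vs {new}"))
    return problems


old_env = {'up{job="api"}': 1.0, 'http_requests_total{job="api"}': 1000.0, 'queue_depth': 7.0}
new_env = {'up{job="api"}': 1.0, 'http_requests_total{job="api"}': 1200.0}

for series, issue in compare_backends(old_env, new_env):
    print(series, "->", issue)
```

Small differences are expected because the two systems scrape at slightly different moments, which is why the check uses a tolerance instead of strict equality.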

Integrating with Existing Tools

Managed Prometheus services often provide APIs and integrations to work with popular observability tools:

- PromQL: Managed Prometheus fully supports PromQL, allowing you to reuse queries developed for self-hosted Prometheus, ensuring continuity in your metric analysis. You can perform complex queries for aggregating data, setting up alerts, and visualizing trends without additional configuration. SigNoz also supports PromQL, offering features like distributed tracing and real-time monitoring, providing deeper insights into your application performance.

- Grafana: Grafana, widely known for its powerful visual dashboards, integrates smoothly with managed Prometheus. This enables real-time visualization of metrics from your infrastructure and applications. You can monitor system health, identify trends, and set up custom alerts. Managed Prometheus uses the same data format as self-hosted instances, meaning your existing Grafana dashboards work with minimal changes. SigNoz can also be integrated with Grafana for added flexibility in visualization.

- Alertmanager: Managed Prometheus services often integrate with Prometheus Alertmanager or other alerting systems, enabling you to define custom alert rules and receive notifications via email, SMS, or other communication platforms. These alerts help streamline operational efficiency by automating incident responses and routing alerts to the right on-call teams.

- Logging and tracing: Observability isn't complete without a combination of metrics, logs, and traces. Managed Prometheus integrates with logging systems like Loki and tracing tools like Jaeger and Zipkin to give you a comprehensive understanding of system performance. This allows you to track issues at different levels, from high-level metrics to specific traces, making it easier to pinpoint root causes. SigNoz also offers an integrated view of logs, metrics, and traces, giving you a unified platform for observability.

- SigNoz: SigNoz is an open-source alternative to traditional monitoring tools, providing integrated monitoring for metrics, logs, and distributed tracing. Like Prometheus, SigNoz supports PromQL, allowing you to query your metrics easily. It offers powerful features like distributed tracing, helping you trace requests across microservices, and provides a unified platform for monitoring application performance in real-time. This makes troubleshooting more efficient and provides deeper insights into system behavior across different layers.
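Because PromQL-compatible services expose the Prometheus HTTP API, existing tooling can often be pointed at a new endpoint with little change. The sketch below builds a URL for the instant-query endpoint (`/api/v1/query`) and parses an instant-vector response. The base URL is a placeholder, and authentication is omitted because it differs by provider; the helper names are invented for this example.

```python
import json
from typing import Dict, List, Tuple
from urllib.parse import urlencode


def instant_query_url(base_url: str, promql: str) -> str:
    """Build a URL for the Prometheus HTTP API instant-query endpoint."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def parse_vector(body: str) -> List[Tuple[Dict[str, str], float]]:
    """Extract (labels, value) pairs from an instant-vector response body."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise ValueError(f"query failed: {payload.get('error')}")
    return [(item["metric"], float(item["value"][1])) for item in payload["data"]["result"]]


# Hypothetical endpoint; substitute the query URL your provider gives you.
promql = "sum(rate(http_requests_total[5m])) by (method)"
url = instant_query_url("https://prometheus.example.com", promql)
print(url)

# Response shape used by the Prometheus HTTP API for instant vectors.
sample_body = json.dumps({
    "status": "success",
    "data": {"resultType": "vector", "result": [
        {"metric": {"method": "GET"}, "value": [1700000000, "12.5"]},
    ]},
})
print(parse_vector(sample_body))
```

Keeping URL construction and response parsing separate from the HTTP call makes it easy to add whatever request signing or token handling a given service requires.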

Optimizing Your Monitoring Strategy with SigNoz

To effectively monitor your applications and systems, leveraging an advanced observability platform like SigNoz can elevate your monitoring strategy. SigNoz is an open-source observability tool that provides end-to-end monitoring, troubleshooting, and alerting capabilities across your entire application stack.

Built on OpenTelemetry—the emerging industry standard for telemetry data—SigNoz integrates seamlessly with your existing Managed Prometheus setup. Using the OpenTelemetry Collector’s Prometheus receiver, SigNoz ingests Prometheus metrics and correlates them with distributed traces and logs, delivering a unified observability solution. This integration allows you to:

- Extend Prometheus Monitoring: Retain your existing Prometheus setup while enhancing it with tracing and logging capabilities.

- Faster Troubleshooting: Correlate metrics with traces and logs to quickly identify and resolve performance issues.

- Flexible Data Analysis: Use both PromQL and SigNoz's advanced query builder for versatile data exploration and analysis.

- Unified Dashboards: Build custom dashboards that bring together metrics, traces, and logs, helping you monitor key performance indicators and identify trends effectively.

For more information on integrating Prometheus metrics with SigNoz, check out the OpenTelemetry Collector Prometheus Receiver guide.

Benefits of using SigNoz for End-to-End Observability

In addition to extending Prometheus monitoring, SigNoz offers the following benefits:

- Unified Monitoring Across Metrics, Traces, and Logs: SigNoz consolidates all observability data—metrics, logs, and traces—into a single platform. This holistic view provides a deeper understanding of system health, enabling you to correlate events and metrics for better visibility and faster issue diagnosis.

- Efficient Root-Cause Analysis: By collecting and linking metrics, traces, and logs, SigNoz facilitates quick and precise root-cause analysis. You can trace requests across services and drill down into logs or metrics to pinpoint performance issues—all within one tool.

- Proactive Issue Detection with Real-Time Alerts: SigNoz supports setting up real-time alerts based on predefined thresholds or anomalies in your metrics. With these instant alerts, you can proactively address issues before they impact your users, improving uptime and reliability.

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

Performance and Scalability Considerations

As cloud-native applications scale, maintaining optimal performance in a managed Prometheus environment becomes crucial. Managed services handle much of the complexity behind the scenes, but there are still important performance and scalability factors to consider. Here’s an in-depth look at key considerations:

- High-volume metric ingestion: As your system grows, the number of metrics collected can grow rapidly. Properly managing high-volume metric ingestion is essential to prevent performance bottlenecks.

- Use Service Discovery: In dynamic environments, where containers and services are frequently spun up and down, Prometheus' service discovery feature can automatically find and scrape new targets. This minimizes manual configuration and ensures that you always have up-to-date metrics, even in rapidly changing environments.

- Implement Metric Aggregation and Pre-Processing: When dealing with large amounts of raw metrics, consider using aggregation techniques to reduce data volume. Aggregating at the source (e.g., summarizing data at specific intervals) can reduce the load on Prometheus and improve query performance, especially when fine-grained detail isn’t necessary.

- Leverage the Pushgateway: For workloads that cannot be scraped directly (e.g., short-lived batch jobs), the Prometheus Pushgateway lets a job push its metrics before it exits, and Prometheus then scrapes them from the gateway. This keeps metrics from intermittent workloads from being lost between scrapes.

- Query performance optimization: As your dataset grows, Prometheus queries (especially complex PromQL queries) can become resource-intensive. Optimizing query performance ensures that your monitoring dashboards remain responsive, even with large-scale deployments.

- Use Efficient PromQL Queries: Avoid overly complex queries that require heavy processing, especially for real-time monitoring. Simplify queries where possible by reducing the number of time series analyzed. Additionally, avoid using functions that require large amounts of data, such as rate() or histogram_quantile() over long time ranges.

- Implement Caching for Frequently Accessed Dashboards: Many managed services allow you to cache query results for frequently used dashboards. Caching ensures that users don’t repeatedly query the same data, reducing load on your Prometheus instance and improving response times.

- Query Federation for Large-Scale Deployments: In very large environments, splitting your Prometheus instances into multiple smaller, federated instances can help distribute the query load. Query federation allows you to run global queries across these instances while keeping query times manageable.

- Cost management: Managed Prometheus services can accumulate costs based on the volume of data ingested and retained, as well as the query resources consumed. Managing these aspects proactively can help you avoid unexpected expenses.

- Monitor Metric Volume and Adjust Retention Policies: Regularly review the number of metrics being ingested and adjust retention policies to balance the need for historical data with storage costs. Keeping metrics for too long can increase costs unnecessarily, especially if you don’t need to analyze older data.

- Use Metric Relabeling: Reduce unnecessary data by relabeling metrics at the collection stage. Prometheus allows you to drop unneeded labels or rename them to optimize storage. This can significantly reduce data volume, especially in environments where similar metrics are collected from multiple sources.

- Leverage Cloud Provider Cost Optimization Tools: Many cloud providers offer cost management tools that can help monitor and optimize usage. These tools can provide insights into where your monitoring infrastructure is consuming resources and recommend adjustments to save costs. For instance, you may identify opportunities to reduce storage costs by compressing old metrics or lowering data retention times.
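The aggregation and relabeling advice above comes down to one idea: fewer distinct label combinations means fewer time series to ingest, store, and pay for. The following is a minimal Python sketch of that effect, not a Prometheus API. The `endpoint` and `request_id` labels and the sample data are hypothetical, and `sum_without` is an illustrative helper that mimics the idea behind a PromQL `sum without (request_id)` aggregation.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, List, Set, Tuple

Labels = Dict[str, str]
Sample = Tuple[Labels, float]
SeriesKey = FrozenSet[Tuple[str, str]]


def sum_without(samples: List[Sample], dropped: Set[str]) -> Dict[SeriesKey, float]:
    """Remove the given labels and sum the values of series that collapse together."""
    totals: Dict[SeriesKey, float] = defaultdict(float)
    for labels, value in samples:
        # The remaining label pairs identify the aggregated series.
        key = frozenset((k, v) for k, v in labels.items() if k not in dropped)
        totals[key] += value
    return dict(totals)


# Hypothetical data: 3 endpoints x 100 request IDs = 300 series (metric name omitted).
samples: List[Sample] = [
    ({"endpoint": endpoint, "request_id": str(i)}, 1.0)
    for endpoint in ("/login", "/cart", "/checkout")
    for i in range(100)
]

aggregated = sum_without(samples, {"request_id"})
print(f"{len(samples)} series before, {len(aggregated)} series after")
# prints "300 series before, 3 series after"
```

In a real deployment this kind of reduction would normally happen in recording rules or in the scrape configuration's metric relabeling rules rather than in application code. Note that simply dropping a label is only safe when the remaining labels still identify each series uniquely; otherwise the collapsed series need to be aggregated, as the sketch does.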

Security and Compliance in Managed Prometheus

Managed Prometheus services often provide enhanced security features:

- Data encryption: Managed Prometheus services normally encrypt your metric data at rest and in transit to prevent unauthorized access during storage and transmission. This ensures that even if data is intercepted, it is unreadable without the encryption keys.

- Access control: Many services provide fine-grained access controls, which allow administrators to assign precise roles and permissions to users. This reduces exposure by ensuring that only authorized users can view or manage metrics.

- Audit logging: Managed services typically offer detailed audit logs, tracking who accessed the system, what changes were made, and when these actions occurred. This provides transparency and is essential for both security monitoring and compliance reporting.

- Compliance certifications: Many managed Prometheus providers support industry-standard security and compliance frameworks and regulations such as SOC 2, HIPAA, or GDPR. These attestations help show that the service meets regulatory requirements for data handling and privacy.

Best practices for securing your monitoring infrastructure:

- Principle of Least Privilege (PoLP): Grant users and services only the permissions they need. This lowers the likelihood of unauthorized access or unintentional misconfiguration and reduces the attack surface.

- Network Isolation: Place your Prometheus servers and endpoints behind network boundaries. Use VPNs, private subnets, or firewall rules to control who can access your metric collection systems. Restrict access only to trusted systems and users.

- Regular Security Audits: Routinely review your access control policies, audit logs, and system configurations. Ensure that outdated accounts are removed and that any potential vulnerabilities are addressed promptly.

- Encrypt Sensitive Metric Data: Be cautious when including sensitive information (such as user IDs, API keys, or IP addresses) in metric labels. Encrypt or sanitize this data to ensure that it doesn’t become a source of information leakage in your observability systems.
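The last practice above, sanitizing label values, can be sketched as a small filter applied before metrics are recorded. This is an illustrative Python example using only the standard library. The label names (`api_key`, `client_ip`, `user_id`), the `sanitize_labels` helper, and the secret handling are all assumptions made for the sketch, not part of any Prometheus client.

```python
import hashlib
import hmac
from typing import Dict

# Hypothetical policy: labels to remove entirely, and labels to pseudonymize.
DROP_LABELS = {"api_key", "client_ip"}
HASH_LABELS = {"user_id"}


def sanitize_labels(labels: Dict[str, str], secret: bytes) -> Dict[str, str]:
    """Return a copy of the labels with sensitive values dropped or replaced by a keyed hash."""
    clean: Dict[str, str] = {}
    for name, value in labels.items():
        if name in DROP_LABELS:
            continue  # never export this label
        if name in HASH_LABELS:
            # Keyed hash (HMAC-SHA256), truncated to keep label values short.
            digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()
            clean[name] = digest[:12]
        else:
            clean[name] = value
    return clean


raw = {"endpoint": "/cart", "user_id": "42", "api_key": "not-a-real-key"}
print(sanitize_labels(raw, secret=b"example-secret"))
```

Hashing pseudonymizes a value but does not reduce cardinality: a per-user label still creates one series per user, so dropping such labels is usually the better default for both privacy and cost.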

Future Trends in Cloud-Native Monitoring

The field of cloud-native monitoring is rapidly evolving:

- AI-powered anomaly detection: Artificial intelligence (AI) and machine learning (ML) are becoming increasingly important in monitoring. AI-powered anomaly detection uses machine learning algorithms to automatically identify unusual patterns in metrics that may indicate problems. This reduces the reliance on manual thresholds or static alerts, allowing systems to detect issues faster and more accurately, even in complex, dynamic environments. As AI becomes more sophisticated, this technology will help teams proactively manage performance and prevent outages before they escalate.

- Automated root cause analysis: Finding the root cause of an issue in cloud-native applications can be challenging due to the sheer volume of data from metrics, logs, and traces. Automated root cause analysis uses intelligent systems to correlate data across these sources and quickly identify the underlying cause of an issue. This trend is set to revolutionize troubleshooting by significantly reducing the time it takes to resolve incidents and helping teams make data-driven decisions to improve system reliability.

- Edge monitoring: As more firms use edge computing, in which data is processed closer to the source rather than centralized in the cloud, there is a greater demand for effective edge monitoring. This involves extending observability to edge environments, ensuring that even remote or distributed systems are monitored effectively. Edge monitoring allows organizations to gain insights into performance, security, and reliability at the edge, making it an essential trend for future cloud-native applications.

- eBPF-based monitoring: Extended Berkeley Packet Filter (eBPF) technology is emerging as a powerful tool for understanding system behavior. eBPF allows you to safely run custom monitoring programs directly in the Linux kernel, capturing high-fidelity data without the overhead typically associated with traditional monitoring agents. This approach enables more granular monitoring of system-level events, network traffic, and application performance, providing a more detailed understanding of system operations.

- Unified observability platforms: The future of monitoring is moving towards unified observability platforms that combine metrics, logs, and traces into a single, integrated solution. This convergence streamlines the monitoring process by offering a comprehensive view of the complete system in a single platform, removing the need to manage several tools. Unified platforms make it easier to understand how different aspects of the system are interconnected, improving both troubleshooting and optimization efforts.

As these trends develop, managed Prometheus services will likely adapt to incorporate new capabilities and address emerging challenges in cloud-native environments.

Key Takeaways

- By handling infrastructure management and scaling, Managed Prometheus services reduce the operational burden on your team. This allows you to focus on monitoring and optimizing your applications rather than managing complex infrastructure.

- These services offer automated setup, effortless scalability, and seamless integration with cloud ecosystems. This makes it easier to deploy and manage Prometheus for monitoring cloud-native applications without needing deep expertise in infrastructure management.

- Leading cloud providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud offer robust managed Prometheus services. Additionally, specialized platforms like Grafana Cloud provide tailored solutions, each with unique features to suit different use cases.

- Implementing Managed Prometheus involves choosing the right provider, configuring data sources to gather metrics, and setting up dashboards and alerts for monitoring. This ensures you have real-time insights into your application’s performance and health, with minimal manual intervention.

- SigNoz works alongside Managed Prometheus, enhancing your observability capabilities by offering additional features such as distributed tracing and better visualization. It provides a more comprehensive view of your system's performance, making it easier to troubleshoot issues.

- When implementing Managed Prometheus, it's important to consider factors like performance, scalability, and security. Ensuring the system can handle growing workloads, while maintaining secure and compliant data management, is critical to a successful deployment.

- The future of monitoring is moving towards AI-driven insights and unified observability platforms that combine metrics, logs, and traces in one solution. This will lead to smarter, more automated monitoring, helping teams identify and resolve issues faster.

FAQs

What are the main differences between self-hosted and managed Prometheus?

Managed Prometheus and self-hosted Prometheus differ in several key ways:

- Infrastructure Management:

- Managed Prometheus services handle all aspects of infrastructure management, including server setup, scaling, and maintenance. This reduces the burden on your team and ensures that the system is always up-to-date and performing optimally.

- In contrast, self-hosted Prometheus requires you to manage these tasks yourself, which can be resource-intensive and complex.

- Scalability and High Availability:

- Managed Prometheus services come with built-in scalability and high availability features. They automatically adjust resources to handle increased metric volumes and include redundancy and failover mechanisms to prevent downtime.

- Self-hosted Prometheus requires manual configuration for scaling and high availability, which can be challenging to implement and maintain effectively.

- Security Features:

- Managed services often provide enhanced security features such as encryption, access controls, and compliance with industry standards. This is handled by the service provider, ensuring that your data is protected.

- With self-hosted Prometheus, you need to implement and manage these security measures yourself, which requires additional expertise and effort.

- Customization:

- Self-hosted Prometheus allows for greater customization in terms of configuration and integration with other systems. If your monitoring needs require specific adjustments or integrations, self-hosted Prometheus offers more flexibility.

- Managed Prometheus services, while convenient, may have limitations on customization compared to a self-hosted setup.

How does managed Prometheus handle data retention and long-term storage?

Managed Prometheus services address data retention and long-term storage through:

- Tiered Storage Options: Many managed services use tiered storage, where data is moved to different storage layers based on its age and access frequency. Recent data might be kept in high-performance storage for quick access, while older data is moved to more cost-effective, long-term storage. This approach helps manage costs while retaining important historical data.

- Automatic Data Lifecycle Management: Managed services often automate the process of managing data lifecycle. This means that the system automatically handles data retention policies, such as how long to keep data and when to archive or delete it. This automation reduces the need for manual intervention and ensures compliance with data retention requirements.
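To get a feel for how retention settings translate into stored data, a rough back-of-the-envelope estimate can help. The Python sketch below assumes every active series produces one sample per scrape interval. The function names and example figures are hypothetical, and the 2-bytes-per-sample default is an assumption based on the 1 to 2 bytes per sample that the Prometheus documentation cites for its own local storage; managed services may store and bill data differently.

```python
def estimate_stored_samples(active_series: int, scrape_interval_s: int, retention_days: int) -> int:
    """Approximate number of samples retained: one sample per series per scrape."""
    retention_seconds = retention_days * 86_400
    return active_series * retention_seconds // scrape_interval_s


def estimate_storage_gb(samples: int, bytes_per_sample: float = 2.0) -> float:
    """Approximate storage in GB; bytes_per_sample is an assumed average."""
    return samples * bytes_per_sample / 1e9


# Hypothetical workload: 100,000 active series scraped every 30 seconds, kept for 15 days.
samples = estimate_stored_samples(active_series=100_000, scrape_interval_s=30, retention_days=15)
print(samples)  # prints 4320000000
print(f"{estimate_storage_gb(samples):.2f} GB")
```

Because the estimate is linear in each input, halving the retention period or the number of active series halves the stored volume, which is why retention and cardinality are the first two levers to review.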

Can I use my existing Prometheus exporters with managed Prometheus services?

Yes, most managed Prometheus services are compatible with standard Prometheus exporters. This compatibility allows you to continue using your existing exporters and monitoring configurations with minimal changes. Since exporters expose metrics in the standard Prometheus exposition format, they can generally be integrated into managed services without significant modifications.

How do managed Prometheus services ensure high availability and disaster recovery?

Managed Prometheus services ensure high availability and disaster recovery through:

- Redundancy: They typically implement redundancy across multiple availability zones or regions. This means that if one zone or region experiences issues, the system can continue to operate from another, minimizing downtime.

- Automatic Failover Mechanisms: These services have built-in failover mechanisms that automatically switch to backup systems or instances if the primary system fails. This ensures that monitoring continues without interruptions.

- Regular Backups: Managed services perform regular backups of data to protect against data loss. In case of infrastructure failures or other issues, these backups can be used to restore data and resume normal operations.

By leveraging these features, managed Prometheus services provide robust solutions for maintaining continuous monitoring capabilities and safeguarding against data loss.