Essential Guide to Remote Server Monitoring in 2026

Remote server monitoring is the process of continuously observing the health and performance of servers and applications from an external location. It enables teams to track key metrics (like CPU usage, memory, and response times), collect error logs, trigger alerts on abnormal conditions, and even automate responses. At its core, monitoring provides visibility into a system, which is essential for judging service health and diagnosing issues.

SREs and developers rely on monitoring to:

- Track performance metrics: CPU, memory, disk I/O, network throughput, and application-specific metrics (like request latency or throughput) over time.

- Log and analyze errors: Collect application logs, system logs, and error traces to debug failures and exceptions.

- Alert on issues: Automatically notify on-call engineers when defined thresholds are breached or anomalies are detected, so they can respond quickly.

- Automate remediation: Trigger automated workflows or scripts in response to certain alerts (for example, restart a service or scale out resources) to reduce manual intervention.

In cloud environments, servers may be distributed across data centers or regions, so collecting telemetry remotely over the network is often the only practical way to observe them. The goal is to detect problems early and minimize downtime or performance degradation for end users.
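As a rough illustration of what a check "from an external location" can look like, here is a minimal sketch of a TCP reachability probe using only the Python standard library. The function name `check_tcp` and the returned field names are assumptions made for this example, not part of any particular monitoring tool.

```python
import socket
import time


def check_tcp(host: str, port: int, timeout: float = 3.0) -> dict:
    """Try to open a TCP connection and report whether it succeeded and how long it took."""
    start = time.monotonic()
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            reachable = True
    except OSError:
        # Covers refused connections, timeouts, and DNS failures.
        reachable = False
    connect_ms = (time.monotonic() - start) * 1000
    return {
        "host": host,
        "port": port,
        "reachable": reachable,
        "connect_ms": round(connect_ms, 1),
    }
```

A scheduler could call `check_tcp("server.example.com", 443)` (a placeholder hostname) every minute and feed the result into whatever alerting pipeline is in use.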

Key Aspects of Remote Monitoring

Effective remote server monitoring involves more than just tracking performance metrics; it requires a comprehensive approach encompassing system resources, application behavior, security measures, and rapid response mechanisms. By addressing these core components, organizations can ensure their IT infrastructure remains reliable, secure, and efficient.

1. System Resource Tracking

Monitoring system resources forms the foundation of server monitoring, ensuring that critical components such as CPU, memory, disk storage, and network performance operate within optimal thresholds. Consistently tracking these metrics helps identify bottlenecks, prevent resource exhaustion, and maintain overall system stability.

Key metrics to monitor:

- CPU Utilization: High CPU usage often signals resource bottlenecks or misconfigured applications. Sustained usage above 85% can lead to performance degradation, slower application response times, or system instability. Monitoring CPU utilization helps ensure critical processes have the resources to run efficiently.

- Memory Usage: Monitoring memory consumption is essential to prevent issues like memory leaks, application crashes, or slow performance caused by insufficient available memory. A system running low on memory may experience significant slowdowns, particularly during peak usage. Tracking memory metrics helps maintain smooth operations and prevent resource exhaustion.

- Disk I/O and Storage: Disk I/O and storage monitoring ensure that read/write operations and available storage capacity are sufficient for system demands. High disk I/O can result in delays for applications accessing data, while nearly full disks can cause disruptions or prevent the saving of critical data. Regular monitoring allows teams to address these issues before they impact performance.

- Network Performance: Metrics like bandwidth usage, packet loss, and latency are crucial for maintaining reliable communication between servers and users. Network congestion or hardware issues often manifest as high latency or dropped packets, directly affecting application performance. Monitoring these metrics ensures stable and efficient data flow across the network.
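To make the resource metrics above a little more concrete, the following sketch reads a few of them with the Python standard library. It is deliberately small: `host_snapshot` and its field names are hypothetical, and a production agent (for example the OTel Collector used later in this guide) gathers far more detail.

```python
import os
import shutil


def host_snapshot(path: str = "/") -> dict:
    """Collect a few host-level readings using only the standard library."""
    usage = shutil.disk_usage(path)
    snapshot = {
        # Share of the filesystem at `path` that is in use.
        "disk_used_pct": round(usage.used / usage.total * 100, 1),
        "cpu_count": os.cpu_count(),
    }
    try:
        # 1-minute load average; only available on Unix-like systems.
        snapshot["load_1m"] = os.getloadavg()[0]
    except (AttributeError, OSError):
        snapshot["load_1m"] = None
    return snapshot
```

Comparing `load_1m` against `cpu_count` gives a rough sense of CPU pressure: a load that stays well above the core count suggests work is queuing.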

2. Application Performance Monitoring

Applications are central to most IT infrastructures, and their performance directly impacts user experience. Application Performance Monitoring (APM) tools focus on application-specific metrics, providing valuable insights to optimize performance and identify issues.

Critical APM metrics:

- Response Times: This metric measures how long it takes for an application to respond to user requests. For example, if response times exceed 2 seconds, it could indicate performance issues like high server load or slow database queries, affecting user experience.

- Error Rates: Error rates track the frequency of issues such as failed requests or crashes. An increase in errors, such as 500 Internal Server Errors, may point to problems in the application code or server configuration, requiring immediate attention.

- User Experience Indicators: Metrics like page load times and transaction success rates help gauge how users perceive the application's performance. Slow load times or failed transactions can lead to user frustration and decreased engagement, highlighting areas for improvement.

- Request Rate (Throughput): Measures the volume of requests processed by the application, helping to identify traffic patterns and capacity requirements.

Google's SRE philosophy recommends focusing on the "Four Golden Signals" for any service: Latency, Traffic, Errors, and Saturation. These fundamental metrics provide a comprehensive view of service health and performance, enabling teams to quickly detect abnormalities and respond appropriately.

In practice, this means measuring how long requests take (latency), how much demand is hitting the system (traffic, or request rate), how many requests fail (errors), and how close resources such as CPU and memory are to their limits (saturation). Tracking these over time helps in capacity planning and in detecting abnormal behavior quickly.
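The four signals can be computed from very little raw data. The sketch below assumes a list of request records for one time window plus a CPU reading supplied by the caller; the `Request` type, the choice of the 95th percentile, and treating HTTP 5xx as errors are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Request:
    duration_ms: float
    status: int


def golden_signals(requests, window_seconds, cpu_used_pct):
    """Summarize one time window of requests into the four golden signals."""
    durations = sorted(r.duration_ms for r in requests)
    errors = sum(1 for r in requests if r.status >= 500)
    if durations:
        # Nearest-rank 95th percentile: ceil(0.95 * n) in integer arithmetic.
        rank = (95 * len(durations) + 99) // 100
        p95 = durations[rank - 1]
    else:
        p95 = None
    return {
        "latency_p95_ms": p95,
        "traffic_rps": len(requests) / window_seconds,
        "error_rate": errors / len(requests) if requests else 0.0,
        "saturation_pct": cpu_used_pct,
    }
```

Percentiles are used for latency here rather than the mean because a small number of very slow requests can hide behind a healthy-looking average.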

3. Error Logging and Analysis

Beyond metrics, comprehensive log collection and analysis are crucial for effective monitoring. Logs capture detailed information about events and errors that might not appear as metric anomalies.

Key logging components:

- Centralized Log Aggregation: Implementing a centralized logging system where servers send their logs to a remote management system enables teams to search and filter logs across distributed systems. Tools like Fluentd, Logstash, or cloud-native solutions facilitate this process.

- Structured Logging: Using structured formats like JSON for logs makes them easier to parse, query, and analyze. This approach enables more efficient troubleshooting, such as counting specific error occurrences or identifying patterns.

- Log Correlation: Correlating logs with metrics provides richer context for troubleshooting. For instance, seeing that an error log entry coincided with a latency spike can help pinpoint the cause of performance issues.
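Structured logging is straightforward to try out with Python's built-in `logging` module. The sketch below emits one JSON object per log line; the `JsonFormatter` class and the optional `request_id` field are hypothetical names chosen for this example.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record):
        payload = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Hypothetical correlation field, passed via logger.error(..., extra={...}).
        if hasattr(record, "request_id"):
            payload["request_id"] = record.request_id
        return json.dumps(payload)


def build_logger(name: str = "app") -> logging.Logger:
    """Create a logger that writes JSON lines to stderr."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger
```

With a shared field such as `request_id` in every line, a log aggregator can filter all entries for one request and line them up against a latency spike seen in the metrics.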

4. Security Monitoring

Security is a critical aspect of remote server monitoring. Integrating security features into monitoring tools helps protect servers from potential breaches and ensures compliance with regulatory standards.

Key security components:

- Intrusion Detection Systems (IDS): IDSs are designed to identify unauthorized access attempts or unusual activity patterns that could signal a security breach. These systems monitor network traffic, server logs, and user behavior for anomalies, such as multiple failed login attempts or access from untrusted IP addresses. Early detection through IDS helps mitigate potential threats before they escalate.

- Log Analysis: Analyzing server logs is critical for identifying anomalies or suspicious activities. Logs provide detailed records of server events, such as login attempts, system changes, and error reports. Repeated login failures, unexpected modifications to sensitive files, or unusual patterns of access are common indicators of security threats. Regular log analysis ensures that any signs of compromise are promptly detected and addressed.

- Compliance Checks: Compliance checks ensure that servers adhere to regulatory standards like GDPR, HIPAA, or PCI-DSS. These standards often mandate strict guidelines for data protection, encryption, and user privacy. Regularly auditing server configurations and monitoring compliance ensures not only the security of sensitive information but also helps avoid legal penalties or reputational damage resulting from non-compliance.
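The "repeated login failures" signal mentioned above can be approximated with a few lines of log parsing. This is only a sketch: the log line shape is loosely modeled on sshd authentication logs and should be treated as an assumption, as should the threshold of five failures.

```python
import re
from collections import Counter

# Assumed log line shape, loosely modeled on sshd auth logs.
FAILED_LOGIN = re.compile(r"Failed password for (?:invalid user )?(\S+) from (\S+)")


def suspicious_sources(lines, max_failures=5):
    """Return source addresses with at least `max_failures` failed logins."""
    failures = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            # group(2) is the source address; group(1) is the username.
            failures[match.group(2)] += 1
    return {ip: count for ip, count in failures.items() if count >= max_failures}
```

A dedicated intrusion detection system does much more than this, but the same idea (count events per source, flag outliers) underlies many of its rules.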

5. Alerting and Notification Systems

A robust alerting and notification system ensures that IT teams are promptly informed of critical issues. It minimizes downtime by enabling quick responses to potential threats or performance bottlenecks.

Key features include:

- Thresholds: Defining thresholds for critical metrics is essential to trigger timely alerts when systems approach or exceed operational limits. For example, alerting when CPU usage rises above 90% or free disk space falls below 10% ensures that IT teams are notified before these metrics reach levels that could lead to performance degradation or system failure. Clearly defined thresholds help prioritize responses to issues based on their severity.

- Multi-Channel Notifications: A robust alerting system uses multiple communication channels—such as email, SMS, and push notifications—to deliver alerts promptly to the right team members. This redundancy ensures that critical alerts are not missed, even if one communication channel fails. For instance, a server experiencing high latency can trigger notifications via all channels, enabling swift action to minimize downtime.

- Escalation Procedures: Tiered escalation workflows are vital for ensuring that unresolved issues are addressed at higher levels of responsibility. If an alert remains unresolved within a set timeframe, it is automatically escalated to senior personnel or backup teams. This approach ensures accountability and prevents critical incidents from being overlooked, maintaining system reliability and uptime.

- Alert Severity Levels: Implementing different severity levels (info, warning, critical) helps distinguish minor issues from major incidents. This classification enables teams to prioritize their response efforts effectively.
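Severity levels and escalation tiers both reduce to small lookup rules. The sketch below shows one way to express them; the threshold values passed to `classify` and the escalation ladder (who is notified after how many minutes) are illustrative assumptions, not recommendations.

```python
def classify(value, warning_at, critical_at):
    """Map a metric reading to a severity level."""
    if value >= critical_at:
        return "critical"
    if value >= warning_at:
        return "warning"
    return "info"


# Hypothetical escalation ladder: (minutes unacknowledged, who to notify).
ESCALATION = [
    (0, "on-call engineer"),
    (15, "team lead"),
    (30, "engineering manager"),
]


def escalation_target(minutes_unacknowledged):
    """Return the highest tier whose waiting time has elapsed."""
    target = ESCALATION[0][1]
    for after_minutes, who in ESCALATION:
        if minutes_unacknowledged >= after_minutes:
            target = who
    return target
```

For example, a CPU reading of 92 with `warning_at=80` and `critical_at=90` is classified as critical, and if nobody acknowledges it for 20 minutes the alert is routed to the team lead.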

6. Automation in Monitoring

Automation plays a crucial role in modern monitoring systems, reducing manual effort and accelerating incident response.

Key automation aspects:

- Auto-Discovery: Modern monitoring platforms can automatically discover and register new servers or services, ensuring they're monitored without manual intervention. This is particularly valuable in dynamic cloud environments.

- Auto-Remediation: Predefined actions can execute automatically when certain conditions occur. For example, a system might restart an unresponsive service, clean up disk space when storage is low, or scale out resources during high load periods.

- Alert Processing: Automation can filter, group, and route alerts intelligently. For instance, related alerts can be consolidated into a single notification to prevent alert fatigue, or alerts can be automatically classified by urgency to prioritize response efforts.
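Consolidating related alerts can be as simple as grouping by a shared attribute. The sketch below groups by host and keeps the worst severity; the alert dictionary keys (`host`, `name`, `severity`) are assumptions for this example, and real platforms typically group on richer labels.

```python
from collections import defaultdict

SEVERITY_ORDER = {"info": 0, "warning": 1, "critical": 2}


def group_alerts(alerts):
    """Collapse alerts that share a host into one notification per host."""
    by_host = defaultdict(list)
    for alert in alerts:
        by_host[alert["host"]].append(alert)

    notifications = []
    for host, items in by_host.items():
        # The notification inherits the most severe level in the group.
        worst = max(items, key=lambda a: SEVERITY_ORDER[a["severity"]])
        notifications.append({
            "host": host,
            "severity": worst["severity"],
            "summary": "; ".join(a["name"] for a in items),
        })
    return notifications
```

Three alerts from two hosts become two notifications, which is the kind of reduction that helps keep on-call engineers from tuning alerts out.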

How to Implement a Robust Remote Server Monitoring Strategy

Implementing an effective remote server monitoring strategy involves several key steps:

Assess Your Infrastructure: Create an inventory of all servers, applications, and devices in your network. Identify critical systems and map dependencies between them to prioritize monitoring efforts.

Define Monitoring Goals: Establish performance baselines representing normal operating conditions for your servers. Define measurable objectives like maintaining 99.9% uptime or ensuring application response times under 2 seconds during peak traffic.

Select Appropriate Monitoring Tools: Choose solutions based on your infrastructure, needs, and budget. For small setups, tools like SigNoz, Nagios, or Zabbix might suffice. Larger enterprises may need platforms like Datadog or SolarWinds that offer scalability and integration capabilities.

Establish Baselines and Set Alerts: Use monitoring tools to determine typical performance metrics and configure alerts for significant deviations. For example, set notifications when CPU usage exceeds 90% for more than 10 minutes or free disk space falls below 15%.

Develop Incident Response Procedures: Create clear escalation protocols and document troubleshooting steps for common issues. Specify who gets notified at each stage of an incident and outline resolution procedures.

Train Your Team: Ensure staff understand how to use monitoring systems, interpret alerts, and follow response protocols. Run simulated drills to prepare for real-world scenarios and build confidence in handling incidents.

Regularly Review and Adjust Your Strategy: As your infrastructure evolves, review and update your monitoring approach. Incorporate feedback from incidents to refine procedures and improve system reliability.
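The alert rule from the baseline step, "CPU usage exceeds 90% for more than 10 minutes", is a sustained-threshold check rather than a single comparison. Here is a minimal sketch of that logic, assuming samples arrive as `(timestamp_seconds, value)` pairs in time order; the function name `breached_for` is hypothetical.

```python
def breached_for(samples, threshold, min_duration_s):
    """Return True if the value stayed above `threshold` for at least `min_duration_s`.

    `samples` is a list of (timestamp_seconds, value) pairs ordered by time.
    """
    breach_start = None
    for ts, value in samples:
        if value > threshold:
            if breach_start is None:
                breach_start = ts  # first sample of the current breach
            if ts - breach_start >= min_duration_s:
                return True
        else:
            # Any sample at or below the threshold resets the clock.
            breach_start = None
    return False
```

Requiring the breach to persist is what keeps a brief spike, such as a nightly backup, from paging anyone.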

Leveraging SigNoz for Advanced Remote Server Monitoring

Remote server monitoring is essential for maintaining the health, performance, and security of modern IT infrastructures. Whether your servers are hosted in the cloud, on-premises, or in a hybrid setup, having a comprehensive monitoring strategy ensures minimal downtime and efficient operations.

SigNoz is a powerful observability platform that simplifies remote server monitoring. With its ability to monitor infrastructure, applications, and user experience in one unified platform, SigNoz provides the visibility teams need to keep their systems running smoothly. By the end of this walkthrough, you’ll have a clear understanding of how to use SigNoz to monitor your server resources, application performance, and system health in real-time.

Follow the steps outlined below to set up and leverage SigNoz effectively for advanced remote server monitoring:

Create a SigNoz Cloud Account: SigNoz Cloud provides a 30-day free trial period. This demo uses SigNoz Cloud, but you can choose to use the open-source version.

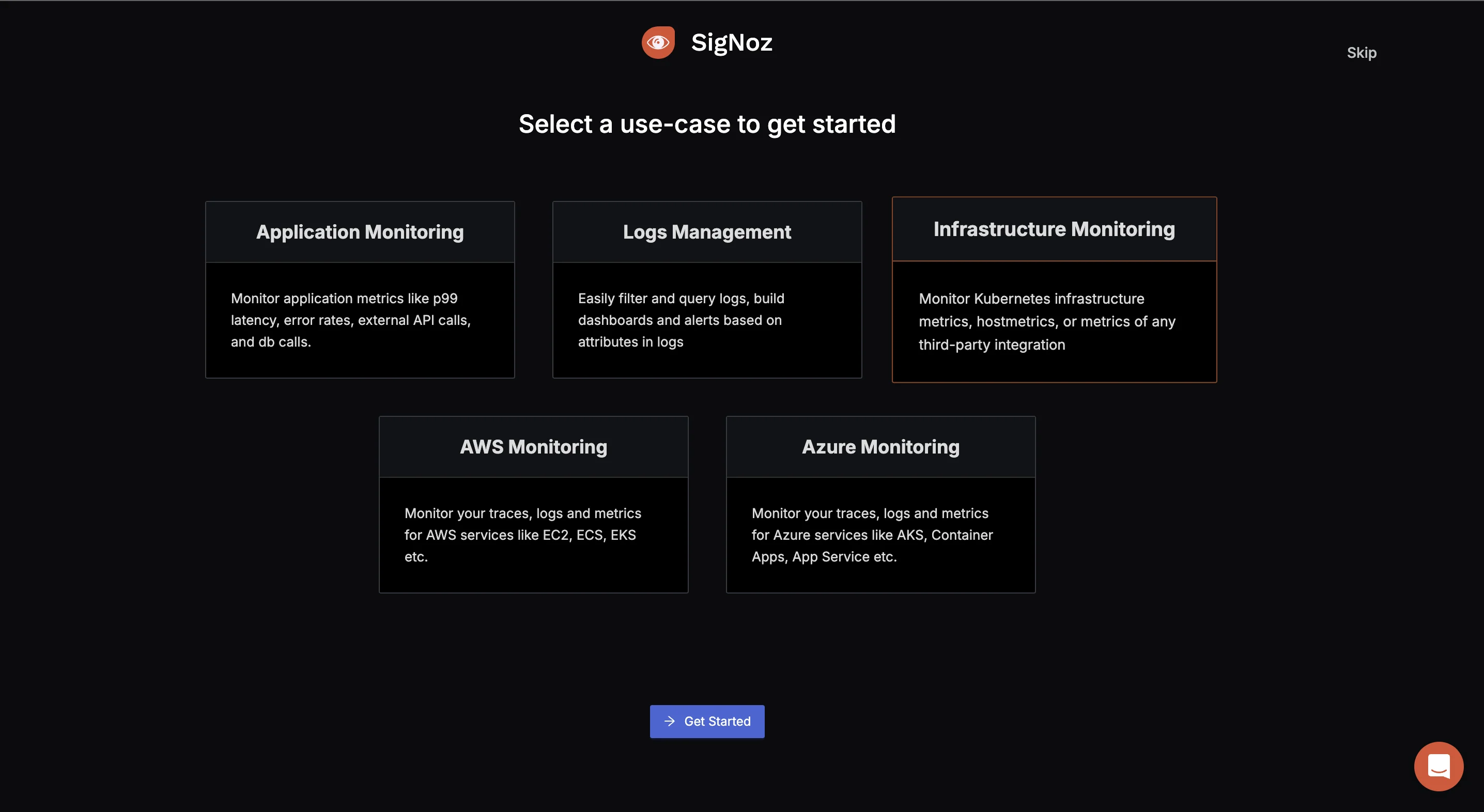

Using SigNoz Infrastructure Monitoring: In the top left corner, click on Get Started. You are redirected to the “Get Started” page. Choose Infrastructure Monitoring.

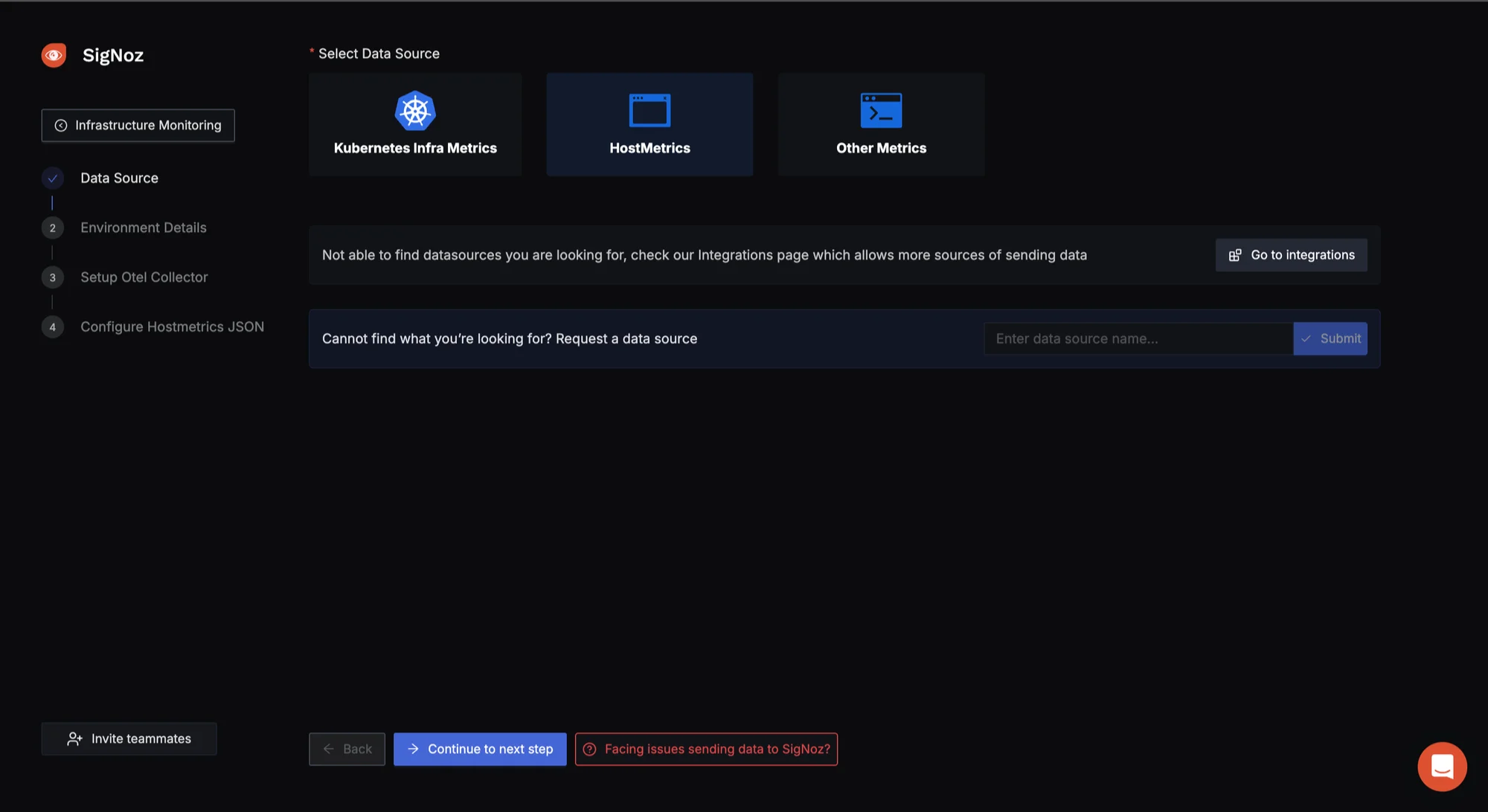

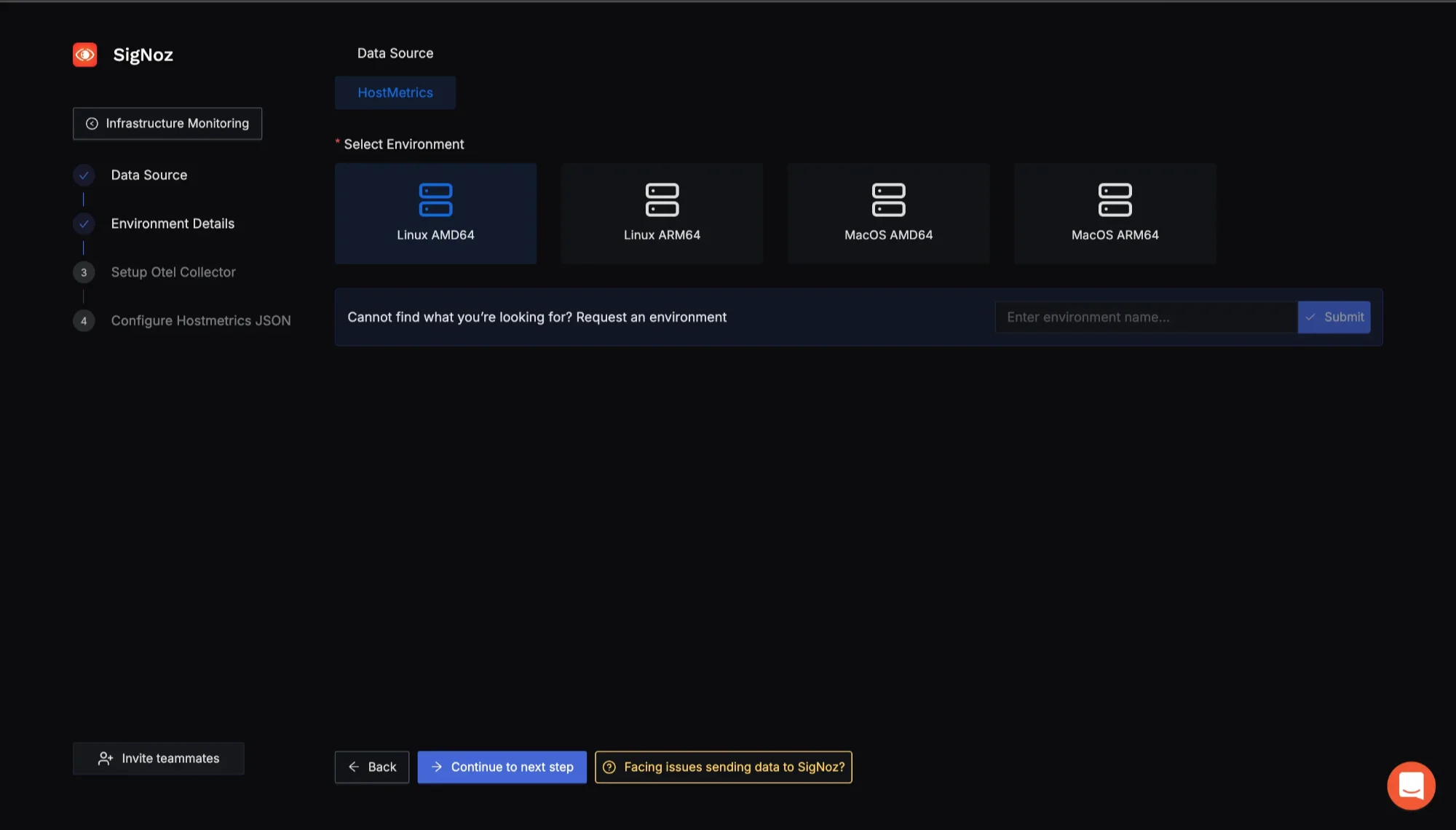

Select Data Source: You must select the data source. Since the metrics to be collected here are from the host system, select HostMetrics. Then choose the environment of your host system. For this demo, I am using Ubuntu AMD64.

Set up the OTel Collector as an agent: For more information, refer to OpenTelemetry Binary Usage in Virtual Machine (VM) to get your OTel Collector agent up and running.

Note: Download the latest version of the OTel Collector that matches your host operating system.

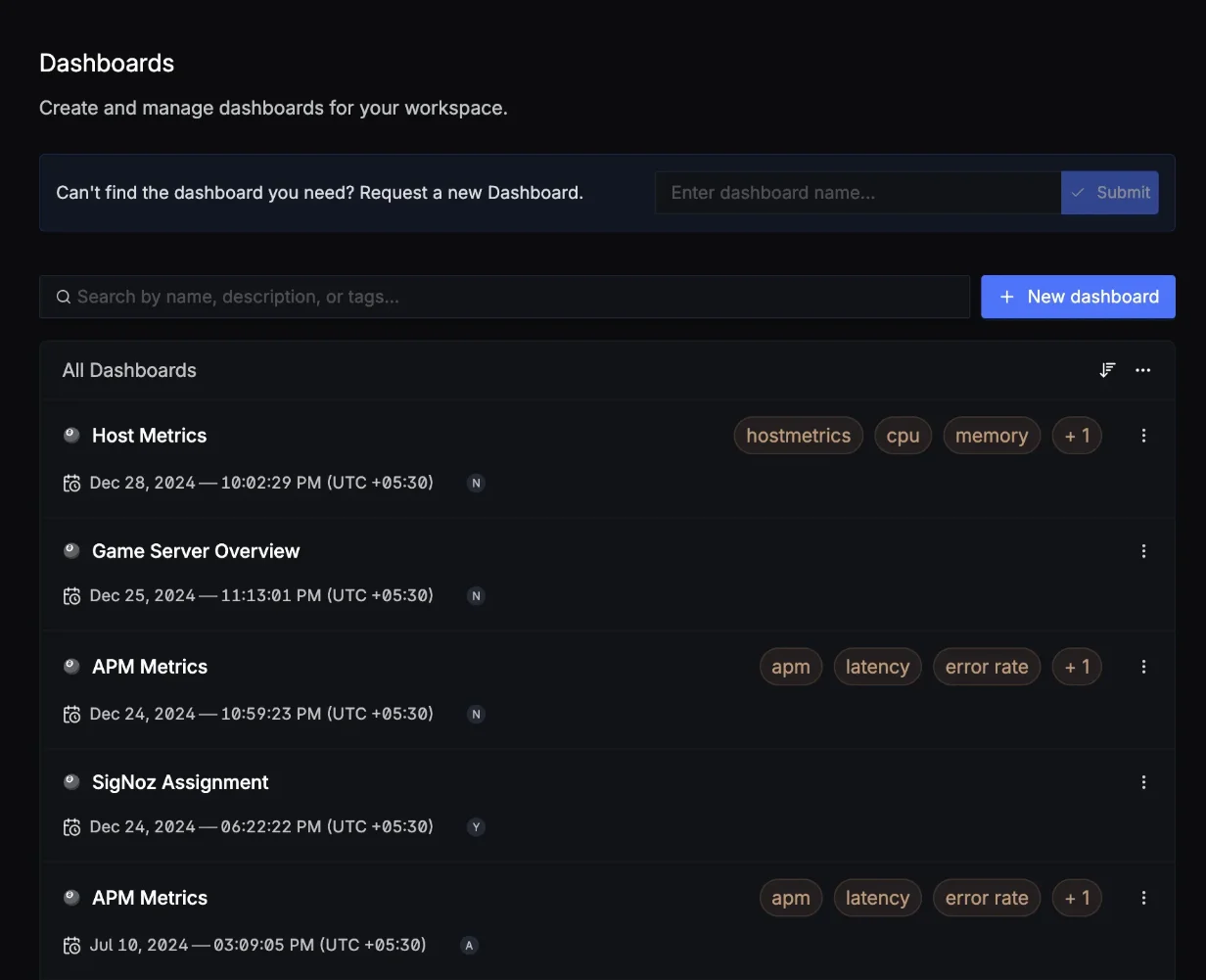

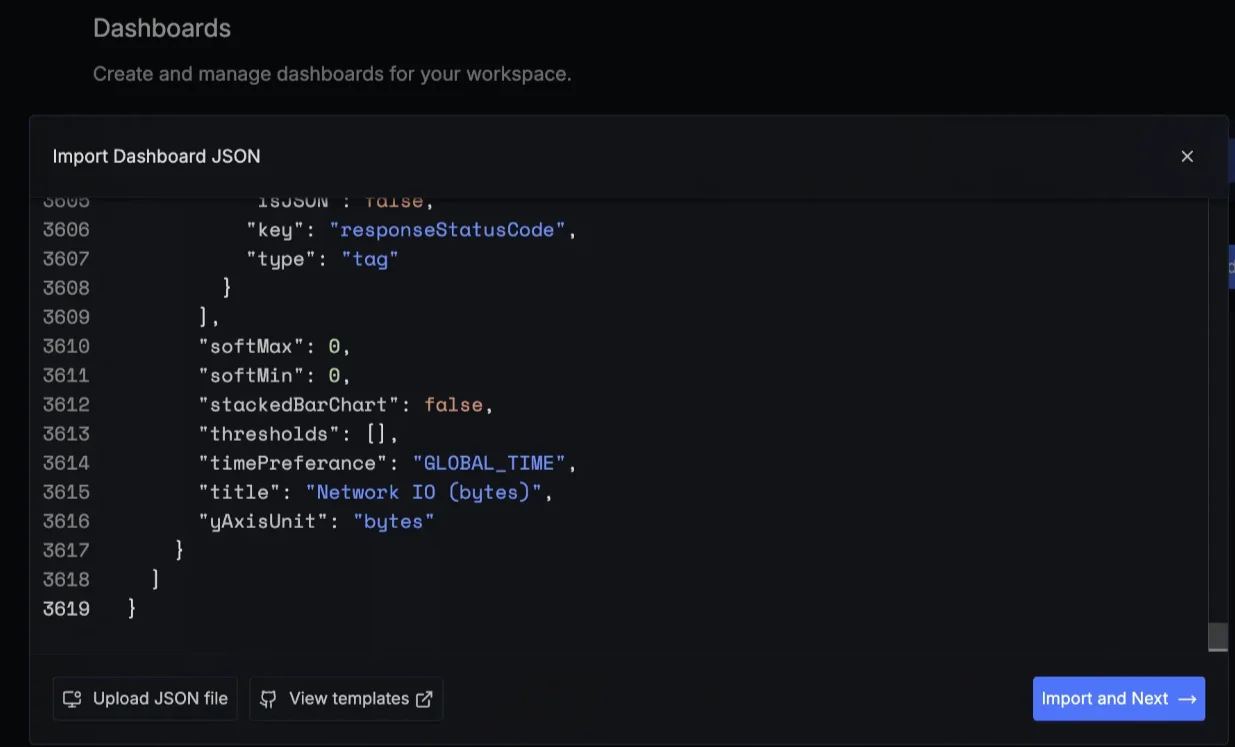

Import Dashboard: Click New Dashboard and then import a JSON file to create the dashboard from an existing definition. For this demo we are using the SigNoz Hostmetrics JSON Dashboard. For more information, refer to the Hostmetrics documentation. Once done, click Import and Next. You will be directed to the dashboard you just created.

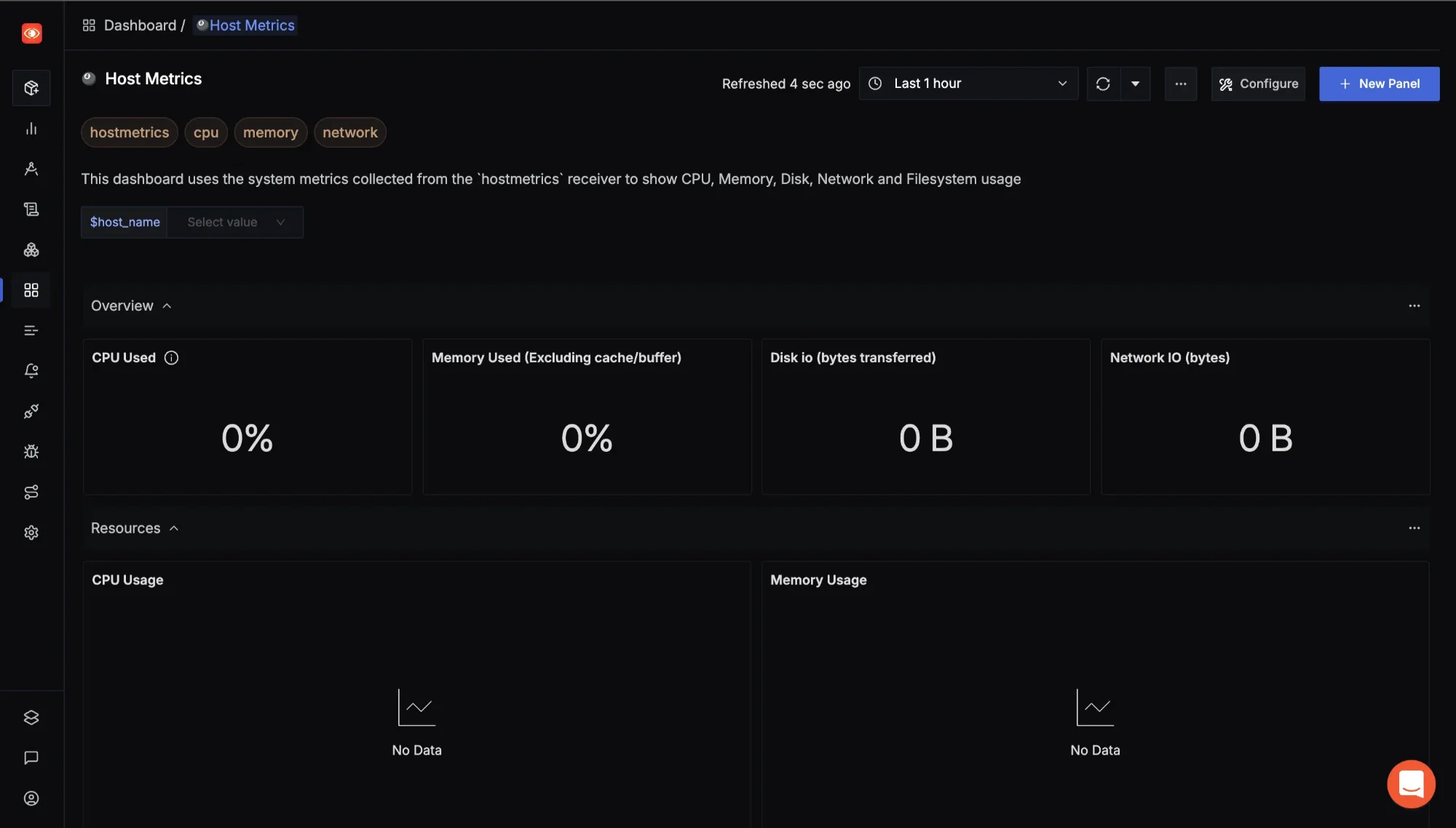

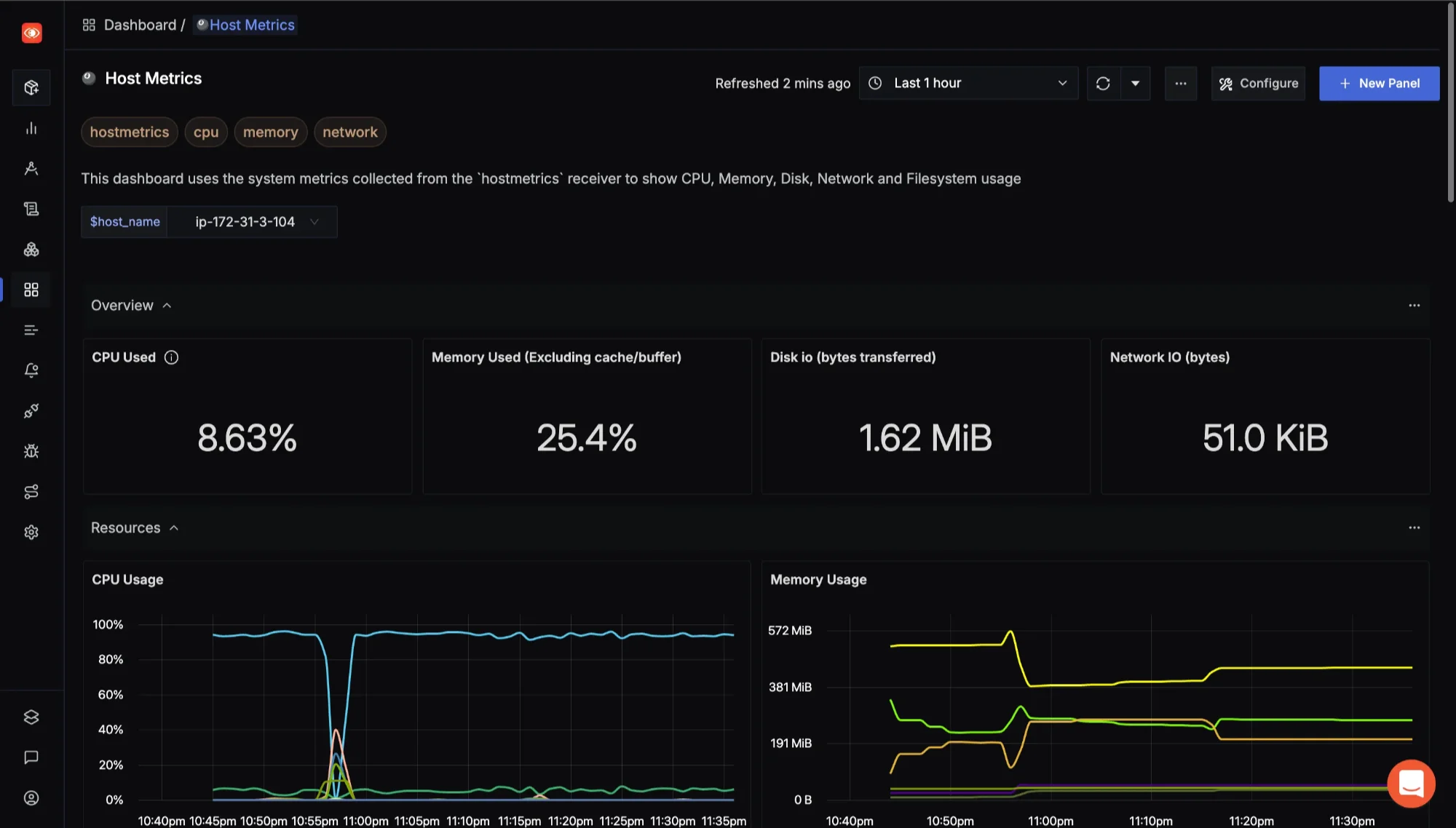

Select host_name for the dashboard view: For the $host_name variable, select the host on which you installed the OTel Collector agent. If the agent is exporting properly, you can see your remote server metrics. To learn more, refer to Manage Dashboards and Panels.

Infrastructure Monitoring Support:

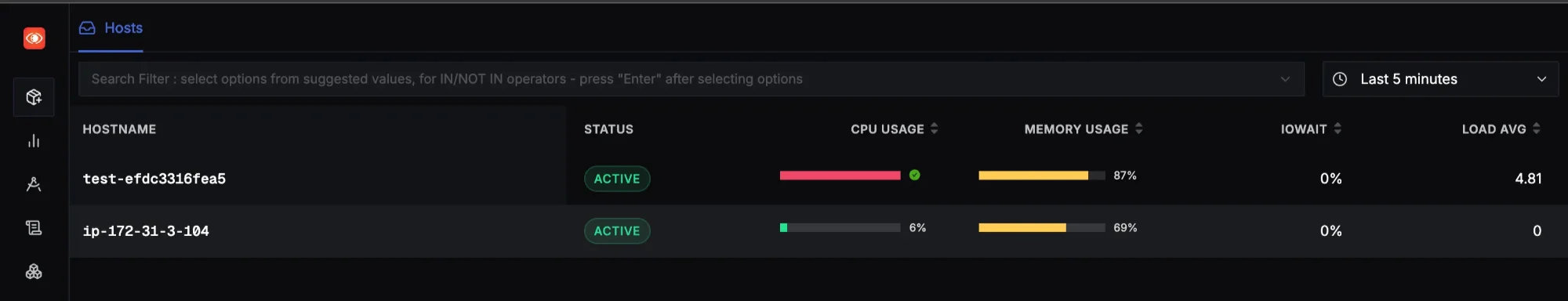

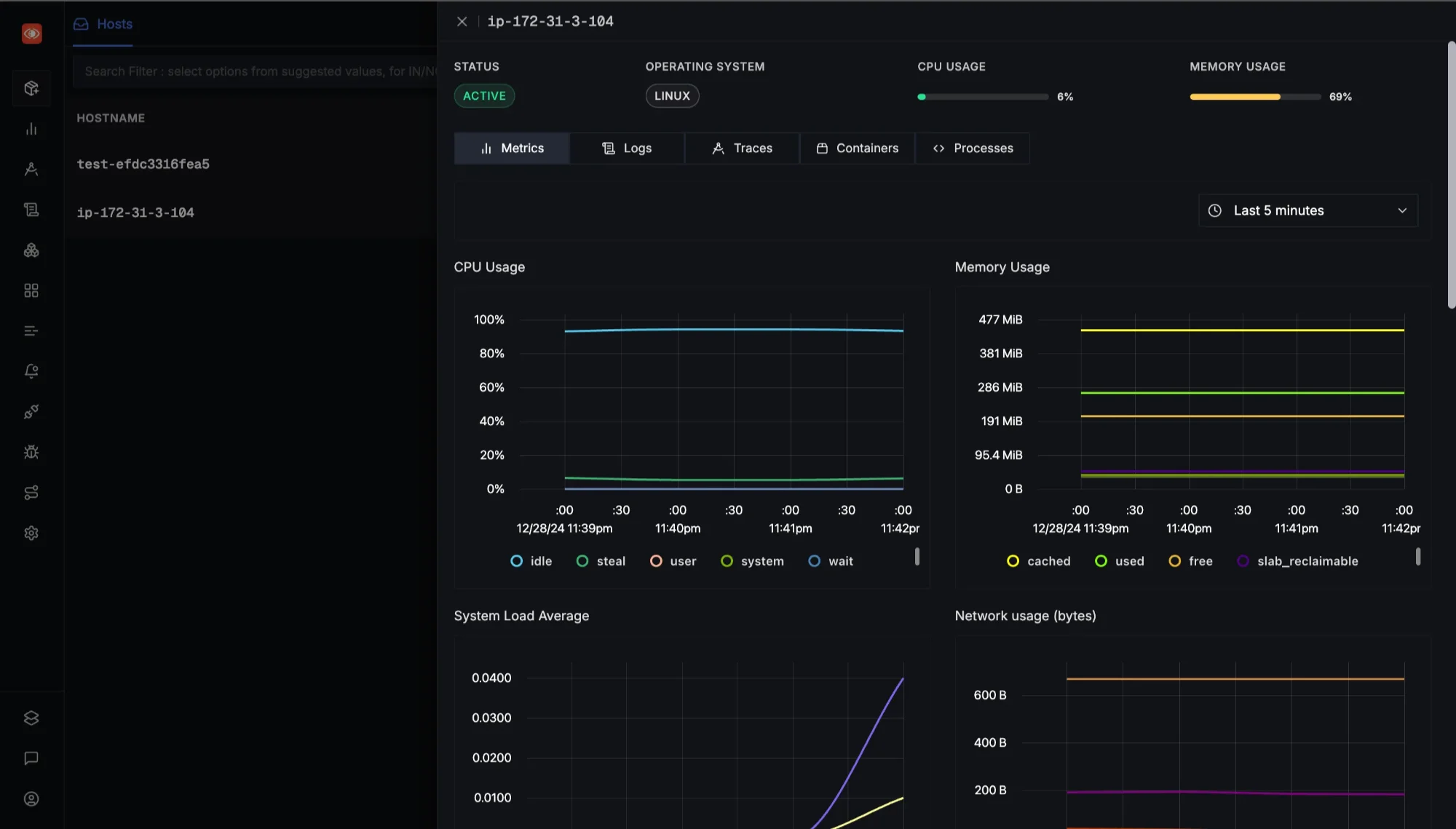

Click SigNoz sidebar >> Infra Monitoring to view all the available hosts. SigNoz Cloud offers dedicated support for infrastructure monitoring.

Click on the desired Host Name to open its corresponding dashboard.

The dashboard displays comprehensive host metrics data for your selected host. This feature ensures you can easily monitor and analyze infrastructure metrics for efficient system performance management.

To learn more about how to send infra metrics to SigNoz, you can follow our user guide.

Key Benefits of Remote Server Monitoring

- Proactive Issue Detection: Remote monitoring enables early detection of potential issues, such as resource bottlenecks, failing hardware, or software errors. By identifying these problems before they escalate, IT teams can take preventive action to avoid costly disruptions. For example, monitoring disk usage can help identify when storage is nearing capacity, allowing for timely upgrades.

- Improved Uptime: Server downtime can lead to significant revenue loss, especially for businesses dependent on 24/7 operations. Remote monitoring tools ensure IT teams receive instant alerts when performance drops or outages occur, enabling rapid responses. This minimizes the impact of downtime on customers and business operations.

- Resource Optimization: Remote server monitoring enables IT teams to track CPU, memory, and network usage in real time, helping them optimize resource allocation. This prevents overprovisioning and reduces operational costs while ensuring critical applications have the resources they need. For example, detecting idle virtual machines through continuous monitoring allows teams to deallocate unnecessary resources, resulting in significant cost savings.

- Cost Reduction: Remote monitoring reduces the need for on-site personnel and costly manual interventions. By automating routine monitoring tasks and enabling remote troubleshooting, businesses save time and money. This is particularly beneficial for organizations managing multiple data centers or remote sites.

Best Practices for Remote Server Monitoring in 2026

As IT infrastructure evolves, staying current with server monitoring practices is essential for reliability and performance. Here are key practices for effective monitoring in 2026:

Implement Agentless Monitoring: This lightweight approach collects metrics using built-in protocols like SNMP or APIs without installing additional software.

- Reduced Overhead: Minimizes resource consumption on monitored systems

- Simplified Deployment: Easier to implement across diverse environments

Utilize AI and Machine Learning: These technologies transform monitoring through:

- Predictive Analytics: Forecasting potential issues before they occur

- Anomaly Detection: Identifying unusual patterns that might indicate problems

Integrate with Automation Tools: Enhances efficiency through:

- ChatOps Integration: Combining monitoring with platforms like Slack for collaborative troubleshooting

- Auto-Remediation: Configuring scripts to resolve common issues automatically

Ensure Scalability: Critical for growing infrastructures through:

- Flexible Deployments: Using containerized solutions that scale alongside your infrastructure

- Future-Proof Solutions: Selecting tools that handle hybrid environments

Adopt a Unified Monitoring Approach: Consolidates tools and data for:

- Single Pane of Glass: One dashboard for all metrics, logs, and alerts

- Reduced Complexity: Eliminating redundant tools to focus on key indicators
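The anomaly detection mentioned under AI and machine learning does not have to start with a trained model. As a simple statistical stand-in (not machine learning), the sketch below flags a reading that sits far from the recent mean, measured in standard deviations. The function name and the default threshold of 3 are assumptions for illustration.

```python
import statistics


def is_anomalous(history, latest, z_threshold=3.0):
    """Flag `latest` if it is more than `z_threshold` standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        # A perfectly flat history: any change at all is unusual.
        return latest != mean
    return abs(latest - mean) / stdev > z_threshold
```

A check like this adapts to each server's normal range, which a fixed threshold cannot do, but it assumes the metric has no strong daily or weekly pattern.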

Overcoming Common Challenges in Remote Server Monitoring

Network Connectivity Issues: Build resilience through redundant communication pathways and store-and-forward techniques that cache data during outages.
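A store-and-forward buffer can be sketched in a few lines. In this example the caller supplies a `send` function that raises `OSError` when the network is unavailable; the class name, the buffer size, and that error contract are all assumptions made for illustration.

```python
from collections import deque


class StoreAndForward:
    """Buffer data points locally and deliver them in order once sending succeeds."""

    def __init__(self, send, max_buffered=1000):
        self._send = send  # callable that raises OSError on failure
        # When the buffer is full, the oldest point is dropped first.
        self._buffer = deque(maxlen=max_buffered)

    def submit(self, point):
        self._buffer.append(point)
        self.flush()

    def flush(self):
        while self._buffer:
            try:
                self._send(self._buffer[0])
            except OSError:
                return  # still offline; keep the data for the next attempt
            self._buffer.popleft()

    @property
    def pending(self):
        return len(self._buffer)
```

A point is removed from the buffer only after `send` returns without error, so an outage delays data rather than losing it, up to the buffer limit.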

Data Volume Management: Implement efficient storage through:

- Metric aggregation instead of storing every data point

- Time-series databases like Prometheus or InfluxDB with compression techniques
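Metric aggregation usually means replacing raw samples with per-interval summaries. The sketch below rolls `(timestamp_seconds, value)` pairs into fixed-size buckets and keeps the minimum, average, maximum, and count for each; the function name and the 60-second default are assumptions.

```python
from collections import defaultdict


def downsample(points, bucket_seconds=60):
    """Summarize (timestamp_seconds, value) pairs into fixed-width time buckets."""
    buckets = defaultdict(list)
    for ts, value in points:
        # Align each timestamp to the start of its bucket.
        buckets[ts - ts % bucket_seconds].append(value)
    return {
        start: {
            "min": min(values),
            "avg": sum(values) / len(values),
            "max": max(values),
            "count": len(values),
        }
        for start, values in sorted(buckets.items())
    }
```

Keeping the minimum and maximum alongside the average preserves short spikes that an average alone would smooth away.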

Alert Fatigue: Reduce noise by:

- Calibrating thresholds carefully (e.g., alert on 85% CPU usage for >5 minutes)

- Using tools with intelligent correlation to group related alerts

Security and Compliance: Protect monitoring systems through:

- Encryption of monitoring traffic using protocols like TLS

- Regular audits of monitoring configurations to ensure compliance
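For a monitoring client written in Python, encrypting traffic with TLS mostly comes down to using a properly configured `ssl` context. The sketch below builds one with certificate verification enabled; the function name and the choice of TLS 1.2 as the minimum version are assumptions for this example.

```python
import ssl


def monitoring_tls_context(ca_file=None):
    """Build a client-side TLS context that verifies the server certificate."""
    # create_default_context enables certificate and hostname verification.
    context = ssl.create_default_context(cafile=ca_file)
    # Refuse protocol versions older than TLS 1.2 (an assumed policy).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The returned context can be passed to standard library clients, for example as the `context` argument of `urllib.request.urlopen`. Passing `ca_file` is useful when the monitoring endpoint uses a certificate issued by an internal certificate authority.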

Performance Impact: Optimize monitoring load by:

- Adjusting data collection frequency based on operational needs

- Implementing sampling techniques for high-volume metrics

Addressing these challenges creates a more robust monitoring system that supports your operational goals without compromising performance.

Key Takeaways

- Essential for maintaining high-performing, secure IT systems, remote server monitoring ensures smooth operations, minimizes downtime, and alerts you to potential issues before they escalate into critical problems.

- Focus on system resources like CPU, memory, and disk usage; application performance metrics such as response time, error rates, and throughput; and security metrics to detect unauthorized access or unusual activities that could indicate threats.

- Use AI-driven analytics to automatically identify anomalies, consolidate all monitoring data into a unified dashboard for a clearer overview, and set up automated alerts to focus only on critical issues without constant manual checks.

- Tackle alert fatigue by configuring alerts to highlight only meaningful issues, and manage large volumes of data by using tools that filter and prioritize actionable insights to prevent information overload.

- Use beginner-friendly solutions like SigNoz that offer real-time metrics visualization, distributed tracing, and actionable analytics, providing an all-in-one monitoring platform for comprehensive insights.

- Stay ahead by preparing for edge computing with low-latency monitoring, leveraging AIOps to automate routine monitoring tasks with AI, and adapting to serverless architectures by focusing on application and service performance rather than server metrics.

FAQs

What are the essential metrics to monitor in remote servers?

Critical metrics to monitor include CPU utilization to prevent overloads, memory usage to avoid crashes, disk I/O for detecting storage issues, and network performance to identify bandwidth or latency problems. Additionally, application-specific metrics like response times, error rates, and throughput help ensure smooth application performance.

How does remote server monitoring differ from on-premises monitoring?

Remote monitoring accesses server data over the internet using tools designed for secure, real-time tracking of performance and security metrics. It handles challenges like network latency and cybersecurity risks. On-premises monitoring, on the other hand, involves direct access to local servers and is less affected by network-related issues but requires on-site infrastructure and expertise.

Can remote server monitoring help prevent downtime?

Yes, remote monitoring helps prevent downtime by detecting anomalies like resource spikes or application failures early. Real-time alerts enable quick responses to critical issues, and historical data analysis helps anticipate and mitigate future risks, ensuring a stable IT environment.

What security considerations should be taken into account for remote monitoring?

To secure remote monitoring, use encrypted data transmission (e.g., HTTPS), implement multi-factor authentication (MFA), conduct regular security audits, and ensure compliance with data protection regulations like GDPR. These measures safeguard sensitive server data and prevent unauthorized access.