Cloud Infrastructure Explained - Components and Benefits

Cloud infrastructure provides the hardware and software components that power cloud computing. It allows you to focus on your business logic instead of managing physical resources.

In this article, you'll learn about cloud infrastructure, its benefits, and core components. You'll also explore delivery and deployment models that cater to different business needs, and discover how SigNoz can help you monitor and optimize your cloud infrastructure.

What is Cloud Infrastructure?

Cloud infrastructure includes both hardware and software components, such as servers, storage, and networking. Think of it as a virtual data center. You rent resources instead of owning and maintaining them.

Traditionally, on-premises infrastructure requires your IT team to manage every aspect of computing, storage, and networking. In contrast, cloud infrastructure lets you focus on developing your applications without the overhead of buying and maintaining hardware.

Benefits of cloud infrastructure

Some benefits of using cloud infrastructure include:

- Scalability: Easily scale resources up or down based on your needs.

- Cost Efficiency: Pay only for the resources you use.

- Reduced Maintenance: Your cloud provider handles hardware updates, security, and maintenance.

- High Availability: Leverage built-in redundancy and disaster recovery.

- Faster Deployment: Quickly deploy apps and services.

Core Components of Cloud Infrastructure

Cloud infrastructure offers various services that abstract the complexity of underlying physical hardware. When you use the cloud, you're primarily interacting with these key components:

- Compute: You can rent virtual machines (VMs) or container instances to run apps. For example, you can rent an Amazon EC2 instance to run a web app.

- Storage: You can store and retrieve data using cloud storage solutions, including object storage, block storage, and file storage.

- Networking: Cloud networking lets you connect your application components securely. This includes virtual networks, load balancers, and Content Delivery Networks (CDNs).

To better understand these components, let’s consider the example of building a streaming platform.

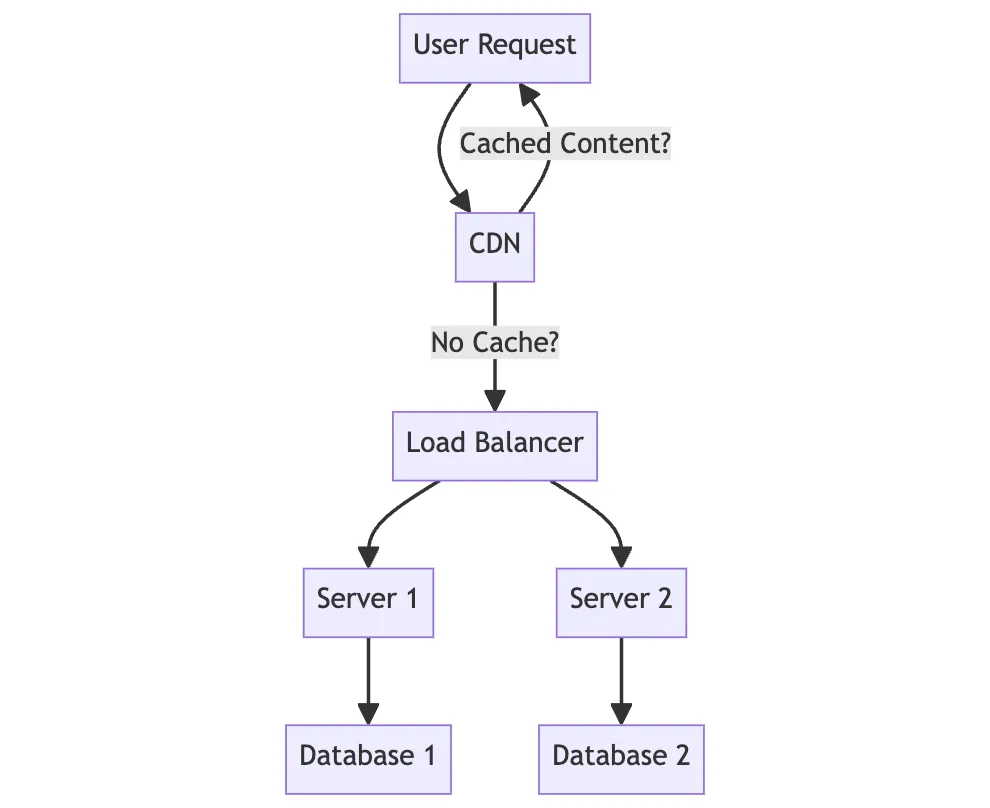

- The load balancer handles incoming streaming requests from users. It distributes these requests to available servers based on server load. This optimizes performance and reduces latency.

- The servers process video streams, manage session data, and perform tasks like logging and analytics. These servers interact with the database to fetch content that isn't cached and handle other backend services.

- Storage devices are used to store user data, video files, and other assets.

- Networking ensures that all components of the platform communicate efficiently. The CDN reduces latency by caching content closer to the user, improving the overall streaming experience.
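To make the load balancer's role concrete, here is a minimal Python sketch of one possible strategy: each request goes to the server currently handling the fewest active requests. The `LeastLoadedBalancer` class and the server names are hypothetical, and production load balancers also handle health checks, timeouts, and connection draining.

```python
class LeastLoadedBalancer:
    """Routes each request to the server with the fewest active requests."""

    def __init__(self, servers):
        # Track the number of in-flight requests per server (hypothetical names).
        self.active = {name: 0 for name in servers}

    def route(self):
        # Pick the least-loaded server; ties go to the first one registered.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def finish(self, server):
        # Call when a request completes so the server's load drops again.
        self.active[server] -= 1


balancer = LeastLoadedBalancer(["stream-1", "stream-2", "stream-3"])
assignments = [balancer.route() for _ in range(4)]
print(assignments)
```

The first three requests land on three different servers, and the fourth wraps around to the first one because all servers are equally loaded at that point.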

Hardware Resources

The physical layer consists of servers, storage, and networking equipment, all hosted in data centers by your cloud provider. For a streaming platform, you need:

- Compute: In AWS, you rent VMs to handle streaming requests. You can also select the VM size, OS, and other configurations. AWS provisions these VMs on physical servers in its data centers, but you manage them as flexible virtual resources.

- Storage: In AWS, you can use an S3 bucket to store video files and RDS to store user data. You can select different storage tiers based on how frequently the data is accessed, which keeps costs down while meeting performance requirements.

- Networking: In AWS, you can configure an IP address range and apply network restrictions. For example, you can restrict certain services to specific IP ranges for security purposes.

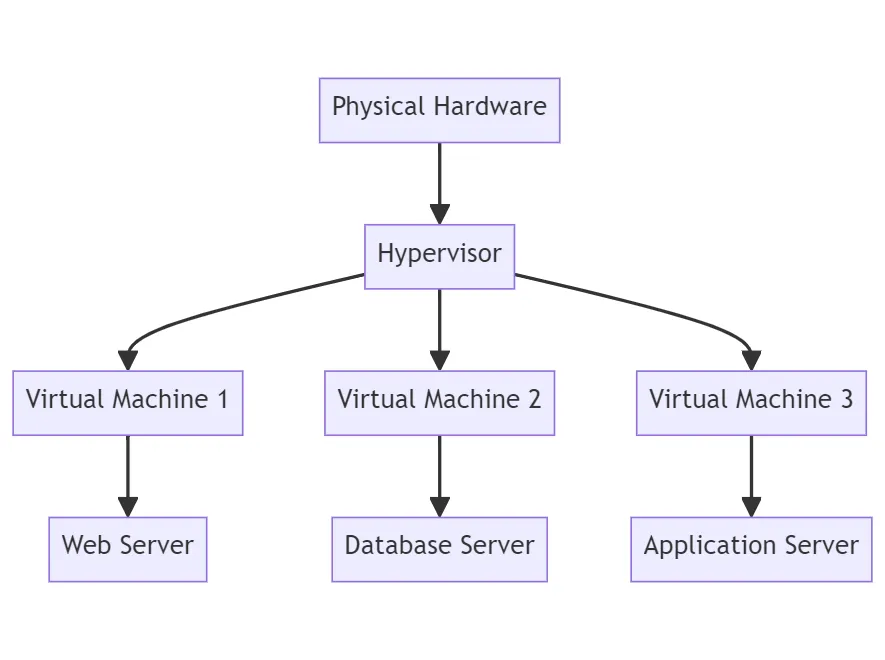
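The idea of restricting a service to specific IP ranges can be illustrated with Python's standard `ipaddress` module. This is only a sketch of the check itself: the ranges in `ALLOWED_RANGES` are made-up examples, and in practice you would configure such rules in your cloud provider's network settings rather than in application code.

```python
import ipaddress

# Hypothetical allow-list: an internal subnet and an office range.
ALLOWED_RANGES = [
    ipaddress.ip_network("10.0.0.0/16"),
    ipaddress.ip_network("203.0.113.0/24"),
]


def is_allowed(ip):
    """Return True if the address falls inside any allowed CIDR range."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in ALLOWED_RANGES)


print(is_allowed("10.0.42.7"))     # inside 10.0.0.0/16
print(is_allowed("198.51.100.9"))  # outside both ranges
```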

Virtualization Layer

Virtualization allows you to run multiple isolated operating systems on a single physical machine by creating Virtual Machines (VMs). These VMs behave like independent computers but share the physical hardware resources of the host machine.

A hypervisor manages virtualization by sitting between the hardware and the VMs. It allocates resources like CPU, memory, storage, and networking to each VM as needed.

For example, developers often need isolated environments for testing code. They can spin up several VMs running different operating systems to test apps. Virtualization allows this without the need for dedicated physical hardware.
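As a rough illustration of how a hypervisor divides a host among VMs, the toy model below hands out CPU and memory from a fixed pool and refuses requests the host cannot satisfy. The `Hypervisor` class and VM names are hypothetical, and real hypervisors are far more sophisticated (for example, they may overcommit resources).

```python
class Hypervisor:
    """Toy model of a hypervisor dividing one host's CPU and memory among VMs."""

    def __init__(self, cpus, memory_gb):
        self.free_cpus = cpus
        self.free_memory_gb = memory_gb
        self.vms = {}

    def create_vm(self, name, cpus, memory_gb):
        # Refuse the request if the host cannot satisfy it.
        if cpus > self.free_cpus or memory_gb > self.free_memory_gb:
            return False
        self.free_cpus -= cpus
        self.free_memory_gb -= memory_gb
        self.vms[name] = {"cpus": cpus, "memory_gb": memory_gb}
        return True

    def destroy_vm(self, name):
        # Return the VM's resources to the shared pool.
        vm = self.vms.pop(name)
        self.free_cpus += vm["cpus"]
        self.free_memory_gb += vm["memory_gb"]


host = Hypervisor(cpus=16, memory_gb=64)
host.create_vm("ubuntu-test", cpus=4, memory_gb=16)
host.create_vm("windows-test", cpus=8, memory_gb=32)
print(host.free_cpus, host.free_memory_gb)
```

Destroying a test VM returns its CPU and memory to the pool, which is what makes short-lived test environments cheap compared to dedicated hardware.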

Benefits of virtualization include:

- Improved resource utilization.

- Increased flexibility and scalability.

- Enhanced isolation and security.

Storage Systems

There are three main types of cloud data storage: object storage, file storage, and block storage. Each has its own advantages and use cases:

- Object storage stores unstructured data, such as images and videos, as objects.

- File storage uses a hierarchical file system to store information similar to a traditional file system. You can store files such as logs and other data that can be shared across multiple servers.

- Block storage divides data into multiple blocks and stores them separately. Each block has its own address. It suits structured data, such as relational databases.
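The block storage idea, splitting data into addressed blocks and reassembling it on read, can be sketched in a few lines of Python. The 4-byte `BLOCK_SIZE` is deliberately tiny and purely illustrative, and the function names are hypothetical.

```python
BLOCK_SIZE = 4  # bytes; tiny on purpose so the example is easy to follow


def write_blocks(data, block_size=BLOCK_SIZE):
    """Split data into fixed-size blocks, each stored under its own address."""
    return {
        address: data[offset:offset + block_size]
        for address, offset in enumerate(range(0, len(data), block_size))
    }


def read_blocks(blocks):
    """Reassemble the original data by reading blocks in address order."""
    return b"".join(blocks[address] for address in sorted(blocks))


blocks = write_blocks(b"hello cloud")
print(blocks)
print(read_blocks(blocks))
```

Because every block is addressable on its own, a database can update one block without rewriting the whole file.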

Networking

Networking in cloud infrastructure involves:

- Virtual networks, which give you a private, logically isolated section of the cloud and keep your resources separate from those of other users.

- Load balancers, which distribute traffic across multiple servers to maintain optimal performance.

- Content Delivery Networks (CDNs), which reduce latency and improve app performance by delivering content from geographically distributed servers based on user location.
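The way a CDN reduces latency comes down to caching: the first request for a file goes to the origin, and later requests are served from the edge. The sketch below models a single edge node. `EdgeCache` and the file path are hypothetical, and real CDNs add expiry, invalidation, and routing to the nearest node.

```python
class EdgeCache:
    """Toy CDN edge node: serve cached content, fall back to the origin on a miss."""

    def __init__(self, origin):
        self.origin = origin      # stands in for the origin server's content
        self.cache = {}
        self.hits = 0
        self.misses = 0

    def get(self, path):
        if path in self.cache:
            self.hits += 1        # served from the edge, no trip to the origin
        else:
            self.misses += 1      # fetch once from the origin, then keep a copy
            self.cache[path] = self.origin[path]
        return self.cache[path]


origin = {"/video/intro.mp4": b"video-bytes"}
edge = EdgeCache(origin)
edge.get("/video/intro.mp4")  # first request: miss
edge.get("/video/intro.mp4")  # second request: hit
print(edge.hits, edge.misses)
```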

Computing Resources in Cloud Infrastructure

Cloud infrastructure provides various computing options to suit different workloads. Each option has its strengths and is suitable for different scenarios, depending on the app's and organization's needs.

The image below helps you decide which computing option to choose.

Virtual Machines (VMs)

VMs are software emulations of physical computers. They run on top of the virtualization layer and can be quickly provisioned or decommissioned as needed.

Benefits of VMs:

- Isolation from other workloads

- Flexibility to run different operating systems

- Easy scalability, as you can add more resources like CPU to the existing VMs or spin up new VMs. This scalability is essential for handling variable workloads.

For example, suppose you manage an e-commerce platform. To ensure your platform runs smoothly during traffic surges, such as a sale, you spin up multiple VMs:

- VM 1: Runs the website’s front-end.

- VM 2: Handles the payment processing.

- VM 3: Manages customer databases.

- VM 4: Runs a testing environment for new features.

During a sale, VM 1 experiences increased traffic. You quickly add more front-end VMs and balance the load across them. After the sale, you can scale back by decommissioning the extra VMs, reducing costs.
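The scale-out decision described above is simple arithmetic: divide the incoming traffic by what one VM can handle and round up. The sketch below assumes a made-up capacity of 500 requests per second per VM, and `vms_needed` is a hypothetical helper rather than any provider's API.

```python
import math


def vms_needed(requests_per_second, capacity_per_vm, minimum=1):
    """Return how many front-end VMs are needed for the current traffic."""
    return max(minimum, math.ceil(requests_per_second / capacity_per_vm))


# Assumed figures: each front-end VM handles 500 requests per second.
print(vms_needed(400, 500))    # normal traffic
print(vms_needed(2600, 500))   # sale traffic
```

With these assumed numbers, normal traffic fits on one VM while the sale needs six, so five VMs can be decommissioned once traffic returns to normal.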

Containers

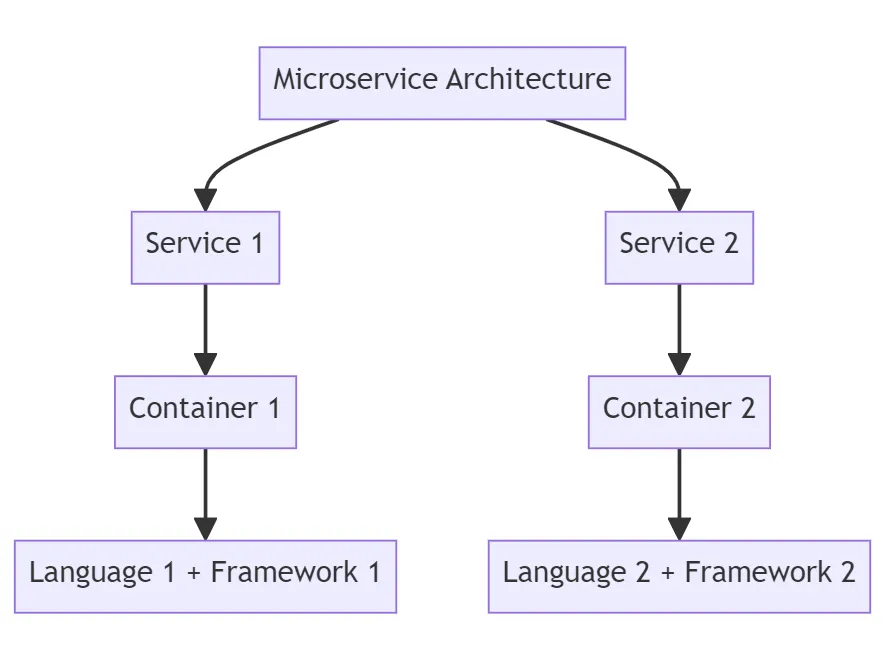

Containers allow you to package an app and its dependencies into a single portable unit. They share the host OS kernel but run in isolated user spaces. They have several benefits:

- Containers are smaller and start faster compared to VMs.

- Containers encapsulate all the dependencies and configurations required to run an app, making them portable across different environments.

Containers provide a consistent runtime environment across development, testing, and production.

For example, suppose you are building a web app using a microservice architecture, and each service uses a different programming language and framework. Using containers, you can package each microservice with its dependencies and run them across different environments.

Some common tools to store and deploy container images are:

- After building your container images, push them to Docker Hub for storing and sharing.

- Use Kubernetes to pull these images from Docker Hub and deploy them to your cluster. Kubernetes manages scaling, load balancing, and orchestration of these containers across your infrastructure.

For example, you can store your container images in Amazon Elastic Container Registry (ECR). This is a fully managed container registry to deploy app images.

Serverless Computing

Serverless computing allows you to build and run apps without managing the underlying infrastructure. You focus on writing your business logic, and the cloud provider handles the deployment, scaling, and maintenance.

Some benefits of using serverless computing are:

- Serverless functions are triggered by events and only run when needed.

- The cloud provider automatically scales resources up or down based on demand.

- You only pay for execution time and resources used by your functions.

For example, with AWS Lambda, you can trigger functions in response to events, such as an HTTP request sent when a user clicks a button. You only pay for the execution time, not idle time, reducing costs.
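The sketch below shows the shape of an event-driven function and how pay-per-execution pricing adds up. The `handler(event, context)` signature follows the convention AWS Lambda uses for Python functions. The event fields, the `estimate_cost` helper, and the price are assumptions for illustration only, and the estimate ignores any per-request fees.

```python
def handler(event, context):
    """Lambda-style entry point: runs only when an event arrives."""
    name = event.get("name", "world")
    return {"statusCode": 200, "body": f"Hello, {name}!"}


def estimate_cost(invocations, avg_duration_ms, memory_gb, price_per_gb_second):
    """Estimate compute cost as invocations x duration x memory x unit price."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * memory_gb
    return gb_seconds * price_per_gb_second


# Invoke the handler locally with a sample event (no context needed here).
print(handler({"name": "SigNoz"}, None))

# Hypothetical price per GB-second, for illustration only.
print(estimate_cost(1_000_000, 200, 0.5, 0.00002))
```

The cost depends only on how often and how long the function runs, so a function that is never triggered costs nothing in compute time.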

Bare Metal Servers

Bare metal servers are physical servers dedicated to a single tenant. They offer direct access to the hardware and do not use virtualization. Some benefits are:

- As there's no virtualization layer, bare metal servers provide full access to hardware resources, leading to high performance.

- You have full control over the hardware and OS configurations.

- The server is dedicated to one organization, providing high security and isolation from other users.

For example, suppose you build a financial app for an organization handling sensitive data and complex transactions. To meet its needs for high performance and low latency, you need a solution that avoids the overhead of virtualization. Bare metal servers are ideal as they provide dedicated resources and high levels of security.

Cloud Infrastructure Delivery Models

Cloud infrastructure offers a range of service models that provide varying levels of control, flexibility, and management.

When choosing between IaaS, PaaS, and SaaS, consider what level of control and flexibility you need. For example:

- IaaS is like making a pizza from scratch with the ingredients provided.

- PaaS is like getting a ready-made pizza with an oven to bake it.

- SaaS is like having a pizza delivered and served at your table.

Infrastructure as a Service (IaaS)

Infrastructure as a Service (IaaS) provides virtualized computing resources over the internet. This includes servers, storage, networking, and virtualization. You have control over the OS, storage, and deployed apps. However, the underlying hardware is managed by the cloud provider.

Some benefits of IaaS are:

- Cloud providers maintain physical servers and other hardware, reducing capital expenditure.

- You can easily scale resources up or down based on demand.

- You only pay for the resources you consume, which makes IaaS a cost-effective solution for businesses with variable workloads.

Platform as a Service (PaaS)

Platform as a Service (PaaS) provides a platform that enables you to build, run, and manage apps without worrying about the underlying infrastructure. PaaS includes infrastructure, middleware, development frameworks, databases, and other services.

Some benefits of PaaS are:

- You focus on coding and deploying apps without spending time on infrastructure management.

- PaaS abstracts much of the complexity associated with infrastructure management, such as configuring servers or managing security patches.

- PaaS offerings typically include integrated development tools, database management systems, and middleware, simplifying the development process.

Software as a Service (SaaS)

Software as a Service (SaaS) delivers software apps over the internet. Users can access these apps through a web browser without installing or maintaining the software on their local machines. The service provider manages everything, including the underlying infrastructure, security, updates, and data storage.

Some benefits of SaaS are:

- Users can access the software from any device with an internet connection, eliminating the need for installation or maintenance on individual devices.

- The SaaS provider automatically updates the software, ensuring users can always access the latest features and security patches.
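One way to compare the three delivery models is to list which layers of the stack you manage and which the provider manages. The mapping below is a common simplification based on the descriptions above. The layer names and the `provider_managed` helper are illustrative assumptions, and the exact split varies between providers and services.

```python
LAYERS = ["networking", "storage", "servers", "virtualization",
          "operating system", "runtime", "data", "application"]

# Layers the customer manages in each model; the provider manages the rest.
CUSTOMER_MANAGED = {
    "IaaS": {"operating system", "runtime", "data", "application"},
    "PaaS": {"data", "application"},
    "SaaS": set(),
}


def provider_managed(model):
    """Return the layers the cloud provider is responsible for, bottom-up."""
    return [layer for layer in LAYERS if layer not in CUSTOMER_MANAGED[model]]


for model in CUSTOMER_MANAGED:
    print(model, "->", provider_managed(model))
```

Moving from IaaS to SaaS, the provider's list grows and yours shrinks, which is the trade-off between control and convenience.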

How Does Cloud Infrastructure Work?

Cloud infrastructure offers flexibility, scalability, and efficiency. It operates on the following fundamental principles:

- Resource pooling: Physical resources are shared across multiple users (tenants). Virtualization technology ensures that each user's resources are isolated from others.

- On-demand self-service: You can provision resources as needed using a web interface or API.

- Elasticity: Resources can scale up or down automatically based on demand. This ensures that you have the necessary resources during peak times and don't pay for idle resources when demand is low.

- Measured service: Cloud usage is monitored, controlled, and reported, enabling a pay-as-you-go model. This means users only pay for what they use, and they can monitor their usage in real-time.

For example, suppose you run a web app that will host a sale. You have opted to use cloud infrastructure, and each fundamental principle helps you:

- Host your web app on a virtual machine (VM) within a larger resource pool, ensuring isolation and security.

- As the sale approaches, you can provision additional resources, such as more VMs or storage.

- Based on real-time demand, scale your resources up during the sale and down afterward.

- Track resource usage (e.g., VMs and storage) to calculate your bill and plan for future events.
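Measured service means your bill is a function of metered usage. The sketch below multiplies each metered quantity by a unit price for a normal month and for a month with a two-day sale. All prices and quantities are hypothetical, and `monthly_bill` is an illustrative helper, not a provider API.

```python
# Hypothetical unit prices; real rates vary by provider, region, and instance type.
PRICES = {"vm_hours": 0.05, "storage_gb_months": 0.02}


def monthly_bill(usage):
    """Multiply each metered quantity by its unit price and sum the result."""
    return round(sum(PRICES[item] * quantity for item, quantity in usage.items()), 2)


# Two VMs running all month (720 hours each) plus 500 GB of storage.
normal_month = {"vm_hours": 2 * 720, "storage_gb_months": 500}
# Same baseline, plus six extra VMs for the 48 hours of the sale.
sale_month = {"vm_hours": 2 * 720 + 6 * 48, "storage_gb_months": 500}

print(monthly_bill(normal_month))
print(monthly_bill(sale_month))
```

Under these assumptions, the extra VMs add cost only for the hours they run, which is the practical effect of combining elasticity with measured service.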

Automate management of cloud infrastructure

Automation and orchestration are critical for the efficient management of cloud infrastructure. They enable rapid provisioning, consistent configuration, and efficient handling of large-scale deployments. You can:

- Provision and de-provision cloud resources quickly. You can add scripts to adjust resource allocation based on demand and performance.

- Keep your environments consistent through orchestration. You can use templates to define configurations, and the template ensures that all resources are created and configured as specified.

- Manage and scale large cloud environments efficiently, handling complex tasks like load balancing, backup, and disaster recovery.

For example, during your web app sale:

- Automatically scale your resources up or down depending on network traffic.

- Orchestrate a load balancer between your VMs to distribute traffic.
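Template-driven provisioning usually works by comparing the desired state in a template with what is actually running, then computing the actions needed to close the gap. The sketch below shows that comparison in plain Python. The resource names and the `plan` function are hypothetical and do not correspond to any specific tool's format.

```python
def plan(template, current):
    """Compare desired counts in the template with what exists and list actions."""
    actions = []
    for resource in sorted(set(template) | set(current)):
        desired = template.get(resource, 0)
        actual = current.get(resource, 0)
        if desired > actual:
            actions.append(("create", resource, desired - actual))
        elif desired < actual:
            actions.append(("delete", resource, actual - desired))
    return actions


# Hypothetical template for the sale: more front-end VMs behind one load balancer.
sale_template = {"frontend_vm": 6, "load_balancer": 1}
running = {"frontend_vm": 2, "test_vm": 1}

print(plan(sale_template, running))
```

Running the same plan against an environment that already matches the template yields no actions, which is what keeps repeated deployments consistent.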

Cloud Infrastructure Deployment Models

Cloud deployment models define how cloud services are delivered and managed. Each model offers different levels of control, flexibility, and management, depending on the organization's needs. The key models include Public Cloud, Private Cloud, Hybrid Cloud, and Multi-Cloud.

Public Cloud

In the public cloud, third-party vendors provide cloud services to the general public. The provider owns and operates all hardware, software, and infrastructure components.

Some benefits of the public cloud are:

- Resources like servers and storage are shared among multiple users (tenants).

- You pay only for the resources you consume, making it cost-effective for many organizations.

- The cloud provider handles the infrastructure's management, maintenance, and security.

Private Cloud

A private cloud is dedicated to a single organization and offers more control over the environment. It can be managed internally or by a third-party provider.

Some benefits of the private cloud are:

- You have full control over your data, security, and compliance.

- You have dedicated resources for better performance and reliability.

For example, a finance app with strict regulatory requirements uses a private cloud to manage sensitive customer data. This provides the necessary security and compliance while still letting you customize the environment.

However, the private cloud requires significant investment and ongoing maintenance compared to the public cloud.

Hybrid Cloud

A hybrid cloud combines public and private cloud infrastructures, enabling data and apps to be shared between them. This model offers flexibility, allowing workloads to run in the most suitable environment.

Some benefits of hybrid cloud are:

- You can choose where to run specific workloads based on cost, performance, and security.

- Sensitive data can be kept on-premises in a private cloud, while less-sensitive workloads run in the public cloud.

Hybrid clouds allow you to gradually transition to the cloud, leveraging existing on-premises investments while adopting public cloud services.

Multi-Cloud

A multi-cloud approach uses multiple cloud providers to meet various business needs. This avoids vendor lock-in and lets you leverage the best services from each provider.

Some benefits of multi-cloud are:

- You are not tied to a single cloud provider, reducing dependency and increasing flexibility.

- Cloud providers excel in different areas, allowing organizations to choose the best services.

- Multi-cloud strategies enhance disaster recovery and resilience, as workloads can be distributed across multiple providers.

For example, you choose the best tools for each task, using AWS for machine learning, Azure for enterprise apps, and GCP for big data analytics.

Monitoring and Optimizing Cloud Infrastructure with SigNoz

Effective monitoring is crucial for maintaining a healthy and efficient cloud infrastructure. SigNoz is an open-source app performance monitoring system that ensures smooth cloud operations.

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

Some benefits of using SigNoz are:

- Trace requests across your cloud infrastructure to identify bottlenecks and failures.

- Visualize real-time key performance indicators (KPIs), such as CPU usage or memory consumption.

- Centralize logs from various sources for easier analysis and troubleshooting.

- Set up custom alerts to notify your team of potential issues before they affect users.

For example, suppose you run a microservice architecture in the cloud. SigNoz can help you:

- Pinpoint issues like slow database queries by tracing requests across all services.

- Track CPU and memory usage on VMs running your microservices.

- Quickly search and filter logs in SigNoz during an outage.

- Set thresholds for microservices to trigger alerts if exceeded.
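The logic behind a threshold alert is easy to sketch: compare each metric with its limit and report the ones that exceed it. The example below is a generic illustration in plain Python and is not SigNoz's API or configuration format. The threshold values, metric names, and `check_alerts` function are all hypothetical.

```python
# Hypothetical thresholds, expressed as percentages.
THRESHOLDS = {"cpu_percent": 80, "memory_percent": 90}


def check_alerts(service, metrics):
    """Return one alert message for every metric above its threshold."""
    return [
        f"{service}: {name} at {value}% exceeds {THRESHOLDS[name]}%"
        for name, value in metrics.items()
        if name in THRESHOLDS and value > THRESHOLDS[name]
    ]


print(check_alerts("checkout", {"cpu_percent": 93, "memory_percent": 71}))
```

In a monitoring tool, you would define the thresholds in the tool's alerting settings and let it evaluate them continuously against incoming metrics.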

For more information, refer to Getting Started with SigNoz.

Considerations in Cloud Infrastructure

While cloud infrastructure offers significant benefits, it also presents challenges that you must address for successful adoption and operation. To keep your cloud infrastructure running smoothly:

- Build robust security, including encryption, access controls, and regular audits.

- Ensure compliance with regulations to avoid legal and financial penalties.

- Maintain secure communication between cloud and on-premises infrastructure.

- Build cost management practices, such as monitoring usage and optimizing resource allocation. To learn more, refer to how SigNoz can help you.

- Monitor network latency and data transfer speeds to keep performance acceptable.

- Develop a multi-cloud strategy to avoid dependency on a single cloud provider's proprietary services.

Key takeaways

- Cloud infrastructure forms the foundation of cloud computing, offering flexibility and scalability.

- Core components include hardware, virtualization, storage, and networking resources.

- Various delivery and deployment models cater to different business needs.

- To maintain a healthy cloud infrastructure, SigNoz offers performance monitoring, request tracing, KPI visualization, log centralization, and custom alerts.

- Challenges involve security, compliance, and cost management.

FAQs

What is the difference between cloud infrastructure and cloud architecture?

Cloud infrastructure comprises the physical and virtual resources that form a cloud environment. Cloud architecture defines the design and organization of these components to create a solution.

How does cloud infrastructure enhance business agility?

Cloud infrastructure empowers businesses to rapidly provision resources, scale apps, and deploy new services. This acceleration is achieved by eliminating significant upfront investments and lengthy procurement processes.

How does SigNoz aid in cloud infrastructure management?

SigNoz provides monitoring, request tracing, key performance indicator (KPI) visualization, log centralization, and custom alerts to maintain optimal cloud infrastructure performance and health.

Can all app types leverage cloud infrastructure?

While cloud infrastructure supports many apps, legacy systems or those with specific hardware dependencies may require modifications for successful cloud deployment.

What security measures typically protect cloud infrastructure?

Common security practices for cloud infrastructure include encryption, access controls, network segmentation, regular security audits, and compliance certifications. Many cloud providers offer advanced security features like threat detection and DDoS protection.

How does virtualization benefit cloud infrastructure?

Virtualization improves resource utilization, flexibility, scalability, and security by enabling multiple virtual machines (VMs) to operate on a single physical server.

What is serverless computing?

Serverless computing abstracts infrastructure management and shifts the focus to code development. It automatically scales resources based on demand and charges solely for execution time.

What factors should be considered for effective cloud infrastructure management?

Successful cloud infrastructure management requires attention to security, compliance, cost optimization, network latency, and adopting a multi-cloud strategy.