OpenClaw's hidden OTel plugin shows where all your tokens go

OpenClaw Agent has seen explosive popularity, and building autonomous workflows with it is a lot of fun. But if you've experimented with various plugins and model integrations, you've probably hit rate limits or drained your token budget surprisingly fast.

Without visibility into what's happening under the hood (which tool calls are failing, or exactly how many tokens are being consumed), you can't plan your agent usage or your budget.

It turns out OpenClaw ships with a built-in (but disabled by default) diagnostics-otel plugin that emits standard OpenTelemetry traces and metrics. I enabled it and built a dashboard to track exactly where the tokens are going.

Here's a quick guide to enabling this hidden plugin for full visibility into your OpenClaw agent, plus a ready-to-use dashboard JSON file you can import right away.

What telemetry does diagnostics-otel provide?

Knowing what diagnostics-otel emits helps you plan dashboards and alerts before you build them. OpenClaw uses OpenTelemetry internally for telemetry collection, and the plugin exports three signals:

- Traces: spans for model usage and webhook/message processing.

- Metrics: counters and histograms covering token usage, cost, context size, run duration, message flow, queue depth, and session state.

- Logs: the same structured records written to your Gateway log file, exported over OTLP when enabled.

The practical value is immediate. You get token cost attribution (which sessions are expensive and why), latency breakdown (is it the LLM call or the tool execution?), tool failure visibility, and error detection, all without writing a single line of custom instrumentation.

The official OpenClaw documentation lists the name and type of every exported metric in detail.

Setting up the diagnostics-otel plugin in under 10 minutes

Prerequisites

- The latest version of OpenClaw is installed and configured.

- A backend that can receive OTLP telemetry. This article uses SigNoz Cloud.

Step 1: Enable the Plugin

The diagnostics-otel plugin ships with OpenClaw but is disabled by default. You can enable it via CLI:

openclaw plugins enable diagnostics-otel

Or add it directly to your config file (~/.openclaw/openclaw.json):

{
  "plugins": {
    "allow": ["diagnostics-otel"],
    "entries": {
      "diagnostics-otel": {
        "enabled": true
      }
    }
  }
}
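To confirm the edit took effect without scanning the whole file, a small check can parse the config and look for the plugin entry. This is a minimal sketch, assuming `python3` is on your PATH and that the file is plain JSON with the `plugins.entries["diagnostics-otel"].enabled` layout shown above; if your config contains comments or trailing commas, `json.load` will reject it. The function name `otel_plugin_enabled` is hypothetical and not part of OpenClaw.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not an OpenClaw command): succeeds only if the
# config file at $1 (default: ~/.openclaw/openclaw.json) has
# plugins.entries["diagnostics-otel"].enabled set to true.
# Assumes python3 is installed and the file is plain JSON.
otel_plugin_enabled() {
  local config="${1:-$HOME/.openclaw/openclaw.json}"
  python3 - "$config" <<'PY'
import json
import sys

with open(sys.argv[1]) as f:
    cfg = json.load(f)

entry = cfg.get("plugins", {}).get("entries", {}).get("diagnostics-otel", {})
# Exit 0 (success) only when the plugin entry is explicitly enabled.
sys.exit(0 if entry.get("enabled") is True else 1)
PY
}
```

Usage: `otel_plugin_enabled && echo "diagnostics-otel is enabled"`. Reading the file rather than grepping it avoids false positives from a plugin name that appears only in the `allow` list.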

Step 2: Configure the OTel Exporter

You can configure the exporter via the CLI:

openclaw config set diagnostics.enabled true
openclaw config set diagnostics.otel.enabled true
openclaw config set diagnostics.otel.traces true
openclaw config set diagnostics.otel.metrics true
openclaw config set diagnostics.otel.logs true
openclaw config set diagnostics.otel.protocol http/protobuf
openclaw config set diagnostics.otel.endpoint "https://ingest.<region>.signoz.cloud:443"
openclaw config set diagnostics.otel.headers '{"signoz-ingestion-key":"<YOUR_SIGNOZ_INGESTION_KEY>"}'
openclaw config set diagnostics.otel.serviceName "openclaw-gateway"

If you're using SigNoz Cloud, follow our Ingestion Key guide to find your ingestion region and ingestion key, then substitute them for <region> and <YOUR_SIGNOZ_INGESTION_KEY> above.
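If you set this up on more than one machine, the commands above can be wrapped in a function so the region and key are filled in once. This is a minimal sketch using only the `openclaw config set` calls shown above; the function name `configure_signoz_otel` and the `OPENCLAW_BIN` override (used for a dry run) are hypothetical conveniences, not OpenClaw features. It assumes the key contains no double quotes or backslashes, since it is inlined into the headers JSON.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper (not part of OpenClaw): runs the Step 2
# `openclaw config set` commands with the region and ingestion key filled in.
# Set OPENCLAW_BIN=echo to print the commands instead of running them.
configure_signoz_otel() {
  if [ "$#" -ne 2 ]; then
    echo "usage: configure_signoz_otel <region> <ingestion-key>" >&2
    return 2
  fi
  local region="$1" key="$2"
  local oc="${OPENCLAW_BIN:-openclaw}"

  # Each entry is "config.key=value"; values are split on the first "=" only.
  local settings=(
    "diagnostics.enabled=true"
    "diagnostics.otel.enabled=true"
    "diagnostics.otel.traces=true"
    "diagnostics.otel.metrics=true"
    "diagnostics.otel.logs=true"
    "diagnostics.otel.protocol=http/protobuf"
    "diagnostics.otel.endpoint=https://ingest.${region}.signoz.cloud:443"
    "diagnostics.otel.headers={\"signoz-ingestion-key\":\"${key}\"}"
    "diagnostics.otel.serviceName=openclaw-gateway"
  )

  local kv
  for kv in "${settings[@]}"; do
    # Stop at the first failing command instead of half-applying the config.
    "$oc" config set "${kv%%=*}" "${kv#*=}" || return 1
  done
}
```

Usage: preview with `OPENCLAW_BIN=echo configure_signoz_otel <region> <key>`, then run `configure_signoz_otel "$SIGNOZ_REGION" "$SIGNOZ_INGESTION_KEY"` with the values exported as environment variables, which keeps the key out of the command itself.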

Step 3: Check your config (Optional)

You can quickly check your config using the following command:

openclaw config get diagnostics

Your output should look like this:

{
  "enabled": true,
  "otel": {
    "enabled": true,
    "endpoint": "https://ingest.<region>.signoz.cloud:443",
    "protocol": "http/protobuf",
    "headers": {
      "signoz-ingestion-key": "<YOUR_SIGNOZ_INGESTION_KEY>"
    },
    "serviceName": "openclaw-gateway",
    "traces": true,
    "metrics": true,
    "logs": true,
    "sampleRate": 1,
    "flushIntervalMs": 5000
  }
}

Alternatively, you can inspect the diagnostics section of your ~/.openclaw/openclaw.json config file directly.
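Rather than comparing the output by eye, you can pipe it through a small checker that flags disabled signals and leftover placeholders. This is a minimal sketch, assuming `openclaw config get diagnostics` prints only the JSON object shown above and that `python3` is installed; the function name `check_diagnostics_config` and its messages are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical checker (not an OpenClaw command): reads the JSON printed by
# `openclaw config get diagnostics` on stdin, prints one line per problem,
# and returns non-zero if anything looks wrong.
check_diagnostics_config() {
  python3 -c '
import json
import sys

cfg = json.load(sys.stdin)
otel = cfg.get("otel", {})
problems = []

# Every switch from Step 2 must be exactly true.
for path, value in [("enabled", cfg.get("enabled")),
                    ("otel.enabled", otel.get("enabled")),
                    ("otel.traces", otel.get("traces")),
                    ("otel.metrics", otel.get("metrics")),
                    ("otel.logs", otel.get("logs"))]:
    if value is not True:
        problems.append(path + " is not true")

if not otel.get("endpoint"):
    problems.append("otel.endpoint is empty")

# Catch values such as <region> that were copied without substitution.
if "<" in json.dumps(otel):
    problems.append("a <placeholder> value was not replaced")

for p in problems:
    print(p)
sys.exit(1 if problems else 0)
'
}
```

Usage: `openclaw config get diagnostics | check_diagnostics_config && echo "diagnostics config looks complete"`. Note that the sample output above still contains `<region>` and `<YOUR_SIGNOZ_INGESTION_KEY>`, so the checker would (correctly) flag it until real values are set.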

Step 4: Restart your OpenClaw gateway

Restart the gateway so it loads the plugin and the new exporter settings:

openclaw gateway restart

Step 5: Visualize in SigNoz

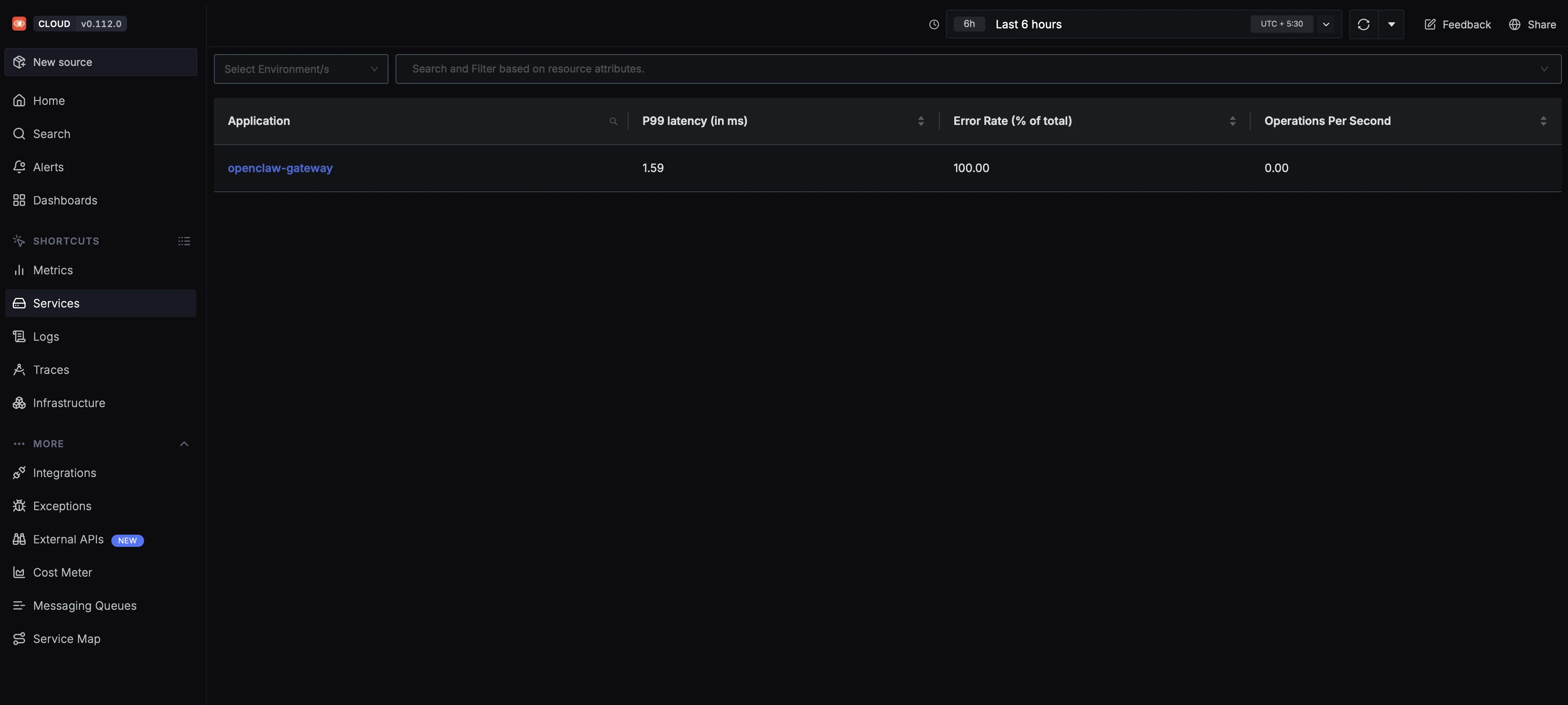

Open SigNoz Cloud and go to the Services tab. If everything is set up correctly, a service named openclaw-gateway should appear in the list.

You can click on the service name to view the out-of-the-box dashboard provided by SigNoz.
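If the openclaw-gateway service doesn't appear, it helps to check the endpoint and ingestion key independently of OpenClaw. This is a minimal sketch, assuming the backend accepts OTLP/HTTP at the standard `/v1/traces` path (SigNoz Cloud documents this path); the article doesn't say whether OpenClaw appends that path to `diagnostics.otel.endpoint` itself, and the function names here are hypothetical. It posts an empty protobuf body, which decodes as an empty trace export request.

```shell
#!/usr/bin/env bash
# Hypothetical smoke test (not part of OpenClaw or SigNoz tooling).

# Builds the standard OTLP/HTTP traces URL from a base endpoint,
# dropping one trailing slash if present.
otlp_traces_url() {
  printf '%s/v1/traces\n' "${1%/}"
}

# POSTs an empty protobuf body and prints the HTTP status code.
# Usage: otlp_smoke_test <base-endpoint> <ingestion-key>
otlp_smoke_test() {
  local endpoint="$1" key="$2"
  curl -sS -o /dev/null -w '%{http_code}\n' \
    -H 'Content-Type: application/x-protobuf' \
    -H "signoz-ingestion-key: ${key}" \
    --data-binary @/dev/null \
    "$(otlp_traces_url "$endpoint")"
}
```

Usage: `otlp_smoke_test "https://ingest.<region>.signoz.cloud:443" "$SIGNOZ_INGESTION_KEY"`. A 2xx status generally means the endpoint and key were accepted, while 401 or 403 usually points at the key; the exact codes depend on the backend.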

Step 6: Import the Custom Dashboard

For a more focused view, import the custom dashboard JSON to create a new, customized dashboard.

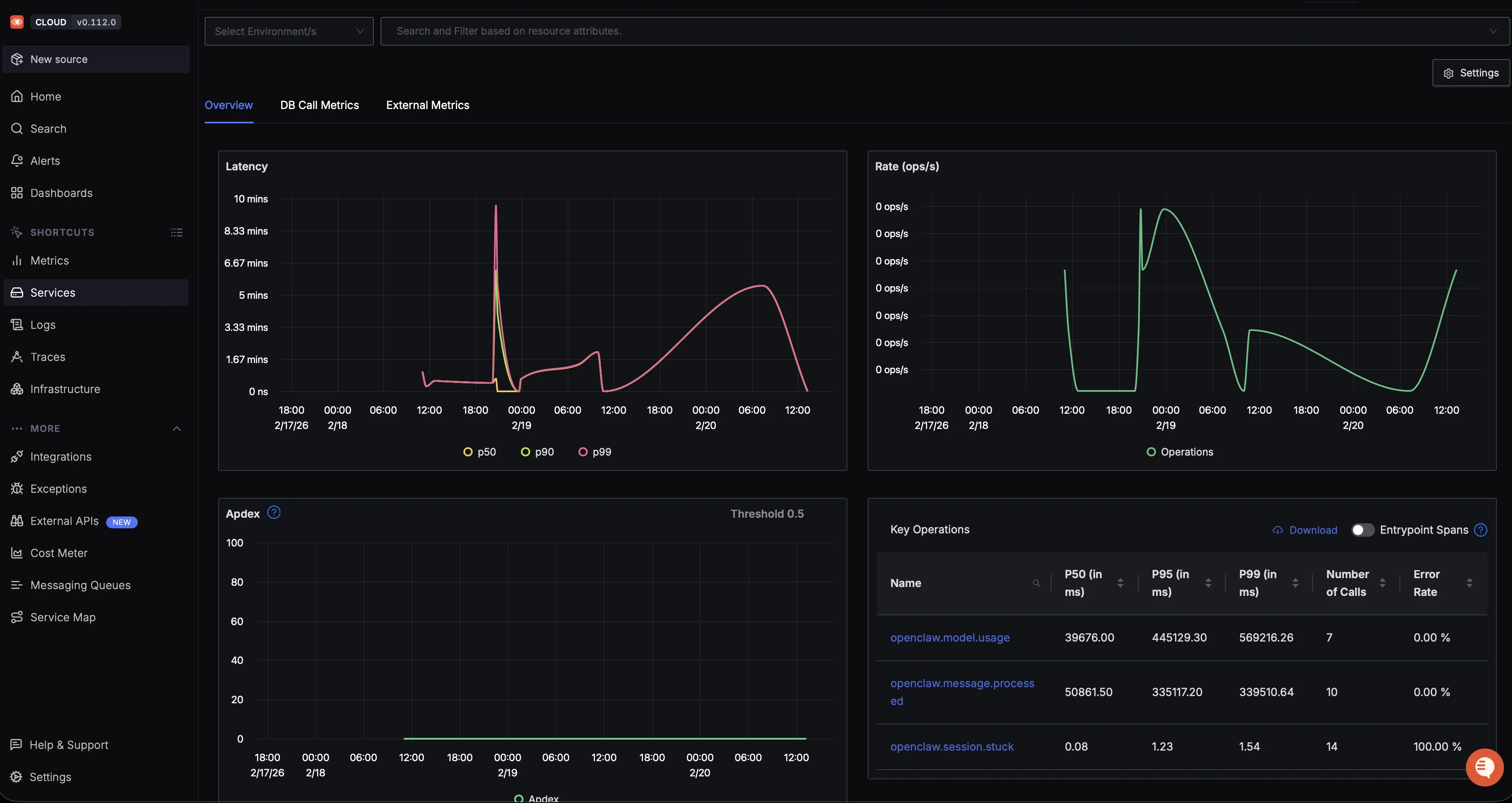

Walkthrough of the OpenClaw Overview dashboard showing LLM token usage, queue and session health metrics, and error logs.

Conclusion

When building autonomous workflows with OpenClaw, running blind isn't an option. Without tracking your model calls and tool executions, token budgets drain quickly and debugging agent loops turns into guesswork.

The built-in diagnostics-otel plugin makes fixing this straightforward. With no custom code required, you can connect it directly to SigNoz and see exactly where your tokens are going.