What is OTLP and How It Works Behind the Scenes

If you have worked with observability tools in the last decade, you have likely managed, and been burnt by, a fragmented collection of tools and libraries. Each observability signal required its own tool, and data formats were incompatible, with little or no correlation between signals. For example, log records would not link to traces, so you had to guess which traces led to which events.

The OpenTelemetry Protocol (OTLP) solves this by decoupling how telemetry is generated from where it is analyzed. It is a general-purpose, vendor-neutral protocol designed to transfer telemetry data (logs, metrics, and traces) between any compatible source and destination.

What is OTLP?

OTLP is the data-delivery protocol defined by the OpenTelemetry (OTel) project. While OpenTelemetry aims to standardize how telemetry data is generated, OTLP, as part of this mission, defines precisely how telemetry data is serialized and transmitted.

It acts as the universal language between clients (applications) and servers (OTel Collectors or OpenTelemetry-native observability backends like SigNoz). When configured with OTLP exporters, your applications emit a clean, unified stream of data that any OTLP-compatible backend can ingest without format conversion.

Why was OTLP Needed?

Before OTLP arrived, the observability landscape was fragmented because different signals required entirely different tools. This lack of a common standard meant vital context was lost as data formats were incompatible across the stack.

Engineers also found themselves locked in to vendors. Because application code used vendor-specific instrumentation agents, switching providers required weeks of effort. The prevailing mindset was to just make things work with the current toolset rather than try to improve it.

The daily routine of using these tools was equally frustrating. Since telemetry data was sent to isolated platforms, such as Jaeger for traces and an Elasticsearch stack fed by Filebeat for logs, debugging a single incident meant frequent context switching. Anyone who has debugged a high-pressure production issue knows how hard it is to keep track of the moving parts. Imagine doing that with minimal context on cause and effect!

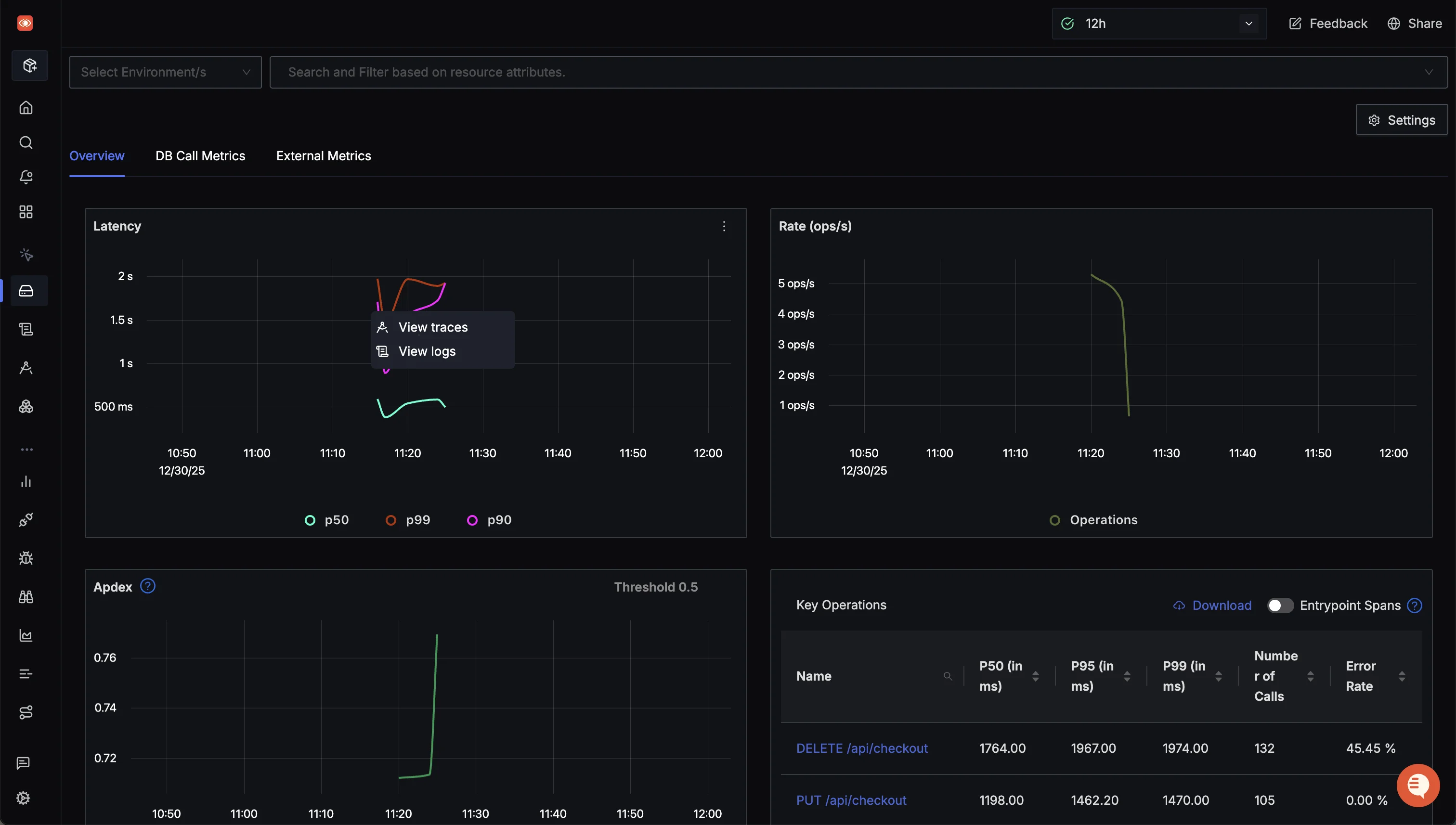

OTLP’s unified standard gives teams the flexibility to use a single backend for all their observability needs. With the vendor lock-in barrier removed, observability tools must compete on features. Consider SigNoz, which uses OTLP to provide out-of-the-box APM dashboards for each service. Because the data arrives in standardized formats, SigNoz can correlate traces, metrics, and logs across the entire platform, giving users a holistic view that was hard to achieve with fragmented tooling.

How OTLP Works Behind the Scenes

In the observability landscape, data is sacred, and data consistency is an expectation: there is no room for lost or malformed data. Losing even 500 ms of data can prevent engineers from understanding and fixing issues in an instrumented application.

OpenTelemetry built OTLP to meet these requirements while keeping data transmission over the wire efficient and fast. It uses Protocol Buffers (Protobuf) to minimize payload size and define a robust message schema, and it organizes and batches data to reduce network round trips. It also uses a request-response model in which servers acknowledge what they receive and can signal when they are under load, helping prevent data loss.

These design decisions enable OTLP to be performant and reliable across observability pipelines of all sizes, and to remain flexible to change as the observability landscape evolves.

Protobuf: for Speed & Stability

Protobuf is Google’s platform- and language-agnostic data serialization format, commonly used for structured data transmission between services.

Protobuf encodes messages in a compact binary format, reducing payload size and improving data transfer times. It also defines clear schema evolution rules (for example, fields are identified on the wire by stable numbers rather than names), which let services stay compatible as message definitions evolve over time.

OTLP uses these features to transfer compact binary messages that are faster to process than text-based formats like JSON. On receiving these messages, servers spend fewer CPU cycles parsing the byte stream than they would parsing equivalent JSON text.
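To make the size difference concrete, here is a minimal sketch that hand-encodes a tiny message using the Protobuf wire format (each field is a key of `field_number << 3 | wire_type`, followed by a varint or a length-prefixed value) and compares it with the equivalent compact JSON. The `Example` message and its fields are hypothetical and not part of OTLP; real OTLP clients use code generated from the official opentelemetry-proto definitions rather than hand-written encoders.

```python
import json

def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a Protobuf base-128 varint."""
    out = bytearray()
    while True:
        byte = value & 0x7F          # low 7 bits
        value >>= 7
        if value:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)

def field_key(field_number: int, wire_type: int) -> bytes:
    # Every field starts with a key: (field_number << 3) | wire_type.
    return encode_varint((field_number << 3) | wire_type)

def encode_example(name: str, count: int) -> bytes:
    """Hand-encode the hypothetical message:
       message Example { string name = 1; uint64 count = 2; }"""
    name_bytes = name.encode("utf-8")
    return (
        field_key(1, 2) + encode_varint(len(name_bytes)) + name_bytes  # wire type 2: length-delimited
        + field_key(2, 0) + encode_varint(count)                        # wire type 0: varint
    )

proto_bytes = encode_example("checkout", 300)
json_bytes = json.dumps({"name": "checkout", "count": 300}, separators=(",", ":")).encode("utf-8")

print(len(proto_bytes), len(json_bytes))  # 13 31
```

Field names never travel over the wire in Protobuf; only the small numeric keys do, which accounts for much of the saving.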

Thanks to these compatibility rules, core OpenTelemetry libraries can iterate quickly on smaller changes without being blocked by protocol versioning. Similarly, minor changes to OTLP do not force dependency updates, allowing libraries to develop at their own pace.

While Protobuf is the preferred (and default) encoding, OTLP also supports JSON encoding for messages sent over HTTP. This is particularly useful in web environments, where loading a full Protobuf library can balloon the JavaScript bundle size. JSON is also commonly used to stay compatible with existing JSON-based ingestion pipelines, or for easier debugging, since it is human-readable.

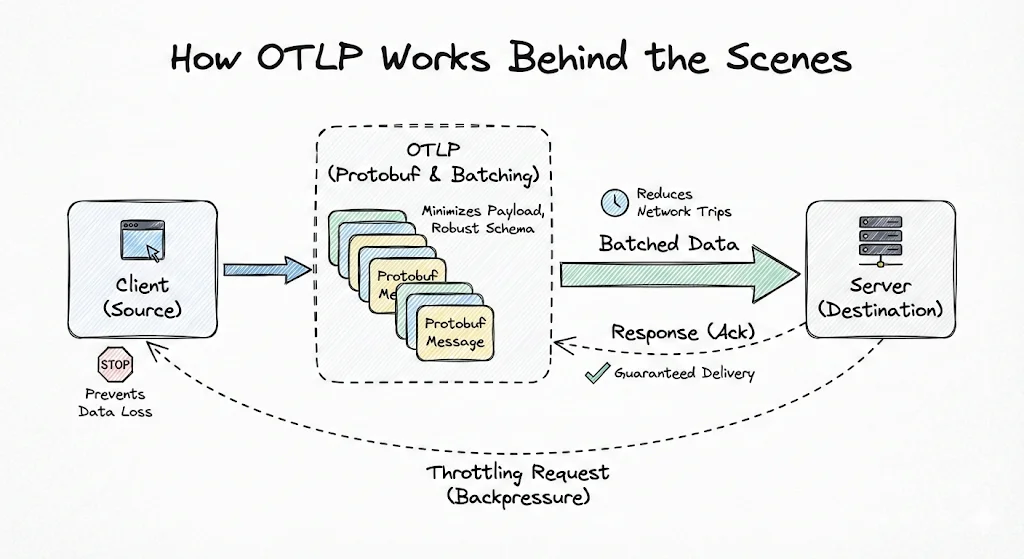

Guaranteed Delivery via Request-Response

If “data is sacred”, then “fire and forget” is not an option.

OTLP uses a strict request-response model built on a simple handshake: the client sends a request, and the server must explicitly parse, queue, and acknowledge it before the transaction is considered complete. If transmission fails, the client retries the request so that transient failures do not cause data loss.

Critically, OTLP limits this guarantee to one hop at a time: between a pair of nodes, such as an instrumented app and an observability backend. To achieve end-to-end reliability across the entire observability pipeline, these acknowledged hops are chained together, creating a path of continuous delivery.

OTLP servers can return one of three response types, with each response affecting the client’s next action:

- Full Success: The server validates and accepts the entire request payload. The client can send the next batch of data.

- Partial Success: A critical feature of OTLP, where servers ingest valid data while rejecting only the specific malformed items. The response reports how many items were rejected and includes an error message, so the client knows what went wrong. Since these rejections occur due to deterministic issues (like invalid formatting), the client does not retry them, saving CPU and network resources.

- Failure: Indicates that the server failed to process the data. Clients retry requests for transient errors, such as a network timeout or a temporarily overloaded server. For permanent errors, such as a malformed request, the client drops the data to avoid blocking the pipeline.
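The decision rules above can be sketched as a small classifier for OTLP/HTTP trace export responses. This is a minimal illustration that assumes the response body has already been decoded from JSON; `classify_response` and `ExportResult` are hypothetical names. The retryable status codes (429, 502, 503, 504) follow the OTLP/HTTP specification's retry guidance, and `partialSuccess`, `rejectedSpans`, and `errorMessage` are the JSON names of the partial-success fields in a trace export response.

```python
from dataclasses import dataclass
from typing import Optional

# Status codes the OTLP/HTTP spec marks as retryable; other 4xx/5xx codes are not retried.
RETRYABLE_HTTP_STATUS = {429, 502, 503, 504}

@dataclass
class ExportResult:
    action: str        # "next" (send next batch), "retry", or "drop"
    rejected: int = 0  # spans rejected in a partial success
    message: str = ""

def classify_response(status: int, body: Optional[dict] = None) -> ExportResult:
    """Map one OTLP/HTTP trace export response to the client's next action."""
    if 200 <= status < 300:
        partial = (body or {}).get("partialSuccess") or {}
        # int64 values may arrive as JSON strings, so normalize with int().
        rejected = int(partial.get("rejectedSpans", 0))
        # Full or partial success: either way, rejected spans are NOT retried.
        return ExportResult("next", rejected, partial.get("errorMessage", ""))
    if status in RETRYABLE_HTTP_STATUS:
        return ExportResult("retry")  # transient: retry later with backoff
    return ExportResult("drop")       # permanent, e.g. 400 Bad Request

print(classify_response(200, {"partialSuccess": {"rejectedSpans": "2", "errorMessage": "invalid span"}}))
print(classify_response(503).action, classify_response(400).action)  # retry drop
```

In a real exporter, a "retry" result would feed into a backoff loop, and the batch would stay in memory until the server acknowledges it.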

Smart Batching and Data Organization

Network calls are expensive, and sending each telemetry data point in its own request can critically slow down observability pipelines. OTLP groups data into batches to reduce this overhead. Beyond that, it organizes the data to solve a major pain point in legacy systems: data redundancy.

In older systems, metadata was often “flat”, where it was added to each individual data point. If you sent 100 log entries from your checkout service, each log entry would likely repeat the string service.name = checkout. This is a massive waste of bandwidth and storage.

OTLP solves this by structuring data hierarchically, so shared metadata is sent once instead of being repeated for every data point. This works because all data from the same source shares the same metadata. The hierarchy is:

- Resource: Resource sits at the root of the batch. It describes the entity producing the telemetry (e.g., service.name = payments or telemetry.sdk.version = 1.38.1). It is defined once, and every data point nested under it inherits it automatically.
- Scope: The instrumentation scope is nested inside the resource. It identifies, by name and version, the exact code or library that generated the data (e.g., io.opentelemetry.contrib.mongodb).
- Data: Finally, the scope contains the actual payload: the list of Spans, LogRecords, or Metrics generated by the instrumented application. Because the shared metadata is already defined above, each item only contains details about the application event itself.
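The hierarchy can be illustrated by building an OTLP/JSON-shaped trace payload by hand. The field names (`resourceSpans`, `scopeSpans`, `spans`, `traceId`, and so on) follow the OTLP JSON encoding; the service, scope, and span values, and the comparison "flat" layout, are hypothetical. The sketch counts how often `service.name` appears in each layout.

```python
import json

def build_trace_payload(service_name: str, scope_name: str, spans: list) -> dict:
    """Wrap spans in the Resource -> Scope -> Data hierarchy (OTLP/JSON field names)."""
    return {
        "resourceSpans": [{
            # Resource: declared once, inherited by every span nested below it.
            "resource": {"attributes": [
                {"key": "service.name", "value": {"stringValue": service_name}},
            ]},
            "scopeSpans": [{
                # Scope: the instrumentation library that produced these spans.
                "scope": {"name": scope_name},
                # Data: each span carries only per-event details.
                "spans": spans,
            }],
        }]
    }

# 100 hypothetical spans from one service; IDs are hex strings, timestamps are nanoseconds.
spans = [
    {
        "traceId": "4bf92f3577b34da6a3ce929d0e0e4736",
        "spanId": f"{i + 1:016x}",
        "name": "POST /charge",
        "kind": 2,  # SPAN_KIND_SERVER; enums are integers in OTLP/JSON
        "startTimeUnixNano": "1700000000000000000",
        "endTimeUnixNano": "1700000000500000000",
    }
    for i in range(100)
]

body = json.dumps(build_trace_payload("payments", "io.opentelemetry.contrib.mongodb", spans))
# For comparison: a "flat" layout that repeats the service name on every span.
flat = json.dumps([{**span, "service.name": "payments"} for span in spans])

print(body.count("service.name"), flat.count("service.name"))  # 1 100
print(len(body) < len(flat))  # True
```

In practice, OpenTelemetry SDK exporters build this structure for you; the point is that the resource and scope appear once per group, not once per span.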

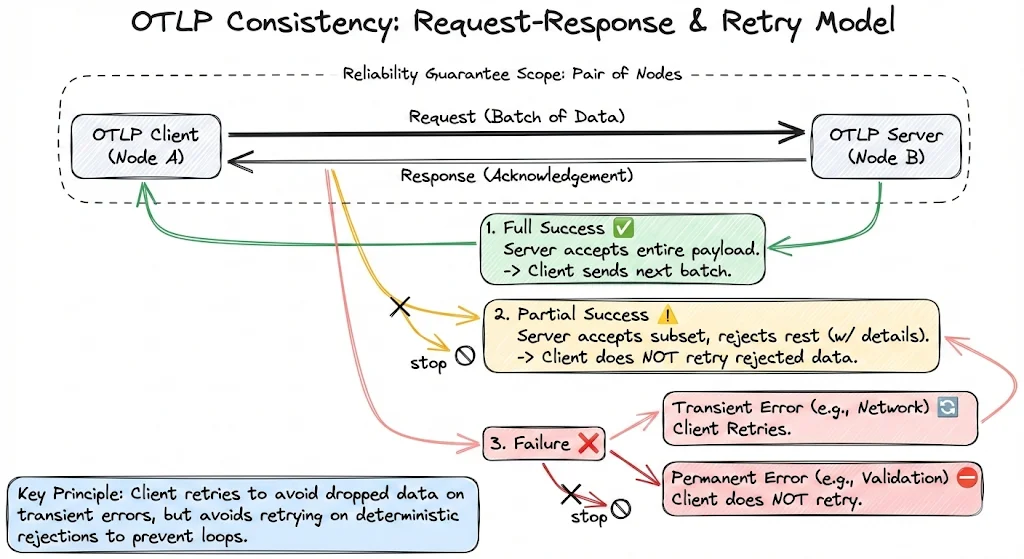

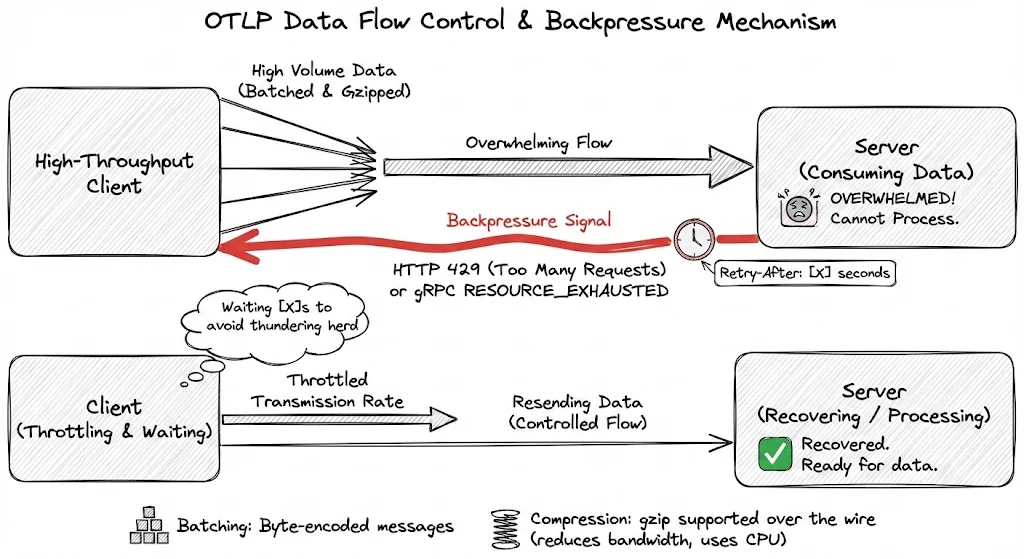

Built-in Backpressure and Flow Control

Backpressure is a flow-control mechanism: when a consumer cannot keep up with a producer, it signals the producer to slow down or pause, preventing the consumer from being overwhelmed or crashing.

High-throughput OTLP clients can easily overwhelm the servers consuming their data. To handle these scenarios elegantly, OTLP integrates backpressure signalling directly into the protocol.

When a server cannot keep up with incoming data, rather than failing silently, it responds with a specific signal (e.g., an HTTP 429 Too Many Requests or 503 Service Unavailable response, or an UNAVAILABLE status in gRPC). Crucially, it can also tell the client how long to wait before trying again: a Retry-After header for HTTP, or RetryInfo details attached to the gRPC status. This simple mechanism helps avoid the “thundering herd” problem, where clients keep retrying immediately and overwhelm the server completely.
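A client-side sketch of this behavior: honor the server's Retry-After hint when one is given, and otherwise fall back to capped exponential backoff with random jitter so that many clients do not retry in lockstep. The function name, the defaults (1 s base, 30 s cap), and the "full jitter" strategy are assumptions for illustration, not values defined by OTLP. The sketch only handles a Retry-After value expressed in seconds, not the HTTP-date form.

```python
import random
from typing import Callable, Optional

def retry_delay(
    attempt: int,
    retry_after: Optional[str] = None,
    base: float = 1.0,
    cap: float = 30.0,
    rng: Callable[[], float] = random.random,
) -> float:
    """Seconds to wait before retry number `attempt` (0-based)."""
    if retry_after is not None and retry_after.strip().isdecimal():
        # The server told us how long to wait (Retry-After in seconds).
        return float(retry_after)
    # No usable hint: exponential backoff, capped so waits stay bounded.
    ceiling = min(cap, base * (2 ** attempt))
    # Full jitter: pick a random point in [0, ceiling) to spread clients out.
    return rng() * ceiling

# Deterministic examples (rng pinned) to show the schedule's shape.
print(retry_delay(0, retry_after="5"))          # 5.0
print(retry_delay(3, rng=lambda: 1.0))          # 8.0
print(retry_delay(10, rng=lambda: 1.0))         # 30.0
```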

Finally, OTLP supports gzip compression for high-volume scenarios. This lets clients significantly reduce network bandwidth by shrinking the payload, in exchange for some CPU cost for compressing and decompressing the messages.
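A quick way to see the trade-off is to gzip a repetitive batch with Python's standard library. The record layout below is a simplified, hypothetical stand-in rather than the full OTLP logs schema. Over OTLP/HTTP, a client that compresses its payload also sets the Content-Encoding: gzip request header so the server knows to decompress it.

```python
import gzip
import json

# A simplified, hypothetical batch of 500 similar log records.
records = [
    {"severityText": "INFO", "body": {"stringValue": f"order {i} processed"}}
    for i in range(500)
]
raw = json.dumps({"records": records}).encode("utf-8")

compressed = gzip.compress(raw)         # client side: compress before sending
restored = gzip.decompress(compressed)  # server side: decompress before decoding

assert restored == raw  # compression is lossless
print(f"{len(raw)} bytes -> {len(compressed)} bytes")
```

Telemetry batches tend to be highly repetitive (the same keys, severities, and message templates), which is why compression pays off well here.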

Transport Protocols: gRPC and HTTP

OTLP supports data transmission over two protocols: gRPC and HTTP. Both transports use the same underlying Protobuf schema, so your data has the same structure regardless of which protocol you choose.

OTLP/gRPC: The Performant Option

OTLP/gRPC uses port 4317 by default. It is often the preferred transport because of its efficiency.

gRPC runs on top of HTTP/2 and uses multiplexing: instead of opening a new TCP connection for each request, it allows multiple data streams (traces, logs, and metrics) to share the same TCP connection. This significantly reduces the network overhead and CPU cost of repeated connection handshakes, making it ideal for high-throughput environments.

OTLP/HTTP: Universal Fallback

While gRPC is performant, it may not be accepted in strict network environments. Certain firewalls, proxies or old web environments may not accept gRPC or the underlying HTTP/2 traffic. HTTP is also the standard for browser-based environments (such as SPAs), which cannot easily use gRPC.

In this mode, clients send data to signal-specific endpoints (/v1/traces, /v1/metrics, and /v1/logs, on port 4318 by default) via standard POST requests. OTLP over HTTP is flexible regarding payload formats: clients send Protobuf-encoded data by setting the Content-Type: application/x-protobuf header. Alternatively, for easier debugging or for tools that lack Protobuf support, they can send JSON with Content-Type: application/json.
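As a sketch of how a client targets these endpoints, the helper below appends the signal path to a base URL and picks the matching Content-Type. The function and constant names are hypothetical; the paths, the default port 4318, and the content types come from the OTLP/HTTP conventions described above. OpenTelemetry SDKs do something similar when only a base OTEL_EXPORTER_OTLP_ENDPOINT is configured.

```python
import urllib.request

DEFAULT_HTTP_ENDPOINT = "http://localhost:4318"  # OTLP/HTTP default port is 4318
SIGNAL_PATHS = {"traces": "v1/traces", "metrics": "v1/metrics", "logs": "v1/logs"}
CONTENT_TYPES = {"protobuf": "application/x-protobuf", "json": "application/json"}

def otlp_http_target(signal: str, encoding: str = "protobuf",
                     base: str = DEFAULT_HTTP_ENDPOINT) -> tuple:
    """Return (url, content_type) for an OTLP/HTTP export of one signal."""
    url = base.rstrip("/") + "/" + SIGNAL_PATHS[signal]
    return url, CONTENT_TYPES[encoding]

url, content_type = otlp_http_target("traces", encoding="json")
request = urllib.request.Request(
    url,
    data=b'{"resourceSpans": []}',  # an empty but well-formed OTLP/JSON trace payload
    headers={"Content-Type": content_type},
    method="POST",
)
print(request.full_url, request.get_header("Content-type"))
# http://localhost:4318/v1/traces application/json
```

The request is only constructed here; passing it to urllib.request.urlopen would send it to a running collector.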

Using JSON in pre-production or test environments can be convenient, as engineers can read the payload without any decoding.

Watch Out: OTLP enforces strict rules for JSON to ensure data compatibility. The most common pitfall is that OTLP requires traceId and spanId to be hex-encoded strings, not base64 as in the standard Protobuf JSON mapping.

- Correct: "traceId": "5b8efff7..."
- Incorrect: "traceId": "W47/95gD..." (Base64)
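The difference between the two encodings is easy to reproduce with Python's standard library. This sketch uses an arbitrary example trace ID (not the one abbreviated above) and a hypothetical validator, `is_valid_otlp_json_trace_id`, that checks the OTLP/JSON expectations: 32 hex characters for the 16-byte ID, and not all zeros (an all-zero trace ID is invalid).

```python
import base64
import string

trace_id = bytes.fromhex("4bf92f3577b34da6a3ce929d0e0e4736")  # 16 raw bytes (example ID)

hex_form = trace_id.hex()                       # what OTLP/JSON expects
b64_form = base64.b64encode(trace_id).decode()  # what the standard Protobuf JSON mapping would produce

def is_valid_otlp_json_trace_id(value: str) -> bool:
    """Hypothetical check: 32 hex characters (16 bytes) and not all zeros."""
    return (
        len(value) == 32
        and all(c in string.hexdigits for c in value)
        and int(value, 16) != 0
    )

print(hex_form, is_valid_otlp_json_trace_id(hex_form))      # 4bf92f3577b34da6a3ce929d0e0e4736 True
print(b64_form[:8], is_valid_otlp_json_trace_id(b64_form))  # S/kvNXez False
```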

Implementing OTLP in Your Tech Stack

You have now gained a deep understanding of what OTLP is, why it’s important, and how its internals work. The next logical step is to integrate OpenTelemetry into your tech stack. You can get started by following our documentation for Java instrumentation or Python instrumentation.

You will also require an observability platform to ingest and visualize your telemetry data when instrumenting your applications. SigNoz is built natively on OpenTelemetry and OTLP.

You can choose between various deployment options in SigNoz. The easiest way to get started is SigNoz Cloud. We offer a 30-day free trial account with access to all features.

Those who have data privacy concerns and can't send their data outside their infrastructure can sign up for either the enterprise self-hosted or the BYOC offering.

Those who have the expertise to manage SigNoz themselves or just want to start with a free self-hosted option can use our community edition.