Top 7 LangSmith Alternatives for LLM Observability in 2026

TL;DR

- SigNoz: Best for unified LLM and full-stack application observability (traces, metrics, logs) in a single platform. Its ingest-based pricing can make tracing significantly cheaper than specialized LLM-only tools.

- Langfuse: Best for teams that want framework-agnostic observability with full self-hosting control. It delivers deep evaluation and dataset tools without vendor lock-in, though custom integrations require some setup.

- HoneyHive: Best for complex multi-agent setups that need session replays and automations, with enterprise self-hosting or BYOC options and CI/CD workflows. Its focus leans toward production alerting rather than early prototyping.

LangSmith by LangChain is a unified platform for developing, collaborating, testing, deploying, and monitoring LLM applications. Teams turn to it primarily for its tracing capabilities that make complex agent chains visible and debuggable, along with evaluation frameworks for testing prompts and a safe playground for experimentation. For teams already invested in LangChain, it's a natural starting point that delivers value quickly.

For early-stage projects or teams deeply embedded in the LangChain ecosystem, these features deliver solid value without requiring much setup. However, scaling reveals some friction points. Per-trace pricing climbs quickly as your production traffic grows, making costs harder to predict and manage. The tight coupling with LangChain becomes restrictive when you want to experiment with other frameworks or adopt a multi-framework architecture. Self-hosting exists as an alternative, but comes with data retention constraints and integration challenges that demand additional engineering effort to work around.

Top LangSmith Alternatives You Can Consider

In the following sections, we explore LangSmith alternatives that address these scaling challenges. We compare them on framework flexibility (native support beyond LangChain), cost structure, and observability depth (evaluation frameworks, production monitoring) to help you find the solution that fits your LLM development workflow.

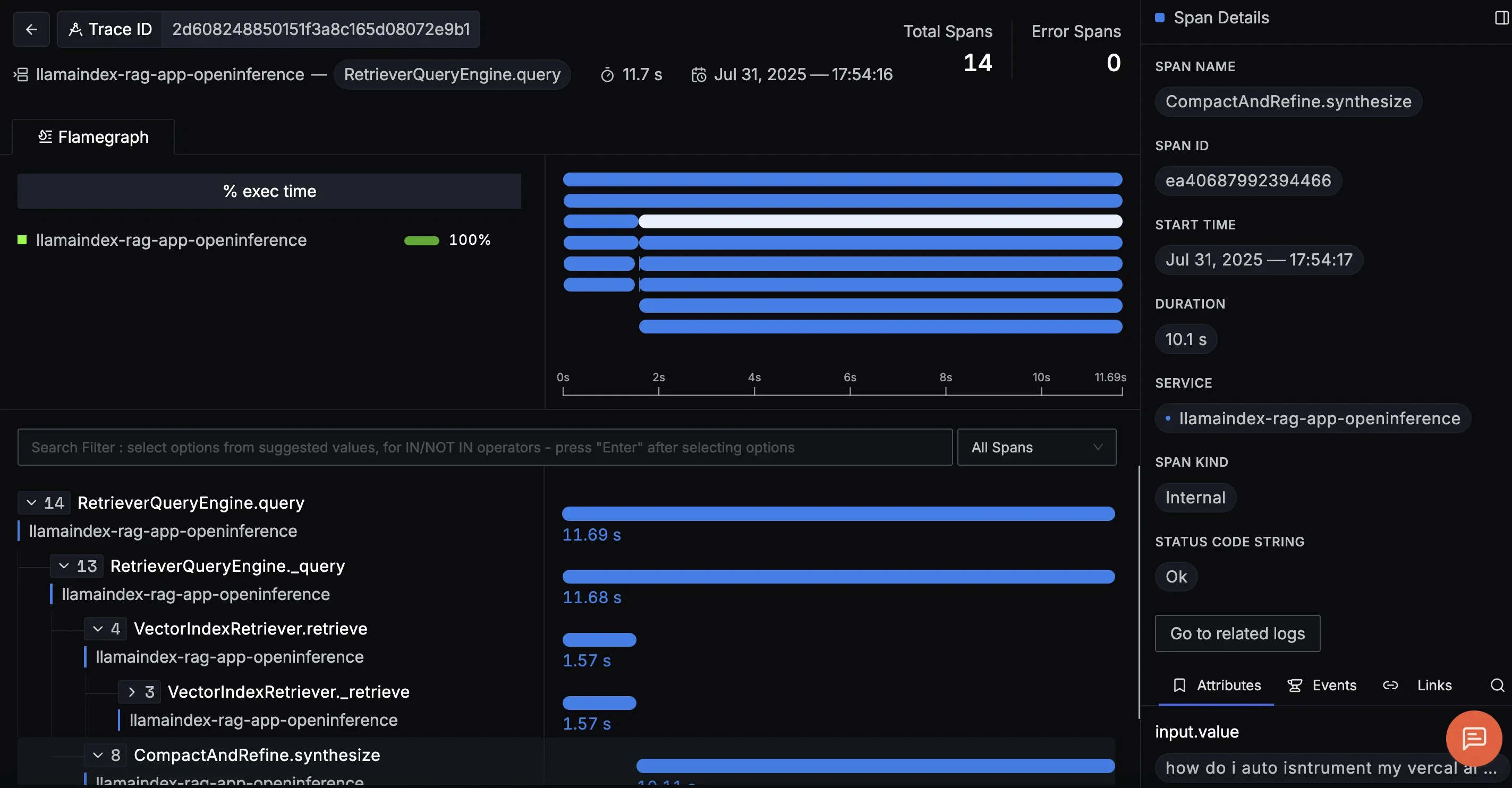

SigNoz: LLM + Application Observability in One Platform

SigNoz is an OpenTelemetry-native observability platform designed for full-stack production monitoring with broad framework support.

Key Features

- Full-Stack Production Monitoring: SigNoz provides distributed tracing for LLM request flows, unified logs for error debugging, and metrics for latency, token usage, and cost, along with pre-built dashboards for LLM-specific insights such as token consumption and response times. Anomaly detection and signal correlation provide end-to-end visibility, connecting LLM behavior to the underlying infrastructure.

- OpenTelemetry-Native Architecture: Because SigNoz is an OpenTelemetry-native observability backend, it works with any LLM framework that emits OpenTelemetry traces, metrics, or logs.
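To make "emit OpenTelemetry traces" concrete, the stdlib-only sketch below mimics the OpenTelemetry span data model: a trace ID shared by every operation in a request, a span ID per operation, a parent link, and attributes. It is not the `opentelemetry-sdk` API; in practice you would use that SDK with an OTLP exporter pointed at SigNoz. `start_span` and `FINISHED` are hypothetical names, and the `gen_ai.*` attribute keys follow OpenTelemetry's GenAI semantic conventions, which are still experimental and may change.

```python
import contextlib
import contextvars
import secrets
import time
from dataclasses import dataclass, field
from typing import Iterator, List, Optional

# Holds the currently active span so nested spans can find their parent.
_current_span = contextvars.ContextVar("current_span", default=None)


@dataclass
class Span:
    name: str
    trace_id: str                  # 128-bit ID shared by every span in one request
    span_id: str                   # 64-bit ID unique to this span
    parent_span_id: Optional[str]  # None for the root span
    attributes: dict = field(default_factory=dict)
    start_ns: int = 0
    end_ns: int = 0


# Stand-in for an exporter: finished spans are collected here instead of sent over OTLP.
FINISHED: List[Span] = []


@contextlib.contextmanager
def start_span(name: str, attributes: Optional[dict] = None) -> Iterator[Span]:
    """Open a span as a child of whichever span is active (hypothetical helper)."""
    parent = _current_span.get()
    span = Span(
        name=name,
        trace_id=parent.trace_id if parent else secrets.token_hex(16),
        span_id=secrets.token_hex(8),
        parent_span_id=parent.span_id if parent else None,
        attributes=dict(attributes or {}),
        start_ns=time.time_ns(),
    )
    token = _current_span.set(span)
    try:
        yield span
    except Exception as exc:
        span.attributes["error.type"] = type(exc).__name__  # record, then re-raise
        raise
    finally:
        span.end_ns = time.time_ns()
        _current_span.reset(token)  # make the parent the active span again
        FINISHED.append(span)


# An agent run (root span) containing one LLM call (child span).
with start_span("agent.run"):
    with start_span("chat example-model", {
        "gen_ai.operation.name": "chat",
        "gen_ai.request.model": "example-model",  # hypothetical model name
        "gen_ai.usage.input_tokens": 812,
        "gen_ai.usage.output_tokens": 164,
    }):
        pass  # the actual LLM call would go here

for s in FINISHED:
    print(s.name, "root" if s.parent_span_id is None else "child", s.attributes)
```

Because the child span carries its parent's trace ID, a backend can stitch the LLM call into the same trace as the surrounding application work, which is what enables the correlation described above.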

Why consider SigNoz over LangSmith?

SigNoz provides framework-agnostic observability through OpenTelemetry standards, supporting over 20 LLM frameworks and providers, with native instrumentation for LangChain, LlamaIndex, OpenAI, Anthropic, and custom setups. It offers a free, open-source community edition for self-hosting with no licensing fees, a managed SigNoz Cloud, and enterprise BYOC options for compliance-sensitive deployments that require data sovereignty. LangSmith, on the other hand, integrates deeply with the LangChain ecosystem for LLM-specific tracing, evaluation, and debugging. It offers Agent Builder for no-code prototyping, Studio for visual design, and evaluation frameworks with dataset management. It is optimized for agent-based development, with managed cloud as the primary offering and enterprise self-hosting available.

Both platforms provide observability, but with different focuses. SigNoz emphasizes full-stack production monitoring with distributed tracing, metrics dashboards, anomaly detection, and signal correlation across LLMs and traditional services, making it a better choice for end-to-end visibility in live environments without ecosystem lock-in. LangSmith, on the other hand, provides specialized evaluation frameworks with built-in quality measurement tools, offline and online evals, annotation queues for human feedback, and insights for performance analysis.

Both platforms support self-hosting and cloud options, but structure pricing differently. SigNoz uses transparent ingest-based pricing with no per-user fees and offers startup discounts; self-hosting incurs only infrastructure costs, which is often significantly cheaper for metric-heavy workloads. LangSmith combines seat-based and usage-driven pricing: a free Developer plan, paid tiers that scale with team size and trace volume, and custom enterprise pricing. Its free tier is generous for experimentation, but costs rise as teams grow and per-trace billing accumulates.
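A quick way to see how these two structures diverge is to model them. The sketch below assumes two simplified billing formulas: per-seat fees plus per-trace overage, versus cost driven only by data volume ingested. The function names are hypothetical, and every rate you pass in is a placeholder rather than SigNoz's or LangSmith's actual price, so take current figures from each vendor's pricing page.

```python
# Simplified billing models. All rates passed to these functions are placeholders;
# substitute current figures from each vendor's pricing page.


def seat_plus_trace_cost(seats: int, traces: int, seat_price: float,
                         included_traces: int, price_per_1k_traces: float) -> float:
    """Monthly cost when billing combines per-seat fees with per-trace overage."""
    billable_traces = max(0, traces - included_traces)  # traces beyond the included quota
    return seats * seat_price + billable_traces / 1000 * price_per_1k_traces


def ingest_cost(traces: int, kb_per_trace: float, price_per_gb: float) -> float:
    """Monthly cost when billing depends only on data volume ingested (no seat fees)."""
    gb_ingested = traces * kb_per_trace / 1_000_000  # decimal units: 1 GB = 1,000,000 KB
    return gb_ingested * price_per_gb
```

Holding traffic fixed and doubling `seats` raises only the first estimate, while growth in trace volume raises both; which model comes out cheaper depends entirely on the real rates and your average trace size.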

Get Started with SigNoz

You can choose between various deployment options in SigNoz. The easiest way to get started with SigNoz is SigNoz Cloud. We offer a 30-day free trial account with access to all features.

Those with data privacy concerns who can’t send their data outside their infrastructure can sign up for either the enterprise self-hosted or BYOC offering.

Those with the expertise to manage SigNoz themselves, or who want to start with a free, self-hosted option, can use our community edition.

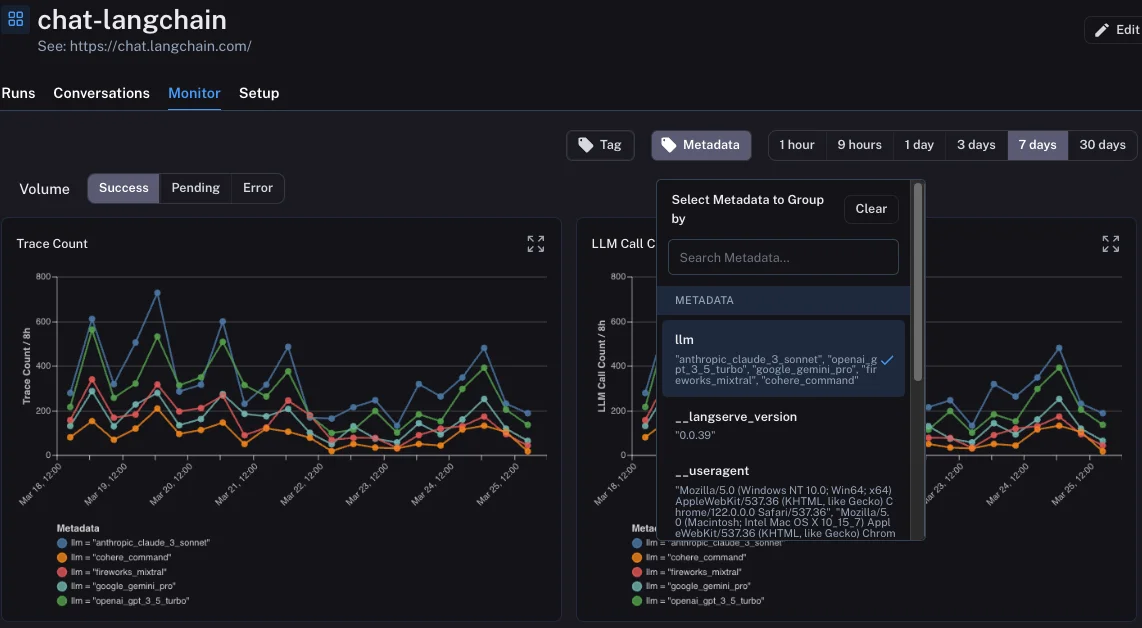

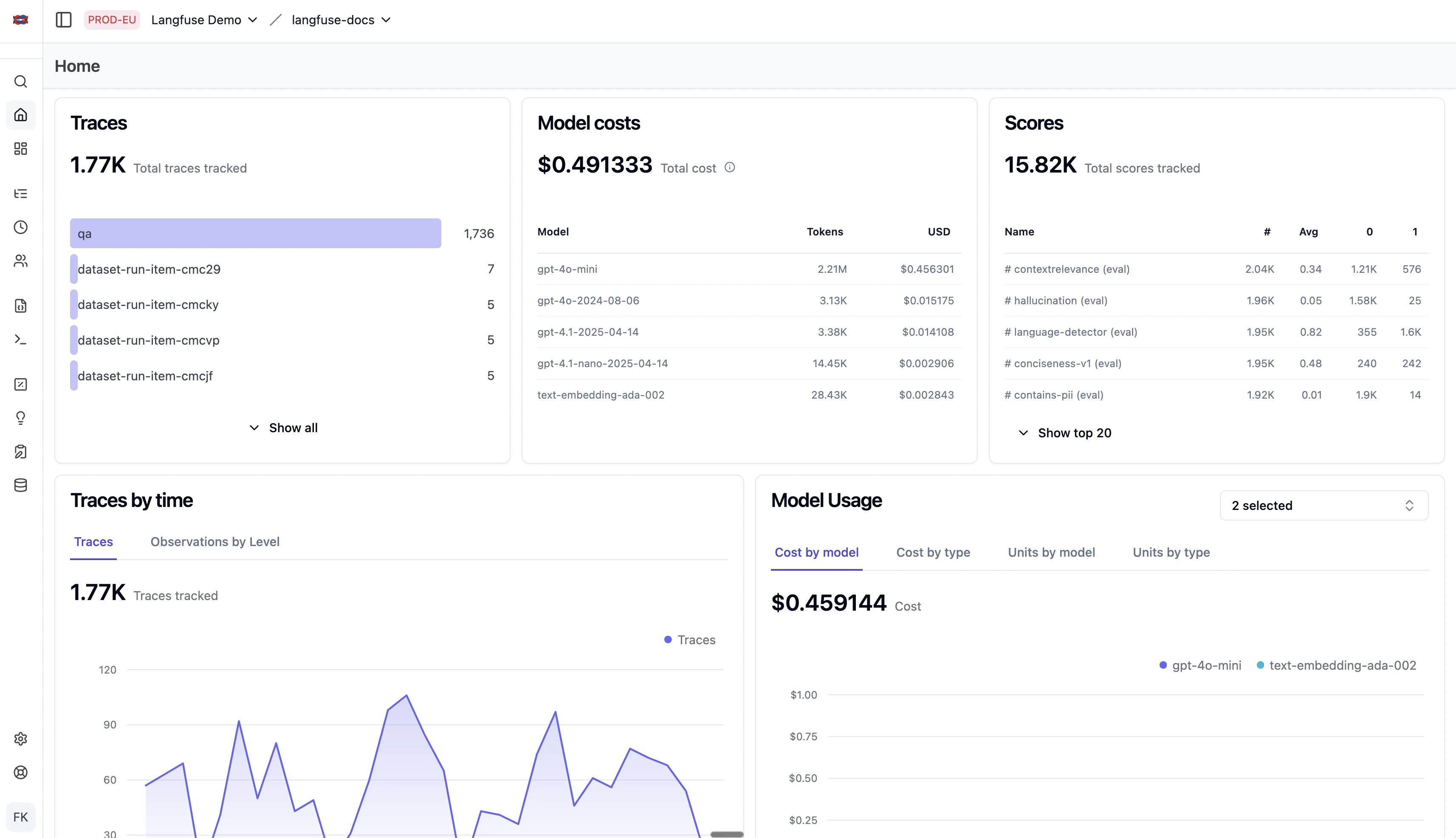

Langfuse: Self-Hosted Flexibility

Langfuse brings production-grade LLM observability to any framework while keeping your data under your control.

Key Features

- Framework-Agnostic Observability: Langfuse supports OpenTelemetry-based tracing that works across any LLM framework, plus native integrations with over 20 libraries. This eliminates vendor lock-in and enables consistent observability across diverse LLM stacks.

- Dataset Synthesis & Evaluation Studio: Langfuse can automatically generate evaluation datasets from your production traces, create adversarial test cases, and build regression test suites.
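The core idea behind turning production traces into a regression dataset can be sketched in a few lines. This is not Langfuse's API: the `Trace` and `DatasetItem` shapes, the 0.5 score threshold, and `build_regression_dataset` are hypothetical, chosen only to show the filter-and-deduplicate step.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class Trace:
    trace_id: str
    input: str
    output: str
    score: Optional[float]  # eval or user-feedback score in [0, 1]; None if unscored


@dataclass
class DatasetItem:
    input: str
    observed_output: str
    source_trace_id: str
    expected_output: Optional[str] = None  # filled in later by a reviewer


def build_regression_dataset(traces: Iterable[Trace], threshold: float = 0.5) -> List[DatasetItem]:
    """Turn low-scoring production traces into test items, one per distinct input."""
    seen = set()
    items = []
    for t in traces:
        if t.score is None or t.score >= threshold:
            continue  # skip unscored and passing traces
        key = " ".join(t.input.lower().split())  # normalize case and whitespace
        if key in seen:
            continue  # the same question already produced a test item
        seen.add(key)
        items.append(DatasetItem(t.input, t.output, t.trace_id))
    return items


traces = [
    Trace("t1", "What is our refund policy?", "I don't know.", 0.2),
    Trace("t2", "what is our  refund policy?", "No idea.", 0.1),
    Trace("t3", "Reset my password", "Go to Settings > Security.", 0.9),
    Trace("t4", "Cancel my order", "Done.", None),
]
dataset = build_regression_dataset(traces)
print([item.source_trace_id for item in dataset])  # ['t1']
```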

Why consider Langfuse over LangSmith?

Langfuse gives you deployment flexibility: you can either use their cloud service or host it yourself with Docker or Kubernetes. Since it's open-source (MIT license), you control your data completely and can customize whatever you need. It's built to be framework-agnostic, so it works with any LLM stack, not just one ecosystem.

LangSmith, on the other hand, is tightly integrated with LangChain, making it easy to adopt if you're already in that ecosystem. It excels at building and debugging agentic workflows with its graph-based tools and comes with powerful built-in evaluation features. It's primarily a managed service, though enterprise teams can arrange self-hosting.

Langfuse charges based on usage, so adding team members doesn't raise your bill. You also get S3-compatible storage for archiving old data cheaply, which keeps costs predictable even as your dataset grows to millions of traces. LangSmith combines per-seat and per-trace pricing, so each new team member increases your bill. Enterprise pricing requires custom quotes, making costs hard to predict when your AI workloads spike or your team expands.

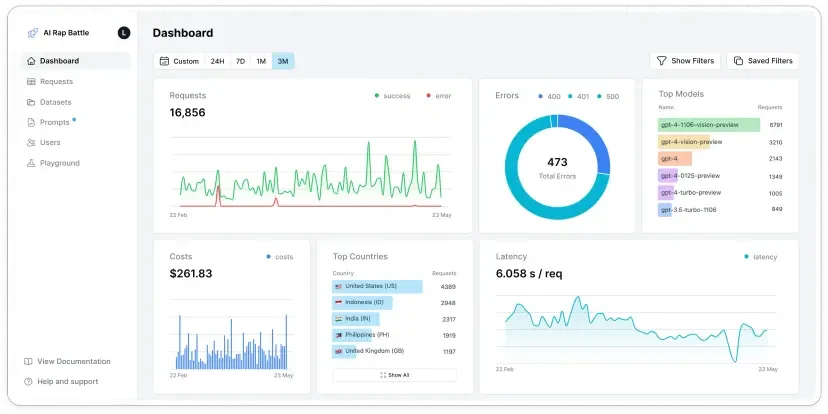

Helicone: Proxy-Based Monitoring

Helicone is an LLM observability platform built as a lightweight proxy for logging, monitoring, and cost management across multiple LLM providers.

Key Features

- Proxy-Based Architecture: Helicone operates as a lightweight proxy that routes LLM requests through its endpoint, enabling seamless integration with just a URL change and no code refactoring.

- Real-Time Feedback and User Analytics: Helicone captures end-user feedback directly within your LLM applications through built-in feedback widgets and APIs, automatically correlating user ratings with specific prompts, model responses, and cost metrics.
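Helicone collects the ratings for you; the analysis this enables looks roughly like the sketch below, which groups feedback by prompt version and model and reports approval rate alongside average cost. The record fields and `summarize_feedback` are hypothetical and do not reflect Helicone's export format.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def summarize_feedback(records: Iterable[dict]) -> Dict[Tuple[str, str], dict]:
    """Group feedback by (prompt_version, model) and report rating and cost per group."""
    groups = defaultdict(list)
    for r in records:
        groups[(r["prompt_version"], r["model"])].append(r)
    return {
        key: {
            "requests": len(rows),
            # rating is 1 for thumbs up and 0 for thumbs down (hypothetical encoding)
            "positive_rate": sum(r["rating"] for r in rows) / len(rows),
            "avg_cost_usd": sum(r["cost_usd"] for r in rows) / len(rows),
        }
        for key, rows in groups.items()
    }


records = [
    {"prompt_version": "v1", "model": "model-a", "rating": 1, "cost_usd": 0.004},
    {"prompt_version": "v1", "model": "model-a", "rating": 0, "cost_usd": 0.006},
    {"prompt_version": "v2", "model": "model-b", "rating": 1, "cost_usd": 0.001},
]
for key, stats in summarize_feedback(records).items():
    print(key, stats)
```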

Why consider Helicone over LangSmith?

Helicone works with any LLM provider or framework through its simple proxy architecture. Whether you're using LangChain, LlamaIndex, Haystack, or calling APIs directly, Helicone tracks everything without vendor lock-in. You can self-host for data control or use their managed cloud service.
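To illustrate the "URL change" integration, here is a minimal sketch using only Python's standard library. It assumes Helicone's OpenAI-compatible proxy at `https://oai.helicone.ai/v1` and a `Helicone-Auth` header, as described in Helicone's documentation at the time of writing; check the current docs before relying on either. `build_chat_request` is a hypothetical helper, and the request is only built, not sent.

```python
import json
import urllib.request
from typing import Optional

OPENAI_BASE_URL = "https://api.openai.com/v1"
# Assumed Helicone proxy endpoint for OpenAI traffic; verify against Helicone's current docs.
HELICONE_BASE_URL = "https://oai.helicone.ai/v1"


def build_chat_request(base_url: str, openai_key: str, payload: dict,
                       helicone_key: Optional[str] = None) -> urllib.request.Request:
    """Build the same chat request, either direct or routed through the proxy."""
    headers = {
        "Authorization": f"Bearer {openai_key}",
        "Content-Type": "application/json",
    }
    if helicone_key is not None:
        # The proxy identifies your Helicone account via this extra header (assumed name).
        headers["Helicone-Auth"] = f"Bearer {helicone_key}"
    # Note: urllib stores header names via str.capitalize(), e.g. "Helicone-auth";
    # HTTP header names are case-insensitive, so this does not change behavior.
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


payload = {"model": "example-model", "messages": [{"role": "user", "content": "Hello"}]}
direct = build_chat_request(OPENAI_BASE_URL, "YOUR_OPENAI_KEY", payload)
proxied = build_chat_request(HELICONE_BASE_URL, "YOUR_OPENAI_KEY", payload,
                             helicone_key="YOUR_HELICONE_KEY")
# urllib.request.urlopen(proxied) would send it; omitted here to avoid a network call.
print(proxied.full_url)
```

The request body is identical in both cases; only the base URL and one header change, which is why proxy-based tools can be adopted without touching application logic.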

On the other hand, LangSmith is purpose-built for LangChain and LangGraph, offering zero-config setup if you're in that ecosystem. It provides deep tracing for agent workflows, visual graph debugging, and sophisticated evaluation tools that shine when you need to diagnose complex multi-step agents.

Pricing-wise, Helicone uses request-based pricing that stays predictable as volume scales, making it economical for production workloads. LangSmith charges per trace, which can add up quickly at production scale since every request counts individually.

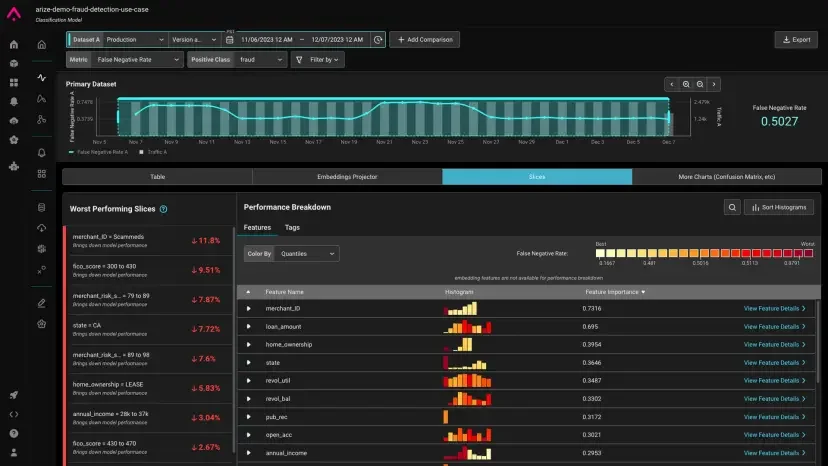

Arize Phoenix: ML-Native Tracing

Arize Phoenix merges traditional ML observability with modern LLM monitoring, excelling at drift detection and embedding analysis.

Key Features

- Embedding Visualization and Drift Detection: Phoenix provides interactive UMAP visualizations of embedding spaces, allowing you to explore clusters of similar prompts, responses, and retrieved documents in 2D/3D space.

- Advanced ML-Specific Tools: The platform provides embedding visualizations for semantic search optimization, drift detection for model performance shifts, fine-tuning support through dataset curation, and production safeguards, including real-time guardrails against hallucinations or bias.
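Drift is often summarized by comparing a reference window of embeddings against a production window. A simple, widely used signal is the Euclidean distance between the two windows' centroids (mean vectors). The sketch below assumes that metric and a hypothetical alert threshold; Phoenix's own drift calculations and defaults may differ, and real embeddings have hundreds of dimensions rather than the two used here for readability.

```python
import math
from typing import List, Sequence


def centroid(vectors: Sequence[Sequence[float]]) -> List[float]:
    """Component-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(component) / n for component in zip(*vectors)]


def centroid_drift(reference: Sequence[Sequence[float]],
                   production: Sequence[Sequence[float]]) -> float:
    """Euclidean distance between the mean embeddings of two windows of data."""
    return math.dist(centroid(reference), centroid(production))


DRIFT_THRESHOLD = 0.25  # hypothetical; tune against your own baseline

reference = [[0.0, 0.0], [2.0, 0.0]]
production = [[3.0, 4.0], [5.0, 4.0]]
drift = centroid_drift(reference, production)
print(f"drift={drift:.2f}", "ALERT" if drift > DRIFT_THRESHOLD else "ok")
```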

Why consider Arize Phoenix over LangSmith?

Arize Phoenix is framework-agnostic and fully open-source, letting you deploy via Docker or Python without vendor lock-in. It brings ML-focused tools like embedding visualizations, drift detection, and specialized evaluations for hallucinations and RAG quality. Enterprise teams can upgrade to Arize AX for managed hosting with advanced security features and compliance certifications.

LangSmith, by contrast, integrates seamlessly with LangChain and LangGraph, offering zero-config setup for that ecosystem. It provides comprehensive tracing, interactive prompt playgrounds, and evaluation frameworks optimized for iterative development within LangChain workflows.

Phoenix's open-source version is completely free for self-hosting with no licensing fees, making it accessible for any team size. Arize AX uses custom enterprise pricing that scales with usage and includes compliance features. LangSmith offers usage-based pricing with a free tier and transparent paid plans that grow with your team.

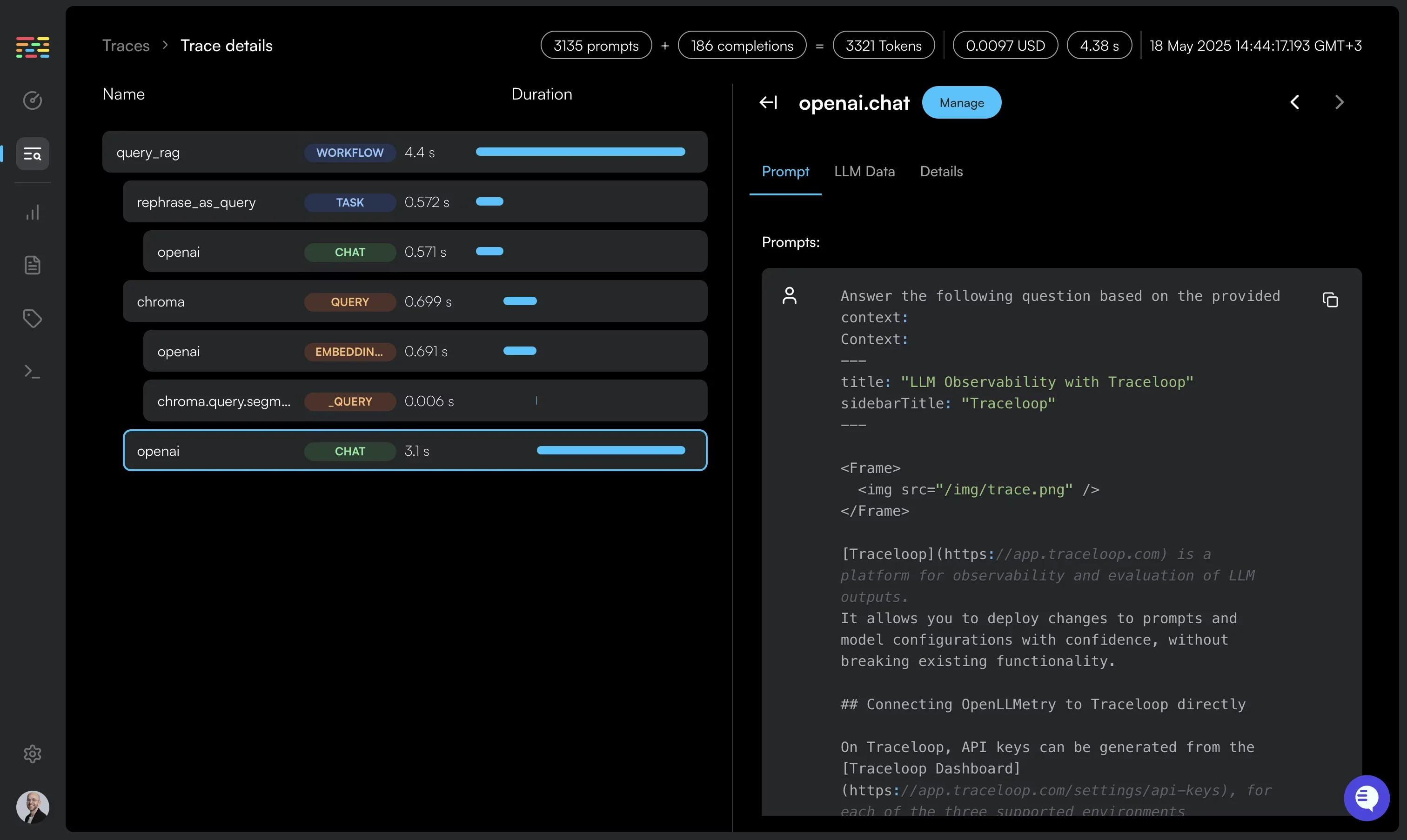

OpenLLMetry: Non-Intrusive OTel for Existing Stacks

OpenLLMetry leverages OpenTelemetry to slot LLM observability into your existing monitoring stack seamlessly.

Key Features

- OpenTelemetry-Native Architecture: OpenLLMetry automatically instruments LLM interactions as trace spans, so errors can be correlated across RAG pipelines or multi-agent workflows, and exports the data in OpenTelemetry format to over 25 backends.

- Privacy-First Local Deployment: OpenLLMetry can be deployed entirely on-premises or within your own VPC with complete data sovereignty, sending zero telemetry data to external servers.
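Auto-instrumentation is what makes this approach non-intrusive: the instrumentation library wraps the client methods your code already calls, so call sites stay unchanged. The stdlib sketch below shows only that wrapping technique. `FakeLLMClient`, `instrument`, and `RECORDED` are hypothetical, and OpenLLMetry's real instrumentations emit OpenTelemetry spans rather than appending dicts to a list.

```python
import functools
import time

# Stand-in for a span exporter: one record per instrumented call.
RECORDED = []


class FakeLLMClient:
    """Hypothetical stand-in for a provider SDK client."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


def instrument(cls: type, method_name: str) -> None:
    """Replace cls.method_name with a wrapper that records timing and status."""
    original = getattr(cls, method_name)

    @functools.wraps(original)
    def wrapper(self, *args, **kwargs):
        start = time.perf_counter()
        status = "OK"
        try:
            return original(self, *args, **kwargs)
        except Exception:
            status = "ERROR"
            raise  # instrumentation must not swallow application errors
        finally:
            RECORDED.append({
                "name": f"{cls.__name__}.{method_name}",
                "status": status,
                "duration_s": time.perf_counter() - start,
            })

    setattr(cls, method_name, wrapper)


instrument(FakeLLMClient, "complete")  # done once, at startup
client = FakeLLMClient()
result = client.complete("hello")      # the call site itself is unchanged
print(result, RECORDED)
```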

Why consider OpenLLMetry over LangSmith?

OpenLLMetry is built on OpenTelemetry standards, making it vendor-neutral and compatible with your existing observability tools like Grafana, Datadog, or New Relic. It's fully open-source under Apache 2.0 and works with any framework like LangChain, Haystack, LlamaIndex, or custom implementations. You deploy it wherever you want and pipe data to the backend you already use.

LangSmith is a purpose-built platform for LangChain and LangGraph with seamless integration. It offers polished evaluation frameworks, human-in-the-loop workflows, and an intuitive collaborative interface designed for production monitoring within the LangChain ecosystem.

On pricing, OpenLLMetry's core SDK is completely free, and you pay only for your chosen backend. Optional managed hosting is also available, without per-user fees. LangSmith uses a freemium model with usage-based pricing that scales with your team and trace volume. Costs are predictable for LangChain workflows but can climb at high production volumes.

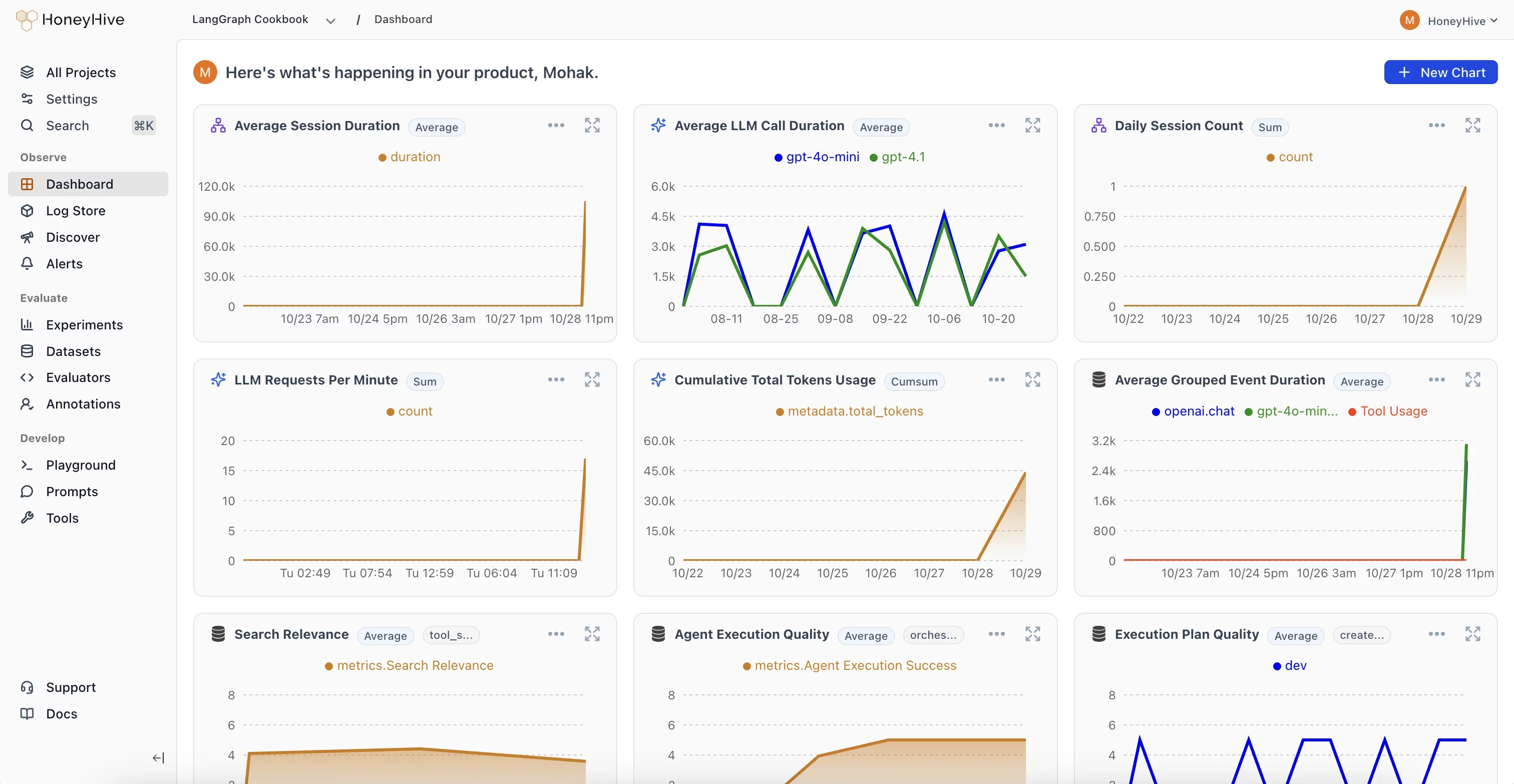

HoneyHive: Agent Reliability Platform

HoneyHive provides end-to-end observability and evaluation tooling purpose-built for complex multi-agent architectures in production.

Key Features

- Agent-Centric Observability: HoneyHive provides distributed tracing for multi-step AI pipelines involving prompts, retrieval, tool calls, and model outputs, with session replays and graph/timeline views for debugging multi-agent interactions.

- Production Automations: The platform offers continuous alerts for cost, safety violations, or quality drops, with automations that route failing prompts to human review queues, add problematic cases to datasets automatically, and integrate with CI/CD for automated test suites.
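HoneyHive configures these automations inside its platform; the sketch below only illustrates the kind of rule logic involved: alert on cost or safety, send low-quality sessions to human review, and add problematic sessions to a test dataset. The `Event` fields, default thresholds, and `apply_automations` function are hypothetical, not HoneyHive's API.

```python
from dataclasses import dataclass, field
from typing import Iterable, List


@dataclass
class Event:
    """Hypothetical per-session record produced by tracing and online evaluators."""
    session_id: str
    cost_usd: float
    quality_score: float  # evaluator score in [0, 1]
    safety_flagged: bool


@dataclass
class Outcome:
    alerts: List[str] = field(default_factory=list)
    review_queue: List[str] = field(default_factory=list)  # sessions for human review
    dataset: List[str] = field(default_factory=list)       # sessions added to a test dataset


def apply_automations(events: Iterable[Event], max_cost_usd: float = 0.50,
                      min_quality: float = 0.7) -> Outcome:
    """Apply simple threshold rules; the default thresholds are hypothetical."""
    out = Outcome()
    for e in events:
        if e.safety_flagged:
            out.alerts.append(f"safety:{e.session_id}")
        if e.cost_usd > max_cost_usd:
            out.alerts.append(f"cost:{e.session_id}")
        failed_quality = e.quality_score < min_quality
        if failed_quality:
            out.review_queue.append(e.session_id)
        if failed_quality or e.safety_flagged:
            out.dataset.append(e.session_id)
    return out


outcome = apply_automations([
    Event("s1", cost_usd=0.10, quality_score=0.90, safety_flagged=False),
    Event("s2", cost_usd=0.80, quality_score=0.40, safety_flagged=False),
    Event("s3", cost_usd=0.05, quality_score=0.95, safety_flagged=True),
])
print(outcome)
```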

Why consider HoneyHive over LangSmith?

HoneyHive is framework-agnostic and built on OpenTelemetry standards, working seamlessly with LangGraph, AutoGPT, or any custom agent framework. HoneyHive is a commercial platform offering enterprise deployment options that include self-hosting or BYOC. It has Git-native versioning and CI/CD integration, making it feel like a developer tool.

LangSmith integrates deeply with LangChain and LangGraph for zero-friction debugging and tracing. It offers AI-powered insights like conversation clustering, asynchronous data collection to avoid latency, and comprehensive evaluation frameworks optimized for LangChain workflows.

Pricing-wise, HoneyHive uses event-based pricing, with published free-tier limits (e.g., 10K events per month) and custom Enterprise plans for higher limits and additional deployment options. LangSmith uses trace-based pricing with a free tier for limited usage and paid plans that scale with volume. LangSmith costs can grow quickly in high-volume agent applications, though all evaluation features are included.

Confident AI: Testing-First Evaluation

Confident AI is a cloud-based LLM evaluation and observability platform built on the open-source DeepEval framework.

Key Features

- Research-Backed Evaluation Metrics: Confident AI provides over 50 metrics for agents, RAG, chatbots, and multi-turn interactions, including LLM-as-a-judge evaluators, golden datasets for regression testing, and drift detection.

- Hybrid Local-to-Cloud Workflow: The platform enables developers to run evaluations locally via the open-source DeepEval library before scaling to the cloud for team-wide observability, production monitoring, and human-in-the-loop annotations.

Why consider Confident AI over LangSmith?

Confident AI is framework-agnostic and built on its open-source DeepEval foundation, working with any LLM setup through Python-first workflows. Developers run evaluations locally as pytest-style unit tests, then scale to the cloud for team collaboration.
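The pytest-style workflow looks roughly like the sketch below: a small golden dataset, a metric, and a test that fails when any case scores below a threshold. DeepEval supplies its own test-case types, assertion helpers, and research-backed metrics (including LLM-as-a-judge); this sketch deliberately avoids that API and uses plain pytest conventions with a toy keyword metric. `GOLDEN`, `generate_answer`, and `keyword_coverage` are hypothetical.

```python
# Run with `pytest this_file.py`; pytest collects functions named test_*.
from typing import List

GOLDEN = [
    {"input": "How do I reset my password?", "must_mention": ["settings", "security"]},
    {"input": "What is the refund window?", "must_mention": ["30 days"]},
]


def generate_answer(question: str) -> str:
    """Hypothetical stand-in for the LLM application under test."""
    canned = {
        "How do I reset my password?": "Open Settings, then Security, and choose Reset password.",
        "What is the refund window?": "Refunds are accepted within 30 days of purchase.",
    }
    return canned[question]


def keyword_coverage(output: str, keywords: List[str]) -> float:
    """Toy metric: fraction of required keywords found in the output (case-insensitive)."""
    text = output.lower()
    return sum(k.lower() in text for k in keywords) / len(keywords)


def test_golden_dataset() -> None:
    for case in GOLDEN:
        score = keyword_coverage(generate_answer(case["input"]), case["must_mention"])
        assert score >= 0.8, f"{case['input']!r} scored {score:.2f}"
```

Swapping the toy metric for an LLM-as-a-judge evaluator keeps the same structure: the golden dataset becomes the regression suite, and a drop in any case's score fails the build.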

LangSmith integrates seamlessly with LangChain and LangGraph, offering native support for chains and agents with real-time streaming of execution steps. It provides an interactive playground for prototyping and enterprise self-hosting options, optimized for LangChain production workflows.

Pricing-wise, Confident AI uses per-user (seat-based) pricing with a forever-free tier, plus usage limits or overages that cover trace volume and retention tiers. LangSmith combines seat-based and consumption pricing with a free tier, then charges per user plus additional fees based on trace volume. Its costs can grow at scale, though it provides deep value for high-volume LangChain applications.

Summary: Top LangSmith Alternatives

| Tool | Core Focus | Key Advantages Over LangSmith |
|---|---|---|
| Langfuse | Open-Source LLM Tracing with Strong Self-Hosting | Framework-agnostic with OpenTelemetry-based tracing and 20+ library integrations, eliminating vendor lock-in. Dataset synthesis and evaluation studio for automated adversarial testing. Deployment flexibility with MIT-licensed self-hosting via Docker or Kubernetes. |
| Helicone | Proxy-Based Monitoring | Proxy architecture for seamless integration via a URL change without code refactoring, supporting any LLM provider or framework. Real-time feedback widgets and user analytics that correlate ratings with prompts, models, and costs. |
| Arize Phoenix | ML-Native Tracing | Framework-agnostic and fully open-source, with Docker or Python self-hosting and no vendor lock-in. Embedding visualizations for semantic search optimization and drift detection for model shifts. Advanced ML tools such as fine-tuning support and real-time guardrails against hallucinations or bias. |
| OpenLLMetry | Non-Intrusive OTel for Existing Stacks | OpenTelemetry-native architecture that automatically instruments LLM interactions and exports to 25+ backends such as Grafana or Datadog. Privacy-first local deployment with complete data sovereignty and no external telemetry. |
| HoneyHive | Agent Reliability Platform | Agent-centric observability with distributed tracing for multi-step pipelines, plus session replays and graph views. Production automations with continuous alerts on cost, safety, and quality, human review routing, and CI/CD integration. |
| Confident AI | Testing-First Evaluation | 50+ research-backed metrics for agents, RAG, and multi-turn interactions, with LLM-as-a-judge evaluators and golden datasets. Hybrid local-to-cloud workflow via open-source DeepEval, scaling from pytest-style testing to production monitoring. |
| SigNoz | Unified OTel for AI Pipelines | Full-stack production monitoring with distributed tracing, metrics, logs, and anomaly detection that correlates AI with infrastructure. OpenTelemetry-native, with efficient handling of high-cardinality data. Flexible open-source self-hosting, BYOC, and cloud deployment, with transparent ingest-based pricing and no per-user fees. |

We hope we answered all your questions about LangSmith alternatives. If you have more questions, feel free to use the SigNoz AI chatbot or join our Slack community.

You can also subscribe to our newsletter for insights from observability nerds at SigNoz, and get open-source, OpenTelemetry, and devtool-building stories straight to your inbox.