OpenTelemetry - Understanding Traces vs. Spans

Distributed systems have become increasingly complex, making monitoring and troubleshooting performance issues challenging. OpenTelemetry emerges as a powerful solution to this problem, offering standardized observability for cloud-native applications. At the heart of OpenTelemetry's tracing capabilities lie two fundamental concepts: traces and spans. Understanding the distinction between these elements is crucial for developers and operations teams seeking to implement robust observability in their systems.

What is OpenTelemetry?

OpenTelemetry is an open-source observability framework designed to generate and collect telemetry data from cloud-native software. It provides a unified set of APIs, libraries, agents, and instrumentation to capture distributed traces, metrics, and logs from your applications and infrastructure. The project emerged from the merger of OpenCensus and OpenTracing, two popular observability projects. This consolidation aimed to create a single, vendor-neutral standard for telemetry data collection and transmission.

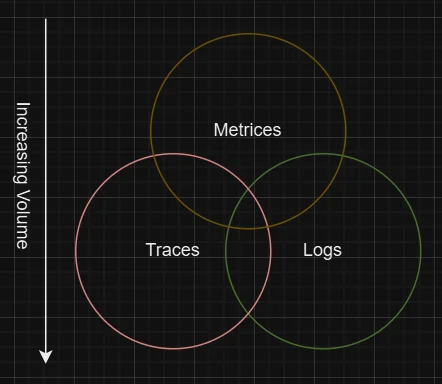

OpenTelemetry works with three main types of telemetry data, often called signals:

- Traces: Represent the journey of a request as it travels through the various components and services within a distributed system. Traces help identify performance bottlenecks, pinpoint the root causes of latency, and provide a holistic view of how services interact and respond under different conditions.

- Metrics: Capture numerical measurements of system performance over time, including values like CPU usage, memory consumption, error rates, and request latency. Metrics are typically gathered in intervals and can be used to analyze trends, set alerts, and quickly assess the health and performance of services.

- Logs: Record discrete events within the system, capturing detailed information about what happened at specific times. Logs provide the granular details needed to understand why and how issues occur, supplementing traces and metrics with information about specific errors, exceptions, or unexpected behavior within each component.

Why is it important?

The importance of OpenTelemetry lies in its ability to:

- Provide a consistent approach to instrumentation across different languages and frameworks: OpenTelemetry offers a unified API and SDKs for multiple languages, making it easier to instrument applications consistently. This eliminates the need to learn and manage separate instrumentation techniques for each language or framework, simplifying observability for teams that work with polyglot environments.

- Offer vendor-neutral data collection, allowing you to switch between various backend analysis tools: OpenTelemetry is built to work seamlessly with various observability backends, allowing you to select a tool that best suits your needs without being tied to a specific vendor. It includes the OpenTelemetry Collector, which can receive trace data in multiple formats. Additionally, the Collector offers processors and exporters, enabling you to export the collected data in your desired format.

- Reduce the overhead of maintaining multiple instrumentation libraries: OpenTelemetry combines tracing, metrics, and logging under one umbrella, reducing the burden of maintaining and updating multiple libraries. This unified approach also reduces technical debt and helps ensure compatibility, as there’s no need to maintain separate, potentially conflicting instrumentation solutions.

- Minimize the runtime overhead of telemetry: OpenTelemetry aims to keep the cost of generating and managing telemetry data low. Its API decouples your application code from the underlying implementation, and much of the receiving, processing, and exporting work can be handed off to OpenTelemetry Collectors, which handle data in various formats.

- Enhance the overall observability of complex, distributed systems: OpenTelemetry enables comprehensive visibility across distributed systems by correlating traces, metrics, and logs. This holistic approach improves situational awareness, allowing teams to detect issues faster, understand their impact, and troubleshoot effectively across services and infrastructure layers, leading to higher system reliability and optimized performance.

Demystifying OpenTelemetry Traces

In OpenTelemetry, a trace captures the complete journey of a request as it navigates through different components in a distributed system. It serves as a detailed map, outlining each step of the request’s lifecycle from entry to final response.

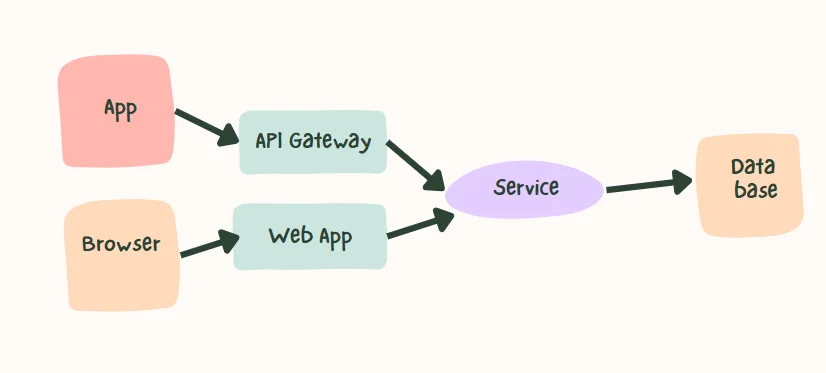

OpenTelemetry Tracing is especially beneficial in microservices architectures, where applications consist of numerous independent services collaborating to handle user requests. In such architectures, traces are invaluable as a single user action can initiate a series of internal service calls. Tracing these requests enables you to:

- Identify performance bottlenecks across service boundaries.

- Understand the dependencies between different components.

- Troubleshoot errors by pinpointing where in the request lifecycle they occur.

Anatomy of an OpenTelemetry Trace

To fully grasp the concept of traces, let's break down their key components:

Start and End Timestamps: Every trace has a defined beginning and end, marking the duration of the entire operation.

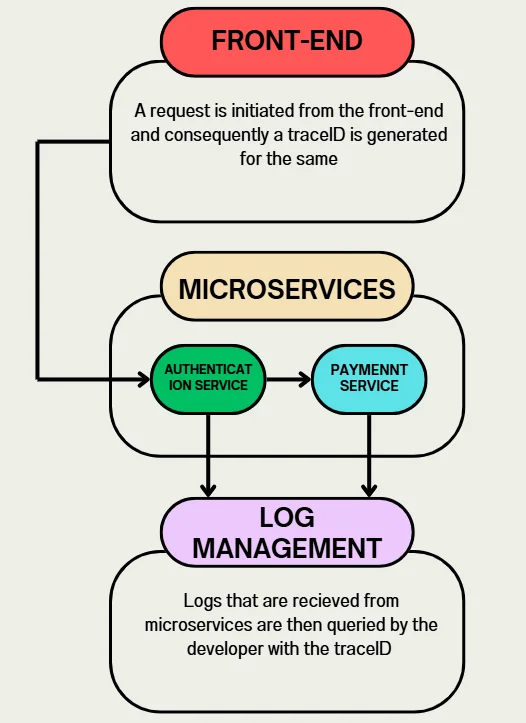

Trace Context: This is a set of globally unique identifiers that are propagated across service boundaries. It includes a Trace ID, Span ID and Trace Flags. Let’s take an example to understand this concept.

Imagine you're a user placing an order on an e-commerce site. You add items to your cart, proceed to checkout, and finalize the purchase. In the background, this entire flow is captured with tracing data, which helps developers monitor each part of the process for potential issues. Here’s how the flow goes:

Trace ID: When you start the order, the system assigns a unique Trace ID, such as `12345-abcde` (simplified here for readability), to track your order from start to finish. This ID links every step of your transaction, ensuring each part of the flow is associated with your specific order.

Span ID: Each operation within this trace is tracked with a Span ID, a unique identifier for each step. For instance:

- Adding items to your cart has a Span ID (`span1`).

- Checking out has a different Span ID (`span2`).

- Processing the payment has yet another Span ID (`span3`).

By linking each Span ID to the same Trace ID, a tracing backend can group related spans, providing a full view of your order journey.

Trace Flags: These flags carry trace-level options, most notably whether the trace was sampled. Errors are not stored in Trace Flags; they are recorded on the span itself. For example, if your payment fails, the payment span (`span3`) is marked with an error status, making it easy for developers to pinpoint and troubleshoot the issue.

Using this approach, a developer can filter logs by the correlation ID (such as `correlation-id: 12345-abcde`) to see all log entries related to your specific order. This way, if a step in your order process encounters an error, a log backend such as Loki can quickly surface the problem by connecting each log entry back to the Trace ID.

Trace Propagation: The mechanism by which trace context is passed between different services or components. This ensures that all parts of a distributed operation are connected under the same trace.

Trace Propagation Flow: In our example, once you placed the order, the frontend service assigned a unique Trace ID to represent your entire transaction. This ID traveled with your request to the backend Order Service, which processed it and handed it off to the Payment and Authentication services, each retaining and logging the Trace ID. By consistently passing the Trace ID across all these services, developers can follow the transaction end-to-end, easily identifying any issues (such as payment failure) by filtering logs with the shared Trace ID and viewing each service’s actions within the context of the complete order process.

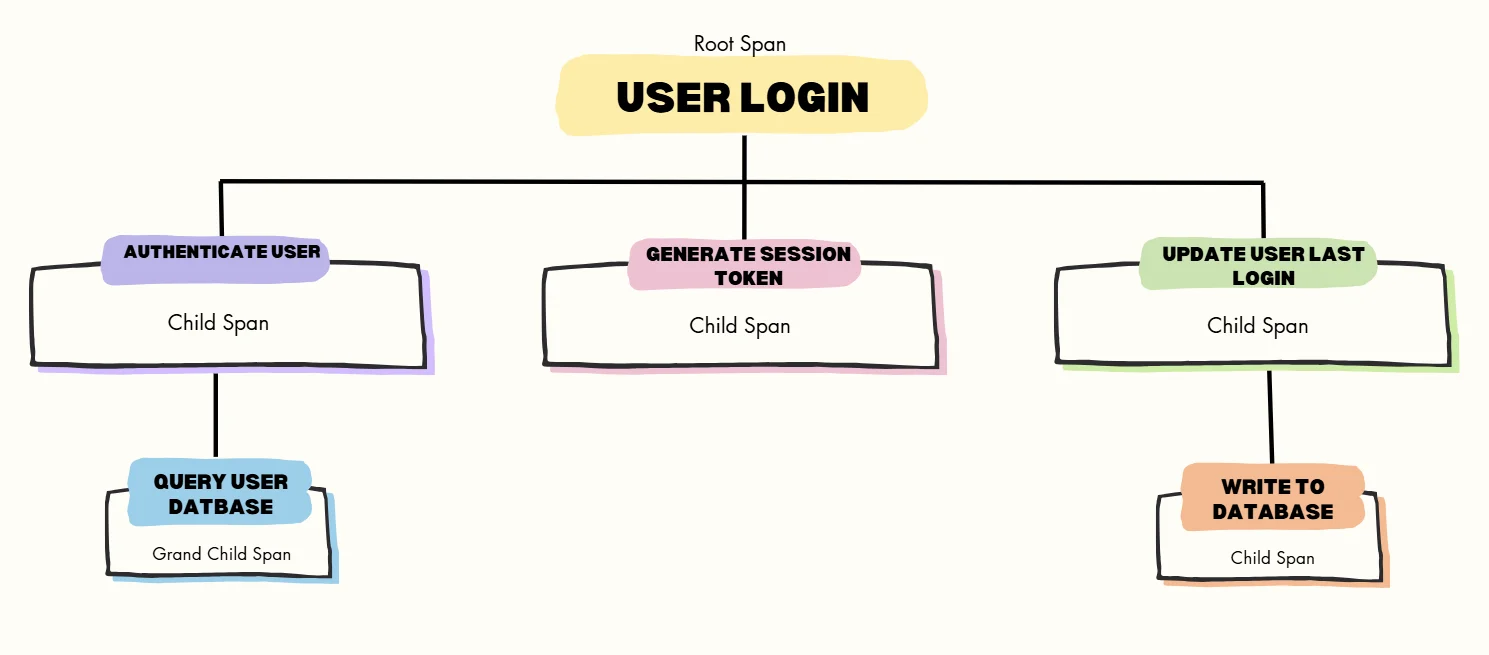
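To make the propagation step concrete, here is a minimal sketch of how trace context can travel in an HTTP header. It uses the W3C Trace Context `traceparent` layout (`version-traceid-parentid-flags`), which OpenTelemetry's default propagator follows. The `inject` and `extract` helpers and the sample IDs are illustrative assumptions written in plain Python; in a real service the SDK's propagators do this work for you.

```python
import re

# W3C Trace Context header layout: version-traceid-parentid-flags (lowercase hex)
TRACEPARENT_RE = re.compile(
    r"([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})"
)


def inject(headers, trace_id, span_id, sampled):
    """Write the caller's trace context into outgoing request headers."""
    flags = "01" if sampled else "00"
    headers["traceparent"] = f"00-{trace_id}-{span_id}-{flags}"


def extract(headers):
    """Read trace context from incoming headers; None if absent or malformed."""
    match = TRACEPARENT_RE.fullmatch(headers.get("traceparent", ""))
    if match is None:
        return None
    _version, trace_id, parent_span_id, flags = match.groups()
    return {
        "trace_id": trace_id,
        "parent_span_id": parent_span_id,
        "sampled": bool(int(flags, 16) & 0x01),
    }


# Frontend side: attach the context of the current span to the outgoing call.
outgoing = {}
inject(outgoing, "4bf92f3577b34da6a3ce929d0e0e4736", "00f067aa0ba902b7", sampled=True)

# Order Service side: join the same trace, using the caller's span as parent.
ctx = extract(outgoing)
print(outgoing["traceparent"])
print(ctx["trace_id"], ctx["parent_span_id"], ctx["sampled"])
```

The receiving service keeps the Trace ID unchanged and records the caller's Span ID as the parent of its own span, which is what ties the services together into one trace.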

Span Hierarchy: This describes the connections between spans in a trace, typically organized in a tree structure. In OpenTelemetry, spans define causal relationships, allowing a trace to be viewed as a directed acyclic graph (DAG) of spans, where parent-child relationships are represented as edges. A trace includes multiple spans, with the root span representing the overall operation and child spans indicating sub-operations or service calls. As a request flows through a service, the trace context is propagated via trace headers, enabling downstream services to join the trace. Each service generates its own span, which records the operation's duration and relevant metadata, and passes the trace context downstream with its own Span ID as the new parent.

Here's a simplified example of how a trace might look in a microservices environment:
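As a minimal sketch, a login request might produce a trace like the one built below. The service names, span names, and timings are hypothetical, and the `Span` class is a plain-Python stand-in rather than the OpenTelemetry SDK type.

```python
from dataclasses import dataclass, field


@dataclass
class Span:
    name: str
    start_ms: int  # offset from the start of the trace
    end_ms: int
    children: list = field(default_factory=list)

    @property
    def duration_ms(self) -> int:
        return self.end_ms - self.start_ms


def render(span, depth=0):
    """Return one indented line per span, parents before children."""
    lines = [f"{'  ' * depth}{span.name} ({span.duration_ms} ms)"]
    for child in span.children:
        lines.extend(render(child, depth + 1))
    return lines


# Hypothetical login request touching two backend services and a database.
login = Span("POST /login", 0, 120, [
    Span("auth-service: verify_credentials", 5, 80, [
        Span("db: SELECT user", 10, 60),
    ]),
    Span("session-service: create_session", 85, 115),
])

print("\n".join(render(login)))
# POST /login (120 ms)
#   auth-service: verify_credentials (75 ms)
#     db: SELECT user (50 ms)
#   session-service: create_session (30 ms)
```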

This structure allows you to see not just the overall duration of the login process, but also the time taken by each sub-operation and their relationships.

Deep Dive into OpenTelemetry Spans

While traces provide the big picture, spans focus on the details. A span represents a single operation within a trace — it's the most granular unit of work in OpenTelemetry tracing.

Each span encapsulates the following:

Operation Name

The operation name represents a description of the action the span is capturing, often related to the service or component executing it. For instance, an operation name could be HTTP GET /products for an HTTP request, DB SELECT products for a database query, or processOrder for a specific function. This label makes it easy to identify what each span represents, aiding in quick navigation and analysis of the trace.

Start And End Time

Every span records its precise start and end time, capturing the duration of the operation it represents. This timing data allows you to measure latency and understand the time spent on each operation. By comparing the timing of different spans, you can pinpoint which parts of a trace are taking the longest, helping identify bottlenecks and optimize performance across various system components.

Set Of Attributes

Spans can have attributes: key-value pairs that add context about the tracked operation. For example, in an e-commerce system, a span for adding an item to a cart might include the user ID, item ID, and cart ID. Attributes are best added at span creation to ensure availability for SDK sampling, though they can be updated later if needed.

Span Events

A Span Event can be considered a time-stamped log message or annotation linked to a Span, usually highlighting a significant moment within the Span's duration.

For instance, consider two scenarios in a web browser:

- Tracking a page load

- Indicating when a page becomes interactive

A span is more appropriate for the first scenario, representing an operation with a defined start and end. In contrast, a Span Event is ideal for the second scenario, as it captures a specific, meaningful moment in time.
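The fields described so far (name, start and end time, attributes, events) can be pictured as one small record. The sketch below models the browser scenario in plain Python; `SpanRecord` and its methods are hypothetical names chosen to resemble the real API, not the SDK's own classes.

```python
import time
from dataclasses import dataclass, field


@dataclass
class SpanRecord:
    name: str
    start_ns: int = field(default_factory=time.time_ns)
    end_ns: int = 0
    attributes: dict = field(default_factory=dict)
    events: list = field(default_factory=list)

    def set_attribute(self, key, value):
        self.attributes[key] = value

    def add_event(self, name):
        # An event is a single timestamped point inside the span's lifetime.
        self.events.append((time.time_ns(), name))

    def end(self):
        self.end_ns = time.time_ns()


# The page load has a start and an end, so it is modeled as a span.
page_load = SpanRecord("page_load")
page_load.set_attribute("page.url", "/products")

# Becoming interactive is a single moment, so it is an event on that span.
page_load.add_event("page became interactive")
page_load.end()

print(page_load.attributes, [name for _, name in page_load.events])
```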

SpanContext

SpanContext is a data structure provided by tracing libraries like OpenTelemetry to carry key identifying details about a span across process boundaries. It usually holds the trace ID, span ID, and trace flags, allowing distributed tracing systems to connect spans across services. This structure includes all essential information to identify a span within a trace and must be propagated to child spans and across process boundaries, passing tracing identifiers and options from parent to child spans.

- TraceId: This is the identifier for a trace, generated as 16 randomly created bytes, making it globally unique with a high probability. The TraceId groups all spans associated with a specific trace across all processes.

- SpanId: This identifies a span and is generated as 8 randomly created bytes, ensuring global uniqueness with a high probability. When passed to a child Span, this identifier serves as the parent SpanId.

- TraceFlags: This represents the options for a trace, encoded as a single byte (bitmap). It includes a sampling bit (mask 0x1) that indicates whether the trace is sampled.

- Tracestate: This carries vendor-specific tracing context in a list of key-value pairs, allowing different vendors to propagate additional information and interoperate with their legacy ID formats.

Links between spans also play a role in tracing. Refer to the next section for more details.
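A rough sketch of these fields in plain Python, assuming random generation as described above. The helper names and the dictionary layout are illustrative only; the SDK has its own ID generator and `SpanContext` type.

```python
import secrets

SAMPLED_FLAG = 0x01  # sampling bit in the one-byte TraceFlags bitmap


def new_trace_id() -> bytes:
    return secrets.token_bytes(16)  # 16 random bytes


def new_span_id() -> bytes:
    return secrets.token_bytes(8)  # 8 random bytes


def child_context(parent: dict) -> dict:
    """A child keeps the trace ID and flags; the parent's span ID becomes its parent ID."""
    return {
        "trace_id": parent["trace_id"],
        "span_id": new_span_id(),
        "parent_span_id": parent["span_id"],
        "trace_flags": parent["trace_flags"],
    }


root = {
    "trace_id": new_trace_id(),
    "span_id": new_span_id(),
    "parent_span_id": None,
    "trace_flags": SAMPLED_FLAG,
}
child = child_context(root)

print(root["trace_id"].hex(), child["span_id"].hex())
print("sampled:", bool(child["trace_flags"] & SAMPLED_FLAG))
```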

Links To Other Spans

A Span can link to multiple related Spans through SpanContext, across the same or different Traces. These links are useful for batched operations where a Span is triggered by several Spans processing individual items, or when a new Trace is created upon entering a service's trusted boundaries to represent long-running tasks. In scatter/gather patterns, a root operation spawns multiple downstream processes, consolidated into a single Span that links back to all the operations from the same Trace. Unlike traditional parent-child relationships, scatter/gather scenarios avoid setting a single parent Span, as they often involve multiple originating contexts.
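The batched case can be sketched as follows: a consumer span starts its own trace and links to each producer's context instead of choosing one of them as its parent. The classes and IDs below are made-up, plain-Python stand-ins for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class SpanContext:
    trace_id: str
    span_id: str


@dataclass
class Span:
    name: str
    context: SpanContext
    parent: Optional[SpanContext] = None
    links: list = field(default_factory=list)


# Three messages, each published from a different trace (made-up IDs).
producers = [SpanContext(f"trace-{i}", f"publish-{i}") for i in range(3)]

# The batch consumer has no single parent; it links back to every producer.
batch = Span(
    name="process_batch",
    context=SpanContext("trace-batch", "consume-0"),
    links=list(producers),
)

print(batch.parent, len(batch.links))  # None 3
```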

Operation Status

Each span has a status, which can be one of three values:

| Status | Explanation |
|---|---|
| Unset | It is the default status, indicating that the tracked operation completed successfully without errors. |
| Error | It signifies that an error occurred during the tracked operation, such as an HTTP 500 error on a server. |
| Ok | It means the span has been explicitly marked as error-free by the developer. While it may seem counterintuitive, it is not necessary to set a span status to Ok if it has completed without error, as this is covered by Unset. The Ok status serves to provide a clear "final call" on the span's status, ensuring that it is interpreted as "successful." |
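The "final call" behavior of Ok can be illustrated with a small sketch. It follows the precedence described in the OpenTelemetry specification (Ok over Error over Unset): once a span is marked Ok, later status changes are ignored, and attempts to set Unset are ignored as well. The `SpanStatus` class is a hypothetical plain-Python illustration, not SDK code.

```python
from enum import Enum


class StatusCode(Enum):
    UNSET = 0
    OK = 1
    ERROR = 2


class SpanStatus:
    def __init__(self):
        self.code = StatusCode.UNSET  # default until someone sets a status

    def set(self, code):
        # Ok is final, and explicitly setting Unset has no effect.
        if self.code is StatusCode.OK or code is StatusCode.UNSET:
            return
        self.code = code


status = SpanStatus()
status.set(StatusCode.ERROR)   # e.g. set by an instrumentation library
status.set(StatusCode.OK)      # the developer decides the result is acceptable
status.set(StatusCode.ERROR)   # ignored: Ok was the final call
print(status.code)             # StatusCode.OK
```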

Span Operations

Let’s take a look at various span operations:

- HTTP requests: Spans can represent individual HTTP requests within an application, capturing the start and end times, request and response details, status codes, and latency. By tracking HTTP spans, you can see how long requests take, whether they encounter errors, and which endpoints are performing optimally. This helps identify bottlenecks and optimize the flow of requests through your system.

- Database queries: Spans are useful for tracing database queries, recording the time taken for each query execution, the type of query (e.g., `SELECT`, `INSERT`), and any error messages. By observing these spans, you can find slow or inefficient queries, analyze database performance, and optimize data access patterns to reduce latency and improve overall application performance.

- Function calls: Spans can track individual function calls within your codebase, capturing details like execution time and function parameters. This is especially useful for monitoring the performance of critical functions, identifying functions that consume excessive time, and diagnosing issues within complex workflows or recursive function calls.

- Microservice interactions: In a microservices architecture, spans can represent the interactions between services, capturing the requests sent and responses received. These spans help visualize the dependencies between services and show how they impact each other’s performance. By tracing inter-service calls, you can identify issues in service communication, locate performance bottlenecks, and gain insights into the health and responsiveness of each service.

Each of these spans contributes to a complete trace, enabling detailed observability and helping teams understand the end-to-end journey of a request through the system.

Creating and Managing Spans

To create and manage spans effectively, you’ll be working with the OpenTelemetry Tracing API.

First, ensure you have the required packages installed. If not, you can download them using the following command:

```shell
pip install Flask opentelemetry-api opentelemetry-sdk opentelemetry-exporter-otlp
```

Let’s take a basic example in Python:

```python
from flask import Flask
from opentelemetry import trace
from opentelemetry.exporter.otlp.proto.grpc.trace_exporter import OTLPSpanExporter
from opentelemetry.sdk.resources import Resource
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.export import BatchSpanProcessor

# Initialize tracer provider with service name
resource = Resource(attributes={"service.name": "my_flask_service"})
trace.set_tracer_provider(TracerProvider(resource=resource))
tracer = trace.get_tracer(__name__)

# Configure OTLP exporter for tracing with SigNoz
span_exporter = OTLPSpanExporter(
    endpoint="https://ingest.in.signoz.cloud:443",
    # Replace INSERT_TOKEN_HERE with your token
    headers=(("authorization", "Bearer INSERT_TOKEN_HERE"),),
)
span_processor = BatchSpanProcessor(span_exporter)
trace.get_tracer_provider().add_span_processor(span_processor)

app = Flask(__name__)


@app.route("/")
def index():
    # The outer span covers the whole request handler
    with tracer.start_as_current_span("main_operation") as main_span:
        # Perform main operation
        # The nested span becomes a child of main_operation
        with tracer.start_as_current_span("sub_operation") as sub_span:
            # Perform sub-operation
            sub_span.set_attribute("key", "value")
            sub_span.add_event("sub-operation milestone reached")
        main_span.set_status(trace.StatusCode.OK)
    return "Tracing complete!"


if __name__ == "__main__":
    app.run(debug=True)
```

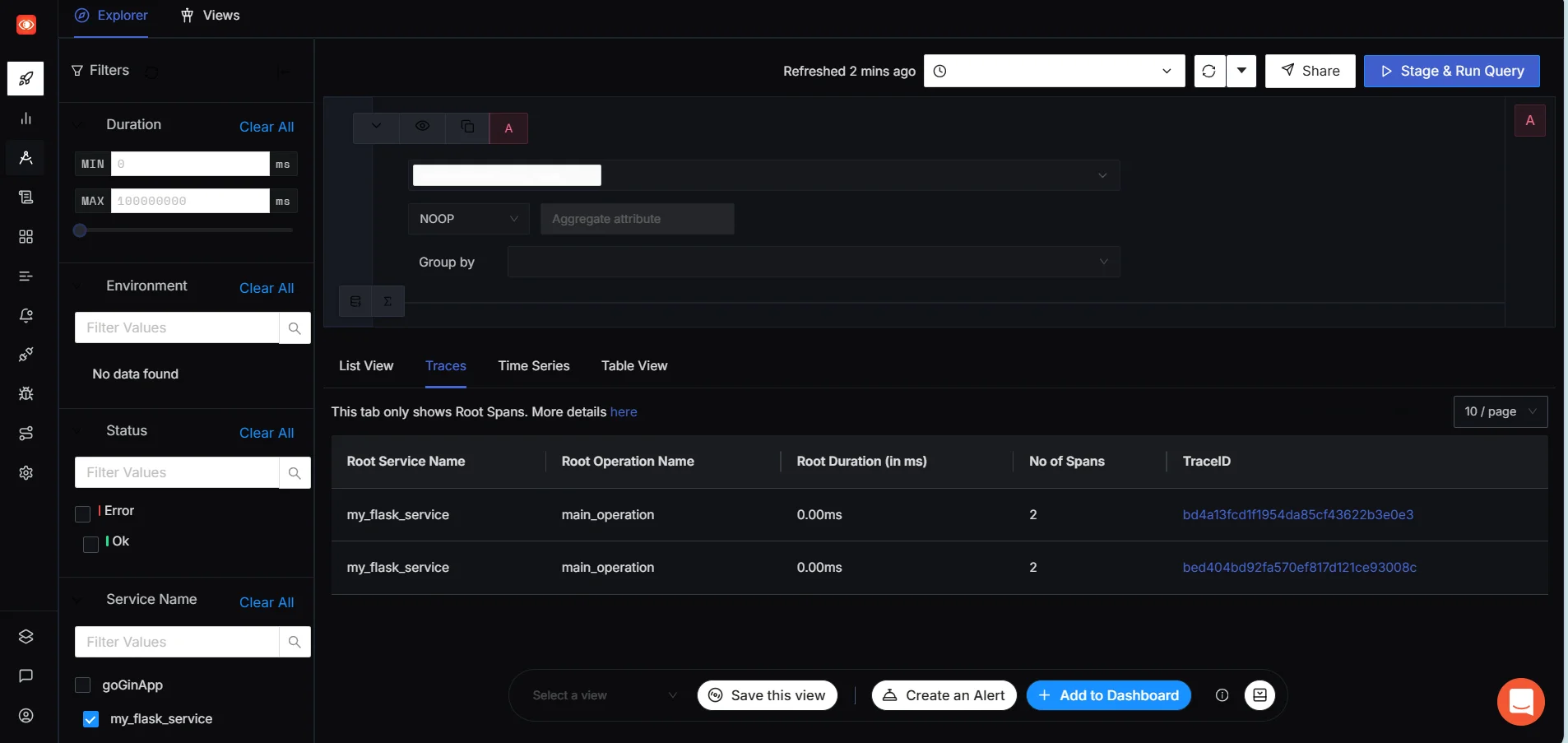

After accessing the endpoint at 127.0.0.1:5000, the message "Tracing complete!" will appear on the site. You can now visit SigNoz to review the traces that have been received.

Traces vs. Spans: Understanding the Relationship

Now that we've explored traces and spans individually, let's clarify their relationship:

| Concept | Relationship |
|---|---|
| Hierarchy of Spans in a Trace | Spans form a parent-child hierarchy, showing operation order and dependencies. The parent span represents a main operation, while child spans capture sub-operations. |
| Root Span | The root span is the trace's top-level span, representing the initial request (e.g., a frontend HTTP request), and sets the context for all other spans. |
| Child Spans | Represent specific actions within the parent operation, breaking down the workflow into detailed steps that contribute to the main request. |
| Trace as a Tree | A trace resembles a tree: the trace itself is the full tree, and each span acts as a node within this tree. |
| Nodes and Branches | Each node in the tree is a span, with the root node as the root span. Branches represent the relationships between parent and child spans. |
| Benefits of Trace Structure | 1. Operation Flow: Visualize the path and dependencies within a request. 2. Latency Insight: Identify spans with high latency to target optimizations. 3. Error Tracking: Trace errors in spans to see how they impact related operations. |
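As an illustration of the latency insight the tree structure enables, the sketch below rebuilds the parent-child relationships from a flat list of spans and computes each span's self time (its duration minus the time spent in its direct children). The span records and timings are hypothetical, and the calculation assumes sibling spans do not overlap.

```python
from collections import defaultdict

# Flat span records as a backend might store them (hypothetical values, in ms).
spans = [
    {"id": "a", "parent": None, "name": "checkout", "start": 0, "end": 200},
    {"id": "b", "parent": "a", "name": "reserve_stock", "start": 10, "end": 50},
    {"id": "c", "parent": "a", "name": "charge_card", "start": 60, "end": 190},
    {"id": "d", "parent": "c", "name": "call_payment_gateway", "start": 70, "end": 180},
]

# Group spans by parent ID: these are the branches of the tree.
children = defaultdict(list)
for s in spans:
    children[s["parent"]].append(s)

root = children[None][0]  # the root span is the one without a parent


def self_time(span):
    """Duration minus time covered by direct children (assumes no overlap)."""
    child_total = sum(c["end"] - c["start"] for c in children[span["id"]])
    return (span["end"] - span["start"]) - child_total


slowest = max(spans, key=self_time)
print(root["name"], slowest["name"], self_time(slowest))
# checkout call_payment_gateway 110
```

Although `checkout` has the longest total duration, most of that time is spent waiting on the payment gateway call, which is where an optimization effort would start.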

Implementing Tracing with OpenTelemetry

To implement tracing in your application using OpenTelemetry, follow these steps:

- Install the OpenTelemetry SDK: Use your language's package manager to install the necessary OpenTelemetry libraries.

- Initialize the SDK: Set up the global OpenTelemetry objects in your application's entry point.

- Create a Tracer: To obtain a tracer, access the global `TracerProvider`. A Tracer Provider serves as a factory for creating Tracers. Typically, a Tracer Provider is initialized once, aligning its lifecycle with that of the application. The initialization of the Tracer Provider also includes the setup of Resources and Exporters, making it the initial step in tracing with OpenTelemetry. In some language SDKs, a global Tracer Provider may already be initialized for you.

- Instrument Your Code: Utilize the tracer to create spans around key operations in your code. A Tracer generates spans that provide additional details about specific operations, such as a service request. Tracers are obtained from Tracer Providers.

- Configure an Exporter: Configure an exporter to transmit your trace data to the selected backend system. Trace Exporters deliver traces to a consumer, which could be standard output for debugging and development, the OpenTelemetry Collector, or any open-source or vendor backend you prefer.

Here's a basic example in Python:

```python
from opentelemetry import trace
from opentelemetry.sdk.trace import TracerProvider
from opentelemetry.sdk.trace.export import BatchSpanProcessor, ConsoleSpanExporter
from opentelemetry.trace.status import Status, StatusCode

# Initialize the SDK
trace.set_tracer_provider(TracerProvider())
tracer = trace.get_tracer(__name__)

# Configure the exporter
span_processor = BatchSpanProcessor(ConsoleSpanExporter())
trace.get_tracer_provider().add_span_processor(span_processor)

# Instrument your code
with tracer.start_as_current_span("main_operation") as span:
    # Perform your operation
    span.set_attribute("operation.value", 123)
    span.add_event("Operation started")

    # Simulate an error
    span.set_status(Status(StatusCode.ERROR))
    span.record_exception(Exception("Something went wrong"))
```

This example creates a simple trace with one span, adds an attribute and an event, and simulates an error condition.

Leveraging SigNoz for OpenTelemetry Trace Analysis

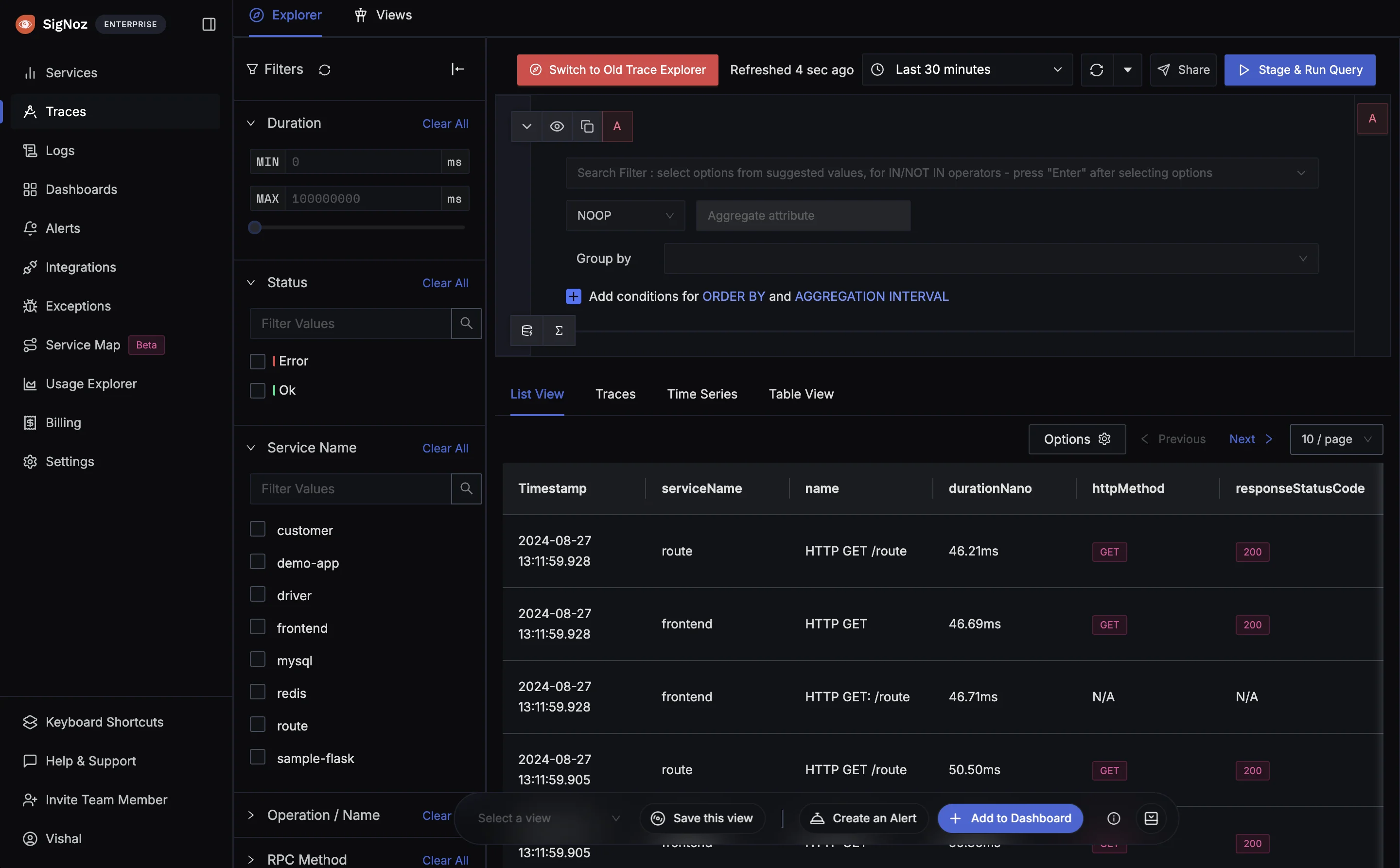

While OpenTelemetry provides the instrumentation, you need a robust backend system to analyze and visualize your trace data. SigNoz is an open-source, full-stack observability platform that seamlessly integrates with OpenTelemetry.

SigNoz offers several advantages for OpenTelemetry trace analysis:

- User-Friendly Visualization: SigNoz provides interactive and intuitive dashboards that make it easy to explore your traces and spans visually. Each trace can be broken down into spans, helping you understand the flow of requests across services. This visualization simplifies monitoring, enabling teams to quickly spot bottlenecks or irregularities in their system's behavior.

- Advanced Filtering: SigNoz allows you to filter and search through traces based on multiple criteria, such as service name, operation type, status codes, and specific time ranges. This powerful filtering helps you pinpoint specific traces or patterns, streamlining troubleshooting and focusing your analysis on relevant data.

- Performance Insights: With SigNoz, you gain detailed insights into your application's performance, including latency breakdowns across services, response times, and error rates. These metrics help you identify which components are consuming the most time, enabling you to make informed decisions to enhance your application’s responsiveness and reliability.

- Anomaly Detection: SigNoz leverages machine learning algorithms to detect anomalies in trace data automatically. By identifying unusual patterns in requests or latency, it highlights deviations from normal behavior that may indicate performance issues or potential failures, allowing you to address them before they impact users.

- Customizable Alerts: SigNoz allows you to set up tailored alerts based on specific trace data criteria, like latency thresholds or error rates. These alerts help you proactively monitor performance, ensuring your team is notified of issues before they escalate. With timely alerts, you can respond to potential problems quickly, minimizing downtime and improving user satisfaction.

SigNoz’s combination of visualization, filtering, insights, anomaly detection, and alerting makes it a comprehensive solution for managing and optimizing OpenTelemetry traces.

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

OpenTelemetry Trace and Span: Best Practices

Adhering to best practices in trace and span implementation is crucial for effective observability with OpenTelemetry; let’s dive into the recommended approaches for optimizing trace accuracy and span utility for actionable insights.

| Trace | Span |
|---|---|
| Right Level of Granularity: Maintain a balance in span detail to avoid overloading your traces with minor operations while still capturing critical actions. Focus on spans that highlight key performance metrics and potential bottlenecks. | Attributes: Add relevant attributes to provide context. For example, in an HTTP request span, include the URL, method, and response status code. These attributes help to enrich the trace data and enable better understanding and troubleshooting of the requests. |
| Handle High-Cardinality Data: High-cardinality attributes, like user IDs, can increase storage and processing demands. Use sampling to manage data volume and prioritize capturing traces with errors or unusual latency. | Scope Management: Be mindful of span lifetimes and ensure they're closed properly to avoid leaks. Properly managing the start and end of spans prevents resource exhaustion and ensures accurate representation of the time taken for operations. |
| Propagate Context Correctly: Ensure trace context flows across all services and asynchronous tasks to keep the trace intact. This provides a complete view of request dependencies and execution flow. | Events: Use events to mark significant occurrences within a span's lifetime. Events allow you to capture specific milestones or important actions, such as when a request was received or when a processing step was completed, providing valuable insights during debugging and analysis. |
| Balance Performance Impact: Tracing can add overhead, so monitor and adjust data collection to avoid performance degradation. Focus tracing on essential transactions or error scenarios in high-performance environments. | Error Handling: Set the appropriate status on spans, especially when errors occur. This includes marking spans as ERROR when an exception is thrown, which helps in quickly identifying failure points and understanding the overall health of the system. |
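The sampling advice above can be sketched in a few lines. The `should_sample` function below makes a deterministic decision from the Trace ID, in the spirit of ratio-based sampling, so every service that sees the same trace reaches the same verdict. The `keep` function adds an "always keep errors" rule, which only works where the outcome is already known, for example in a tail-sampling step after the trace has finished. Both function names and the 10% default are assumptions for illustration, not the SDK's sampler API.

```python
TRACE_ID_LIMIT = (1 << 64) - 1  # mask for the low 64 bits of a trace ID


def should_sample(trace_id: int, ratio: float) -> bool:
    """Keep a trace when the low 64 bits of its ID fall below ratio * 2**64."""
    bound = round(ratio * (TRACE_ID_LIMIT + 1))
    return (trace_id & TRACE_ID_LIMIT) < bound


def keep(trace_id: int, has_error: bool, ratio: float = 0.1) -> bool:
    """Tail-style decision: always keep failed traces, sample the rest."""
    return has_error or should_sample(trace_id, ratio)


trace_id = 0x4BF92F3577B34DA6A3CE929D0E0E4736
# The same trace ID always yields the same decision, whichever service asks.
print(should_sample(trace_id, 0.1) == should_sample(trace_id, 0.1))
print(keep(trace_id, has_error=True))
```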

Key Takeaways

- Traces provide end-to-end visibility of requests in distributed systems, while spans represent individual operations within those traces.

- OpenTelemetry offers a standardized approach to implementing tracing across different languages and frameworks.

- Effective use of traces and spans can significantly improve system observability and help in identifying performance bottlenecks.

- Tools like SigNoz can enhance the value of OpenTelemetry tracing data by providing powerful analysis and visualization capabilities.

- Implementing best practices in tracing can help balance the benefits of observability with performance considerations.

FAQs

What's the difference between a trace and a span in OpenTelemetry?

A trace represents the entire journey of a request through a distributed system, while a span represents a single operation within that journey. Traces are composed of one or more spans arranged in a hierarchical structure.

How do OpenTelemetry traces improve application performance?

OpenTelemetry traces provide visibility into the performance of individual components and their interactions within a distributed system. This allows developers to identify bottlenecks, optimize slow operations, and understand the impact of changes across the entire application.

Can OpenTelemetry traces work across different programming languages?

Yes, OpenTelemetry is designed to work across multiple programming languages. It provides consistent APIs and SDKs for various languages, allowing trace context to be propagated even in polyglot environments.

What are some common challenges when implementing distributed tracing?

Common challenges include:

- Ensuring proper context propagation across service boundaries

- Managing the volume of trace data in high-traffic systems

- Balancing the performance impact of tracing with its benefits

- Standardizing span naming and attribute conventions across teams

- Correlating trace data with other observability signals like logs and metrics
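For the last challenge, one common approach is to stamp every log record with the active Trace ID so that logs and traces can be joined later. The sketch below does this with Python's standard `logging` and `contextvars` modules; the `current_trace_id` variable, the logger name, and the sample ID are assumptions for illustration, and OpenTelemetry's own logging instrumentation can fill this role in a real service.

```python
import contextvars
import io
import logging

# Holds the Trace ID of the request currently being handled (hypothetical).
current_trace_id = contextvars.ContextVar("current_trace_id", default="-")


class TraceIdFilter(logging.Filter):
    """Attach the active Trace ID to every log record."""

    def filter(self, record):
        record.trace_id = current_trace_id.get()
        return True


# Log to an in-memory stream here; a real service would use stdout or a file.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(
    logging.Formatter("%(levelname)s trace_id=%(trace_id)s %(message)s")
)
handler.addFilter(TraceIdFilter())

logger = logging.getLogger("order-service")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

current_trace_id.set("4bf92f3577b34da6a3ce929d0e0e4736")
logger.info("payment declined")
print(stream.getvalue(), end="")
# INFO trace_id=4bf92f3577b34da6a3ce929d0e0e4736 payment declined
```

With the Trace ID present in each log line, searching the log backend for that ID returns every entry produced while handling the same request.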