Monitoring LlamaIndex Telemetry with SigNoz

Overview

This guide walks you through instrumenting your Python-based LlamaIndex application and streaming telemetry data to SigNoz Cloud using OpenTelemetry. By the end of this setup, you'll be able to monitor AI-specific operations such as document ingestion, document retrieval, user querying, text generation, and user feedback within LlamaIndex, with detailed spans capturing request durations, node and query inputs, model outputs, retrieval scores, metadata, and intermediate steps throughout the pipeline.

Instrumenting your RAG workflows with telemetry enables full observability across the retrieval and generation pipeline. This is especially valuable when building production-grade developer-facing tools, where insight into model behavior, latency bottlenecks, and retrieval accuracy is essential. With SigNoz, you can trace each user question end-to-end, from prompt to response, and continuously improve performance and reliability.

To get started, check out our example LlamaIndex RAG Q&A bot, complete with OpenTelemetry-based monitoring (via OpenInference). View the full repository here.

Prerequisites

- A Python application using Python 3.8+

- LlamaIndex integrated into your app, with document ingestion and query interfaces set up

- Basic understanding of RAG (Retrieval-Augmented Generation) workflows

- A SigNoz Cloud account with an active ingestion key

pipinstalled for managing Python packages- Internet access to send telemetry data to SigNoz Cloud

- (Optional but recommended) A Python virtual environment to isolate dependencies

Instrument your LlamaIndex application

To capture detailed telemetry from LlamaIndex without modifying your core application logic, we use OpenInference, a community-driven standard that provides pre-built instrumentation for popular AI frameworks like LlamaIndex, built on top of OpenTelemetry. This allows you to trace your LlamaIndex application with minimal configuration.

Check out detailed instructions on how to set up OpenInference instrumentation in your LlamaIndex application over here.

Step 1: Install OpenInference and OpenTelemetry related packages

pip install openinference-instrumentation-llama-index \

opentelemetry-exporter-otlp \

opentelemetry-sdk

Step 2: Import the necessary modules in your Python application

from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter

from opentelemetry.sdk.trace import TracerProvider

from opentelemetry.sdk.trace.export import BatchSpanProcessor

from opentelemetry.sdk.resources import Resource

from openinference.instrumentation.llama_index import LlamaIndexInstrumentor

Step 3: Set up the OpenTelemetry Tracer Provider to send traces directly to SigNoz Cloud

resource = Resource.create({"service.name": "<service_name>"})

provider = TracerProvider(resource=resource)

span_exporter = OTLPSpanExporter(

endpoint="https://ingest.<region>.signoz.cloud:443/v1/traces",

headers={"signoz-ingestion-key": "<your-ingestion-key>"},

)

provider.add_span_processor(BatchSpanProcessor(span_exporter))

<service_name>is the name of your service- Set the

<region>to match your SigNoz Cloud region - Replace

<your-ingestion-key>with your SigNoz ingestion key

Step 4: Instrument LlamaIndex using OpenInference and the configured Tracer Provider

Use the LlamaIndexInstrumentor from OpenInference to automatically trace LlamaIndex operations with your OpenTelemetry setup:

LlamaIndexInstrumentor().instrument(tracer_provider=provider)

📌 Important: Place this code at the start of your application logic — before any LlamaIndex functions are called or used — to ensure telemetry is correctly captured.

Your LlamaIndex commands should now automatically emit traces, spans, and attributes.

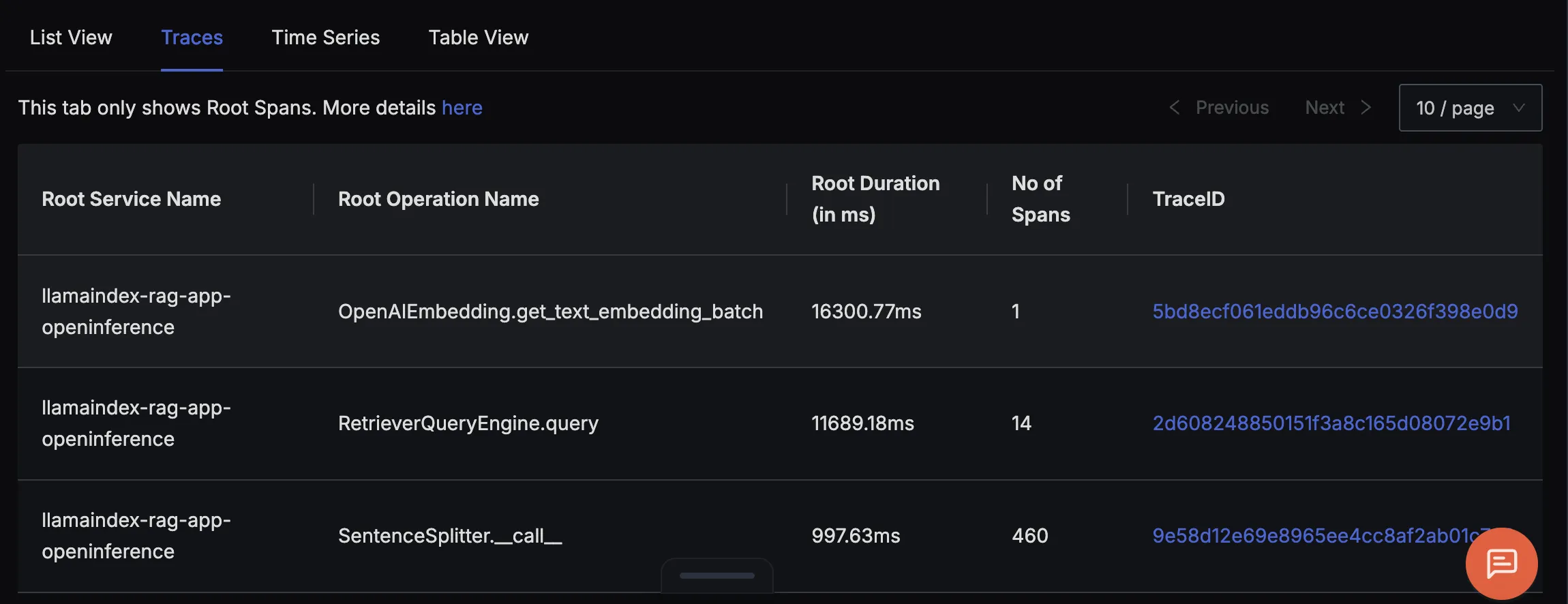

Finally, you should be able to view this data in Signoz Cloud under the traces tab:

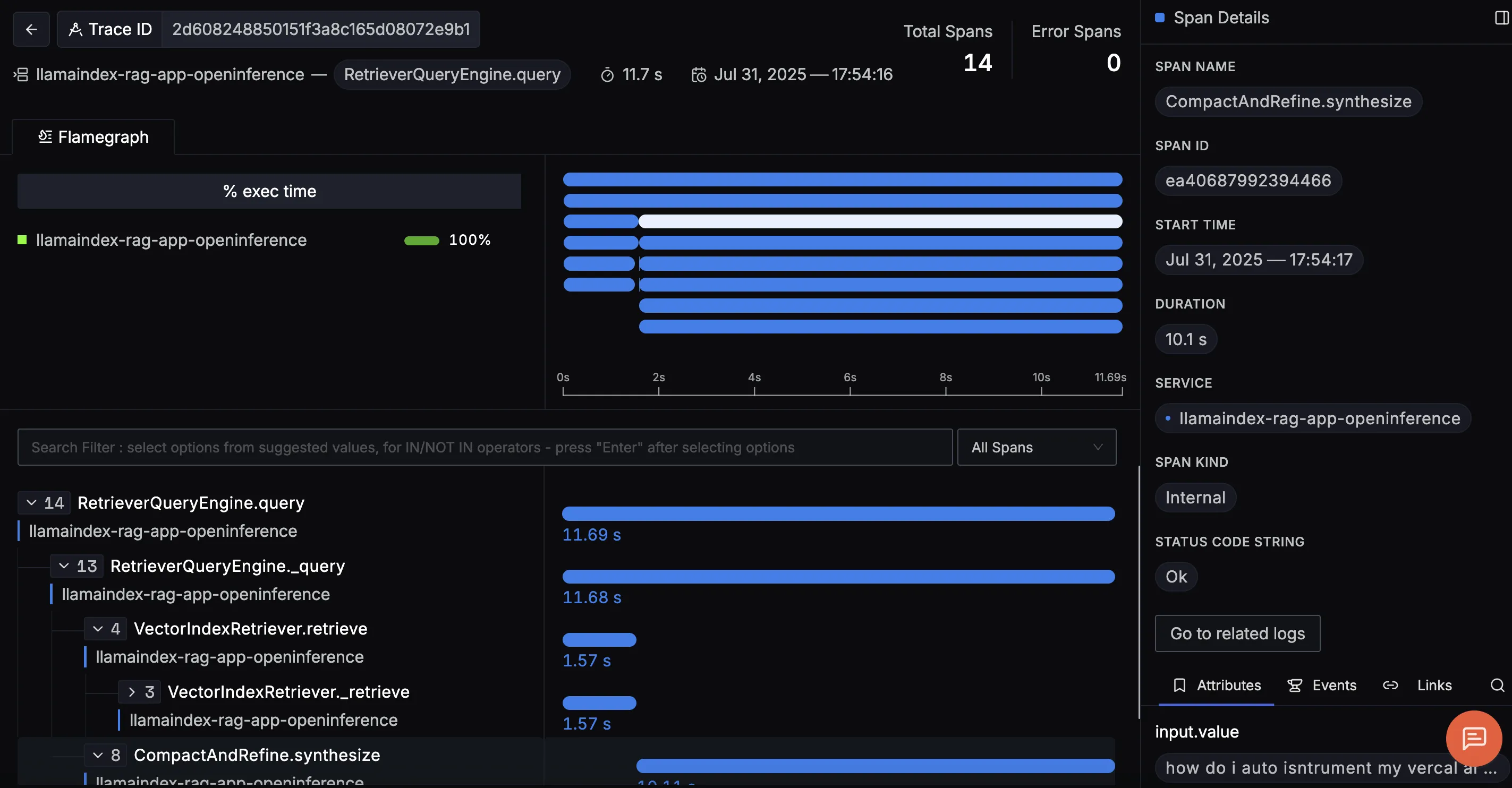

When you click on a trace ID in SigNoz, you'll see a detailed view of the trace, including all associated spans, along with their events and attributes.

Last updated: August 1, 2025

Edit on GitHub