How to Fix "Context Deadline Exceeded" Errors in Prometheus

Running into "Context Deadline Exceeded" errors in Prometheus? You're not alone. This pesky error pops up when Prometheus can’t scrape metrics from a target in time, leading to gaps in your data and potential monitoring blind spots. But don't worry—this guide is here to help you understand why it happens and, more importantly, how to fix it.

Understanding "Context Deadline Exceeded" Errors in Prometheus

What Is a "Context Deadline Exceeded" Error?

In Prometheus, a "Context Deadline Exceeded" error occurs when the server tries to scrape metrics from a target but runs out of time. Prometheus has a set time limit (defined by scrape_timeout), and if it doesn’t get the data before that clock runs out, it throws this error and moves on.

Impact on Monitoring and Data Collection

When these errors occur, they can have a significant impact:

- Data Gaps: Missed scrapes mean gaps in your metrics, which can lead to incomplete dashboards and reports.

- Missed Alerts: Without complete data, critical alerts might not trigger, leaving issues unnoticed until it’s too late.

- Increased Troubleshooting: Repeated errors lead to more time spent diagnosing and fixing problems, which could have been avoided.

Addressing "Context Deadline Exceeded" errors is essential for maintaining the integrity of your monitoring data and ensuring reliable system oversight.

Why Context Deadline Exceeded Errors Happen

Prometheus works by periodically scraping metrics from targets. Each target has a defined scrape_interval (how often it’s checked) and scrape_timeout (how long Prometheus waits before giving up). If the target doesn’t respond in time, you get a "Context Deadline Exceeded" error.
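To make the timeout mechanics concrete, here is a minimal Python sketch of a scraper that gives up once a deadline passes. It is a simulation under stated assumptions, not how Prometheus itself is implemented (Prometheus is written in Go, and the error text comes from Go's context package). The names scrape, fast_target, and slow_target are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as DeadlineExceeded


def scrape(fetch, scrape_timeout):
    """Run fetch() and give up once scrape_timeout seconds have elapsed."""
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(fetch)
    try:
        body = future.result(timeout=scrape_timeout)
        return "up", body
    except DeadlineExceeded:
        # The target did not answer in time: the scrape is recorded as failed.
        return "down", "context deadline exceeded"
    finally:
        # Do not block on the slow fetch; let it finish in the background.
        pool.shutdown(wait=False)


def fast_target():
    """Hypothetical target that answers immediately."""
    return "requests_total 42\n"


def slow_target():
    """Hypothetical target that needs 0.5 s to render its metrics."""
    time.sleep(0.5)
    return "requests_total 42\n"
```

Calling scrape(fast_target, 1.0) returns the "up" status with the metrics body, while scrape(slow_target, 0.1) returns "down" with the deadline message, because the simulated target needs longer than the allowed 0.1 seconds.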

You’ll typically see this error in a few situations:

- Slow Responding Targets: If the target is under heavy load or poorly optimized, it might take too long to respond to Prometheus.

- Network Issues: Latency or packet loss between Prometheus and its targets can cause delays, leading to timeouts.

- Overloaded Prometheus Server: If Prometheus is handling too many tasks or has poorly optimized queries, it might not keep up with the scraping intervals.

Diagnosing Context Deadline Exceeded Errors

Effective troubleshooting starts with proper diagnosis. Here's how to identify and analyze "Context Deadline Exceeded" errors in your Prometheus setup:

- Check the Prometheus UI:

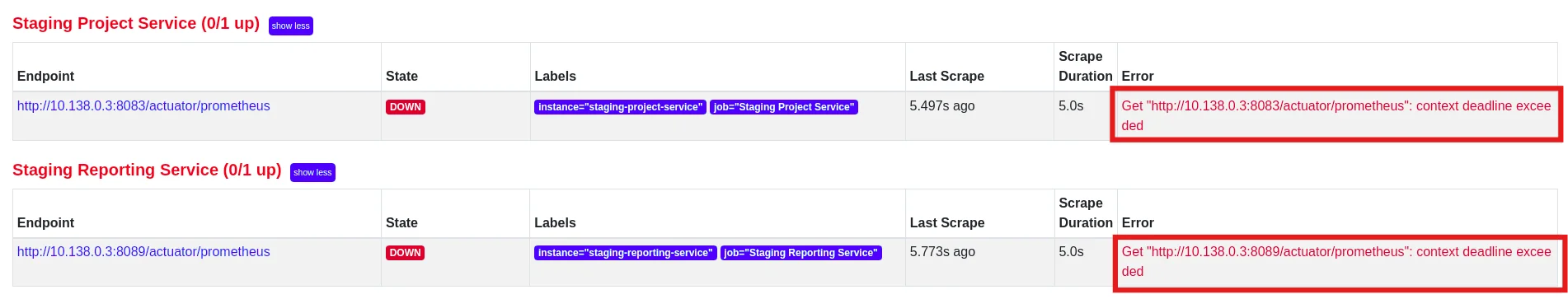

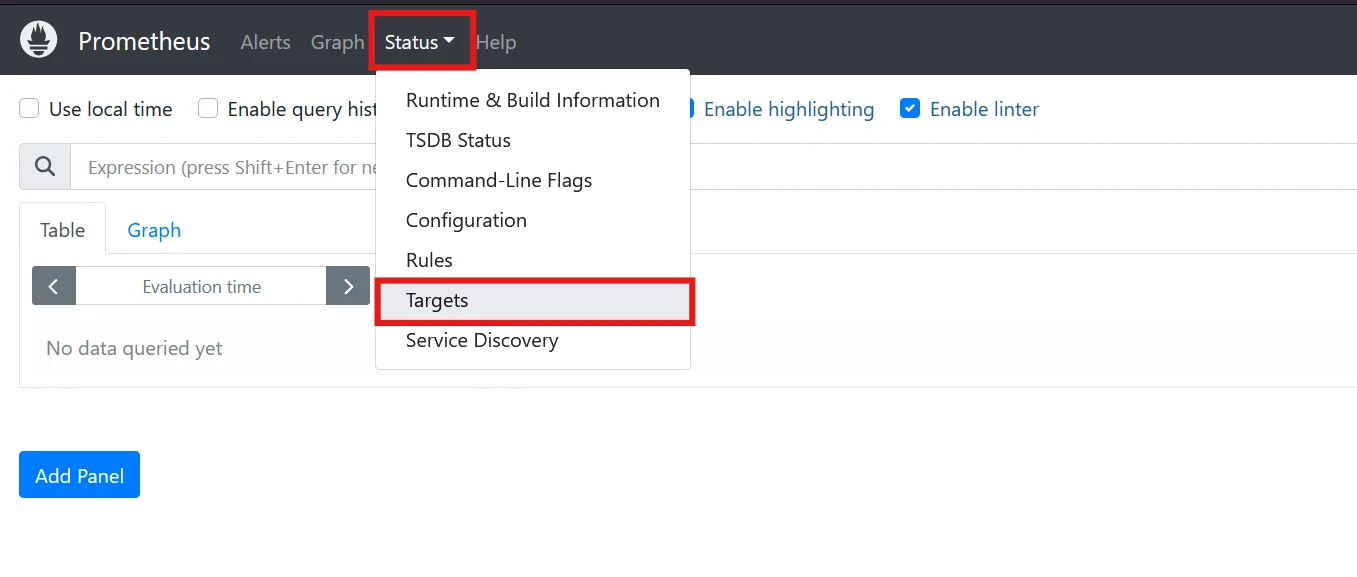

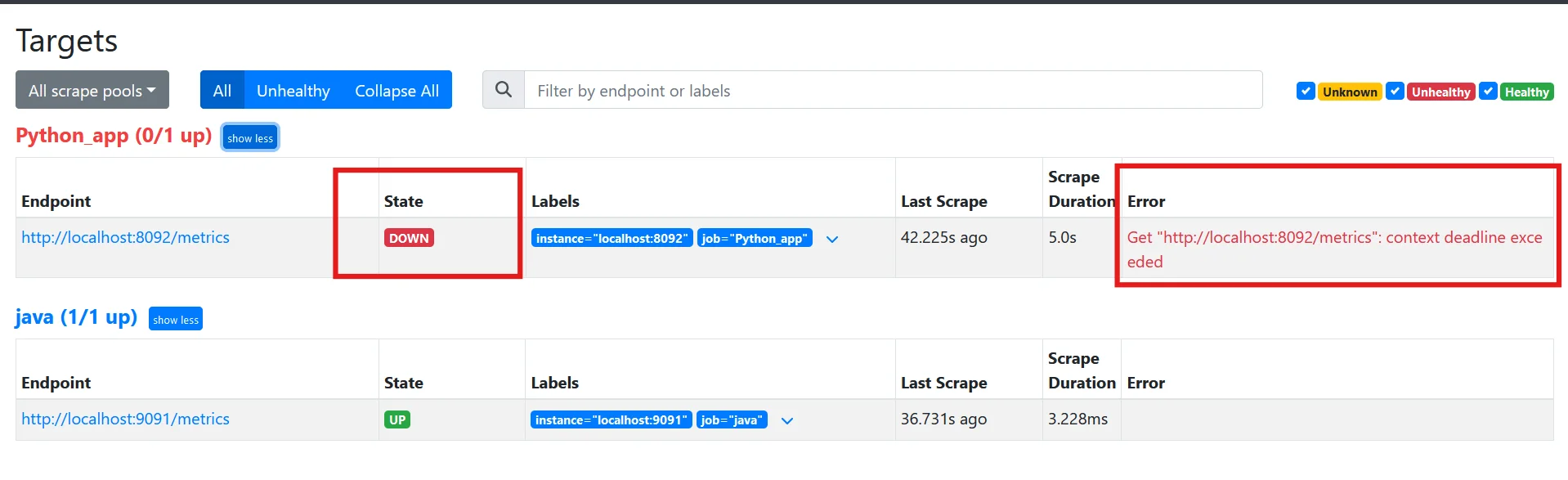

- Navigate to the "Targets" page in the Prometheus web interface.

- Look for targets marked as "down" with error messages containing "context deadline exceeded".

- Analyze Prometheus logs:

- Examine Prometheus server logs for entries containing "context deadline exceeded"

- Note the affected targets and the frequency of these errors

- Use Prometheus debug endpoints:

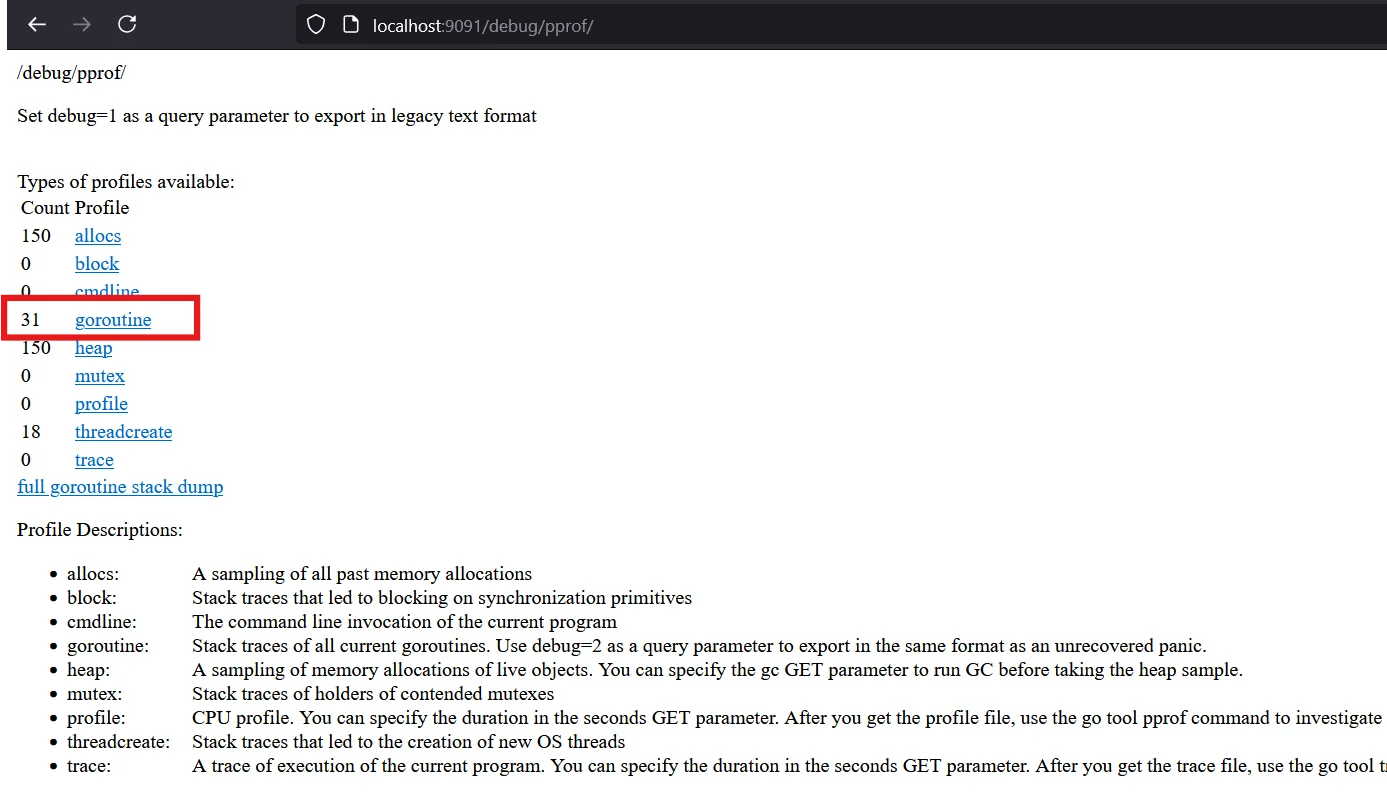

- Access the /debug/pprof endpoint on your Prometheus server

- Look for goroutines stuck in scrape operations

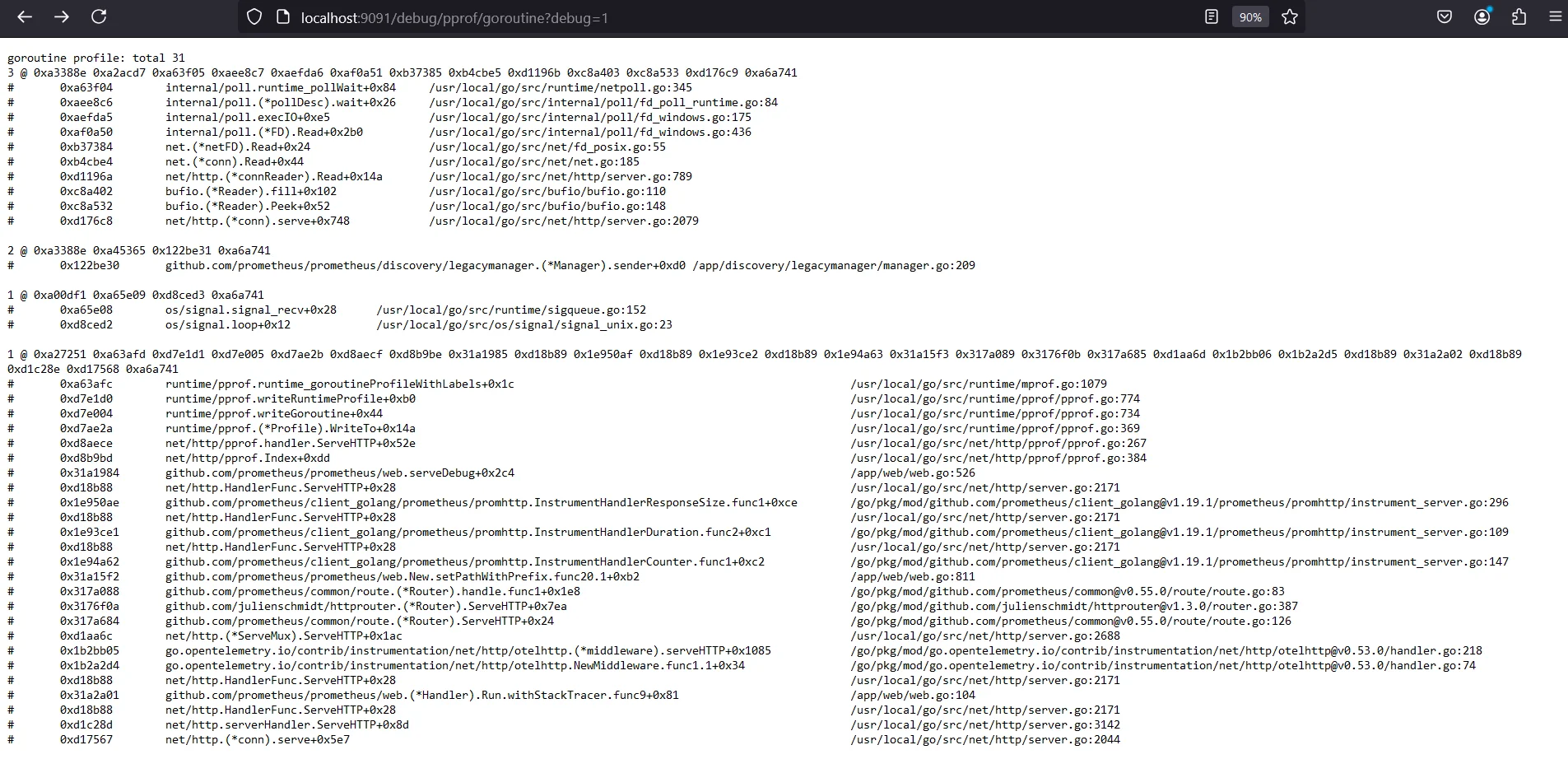

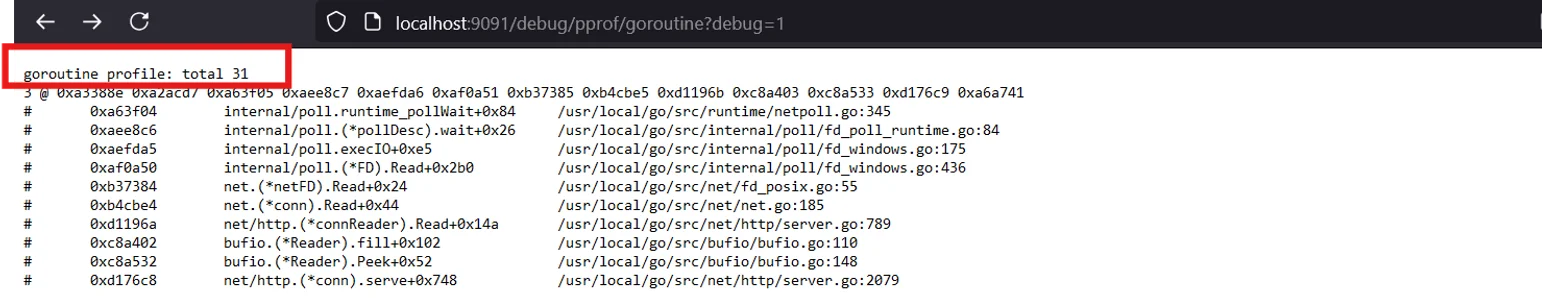

Accessing the /debug/pprof endpoint on the Prometheus server

You will notice a lot of data, which may appear difficult to read. Let’s decode it piece by piece:

There are 31 goroutines active at the time of the profile capture.

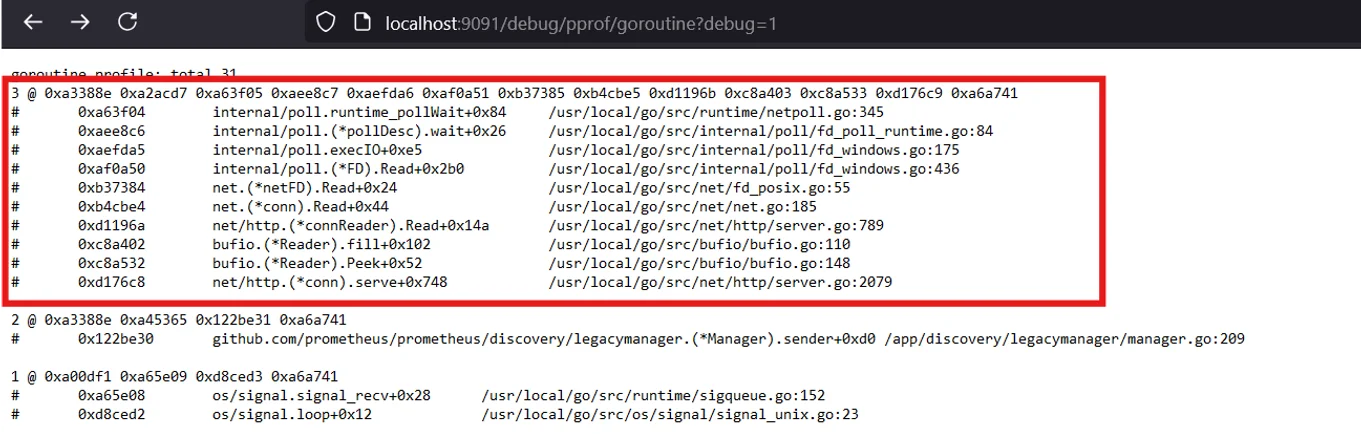

Blocked Goroutines (3 instances)

- "3 @ ..." indicates that 3 different goroutines are executing the same code path at this specific point in time.

- The goroutines are stuck waiting for network-related operations:

- internal/poll.runtime_pollWait: Waiting for I/O events on a file descriptor.

- internal/poll.(*FD).Read: Reading data from a file descriptor, typically a network socket.

- net/http.(*connReader).Read: Indicates that this goroutine is part of an HTTP request handler and is reading data.

- net/http.(*conn).serve: This suggests that the goroutine is handling an HTTP request.

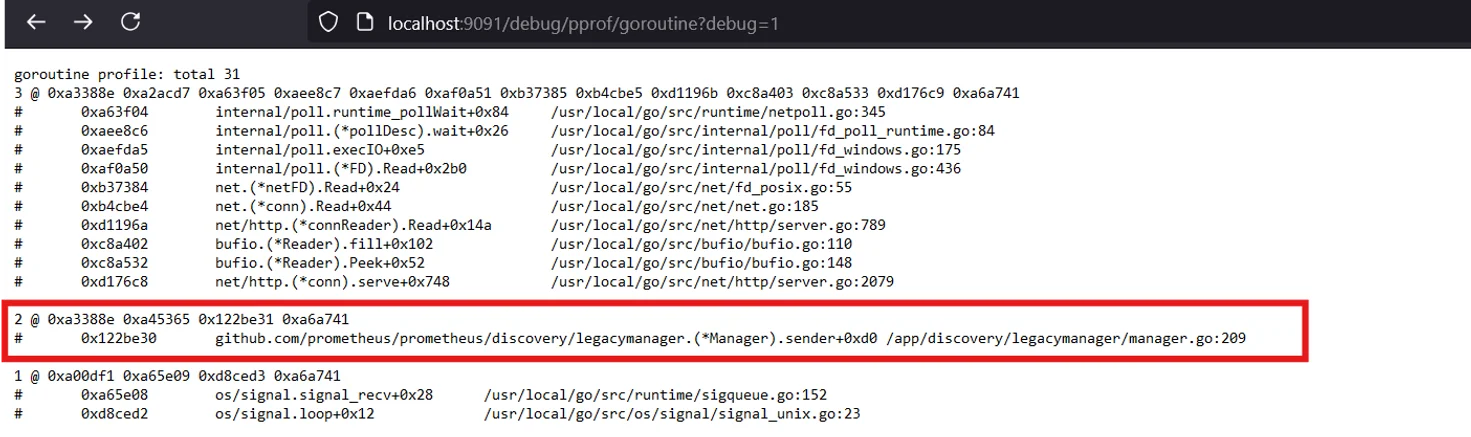

Discovery Manager Goroutines (2 instances)

- These goroutines are involved in Prometheus's discovery manager, which handles service discovery for targets.

- The discovery/legacymanager.Manager.sender function is related to sending target discovery updates.

From the above, we can see that the blocked goroutines are waiting on network reads, which hints at network-related issues while trying to read data.
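Reading the raw goroutine dump by eye gets tedious, so a small script can group it for you. The sketch below parses the text form of the profile (the format served by /debug/pprof/goroutine?debug=1) and reports how many goroutines share each top stack frame. The SAMPLE text is illustrative, with made-up addresses and file paths, and summarize_goroutines is a hypothetical helper name.

```python
import re
from collections import namedtuple

Group = namedtuple("Group", ["count", "top_frame"])

# A group header looks like "3 @ 0x43e1d6 0x436b97 ...".
HEADER = re.compile(r"^(\d+) @ ")

# Illustrative sample only: addresses and file paths are invented.
SAMPLE = """goroutine profile: total 31
3 @ 0x43e1d6 0x436b97 0x469b05
#\t0x469b04\tinternal/poll.runtime_pollWait+0x84\t/usr/local/go/src/runtime/netpoll.go:343
#\t0x4a1c27\tinternal/poll.(*FD).Read+0x2a7\t/usr/local/go/src/internal/poll/fd_unix.go:164

2 @ 0x43e1d6 0x44e4bd 0x1f2a3c5
#\t0x1f2a3c4\tgithub.com/prometheus/prometheus/discovery/legacymanager.(*Manager).sender+0x104\t/app/discovery/legacymanager/manager.go:234
"""


def summarize_goroutines(profile_text):
    """Return one Group per stack, largest first, keyed by its top frame."""
    groups = []
    count = None
    for line in profile_text.splitlines():
        match = HEADER.match(line)
        if match:
            count = int(match.group(1))
            continue
        if line.startswith("#") and count is not None:
            # Fields: "#", address, "function+offset", "file:line".
            fields = line.split()
            function = fields[2].rsplit("+", 1)[0]
            groups.append(Group(count, function))
            count = None  # only the first (top) frame of each group is kept
    return sorted(groups, key=lambda g: g.count, reverse=True)
```

Running summarize_goroutines(SAMPLE) yields the 3-goroutine group waiting in internal/poll.runtime_pollWait first, followed by the 2-goroutine discovery manager group.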

- Leverage external tools:

Use network diagnostic tools like

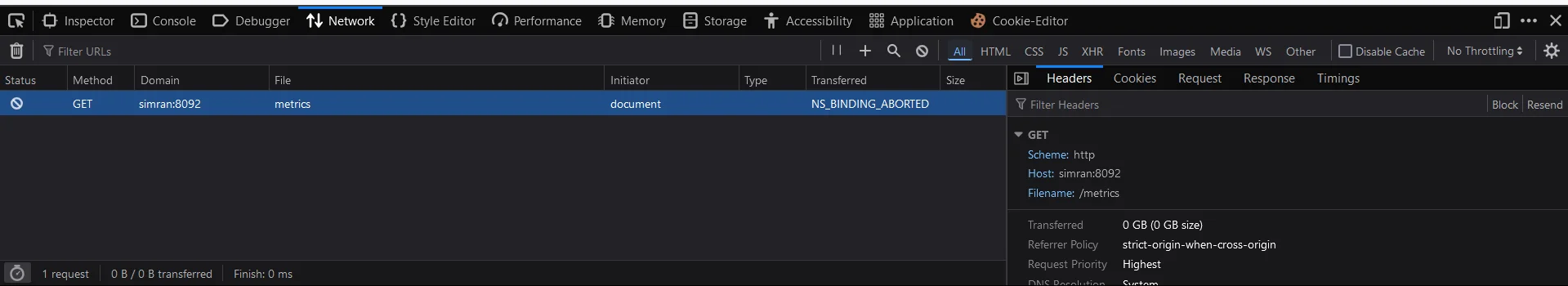

pingortracerouteto check connectivity. For example, sending GET requests to the server throws an error, hinting at something wrong on the target app’s end.

Checking target sever for Context Deadline Exceeded Errors Employ monitoring tools like SigNoz to gain deeper insights into your infrastructure.

By systematically investigating these areas, you can pinpoint the root causes of your timeout errors and take appropriate action.
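One way to separate network problems from slow targets is to time the TCP connect and the full HTTP response separately. The following is a rough sketch using only the Python standard library; probe is a hypothetical helper, and the /metrics path and 10-second timeout are assumptions you should adapt to your target.

```python
import http.client
import socket
import time


def probe(host, port, path="/metrics", timeout=10.0):
    """Return (connect_seconds, response_seconds) for one HTTP GET."""
    # Step 1: time only the TCP handshake.
    start = time.monotonic()
    sock = socket.create_connection((host, port), timeout=timeout)
    connect_seconds = time.monotonic() - start
    sock.close()

    # Step 2: time a complete request, including reading the body.
    start = time.monotonic()
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", path)
        conn.getresponse().read()
    finally:
        conn.close()
    response_seconds = time.monotonic() - start
    return connect_seconds, response_seconds
```

If the connect time is already large, the network path is the likely suspect. If the connect is fast but the full response is slow, the target is probably spending too long generating its metrics.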

Configuring Prometheus to Prevent Timeout Errors

Proper configuration is key to mitigating "Context Deadline Exceeded" errors. Here are essential steps to optimize your Prometheus setup:

Adjust global scrape_timeout settings:

    global:
      scrape_timeout: 15s

Increase this value if you consistently see timeout errors across multiple targets.

Fine-tune job-specific settings:

    scrape_configs:
      - job_name: 'example-job'
        scrape_interval: 30s
        scrape_timeout: 20s

Tailor these settings to the specific needs of each job or target group.

Implement relabeling:

    relabel_configs:
      - source_labels: [__address__]
        target_label: __param_target
      - source_labels: [__param_target]
        target_label: instance
      - target_label: __address__
        replacement: prometheus-proxy:9090

Use relabeling to optimize scrape configurations and route requests through a proxy if needed.

Balance scrape frequency and resource usage: Avoid setting overly aggressive scrape intervals that might overwhelm your targets or Prometheus itself.

Best Practices for Prometheus Configuration

To maintain a robust Prometheus setup, adhere to these best practices:

- Fine-Tune Scrape Intervals and Timeouts

- Scrape Intervals: Your scrape_interval determines how often Prometheus pulls metrics from targets. A balance is key: set it too short, and you risk overwhelming your system; too long, and you might miss critical data. Typically, a 15 to 30-second interval works well, but for slower or less critical systems, consider a longer interval to reduce load.

- Scrape Timeouts: Set the scrape_timeout just below the scrape_interval. This ensures that a slow scrape is abandoned before the next one is due. For example, with a 15-second interval, a timeout of 10-12 seconds is usually ideal.

- Use Relabeling to Filter Metrics

- Prometheus can scrape a vast amount of data, but not all of it is useful.

- Use relabeling rules to filter out unnecessary metrics before they’re stored. This reduces the load on Prometheus and the chances of encountering timeout errors.

- Distribute Load with Service Discovery

- Leverage service discovery to dynamically manage your targets, especially in environments that change frequently (like Kubernetes). This allows Prometheus to efficiently update and manage scrape targets, reducing the chance of overloading the system.

- Implement High Availability (HA)

- Running multiple Prometheus instances in a high-availability setup can help distribute the scraping load and prevent any single instance from becoming a bottleneck.

- HA setups also provide redundancy, ensuring that monitoring continues even if one instance goes down.

By following these guidelines, you can create a more resilient and efficient Prometheus monitoring system.
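The interval and timeout guidance above can be checked mechanically before you reload a configuration. Prometheus rejects a job whose scrape_timeout is greater than its scrape_interval, and the sketch below applies the same comparison to duration strings such as 15s or 1m30s. The function names and the wording of the returned messages are hypothetical.

```python
import re

# Seconds per unit for Prometheus-style durations (a year counts as 365 days).
_UNITS = {"ms": 0.001, "s": 1, "m": 60, "h": 3600,
          "d": 86400, "w": 604800, "y": 31536000}
_PART = re.compile(r"(\d+)(ms|s|m|h|d|w|y)")


def parse_duration(text):
    """Convert a duration such as '15s' or '1m30s' to seconds."""
    if not text:
        raise ValueError("empty duration")
    total, pos = 0.0, 0
    while pos < len(text):
        match = _PART.match(text, pos)
        if not match:
            raise ValueError(f"invalid duration: {text!r}")
        total += int(match.group(1)) * _UNITS[match.group(2)]
        pos = match.end()
    return total


def check_scrape_settings(scrape_interval, scrape_timeout):
    """Classify an interval/timeout pair for a single scrape job."""
    interval = parse_duration(scrape_interval)
    timeout = parse_duration(scrape_timeout)
    if timeout > interval:
        return "invalid: scrape_timeout exceeds scrape_interval"
    if timeout == interval:
        return "ok, but no headroom before the next scrape"
    return "ok"
```

For example, check_scrape_settings("15s", "10s") returns "ok", while check_scrape_settings("15s", "20s") reports the pair as invalid.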

Optimizing Target Systems to Reduce Timeout Errors

While Prometheus configuration is crucial, optimizing target systems can significantly reduce the occurrence of timeout errors:

- Improve target system performance:

- Allocate sufficient resources to metric collection processes

- Optimize database queries or API calls that generate metrics

- Implement caching mechanisms:

- Cache frequently accessed metrics to reduce computation time during scrapes

- Use an in-memory cache or a store such as Redis for efficient metric caching

- Optimize network connectivity:

- Ensure low-latency network paths between Prometheus and targets

- Consider using Prometheus Federation to bring scraping closer to targets

- Scale resources for complex metric endpoints:

- Horizontally scale services that expose resource-intensive metrics

- Consider breaking down complex metric endpoints into multiple, simpler endpoints

By addressing performance bottlenecks on target systems, you can significantly reduce the likelihood of timeout errors.
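As an illustration of the caching idea, the sketch below wraps an expensive metric computation in a small time-to-live cache so that a scrape returns the last computed value instead of recomputing it on every request. CachedMetric is a hypothetical class, and the injectable clock exists only to make the behaviour easy to test.

```python
import time


class CachedMetric:
    """Recompute an expensive metric at most once per ttl_seconds."""

    def __init__(self, compute, ttl_seconds, clock=time.monotonic):
        self._compute = compute      # callable that produces the metric value
        self._ttl = ttl_seconds
        self._clock = clock
        self._value = None
        self._expires_at = None      # None means nothing is cached yet

    def value(self):
        now = self._clock()
        if self._expires_at is None or now >= self._expires_at:
            # Cache miss or expired entry: pay the cost once and remember it.
            self._value = self._compute()
            self._expires_at = now + self._ttl
        return self._value
```

The metrics handler of the target would call value() during each scrape. A reasonable starting point is a TTL close to the scrape interval, so that each scrape sees a value that is at most one interval old; treat that as a rule of thumb rather than a fixed recommendation.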

Implementing Advanced Techniques to Mitigate Timeouts

For more complex monitoring setups, consider these advanced techniques:

Use Prometheus Pushgateway:

- Ideal for batch jobs or unreliable targets

- Allows targets to push metrics to an intermediate gateway
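For a batch job, pushing can be as small as one HTTP request. The sketch below builds a PUT request for the Pushgateway's /metrics/job/<job> endpoint using only the standard library. The gateway URL, job name, and metric name in the usage note are assumptions, build_push_request is a hypothetical helper, and the job name is assumed to contain no slashes.

```python
import urllib.request
from urllib.parse import quote


def build_push_request(gateway_url, job, metrics):
    """Build a PUT request that replaces all metrics pushed for this job."""
    # Text exposition format: one "name value" sample per line,
    # and the body must end with a newline.
    lines = [f"{name} {value}" for name, value in metrics.items()]
    body = ("\n".join(lines) + "\n").encode("utf-8")
    url = f"{gateway_url.rstrip('/')}/metrics/job/{quote(job, safe='')}"
    return urllib.request.Request(
        url,
        data=body,
        method="PUT",
        headers={"Content-Type": "text/plain; version=0.0.4"},
    )


# To actually send it (requires a reachable Pushgateway):
# urllib.request.urlopen(request, timeout=5)
```

A job could call build_push_request("http://pushgateway:9091", "nightly_backup", {"backup_last_success_timestamp_seconds": 1700000000}) at the end of its run and send the result. For real workloads, the official Prometheus client libraries provide push helpers that handle labels and escaping for you.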

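Prometheus then scrapes the Pushgateway like any other target, typically with honor_labels enabled so that the pushed job and instance labels are preserved:

    scrape_configs:
      - job_name: 'pushgateway'
        honor_labels: true
        static_configs:
          - targets: ['pushgateway:9091']

Implement circuit breakers: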

- Use tools like Hystrix or resilience4j to handle temporary failures

- Prevent cascading failures due to unresponsive targets
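If you are not on the JVM, the pattern itself is small enough to sketch. The following Python class is a simplified illustration of a circuit breaker, not the Hystrix or resilience4j API: after a number of consecutive failures it blocks calls for a cool-down period, then lets a trial call through. The class name, thresholds, and injectable clock are all hypothetical.

```python
import time


class CircuitBreaker:
    """Block calls to a failing dependency until a cool-down has passed."""

    def __init__(self, failure_threshold=3, reset_after=30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def allow(self):
        """Return True if a call may be attempted right now."""
        if self._opened_at is None:
            return True
        # Open circuit: permit a trial call only after the cool-down.
        return self._clock() - self._opened_at >= self.reset_after

    def record_success(self):
        self._failures = 0
        self._opened_at = None

    def record_failure(self):
        self._failures += 1
        if self._failures >= self.failure_threshold:
            # (Re)open the circuit and restart the cool-down timer.
            self._opened_at = self._clock()
```

An exporter that calls a slow backend could check allow() before each call and serve its last known values when the circuit is open, so the metrics endpoint still answers within the scrape timeout.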

Leverage specialized exporters:

- Offload metric collection processing to dedicated exporters

- Use efficient data formats like Protocol Buffers for faster serialization

Employ load balancing:

- Distribute scrape requests across multiple instances of high-volume targets

- Use DNS-based load balancing or dedicated load balancers

These techniques can help you build a more resilient monitoring infrastructure capable of handling complex and high-volume metric collection scenarios.

Monitoring and Alerting for Context Deadline Exceeded Errors

Proactive monitoring of your Prometheus setup is essential for maintaining its health:

Set up alerts for persistent timeout errors:

    - alert: PersistentScrapeFailure
      expr: up == 0
      for: 15m
      labels:
        severity: warning
      annotations:
        summary: "Persistent scrape failures detected"
        description: "Target {{ $labels.instance }} has been failing scrapes for 15 minutes."

The up metric is 0 for any failed scrape, so confirm on the Targets page that the last error for the target is "context deadline exceeded".

Create dashboards to visualize scrape performance:

- Monitor scrape duration trends

- Track the number of successful vs. failed scrapes

Implement SLOs for scrape success rates:

- Set targets for scrape success percentages

- Use error budgets to guide improvement efforts

Correlate timeout errors with system metrics:

- Look for patterns between timeouts and CPU, memory, or network usage

- Use this data to inform scaling decisions or performance optimizations

By implementing comprehensive monitoring and alerting for your Prometheus infrastructure, you can catch and address issues before they impact your overall monitoring effectiveness.
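The SLO and error-budget idea above comes down to simple arithmetic. As a hedged sketch, the function below takes a list of up samples for one target (1 for a successful scrape, 0 for a failed one) and reports the success ratio and how much of the error budget has been spent. The function name, the 99% default target, and the shape of the returned dictionary are assumptions.

```python
def scrape_slo_report(up_samples, slo_target=0.99):
    """Summarise scrape success against an SLO target between 0 and 1."""
    if not 0.0 < slo_target < 1.0:
        raise ValueError("slo_target must be between 0 and 1 (exclusive)")
    total = len(up_samples)
    if total == 0:
        raise ValueError("no samples to evaluate")

    failed = sum(1 for sample in up_samples if sample == 0)
    # The error budget is the number of failures the SLO tolerates.
    allowed_failures = (1.0 - slo_target) * total
    return {
        "success_ratio": (total - failed) / total,
        "allowed_failures": allowed_failures,
        "budget_used": failed / allowed_failures,  # 1.0 means fully spent
    }
```

With 1,000 samples and a 99% target, roughly 10 failed scrapes are allowed. A target with 5 failures has used about half of its budget, and one with 10 failures has used all of it.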

Enhancing Observability with SigNoz

Timeout issues, like the "Context Deadline Exceeded" errors in Prometheus, can be tricky to diagnose with metrics alone. SigNoz enhances your observability stack by providing detailed traces that show the path of requests through your application. By integrating SigNoz with Prometheus, you can correlate metric data with trace data to quickly identify where timeouts are occurring and why they are happening.

SigNoz is an open-source observability platform that provides deep insights into application performance, making it easier to monitor and troubleshoot complex systems. While Prometheus excels at collecting and alerting on metrics, SigNoz extends these capabilities with powerful tracing and logging features, enabling you to gain a more comprehensive understanding of your application’s behavior. SigNoz offers:

- Distributed tracing to pinpoint bottlenecks in your applications

- Detailed performance metrics that can complement Prometheus data

- Log management for correlating errors with system events

- Custom dashboards for visualizing Prometheus metrics alongside other telemetry data

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

By leveraging SigNoz's capabilities, you can gain a holistic view of your system's performance and quickly identify the factors contributing to Prometheus timeout errors.

Key Takeaways

- "Context Deadline Exceeded" errors in Prometheus indicate scrape operations that exceed configured timeout limits.

- These errors can result from network issues, target system performance problems, or misconfiguration.

- Proper diagnosis involves checking the Prometheus UI and logs and using the debug endpoints.

- Optimizing Prometheus configuration and target systems is crucial for preventing timeout errors.

- Advanced techniques like using Pushgateway and implementing circuit breakers can enhance reliability.

- Regular monitoring and alerting for timeout errors help maintain a healthy Prometheus setup.

- Integrating tools like SigNoz can provide deeper insights and complement Prometheus monitoring.

FAQs

What is the default scrape timeout in Prometheus?

The default scrape timeout in Prometheus is 10 seconds. However, this can be adjusted globally or on a per-job basis in the Prometheus configuration file.

Can Context Deadline Exceeded errors affect data accuracy?

Yes, these errors can lead to incomplete or missing data points, which may affect the accuracy of your metrics and potentially impact alerting and analysis.

How do I differentiate between network issues and target performance problems?

Use network diagnostic tools to check connectivity and latency. If network metrics are normal, investigate target system resources and metric generation processes for performance bottlenecks.

Is it possible to have different timeout settings for different targets?

Yes, Prometheus allows you to set different scrape_timeout values for each job in the scrape_configs section of your configuration file.