What is the Difference between DropWizard Metrics - Meters vs Timers

DropWizard Metrics provides powerful tools for monitoring Java applications, with Meters and Timers being two essential components. These metrics offer distinct approaches to measuring application performance, each with its own strengths and use cases. Understanding the differences between Meters and Timers is crucial for developers and DevOps professionals seeking to implement effective monitoring strategies. This article delves into the specifics of DropWizard Metrics Meters vs Timers, their implementation, and how to choose the right metric for your needs.

Understanding DropWizard Metrics

DropWizard Metrics is a Java-based library that offers a toolkit for measuring the behavior of critical components in your application. It helps developers gain insights into how their applications are performing by measuring critical runtime information such as response times, throughput, resource usage, and error rates. The goal is to provide a comprehensive set of tools to identify bottlenecks, monitor application health, and optimize performance.

The library's popularity stems from its simplicity and effectiveness in providing real-time insights into application behavior. Since its introduction, DropWizard Metrics has become a staple in many Java applications, offering developers a standardized way to instrument their code and gather valuable performance data. It plays much the same role as a car's dashboard, which shows your speed, fuel level, and engine status while you drive.

Overview of the Five Core Metric Types

DropWizard Metrics provides five core metric types that serve distinct purposes, ensuring comprehensive and accurate application performance monitoring. Each metric type helps developers track different aspects of their application:

Gauges: A gauge is the simplest type of metric that measures a single value at a specific point in time, making it suitable for tracking instantaneous values like memory usage, queue size, or the number of active threads.

Example of a Gauge:

    package org.example;

    import com.codahale.metrics.Gauge;
    import com.codahale.metrics.MetricRegistry;

    import java.util.LinkedList;
    import java.util.Queue;

    public class Main {
        public static void main(String[] args) {
            MetricRegistry metrics = new MetricRegistry();
            Queue<String> queue = new LinkedList<>();

            // Register a gauge metric that monitors the queue size
            metrics.register("queue-size", (Gauge<Integer>) queue::size);

            // Simulate adding elements to the queue
            queue.add("Request 1");
            queue.add("Request 2");

            // Output: Should display the current size of the queue
            System.out.println("Queue size: " + metrics.getGauges().get("queue-size").getValue());
        }
    }

Here, the `queue-size` gauge tracks the number of items in a queue. Every time the gauge is read, it returns the current queue size. This is useful for monitoring data structures, like queues, that may vary in size as tasks are added or removed.

Output:

    Queue size: 2

Counters: A counter is a metric that only increments or decrements, tracking a count of how many times an event has occurred. It's useful for counting things like the number of requests received, the number of errors, or the number of tasks completed.

Example of a Counter:

    package org.example;

    import com.codahale.metrics.*;

    public class Main {
        public static void main(String[] args) {
            MetricRegistry metrics = new MetricRegistry();

            // Create a counter metric to track the number of processed requests
            Counter requestCounter = metrics.counter("requests");

            // Increment the counter to simulate receiving requests
            requestCounter.inc();  // Increment by 1
            requestCounter.inc(3); // Increment by 3

            // Output: Should display the total number of requests
            System.out.println("Total requests: " + requestCounter.getCount());
        }
    }

The counter named `requests` tracks how many requests have been processed. Calling `inc()` increments the counter by 1, and `inc(3)` adds 3 to the counter. Counters are useful for counting discrete events, like user logins or API calls.

Output:

    Total requests: 4

Histograms: A histogram measures the statistical distribution of a set of values, tracking metrics like the minimum, maximum, median, percentiles, and standard deviation. It is preferred for measuring sizes and latency, like response times or the sizes of payloads, to understand the range and distribution of values.

Example of a Histogram:

    package org.example;

    import com.codahale.metrics.*;

    public class Main {
        public static void main(String[] args) {
            MetricRegistry metrics = new MetricRegistry();

            // Create a histogram metric to track response sizes
            Histogram responseSizes = metrics.histogram("response-sizes");

            // Update the histogram with various response sizes (in bytes)
            responseSizes.update(500);  // Response 1
            responseSizes.update(1500); // Response 2
            responseSizes.update(200);  // Response 3

            // Take one snapshot and read the min, max, and mean from it
            Snapshot snapshot = responseSizes.getSnapshot();
            System.out.println("Min response size: " + snapshot.getMin());
            System.out.println("Max response size: " + snapshot.getMax());
            System.out.println("Mean response size: " + snapshot.getMean());
        }
    }

This histogram records the distribution of response sizes. After updating it with three response sizes, we can retrieve statistics like the minimum, maximum, and mean sizes. Histograms help visualize the spread and frequency of values, making them useful for performance analysis.

Output (the mean is printed as an unrounded double, approximately 733.33):

    Min response size: 200
    Max response size: 1500
    Mean response size: 733.33

Meters: A meter measures the rate of events over time, such as the number of requests per second. It's similar to a speedometer in a car, showing the rate at which events are occurring over time. It is ideal for tracking the rate of requests, error rates, or database transactions.

Example of a Meter:

    package org.example;

    import com.codahale.metrics.*;

    public class Main {
        public static void main(String[] args) {
            MetricRegistry metrics = new MetricRegistry();

            // Create a meter metric to track the rate of requests per second
            Meter requestsPerSecond = metrics.meter("requests-per-second");

            // Mark the meter each time a request is received
            requestsPerSecond.mark();  // Request 1
            requestsPerSecond.mark(5); // Simulate 5 more requests

            // Output: Displays the count and rate of events per second
            System.out.println("Total requests: " + requestsPerSecond.getCount());
            System.out.println("Requests per second: " + requestsPerSecond.getOneMinuteRate());
        }
    }

The meter `requests-per-second` tracks the rate of incoming requests. By calling `mark()` each time a request is received, it calculates rates (e.g., events per second). This can help track traffic patterns in real-time, showing if a service is experiencing a surge or slowdown.

Output:

    Total requests: 6
    Requests per second: 0.0

The one-minute rate prints as 0.0 here because the moving averages are only recalculated on a 5-second tick and this program exits before the first one. A long-running application reports the actual rate.

Timers: A timer is basically a combination of a histogram and a meter. It measures both the rate and duration of events, so it tracks how long a particular process takes and how often it happens. It is ideal for measuring response times of API calls, database queries, or critical code sections.

Example of a Timer:

    package org.example;

    import com.codahale.metrics.*;

    public class Test {
        public static void main(String[] args) {
            MetricRegistry metrics = new MetricRegistry();

            // Create a timer metric to measure request durations
            Timer responses = metrics.timer("responses");

            // Start timing a block of code
            Timer.Context context = responses.time();
            try {
                // Simulate request processing that takes about 100 ms
                Thread.sleep(100);
            } catch (InterruptedException e) {
                e.printStackTrace();
            } finally {
                // Stop the timer and record the duration
                context.stop();
            }

            // Output: Displays the total number of timed events and mean duration
            System.out.println("Total events: " + responses.getCount());
            // Durations are recorded in nanoseconds; divide to convert to milliseconds
            System.out.println("Mean response time: " + responses.getSnapshot().getMean() / 1_000_000 + " ms");
        }
    }

The timer `responses` measures both the number of events and their duration. By timing specific blocks of code (in this case, simulating a 100ms delay), it collects data on how long each event takes, providing insights into process efficiency and potential bottlenecks.

Output (the mean is slightly above 100 ms and varies from run to run):

    Total events: 1
    Mean response time: 100.0 ms

Note: To use the examples in this article, ensure you have the following setup:

- For Gradle, add these dependencies in your `build.gradle` file:

      dependencies {
          implementation platform('io.dropwizard:dropwizard-bom:4.0.10')
          implementation 'io.dropwizard:dropwizard-core'
          implementation 'io.dropwizard.metrics:metrics-core:4.2.28'
      }

- For Maven, add the following to your `pom.xml` file:

      <dependencies>
          <!-- Dropwizard framework core; Maven needs an explicit version unless a BOM is imported.
               The version here matches the BOM used in the Gradle example. -->
          <dependency>
              <groupId>io.dropwizard</groupId>
              <artifactId>dropwizard-core</artifactId>
              <version>4.0.10</version>
          </dependency>
          <!-- Dropwizard Metrics core dependency (the only one the examples import from) -->
          <dependency>
              <groupId>io.dropwizard.metrics</groupId>
              <artifactId>metrics-core</artifactId>
              <version>4.2.28</version> <!-- Check for the latest version -->
          </dependency>
      </dependencies>

Importance of Choosing the Right Metric Type for Accurate Performance Measurement

Selecting the correct metric type is crucial for accurately representing the performance characteristics of an application. Misusing a metric type can lead to misleading data and incorrect conclusions. For example:

- Using a counter to track request duration is incorrect because counters only increase or decrease. Instead, a timer should be used, as it measures both rate and duration.

- Gauges should be used for instantaneous values, while histograms should be used when you want to understand the distribution of these values over time.

- Meters are suitable for tracking how often an event occurs (rate), while counters are better for cumulative counts.

Adoption of DropWizard Metrics in Java Applications

DropWizard Metrics became popular because of its simplicity and out-of-the-box support for collecting performance metrics in Java applications.

Key Adoption Factors:

- Ease of Use: DropWizard Metrics provided a simple, easy-to-use API that could be embedded into Java applications without requiring large dependencies.

- Broad JVM Support: It includes built-in tools for JVM monitoring (e.g., garbage collection, thread states, and memory usage).

- Integration with Reporting Tools: It supports various reporters like `ConsoleReporter`, `JmxReporter`, and `Slf4jReporter` for exporting metrics in different formats.

- Proven Performance: DropWizard's minimal overhead and reliable performance made it a popular choice for building high-performance applications.

What are Meters in DropWizard Metrics?

Meters in DropWizard Metrics are used to measure the rate of events over time. They provide insights into how frequently certain events occur, such as the number of requests received per second. Meters help developers monitor the performance and behavior of their applications in real-time.

Key Features of Meters

Meters offer a variety of essential features that enhance your ability to monitor and analyze event rates in your applications:

- Event Rate Measurement: Meters track how many events occur over a specified time period, allowing developers to assess application performance.

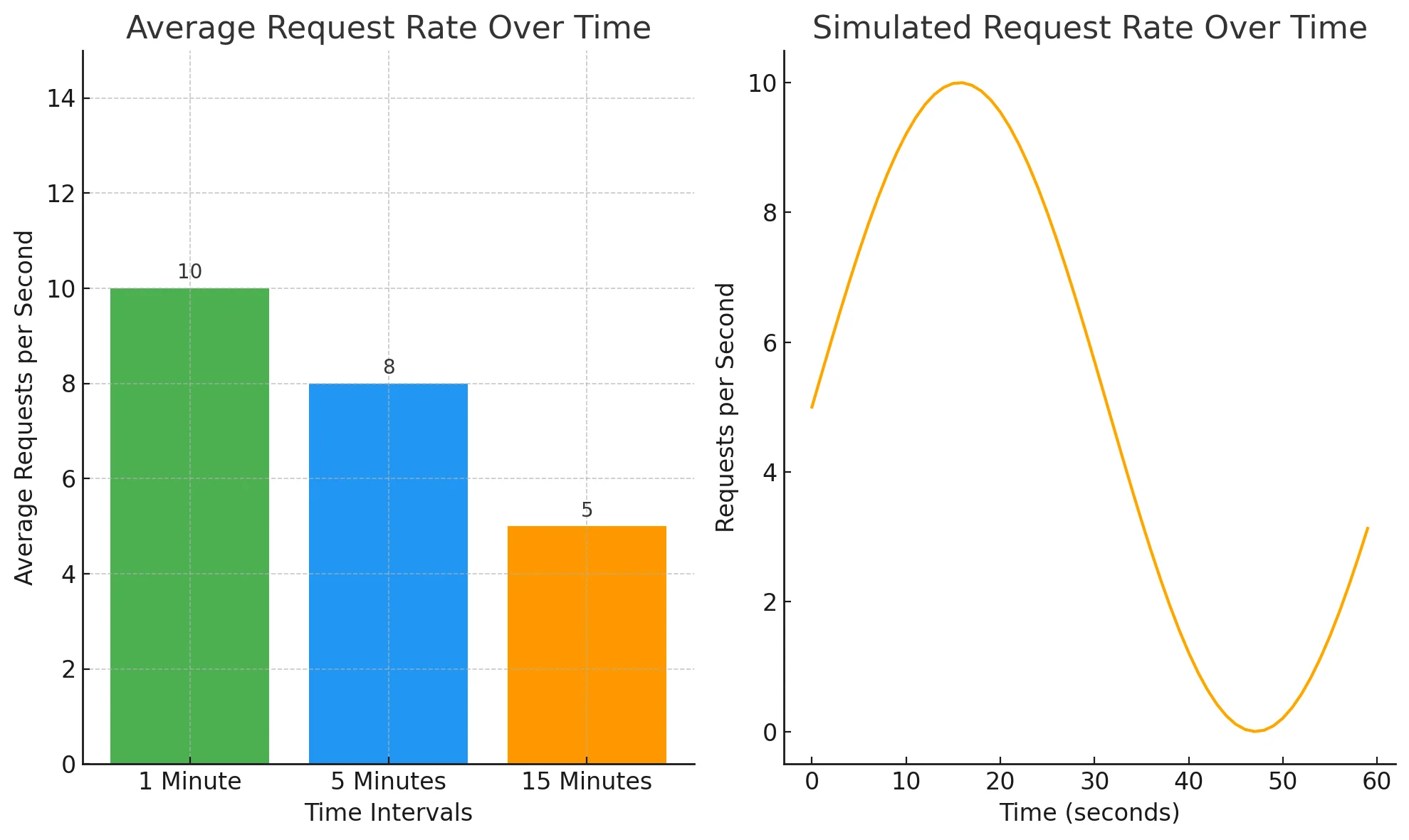

- Exponential Moving Averages: Meters calculate averages over 1-minute, 5-minute, and 15-minute intervals, giving a smooth representation of data trends over time.

Use Cases for Meters

Meters are versatile and can be applied in various scenarios to track the rate of significant events, including:

- Tracking API Calls: Measure the number of API requests received per second to monitor traffic and performance.

- Error Rates: Count the number of errors in the application to identify potential issues and bottlenecks.

- Throughput: Monitor how many transactions or operations are completed over a certain timeframe.

How Meters Calculate Moving Averages

DropWizard Meters use an exponentially weighted moving average (EWMA) to calculate event rates, which helps smooth out spikes and reflect recent activity more accurately. Each moving average is calculated using a different decay factor, providing the 1-minute, 5-minute, and 15-minute averages:

- 1-Minute Average: Uses the fastest decay, so it shows a near real-time rate and reflects short-term trends. Like the other two averages, it is recalculated on a 5-second tick.

- 5-Minute Average: Calculated with a decay factor that favors data from the last 5 minutes, balancing responsiveness with stability.

- 15-Minute Average: Shows the long-term trend by smoothing out short-term variations and reflecting the overall event rate.

The decaying effect of each interval helps give more weight to recent events, ensuring that the metric adjusts to changing traffic or error patterns quickly.
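The weighting described above can be sketched in plain Java. This is an illustration of the idea rather than the library's own code: `EwmaSketch` and its methods are hypothetical names, and the 5-second tick and the formula for `alpha` are stated here as assumptions about the library's defaults.

```java
/** Plain-Java sketch of an exponentially weighted moving average of an event rate. */
class EwmaSketch {
    private static final double TICK_SECONDS = 5.0; // assumed tick interval

    private final double alpha;   // weight given to the newest interval
    private long uncounted;       // events marked since the last tick
    private double ratePerSecond; // smoothed rate, in events per second
    private boolean initialized;

    /** windowMinutes: 1, 5, or 15 for the three averages discussed above. */
    EwmaSketch(double windowMinutes) {
        this.alpha = 1.0 - Math.exp(-TICK_SECONDS / (60.0 * windowMinutes));
    }

    void mark(long n) { uncounted += n; }

    /** Call once per tick interval: folds the latest interval into the average. */
    void tick() {
        double instantRate = uncounted / TICK_SECONDS;
        uncounted = 0;
        if (initialized) {
            ratePerSecond += alpha * (instantRate - ratePerSecond);
        } else {
            ratePerSecond = instantRate; // the first interval seeds the average
            initialized = true;
        }
    }

    double ratePerSecond() { return ratePerSecond; }

    double alpha() { return alpha; }

    public static void main(String[] args) {
        EwmaSketch oneMinute = new EwmaSketch(1);
        EwmaSketch fifteenMinute = new EwmaSketch(15);
        long[] eventsPerInterval = {10, 15, 5}; // hypothetical traffic, one entry per 5 s
        for (long events : eventsPerInterval) {
            oneMinute.mark(events);
            fifteenMinute.mark(events);
            oneMinute.tick();
            fifteenMinute.tick();
            System.out.printf("1-min: %.4f/s  15-min: %.4f/s%n",
                    oneMinute.ratePerSecond(), fifteenMinute.ratePerSecond());
        }
    }
}
```

A shorter window gives a larger `alpha`, so the 1-minute average moves toward each new interval's rate faster than the 15-minute average does.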

Implementing Meters in DropWizard

Here’s an example to demonstrate the implementation of meters in DropWizard Metrics:

package org.example;

import com.codahale.metrics.*;

public class MeterExample {

public static void main(String[] args) throws InterruptedException {

MetricRegistry metrics = new MetricRegistry();

// Create a meter to track the rate of requests

Meter requestsPerSecond = metrics.meter("requests-per-second");

// Simulate incoming requests

for (int i = 0; i < 10; i++) {

requestsPerSecond.mark(); // Mark each request

Thread.sleep(100); // Simulate processing time (100 ms)

}

// Output: Displays total requests and moving averages

System.out.println("Total requests: " + requestsPerSecond.getCount());

System.out.println("1-minute rate: " + requestsPerSecond.getOneMinuteRate());

System.out.println("5-minute rate: " + requestsPerSecond.getFiveMinuteRate());

System.out.println("15-minute rate: " + requestsPerSecond.getFifteenMinuteRate());

}

}

Output:

Total requests: 10

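1-minute rate: 0.0

5-minute rate: 0.0

15-minute rate: 0.0

All three rates print as 0.0 here because the program finishes in about one second, while the moving averages are only recalculated on a 5-second tick. In a long-running service they converge on the actual per-second rate.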

This example showcases how Meters can help monitor the frequency of events like requests in real-time. The getOneMinuteRate(), getFiveMinuteRate(), and getFifteenMinuteRate() methods return the rate of requests over different time windows (1, 5, and 15 minutes). These rates are based on exponentially weighted moving averages.

The methods used in the above example serve different purposes:

- `mark()`: Increments the meter by one, indicating that an event has occurred. You can also pass a value to mark multiple events at once.

- `getCount()`: Retrieves the total count of events that have been marked.

- `getOneMinuteRate()`: Returns the exponentially weighted average rate of events, in events per second, over roughly the last minute.

- `getFiveMinuteRate()`: Returns the same kind of average over roughly the last five minutes.

- `getFifteenMinuteRate()`: Returns the same kind of average over roughly the last fifteen minutes.

Best Practices for Naming and Organizing Meters

- Use Descriptive Names: Choose names that clearly describe the action or event being tracked. For example, `api.requests.rate` for tracking API request rates or `orders.processed.rate` for processed orders.

- Consistent Naming Convention: Stick to a consistent naming pattern across your application to keep metrics organized and easily identifiable. For example, prefix with the system component like `db.` for database-related metrics or `service.` for service-level metrics.

- Group Meters by Functionality: Organize meters by their purpose (e.g., tracking user requests, data processing, etc.), which can simplify monitoring and reporting.

- Use Tags or Labels (if supported): If your metric system supports tagging (such as Prometheus), consider adding tags to differentiate between variations of the same metric (e.g., by endpoint or region).

- Align Names with Business Logic: Reflect business-relevant actions in meter names. For example, use `user.signups.rate` instead of a generic `rate` if tracking new user registrations.
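One way to keep names consistent is to build them from parts in a single place instead of typing dotted strings by hand. The helper below is a hypothetical plain-Java sketch (`MetricNames.of` is not a library API). The library's `MetricRegistry.name(...)` method serves a similar purpose and is usually the better choice when the registry is already on the classpath.

```java
import java.util.Arrays;
import java.util.stream.Collectors;

/** Hypothetical helper that builds dotted metric names from their parts. */
class MetricNames {
    /** Joins non-blank parts with dots, e.g. ("api", "requests", "rate") becomes "api.requests.rate". */
    static String of(String... parts) {
        return Arrays.stream(parts)
                .filter(p -> p != null && !p.trim().isEmpty())
                .map(String::trim)
                .collect(Collectors.joining("."));
    }

    public static void main(String[] args) {
        // Component prefix first, then the event, then the kind of measurement
        System.out.println(of("api", "requests", "rate"));
        System.out.println(of("db", "queries", "rate"));
        System.out.println(of("user", "signups", "rate"));
    }
}
```

Routing every name through one helper makes it harder for variants such as `apiRequests` and `api.requests` to drift apart across a codebase.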

Common Pitfalls and How to Avoid Them with Meters

- Overuse of Meters: Tracking too many events with meters can lead to metric bloat and performance issues.

- Solution: Focus on high-value events, like critical business operations or high-volume events. Avoid tracking infrequent or low-priority actions with meters.

- Unclear Metric Naming: Vague or unclear names make it hard to identify the purpose of a meter.

- Solution: Use meaningful names with specific terms related to the business logic. Avoid generic terms like `rate1` or `requestRate`, which can cause confusion.

- Not Removing Stale or Deprecated Meters: Meters associated with outdated logic or removed components can lead to misleading data or unnecessary overhead.

- Solution: Periodically review and clean up unused or deprecated meters from your `MetricRegistry`.

- Ignoring Memory and Resource Costs: Meters add overhead, and unoptimized usage can increase memory consumption.

- Solution: Use meters judiciously, especially in high-throughput areas. Regularly monitor their resource impact, especially if you have many meters in use.

Meter implementation in a Real-World Scenario

- Tracking API Requests Per Second

This is useful for monitoring API traffic patterns to understand request spikes, average load, or potential bottlenecks. It helps identify unusual request rates and informs decisions about scaling or throttling.

The code below simulates three phases of API request traffic (normal load, a spike, and a slowdown) and shows how a Meter can be used to observe them.

Example: Monitoring API Traffic Patterns

package org.example;

import com.codahale.metrics.*;

public class ApiTrafficMonitoring {

public static void main(String[] args) throws InterruptedException {

MetricRegistry metrics = new MetricRegistry();

Meter requestMeter = metrics.meter("api-request-meter");

// Simulate normal traffic (10 requests, 200ms apart)

simulateTraffic(requestMeter, 10, 200);

Thread.sleep(5000); // Wait to simulate idle time

// Simulate a spike in traffic (15 requests, 50ms apart)

simulateTraffic(requestMeter, 15, 50);

Thread.sleep(5000); // Wait to simulate idle time

// Simulate a bottleneck (5 requests, 500ms apart)

simulateTraffic(requestMeter, 5, 500);

// Print total requests and moving averages over time

System.out.println("Total requests: " + requestMeter.getCount());

System.out.println("1-minute rate: " + requestMeter.getOneMinuteRate());

System.out.println("5-minute rate: " + requestMeter.getFiveMinuteRate());

System.out.println("15-minute rate: " + requestMeter.getFifteenMinuteRate());

}

// Method to simulate varying API traffic

private static void simulateTraffic(Meter meter, int requestCount, int delay) throws InterruptedException {

for (int i = 0; i < requestCount; i++) {

meter.mark(); // Record each API request

Thread.sleep(delay); // Delay to simulate processing time between requests

}

}

}

Output:

Total requests: 30

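1-minute rate: [calculated value based on timing]

5-minute rate: [calculated value based on timing]

15-minute rate: [calculated value based on timing]

The rates are printed as plain numbers in events per second (the code adds no unit suffix), and the exact values depend on how the requests fall relative to the meter's 5-second update ticks.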

Here's how each phase of the simulated traffic shows up in the meter:

Traffic Simulation:

- Request Spikes: The spike in traffic (15 requests with short delay) will increase the request rate, which can be detected using the 1-minute rate.

- Average Load: The steady load over time (normal traffic) helps maintain a consistent rate, giving you an idea of the average API usage.

- Bottlenecks: The long delays simulate a bottleneck, which reduces the request rate, and the meter can help identify such performance issues by showing a drop in the rate.

Metrics:

- `getCount()`: Total number of requests processed.

- `getOneMinuteRate()`, `getFiveMinuteRate()`, `getFifteenMinuteRate()`: Show the request rates averaged over the last 1, 5, and 15 minutes.

What are Timers in DropWizard Metrics?

Timers in DropWizard Metrics are used to measure both the rate and duration of events. They are primarily used for tracking how long specific operations take and how frequently they occur. For example, you can use a timer to monitor database query latency or API response times.

Key Features of Timers

Timers offer a range of powerful features that enhance performance monitoring:

- Duration Measurement: Timers accurately capture the time taken by a specific block of code, providing insights into the time spent in various operations.

- Rate Calculation: Timers also keep track of the rate at which these timed operations occur, similar to Meters.

- Combining Histogram and Meter Functionalities: Timers record a distribution of the time durations (like a Histogram) and also track the rate of occurrences (like a Meter).

Use Cases for Timers

Utilizing Timers can significantly improve observability in various scenarios, including:

- Tracking Method Execution Time: Measure how long certain methods or processes take to complete.

- Database Query Latency: Track the time it takes for database queries to execute, helping to detect slow queries.

- API Response Times: Calculate how long it takes to process an API request and monitor traffic patterns.

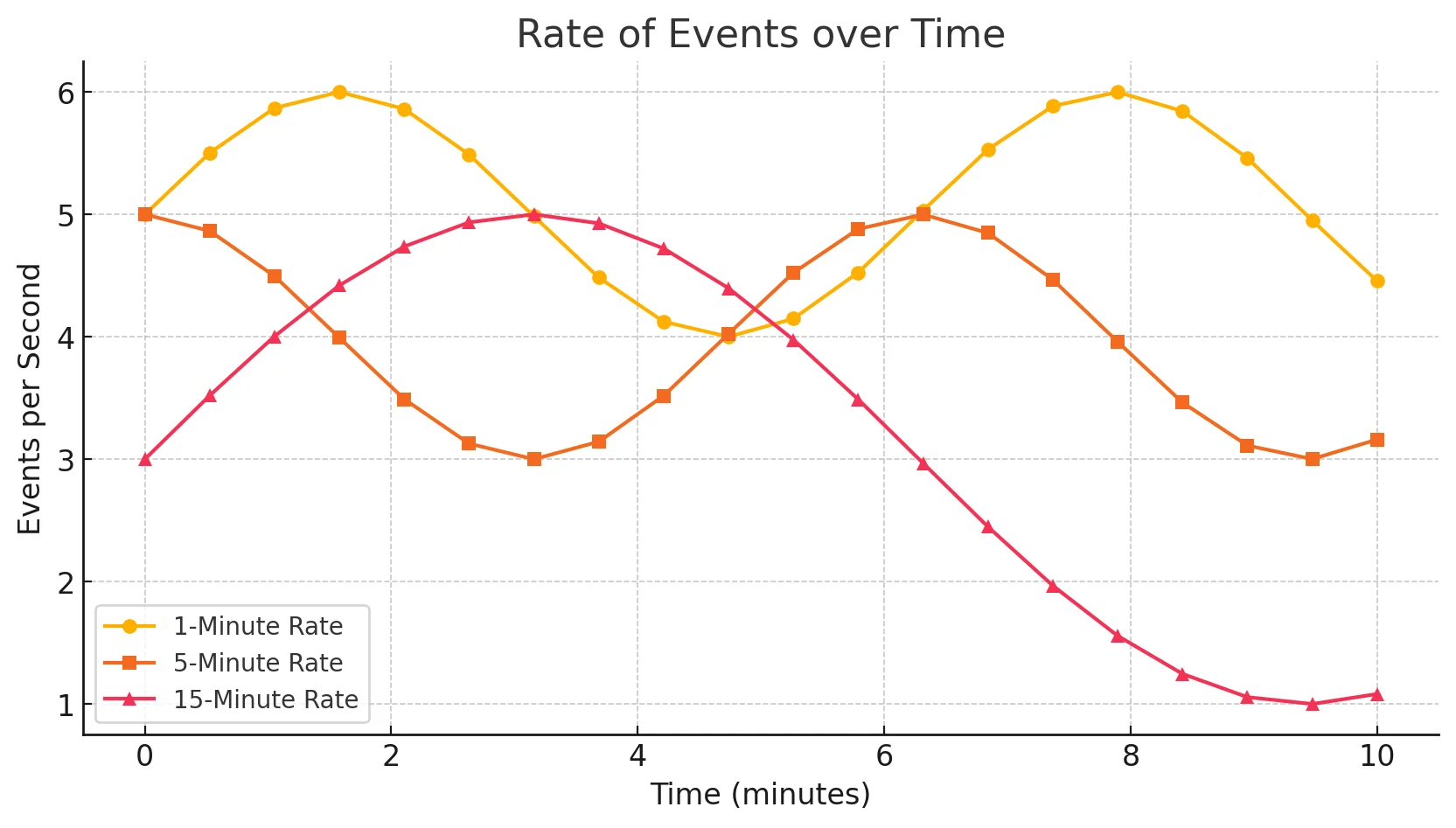

How Timers Combine Histogram and Meter Functionalities

Timers calculate:

Rate of Events: Using a meter internally, timers track how frequently a specific event (e.g., method execution) happens.

Demonstration of the rate of events (X-axis: rate of events in requests per second; Y-axis: time, e.g., minutes or seconds)

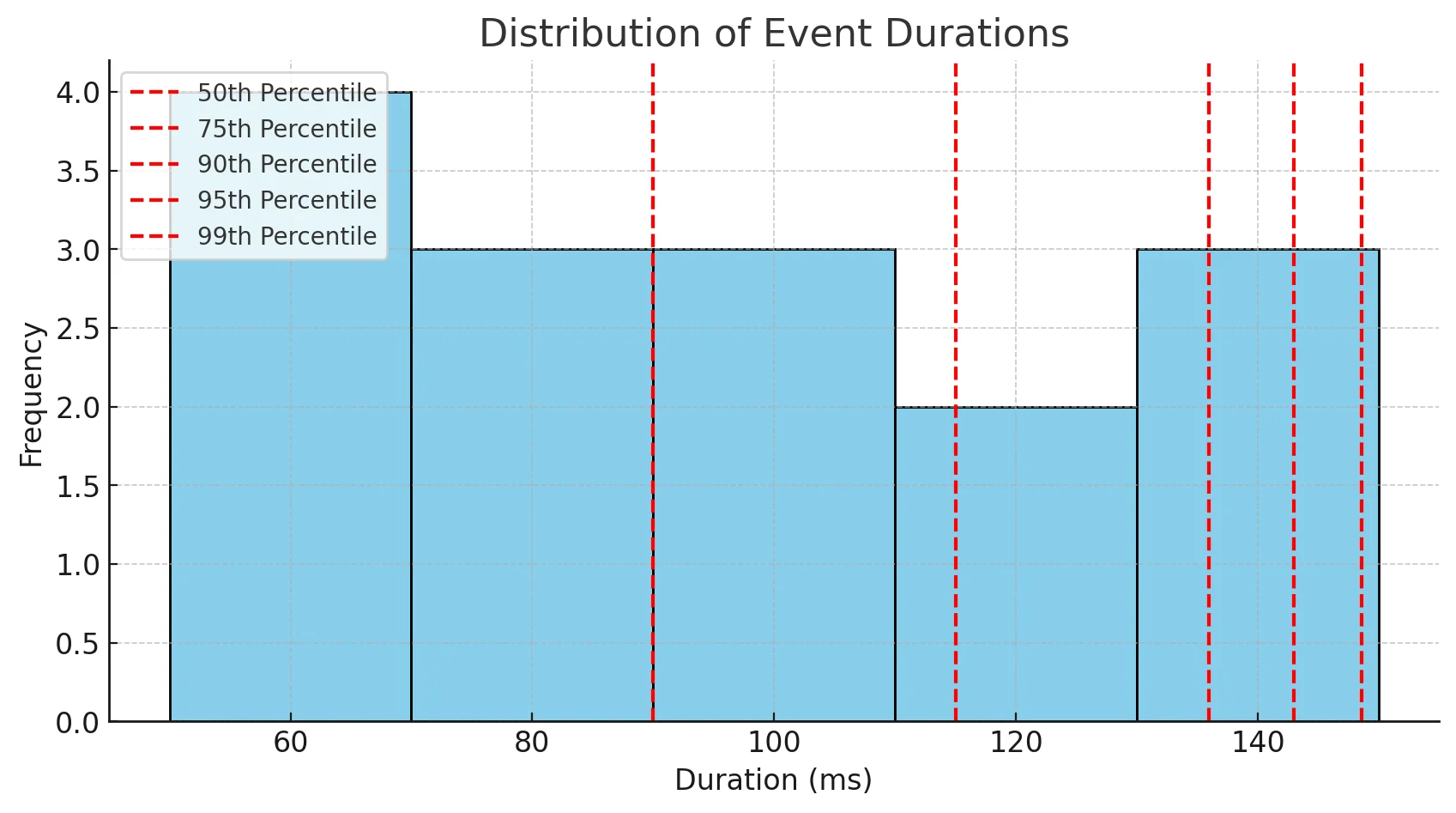

Distribution of Durations: Using a histogram internally, timers capture statistical data like mean, min, max, and percentiles for the recorded durations.

Distribution of event durations (X-axis: duration in milliseconds or seconds; Y-axis: event types or intervals, e.g., database queries, API response times)

The red dashed lines indicate key percentile values (e.g., 50th, 75th, 90th). These values show how spread out the event durations are, helping to detect anomalies.
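To make the two views concrete, here is a toy model in plain Java of what a timer tracks. `TimerSketch` is a hypothetical name and the sample durations are made up. The library itself samples durations into a reservoir rather than keeping every value, so treat this only as an illustration of the count-plus-distribution idea.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Toy model of what a timer tracks: an event count plus a distribution of durations. */
class TimerSketch {
    private final List<Long> durationsNanos = new ArrayList<>();

    /** Records one completed event and how long it took. */
    void record(long durationNanos) { durationsNanos.add(durationNanos); }

    /** Meter-like view: how many events were recorded. */
    long count() { return durationsNanos.size(); }

    /** Histogram-like view: arithmetic mean of the recorded durations. */
    double meanNanos() {
        return durationsNanos.stream().mapToLong(Long::longValue).average().orElse(0.0);
    }

    /** Histogram-like view: nearest-rank percentile, with p in (0, 100]. */
    long percentileNanos(double p) {
        if (durationsNanos.isEmpty()) return 0L;
        List<Long> sorted = new ArrayList<>(durationsNanos);
        Collections.sort(sorted);
        int rank = (int) Math.ceil(p / 100.0 * sorted.size());
        return sorted.get(Math.max(rank, 1) - 1);
    }

    public static void main(String[] args) {
        TimerSketch timer = new TimerSketch();
        long[] sampleMillis = {100, 120, 90, 110, 950}; // hypothetical durations, one slow outlier
        for (long ms : sampleMillis) timer.record(ms * 1_000_000L);
        System.out.println("count: " + timer.count());
        System.out.println("mean (ms): " + timer.meanNanos() / 1_000_000.0);
        System.out.println("p50 (ms): " + timer.percentileNanos(50) / 1_000_000.0);
        System.out.println("p90 (ms): " + timer.percentileNanos(90) / 1_000_000.0);
    }
}
```

With these samples the mean is 274 ms even though the median is 110 ms. The single 950 ms outlier is what the 90th percentile exposes, which is why percentiles are more useful than the mean for spotting slow operations.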

Implementing Timers in DropWizard

Here’s a code example demonstrating how to use Timers in DropWizard Metrics. This example helps simulate how you can track and analyze database query performance in a real-world application. With metrics like query count, mean query time, and request rates, you can monitor database health, detect slowdowns, and take action to maintain a smooth user experience, particularly during peak traffic.

package org.example;

import com.codahale.metrics.MetricRegistry;

import com.codahale.metrics.Timer;

public class TimerExample {

public static void main(String[] args) {

MetricRegistry metrics = new MetricRegistry();

// Create a timer to track database query durations

Timer dbQueryTimer = metrics.timer("db-query-duration");

// Start timing the database query

Timer.Context context = dbQueryTimer.time();

try {

// Simulate the database query

// Replace with your actual database query logic

executeDatabaseQuery();

} finally {

// Stop the timer and record the duration

context.stop();

}

// Output: Print basic statistics about the timer

System.out.println("Total recorded queries: " + dbQueryTimer.getCount());

System.out.println("Mean query time (ns): " + dbQueryTimer.getSnapshot().getMean());

System.out.println("1-minute rate: " + dbQueryTimer.getOneMinuteRate());

}

private static void executeDatabaseQuery() {

try {

// Simulate a database query by adding a delay

// 100 ms delay to simulate a query

Thread.sleep(100);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

Output:

Total recorded queries: 1

Mean query time (ns): [calculated value]

1-minute rate: [calculated value based on timing]

- `MetricRegistry` is the main class for managing and organizing metrics. It acts as a central hub for creating, registering, and retrieving metrics (such as timers, counters, and gauges).

- `Timer` measures both the rate and duration of events. For example, it's commonly used to track the duration of a specific code block or a task, like database queries or API request times.

- `time()`: Starts the timer and returns a `Timer.Context` object, which must be stopped later.

- `Timer.Context`: Represents the context for the timer. It tracks the elapsed time until `context.stop()` is called.

Best Practices for Naming and Organizing Timers

To maximize the effectiveness of your timers, adhere to these best practices for naming and organization:

- Use Descriptive Names: Include the context in the name (e.g., `db-query-response-time`, `api-call-duration`) to make it clear what the timer is measuring.

- Organize by Context: Group related metrics together to provide context. For example, if you have multiple metrics for a specific service, consider naming them with a common prefix (e.g., `user-service.api-requests`, `user-service.api-errors`).

- Avoid Over-Nesting: Use logical groupings but keep the hierarchy simple to prevent confusion in large applications.

- Consistent Naming Conventions: Stick to a consistent naming convention (e.g., lowercase with hyphens) across all your metrics for uniformity and easier management.

Common Pitfalls and How to Avoid Them

Being aware of common pitfalls can help you use timers more effectively and prevent potential issues:

- Creating Timers in Loops

- Description: Avoid creating new `Timer` instances inside a loop or in methods that are called frequently.

- Consequence: This leads to excessive memory usage and potential memory leaks since each `Timer` creates its own internal data structure.

- Solution: Define the timer once at the class or method level and reuse it.

- Misusing the `time()` Method

- Description: If the `Timer.Context` returned by `time()` is not properly stopped, it can lead to incomplete or inconsistent metrics.

- Consequence: Metrics will be skewed. An operation whose context is never stopped is never recorded, so counts and durations under-report what actually happened and real performance issues become harder to diagnose.

- Solution: Always use a `try-finally` block to ensure `context.stop()` is called even if exceptions occur.

- Excessive Timers

- Description: Creating too many timers to track every small operation can overwhelm the system.

- Consequence: This leads to high memory consumption, CPU overhead, and cluttered metrics dashboards, making it hard to identify critical data points.

- Solution: Only create timers for key processes and operations that significantly impact performance. Use hierarchical naming to avoid redundancy.
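The first two pitfalls can be illustrated without the library. In the plain-Java sketch below, `StopwatchSketch` is a hypothetical stand-in for a timer: it is created once and reused, and its context is closed by a try-with-resources statement, which gives the same guarantee as `try-finally`. The same pattern should apply to `Timer.Context`, assuming the context type in your library version is closeable.

```java
import java.util.ArrayList;
import java.util.List;

/** Plain-Java stand-in for a timer whose context is closed automatically. */
class StopwatchSketch {
    private final List<Long> recordedNanos = new ArrayList<>();

    /** Starts timing; closing the returned context records the elapsed time. */
    Context time() { return new Context(System.nanoTime()); }

    /** Number of completed (closed) timings. */
    int count() { return recordedNanos.size(); }

    final class Context implements AutoCloseable {
        private final long startNanos;

        Context(long startNanos) { this.startNanos = startNanos; }

        @Override
        public void close() { recordedNanos.add(System.nanoTime() - startNanos); }
    }

    public static void main(String[] args) {
        StopwatchSketch stopwatch = new StopwatchSketch(); // created once, reused for every call
        for (int i = 0; i < 3; i++) {
            try (Context ignored = stopwatch.time()) {
                if (i == 1) throw new IllegalStateException("simulated failure");
            } catch (IllegalStateException e) {
                // The context has already been closed by the time this handler runs.
            }
        }
        System.out.println("recorded events: " + stopwatch.count()); // prints "recorded events: 3"
    }
}
```

All three iterations are recorded, including the one that throws, because the context is closed before control reaches the `catch` block.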

Meters vs Timers: Key Differences and When to Use Each

The main differences between Meters and Timers lie in their focus and the granularity of data they provide:

1. Comparison of Measurement Focus

- Meters:

- Focus on event rate measurement only.

- Ideal for tracking how frequently events are happening, like request rate or error rate.

- E.g., Meters are perfect for monitoring requests per second or the number of successful logins per minute.

- Timers:

- Measure both rate and duration of events.

- Useful for scenarios where the execution time is also crucial, such as response time or database query latency.

- E.g., Timers are best for tracking API response times and method execution durations.

2. Granularity of Data

- Meters:

- Provide simple counts and rates, like the number of events per second or minute.

- No information on how long each event took.

- Output includes rates over 1, 5, and 15-minute moving averages.

- Timers:

- Offer detailed insights through statistical distribution of durations.

- Record metrics like min, max, mean, and percentiles (e.g., 50th, 75th, 90th).

- Timers can pinpoint performance bottlenecks by showing outliers or skewed durations.

3. Performance Overhead Considerations

- Meters:

- Lightweight; low overhead.

- Can be safely used in high-throughput applications without significant performance impact.

- Timers:

- Slightly heavier due to maintaining both rate and duration data.

- Avoid excessive use in high-frequency scenarios as it could lead to performance degradation.

4. Decision-Making: When to Use Meters vs. Timers

- Use Meters When:

- You only care about how often something happens (e.g., tracking user logins or error counts).

- Low overhead is crucial.

- Rate measurement over time is sufficient (no need for duration data).

- Use Timers When:

- You want to track how long something takes in addition to how often it happens.

- Detailed analysis of event durations is required, such as profiling method performance.

- Identifying slow operations is necessary (e.g., pinpointing slow database queries).

Advanced Usage: Combining Meters and Timers

When an application needs detailed performance insights, combining Meters and Timers can be extremely powerful. This strategy helps you capture both the frequency and duration of critical events, enabling a more comprehensive understanding of the system’s behavior.

Scenarios Where Using Both Meters and Timers is Beneficial

- Tracking API Endpoints: Use Meters to monitor the request rate and Timers to measure the response time for each endpoint. This gives you a holistic view of how often an API is called and how long it takes to respond.

- Database Performance Monitoring: Use a Meter to track query frequency and a Timer to measure the duration of each query. This can help identify high-traffic queries that may be causing performance bottlenecks.

- Error Tracking: Use Meters to count error occurrences (e.g., 4xx and 5xx HTTP responses) and Timers to measure the response time of requests that result in errors. Analyzing both can reveal if slower responses contribute to higher error rates.

Techniques for Correlating Meter and Timer Data

When using both metrics, it’s crucial to correlate them meaningfully. Here are some effective techniques:

- Naming Conventions: Use consistent names for Meters and Timers related to the same event (e.g., `api.request.rate` and `api.request.duration`). This helps in easily aggregating and comparing data.

- Tagging and Grouping: Add tags like `success`, `failure`, or `timeout` to distinguish different outcomes for the same event. This enables grouping related metrics for specific conditions.

- Visualization Tools: Use visualization tools to overlay Meter and Timer graphs on the same dashboard. SigNoz provides its own built-in dashboards and visualizations which can be utilized in this case. This helps in spotting correlations, like increased query rate affecting the duration.

Best Practices for Balancing Granularity and Performance

- Avoid Over-Instrumentation: Too many Meters and Timers can cause excessive overhead, leading to degraded performance. Focus on key areas that impact application behavior.

- Aggregate Similar Events: If multiple endpoints or methods perform similar tasks, aggregate them under a single metric instead of creating separate ones for each. E.g., use one Timer for `db.query.duration` rather than individual Timers for each query.

- Prioritize Key Metrics: Identify which Meters and Timers are most critical to your application's performance and user experience. Focus on those metrics to avoid overwhelming your monitoring system.

Improving Application Performance Using Combined Meter and Timer Data

In this case study, we explore how to enhance application performance monitoring by utilizing both Meters and Timers from Dropwizard's metrics library. The scenario demonstrates tracking request rates and processing durations in a simulated API service.

Scenario

A web application needs to monitor its API performance to ensure it can handle varying loads and to identify potential bottlenecks in request processing. By implementing Meters to track the frequency of API calls and Timers to measure the duration of these requests, developers can gain valuable insights into application behavior and performance.

Here’s an example that purely uses Dropwizard’s metrics library, demonstrating how to use a Meter to track request rates and a Timer to monitor request processing durations.

import com.codahale.metrics.Meter;
import com.codahale.metrics.MetricRegistry;
import com.codahale.metrics.Timer;

public class DropwizardMetricsExample {

    private final Meter requestMeter;
    private final Timer responseTimer;

    public DropwizardMetricsExample(MetricRegistry registry) {
        // Initialize the Meter for tracking request rates
        this.requestMeter = registry.meter("api.request.rate");
        // Initialize the Timer for tracking request processing durations
        this.responseTimer = registry.timer("api.request.duration");
    }

    public String processData() {
        // Mark a new request for the meter
        requestMeter.mark();
        // Start the timer to measure processing duration
        Timer.Context context = responseTimer.time();
        try {
            // Simulate some processing logic (e.g., database access, computation)
            Thread.sleep(200);
            // Return some dummy data
            return "Data processed successfully!";
        } catch (InterruptedException e) {
            // Restore the interrupt flag so callers can still see the interruption
            Thread.currentThread().interrupt();
            return "Error in processing data";
        } finally {
            // Stop the timer to record the duration
            context.stop();
        }
    }

    public static void main(String[] args) {
        // Create a MetricRegistry instance
        MetricRegistry registry = new MetricRegistry();
        // Initialize the DropwizardMetricsExample with the registry
        DropwizardMetricsExample example = new DropwizardMetricsExample(registry);

        // Simulate multiple API requests
        for (int i = 0; i < 5; i++) {
            System.out.println(example.processData());
        }

        // Output metrics after processing
        System.out.println("Total requests: " + example.requestMeter.getCount());
        System.out.println("1-minute rate: " + example.requestMeter.getOneMinuteRate());
        System.out.println("5-minute rate: " + example.requestMeter.getFiveMinuteRate());
        System.out.println("15-minute rate: " + example.requestMeter.getFifteenMinuteRate());
        // The snapshot holds one duration sample (in nanoseconds) per timed request
        System.out.println("Recorded duration samples: " + example.responseTimer.getSnapshot().size());
        System.out.println("Mean request duration (ms): " + example.responseTimer.getSnapshot().getMean() / 1_000_000);
    }
}

This setup effectively tracks request frequency and processing time, helping identify bottlenecks, predict server load, and monitor performance, using Dropwizard's metrics library.

- MetricRegistry registry = new MetricRegistry(): This MetricRegistry instance is used to create and register metrics such as meters and timers.

- requestMeter: Tracks how many requests per second are processed by the application.

- responseTimer: Measures the duration of each request processing.

Inside the processData Method:

- requestMeter.mark(): Marks a new request, increasing the count in the meter.

- Timer.Context context = responseTimer.time(): Starts the timer for the duration of the request processing.

- Thread.sleep(200): Simulates a delay of 200 milliseconds, representing some business logic or external call, like a database query.

- context.stop(): Ensures the timer stops and records the elapsed time, even if an exception occurs.

After simulating a few requests, the metrics are printed:

- Total requests: The count of all requests marked by the meter.

- 1-minute, 5-minute, and 15-minute rates: Exponentially weighted moving averages of the request rate (in requests per second) over these windows.

- Timer statistics: The number of duration samples held in the Timer's snapshot and the mean time each request took to process, converted from nanoseconds to milliseconds.
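The windowed rates printed above are exponentially weighted moving averages. The sketch below shows the general technique for a one-minute window using only the JDK. It is a simplified illustration, not the library's actual implementation: `EwmaSketch` is a hypothetical name, the 5-second tick length is an assumption, and ticks are driven by hand here rather than by a clock.

```java
// Simplified, hypothetical sketch of an exponentially weighted moving
// average (EWMA) of an event rate. Not the DropWizard implementation.
final class EwmaSketch {
    private final double tickSeconds;  // assumed length of one tick
    private final double alpha;        // smoothing factor for the window
    private long uncounted;            // events seen since the last tick
    private double ratePerSecond;      // current smoothed rate
    private boolean initialized;

    EwmaSketch(double windowSeconds, double tickSeconds) {
        this.tickSeconds = tickSeconds;
        this.alpha = 1.0 - Math.exp(-tickSeconds / windowSeconds);
    }

    // Records one event, playing the role that mark() plays for a Meter.
    void mark() {
        uncounted++;
    }

    // Folds the events of the last tick into the smoothed rate.
    void tick() {
        double instantRate = uncounted / tickSeconds;
        uncounted = 0;
        if (initialized) {
            ratePerSecond += alpha * (instantRate - ratePerSecond);
        } else {
            // The first tick seeds the average with the observed rate.
            ratePerSecond = instantRate;
            initialized = true;
        }
    }

    double getRate() {
        return ratePerSecond;
    }

    public static void main(String[] args) {
        EwmaSketch oneMinute = new EwmaSketch(60.0, 5.0);
        for (int i = 0; i < 10; i++) {
            oneMinute.mark();
        }
        System.out.println("before first tick: " + oneMinute.getRate());
        oneMinute.tick(); // 10 events in 5 seconds
        System.out.println("after busy tick: " + oneMinute.getRate());
        oneMinute.tick(); // no events in the next 5 seconds
        System.out.println("after idle tick: " + oneMinute.getRate());
    }
}
```

In this sketch the rate stays at 0.0 until the first tick, jumps to 2.0 events per second after the busy tick, and then decays to roughly 1.84 after an idle tick. A program that runs for only about a second, like the example above, may therefore print 0.0 for the windowed rates even though the total count is correct.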

Integrating DropWizard Metrics with SigNoz for Enhanced Observability

While DropWizard Metrics provides valuable insights, integrating with a comprehensive observability platform like SigNoz can enhance your monitoring capabilities. SigNoz offers advanced analytics and visualization tools that can help you make the most of your DropWizard Metrics data.

Benefits of Combining DropWizard Metrics with SigNoz's Advanced Analytics

Integrating DropWizard Metrics with SigNoz offers several advantages:

- Enhanced Visibility: By visualizing DropWizard metrics within SigNoz, teams can gain deeper insights into application performance, making it easier to identify bottlenecks and areas for improvement.

- Correlated Insights: SigNoz allows for the correlation of metrics collected from DropWizard with logs and traces, providing a holistic view of application behavior. This integration helps teams understand how metrics affect user experience and application reliability.

- Custom Dashboards: Users can create custom dashboards in SigNoz to display specific metrics from DropWizard, such as request rates and processing times. This personalization enables teams to focus on metrics most relevant to their operations.

- Alerting Capabilities: SigNoz supports setting up alerts based on the metrics collected from DropWizard. This proactive monitoring ensures that teams are notified of potential issues before they impact users.

- Historical Analysis: With SigNoz, teams can analyze historical metric data collected from DropWizard, allowing for trend analysis and better capacity planning.

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

Visualizing and Analyzing Meter and Timer Data in SigNoz Dashboards

To effectively visualize and analyze Meter and Timer data in SigNoz, the first step is to integrate DropWizard Metrics with the platform. Below are the steps you need to follow:

- Set Up Your SigNoz Account

Begin by creating an account on SigNoz Cloud. This will allow you to access SigNoz's features for monitoring and analyzing your application metrics.

- Configure DropWizard Metrics

Next, ensure your application is configured to utilize DropWizard Metrics for capturing relevant metrics data.

Add DropWizard Metrics Dependency:

If you are using Maven, add the following dependency to your pom.xml file:

<!-- Dropwizard Core Dependency -->
<dependency>
    <groupId>io.dropwizard</groupId>
    <artifactId>dropwizard-core</artifactId>
    <version>2.1.0</version> <!-- Make sure to use a compatible version -->
</dependency>

<!-- Dropwizard Metrics Core Dependency -->
<dependency>
    <groupId>io.dropwizard.metrics</groupId>
    <artifactId>metrics-core</artifactId>
    <version>4.2.18</version> <!-- Check for the latest version -->
</dependency>

For Gradle, add the following dependencies in your build.gradle file:

implementation 'io.dropwizard:dropwizard-core:2.1.0'

implementation 'io.dropwizard.metrics:metrics-core:4.2.18'

Basic Metrics Setup:

Here’s a basic example of setting up DropWizard Metrics within a Dropwizard application. This example demonstrates tracking the rate of API requests:

import com.codahale.metrics.Meter;
import com.codahale.metrics.MetricRegistry;
import io.dropwizard.Application;
import io.dropwizard.Configuration;
import io.dropwizard.setup.Bootstrap;
import io.dropwizard.setup.Environment;

public class MetricsApplication extends Application<Configuration> {

    private final MetricRegistry metricRegistry = new MetricRegistry();

    @Override
    public void initialize(Bootstrap<Configuration> bootstrap) {
        // Initialize SigNoz integration, if required
    }

    @Override
    public void run(Configuration configuration, Environment environment) {
        // Register the metrics registry with Dropwizard's environment
        environment.metrics().register("api.metrics", metricRegistry);

        // Create a meter for tracking request rates
        Meter requestMeter = metricRegistry.meter("api.request.rate");

        // Simulate a few requests
        for (int i = 0; i < 10; i++) {
            processRequest(requestMeter);
        }

        // Example output
        System.out.println("Total requests recorded: " + requestMeter.getCount());
        System.out.println("Request rate (requests per second): " + requestMeter.getMeanRate());
    }

    private void processRequest(Meter requestMeter) {
        // Increment the meter for each request
        requestMeter.mark();
        try {
            // Simulate processing delay
            Thread.sleep(200);
        } catch (InterruptedException e) {
            // Restore the interrupt flag so callers can still see the interruption
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) throws Exception {
        new MetricsApplication().run(args);
    }
}

This code sets up a Meter within a DropWizard application to track request rates and then simulates some requests. Each call to requestMeter.mark() increments the Meter's count, from which the request rate is derived.

When you run this program, you should see output similar to the following:

BUILD SUCCESSFUL in 3s

2 actionable tasks: 2 executed

5:16:01 pm: Execution finished ':org.example.MetricsApplication.main()'.

- Visualize and Analyze Metrics in SigNoz

Once you have integrated DropWizard Metrics with SigNoz, you can start visualizing and analyzing Meter and Timer data through custom dashboards. Set up alerts based on specific thresholds, and conduct in-depth analyses of your application's performance over time.

For further guidance, refer to our documentation for detailed steps on sending metrics to SigNoz and configuring your environment for optimal observability.

Key Takeaways

- Meters are ideal for measuring event rates and throughput, providing a straightforward way to track the frequency of occurrences in your application.

- Timers combine duration measurement with rate calculation, offering a comprehensive view of both how often and how long operations take.

- Choose Meters for simple event counting and rate tracking, especially for high-frequency events.

- Opt for Timers when you need detailed duration analysis along with rate information.

- Combining Meters and Timers can provide deeper insights into application behavior, especially when correlated. It is beneficial for tracking API performance, database query latency, and error rates, helping identify bottlenecks and optimize performance.

- Integrating with platforms like SigNoz can enhance the value of DropWizard Metrics by providing advanced analytics and visualization capabilities.

FAQs

Can I use Meters and Timers interchangeably?

While Meters and Timers both measure event rates, they're not interchangeable. Meters are specifically designed for measuring the rate of events (e.g., requests per second), while Timers offer additional metrics by measuring the duration of those events. Use Meters for simple rate tracking and Timers when you need both rate and duration data.

How do Meters and Timers affect application performance?

Meters have minimal impact on performance and are suitable for high-frequency events. Timers have a slightly higher overhead due to additional calculations but provide more detailed metrics. In most cases, the impact is negligible, but consider using sampling for extremely high-volume operations.
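If timing every call on a very hot code path proves too costly, one option is to time only every Nth call. The following is a minimal JDK-only sketch of that idea. `SampledTiming`, `sampleEvery`, and the `LongConsumer` sink are hypothetical names for illustration; in a real application the sink could forward the measured duration to a Timer, for example through `Timer.update(duration, TimeUnit.NANOSECONDS)`.

```java
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.LongConsumer;
import java.util.function.Supplier;

// Hypothetical wrapper that measures only every Nth call to keep the
// timing overhead low on very hot code paths.
final class SampledTiming {
    private final long sampleEvery;
    private final LongConsumer durationSinkNanos;
    private final AtomicLong calls = new AtomicLong();

    SampledTiming(long sampleEvery, LongConsumer durationSinkNanos) {
        if (sampleEvery < 1) {
            throw new IllegalArgumentException("sampleEvery must be >= 1");
        }
        this.sampleEvery = sampleEvery;
        this.durationSinkNanos = durationSinkNanos;
    }

    // Runs the operation and records its duration for calls 1, N+1, 2N+1, ...
    <T> T run(Supplier<T> operation) {
        boolean sampled = calls.getAndIncrement() % sampleEvery == 0;
        if (!sampled) {
            return operation.get();
        }
        long start = System.nanoTime();
        try {
            return operation.get();
        } finally {
            // Report the elapsed time even if the operation throws.
            durationSinkNanos.accept(System.nanoTime() - start);
        }
    }

    // Total number of calls seen, sampled or not.
    long totalCalls() {
        return calls.get();
    }
}
```

Only the durations are sampled here. The call count stays exact, so a Meter marked on every call would still report the true request rate, while the sampled durations estimate the latency distribution.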

What's the difference between a Histogram and a Timer in DropWizard Metrics?

A Histogram measures the statistical distribution of values over a stream of data, capturing how often certain value ranges occur. In contrast, a Timer combines the functionality of a Histogram (for measuring durations) with that of a Meter (for measuring rates). Use a Histogram when you need to track the distribution of values alone, and opt for a Timer when you want to measure both the duration of operations and the rate at which they occur.
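Conceptually, a Timer records each duration into a distribution and counts the event at the same time. The JDK-only sketch below illustrates that combination. `TimerSketch` is a hypothetical and heavily simplified stand-in: it stores every sample and tracks only a count, whereas the real Timer keeps a sampling reservoir and moving-average rates.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.Supplier;

// Hypothetical, simplified illustration of "Timer = Histogram + Meter".
// Not the DropWizard implementation.
final class TimerSketch {
    private final List<Long> durationsNanos = new ArrayList<>(); // histogram side
    private long count;                                          // meter side

    // Records one duration and counts one event.
    synchronized void update(long nanos) {
        durationsNanos.add(nanos);
        count++;
    }

    // Times the operation and records the result even if it throws.
    <T> T time(Supplier<T> operation) {
        long start = System.nanoTime();
        try {
            return operation.get();
        } finally {
            update(System.nanoTime() - start);
        }
    }

    synchronized long getCount() {
        return count;
    }

    synchronized double getMeanNanos() {
        return durationsNanos.stream().mapToLong(Long::longValue).average().orElse(0.0);
    }

    // Nearest-rank quantile for q in (0, 1], e.g. 0.5 for the median.
    synchronized long getQuantileNanos(double q) {
        if (durationsNanos.isEmpty()) {
            return 0L;
        }
        List<Long> sorted = new ArrayList<>(durationsNanos);
        Collections.sort(sorted);
        int index = (int) Math.ceil(q * sorted.size()) - 1;
        return sorted.get(Math.max(0, Math.min(index, sorted.size() - 1)));
    }
}
```

A Histogram alone would correspond to the duration list without the time-based view of the count. That is why a Timer is the natural choice when both "how long" and "how often" matter for the same operation.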

How can I reset a Timer or Meter in DropWizard Metrics?

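DropWizard Metrics doesn't provide a built-in method to reset Timers or Meters. If you need to reset metrics, consider replacing the instance (remove the metric from the registry with registry.remove(name) and register a new one under the same name) or using a different metric type like a Counter, whose value you can bring back to zero by decrementing it by its current count. Alternatively, you can keep the value observed at the start of each measurement interval and calculate delta values in your reporting logic.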
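The delta approach can be sketched in plain Java: remember the count seen at the previous report and subtract it from the current one. `CountDelta` and its `since` method are hypothetical names for illustration; the value passed in would come from something like `Meter.getCount()` or `Timer.getCount()`.

```java
// Hypothetical helper that turns an ever-growing count into
// per-interval deltas, so the underlying metric never needs a reset.
final class CountDelta {
    private long previous;

    // Returns how much the count grew since the previous call.
    synchronized long since(long currentCount) {
        long delta = currentCount - previous;
        previous = currentCount;
        return delta;
    }

    public static void main(String[] args) {
        CountDelta requests = new CountDelta();
        System.out.println(requests.since(5));  // first interval
        System.out.println(requests.since(12)); // second interval
        System.out.println(requests.since(12)); // idle interval
    }
}
```

Calling `since` once per reporting interval yields the number of events in that interval while the metric itself keeps its cumulative total.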