How to Delete Time Series Alerts in Prometheus v2

Prometheus is a powerful monitoring and alerting toolkit, but managing its time series alerts can be challenging. You might need to delete specific alerts to maintain system efficiency or remove outdated data. This guide walks you through the process of deleting time series alerts in Prometheus v2, covering everything from prerequisites to best practices.

Understanding Time Series Alerts in Prometheus v2

Time series alerts in Prometheus are predefined rules that continuously evaluate the data collected from various monitored systems. Each alert corresponds to specific metrics and threshold values, enabling users to monitor particular states or values. For example, a user might set an alert for when memory usage consistently exceeds 80% over a five-minute window, indicating potential strain on system resources.

By setting alerting rules, users can define precise thresholds for various metrics—such as CPU usage, memory consumption, or latency—so that whenever a defined condition is met, an alert is triggered. This proactive approach to monitoring helps identify potential issues in systems before they escalate, allowing users to address them promptly.
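As a rough illustration of the memory example above, the sketch below renders a minimal alerting rule file as YAML text. The group name, alert name, and summary are hypothetical, and the expression assumes node_exporter memory metrics are being scraped; adjust all of them to your own setup.

```python
# Sketch: render a Prometheus alerting rule file as YAML text.
# Assumptions: node_exporter metric names; group/alert names are hypothetical.

def render_rule(alert, expr, hold_for, severity, summary):
    """Return a minimal rule file (YAML text) containing one alerting rule."""
    lines = [
        "groups:",
        "  - name: example-alerts",
        "    rules:",
        f"      - alert: {alert}",
        f"        expr: {expr}",
        f"        for: {hold_for}",
        "        labels:",
        f"          severity: {severity}",
        "        annotations:",
        f'          summary: "{summary}"',
    ]
    return "\n".join(lines) + "\n"

# True while memory usage is above 80%; the rule's "for" clause requires
# the condition to hold for five minutes before the alert fires.
MEMORY_EXPR = "(1 - node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes) * 100 > 80"

rule_yaml = render_rule("HighMemoryUsage", MEMORY_EXPR, "5m", "warning",
                        "Memory usage above 80% for 5 minutes")
print(rule_yaml)
```

The `for: 5m` clause is what turns a momentary spike into a "consistently exceeds" condition: the alert stays pending until the expression has been true for the whole window.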

Importance of Managing and Deleting Alerts

While creating alerts is essential, managing and deleting them is equally important. Over time, some alerts may become irrelevant as infrastructure changes or thresholds adjust. Old or redundant alerts can lead to "alert fatigue," where users are overwhelmed with too many notifications, many of which may no longer be useful. Effectively managing and deleting outdated alerts keeps the alerting system streamlined and relevant, ensuring that notifications signal only the issues requiring immediate attention.

Overview of Prometheus v2 Data Storage Structure

Prometheus v2 introduced a new data storage architecture that is designed to improve storage efficiency, especially for high-cardinality data like time series alerts. Key features of this structure include:

- Block Storage: Prometheus v2 organizes data into blocks. New blocks cover two hours of data and are later compacted into larger blocks. Each block holds compressed chunks of samples together with an index and metadata, which reduces the amount of storage needed and speeds up data retrieval, making it suitable for handling large volumes of metrics.

- TSDB (Time Series Database): Prometheus uses a custom Time Series Database optimized for write-heavy, append-only workloads, minimizing the resource cost for ingesting large volumes of data.

- WAL (Write-Ahead Log): The WAL records incoming data points to disk before they're compacted into blocks, ensuring data durability and resilience in case of failures.
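To see the block layout described above, a small script can walk a data directory and read each block's `meta.json`. This is only a sketch: it reads the `minTime` and `maxTime` fields (millisecond timestamps) and ignores everything else, and the demo runs against a throwaway directory with a hypothetical block name rather than a real Prometheus data directory.

```python
import json
import tempfile
from pathlib import Path

def list_blocks(data_dir):
    """Return (block_dir_name, min_time_ms, max_time_ms) for each block found."""
    blocks = []
    for meta_path in sorted(Path(data_dir).glob("*/meta.json")):
        meta = json.loads(meta_path.read_text())
        blocks.append((meta_path.parent.name, meta["minTime"], meta["maxTime"]))
    return blocks

def span_hours(min_time_ms, max_time_ms):
    """Length of a block's time range in hours (timestamps are in milliseconds)."""
    return (max_time_ms - min_time_ms) / 3_600_000

# Demo against a temporary directory laid out like a data directory:
# one block (hypothetical name) plus a wal directory, which has no meta.json.
with tempfile.TemporaryDirectory() as tmp:
    block = Path(tmp) / "01EXAMPLEBLOCK"
    block.mkdir()
    (block / "meta.json").write_text(json.dumps({"minTime": 0, "maxTime": 7_200_000}))
    (Path(tmp) / "wal").mkdir()
    demo_blocks = list_blocks(tmp)

for name, start_ms, end_ms in demo_blocks:
    print(name, span_hours(start_ms, end_ms), "hours")
```

Pointing `list_blocks` at a copy of a real data directory is a quick way to check how far back the stored data reaches before planning a deletion.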

How are Alerts Stored as Time Series Data?

Prometheus treats alerts as special time series data that is generated by evaluating alerting rules on a set frequency. When an alerting rule is triggered:

- Alert Time Series Creation: Prometheus writes a synthetic `ALERTS` time series for each pending or firing alert, carrying the alert's labels plus an `alertstate` label. For instance, an alert for high CPU might have labels such as `alertname="HighCPU"`, `severity="critical"`, and `instance="host1"`. Each sample has the value 1 for as long as the alert is in the indicated state; once the alert is no longer active, the series is marked stale rather than written with a value of 0.

- Continuous Evaluation: The alert's time series data is updated at each evaluation interval, recording state changes as new samples in the time series. This enables both real-time monitoring and historical analysis of alert patterns.

- State Management: Alerts move between inactive, pending, and firing based on the evaluation of alert conditions. The history of pending and firing periods is preserved in the time series database for the configured retention period, enabling analysis of historical alert patterns.

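Because alerts are embedded within the time series data, deleting alerts is not as simple as removing a rule. Removing the rule stops new `ALERTS` samples from being written, but the samples already stored remain until they age out of retention or are deleted explicitly, which requires an understanding of Prometheus's underlying data model to avoid unintended impacts on stored data.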
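To make the data model concrete, the sketch below groups the series from an `ALERTS` instant query by their `alertstate` label. The response is a made-up example shaped like the output of `/api/v1/query`; the label values are illustrative only.

```python
import json

# Illustrative response from GET /api/v1/query?query=ALERTS (values are made up).
SAMPLE = json.loads("""
{"status": "success",
 "data": {"resultType": "vector", "result": [
   {"metric": {"__name__": "ALERTS", "alertname": "HighCPU", "alertstate": "firing",
               "instance": "host1", "severity": "critical"},
    "value": [1700000000, "1"]},
   {"metric": {"__name__": "ALERTS", "alertname": "HighCPU", "alertstate": "pending",
               "instance": "host2", "severity": "critical"},
    "value": [1700000000, "1"]}
 ]}}
""")

def alerts_by_state(response):
    """Group (alertname, instance) pairs by the alertstate label."""
    grouped = {}
    for series in response["data"]["result"]:
        labels = series["metric"]
        grouped.setdefault(labels["alertstate"], []).append(
            (labels["alertname"], labels.get("instance", "")))
    return grouped

print(alerts_by_state(SAMPLE))
```

Each distinct label set is its own series, which is why a single rule can leave many series behind and why deletions are expressed as label matchers.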

Prerequisites for Deleting Time Series Alerts

Before proceeding to delete any alerts in Prometheus, it is crucial to ensure that you meet the following prerequisites. This will help safeguard your data and streamline the deletion process:

Enable the Admin API: The TSDB Admin API must be enabled before you can delete series or call the snapshot API. It is disabled by default; to enable it, add the following command-line flag to your Prometheus startup command:

--web.enable-admin-api

Enabling this API provides access to administrative functions, including deleting time series and creating snapshots, so restrict who can reach it.

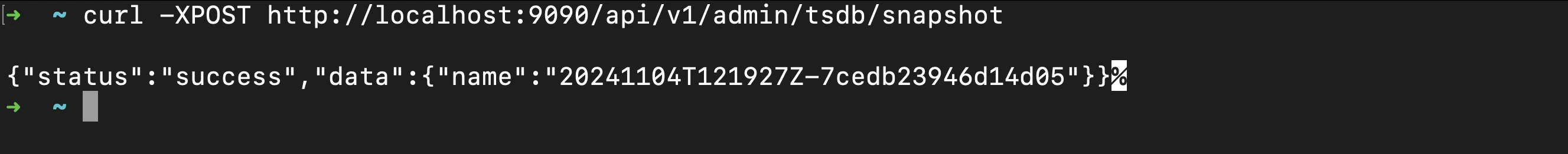

Once enabled you can make administrative requests, for example to trigger a snapshot creation like so:

curl -XPOST http://localhost:9090/api/v1/admin/tsdb/snapshot

Using Prometheus API to create a snapshot of current time series data

Permissions: Ensure you are able to reach the Admin API and are allowed to modify Prometheus data. Prometheus itself does not provide per-user permissions for these endpoints, so access is typically controlled by network rules, a reverse proxy, or basic authentication in front of the server. Without access, you may encounter errors or be unable to perform deletion actions.

Understand Label Matchers: Familiarize yourself with Prometheus label matchers, which are critical for targeting specific alerts accurately. Label matchers allow you to filter alerts based on their labels, ensuring that you delete only the alerts you intend to remove. For example, understanding how to use operators like `=`, `!=`, `=~`, and `!~` will enable you to specify which alerts to delete more precisely.

Backup Your Data: Always create a backup of your Prometheus data before performing any deletions. This is a crucial step to prevent data loss in case you need to restore alerts later. A TSDB snapshot (see the Admin API request above) gives you a restorable copy; the following command additionally dumps the stored samples so you can inspect them:

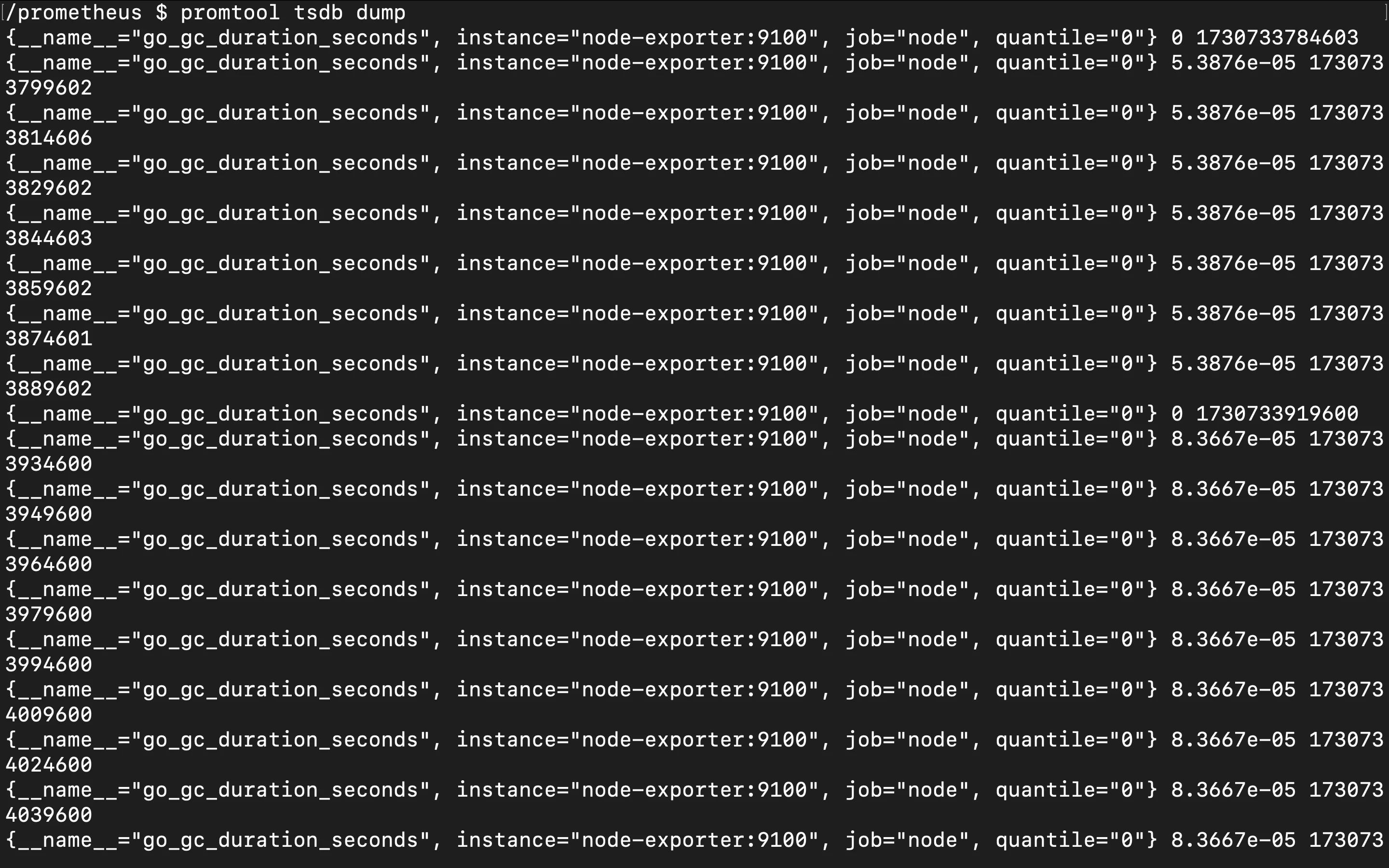

promtool tsdb dump <data-directory>

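Extracting Prometheus time series data using `promtool tsdb dump`

This command prints the stored samples to standard output as text. That is useful for inspecting what you are about to delete, but it is not a restorable backup. For a backup you can restore from, use the snapshot endpoint shown earlier, which writes a copy of the current data under the `snapshots` directory inside the Prometheus data directory. With a snapshot in place, you can manage your alerts knowing you have a safeguard.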
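A snapshot-based backup could be scripted roughly as follows. This is a sketch, not a hardened tool: the base URL and data directory are assumptions about your deployment, and `create_snapshot` is only defined here, not called, because it needs a running Prometheus with the Admin API enabled.

```python
import json
import urllib.request
from pathlib import PurePosixPath

def snapshot_request(base_url):
    """Build the POST request for the snapshot endpoint."""
    return urllib.request.Request(f"{base_url}/api/v1/admin/tsdb/snapshot", method="POST")

def snapshot_dir(data_dir, response_body):
    """Work out where the snapshot was written from the API response body."""
    name = json.loads(response_body)["data"]["name"]
    return str(PurePosixPath(data_dir) / "snapshots" / name)

def create_snapshot(base_url, data_dir, timeout=60):
    """Trigger a snapshot and return its directory (requires a live server)."""
    with urllib.request.urlopen(snapshot_request(base_url), timeout=timeout) as resp:
        return snapshot_dir(data_dir, resp.read())

# Response shape as shown in the Prometheus HTTP API docs; the name is an example.
EXAMPLE_BODY = b'{"status":"success","data":{"name":"20171210T211224Z-2be650b6d019eb54"}}'
print(snapshot_dir("/var/lib/prometheus", EXAMPLE_BODY))
```

Copying the returned directory somewhere outside the data directory before deleting anything gives you a copy you can start a Prometheus instance against if you need the old data back.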

Step-by-Step Guide: Deleting Time Series Alerts

Deleting time series alerts in Prometheus requires careful execution to ensure accuracy and avoid unintended data loss. Follow these steps to safely delete alerts:

- Identify Alerts:

Before deleting alerts, you need to precisely identify which alerts you want to remove. Prometheus provides multiple ways to locate and verify alerts:

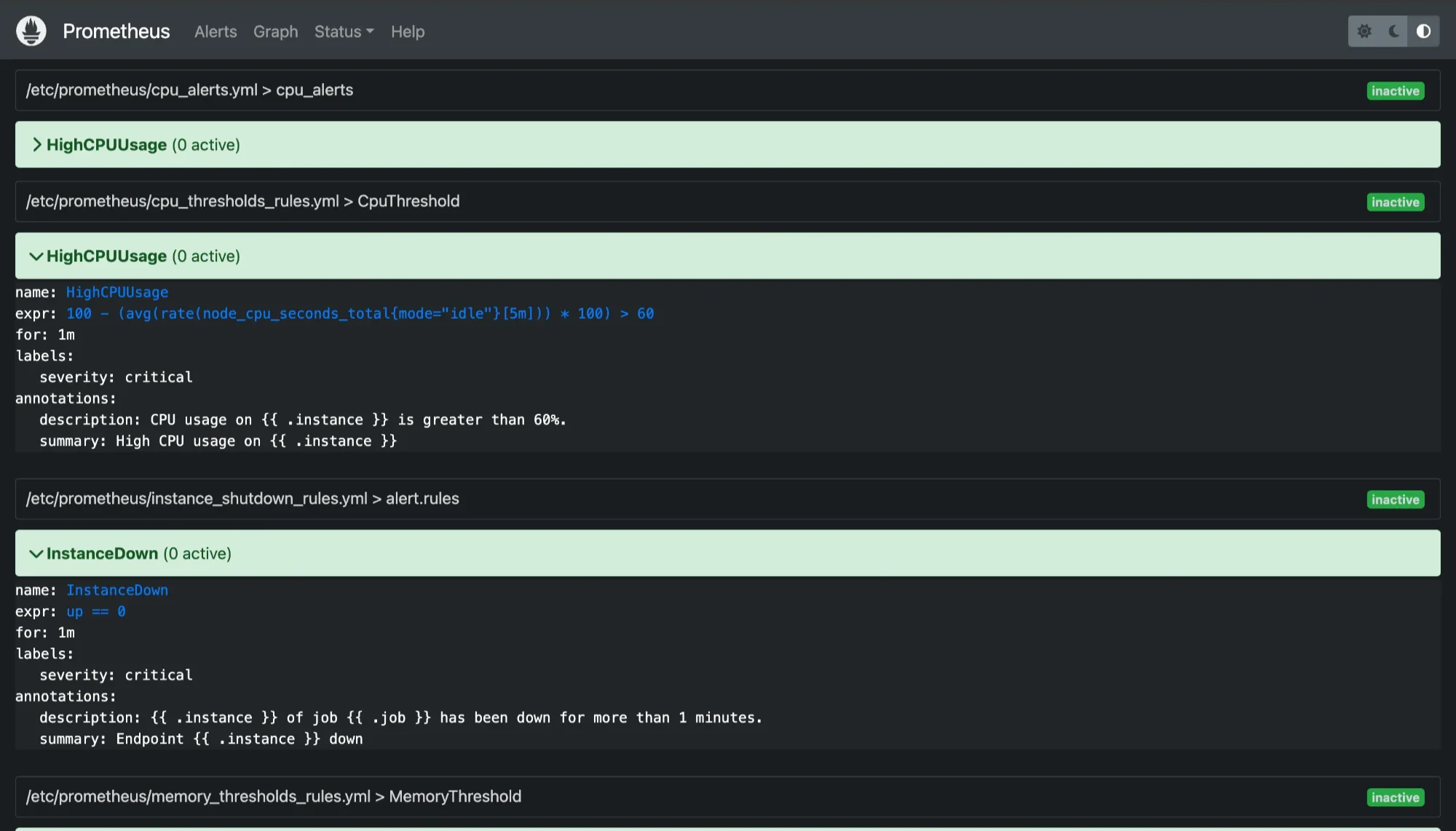

Using the Prometheus UI: Navigate to the Alerts page (typically at `/alerts`) in your Prometheus web interface. This provides a visual overview of all configured alerts and their current states.

Using PromQL Queries: You can use label matchers in PromQL (Prometheus Query Language) to identify specific alerts. Label matchers let you precisely target alerts based on their labels, such as `alertname`, `severity`, or `instance`.

To query for a specific alert by name:

ALERTS{alertname="YourAlertName"}

To query with multiple label matchers:

ALERTS{alertname="YourAlertName", severity="critical", instance="instance-name"}

For example, to locate an alert with the name "HighCPUUsage," you can use the following query:

ALERTS{alertname="HighCPUUsage"}

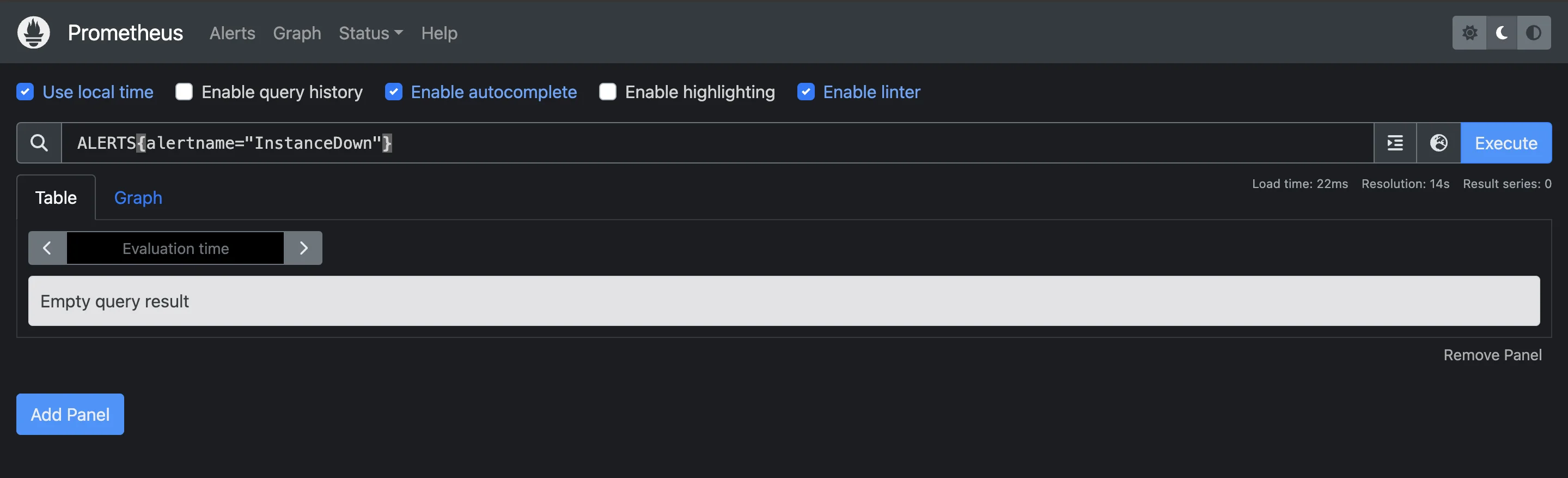

Prometheus Alerts Page

This query will display any active time series associated with the "HighCPUUsage" alert, allowing you to confirm the alerts you want to delete. Use other label matchers as needed to narrow down results, especially when dealing with multiple alerts with similar names.
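If you prefer to run the identification query from a script rather than the UI, the request URL for the HTTP query API can be built as sketched here. The base URL is an assumption; the selector is the one from the example above.

```python
from urllib.parse import urlencode

def instant_query_url(base_url, promql):
    """Build a URL for the /api/v1/query endpoint with the expression URL-encoded."""
    return f"{base_url}/api/v1/query?" + urlencode({"query": promql})

url = instant_query_url("http://localhost:9090", 'ALERTS{alertname="HighCPUUsage"}')
print(url)
```

Encoding the expression matters because braces, quotes, and equals signs in a selector are not safe to place in a URL as-is.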

- Construct the API Request:

Once you've identified the alerts, construct a POST request to Prometheus's Admin API. The Admin API endpoint for deleting time series is `/api/v1/admin/tsdb/delete_series`. This endpoint requires you to specify the time series you wish to delete using a `match[]` parameter, which accepts series selectors with label matchers to target specific alerts.

Here's the basic structure of the request:

POST /api/v1/admin/tsdb/delete_series

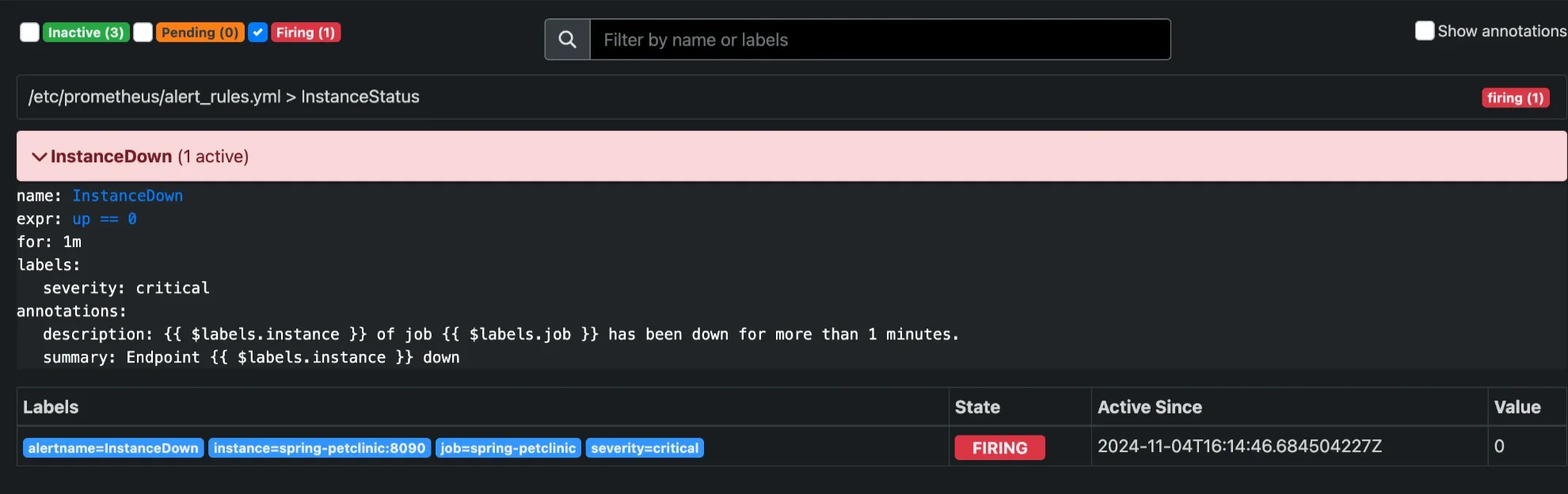

For example, to delete only the "InstanceDown" alert, use:

POST /api/v1/admin/tsdb/delete_series?match[]=ALERTS{alertname="InstanceDown"}

- Execute the Deletion:

To send the delete request, you can use curl or any other HTTP client tool capable of making POST requests. The example below shows how to execute the request with curl. Replace `http://localhost:9090` with the address of your Prometheus server if it's hosted on a different URL:

curl -X POST http://localhost:9090/api/v1/admin/tsdb/delete_series \
  -d 'match[]=ALERTS{alertname="InstanceDown"}'

In this command:

- The `-X POST` option specifies the HTTP POST method.

- The `-d` option is used to send the `match[]` parameter, identifying the alert series you want to delete.

Make sure the Admin API is enabled and that you have sufficient permissions to perform this action; otherwise, you may encounter access errors.
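The same request can be sent from a script. The sketch below mirrors the curl command using only the Python standard library; the base URL is an assumption, and `delete_series` is defined but not called here because it needs a live server with the Admin API enabled.

```python
import urllib.request
from urllib.parse import urlencode

def delete_series_request(base_url, selector):
    """Build a POST request that carries match[] as a form-encoded body."""
    body = urlencode({"match[]": selector}).encode()
    return urllib.request.Request(
        f"{base_url}/api/v1/admin/tsdb/delete_series",
        data=body,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )

def delete_series(base_url, selector, timeout=30):
    """Send the request and return the HTTP status.

    urllib raises urllib.error.HTTPError for 4xx/5xx responses.
    """
    request = delete_series_request(base_url, selector)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return resp.status

req = delete_series_request("http://localhost:9090", 'ALERTS{alertname="InstanceDown"}')
print(req.get_method(), req.full_url)
print(req.data.decode())
```

Building the request separately from sending it makes it easy to log or review exactly what will be deleted before anything is sent.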

- Verify Deletion:

After executing the deletion request, it’s essential to verify that the specified alerts have been successfully removed from Prometheus. Run the same PromQL query you used in Step 1 to check if the alert series still exists.

ALERTS{alertname="InstanceDown"}

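If the deletion was successful, this query should return no results for the specified alert name. This confirms that the alert data has been removed from the Prometheus database. If the alert still appears in your query results, double-check the API request parameters and try executing the delete command again. Also keep in mind that if the alerting rule is still loaded and its condition holds, Prometheus writes new `ALERTS` samples at the next rule evaluation, so the series will reappear until the rule itself is removed or the alert resolves.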

Using the TSDB Admin API

The TSDB (Time Series Database) Admin API in Prometheus is a powerful tool that allows users to manage time series data effectively. It provides several endpoints to perform administrative functions such as deleting time series data and cleaning up stale entries. Understanding how to use these endpoints is essential for maintaining an efficient monitoring system.

TSDB Admin API Endpoints

The TSDB Admin API offers two primary endpoints for managing time series data:

`/api/v1/admin/tsdb/delete_series`: This endpoint is used to delete time series data that match the provided selectors. By using label matchers, you can specify which series to remove based on their associated labels. This is useful for cleaning up alerts that are no longer relevant or needed.

`/api/v1/admin/tsdb/clean_tombstones`: After a delete request, Prometheus marks the data as deleted but does not immediately remove it from disk. The deleted ranges are recorded as "tombstones" (markers indicating deleted data), and the data itself is removed during later compactions. The `clean_tombstones` endpoint removes the deleted data from disk right away and cleans up the existing tombstones, freeing up disk space.
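The two endpoints are typically used one after the other. A minimal sketch of that sequence, assuming a local server address: `purge_requests` only builds the requests, while `purge` (not called here) would send them in order against a live server.

```python
import urllib.request
from urllib.parse import urlencode

def purge_requests(base_url, selector):
    """Return the delete_series request followed by the clean_tombstones request."""
    admin = f"{base_url}/api/v1/admin/tsdb"
    delete = urllib.request.Request(
        f"{admin}/delete_series?" + urlencode({"match[]": selector}), method="POST")
    clean = urllib.request.Request(f"{admin}/clean_tombstones", method="POST")
    return [delete, clean]

def purge(base_url, selector, timeout=120):
    """Delete matching series, then reclaim the disk space (requires a live server)."""
    for request in purge_requests(base_url, selector):
        with urllib.request.urlopen(request, timeout=timeout) as resp:
            if resp.status != 204:
                raise RuntimeError(f"{request.full_url} returned HTTP {resp.status}")

requests = purge_requests("http://localhost:9090", 'ALERTS{alertname="InstanceDown"}')
for request in requests:
    print(request.get_method(), request.full_url)
```

Cleaning tombstones rewrites the affected blocks, so on a large database it may be worth running the second step during a quiet period.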

Syntax for Constructing Deletion Queries

When constructing deletion queries, it is crucial to use label matchers correctly to target specific alerts. The syntax for the delete series query requires the use of the match[] parameter, which specifies the labels to filter on.

For example, if you want to delete all series related to critical alerts from a specific instance, your query would look like this:

match[]=ALERTS{severity="critical",instance="server01:9100"}

This query effectively targets alerts that have a severity label set to "critical" and are coming from the instance "server01:9100." By using label matchers, you can ensure that you delete only the alerts you intend to remove, minimizing the risk of unintentionally affecting other data.
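When selectors are assembled by a script, a small helper can reduce quoting mistakes. The function below is a hypothetical convenience, not part of any Prometheus client library: it accepts `(label, operator, value)` triples, rejects unknown operators, and escapes backslashes and double quotes in values.

```python
def selector(metric, *matchers):
    """Build a series selector from (label, operator, value) triples.

    Supported operators are the four PromQL matchers: =, !=, =~, !~.
    """
    allowed = {"=", "!=", "=~", "!~"}
    parts = []
    for label, op, value in matchers:
        if op not in allowed:
            raise ValueError(f"unsupported matcher operator: {op!r}")
        # Escape characters that would otherwise end or alter the quoted string.
        escaped = value.replace("\\", "\\\\").replace('"', '\\"')
        parts.append(f'{label}{op}"{escaped}"')
    return f"{metric}{{{','.join(parts)}}}"

exact = selector("ALERTS", ("severity", "=", "critical"), ("instance", "=", "server01:9100"))
regex = selector("ALERTS", ("alertname", "=~", "High.*"), ("severity", "!=", "info"))
print(exact)
print(regex)
```

Regex matchers such as `=~` are fully anchored in Prometheus, so `High.*` matches names that start with "High" rather than names that merely contain it.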

Examples of Common Deletion Scenarios

Here are a few common scenarios where you might need to use the TSDB Admin API for deletion:

Deleting a Specific Alert: If you have an alert named "HighMemoryUsage" that you want to remove, your request would be structured as follows:

match[]=ALERTS{alertname="HighMemoryUsage"}

Bulk Deleting Alerts Based on Severity: If you want to delete all alerts with a specific severity level, such as "warning," the request would look like this:

match[]=ALERTS{severity="warning"}

Deleting Alerts from a Specific Instance: To delete alerts related to a specific server, use a query like this:

match[]=ALERTS{instance="server02:9100"}

Each of these examples highlights how to construct deletion requests that are precise and targeted, which is vital for maintaining an organized monitoring environment.
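Before sending any of these deletions, it can help to preview which series a selector matches. One way to do that, sketched here, is the read-only `/api/v1/series` endpoint, which accepts the same `match[]` parameter. The base URL and the sample response are assumptions for illustration; depending on your Prometheus version you may also want to pass `start` and `end`.

```python
from urllib.parse import urlencode

def preview_url(base_url, selector):
    """Build a read-only /api/v1/series URL for the given selector."""
    return f"{base_url}/api/v1/series?" + urlencode({"match[]": selector})

def describe_matches(series_response):
    """Turn the decoded JSON from /api/v1/series into sorted label summaries."""
    return sorted(
        ",".join(f"{key}={value}" for key, value in sorted(labels.items())
                 if key != "__name__")
        for labels in series_response["data"]
    )

# Made-up response with one matching series.
SAMPLE = {"status": "success", "data": [
    {"__name__": "ALERTS", "alertname": "HighMemoryUsage", "alertstate": "firing",
     "instance": "server02:9100", "severity": "warning"},
]}

print(preview_url("http://localhost:9090", 'ALERTS{instance="server02:9100"}'))
print(describe_matches(SAMPLE))
```

If the preview lists more series than expected, tighten the matchers before reusing the selector in a delete request.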

Handling Errors and Troubleshooting

When using the TSDB Admin API, it’s important to handle potential errors effectively. The API will return responses that indicate whether the deletion was successful or if there were issues. Here are some common error handling strategies:

- Check API Responses: Always inspect the API response after sending a deletion request. A successful deletion returns HTTP status 204 with an empty body, while errors return a JSON body that gives the reason for the failure.

- Verify Query Syntax: If you encounter issues, ensure that your query syntax is correct. Incorrect label matchers or typos in the query can lead to unexpected results or errors.

- API Access: Ensure that the Admin API is enabled (`--web.enable-admin-api`) and that your client is allowed to reach it. Access is usually enforced outside Prometheus, for example by a reverse proxy, firewall rules, or basic authentication, so if your requests are rejected, consult with your system administrator to confirm your access rights.
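The checks above could be folded into a small helper that turns a response into a readable outcome. This is a sketch: the error body follows the general JSON error shape of the Prometheus HTTP API (`status`, `errorType`, `error`), and the sample values are illustrative.

```python
import json

def interpret_delete_response(status_code, body):
    """Summarize the outcome of a delete_series call as a short string."""
    if status_code == 204:
        return "deleted"
    try:
        payload = json.loads(body)
    except (TypeError, ValueError):
        payload = {}  # body was empty or not JSON (e.g. a proxy error page)
    if not isinstance(payload, dict):
        payload = {}
    error_type = payload.get("errorType", "unknown")
    message = payload.get("error", "no error message in response body")
    return f"failed (HTTP {status_code}, {error_type}): {message}"

# Illustrative error body for a request sent without a match[] parameter.
SAMPLE_ERROR = b'{"status":"error","errorType":"bad_data","error":"no match[] parameter provided"}'

print(interpret_delete_response(204, b""))
print(interpret_delete_response(400, SAMPLE_ERROR))
print(interpret_delete_response(502, b"<html>Bad Gateway</html>"))
```

Handling the non-JSON case separately is useful when a proxy sits in front of Prometheus, since its error pages will not follow the API's format.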

Best Practices for Managing Prometheus Alerts

Effectively managing alerts in Prometheus is crucial for maintaining an efficient monitoring system. Here are some best practices to help you manage your Prometheus alerts effectively:

Regular Audits: Conducting regular audits of your alerts is a fundamental practice in managing your monitoring system. This involves systematically reviewing the alerts you have configured to identify any that may be outdated or unnecessary.

Steps to Perform Regular Audits:

- Schedule Periodic Reviews: Set a routine, such as monthly or quarterly, to examine your alerts.

- Engage Your Team: Collaborate with your team to discuss the relevance of each alert. This collaboration can provide insights into which alerts are useful and which are no longer applicable.

- Eliminate Redundant Alerts: Remove alerts that trigger frequently but provide little actionable information. Keeping only meaningful alerts ensures your team can focus on critical notifications.

Alert Retention Policies: Implementing alert retention policies is essential for managing the lifespan of alerts. This practice helps you maintain a clean and organized alerting system, making it easier to navigate and respond to active alerts.

Creating Alert Retention Policies:

- Define Expiration Criteria: Decide how long alerts should be retained. For instance, you might choose to expire alerts after 30 days if they haven’t triggered.

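- Automate Expiration: Stored alert series age out automatically with the TSDB retention setting (`--storage.tsdb.retention.time`); for anything that should go sooner, use scripts to automate the expiration of old alerts. This helps maintain a manageable number of alerts and reduces clutter in your monitoring dashboard.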
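One way to script a 30-day policy for stored alert series is to pass an `end` timestamp to `delete_series`, so that only samples older than the cutoff are removed. The sketch below just computes the cutoff and the request parameters; the 30-day window is the example from the text, and the selector would be whatever your policy targets.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import urlencode

def retention_cutoff(now, days):
    """Return the RFC 3339 timestamp that lies `days` before `now` (UTC)."""
    cutoff = now.astimezone(timezone.utc) - timedelta(days=days)
    return cutoff.strftime("%Y-%m-%dT%H:%M:%SZ")

def expire_params(selector, now, days):
    """Form parameters for delete_series limited to samples before the cutoff."""
    return urlencode([("match[]", selector), ("end", retention_cutoff(now, days))])

now = datetime(2024, 3, 31, 12, 0, tzinfo=timezone.utc)
print(retention_cutoff(now, 30))
print(expire_params("ALERTS", now, 30))
```

Because the cutoff is computed from an explicit `now`, the same function can be dry-run for any date before it is wired into a scheduled job.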

Automation: Automation can significantly streamline your alert management processes. By leveraging scripts and tools, you can automate repetitive tasks associated with alerts, which can save time and reduce errors.

Ways to Automate Alert Management:

- Use Alerting Rules: Take advantage of Prometheus’ built-in alerting rules to automate the creation and modification of alerts.

- Scripting: Develop scripts in programming languages like Python or Bash to handle routine tasks, such as updating alert configurations or cleaning up obsolete alerts.

- Configuration as Code: Consider using tools like Terraform to manage your alert configurations as code. This allows for version control and easier updates.
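As one example of a cleanup script, the sketch below finds alert names that still have stored series but no longer have a rule. Both inputs are hypothetical here: in practice the rule names would come from your rule files and the label sets from a series query.

```python
def orphaned_alertnames(rule_names, series_labels):
    """Alert names that still have stored series but no matching rule."""
    stored = {labels["alertname"] for labels in series_labels if "alertname" in labels}
    return sorted(stored - set(rule_names))

# Hypothetical inputs for illustration.
current_rules = ["HighCPUUsage", "InstanceDown"]
stored_series = [
    {"alertname": "HighCPUUsage", "instance": "server01:9100"},
    {"alertname": "DiskAlmostFull", "instance": "server02:9100"},
    {"alertname": "DiskAlmostFull", "instance": "server03:9100"},
]

orphans = orphaned_alertnames(current_rules, stored_series)
print(orphans)
```

The resulting names are candidates for review rather than automatic deletion, since historical alert data may still be wanted for analysis.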

Performance Monitoring: Monitoring the performance of your alerting system is essential, especially after making changes such as deleting or modifying alerts. Keeping track of performance metrics helps ensure that your system runs efficiently and effectively.

Monitoring Performance:

- Track Key Metrics: Use Prometheus metrics to monitor query performance and system resource utilization. This can include metrics like query response times and CPU usage.

- Evaluate Changes: After any modifications to alerts, evaluate the impact on system performance. If you notice a degradation in performance, revisit your alerting rules to optimize them for better efficiency.

Alternative Approaches to Alert Management

When managing alerts in Prometheus, outright deletion is not always the best option. Instead, there are several alternative methods you can use to effectively manage alerts without losing important data. These methods not only help in organizing alerts better but also allow more control over your monitoring system.

- Recording Rules: Recording rules in Prometheus allow you to precompute frequently used queries and store their results as new time series. This can be especially useful for alerts, as you can aggregate multiple related alerts into a single time series. By doing this, you reduce the overall number of individual alerts, simplifying the system and improving performance. Rather than deleting alerts, you can use recording rules to compress or group them for easier management. For more information on using recording rules in Prometheus, refer to this detailed blog post.

- Alert Silencing: Instead of deleting alerts, you can silence them temporarily using Prometheus' Alertmanager. Silencing is a powerful feature that allows you to mute non-critical alerts during specific time periods, such as during maintenance windows or when you know the alert is irrelevant for the moment. This prevents the alert from triggering unnecessarily while keeping it in the system for future use.

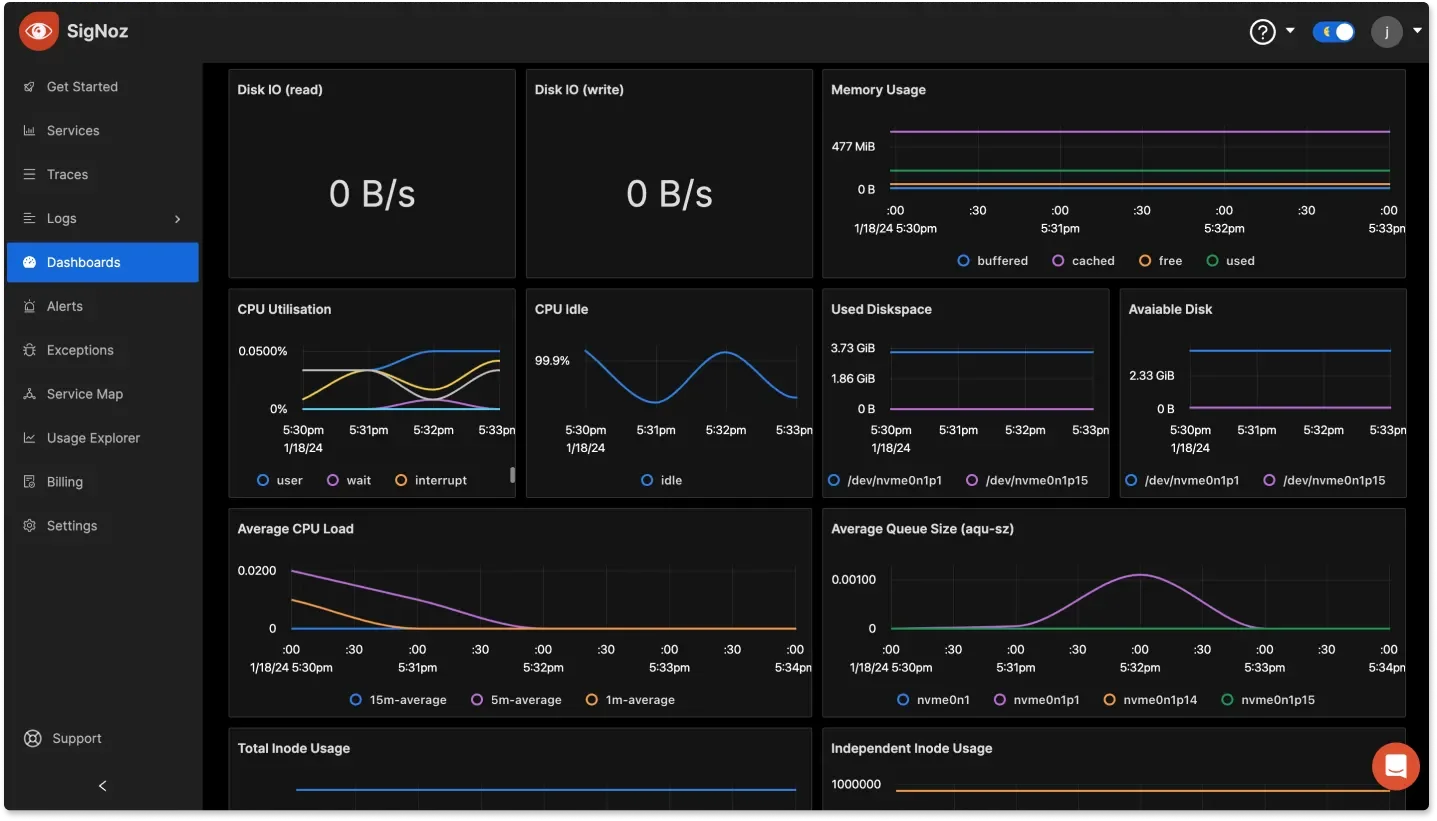

- External Tools: While Prometheus is great for metrics and alerting, managing alerts through its native interface can become complex over time, especially for large-scale systems. External tools like Grafana or SigNoz offer more intuitive, user-friendly interfaces for managing and visualizing alerts. With these tools, you can easily configure, view, and manage your alerts in a more structured manner. SigNoz, in particular, integrates with Prometheus but provides extended observability features, including easier management of alerts and metrics dashboards.

- Time-Based Auto-Expiration: For certain types of alerts, relying on time-based expiration can help keep your system clean without manual deletions. Prometheus does not offer a per-alert expiry setting, but stored alert series are removed automatically once they fall outside the configured retention period (`--storage.tsdb.retention.time`), and Alertmanager silences expire at their end time. This is particularly useful for alerts that are relevant only for a short duration, such as alerts related to temporary spikes in usage.
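The silencing option above can also be scripted. The sketch below builds the JSON body for creating a silence through Alertmanager's v2 API (`POST /api/v2/silences`); the alert name, author, comment, and maintenance window are placeholder values.

```python
import json
from datetime import datetime, timedelta, timezone

def silence_payload(alertname, start, hours, created_by, comment):
    """Build the request body for creating an Alertmanager silence."""
    fmt = "%Y-%m-%dT%H:%M:%SZ"
    start_utc = start.astimezone(timezone.utc)
    return {
        "matchers": [{"name": "alertname", "value": alertname, "isRegex": False}],
        "startsAt": start_utc.strftime(fmt),
        "endsAt": (start_utc + timedelta(hours=hours)).strftime(fmt),
        "createdBy": created_by,
        "comment": comment,
    }

# Placeholder values: a two-hour maintenance window.
window_start = datetime(2024, 1, 1, 22, 0, tzinfo=timezone.utc)
payload = silence_payload("HighCPUUsage", window_start, 2, "ops-team", "planned maintenance")
print(json.dumps(payload, indent=2))
```

Because a silence carries its own end time, it lapses without any follow-up action, which makes it a safer choice than deletion for temporary noise.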

Monitoring Alert Deletions with SigNoz

To effectively monitor your applications and systems, leveraging an advanced observability platform like SigNoz can elevate your monitoring strategy. SigNoz is an open-source observability tool that provides end-to-end monitoring, troubleshooting, and alerting capabilities across your entire application stack.

Built on OpenTelemetry, the emerging industry standard for telemetry data, SigNoz integrates with your existing Prometheus setup. Using the OpenTelemetry Collector’s Prometheus receiver, SigNoz ingests Prometheus metrics and correlates them with distributed traces and logs, delivering a unified observability solution. This integration offers:

- Enhanced Monitoring of Alert Deletions: Maintain your existing Prometheus setup while using SigNoz to monitor alert deletions specifically, tracking any changes or trends in alert configuration and understanding their impact on overall observability.

- Improved Root Cause Analysis: Correlate Prometheus metrics with traces and logs in SigNoz to pinpoint issues caused by alert deletions. This helps you quickly identify unintended consequences or performance issues following the removal of specific alerts.

- Detailed Analysis of Alerting Gaps: Utilize both PromQL and SigNoz’s advanced query builder to analyze potential gaps left by deleted alerts. This enables you to assess whether alert deletions may leave certain metrics unmonitored, ensuring coverage across your monitoring system.

- Centralized Dashboards for Alert Management: Create unified dashboards in SigNoz that display metrics, traces, and logs in real time, allowing you to oversee alert deletions alongside key performance indicators. This visibility helps you proactively monitor the effects of alert changes across your infrastructure.

For more information on monitoring alert deletions with SigNoz, refer to the Alert Management documentation.

Key Takeaways

- Prometheus offers an Admin API to manage alerts and time series data, but it’s important to understand how it works before using it. Deleting alerts is a permanent action, so always proceed with caution.

- By default, the Admin API is disabled for security reasons. Before using it, you’ll need to enable it, but ensure you have proper security measures in place. Leaving the API unsecured could expose your system to unauthorized access.

- When deleting alerts, it’s crucial to specify exactly what you want to delete. Prometheus uses label matchers to help you filter data. Be sure to define these filters accurately to avoid accidentally deleting important metrics or alerts.

- Keeping your alerting system clean and organized is essential for maintaining performance. Periodic audits of your alerts—such as monthly or quarterly cleanups—can help prevent performance slowdowns and unnecessary resource use.

- While Prometheus is excellent for metrics collection and alerting, managing complex alerting setups can become challenging. Tools like SigNoz offer a more comprehensive solution for managing alerts, providing enhanced observability features and easier alert management.

FAQs

Can I recover deleted time series alerts in Prometheus v2?

This depends on what was deleted:

- Alert Rules: Yes, these can be recovered if they are stored in version-controlled configuration files or backups.

- Alert Metrics (e.g., `ALERTS` and `ALERTS_FOR_STATE`): No, once deleted, these time series metrics cannot be recovered unless a TSDB snapshot backup exists. However, new alert metrics will be generated automatically when Prometheus re-evaluates the alert rules.

Always ensure you have proper backups in place before deleting time series alerts, especially if the data is critical.

How often should I delete old or unused alerts?

The frequency of alert deletions depends on how you manage and use your monitoring system. As a general guideline, you should review and clean up old or unused alerts on a monthly or quarterly basis. This keeps your monitoring environment organized and avoids unnecessary clutter.

Does deleting alerts affect Prometheus' performance?

Yes, deleting a large number of alerts can temporarily impact the performance of Prometheus, particularly in terms of query speeds. When a significant deletion occurs, it’s best to keep an eye on your system’s performance to ensure everything runs smoothly afterward.

Are there any risks associated with deleting time series alerts?

Yes, the primary risks include accidentally deleting important data and facing a temporary performance slowdown after a large deletion. To minimize risks:

- Double-check your deletion queries.

- Schedule deletions during off-peak or low-traffic periods.

- Make sure critical data is backed up to avoid any accidental loss.

By following these best practices, you can manage your Prometheus alerts effectively and safely.