How Does Prometheus Work?

Prometheus works by scraping metrics from configured endpoints, called targets, at specified intervals. These targets include applications, servers, databases, and more. They expose their metrics in a format Prometheus understands. Once Prometheus pulls metrics from these endpoints, it stores them for querying and retrieval when needed.

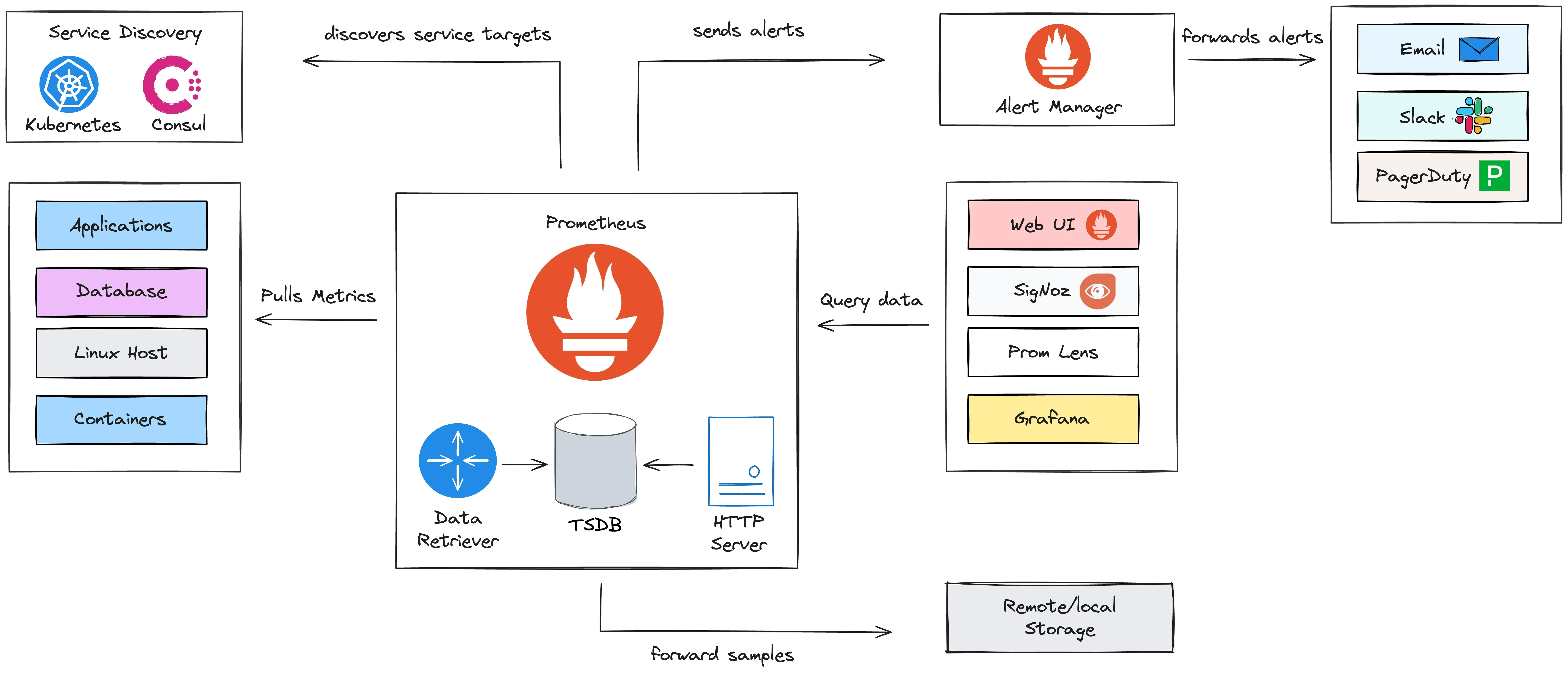

The architecture diagram above provides a summary of how Prometheus works as a monitoring tool.

The Prometheus Server

Prometheus has a core component called the Prometheus server, which does the main monitoring work. This server is composed of three parts:

- Time Series Database: This stores the collected metrics data (e.g., CPU usage, request latency) in a highly efficient format optimized for time-based queries.

- Data Retriever (Scraper): This worker actively pulls metrics from various sources, including applications, databases, servers, and containers. It then sends the collected data to the time series database for storage.

- HTTP Server API: This exposes the stored metrics data for querying, so users can retrieve and visualize metrics through tools like the Prometheus web UI, SigNoz, or Grafana.

Metrics Collection

Prometheus collects metrics by periodically scraping targets. A target is any system or service that exposes metrics via an HTTP endpoint. Each target provides units of monitoring specific to its domain. For instance, in an application, these might include request latency or number of exceptions, while for a server, they could be CPU usage or memory consumption. These units are known as metrics.

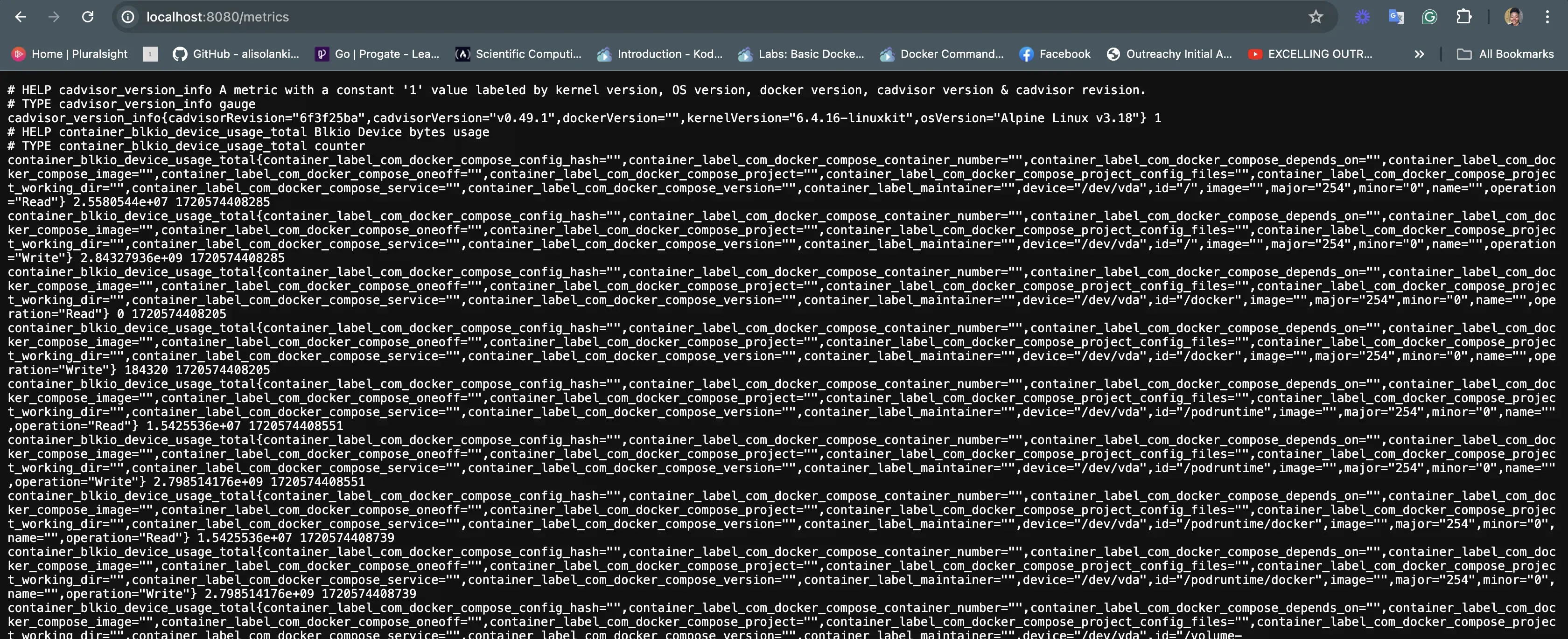

For Prometheus to pull metrics from a target, the target must expose a specific HTTP endpoint, typically at the /metrics path. The endpoint address follows the format hostaddress/metrics. This endpoint provides the current state of the target in a plain text format that Prometheus can understand.
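To make the plain text format more concrete, here is a minimal sketch of what a /metrics response can look like and how it breaks down into a metric name, labels, and a value. The metric names in the sample payload are hypothetical, and the parser is deliberately simplified: it ignores escaping, timestamps, and other details of the real format.

```python
import re

# Hypothetical payload a target might return from its /metrics endpoint.
SAMPLE = """\
# HELP http_requests_total Total HTTP requests handled.
# TYPE http_requests_total counter
http_requests_total{method="get",code="200"} 1027
http_requests_total{method="post",code="500"} 3
process_cpu_seconds_total 12.5
"""

# name, optional {labels}, then the sample value
LINE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def parse_metrics(text):
    """Return (name, labels, value) tuples; simplified, ignores escapes and timestamps."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and the HELP/TYPE comment lines.
        if not line or line.startswith("#"):
            continue
        match = LINE_RE.match(line)
        if match is None:
            continue
        name, raw_labels, value = match.groups()
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        samples.append((name, labels, float(value)))
    return samples
```

Calling parse_metrics(SAMPLE) yields one tuple per sample line, which is roughly the information Prometheus extracts from a target on every scrape.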

Exporters

While some systems expose Prometheus endpoints by default, others don’t and require an exporter. An exporter is a service that retrieves metrics from targets and transforms them into a format compatible with Prometheus. These converted metrics are then exposed at the exporter's /metrics endpoint, where Prometheus can scrape them.

Prometheus has exporters for different services, including MySQL, Elasticsearch, and others. It also provides client libraries that applications can use to expose their metrics in a Prometheus-readable format.
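The exporter idea can be sketched in a few lines of Python. This is an illustration only, not how any real exporter is implemented: fetch_service_stats, the demo_db prefix, the stat names, and port 9999 are all made up for the example. The sketch reads stats in a system's native shape, translates them into the text format, and serves them at /metrics.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def fetch_service_stats():
    """Stand-in for querying the monitored system (hypothetical values)."""
    return {"connections": 42, "slow_queries": 7}


def render_exposition(stats, prefix="demo_db"):
    """Translate a native stats dict into Prometheus text exposition lines."""
    lines = []
    for key in sorted(stats):
        metric = f"{prefix}_{key}"
        lines.append(f"# TYPE {metric} gauge")
        lines.append(f"{metric} {stats[key]}")
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Only the /metrics path is served; everything else is a 404.
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_exposition(fetch_service_stats()).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=9999):
    """Block and serve /metrics; call this manually to try the exporter."""
    HTTPServer(("", port), MetricsHandler).serve_forever()
```

In practice you would normally reach for an existing exporter or an official client library rather than hand-rolling the format, but the flow is the same: collect, convert, expose.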

Scrape Config File

Prometheus has a configuration file, usually named prometheus.yml, that defines which targets to scrape and how often. For example:

    scrape_configs:
      - job_name: 'my-app'
        scrape_interval: 5s
        static_configs:
          - targets: ['localhost:8080']

In the above scrape config, Prometheus is set to scrape metrics from an application running on localhost at port 8080, under the job name my-app. It will do this every 5 seconds.
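As a rough illustration of how this config turns into actual requests, the sketch below rewrites the scrape config as a Python dict and derives the URL that would be scraped. It relies on the documented defaults (scheme http, metrics path /metrics) when those keys are not set. The helper names are invented for this example, and the duration parser covers only a small subset of the real syntax.

```python
# The scrape config from above, written as a Python dict to avoid a YAML dependency.
SCRAPE_CONFIG = {
    "job_name": "my-app",
    "scrape_interval": "5s",
    "static_configs": [{"targets": ["localhost:8080"]}],
}


def scrape_urls(job):
    """Build the URL scraped for each target, falling back to the default scheme and path."""
    scheme = job.get("scheme", "http")
    path = job.get("metrics_path", "/metrics")
    urls = []
    for group in job.get("static_configs", []):
        for target in group["targets"]:
            urls.append(f"{scheme}://{target}{path}")
    return urls


def interval_seconds(duration):
    """Convert simple durations such as '5s' or '1m' to seconds (subset of the real syntax)."""
    units = {"s": 1, "m": 60, "h": 3600}
    return int(duration[:-1]) * units[duration[-1]]
```

For the my-app job, scrape_urls(SCRAPE_CONFIG) gives a single URL pointing at localhost:8080 with the /metrics path, which is requested once every interval_seconds("5s") seconds.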

Data Storage

Once metrics are collected, Prometheus stores them in its time-series database (TSDB). This TSDB is optimized for storing and querying time series data efficiently. It can handle millions of time series and stores samples in a compressed format to save space.

The data is stored locally, providing fast query performance and reliability. Each metric is stored along with a set of labels, which are key-value pairs that identify the metric's characteristics.
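The role of labels is easier to see with a toy model. The sketch below is not how the Prometheus TSDB is implemented (the real one uses compressed on-disk blocks); it only illustrates the idea that a series is identified by its metric name plus its label set, and that each series holds timestamped samples. The class and method names are invented for this example.

```python
from collections import defaultdict


class TinySeriesStore:
    """Toy in-memory store: one list of (timestamp, value) samples per series."""

    def __init__(self):
        self._series = defaultdict(list)

    @staticmethod
    def _key(name, labels):
        # A series is identified by its metric name plus its full label set.
        return (name, tuple(sorted(labels.items())))

    def append(self, name, labels, timestamp, value):
        self._series[self._key(name, labels)].append((timestamp, value))

    def select(self, name, **matchers):
        """Return every series of this metric whose labels equal all given matchers."""
        result = {}
        for (series_name, label_items), samples in self._series.items():
            labels = dict(label_items)
            if series_name == name and all(labels.get(k) == v for k, v in matchers.items()):
                result[label_items] = samples
        return result
```

Two samples with the same name but different label values land in different series, which is what makes it possible to filter and aggregate by label at query time.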

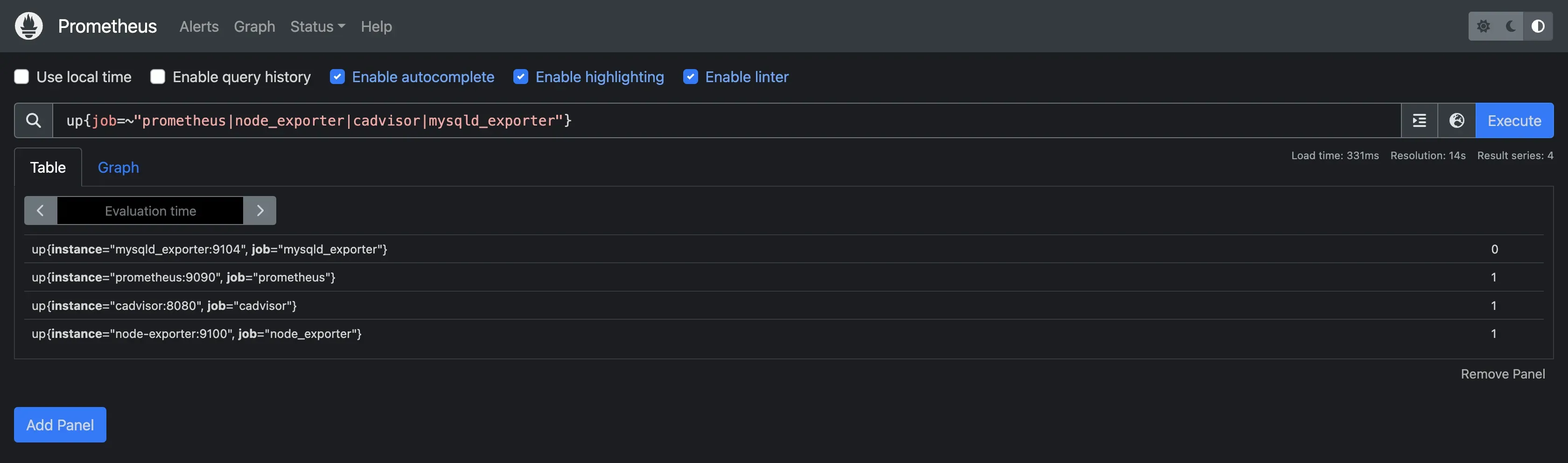

Data Query

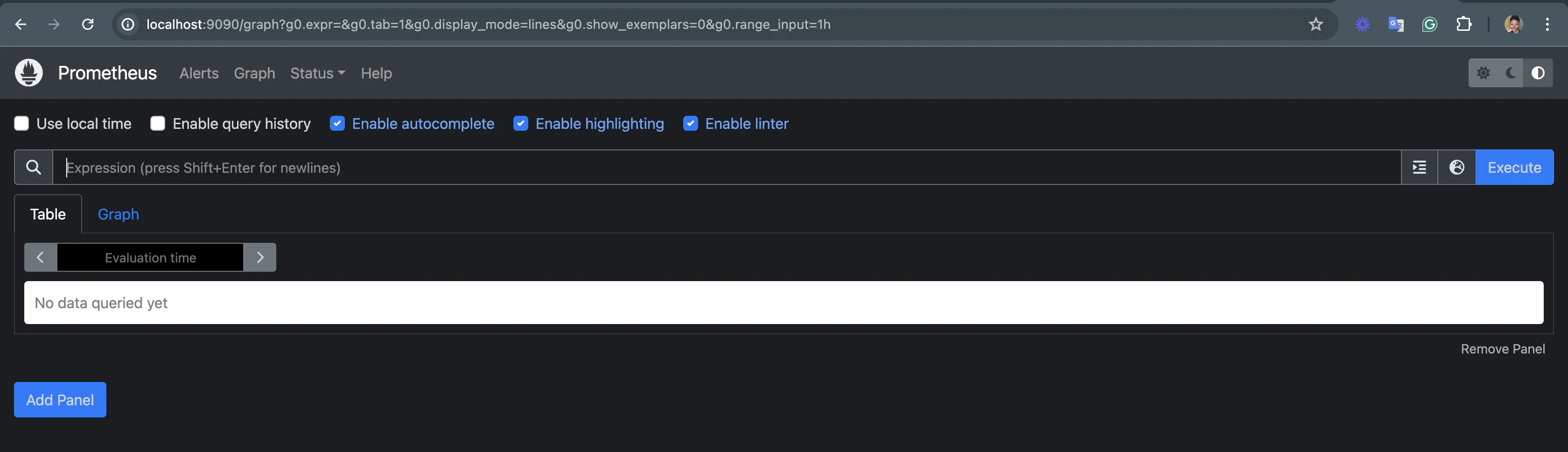

The stored metrics data can be queried when needed for visualization through the Prometheus web UI or a monitoring tool.

Prometheus provides a powerful query language called PromQL (Prometheus Query Language) to retrieve and manipulate time-series data. PromQL allows users to perform complex queries, aggregations, and transformations on the collected metrics.

For example, querying the built-in up metric checks the status of scrape targets (such as Prometheus itself, node_exporter, cadvisor, or mysqld_exporter) and returns whether each one is up (1) or down (0).
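PromQL queries can also be sent programmatically to the HTTP API. The sketch below builds an instant query request against the /api/v1/query endpoint. It assumes a server reachable at http://localhost:9090 and uses the built-in up metric as the query; the function names are made up for this example.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def instant_query_url(base_url, promql):
    """Build the URL for an instant query against /api/v1/query."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def run_instant_query(base_url, promql, timeout=5):
    """Send the query and return the decoded JSON; needs a reachable server."""
    with urlopen(instant_query_url(base_url, promql), timeout=timeout) as response:
        return json.load(response)
```

With a server running, run_instant_query("http://localhost:9090", "up") would return the JSON response for the up query. The URL-building step is kept separate so it can be checked without a live server.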

Alerting

For effective monitoring, Prometheus includes a built-in alerting mechanism that allows users to define alerting rules based on PromQL queries. Alerting rules are defined to specify conditions under which alerts should be triggered. These rules evaluate metrics at regular intervals.

For example:

    groups:
      - name: example-alert
        rules:
          - alert: ContainerKilled
            expr: 'time() - container_last_seen > 60'
            for: 0m
            labels:
              severity: warning
            annotations:
              summary: Container killed (instance {{ $labels.instance }})
              description: "A container has disappeared\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

The above alerting rule checks if a container has not been seen in the last 60 seconds and triggers an alert immediately with a warning severity, providing a summary and description with relevant details.

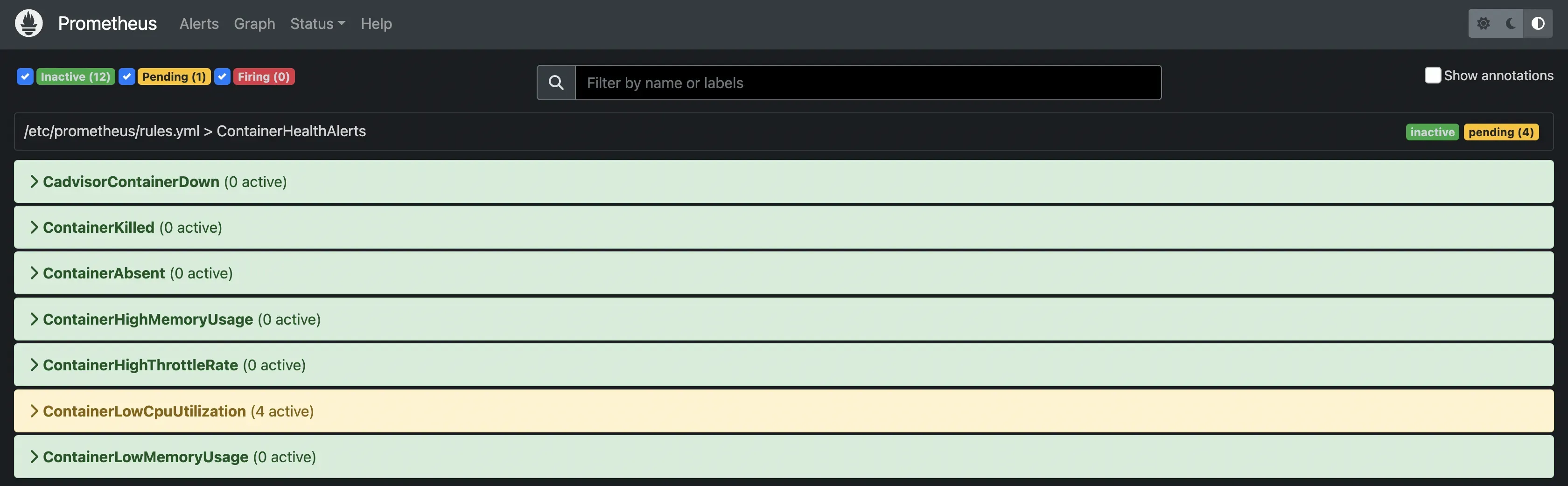

An alert can be in one of three states:

- Inactive: This is the default state of an alert. It means that the condition specified in the alerting rule is not currently met. No actions are taken when an alert is inactive.

- Pending: This is when the condition specified in the alerting rule has been met, but the for clause hasn't expired yet. The for clause specifies a duration for which the condition must remain true before the alert fires. This acts as a buffer to prevent alerts from triggering due to transient spikes or temporary issues.

- Firing: The alert transitions to this state if the condition specified in the alerting rule remains true for the duration specified in the for clause.
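The three states can be modeled as a small state machine. The following is a simplified sketch, not the actual Prometheus rule evaluator: the class names are invented, and time is passed in as plain seconds to keep the example deterministic.

```python
class AlertState:
    INACTIVE = "inactive"
    PENDING = "pending"
    FIRING = "firing"


class AlertTracker:
    """Tracks one alert's state across rule evaluations (simplified sketch)."""

    def __init__(self, for_seconds):
        self.for_seconds = for_seconds
        self.active_since = None  # time the condition first became true
        self.state = AlertState.INACTIVE

    def evaluate(self, condition_met, now):
        if not condition_met:
            # Condition cleared: reset, whatever the previous state was.
            self.active_since = None
            self.state = AlertState.INACTIVE
        else:
            if self.active_since is None:
                self.active_since = now
            held = now - self.active_since
            # Fire only once the condition has held for the whole "for" duration.
            self.state = AlertState.FIRING if held >= self.for_seconds else AlertState.PENDING
        return self.state
```

With for_seconds=60, an alert whose condition becomes true stays pending until a later evaluation finds it has held for at least 60 seconds. With for_seconds=0, as in the ContainerKilled rule, it fires on the first evaluation where the condition is true.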

Alerting rules in Prometheus

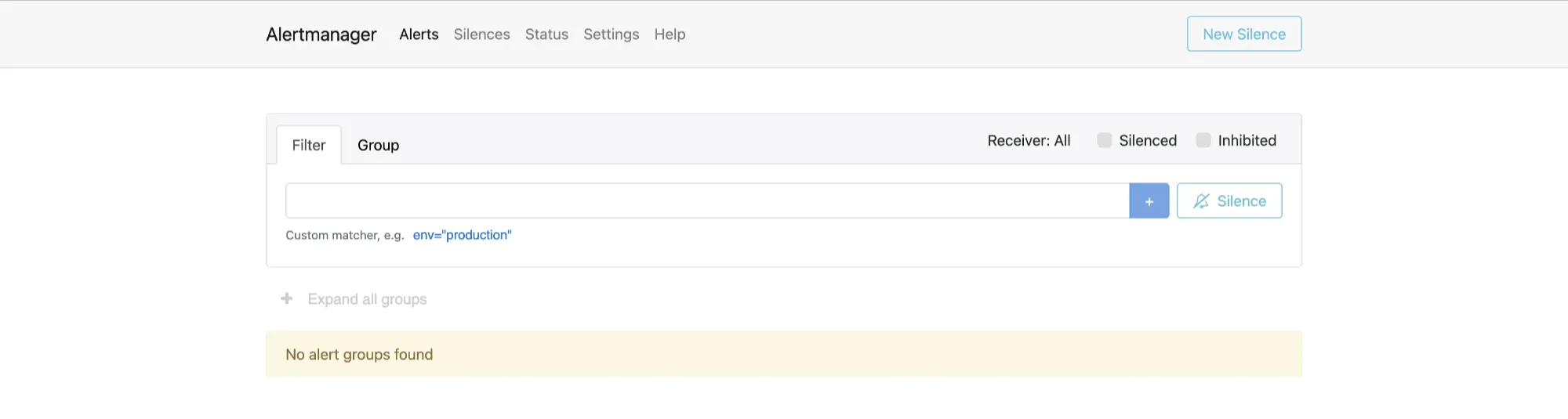

Alerts in the firing state are sent to Alertmanager, which then handles routing them to the configured receiver integrations (e.g., email, Slack, PagerDuty).

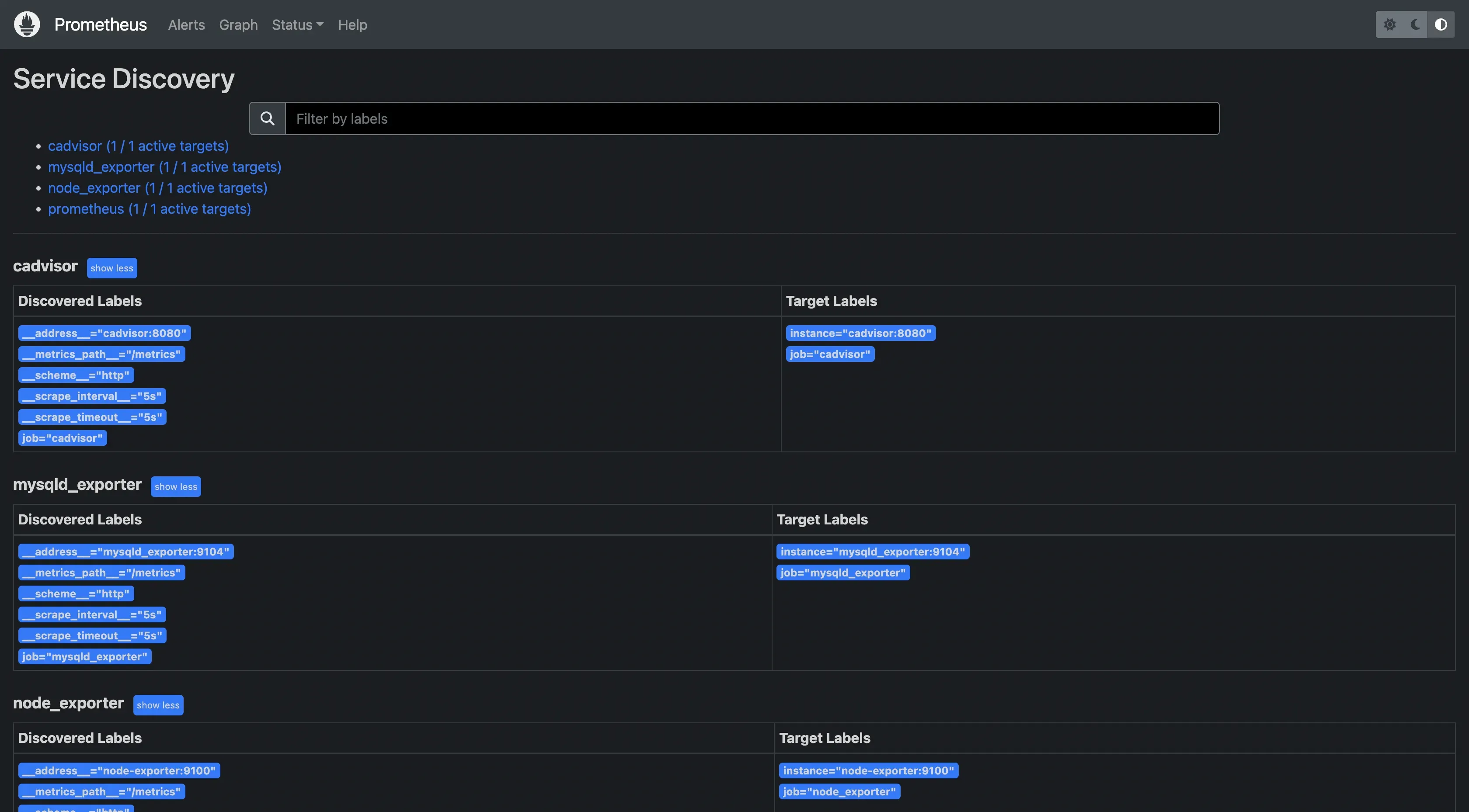

Service Discovery

Prometheus also supports various service discovery mechanisms to find targets automatically. This is particularly useful in dynamic environments like Kubernetes, where services may start and stop frequently. If you deploy a new application or service in such an environment, Prometheus discovers it automatically and starts monitoring it without any manual change to the target list.

Example of how service discovery is configured for Kubernetes:

    scrape_configs:
      - job_name: 'kubernetes-apiservers'
        kubernetes_sd_configs:
          - role: endpoints

Other supported service discovery mechanisms include static configuration, DNS, Consul, Marathon, and more.
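Conceptually, service discovery boils down to keeping the set of scraped targets in sync with whatever the discovery source currently reports. The sketch below shows that reconciliation step in isolation. It is an illustration of the idea rather than Prometheus's implementation, and the addresses are hypothetical.

```python
def reconcile_targets(current, discovered):
    """Return (to_add, to_remove) so the scrape set matches what discovery reports."""
    current, discovered = set(current), set(discovered)
    return sorted(discovered - current), sorted(current - discovered)


# Hypothetical example: one pod went away and a new one appeared.
scraping = ["10.0.0.4:8080", "10.0.0.5:8080"]
reported = ["10.0.0.5:8080", "10.0.0.9:8080"]
to_add, to_remove = reconcile_targets(scraping, reported)
```

Here the new address ends up in to_add and the vanished one in to_remove, which mirrors how targets appear and disappear without anyone editing the configuration file.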

Quick Start with Prometheus

This quick start guide will help you get Prometheus up and running in a few simple steps.

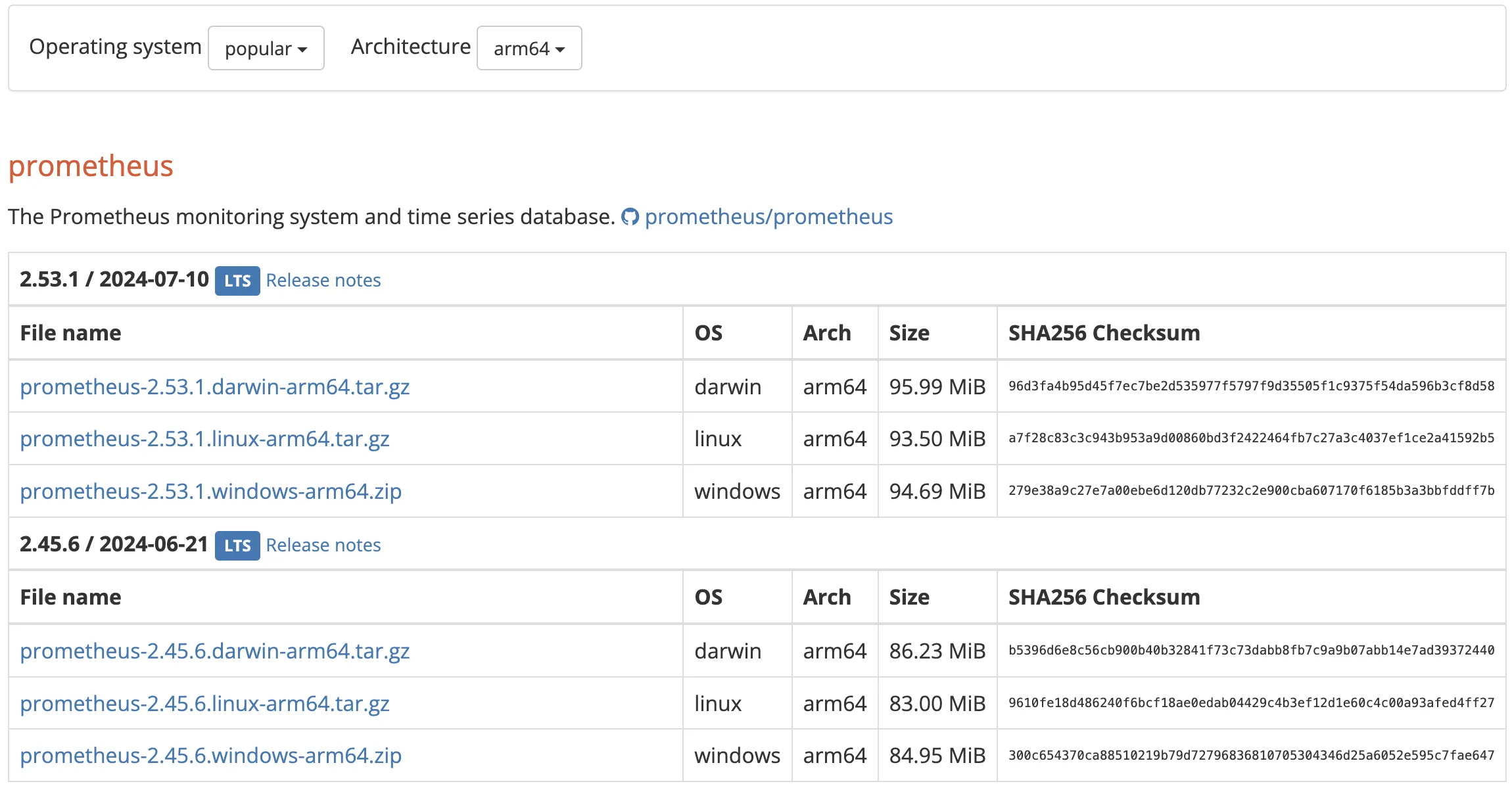

- Download Prometheus

To download Prometheus on your system, visit the Prometheus download page. Set your system architecture and identify the appropriate package for your operating system (Linux, macOS, Windows). Click on the download link for the latest stable release.

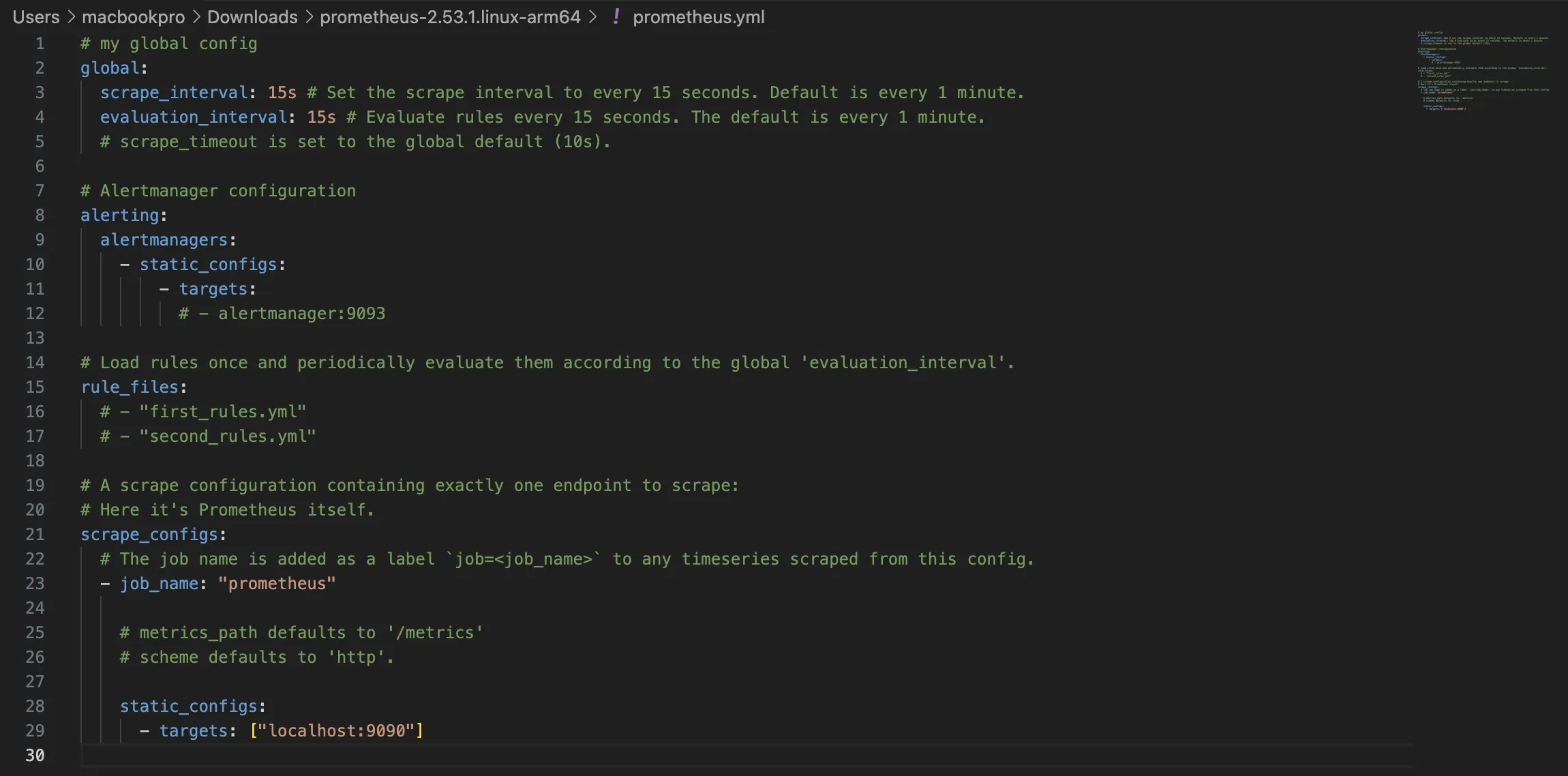

- Configure the prometheus.yml file

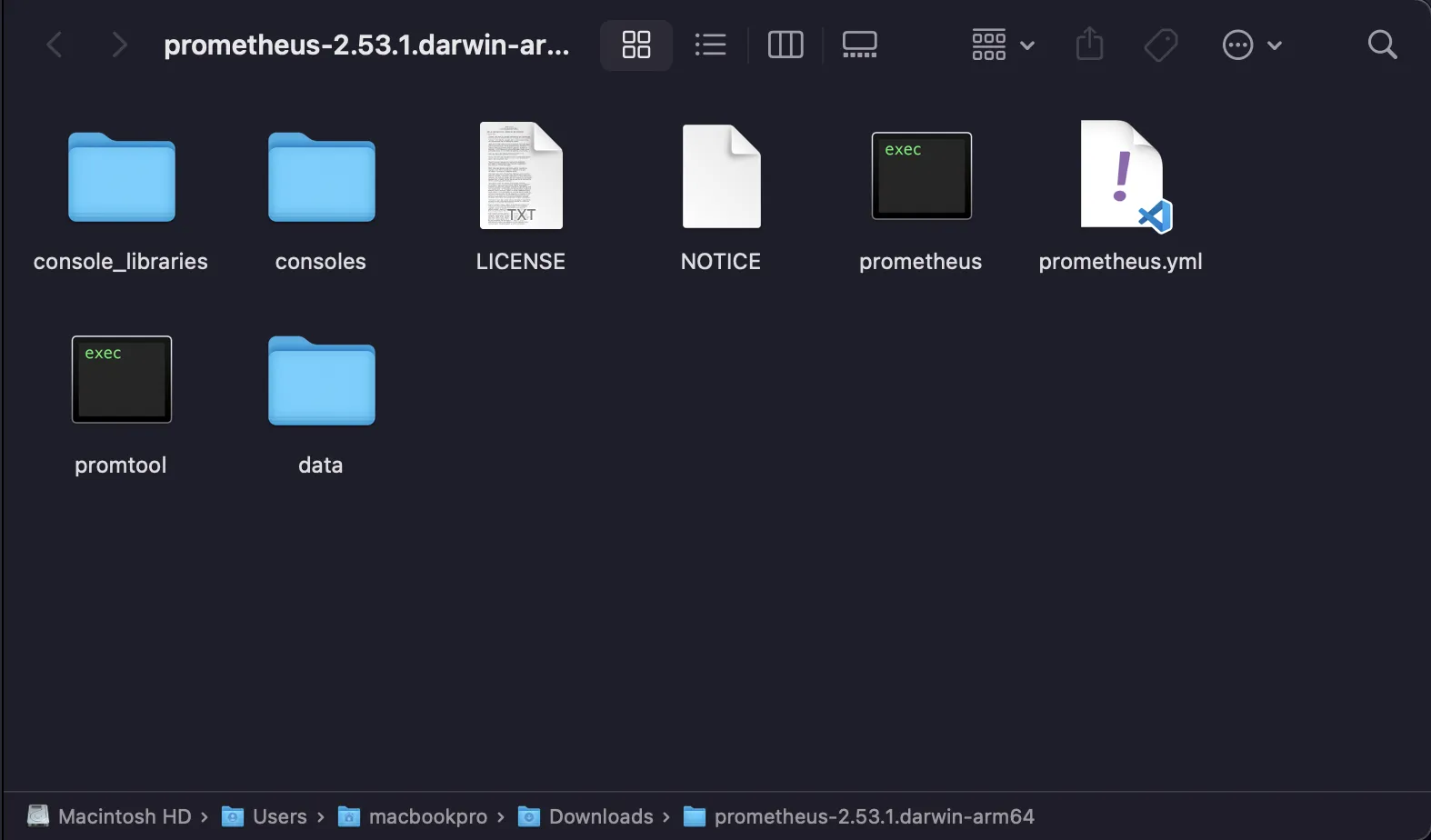

After downloading Prometheus, extract the downloaded tarball. You'll find a file named prometheus.yml in the extracted directory.

Prometheus uses a configuration file named prometheus.yml to specify which targets to monitor and how to scrape them. Open prometheus.yml in a text editor.

In the prometheus.yml file, you will see that Prometheus is configured to scrape metrics from itself. You can add other scrape configurations for Prometheus to scrape metrics from different targets on your system.

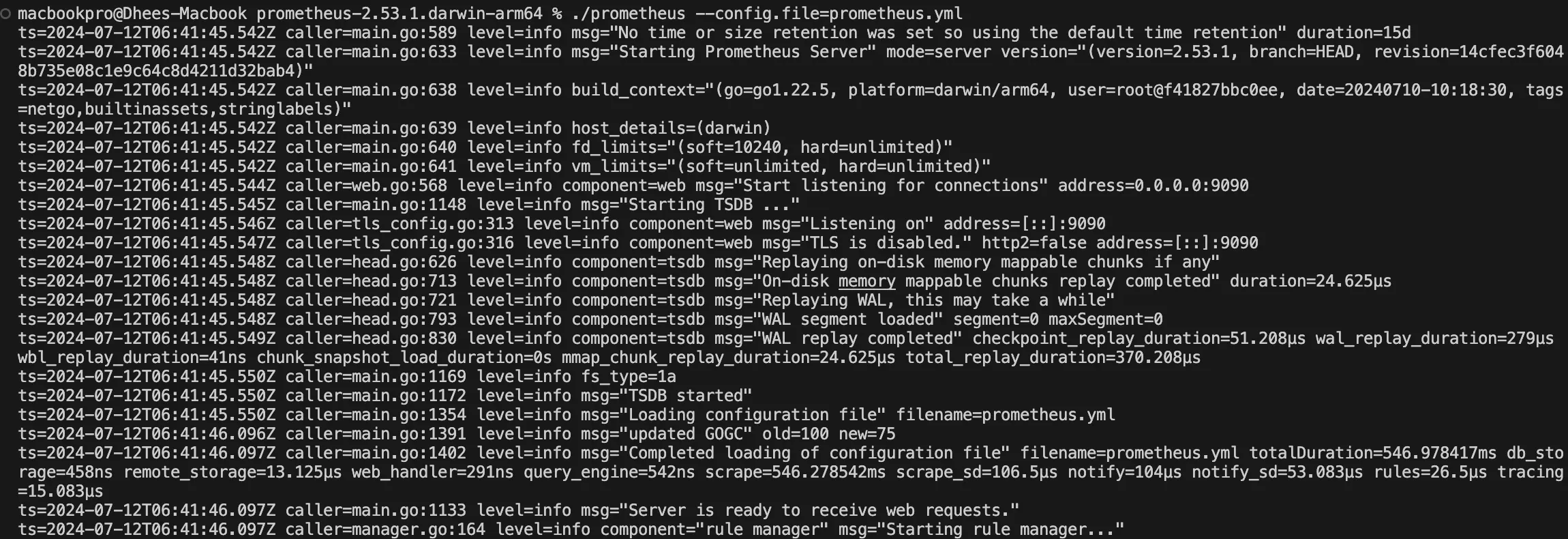

- Start Prometheus server

Navigate to the directory where you extracted Prometheus and execute the following command to start Prometheus:

    ./prometheus --config.file=prometheus.yml

This command will start Prometheus and use the specified configuration file to determine which targets to scrape.

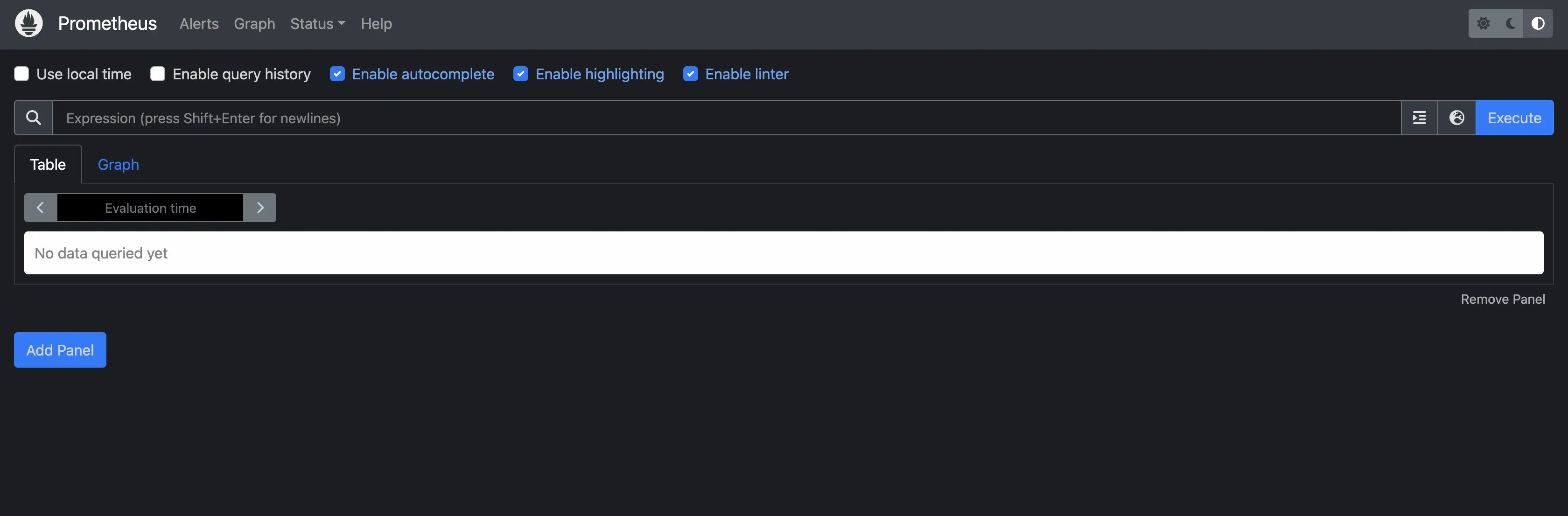

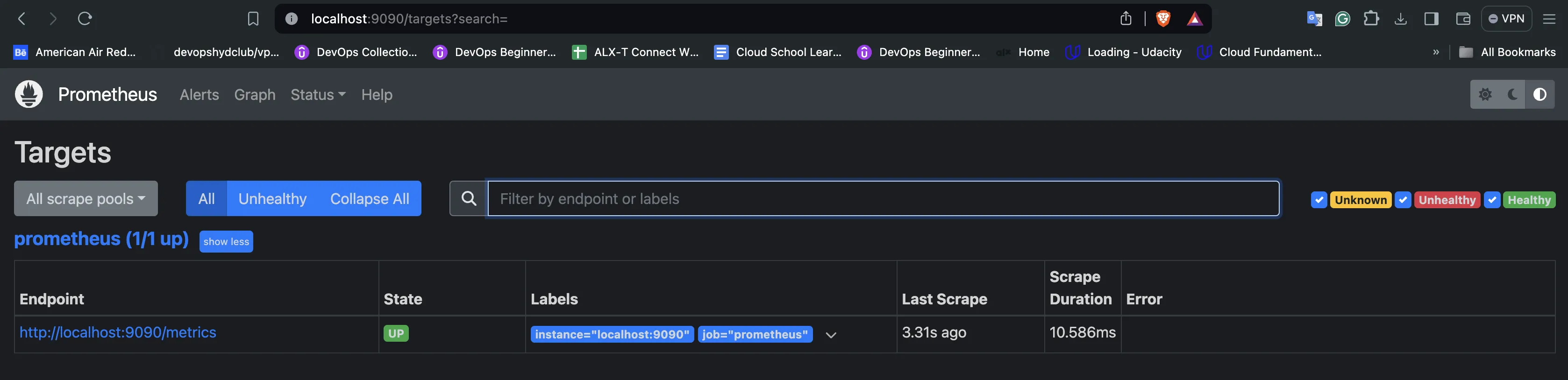

- Access the Prometheus UI

Once Prometheus has started, visit localhost:9090 in your browser to access the Prometheus UI.

Conclusion

This article discussed how monitoring in Prometheus works. It highlighted some of the key components of Prometheus and their roles in monitoring.

Key takeaways:

- Prometheus collects metrics by scraping HTTP target endpoints exposing metrics in a Prometheus format.

- Exporters convert metrics from systems that don't expose them natively into the Prometheus format.

- Prometheus stores data in its time series database for retrieval at any time.

- Metrics data can be queried using PromQL, the Prometheus query language.

- Prometheus evaluates alerting rules and sends firing alerts to Alertmanager, which routes them to the configured receivers.