How to Monitor Pod Count in Prometheus - A Quick Guide

Monitoring pod count in Kubernetes is crucial for maintaining a healthy and efficient cluster. Prometheus, a popular open-source monitoring system, provides powerful tools to track and analyze pod metrics. This guide shows you how to query the number of running pods from Prometheus and use this information for effective cluster management.

Understanding Pod Count Monitoring in Kubernetes

Pod count monitoring in Kubernetes refers to the process of tracking the number of pods (the smallest deployable units, each wrapping one or more containers) in a cluster to ensure the application’s availability, scalability, and proper resource utilization. Kubernetes dynamically adjusts pod counts using mechanisms like Horizontal Pod Autoscaling (HPA) based on workload demands. This makes real-time monitoring essential for maintaining Service Level Agreements (SLAs), preventing downtime, and optimizing resource usage.

Importance of Monitoring Pod Count for Cluster Health

Monitoring pod count is vital for maintaining the health and stability of your Kubernetes cluster. The number of running pods directly affects how efficiently your resources are being used and whether your applications are running smoothly. Here are some key reasons why monitoring pod count is important:

- Resource Optimization: Monitoring pod count helps ensure that your system is using resources efficiently. By keeping track of how many pods are running, you can make sure you're not overusing or underusing your CPU, memory, or storage resources. If too many pods are running, you may be wasting resources; if too few are running, your applications may not perform optimally.

- Scaling Decisions: In a dynamic environment like Kubernetes, where workloads can change frequently, you need to know when to scale your applications up (add more pods) or down (reduce the number of pods). Pod count trends over time can show you when demand is increasing, helping you decide when to automatically scale up or when to scale down to save on resources.

- Troubleshooting: Unexpected changes in pod count, such as sudden spikes or drops, can be a sign of problems in your cluster. For example, if pods are frequently being restarted or terminated, it could indicate an issue like a failed deployment, resource constraints (e.g., running out of memory), or a misconfiguration in your Kubernetes setup. Monitoring pod count helps you catch these problems early so you can resolve them quickly.

Role of Prometheus in Kubernetes Monitoring

Prometheus plays a crucial role in Kubernetes monitoring due to its powerful integration with the Kubernetes ecosystem. Its native support for service discovery allows Prometheus to automatically discover new pods and services as they are deployed, without requiring manual intervention. This automatic discovery ensures that your monitoring adapts in real-time, maintaining visibility over the dynamically changing environment in Kubernetes clusters.

Moreover, Prometheus is designed to efficiently scrape metrics from various Kubernetes components, such as nodes, pods, and services. It monitors resource usage like CPU, memory, and network bandwidth, and provides insights into the overall health of the cluster.

Some important roles Prometheus plays in Kubernetes monitoring include:

- Service Discovery: Once Kubernetes service discovery is configured, Prometheus detects newly deployed pods or services on its next discovery refresh and begins collecting metrics without further configuration changes. This is essential in dynamic environments where workloads change frequently.

- Labels for Metrics Grouping: Prometheus uses Kubernetes labels to organize and group metrics efficiently. This makes it easy to filter and sort metrics by specific parameters like namespaces, applications, or pods, providing better granularity and control over what’s being monitored.

- Horizontal Pod Autoscaling: Prometheus metrics can be exposed to Kubernetes' Horizontal Pod Autoscaler (HPA) through a metrics adapter such as Prometheus Adapter, so that your applications scale dynamically based on real-time performance metrics, such as CPU or memory usage, and keep running well under varying loads.

Key Metrics Related to Pod Count

Several key metrics are useful when monitoring pod count in Kubernetes, including:

- kube_pod_info: Provides basic information about each pod, such as its namespace, node placement, and pod IP.

- kube_pod_status_phase: Tracks the current phase of each pod, such as Pending, Running, Succeeded, or Failed. This is useful for understanding the health of your pods.

- kube_pod_container_status_restarts_total: Counts the number of times containers within pods have restarted. Frequent restarts can indicate issues with the application or resource shortages.

- kube_pod_status_ready: Indicates whether a pod is currently ready to serve traffic, which is critical for ensuring that your application remains available.

How to Query Pod Count in Prometheus

In this section, we'll walk through querying pod count in Prometheus using PromQL. By the end, you’ll know how to retrieve and analyze pod metrics to monitor your Kubernetes cluster.

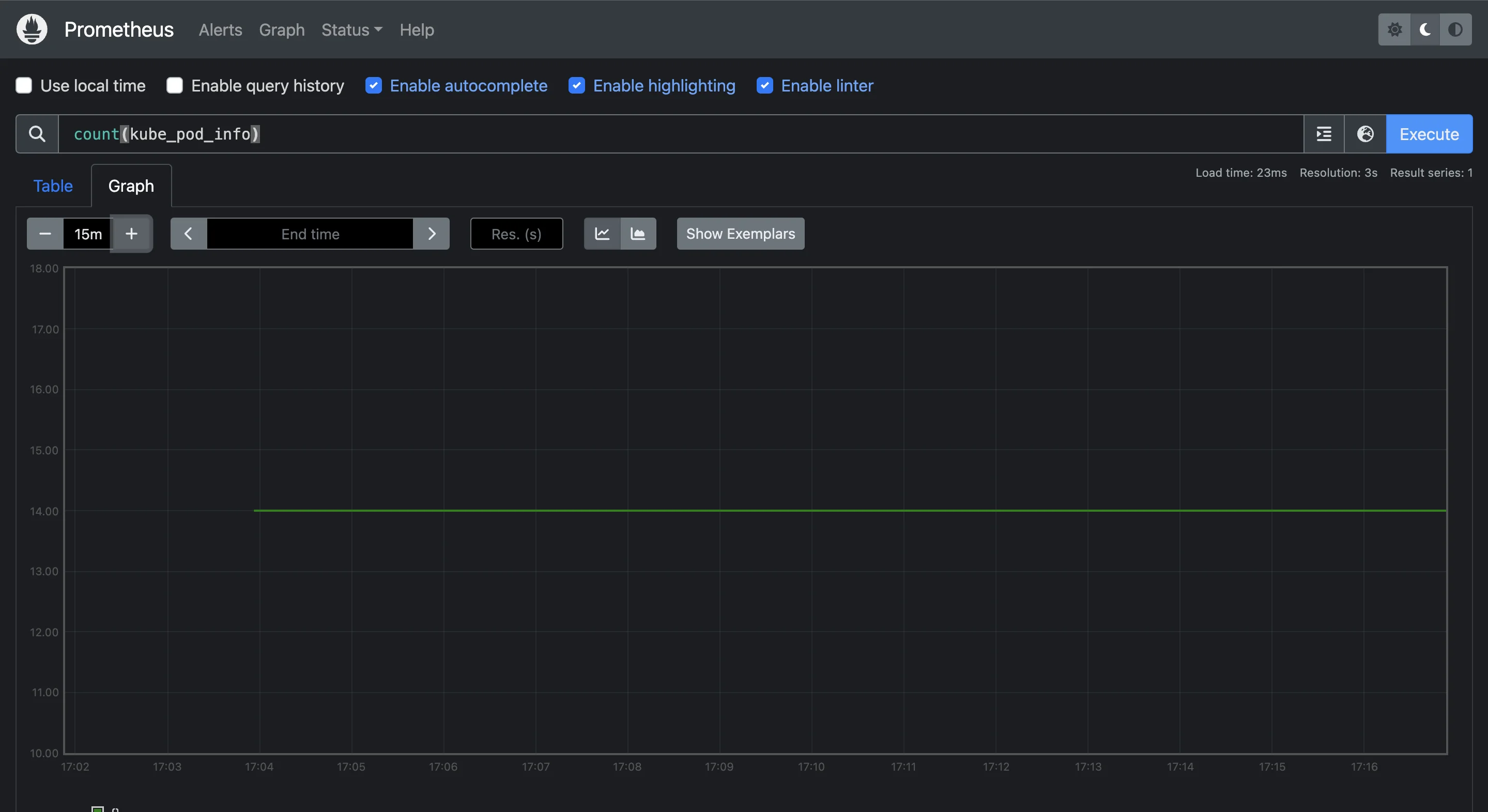

- Basic Pod Count Query

To get the total number of pods across all namespaces, regardless of their phase, use this basic query:

count(kube_pod_info)

This query gives you a snapshot of how many pods currently exist in your cluster. It’s a good starting point for understanding the overall scale of your applications.

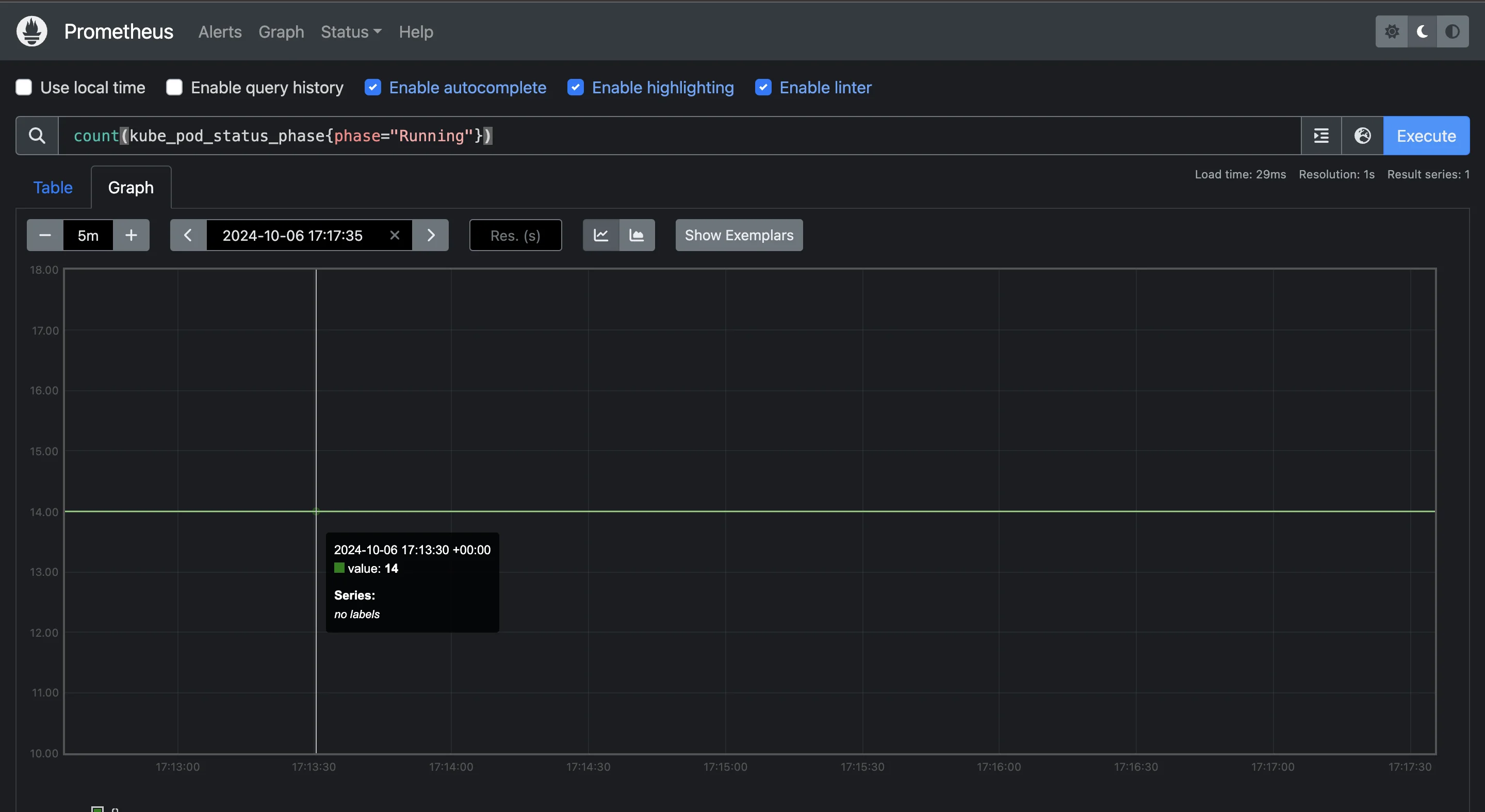

- Count Running Pods

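If you want to know how many pods are specifically in the "Running" phase, sum the phase metric, which kube-state-metrics exports with a value of 1 for a pod's current phase and 0 for every other phase:

sum(kube_pod_status_phase{phase="Running"})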

This is useful when you need to ensure that your applications are actively running, as pods in other phases (e.g., "Pending" or "Failed") may indicate issues.
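The difference between counting and summing this metric is easy to miss, so here is a minimal Python sketch of it. It assumes kube-state-metrics exposes kube_pod_status_phase as one series per pod and phase with a value of 1 or 0; the pod names and values are made up for illustration.

```python
# Hypothetical samples mimicking kube_pod_status_phase{phase="Running"}:
# one series per pod, value 1 if the pod is in that phase, else 0.
running_phase_samples = {
    "web-1": 1,
    "web-2": 1,
    "batch-1": 0,  # e.g. a pod that is currently Pending
}

def promql_count(samples):
    """Mimics count(): the number of series, regardless of their values."""
    return len(samples)

def promql_sum(samples):
    """Mimics sum(): adds the 0/1 values, i.e. pods actually in the phase."""
    return sum(samples.values())

series_count = promql_count(running_phase_samples)
running_pods = promql_sum(running_phase_samples)
print(series_count, running_pods)
```

Counting the series reports every pod that has the label, while summing reports only the pods whose value is 1.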

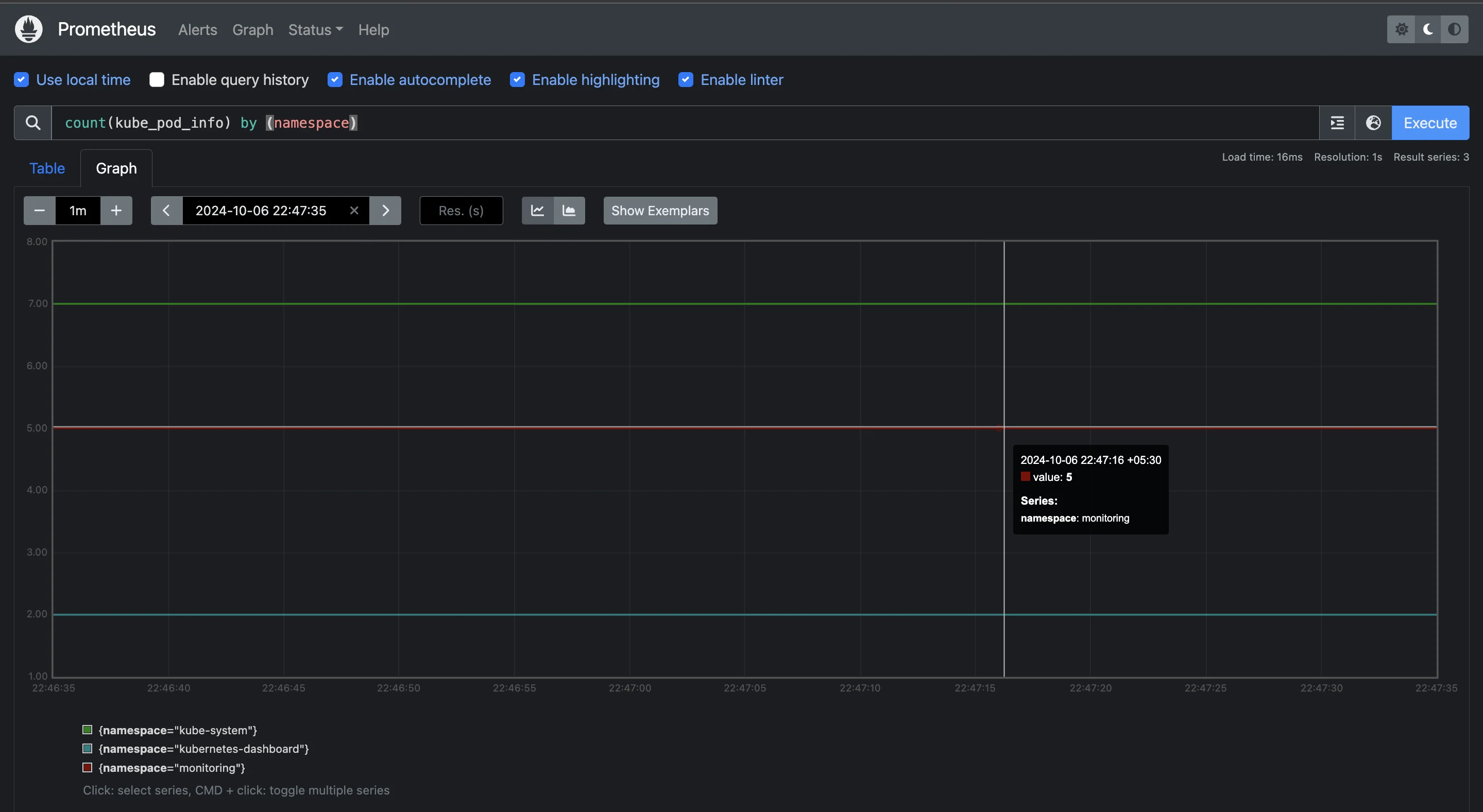

- Count Pods by Namespace

If you have multiple namespaces in your cluster, it’s helpful to break down pod count by namespace. Use the following query:

count(kube_pod_info) by (namespace)

This query gives you a clearer picture of how pods are distributed across different namespaces, helping you understand resource allocation at a more granular level.
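If you want to run these queries from a script rather than the Prometheus UI, the sketch below shows one possible approach using the Prometheus HTTP API's instant-query endpoint (/api/v1/query). The base URL, the helper names, and the sample response are assumptions for illustration; no request is actually sent here.

```python
import json
from urllib.parse import urlencode

def build_query_url(base_url, promql):
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"

def counts_by_label(response_text, label):
    """Turn an instant-vector response into {label value: count}."""
    payload = json.loads(response_text)
    if payload.get("status") != "success":
        raise ValueError("Prometheus query did not succeed")
    counts = {}
    for series in payload["data"]["result"]:
        # Each sample is [unix_timestamp, "value-as-string"].
        counts[series["metric"].get(label, "")] = int(float(series["value"][1]))
    return counts

# Hypothetical response body for: count(kube_pod_info) by (namespace)
sample_response = json.dumps({
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"namespace": "default"}, "value": [1700000000, "12"]},
            {"metric": {"namespace": "kube-system"}, "value": [1700000000, "9"]},
        ],
    },
})

# Assumed address of a locally reachable Prometheus server.
url = build_query_url("http://localhost:9090", "count(kube_pod_info) by (namespace)")
pods_per_namespace = counts_by_label(sample_response, "namespace")
print(url)
print(pods_per_namespace)
```

In a real script you would fetch the URL (for example with urllib.request.urlopen) and pass the response body to counts_by_label.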

Advanced Pod Count Queries

For more detailed insights, try these advanced queries:

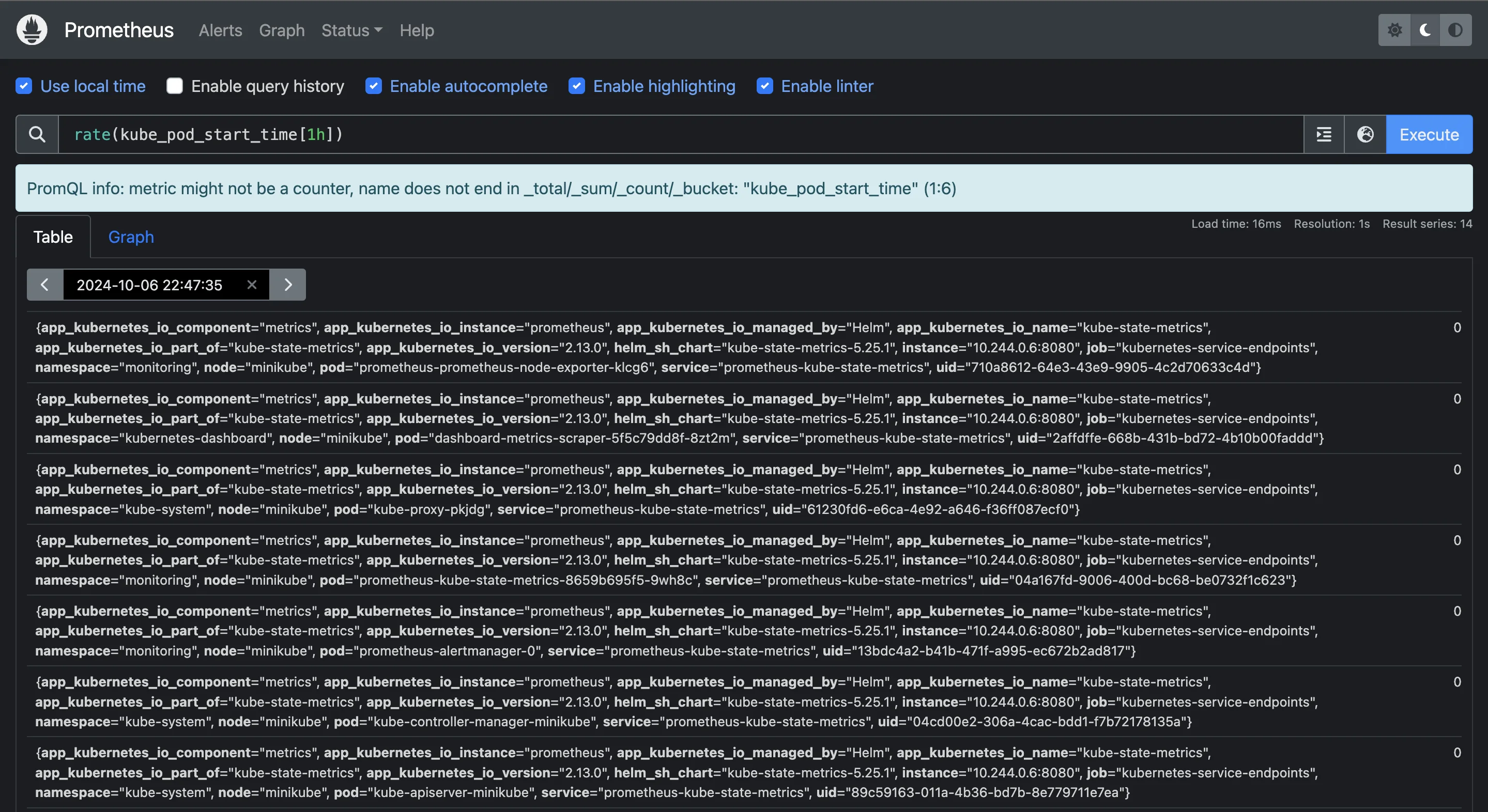

- Track Pod Count Changes Over Time

To monitor how the number of pods is changing over time, use this query:

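count((time() - kube_pod_start_time) < 3600)

Because kube_pod_start_time is a gauge that holds each pod's start time as a Unix timestamp, this query counts the pods that started within the last hour and still exist, helping you understand how your cluster scales and responds to workload changes.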

- Detect Abnormal Pod Count Fluctuations

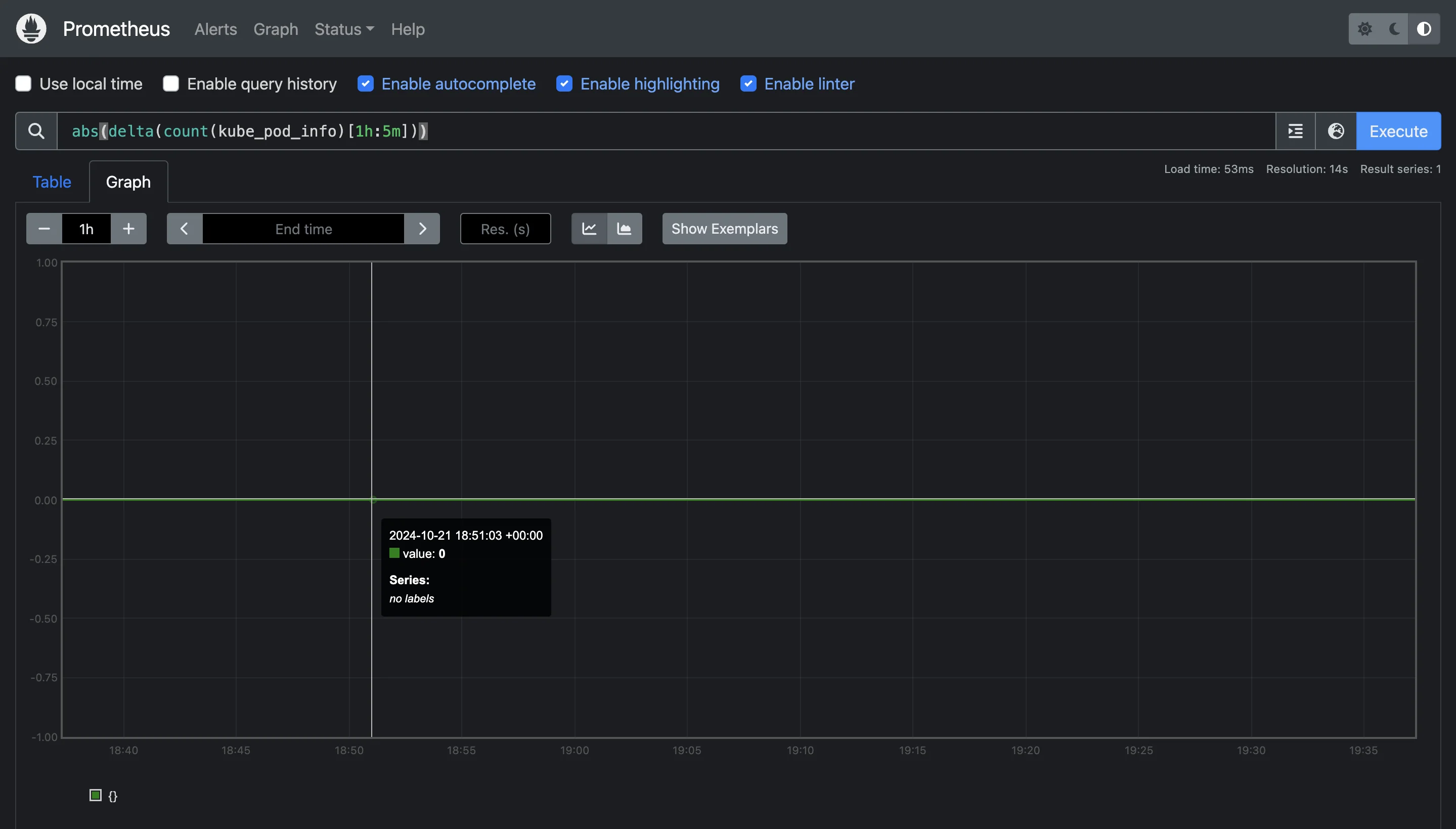

If you want to detect sudden spikes or drops in the number of pods, this alert query can help:

abs(delta(count(kube_pod_info)[1h:5m]))

This query tracks changes in the number of pods over the last hour (sampled every 5 minutes) and returns the magnitude of the change (ignoring whether it increased or decreased). This is useful for spotting unexpected behavior that might need investigation.

As there is no fluctuation and the pods are running well, the query returns a zero value.
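To make the behaviour of the fluctuation query concrete, here is a simplified Python sketch of what abs(delta(...)) computes over a window of samples. It takes the difference between the last and first sample and ignores the extrapolation Prometheus may apply at the window boundaries; the sample values are invented.

```python
def abs_delta(samples):
    """Absolute difference between the last and first sample in a window.

    Simplified stand-in for abs(delta(...)): any boundary extrapolation
    that Prometheus performs is deliberately left out of this sketch.
    """
    if len(samples) < 2:
        return 0
    return abs(samples[-1] - samples[0])

# Hypothetical pod counts sampled every 5 minutes over one hour.
stable = [42] * 13
scaled_down = [42, 42, 41, 40, 38, 35, 35, 35, 35, 35, 35, 35, 35]

print(abs_delta(stable))
print(abs_delta(scaled_down))
```

A stable cluster yields zero, while a scale-down from 42 to 35 pods yields 7, whichever direction the change went.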

Best Practices for Pod Count Monitoring

Keeping track of the number of pods ensures that applications are scaling as expected and that resources are utilized efficiently. Below are some best practices to follow for robust pod count monitoring:

- Set Up Alerting Thresholds for Pod Count Anomalies: One of the key benefits of monitoring pod count is being able to catch issues early. By setting up alerts in Prometheus, you can automatically get notified when there are unexpected changes in the number of running pods. For example, you can trigger an alert when the pod count drops suddenly or increases beyond expected levels.

- Implement Pod Autoscaling Based on Metrics: To manage workloads more efficiently, use the Horizontal Pod Autoscaler (HPA). HPA automatically adjusts the number of running pods based on real-time metrics like CPU or memory usage. By implementing autoscaling, you can ensure that your cluster adapts to demand fluctuations without manual intervention, scaling up when traffic increases and scaling down during periods of low usage.

- Correlate Pod Count with other Cluster Metrics: Monitoring pod count on its own provides useful information, but for a more comprehensive view of your cluster's health, you should correlate pod count with other key metrics such as CPU usage, memory consumption, and network traffic. By analyzing these metrics together, you can better understand the root causes of changes in pod count, whether they are related to increased resource usage or deployment changes.

- Regular Reviews: Over time, the demands on your cluster will change, and so will your resource allocation. Periodically review how many pods are running and whether they are using the right amount of resources (CPU, memory). Adjust resource requests and limits for each pod as needed to ensure that you’re not over-provisioning (wasting resources) or under-provisioning (risking pod failure).
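The alerting practice above boils down to comparing the current pod count with an expected value and a tolerance. In production this would normally live in a Prometheus alerting rule; the Python sketch below only illustrates the threshold logic, and the function name, the 20% default tolerance, and the message format are all hypothetical.

```python
def pod_count_alert(current, expected, tolerance=0.2):
    """Return an alert message when the pod count deviates from the expected
    value by more than `tolerance` (a fraction of expected), else None."""
    if expected <= 0:
        raise ValueError("expected must be positive")
    deviation = abs(current - expected) / expected
    if deviation > tolerance:
        direction = "above" if current > expected else "below"
        return f"pod count {current} is {deviation:.0%} {direction} expected {expected}"
    return None

print(pod_count_alert(50, 50))
print(pod_count_alert(30, 50))
```

Choosing the tolerance is a trade-off: a tight value catches problems sooner but fires during normal autoscaling, so it is worth deriving it from the pod count trends you actually observe.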

Visualizing Pod Count Data with Grafana

Integrating Grafana with Prometheus unlocks powerful visualization capabilities that enhance your ability to monitor pod count and other Kubernetes metrics in a more intuitive and insightful way. Visualizing pod count data allows you to quickly identify trends, spot potential issues, and make data-driven decisions about scaling and resource allocation.

Creating Dashboards for Pod Count Metrics

A well-structured Grafana dashboard helps you consolidate pod count data and other relevant metrics into a single view. To create a dashboard in Grafana, refer to this guide.

When designing your dashboard for pod count metrics, consider adding these key metrics to ensure comprehensive visibility and insights into your Kubernetes environment:

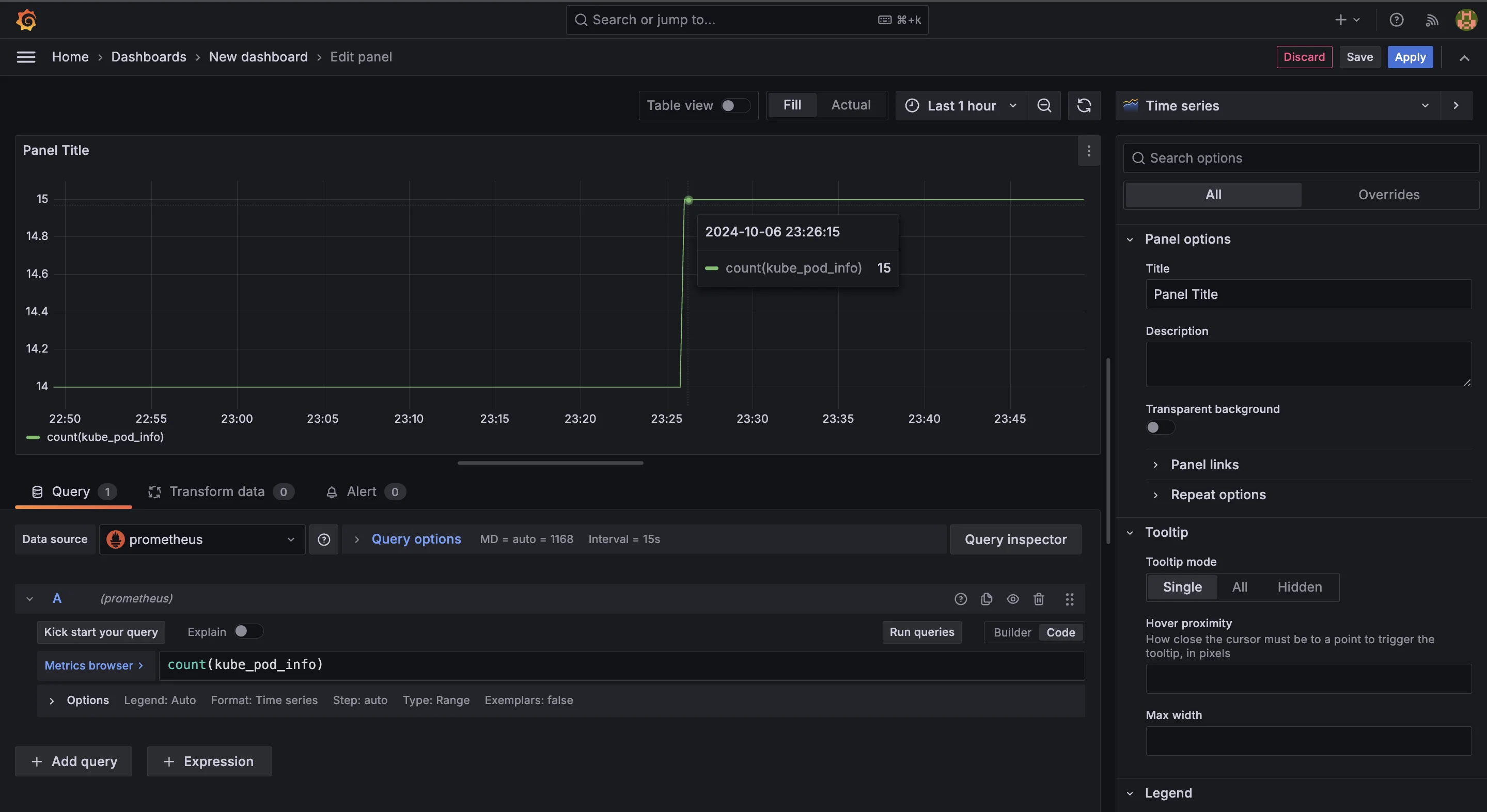

- Total Pod Count

Create a panel that shows the total number of pods running in your Kubernetes cluster. This metric gives you a real-time snapshot of your cluster’s current workload. The query for this would be:

count(kube_pod_info)

This will display the overall pod count across all namespaces, helping you track the scale of your applications at a glance.

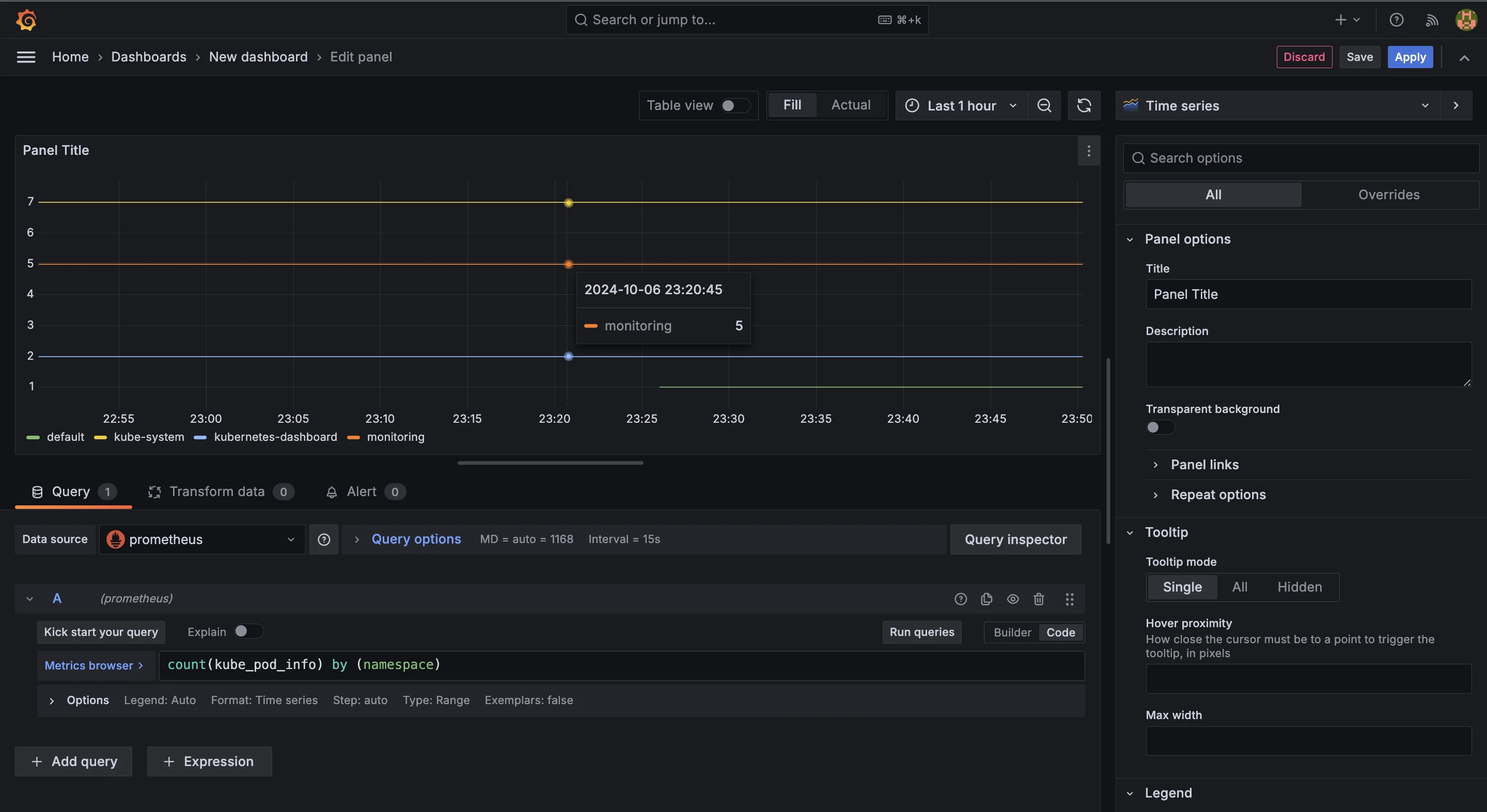

- Pod Count by Namespace

For better granularity, create a panel that breaks down pod count by namespace. This is useful for clusters running multiple applications, as it helps you understand how resources are distributed across different teams or services. Use the following query:

count(kube_pod_info) by (namespace)

This query groups the pod count by namespace, giving you visibility into which parts of your cluster are consuming the most resources.

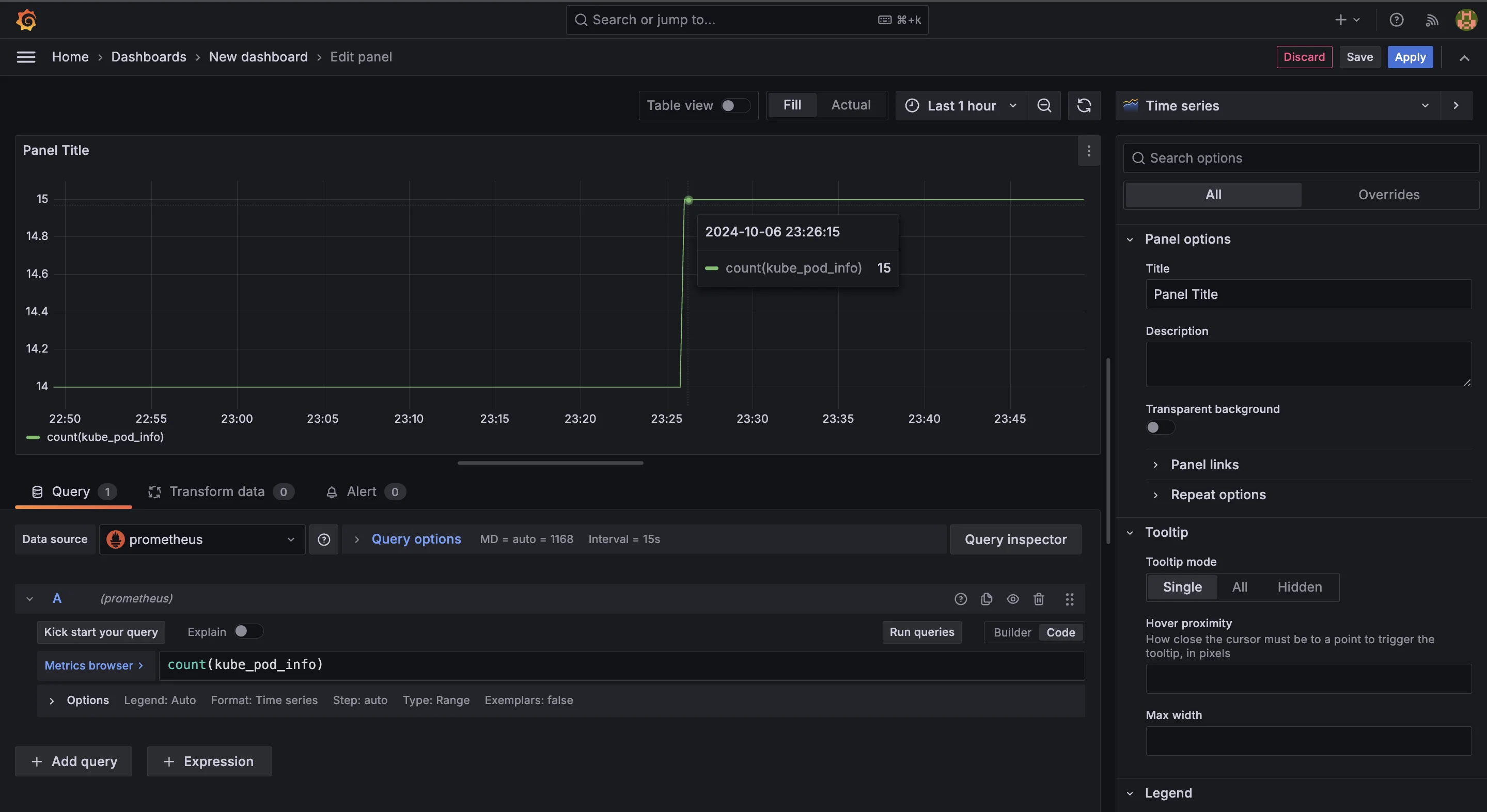

- Pod Count Trends Over Time

Understanding how your pod count changes over time is critical for identifying patterns, such as scaling events or resource bottlenecks. Create a time-series panel that shows pod count trends over a defined period (e.g., the past 24 hours):

count(kube_pod_info)

Then, apply a time range in the Grafana panel to display how the number of pods has fluctuated. This is particularly useful for spotting anomalies like sudden increases or decreases in the number of running pods.

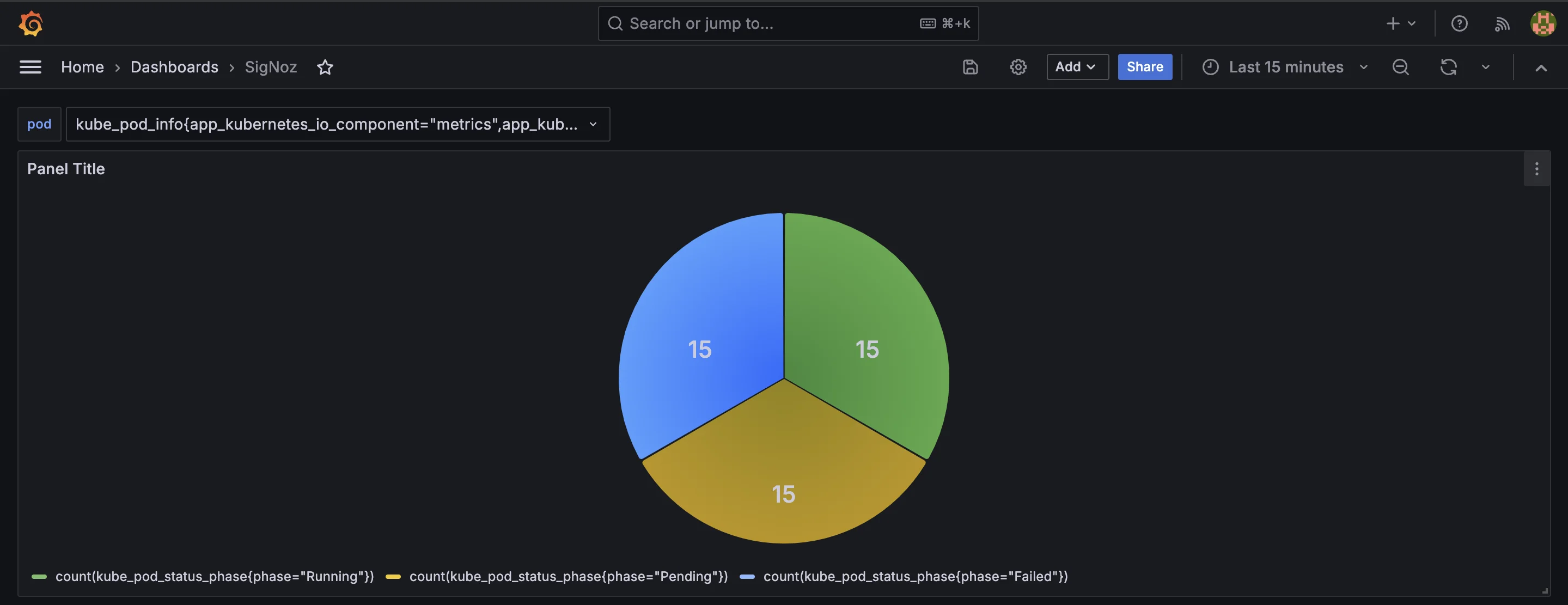

Designing Effective Visualizations for Pod Status

To monitor the health of your pods effectively, it’s essential to track the distribution of pods across different statuses—such as Running, Pending, and Failed. This can quickly show if any pods are stuck in an undesired state. Use the following queries for the different statuses:

For running pods:

sum(kube_pod_status_phase{phase="Running"})

For pending pods:

sum(kube_pod_status_phase{phase="Pending"})

For failed pods:

sum(kube_pod_status_phase{phase="Failed"})

These queries will give you the counts of pods in each phase.

Visualizing this data as a pie chart or stacked bar chart in Grafana provides a clear view of the overall pod health.
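A pie chart effectively shows each phase as a share of all pods. The Python sketch below illustrates that calculation on made-up per-phase counts, such as those a query like sum by (phase) (kube_pod_status_phase) might return; Grafana does this for you, so the helper is purely illustrative.

```python
def phase_shares(counts):
    """Convert {phase: pod count} into {phase: percentage of all pods}."""
    total = sum(counts.values())
    if total == 0:
        return {phase: 0.0 for phase in counts}
    return {phase: round(100 * n / total, 1) for phase, n in counts.items()}

# Hypothetical per-phase pod counts.
counts = {"Running": 45, "Pending": 3, "Failed": 2}
shares = phase_shares(counts)
print(shares)
```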

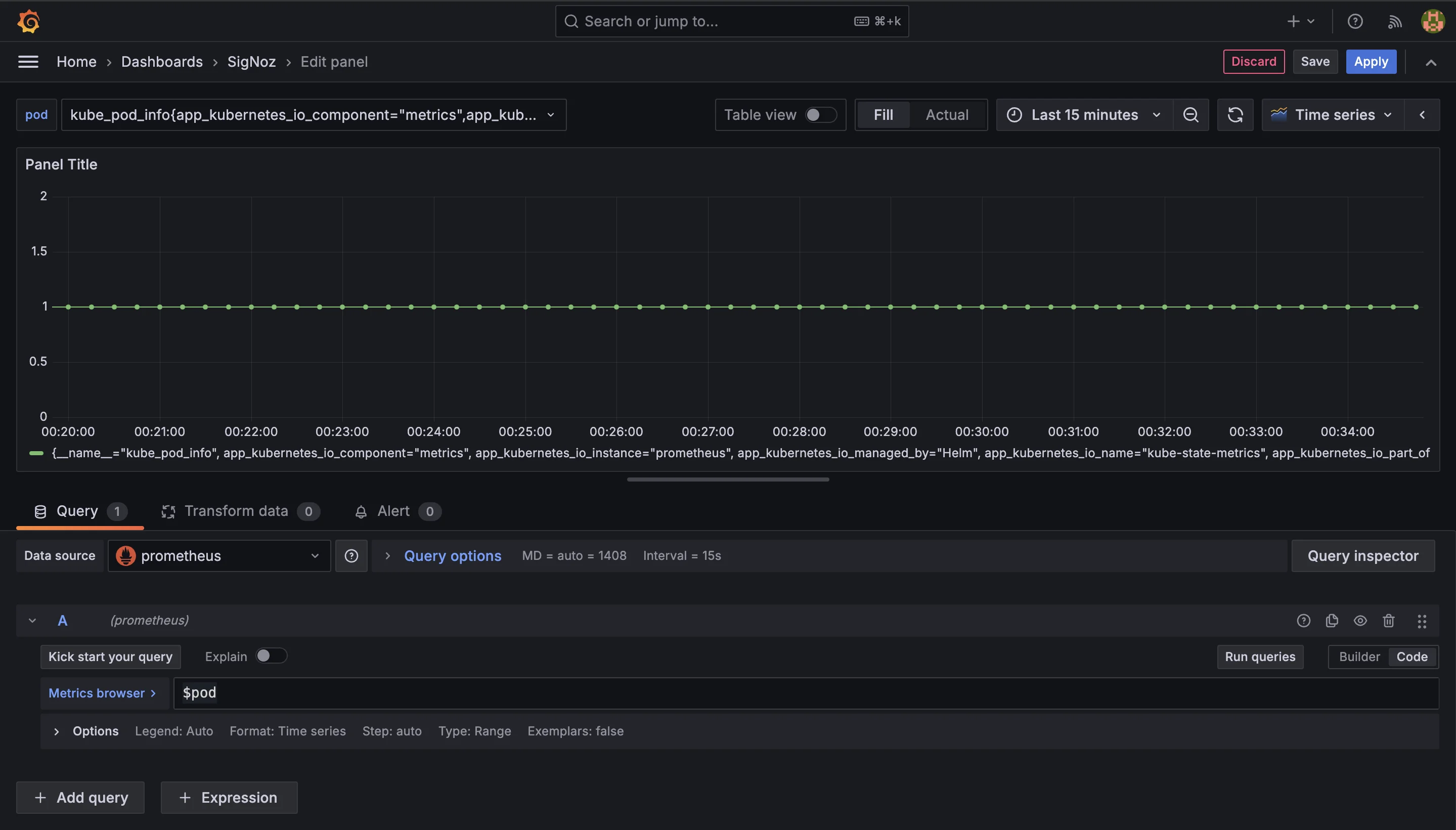

Setting Up Time-Based Pod Count Graphs

A dedicated time-series graph makes scaling events and resource bottlenecks easier to spot. Use the same pod count query as in the trends panel and plot it over a defined period (e.g., the past 24 hours):

count(kube_pod_info)

Apply a time range in the Grafana panel to display how the number of pods has fluctuated. This is particularly useful for spotting anomalies like sudden increases or decreases in the number of running pods.

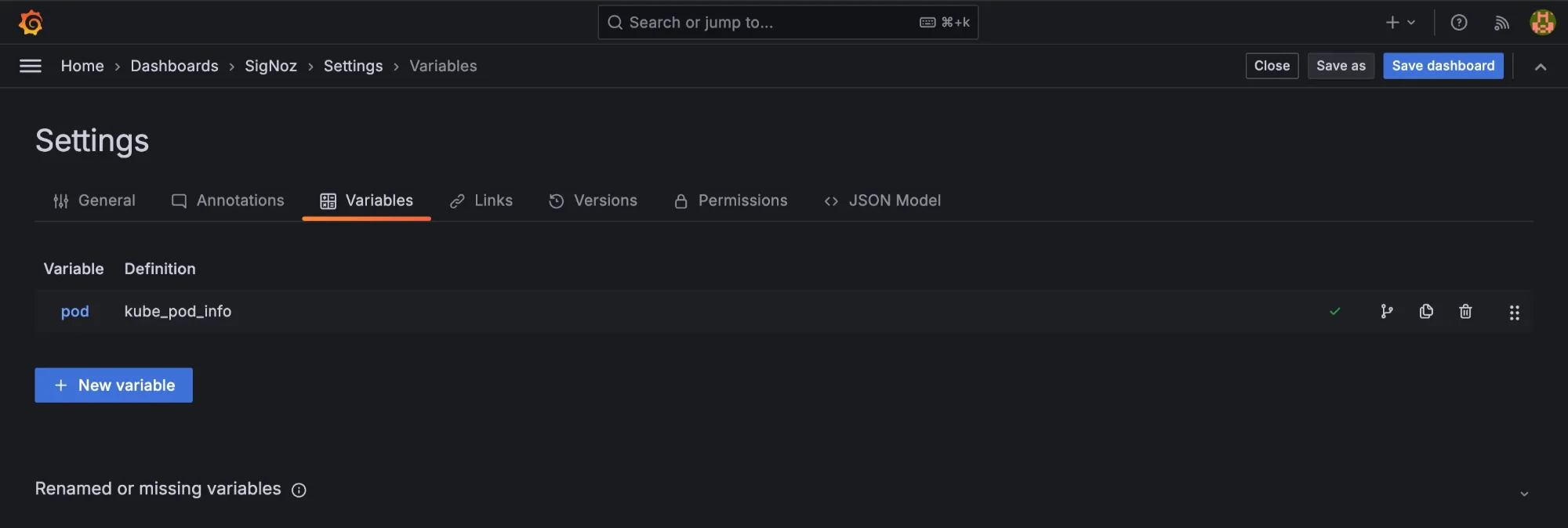

Implementing Drill-Down Views for Detailed Pod Information

Grafana allows you to create drill-down views for detailed information on specific pods. You can set up links in your dashboard panels that direct users to detailed metrics for specific pods or namespaces. This provides an easy way to investigate issues further without navigating away from the main dashboard.

For example, you can use Grafana variables to create dynamic dashboards that filter metrics based on the selected pod or namespace. This level of interactivity enhances your ability to troubleshoot issues in real-time.
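Conceptually, a dashboard variable is a placeholder that gets substituted into the panel query before it is sent to Prometheus. Grafana performs this substitution itself; the Python sketch below only mimics the idea with string.Template, and the variable name namespace is an assumption.

```python
from string import Template

# Panel query with a $namespace placeholder, as you might type it in Grafana.
panel_query = Template('count(kube_pod_info{namespace="$namespace"})')

def render_query(namespace):
    """Substitute the selected namespace into the panel query."""
    return panel_query.substitute(namespace=namespace)

rendered = render_query("production")
print(rendered)
```

Selecting a different value in the dashboard dropdown changes only the substituted label value, so one panel definition can serve every namespace.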

Troubleshooting Common Pod Count Issues

Unexpected changes in pod count can be a sign that something is wrong in your Kubernetes cluster. Whether you notice sudden increases, decreases, or fluctuations in the number of running pods, there are a few key steps to take when troubleshooting these issues. Here’s how you can address common problems effectively:

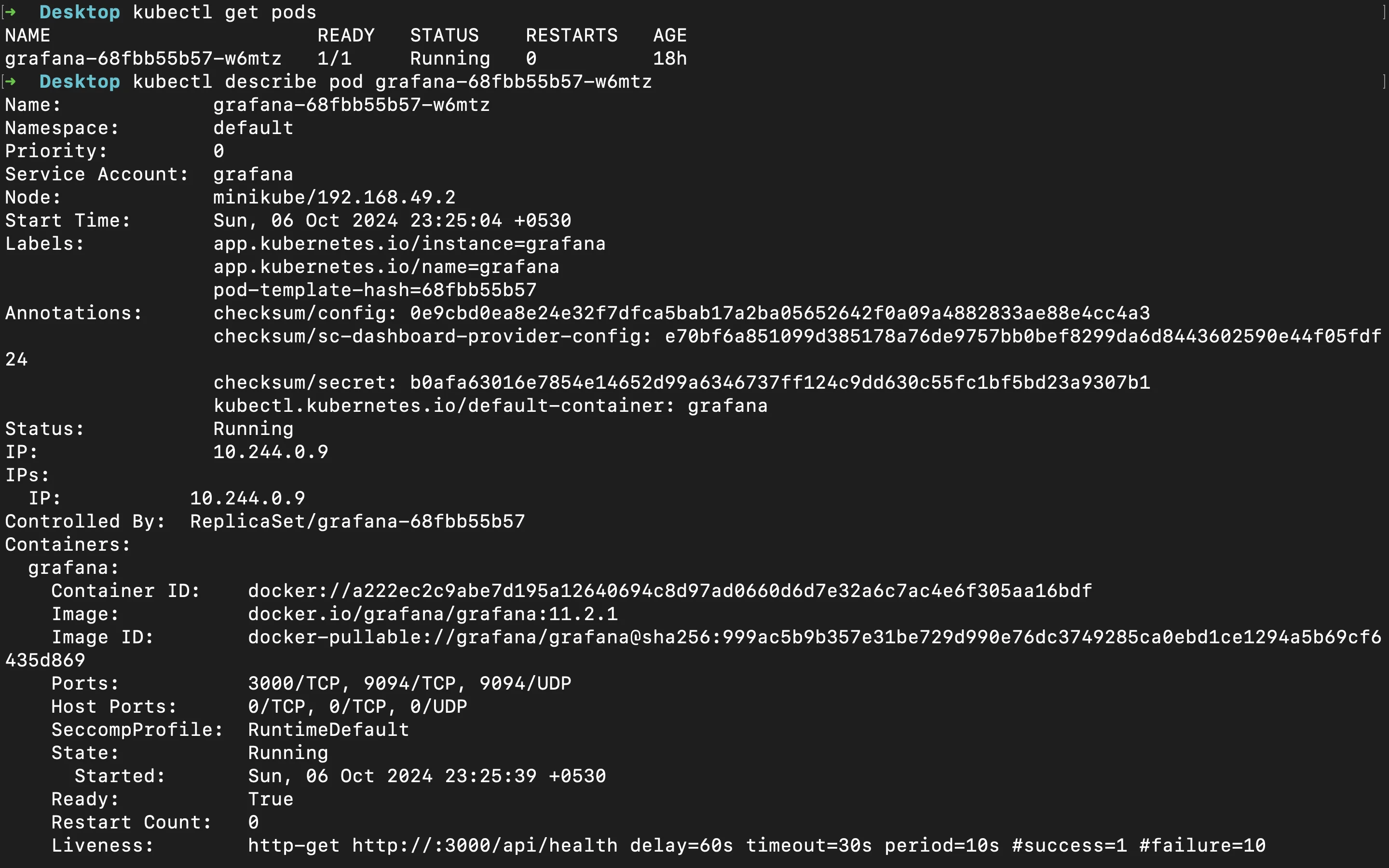

- Check for Failed Schedules

Sometimes, pods fail to be scheduled on nodes due to various reasons, such as resource constraints or node issues. To identify scheduling problems:

Use the following command to get detailed information about the pod:

kubectl describe pod <pod-name>

Look for messages in the Events section of the output, which may indicate why the pod couldn’t be scheduled (e.g., insufficient CPU, memory, or unavailable nodes).

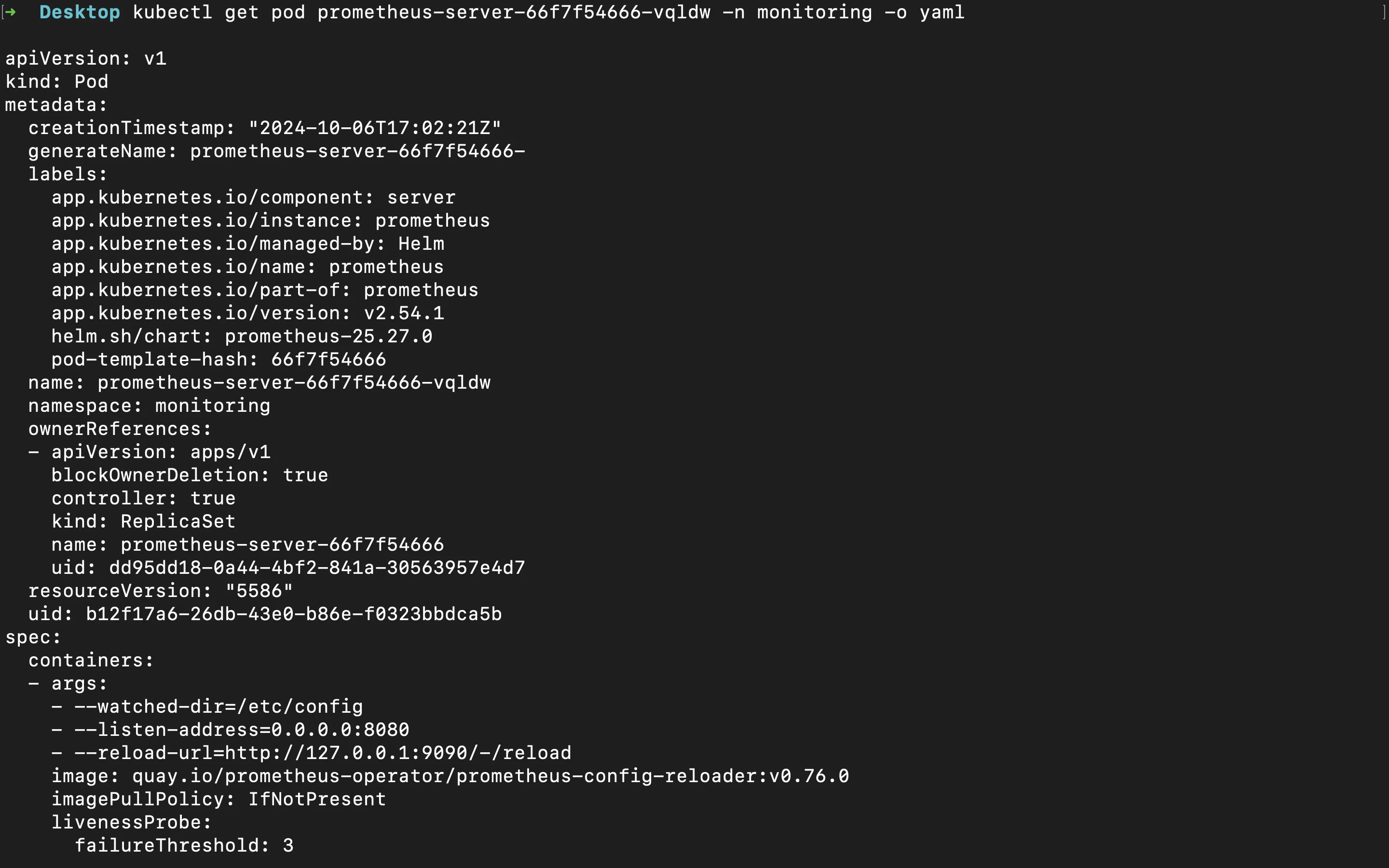

Kubernetes pod description
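When many pods are affected, checking them one by one gets tedious. As a rough sketch, the Python helper below scans the JSON produced by kubectl get pods --all-namespaces -o json and lists pods whose PodScheduled condition is False. The function name and the sample data are hypothetical.

```python
import json

def unschedulable_pods(pod_list_json):
    """Return (namespace, name, message) for pods the scheduler could not place."""
    found = []
    for pod in json.loads(pod_list_json).get("items", []):
        for cond in pod.get("status", {}).get("conditions", []):
            if cond.get("type") == "PodScheduled" and cond.get("status") == "False":
                meta = pod["metadata"]
                found.append(
                    (meta.get("namespace", ""), meta["name"], cond.get("message", ""))
                )
    return found

# Hypothetical, heavily trimmed output of `kubectl get pods -A -o json`.
sample = json.dumps({
    "items": [
        {"metadata": {"namespace": "default", "name": "web-1"},
         "status": {"conditions": [{"type": "PodScheduled", "status": "True"}]}},
        {"metadata": {"namespace": "default", "name": "web-2"},
         "status": {"conditions": [{
             "type": "PodScheduled", "status": "False",
             "reason": "Unschedulable",
             "message": "0/3 nodes are available: 3 Insufficient cpu."}]}},
    ]
})

pending = unschedulable_pods(sample)
print(pending)
```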

- Review Resource Constraints

A common reason for failed pods or fluctuating pod counts is that the nodes in your cluster don’t have enough resources (CPU, memory, etc.) to handle the scheduled pods. To ensure this isn’t the issue:

Check the resource requests and limits for your pods using the command:

kubectl get pod <pod-name> -o yaml

Make sure your nodes have sufficient available resources by checking the current resource usage on each node with either of these commands:

kubectl describe nodes

kubectl top nodes

Kubernetes pod description in yaml format

If your nodes are running low on resources, you may need to either increase the number of nodes in your cluster or optimize the resource requests and limits for your pods.
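To judge whether requests are sized sensibly, it helps to add them up per pod. The sketch below assumes a pod object as returned by kubectl get pod <pod-name> -o json and sums the CPU requests of its containers. It handles only plain core values and the millicore suffix (m); the helper names and the sample pod are hypothetical.

```python
def cpu_to_cores(quantity):
    """Convert a Kubernetes CPU quantity such as "500m" or "2" to cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)

def total_cpu_requests(pod):
    """Sum the CPU requests of all containers in a pod object."""
    containers = pod.get("spec", {}).get("containers", [])
    return sum(
        cpu_to_cores(c.get("resources", {}).get("requests", {}).get("cpu", "0"))
        for c in containers
    )

# Hypothetical pod with an application container and a sidecar.
sample_pod = {
    "spec": {
        "containers": [
            {"name": "app", "resources": {"requests": {"cpu": "1"}}},
            {"name": "sidecar", "resources": {"requests": {"cpu": "250m"}}},
            {"name": "no-requests"},
        ]
    }
}

requested = total_cpu_requests(sample_pod)
print(requested)
```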

- Examine Pod Logs

If a pod is crashing or failing to start, it’s essential to look at the logs to understand what went wrong. Pod logs can provide valuable insight into why a container inside the pod is failing:

Use the following command to retrieve the logs from the pod:

kubectl logs <pod-name>

If the pod contains multiple containers, specify the container name like this:

kubectl logs <pod-name> -c <container-name>

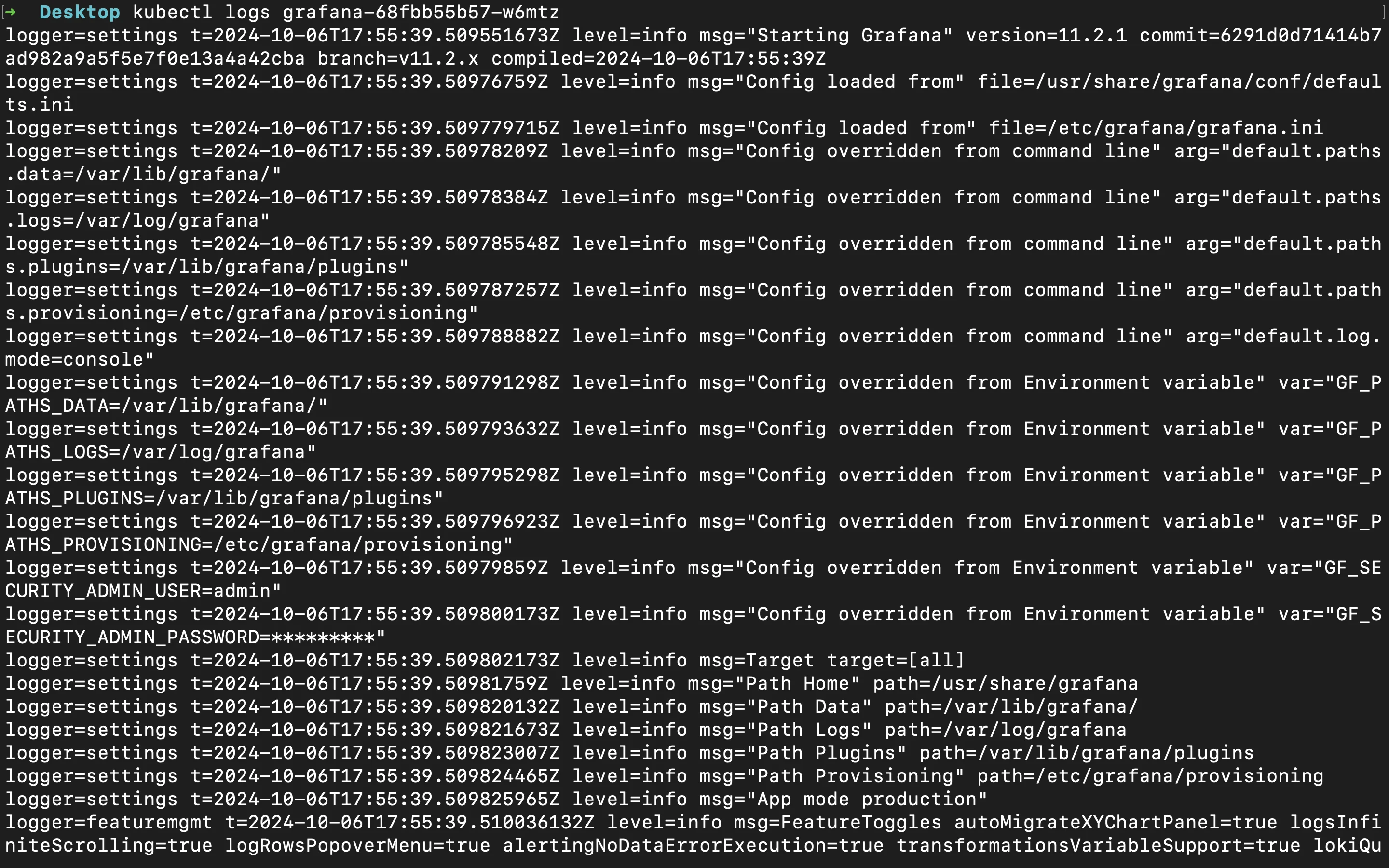

Pod logs

Look through the logs for errors or exceptions that may explain the pod’s failure. Common issues include application crashes, misconfigurations, or missing dependencies.

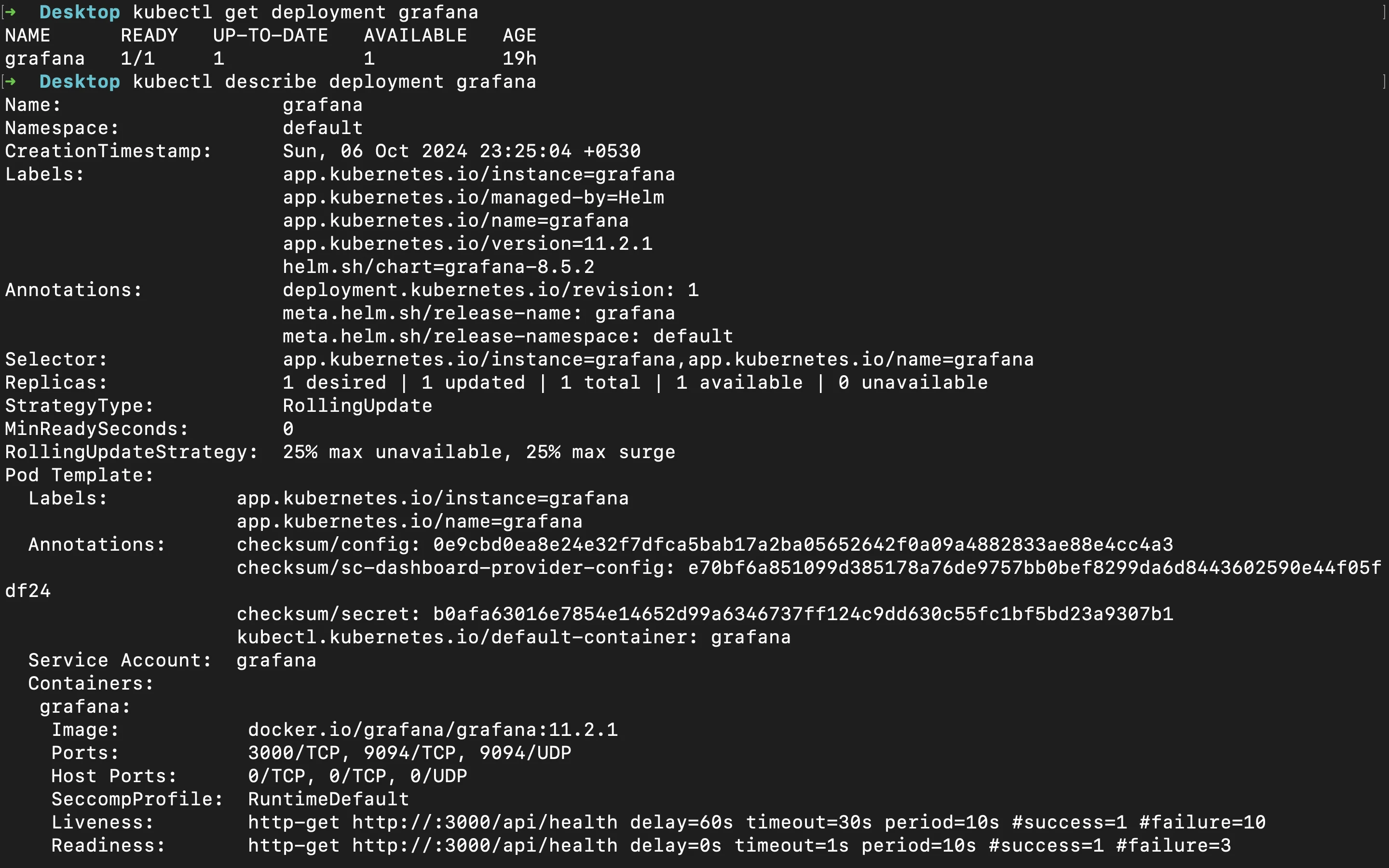

- Verify Deployments

Sometimes, recent changes in your Kubernetes Deployments can cause pod count fluctuations. This could happen due to rolling updates, misconfigured deployment settings, or incorrect scaling rules. To investigate:

Use the following command to see the current status of your deployment:

kubectl get deployment <deployment-name>

Check for any recent deployment updates or changes to scaling rules by using:

kubectl describe deployment <deployment-name>

More info about pod deployment

Rolling updates or changes in replica counts can cause a temporary increase or decrease in pod count. If the pod count changes unexpectedly after a deployment, review the deployment's update strategy and replica settings.
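One quick way to spot a deployment that is behind its target is to compare desired and ready replicas. The Python sketch below does this for the JSON structure returned by kubectl get deployments -o json, parsed into a dict. The function name and sample data are hypothetical; readyReplicas is treated as 0 when the field is absent.

```python
def replica_gaps(deployment_list):
    """Return {name: (desired, ready)} for deployments with fewer ready
    replicas than desired."""
    gaps = {}
    for dep in deployment_list.get("items", []):
        desired = dep.get("spec", {}).get("replicas", 1)
        ready = dep.get("status", {}).get("readyReplicas", 0)
        if ready < desired:
            gaps[dep["metadata"]["name"]] = (desired, ready)
    return gaps

# Hypothetical, trimmed deployment list.
sample_deployments = {
    "items": [
        {"metadata": {"name": "web"},
         "spec": {"replicas": 3}, "status": {"readyReplicas": 3}},
        {"metadata": {"name": "worker"},
         "spec": {"replicas": 5}, "status": {"readyReplicas": 2}},
        {"metadata": {"name": "cron-helper"},
         "spec": {"replicas": 1}, "status": {}},
    ]
}

gaps = replica_gaps(sample_deployments)
print(gaps)
```

A gap during a rolling update is expected and should close on its own; a gap that persists is the case worth investigating.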

Enhancing Pod Monitoring with SigNoz

To effectively monitor your Kubernetes applications, using an advanced observability platform like SigNoz can significantly enhance your monitoring capabilities. SigNoz is an open-source observability tool that provides complete end-to-end visibility across your entire application stack, ensuring that you can monitor, troubleshoot, and alert on system performance with ease.

Powered by OpenTelemetry, an industry-standard framework for collecting telemetry data (logs, metrics, and traces), SigNoz seamlessly integrates with your existing Prometheus and Grafana setup. OpenTelemetry’s robust capabilities allow you to gather and analyze crucial data from your applications and infrastructure. This ensures you have a unified observability solution that not only improves your ability to monitor pods and Kubernetes performance but also allows for detailed tracing and deep insights.

Benefits of using SigNoz for End-to-End Monitoring

By integrating SigNoz into your monitoring workflow, you unlock several significant advantages:

- Comprehensive Monitoring: Gain a complete view of your Kubernetes pods, with metrics, traces, and logs in one place.

- Proactive Problem Resolution: Real-time alerts and detailed traces allow your team to catch and fix pod-related issues before they impact users.

- Data-Driven Optimizations: Analyze how your system handles scaling and resource usage during traffic spikes and optimize your Kubernetes pod performance with advanced querying capabilities.

To get started with enhancing your pod monitoring with SigNoz, follow the steps in this section.

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

Key Takeaways

- Pod count monitoring is vital for ensuring the overall health and efficiency of your Kubernetes cluster. It helps you track the number of running application instances and optimize resource usage.

- Prometheus provides a robust way to analyze pod metrics through PromQL queries, enabling you to gain insights into your system's behavior and make data-driven decisions.

- Grafana enhances monitoring by visualizing pod count data, allowing you to easily spot trends, issues, and performance bottlenecks through interactive dashboards.

- Regular review and troubleshooting of pod count fluctuations are crucial for maintaining a stable and healthy cluster. Unexpected changes can indicate resource constraints or deployment issues.

- Tools like SigNoz can complement Prometheus, offering additional capabilities for deeper Kubernetes monitoring and more detailed performance analysis.

FAQs

What is the difference between pod count and container count?

A pod can contain one or more containers. The pod count refers to the number of application instances running in your Kubernetes cluster, whereas the container count provides a more detailed view of the individual processes inside those pods.

How often should I monitor pod count in a production environment?

In most production environments, monitoring pod count every 15 to 30 seconds is adequate. This frequency provides a good balance between real-time insights and system performance. For highly dynamic workloads—such as those experiencing frequent scaling events or during critical operations like deployments—you may want to monitor more frequently for better visibility.

Can I use Prometheus to monitor pods across multiple Kubernetes clusters?

Yes, Prometheus can monitor pods across multiple Kubernetes clusters. You’ll need to configure Prometheus to scrape metrics from each cluster. This can be done by setting up additional Prometheus instances or using Federation to aggregate data.

What are some common causes of unexpected pod count fluctuations?

Several factors can cause unexpected changes in pod count, including:

- Automated scaling events: Kubernetes might scale pods up or down based on resource usage or custom metrics (like CPU or memory consumption).

- Rolling updates: During deployment updates, old pods are terminated, and new ones are started, leading to temporary fluctuations.

- Node failures or network issues: If a node fails or faces network issues, the pods running on it may be rescheduled elsewhere, causing changes in pod count.

- Resource constraints: If there are insufficient resources (like CPU or memory), Kubernetes might leave new pods unscheduled in the Pending phase or evict existing pods from nodes that are under resource pressure.

- Misconfigured liveness or readiness probes: These probes monitor container health. Failing liveness probes cause Kubernetes to restart the affected containers, and failing readiness probes mark pods as not ready, which shows up as fluctuations in the number of ready pods.

By keeping an eye on pod count and understanding these common causes, you can ensure better stability and performance in your Kubernetes environment.