Kubernetes Operators - How to Build Your First One

A Kubernetes Operator is a software extension to the Kubernetes platform. It allows you to manage stateful or complex applications (like databases or message queues) in a way that's more automated and reliable than manual scripting or basic deployments.

Kubernetes doesn't inherently know how to handle the specific needs of stateful apps like databases. For instance, if a database pod crashes, simply restarting it might not be enough; you might need to recover data from a backup or reconfigure the cluster leader. An Operator handles these application-specific steps automatically. It captures the "operational knowledge" of a human administrator, such as how to handle failures, rolling updates, or compliance checks, and encodes it into reusable code.

In this guide, we will take a deep dive into Kubernetes Operators (k8s Operators).

What is a Kubernetes Operator?

A Kubernetes Operator is a method of packaging, deploying, managing and scaling complex applications, particularly stateful ones, by leveraging its two primary parts: a Custom Resource Definition (CRD) and a Custom Controller.

It follows the "operator pattern", which combines API specifications (defining the desired state) with automation logic (the logic to achieve that desired state), allowing operators to handle tasks that go beyond basic Kubernetes primitives like Deployments or StatefulSets. The Operator understands the nuances of the software it manages. For instance, a PostgreSQL Operator knows that before upgrading a database, it should trigger a snapshot and ensure the secondary nodes are ready before touching the primary.
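The PostgreSQL example can be made concrete with a small, self-contained Go sketch. Everything in it is hypothetical: the `Node` type, the `planUpgrade` function, and the choice to upgrade secondaries before the primary are illustrative assumptions, not the behavior of any particular PostgreSQL Operator. The point is that the ordering rules live in code rather than in a runbook.

```go
package main

import (
	"errors"
	"fmt"
)

// Node is a hypothetical, simplified view of one PostgreSQL instance.
type Node struct {
	Name    string
	Primary bool
	Ready   bool
	Version string
}

// planUpgrade returns the ordered steps a hypothetical PostgreSQL operator
// might follow: take a snapshot, refuse to continue unless every secondary
// is ready, upgrade the secondaries, and touch the primary last.
func planUpgrade(nodes []Node, target string) ([]string, error) {
	primary := -1
	for i, n := range nodes {
		if n.Primary {
			primary = i
		} else if !n.Ready {
			return nil, fmt.Errorf("secondary %s is not ready; not upgrading", n.Name)
		}
	}
	if primary < 0 {
		return nil, errors.New("no primary found")
	}

	steps := []string{"snapshot"}
	for _, n := range nodes {
		if !n.Primary && n.Version != target {
			steps = append(steps, "upgrade "+n.Name)
		}
	}
	if nodes[primary].Version != target {
		steps = append(steps, "upgrade "+nodes[primary].Name)
	}
	return steps, nil
}

func main() {
	nodes := []Node{
		{Name: "pg-0", Primary: true, Ready: true, Version: "15"},
		{Name: "pg-1", Ready: true, Version: "15"},
		{Name: "pg-2", Ready: true, Version: "15"},
	}
	steps, err := planUpgrade(nodes, "16")
	if err != nil {
		fmt.Println("upgrade blocked:", err)
		return
	}
	fmt.Println(steps) // [snapshot upgrade pg-1 upgrade pg-2 upgrade pg-0]
}
```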

How does a Kubernetes Operator work?

The Operator runs on Kubernetes, extending its capabilities to manage complex, stateful workloads (like databases or message queues) in a declarative way, without modifying Kubernetes’s core code.

It consists of three core components: the API, the Controller, and the necessary Kubernetes RBAC (Role-Based Access Control) resources.

API (Application Programming Interface)

This component defines the desired configuration and state of the application, which the operator manages. It consists of the following parts:

Custom Resource Definition (CRD): This is a custom blueprint/template you add to the Kubernetes API. It allows you to define a new object type.

Custom Resource (CR): This is the actual instance you create (e.g., "my-prod-db"). It contains the Desired State, i.e., how many nodes you want, what version to use, and backup schedules.

How does it work?

- You define a CRD in a YAML manifest and apply it to the cluster (e.g., via `kubectl apply`).
- This registers a new API endpoint, such as `/apis/myapp.example.com/v1/namespaces/{namespace}/databases`.
- Users then create instances of this custom resource (e.g., a `Database` object) specifying configuration such as replica count, storage size, or backup schedules.
- Example YAML snippet (for a hypothetical Database CRD):

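```yaml
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: databases.myapp.example.com   # must be <plural>.<group>
spec:
  group: myapp.example.com
  scope: Namespaced                  # required: Namespaced or Cluster
  names:                             # required: how the new type is named
    kind: Database
    plural: databases
    singular: database
  versions:
    - name: v1
      served: true
      storage: true
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                replicas: { type: integer }
                storageSize: { type: string }
```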

- Once the above is applied, you can create a custom resource like:

```yaml
apiVersion: myapp.example.com/v1
kind: Database
metadata:
  name: my-db
spec:
  replicas: 3
  storageSize: 10Gi
```
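The "new API endpoint" follows a fixed URL layout derived from the CRD's group, version, scope and plural name. The sketch below only builds paths under that layout. The `resourcePath` helper is hypothetical and does not call the API server.

```go
package main

import "fmt"

// resourcePath builds the REST path the API server serves for a resource once
// its CRD is registered. Namespaced resources live under
// /namespaces/{namespace}/; cluster-scoped ones (namespace == "") do not.
func resourcePath(group, version, namespace, plural, name string) string {
	p := "/apis/" + group + "/" + version
	if namespace != "" {
		p += "/namespaces/" + namespace
	}
	p += "/" + plural
	if name != "" {
		p += "/" + name
	}
	return p
}

func main() {
	// The collection of Database objects in the "default" namespace.
	fmt.Println(resourcePath("myapp.example.com", "v1", "default", "databases", ""))
	// A single object: the my-db custom resource shown above.
	fmt.Println(resourcePath("myapp.example.com", "v1", "default", "databases", "my-db"))
}
```

Once the CRD is installed, you can request such a path directly with `kubectl get --raw /apis/myapp.example.com/v1/namespaces/default/databases` to see the raw JSON the API server returns.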

Controller

The controller is the brain of the Operator. It is a custom application (often written in Go, using the controller-runtime library) that watches for changes to your custom resources and ensures the cluster's actual state matches the desired state.

How does it work? (the reconciliation pattern)

- The Operator usually runs as a Deployment in the cluster (less commonly, a DaemonSet). It uses client-go informers, typically wrapped by controller-runtime, to watch the API server for events on your custom resources (e.g., creation, updates, deletions).

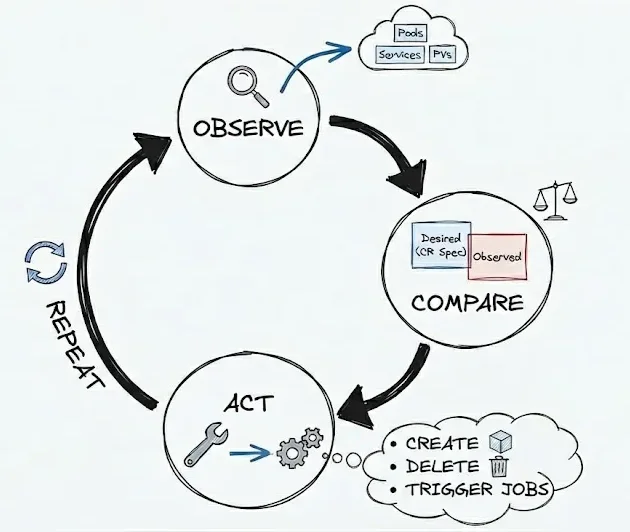

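- It implements a reconciliation loop: a control loop that runs mainly in reaction to events on watched objects. It can also be re-triggered after a delay (for example, by returning `ctrl.Result{RequeueAfter: 10 * time.Second}`) or by periodic resyncs. Each pass follows these steps: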

- Observe: Fetch the current state of the world (e.g., query pods, services, or PVs related to the custom resource).

- Compare: Check the observed state against the desired state from the CR spec (e.g., "We want 3 replicas, but only 2 pods are running").

- Act: Emit imperative commands to Kubernetes (via the API) to converge the states (e.g., create a new pod, scale a StatefulSet, or trigger a backup job). If something fails, it retries or logs errors.

- Repeat: Go back to step 1. This makes the system resilient to failures.
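The loop above can be sketched in a few lines of Go. This is an in-memory simulation, not controller-runtime code: the `Cluster` and `Desired` types and the `reconcile` function are hypothetical stand-ins for the API server and a custom resource's spec. It shows the two properties that matter. First, each pass observes, compares and acts. Second, running it again once the states match makes no changes, which is what lets it run safely on every event.

```go
package main

import "fmt"

// Desired mirrors the spec of a custom resource (hypothetical).
type Desired struct{ Replicas int }

// Cluster is an in-memory stand-in for the API server: it only tracks pod names.
type Cluster struct {
	Pods   []string
	nextID int
}

// reconcile performs one observe -> compare -> act pass and returns how many
// changes it made. Once converged it makes none (it is idempotent).
func reconcile(c *Cluster, want Desired) int {
	observed := len(c.Pods)          // Observe
	diff := want.Replicas - observed // Compare
	changes := 0
	for ; diff > 0; diff-- { // Act: scale up
		c.Pods = append(c.Pods, fmt.Sprintf("pod-%d", c.nextID))
		c.nextID++
		changes++
	}
	for ; diff < 0; diff++ { // Act: scale down by dropping the newest pod
		c.Pods = c.Pods[:len(c.Pods)-1]
		changes++
	}
	return changes
}

func main() {
	c := &Cluster{}
	want := Desired{Replicas: 3}

	n := reconcile(c, want)
	fmt.Println("pass 1 changes:", n, c.Pods) // creates pod-0, pod-1, pod-2

	n = reconcile(c, want)
	fmt.Println("pass 2 changes:", n) // already converged: nothing to do

	c.Pods = c.Pods[1:] // simulate a crashed pod (drift)
	n = reconcile(c, want)
	fmt.Println("pass 3 changes:", n, c.Pods) // the next pass repairs it
}
```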

RBAC (Role-Based Access Control) Resources

RBAC defines the security boundary of an operator, controlling exactly which Kubernetes APIs its reconciliation loop is allowed to call.

How does it work?

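- Every operator action (GET, LIST, WATCH, CREATE, UPDATE, DELETE) is sent to the Kubernetes API server as a request made by the operator's identity, usually its ServiceAccount.

- The API server checks that request against the RBAC rules (Roles or ClusterRoles, attached through bindings) granted to that identity.

- The action is executed only if a rule explicitly permits it; anything not granted is denied.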
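The evaluation described above can be sketched as a small default-deny matcher. This is a simplified model written for illustration: the `Rule` type and `allowed` function are hypothetical. The real authorizer also handles resource names, subresources, non-resource URLs, and the bindings that attach rules to the operator's ServiceAccount.

```go
package main

import "fmt"

// Rule is a simplified RBAC PolicyRule: which verbs are allowed on which
// resources in which API groups. "*" acts as a wildcard; "" is the core group.
type Rule struct {
	APIGroups []string
	Resources []string
	Verbs     []string
}

// matches reports whether v appears in list or list contains the wildcard.
func matches(list []string, v string) bool {
	for _, item := range list {
		if item == "*" || item == v {
			return true
		}
	}
	return false
}

// allowed reports whether any rule grants the request. RBAC rules are purely
// additive and deny-by-default: with no matching rule, the answer is no.
func allowed(rules []Rule, group, resource, verb string) bool {
	for _, r := range rules {
		if matches(r.APIGroups, group) && matches(r.Resources, resource) && matches(r.Verbs, verb) {
			return true
		}
	}
	return false
}

func main() {
	rules := []Rule{
		{APIGroups: []string{"myapp.example.com"}, Resources: []string{"databases"},
			Verbs: []string{"get", "list", "watch", "update", "patch"}},
		{APIGroups: []string{"apps"}, Resources: []string{"deployments"},
			Verbs: []string{"get", "list", "watch", "create", "update", "patch", "delete"}},
	}
	fmt.Println(allowed(rules, "apps", "deployments", "create")) // true: second rule
	fmt.Println(allowed(rules, "", "secrets", "get"))            // false: no rule
}
```

In a Kubebuilder project, these rules are usually declared as marker comments above `Reconcile`, for example `// +kubebuilder:rbac:groups=apps,resources=deployments,verbs=get;list;watch;create;update;patch;delete`. Running `make manifests` then regenerates the ClusterRole in `config/rbac/role.yaml`.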

Popular Examples of Kubernetes Operators

The ecosystem has matured significantly since CoreOS introduced the Operator pattern in 2016. OperatorHub.io lists hundreds of community operators, and Red Hat-certified and other vendor-certified operators are available through Red Hat's catalogs.

| Operator | Use Case | Key Functionality |
|---|---|---|
| Prometheus Operator | Monitoring | Automatically configures scrape targets and manages Prometheus instances. |
| Strimzi | Apache Kafka | Manages Kafka brokers, topics, and users within a cluster. |
| OpenTelemetry Operator | Telemetry Collection | Manages telemetry collection in your cluster and sends that data to an observability backend like SigNoz. |
| Cert-Manager | Security | Automates the issuance and renewal of TLS certificates. |
| PGO (Crunchy Data) | PostgreSQL | Handles high availability, S3 backups, and point-in-time recovery. |
| Istio Operator | Service Mesh | Manages the complex installation and upgrade of service mesh components. |

Kubernetes Operator vs Kubernetes Helm

A common point of confusion for DevOps engineers is whether to use Helm or an Operator. Both help you manage applications on Kubernetes, but they serve very different purposes.

Helm

It is a package manager. It is great for "Day 1" operations, such as templating your YAML files and installing an application. However, once the app is installed, Helm doesn't "watch" it. If a database node goes out of sync, Helm won't fix it.

Operator

Operators target "Day 2" operations: they manage the application's lifecycle after it is installed. An Operator can, for example, perform a rolling upgrade of a database without downtime, something that is very difficult to achieve with a pure Helm chart.

Summary Table: Operator vs Helm

| Feature | Helm (Package Manager) | Operator (Operational Brain) |
|---|---|---|
| Primary Goal | Packaging: Easily install and upgrade sets of Kubernetes resources. | Automation: Encode a human's knowledge on how to run a specific app. |
| Where it lives | Mostly on your laptop (CLI). It sends files to the cluster. | Inside your cluster as a Pod that never stops running. |
| Awareness | Knows how to template YAML files. | Knows how the application works (e.g., how to fix a broken database). |
| Scope | "Day 1": Install, Upgrade, Rollback. | "Day 2": Backups, scaling, recovery, and self-healing. |

Hands-On Demo: Build a Kubernetes Operator (Step by Step)

In this hands-on demo, we'll walk through building a simple Kubernetes Operator from scratch using Kubebuilder, one of the most popular frameworks for operator development. This example will create a Database operator that automatically manages database deployments based on custom resource specifications.

By the end of this tutorial, you'll understand how the pieces fit together: the CRD definition, the controller reconciliation loop, and how Kubernetes watches for changes to keep your application in the desired state.

Prerequisites

- Minikube: You’ll need a local cluster to deploy and test your operator. You can install it from the Official Minikube Installation Guide.
- Go (≥ 1.22): Kubebuilder is built on Go. Ensure your `$GOPATH` and `$GOBIN` are correctly set in your environment variables. You can download it from the Official Go Installation Guide.
- kubectl: The standard CLI for interacting with Kubernetes. You can download and install it from the Official K8s Installation Guide.
- Docker: Required for building your operator's container images and running your local cluster. You can download and install it from the Official Docker Installation Guide.
- Kubebuilder (latest stable release, v4.x): We’ll use it to scaffold our project and generate the API and controllers. You can install it from the Kubebuilder Book.

Create a new directory and run all of the following steps from inside it.

Step 1: Create the Operator Project

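This step scaffolds a Go project wired to controller-runtime, using Kubernetes-approved defaults. It includes the manager entry point (`cmd/main.go`), a Makefile, and base RBAC and Kustomize manifests under `config/`. At this point you have the skeleton the Operator runs in, but no API or controller yet.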

```bash
kubebuilder init \
  --domain example.com \
  --repo github.com/example/database-operator
```

Step 2: Create the API (Custom Resource Type and Controller)

This step defines a new Kubernetes API that your Operator will manage.

```bash
kubebuilder create api \
  --group myapp \
  --version v1 \
  --kind Database
```

When prompted, choose:

✔ Create Resource? yes

✔ Create Controller? yes

Step 3: Define the Database Spec

This is where you define what users can declare in YAML. In `api/v1/database_types.go`, replace the `DatabaseSpec` struct with the following code:

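```go
// DatabaseSpec defines the desired state of a Database.
type DatabaseSpec struct {
	// Replicas is the number of database Pods to run.
	Replicas int32 `json:"replicas"`
	// Image is the container image to run, e.g. postgres:15.
	Image string `json:"image"`
}
```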

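Generate code and manifests:

```bash
make generate   # regenerates DeepCopy methods (api/v1/zz_generated.deepcopy.go)
make manifests  # regenerates the CRD and RBAC YAML under config/
```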
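The `json` tags are what connect the YAML a user writes under `spec:` to the Go fields your controller reads. A minimal stdlib-only sketch, assuming JSON input (YAML manifests are converted to JSON on their way to the API server), decodes a spec the same way in spirit. `decodeSpec` is a hypothetical helper. The controller-runtime client uses its own serializer, which honors the same tags.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// DatabaseSpec copies the struct from api/v1/database_types.go.
type DatabaseSpec struct {
	Replicas int32  `json:"replicas"`
	Image    string `json:"image"`
}

// decodeSpec turns the JSON form of a Database's spec into the Go struct,
// matching keys to fields through the json tags.
func decodeSpec(raw []byte) (DatabaseSpec, error) {
	var spec DatabaseSpec
	err := json.Unmarshal(raw, &spec)
	return spec, err
}

func main() {
	// Equivalent to "spec: {replicas: 2, image: postgres:15}" in a manifest.
	spec, err := decodeSpec([]byte(`{"replicas": 2, "image": "postgres:15"}`))
	if err != nil {
		panic(err)
	}
	fmt.Printf("%+v\n", spec) // {Replicas:2 Image:postgres:15}
}
```

By default, controller-gen treats fields without `omitempty` (or an `// +optional` marker) as required. As a result, the CRD produced by `make manifests` should list both `replicas` and `image` as required.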

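Step 4: Implement the Reconciliation Logic

This is the core of the Operator: here you encode operational knowledge into code. Open `internal/controller/database_controller.go`, which Kubebuilder generated in Step 2.

Ensure your controller has these imports (keep the existing ones and add any that are missing). The scaffolded file may import the controller-runtime `log` package. If your new `Reconcile` no longer uses it, remove that import, because Go refuses to compile unused imports.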

```go
import (
	"context"

	appsv1 "k8s.io/api/apps/v1"
	corev1 "k8s.io/api/core/v1"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/apimachinery/pkg/runtime"
	ctrl "sigs.k8s.io/controller-runtime"
	"sigs.k8s.io/controller-runtime/pkg/client"

	myappv1 "github.com/example/database-operator/api/v1"
)
```

Now replace the scaffolded `Reconcile` function:

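```go
// Reconcile makes sure a Deployment exists for every Database object.
func (r *DatabaseReconciler) Reconcile(
	ctx context.Context,
	req ctrl.Request,
) (ctrl.Result, error) {
	// Fetch the Database that triggered this reconcile. If it has been deleted,
	// there is nothing to do: its Deployment is garbage-collected through the
	// owner reference set below.
	var db myappv1.Database
	if err := r.Get(ctx, req.NamespacedName, &db); err != nil {
		return ctrl.Result{}, client.IgnoreNotFound(err)
	}

	// Build the desired Deployment from the Database spec.
	deploy := &appsv1.Deployment{
		ObjectMeta: metav1.ObjectMeta{
			Name:      db.Name,
			Namespace: db.Namespace,
		},
		Spec: appsv1.DeploymentSpec{
			Replicas: &db.Spec.Replicas,
			Selector: &metav1.LabelSelector{
				MatchLabels: map[string]string{"app": db.Name},
			},
			Template: corev1.PodTemplateSpec{
				ObjectMeta: metav1.ObjectMeta{
					Labels: map[string]string{"app": db.Name},
				},
				Spec: corev1.PodSpec{
					Containers: []corev1.Container{
						{
							Name:  "database",
							Image: db.Spec.Image,
							Env: []corev1.EnvVar{
								{
									// Demo only: a hard-coded password. Use a Secret in real deployments.
									Name:  "POSTGRES_PASSWORD",
									Value: "examplepassword",
								},
							},
						},
					},
				},
			},
		},
	}

	// Make the Database the controlling owner of the Deployment. This lets
	// Owns() route Deployment events back here and enables garbage collection.
	if err := ctrl.SetControllerReference(&db, deploy, r.Scheme); err != nil {
		return ctrl.Result{}, err
	}

	// Create the Deployment if it is missing. An existing Deployment is left
	// untouched (AlreadyExists is ignored).
	if err := r.Create(ctx, deploy); err != nil {
		return ctrl.Result{}, client.IgnoreAlreadyExists(err)
	}

	return ctrl.Result{}, nil
}
```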

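What this function does: it reads the `Database` that triggered the event, builds the Deployment that should exist for it, and creates that Deployment if it is missing. Because it runs again on every relevant event, a missing Deployment is recreated each time. Note that it does not compare an existing Deployment with the spec. If the Deployment already exists, `Create` returns an AlreadyExists error, which is ignored, so later edits to `spec.replicas` or `spec.image` are not applied.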
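Closing that gap means moving from "create if missing" to "create or update". controller-runtime provides `controllerutil.CreateOrUpdate` (in `sigs.k8s.io/controller-runtime/pkg/controller/controllerutil`) for this against the real API server. The sketch below only illustrates the idea in memory. The `Deployment` type, the map-based store and `createOrUpdate` are hypothetical simplifications, and the returned strings mirror the kind of result that helper reports.

```go
package main

import "fmt"

// Deployment is a tiny in-memory stand-in for an apps/v1 Deployment.
type Deployment struct {
	Name     string
	Image    string
	Replicas int32
}

// createOrUpdate creates the object if it is missing; otherwise it overwrites
// only the fields the operator owns and reports whether anything changed.
// The store map stands in for the API server.
func createOrUpdate(store map[string]*Deployment, want Deployment) string {
	got, ok := store[want.Name]
	if !ok {
		d := want
		store[want.Name] = &d
		return "created"
	}
	if got.Image == want.Image && got.Replicas == want.Replicas {
		return "unchanged" // no write: avoids needless API calls and update events
	}
	got.Image, got.Replicas = want.Image, want.Replicas
	return "updated"
}

func main() {
	store := map[string]*Deployment{}
	want := Deployment{Name: "my-db", Image: "postgres:15", Replicas: 2}
	fmt.Println(createOrUpdate(store, want)) // created
	fmt.Println(createOrUpdate(store, want)) // unchanged

	want.Replicas = 3 // the user edits spec.replicas on the Database
	fmt.Println(createOrUpdate(store, want)) // updated
}
```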

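Replace the `SetupWithManager` function with the code below. `For` makes the controller reconcile whenever a `Database` changes. `Owns` also triggers a reconcile of the owning `Database` whenever a Deployment it controls changes or is deleted.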

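```go
// SetupWithManager registers the controller: reconcile on Database events and
// on events for Deployments whose controlling owner is a Database.
func (r *DatabaseReconciler) SetupWithManager(mgr ctrl.Manager) error {
	return ctrl.NewControllerManagedBy(mgr).
		For(&myappv1.Database{}).
		Owns(&appsv1.Deployment{}).
		Complete(r)
}
```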
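The routing that `Owns` sets up can be pictured as a mapping from an event on a Deployment to a reconcile request for its controlling owner. The following is a simplified, hypothetical model of that mapping: the `OwnerRef` and `Event` types and the `ownerRequest` function are illustrative, not controller-runtime's API. It only covers the owner-matching part.

```go
package main

import "fmt"

// OwnerRef is a trimmed-down metadata.ownerReferences entry.
type OwnerRef struct {
	Kind       string
	Name       string
	Controller bool // set to true by SetControllerReference
}

// Event is a hypothetical watch event on a Deployment.
type Event struct {
	Type      string // "ADDED", "MODIFIED" or "DELETED"
	Namespace string
	Name      string
	Owners    []OwnerRef
}

// ownerRequest maps an event on an owned object to a "namespace/name" reconcile
// request for the Database that controls it. Objects without a controlling
// Database owner are ignored.
func ownerRequest(ev Event) (string, bool) {
	for _, o := range ev.Owners {
		if o.Controller && o.Kind == "Database" {
			return ev.Namespace + "/" + o.Name, true
		}
	}
	return "", false
}

func main() {
	ev := Event{
		Type: "DELETED", Namespace: "default", Name: "my-db",
		Owners: []OwnerRef{{Kind: "Database", Name: "my-db", Controller: true}},
	}
	if req, ok := ownerRequest(ev); ok {
		fmt.Println("enqueue reconcile for", req) // enqueue reconcile for default/my-db
	}
}
```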

Step 5: Start Your Kubernetes Cluster

This step ensures you have a local cluster to run against. Make sure Docker is up before you start the local cluster.

```bash
minikube start
```

Step 6: Install the CRDs into the Cluster

This registers your custom API with Kubernetes.

```bash
make install
```

Verify:

```bash
kubectl get crds | grep databases
# Output:
# databases.myapp.example.com   2025-12-26T12:03:25Z
```

Step 7: Run the Operator Locally

This runs the controller against your cluster. Leave this terminal running.

```bash
make run
```

Step 8: Create a Database Custom Resource

Create a `database.yaml` file with the following content:

```yaml
apiVersion: myapp.example.com/v1
kind: Database
metadata:
  name: my-db
spec:
  replicas: 2
  image: postgres:15
```

Apply it:

```bash
kubectl apply -f database.yaml
```

Step 9: Observe the Operator in Action

This confirms the controller reconciled the resource.

```bash
kubectl get databases
kubectl get deployments
kubectl get pods
```

Step 10: Test your Operator by Breaking the System

This step demonstrates that the Operator reconciles intent, not just Pods.

Step 10.1: Verify the Database intent still exists

First, confirm that the Database custom resource is still present.

```bash
kubectl get database my-db
```

This resource represents the desired system intent.

Step 10.2: Observe Deployments

```bash
kubectl get deployments -w
```

This watches Deployments so you can see what happens when one is deleted. Leave this command running.

Step 10.3: Manually delete the Deployment (simulate drift)

Now, in another terminal, intentionally break the system by deleting the Deployment created by the Operator.

```bash
kubectl delete deployment my-db
```

Step 10.4: Explanation

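A deleted Deployment cannot recreate itself, yet the watch shows `my-db` again almost immediately. How?

Your Operator detected the drift and restored the Deployment. The Deployment carries a controller owner reference to the `my-db` Database, and the controller is registered with `Owns(&appsv1.Deployment{})`. As a result, the delete event triggered a reconcile of `my-db`. Reality no longer matched the declared intent, so `Reconcile` created a new Deployment.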

```bash
kubectl get deployments -w

# Output
# NAME    READY   UP-TO-DATE   AVAILABLE   AGE
# This is the original Deployment running normally.
# my-db   2/2     2            2           80s
# my-db   2/2     2            2           87s
# The Deployment was deleted and a new Deployment object was created.
# Its AGE reset to 0 seconds.
# my-db   0/2     0            0           0s
# my-db   0/2     0            0           0s
# my-db   0/2     0            0           0s
# my-db   0/2     0            0           0s
# Pods being created, ready & replica count stabilizing
# my-db   0/2     2            0           0s
# my-db   1/2     2            1           1s
# my-db   2/2     2            2           1s
```

Step 11: Clean Up

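You can stop the Operator and remove the custom resource, the managed Deployment, and the CRD with the following steps.

- Stop the Operator: press Ctrl+C in the terminal running `make run` (and in the terminal running the watch from Step 10.2).

- Delete the Database custom resource:

```bash
kubectl delete database my-db
```

- Verify the Deployment is gone. The Deployment carries an owner reference to `my-db`, so the Kubernetes garbage collector removes it even though the Operator is stopped:

```bash
kubectl get deployments
```

- Remove the CRD (optional):

```bash
make uninstall
```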

Congratulations! You just built your own Database Operator, learned how it works, and managed its lifecycle.

Monitor your Operator with OpenTelemetry

Now that your Database Operator is reconciling resources, the next step is to observe it:

- Instrument the operator code with OpenTelemetry (Go SDK) to emit traces/metrics around each reconciliation.

- Deploy the OpenTelemetry Operator to manage collectors and auto-instrumentation in your cluster.

- Configure the collector to send telemetry to SigNoz, so that you get dashboards for Kubernetes infra metrics, Traces, Logs and events for failed reconciliations.
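Before wiring in the OpenTelemetry SDK, it helps to see what "instrumenting each reconciliation" means in practice: wrap the reconcile call, time it, and count outcomes. The sketch below is stdlib-only and hypothetical: `ReconcileStats` and `instrument` stand in for the counters, histograms and spans you would record with the OpenTelemetry Go SDK. controller-runtime also already exports reconcile metrics, such as `controller_runtime_reconcile_total` and `controller_runtime_reconcile_errors_total`, on the manager's metrics endpoint.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// ReconcileStats is a stand-in for the metrics an OpenTelemetry meter would record.
type ReconcileStats struct {
	Total    int
	Errors   int
	Duration time.Duration // cumulative time spent reconciling
}

// instrument wraps a reconcile function so every call is timed and counted.
// Errors are passed through unchanged so the caller can still retry.
func instrument(stats *ReconcileStats, fn func(name string) error) func(string) error {
	return func(name string) error {
		start := time.Now()
		err := fn(name)
		stats.Total++
		stats.Duration += time.Since(start)
		if err != nil {
			stats.Errors++
		}
		return err
	}
}

var errSimulated = errors.New("simulated reconcile failure")

func main() {
	stats := &ReconcileStats{}
	reconcile := instrument(stats, func(name string) error {
		if name == "broken-db" {
			return errSimulated // pretend this Database fails to reconcile
		}
		return nil
	})
	for _, name := range []string{"my-db", "broken-db", "my-db"} {
		_ = reconcile(name)
	}
	fmt.Printf("reconciles=%d errors=%d time=%v\n", stats.Total, stats.Errors, stats.Duration)
}
```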

If you want a complete walkthrough, check out A full Kubernetes observability setup with OpenTelemetry and SigNoz guide.

Best Practices for Designing and Deploying Kubernetes Operators

Designing a production-ready Kubernetes Operator requires applying proven best practices to ensure reliability at scale.

Design for Single Responsibility: Manage one application or service per operator to keep logic focused and testing simple; avoid "mega-operators" that handle unrelated components such as MongoDB and Redis.

Use Declarative APIs in CRDs: Define the desired state (e.g., replicas: 3) in specs, not imperative actions, to enable GitOps and let the operator handle implementation details.

Keep Reconcile Loops Idempotent and Efficient: Ensure repeated reconciliations converge without side effects or thrashing; check current vs. desired state before changes and use backoff for retries.

Leverage Operator Frameworks such as Operator SDK or Kubebuilder: Use them for boilerplate (e.g., RBAC, metrics) so you can focus on business logic, keep projects consistent, and make onboarding easier.

Implement Observability and Thorough Testing: Expose metrics, structured logs, and Kubernetes events; unit/integration test with tools like envtest and scorecard for production confidence.
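"Use backoff for retries" usually means exponential backoff per failing object. In controller-runtime, returning an error from `Reconcile` already requeues the object through a rate-limited queue, so you rarely write this yourself. The sketch below shows the shape of the calculation under that assumption. The `backoff` function is hypothetical. The 5 ms base and 1000 s cap in `main` are illustrative values chosen to resemble controller-runtime's default per-item limiter.

```go
package main

import (
	"fmt"
	"time"
)

// backoff returns how long to wait before retrying an item that has already
// failed `failures` times: base * 2^failures, capped at maxDelay. Doubling
// stops once the cap is reached, so large failure counts cannot overflow.
func backoff(failures int, base, maxDelay time.Duration) time.Duration {
	d := base
	for i := 0; i < failures && d < maxDelay; i++ {
		d *= 2
	}
	if d > maxDelay {
		d = maxDelay
	}
	return d
}

func main() {
	base, maxDelay := 5*time.Millisecond, 1000*time.Second
	for _, failures := range []int{0, 1, 2, 10, 30} {
		fmt.Printf("after %d failures: wait %v\n", failures, backoff(failures, base, maxDelay))
	}
}
```

Capping the delay keeps a permanently broken resource from being retried in a tight loop, while still retrying it eventually once the underlying problem is fixed.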

Common Pitfalls and Trade-offs

While Operators provide immense power, they come with challenges that are often discussed in community circles like Reddit.

Permission Sprawl: Many Operators require cluster-admin or broad cluster-scoped permissions to function, as they need to manage CRDs, Namespaces, and RBAC across the cluster. In highly restricted multi-tenant environments, this can be a security concern.

Complexity and Operator Sprawl: Writing and maintaining an Operator are software engineering tasks. If you have ten different Operators from ten different vendors, you now have ten extra services running in your cluster, each consuming memory and CPU. Debugging an Operator stuck in a failed reconciliation loop can be more difficult than debugging a simple Pod.

Opaque Logic: When an Operator manages an application, it abstracts the complexity. This is a benefit until something goes wrong. If the Operator logic is not well-documented, it can feel like a black box is making changes to your infrastructure that you don't fully understand.

Real-World Use Cases and Examples of Operators in Action

While the Operator pattern is powerful in theory, its true value lies in automating "Day 2" operations (such as upgrades, backups, and scaling). These examples show how industry-standard tools use Operators to manage complex infrastructure at scale.

OpenShift Container Platform (Red Hat): OpenShift, Red Hat's enterprise Kubernetes distribution, relies on Operators for nearly every core component, including the ingress controller, logging stack, and etcd database. Cluster Operators bootstrap services during installation and orchestrate rolling updates, ensuring high availability and reliability for the infrastructure software running on Kubernetes. This approach has made OpenShift a cornerstone for enterprise deployments, managing thousands of clusters globally.

Elastic Cloud on Kubernetes (ECK) for Search and Analytics: Elastic's ECK Operator deploys and manages Elasticsearch, Kibana, and Beats clusters natively on Kubernetes, supporting advanced features such as frozen indices and security integrations. Used by enterprises for logging, search, and observability, it enables seamless scaling and self-healing, as seen in production setups monitoring Kubernetes infrastructure itself.

FAQ

What is the Kubernetes Operator?

An Operator is a method of packaging, deploying, and managing a Kubernetes application. It takes "human operational knowledge", like how to upgrade a database or take a backup, and encodes it into software. It uses Custom Resource Definitions (CRDs) and Custom Resources to manage your applications as if they were native Kubernetes objects (like Pods or Services).

What is the difference between a Controller and an Operator?

A controller watches the state of your cluster and makes changes to move it toward the desired state (e.g., the ReplicaSet controller ensures 3 pods are always running).

An Operator is a specific type of controller that is "application-aware." All Operators are controllers, but not all controllers are Operators. An Operator knows the app's internal logic (e.g., a MySQL Operator knows it must sync data before shutting down a node).

Why write a Kubernetes Operator?

You write an Operator when you have a complex or stateful application that requires manual intervention to run correctly. You would write one to automate:

- Self-healing: Detecting and fixing app-specific failures.

- Upgrades: Managing schema migrations or version hops without downtime.

- Scale: Automatically reconfiguring a cluster when you add more nodes.

How does a Kubernetes Operator work? (The Reconciliation Loop)

Operators work on a reconciliation loop, repeating the following steps:

Observe: It watches the Custom Resource (CR) you created.

Diff: It compares the Actual State (what is running) to the Desired State (what your YAML says).

Act: It performs the necessary actions, as encoded in its controller logic, to make the actual state match the desired state.

We hope this answered your questions about Kubernetes Operators.

You can also subscribe to our newsletter for insights from the observability nerds at SigNoz and get open source, OpenTelemetry, and devtool-building stories straight to your inbox.