Mastering Java App Monitoring in Docker - A Practical Guide

Java applications in Docker containers offer powerful benefits, but they also present unique monitoring challenges. As containerization becomes increasingly popular, understanding how to monitor and troubleshoot Java apps effectively in this environment is crucial. This guide will equip you with the knowledge and tools to master Java app monitoring in Docker, ensuring optimal performance and quick issue resolution.

Understanding Java Applications in Docker Containers

Docker containers provide a lightweight, portable environment for deploying and running Java applications. Containers are isolated environments that include everything required to run an application, such as code, libraries, and system tools. For Java applications, this means the same runtime, dependencies, and configuration travel with the app, giving a consistent and reproducible environment from development to production.

Benefits of Running Java Apps in a Docker Environment:

- Portability: Run your Java app consistently across different environments.

- Isolation: Keep your app and its dependencies separate from other systems.

- Scalability: Easily scale your application by spinning up new containers.

Key Differences Between Monitoring Traditional and Containerized Java Applications

- Lifecycle Management: Traditional apps have stable lifecycles, while containerized apps are often short-lived, requiring adaptable monitoring.

- Resource Allocation: Traditional apps use dedicated resources, but containers have strict CPU and memory limits, demanding careful monitoring.

- Deployment Environment: Traditional setups are static, whereas containerized environments are dynamic and can cause resource contention.

- Networking: Traditional networking is straightforward, but containerized setups use complex virtual networks that need specialized monitoring.

- Logging and Monitoring Tools: Traditional apps use system-level tools, while containerized apps need solutions for handling logs and metrics across multiple instances.

- Security and Isolation: Traditional apps focus on OS-level security; containers require monitoring for process-level isolation and vulnerabilities.

- Scalability: Traditional scaling is hardware-intensive, while containers can scale rapidly, necessitating dynamic monitoring tools.

Essential Tools for Monitoring Java Apps in Docker

To effectively monitor Java applications in Docker, you need a combination of container monitoring tools and Java-specific utilities. Here are some essential tools to consider:

- Container Monitoring Tools:

  - cAdvisor: Provides container-level resource usage and performance data.

  - Prometheus: A powerful metrics collection and alerting system.

  - Grafana: Creates visualizations and dashboards for your metrics.

  - SigNoz: An open-source APM tool with robust support for containerized environments.

- Java-Specific Monitoring Tools:

  - JConsole: A built-in Java monitoring tool compatible with Docker.

  - VisualVM: Offers detailed analysis of JVM performance and thread states.

- Log Aggregation Tools:

  - ELK Stack (Elasticsearch, Logstash, Kibana): Collects and analyzes logs from multiple containers.

  - Fluentd: A flexible log collector that integrates well with Docker.

- APM Solutions:

  - SigNoz: Provides end-to-end tracing and performance monitoring for Java apps.

  - Dynatrace: Offers AI-powered, full-stack monitoring capabilities.

Setting Up Basic Monitoring Infrastructure

Prerequisites

- Docker and Git: Ensure these are installed on your machine.

- Basic understanding of Docker, Prometheus, Fluentd, and Docker Compose: While not strictly required, familiarity with these tools will be helpful.

Steps Involved

- Docker Initialization and Metrics Exposure

Run docker init in your project's root directory to set up basic Docker configuration for the project. Then add the following configuration to your Docker daemon configuration file, typically found at:

- Windows: C:\ProgramData\Docker\config\daemon.json

- Linux: /etc/docker/daemon.json

- macOS: ~/Library/Containers/com.docker.docker/Data/vms/0/config.json

{

"metrics-addr" : "127.0.0.1:9323",

"experimental" : true

}

To enable Docker's experimental features, experimental can be set to true during development and testing. The metrics-addr setting makes Docker expose its metrics on port 9323, where monitoring tools like Prometheus can scrape them.

- Implementing Metrics Collection (Prometheus)

To collect and visualize Docker metrics, install Prometheus from its official website and modify the configuration file (prometheus.yml) in the Prometheus installation directory. Here's a sample configuration:

global:
  scrape_interval: 15s

scrape_configs:
  - job_name: 'docker'
    static_configs:
      - targets: ['localhost:9323']

This configuration instructs Prometheus to collect metrics from the Docker daemon every 15 seconds by accessing the endpoint at localhost:9323.
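To get a feel for what Prometheus reads from that endpoint, the sketch below parses the plain-text format a /metrics endpoint returns. It is only an illustration: MetricsTextParser is a hypothetical helper, the metric names in the sample are made up, and the parser assumes simple "name value" lines with no trailing timestamp.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper, not part of Prometheus or Docker.
final class MetricsTextParser {

    // Maps each sample's name (including any {labels}) to its value.
    // Assumes "name value" lines with no trailing timestamp.
    static Map<String, Double> parse(String body) {
        Map<String, Double> samples = new LinkedHashMap<>();
        for (String line : body.split("\n")) {
            String trimmed = line.trim();
            if (trimmed.isEmpty() || trimmed.startsWith("#")) {
                continue; // blank lines and "# HELP" / "# TYPE" metadata
            }
            int lastSpace = trimmed.lastIndexOf(' ');
            if (lastSpace < 0) {
                continue; // not a "name value" pair
            }
            String name = trimmed.substring(0, lastSpace).trim();
            try {
                samples.put(name, Double.parseDouble(trimmed.substring(lastSpace + 1)));
            } catch (NumberFormatException e) {
                // Skip values this sketch does not understand (for example "+Inf").
            }
        }
        return samples;
    }

    public static void main(String[] args) {
        // Made-up sample text; real input would be the body served at localhost:9323/metrics.
        String sample = "# HELP example_containers Demo gauge\n"
                + "# TYPE example_containers gauge\n"
                + "example_containers{state=\"running\"} 3\n"
                + "example_build_seconds_total 12.5\n";
        parse(sample).forEach((name, value) -> System.out.println(name + " = " + value));
    }
}
```

In practice Prometheus does this parsing for you; the sketch is mainly useful for sanity-checking that the endpoint is serving the samples you expect.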

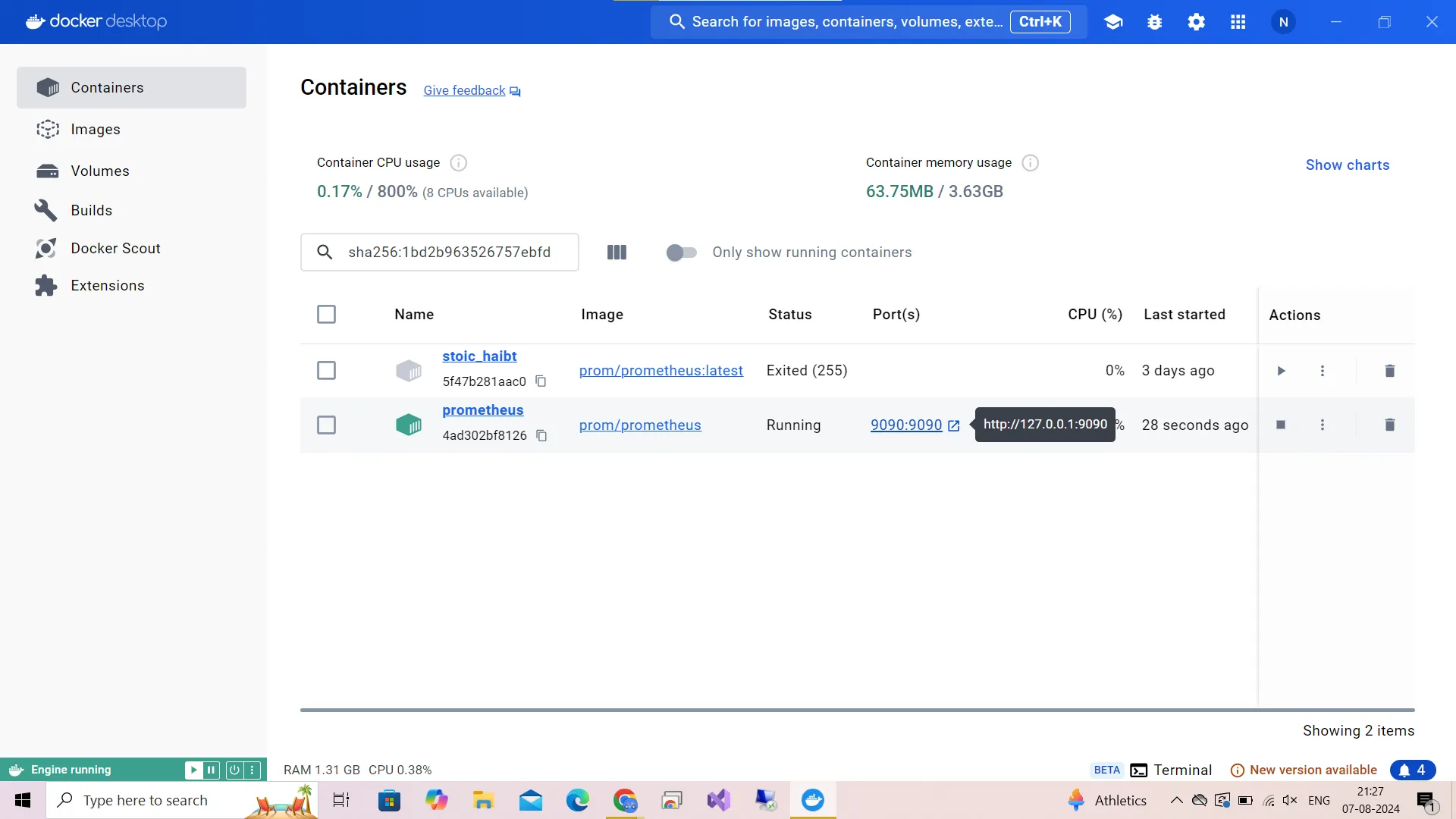

Note: The Prometheus image can also be pulled with the Docker CLI using the command:

docker pull prom/prometheus:latest

In that case, you do not need to locate prometheus.yml and edit it for default settings such as the target host or scrape interval. These can be adjusted in the Docker UI when you run the container.

- Setting Up Centralized Logging (Fluentd)

To centralize and manage container logs, use Fluentd as the logging driver for Docker. You can configure Docker Compose to use Fluentd without modifying the actual configuration files, by specifying the logging options when running the container. Here's an example configuration:

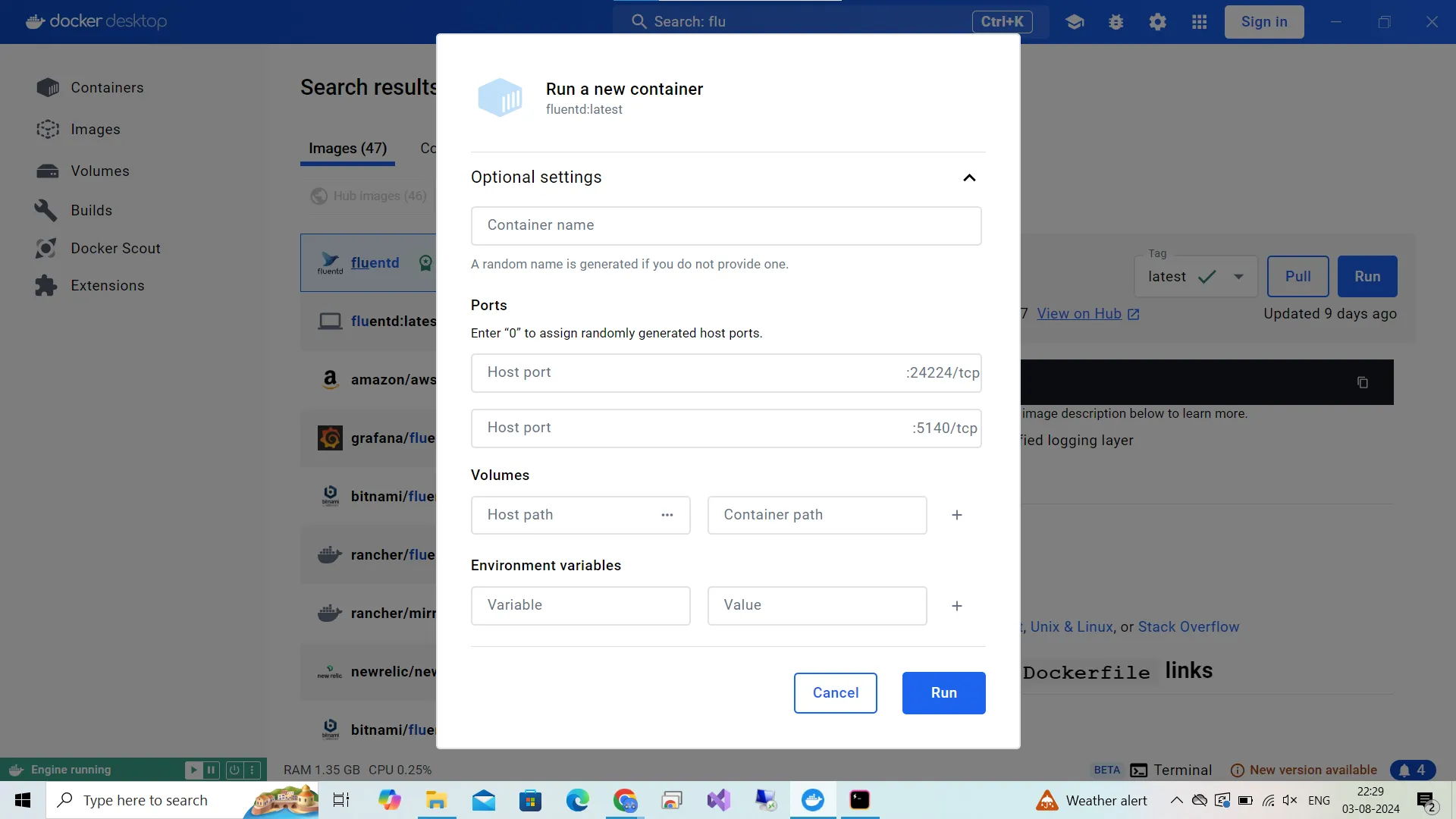

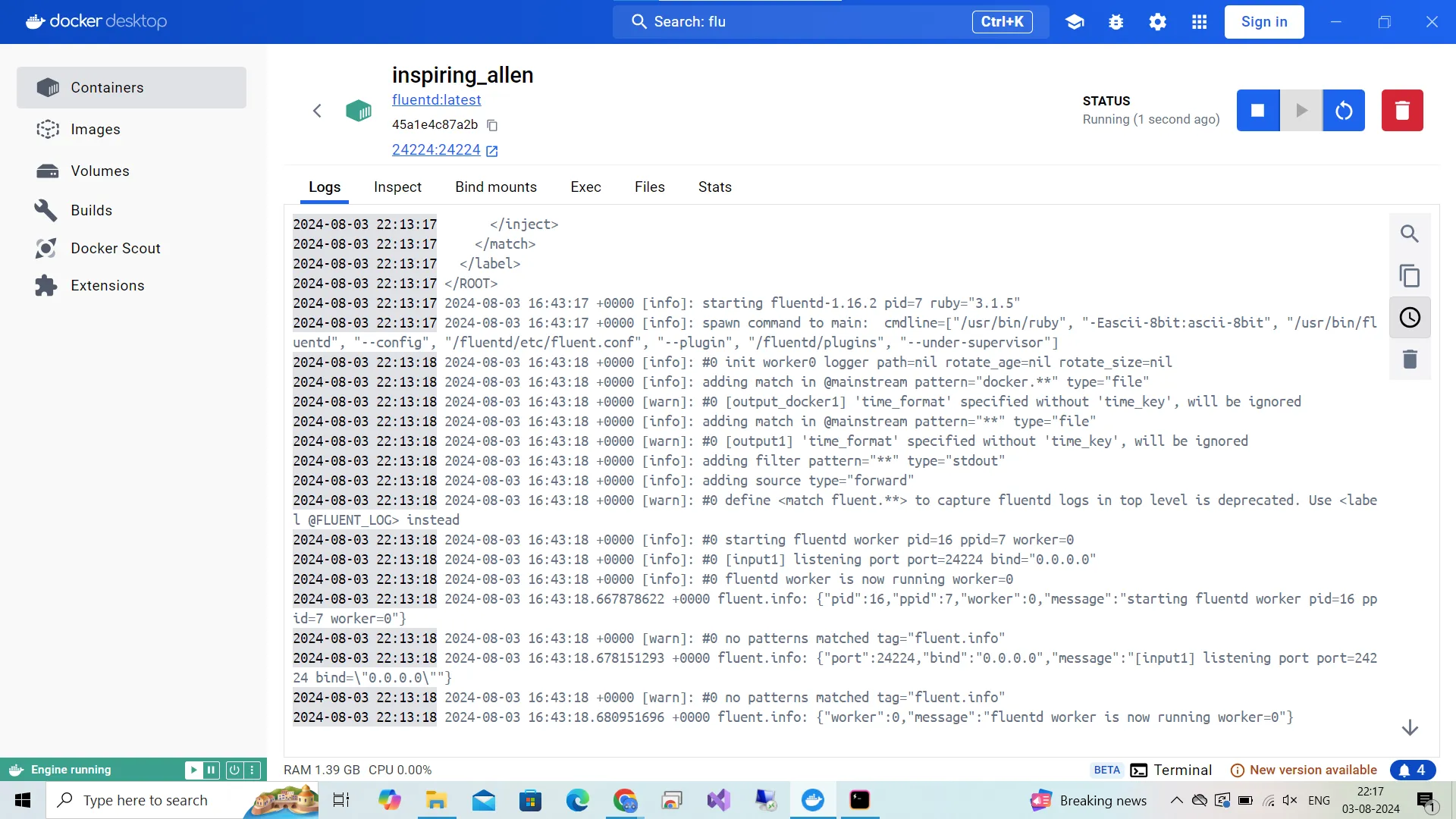

This setup directs container logs to a Fluentd instance running on localhost:24224. You can pull the Fluentd image and run it in Docker, adjusting variables like fluentd-address as needed when starting the container.

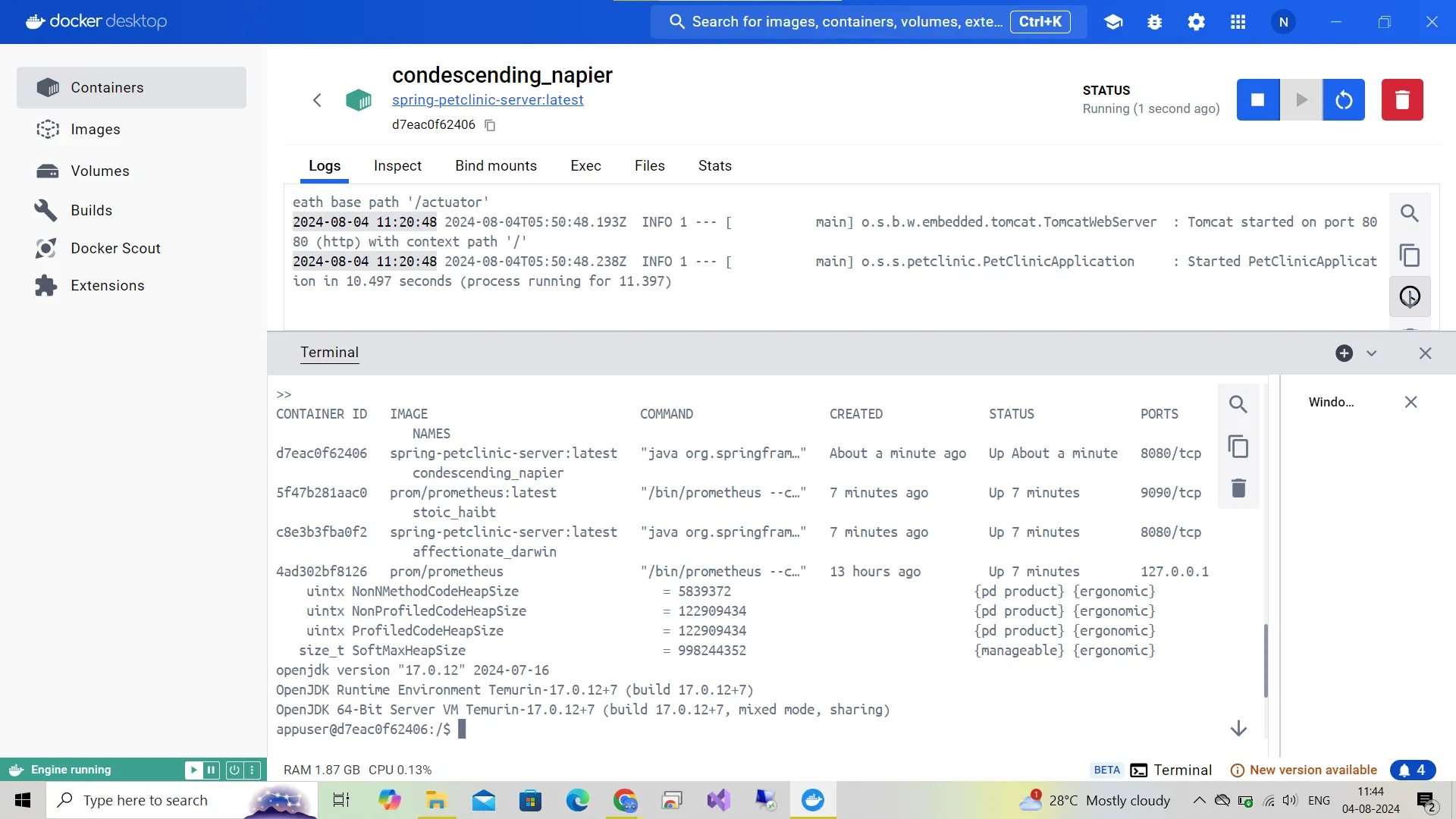

Pull the Fluentd Image: If you haven't already pulled the Fluentd image, you can do so using the docker pull command.

docker pull fluent/fluentd

Run the Fluentd Container: Start the Fluentd container without specifying a custom configuration file. The default configuration will be used.

docker run -d -p 24224:24224 fluent/fluentd

The above command instructs Docker to start a new container in detached mode (-d), i.e. in the background. The -p 24224:24224 option maps port 24224 on the host to port 24224 in the container, which allows Fluentd to receive logs on this port. Lastly, fluent/fluentd specifies the Fluentd image to use.

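To declare the same logging settings in Docker Compose, add a logging block like the one below to the service definition. Fluentd's own configuration file lives at /fluentd/etc/fluent.conf inside the Fluentd container.

logging:
  driver: fluentd
  options:
    fluentd-address: localhost:24224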

This is the screen you will see on running the container.

- Establishing Alerting (Optional)

For alerting, you can set up Prometheus Alertmanager or integrate with third-party services like PagerDuty. This allows you to send notifications based on defined alert rules, helping you quickly respond to any issues that arise in your Dockerized environment.

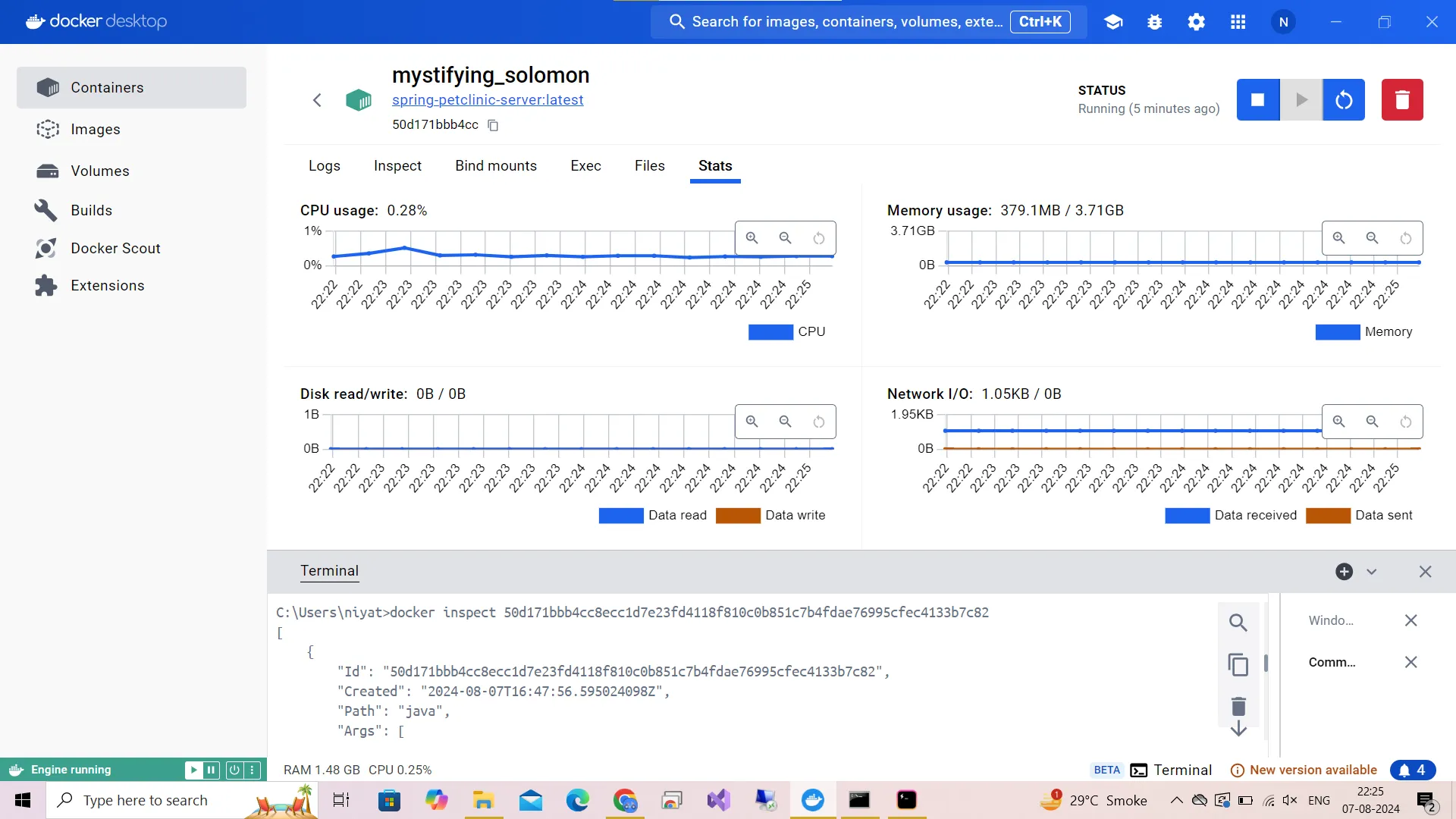

Key Metrics to Monitor for Java Apps in Docker

To ensure optimal performance of your Java application in Docker, focus on these key metrics:

- CPU Usage and Throttling:

  - Monitor container CPU usage and any throttling events.

  - Track JVM CPU consumption within the container.

- Memory Utilization:

  - Observe container memory usage and limits.

  - Monitor JVM heap usage and garbage collection metrics.

- Network I/O:

  - Track network traffic in and out of the container.

  - Monitor connection pool statistics for database or service connections.

- Container-Specific Metrics:

  - Restart count: Indicates stability issues.

  - Uptime: Helps identify unexpected restarts or crashes.
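Several of the JVM-side metrics in this list can be read from inside the application through the standard java.lang.management API. The following is a minimal sketch, assuming you only want a point-in-time snapshot; the class name JvmMetricsSnapshot and its method names are hypothetical, and a real setup would usually export these values through an agent or metrics library instead of printing them.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;

// Hypothetical helper that reads a few JVM metrics from the platform MXBeans.
final class JvmMetricsSnapshot {

    // Heap currently in use, in bytes.
    static long heapUsedBytes() {
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        return heap.getUsed();
    }

    // Maximum heap the JVM will try to use, in bytes (-1 if undefined).
    static long heapMaxBytes() {
        return ManagementFactory.getMemoryMXBean().getHeapMemoryUsage().getMax();
    }

    // Total number of collections across all garbage collectors.
    static long totalGcCount() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long count = gc.getCollectionCount(); // -1 when the collector does not report it
            if (count > 0) {
                total += count;
            }
        }
        return total;
    }

    // Time since the JVM started, in milliseconds.
    static long uptimeMillis() {
        return ManagementFactory.getRuntimeMXBean().getUptime();
    }

    public static void main(String[] args) {
        System.out.println("heap used (bytes): " + heapUsedBytes());
        System.out.println("heap max (bytes):  " + heapMaxBytes());
        System.out.println("GC count:          " + totalGcCount());
        System.out.println("uptime (ms):       " + uptimeMillis());
    }
}
```

Comparing heapMaxBytes() with the container's memory limit is a quick way to spot a heap that is sized for the host instead of the container.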

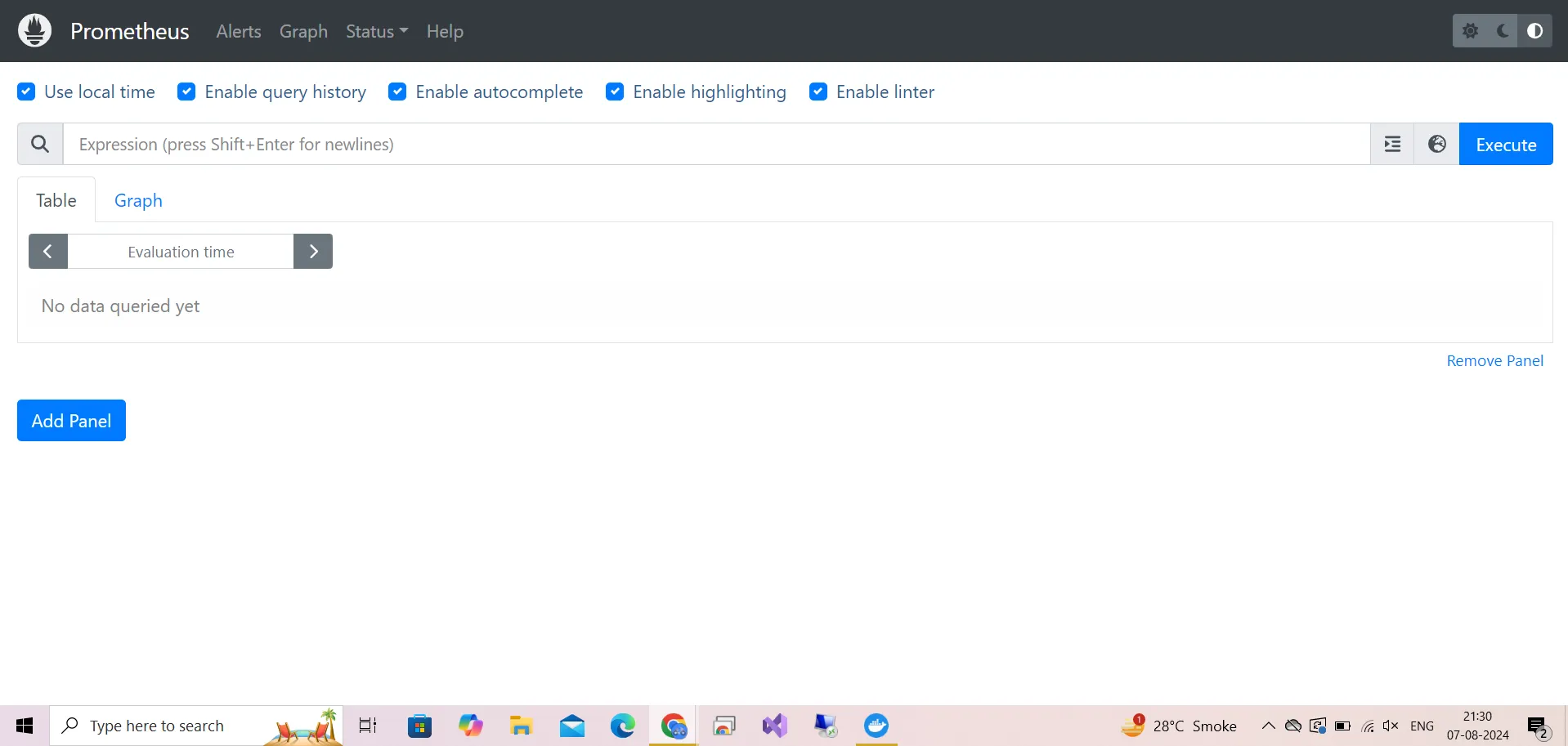

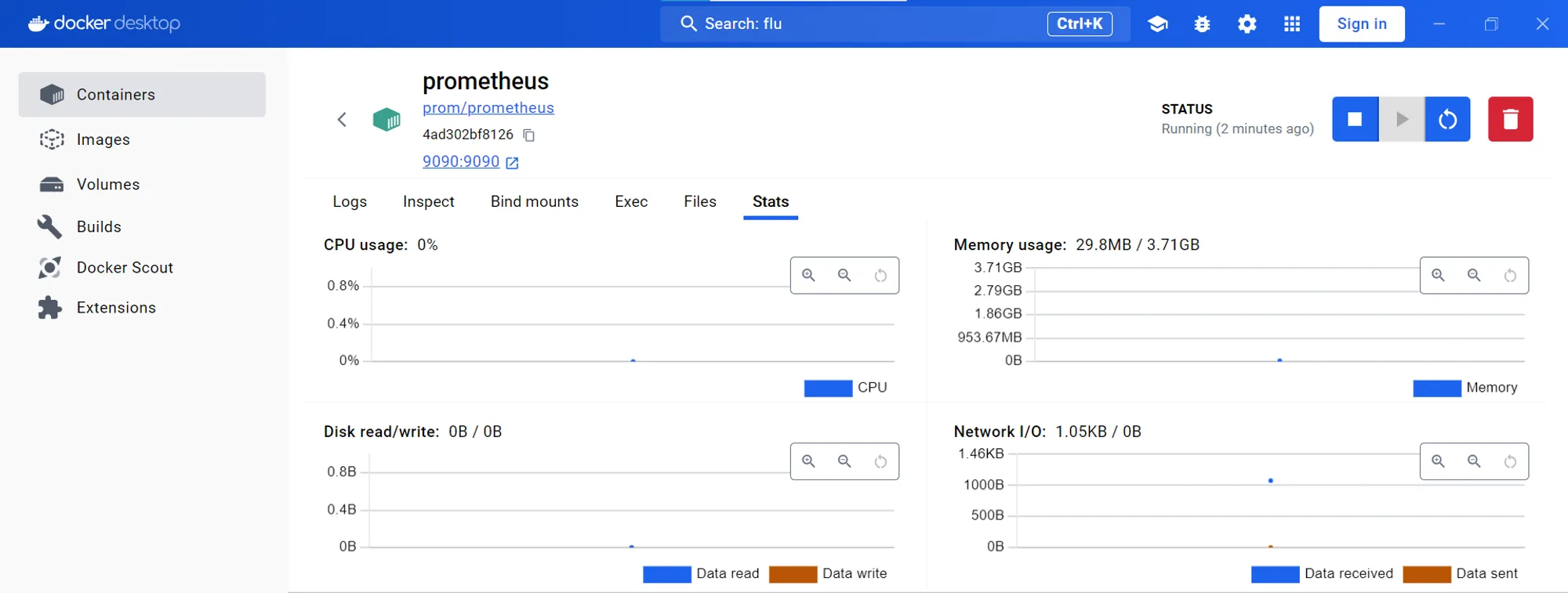

Prometheus can be used to monitor these stats. Docker also provides a tab for viewing container stats, and the Prometheus UI can be opened locally in a browser.

Prometheus can be configured to collect and visualize metrics from Docker containers, Java applications, and other services. It provides:

- Detailed Metrics: Custom metrics collection and complex queries using PromQL.

- Alerts: Set up alerts based on metric thresholds.

- Visualization: Integration with Grafana for advanced dashboards and data visualization.

To access the Prometheus UI:

Navigate to http://localhost:<port> in your web browser, or view the container in Docker stats. By default, Prometheus uses port 9090 (published as 9090:9090 when run in Docker).

The Prometheus browser page provides options such as Graph, Alerts, and Status.

Use PromQL to query metrics and visualize them.

Visualizing Prometheus Stats

We can also use SigNoz with Docker to monitor and analyze our Java application. To send metrics from your Java application to SigNoz, you can instrument it with OpenTelemetry.

Setting Up SigNoz with Docker

To install SigNoz and monitor your applications, follow these steps:

Clone the SigNoz Repository:

git clone -b main https://github.com/SigNoz/signoz.git && cd signoz/deploy/

Run SigNoz Using Docker Compose:

docker compose -f docker/clickhouse-setup/docker-compose.yaml up -d

Verify Installation:

docker ps

Ensure all containers are running correctly.

Access the Dashboard:

- Open your browser and navigate to http://localhost:3301/.

For detailed installation steps and additional options, refer to the SigNoz Docker Installation Guide.

Interpreting Java-Specific Metrics in Docker Context

Understanding Java metrics in a containerized environment requires special consideration:

JVM Heap Usage: Container memory limits can affect JVM memory allocation. Ensure your JVM settings align with container resources.

Run the command to list the running containers:

docker ps

Run the following command with the container ID to print the heap size settings the JVM picks up inside the container:

docker exec <container_id> java -XX:+PrintFlagsFinal -version | grep HeapSize

Garbage Collection: Analyze GC patterns to optimize memory usage and reduce performance impact.

Memory Leaks: Identify potential leaks by monitoring long-term memory usage trends.

Thread States: Correlate Java thread states with container resource usage to pinpoint performance bottlenecks.
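The points above can also be checked from inside the JVM. The sketch below prints what the JVM believes its resources are (which should reflect the container limits when container support is active) and counts live threads by state. ContainerView and threadStateCounts are hypothetical names used for illustration; only standard JDK calls are used.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;
import java.util.EnumMap;
import java.util.Map;

// Hypothetical helper showing the JVM's own view of its resources and threads.
final class ContainerView {

    // Counts live threads per Thread.State (RUNNABLE, BLOCKED, WAITING, ...).
    static Map<Thread.State, Integer> threadStateCounts() {
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        Map<Thread.State, Integer> counts = new EnumMap<>(Thread.State.class);
        for (ThreadInfo info : threads.getThreadInfo(threads.getAllThreadIds())) {
            if (info == null) {
                continue; // the thread ended between the two calls
            }
            counts.merge(info.getThreadState(), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        Runtime rt = Runtime.getRuntime();
        // Compare these two values with the limits set on the container.
        System.out.println("max heap (MiB):       " + rt.maxMemory() / (1024 * 1024));
        System.out.println("available processors: " + rt.availableProcessors());
        System.out.println("thread states:        " + threadStateCounts());
    }
}
```

A growing number of BLOCKED threads alongside rising container CPU throttling, for example, would point toward contention as the bottleneck.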

Troubleshooting Common Issues in Dockerized Java Apps

When issues arise in your containerized Java application, consider these common problems and their solutions:

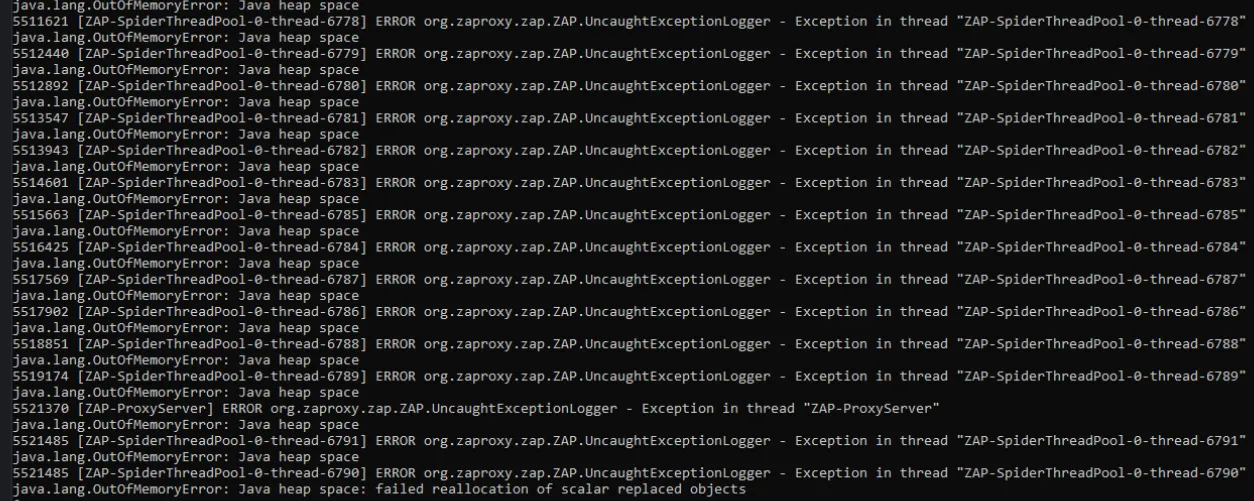

Java OutOfMemoryErrors:

- Cause: Insufficient memory allocation or memory leaks.

The error appears in the terminal as a java.lang.OutOfMemoryError message.

- Possible Solutions:

Adjust container memory limits and JVM heap settings as follows:

- Increase the heap size:

java -Xms512m -Xmx2048m -jar your-app.jar

- Identify and fix memory leaks by capturing a heap dump when the error occurs:

java -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/path/to/dumps -jar your-app.jar

- Optimize JVM settings if garbage collections are frequent:

java -XX:+UseG1GC -XX:MaxGCPauseMillis=200 -jar your-app.jar

- Restarting the container can also sometimes resolve the issue.

CPU Throttling:

- Cause: Container CPU limits are too restrictive.

- Possible Solutions:

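Increase CPU limits or optimize Java code for better CPU utilization. The docker update command can be used to raise the limits of a running container (here, ml-tm is the container name):

docker update --cpu-shares 5120 -m 3000M ml-tm

Note that --cpu-shares sets a relative scheduling weight, while a hard CPU quota is set with --cpus. Continuously monitor CPU utilization to identify trends and potential issues.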
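Throttling itself can be observed from inside the container by reading the cgroup CPU statistics. The sketch below assumes cgroup v2, where the file is /sys/fs/cgroup/cpu.stat and contains nr_periods and nr_throttled counters; on cgroup v1 the file usually sits under /sys/fs/cgroup/cpu/ instead. CpuStatParser is a hypothetical helper, not an existing library class.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper that reads throttling counters from a cgroup cpu.stat file.
final class CpuStatParser {

    // Parses "key value" lines such as "nr_throttled 50" into a map.
    static Map<String, Long> parse(String cpuStat) {
        Map<String, Long> fields = new HashMap<>();
        for (String line : cpuStat.split("\n")) {
            String[] parts = line.trim().split("\\s+");
            if (parts.length != 2) {
                continue; // ignore blank or unexpected lines
            }
            try {
                fields.put(parts[0], Long.parseLong(parts[1]));
            } catch (NumberFormatException e) {
                // Ignore values that are not plain integers.
            }
        }
        return fields;
    }

    // Fraction of enforcement periods in which the container was throttled.
    static double throttledRatio(Map<String, Long> fields) {
        long periods = fields.getOrDefault("nr_periods", 0L);
        long throttled = fields.getOrDefault("nr_throttled", 0L);
        return periods == 0 ? 0.0 : (double) throttled / periods;
    }

    public static void main(String[] args) throws IOException {
        // Assumed cgroup v2 location; pass another path as the first argument if needed.
        Path file = Path.of(args.length > 0 ? args[0] : "/sys/fs/cgroup/cpu.stat");
        if (!Files.isReadable(file)) {
            System.out.println("Cannot read " + file);
            return;
        }
        double ratio = throttledRatio(parse(Files.readString(file)));
        System.out.printf("throttled in %.1f%% of periods%n", 100 * ratio);
    }
}
```

A ratio that stays well above zero under normal load suggests the CPU quota is too tight for the workload.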

Network-Related Issues:

- Cause: Misconfigured network settings or connection pool exhaustion.

- Possible Solutions:

- Verify Docker network configuration; optimize connection pool settings.

- Always close connections in a finally block, or use try-with-resources to close them automatically. Connections that are left open keep occupying resources until they are reclaimed by the garbage collector.

- Implement mechanisms to detect and log connection leaks. Some connection pool libraries, like HikariCP, provide built-in leak detection features.
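The try-with-resources advice above can be illustrated with a small sketch. FakeConnection is a hypothetical stand-in for a pooled database connection (a real application would use java.sql.Connection from its pool), and the OPEN counter exists only to show that the resource is released on both the success and the failure path.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo: try-with-resources closes the resource on every exit path.
final class ConnectionCloseDemo {

    // Number of connections currently open, tracked for illustration only.
    static final AtomicInteger OPEN = new AtomicInteger();

    // Stand-in for a pooled connection; any AutoCloseable behaves the same way.
    static final class FakeConnection implements AutoCloseable {
        FakeConnection() {
            OPEN.incrementAndGet();
        }

        String query(String sql) {
            if (sql.isEmpty()) {
                throw new IllegalArgumentException("empty query");
            }
            return "result of " + sql;
        }

        @Override
        public void close() {
            OPEN.decrementAndGet(); // a real pool would take the connection back here
        }
    }

    static String runQuery(String sql) {
        // close() runs automatically, whether query() returns or throws.
        try (FakeConnection conn = new FakeConnection()) {
            return conn.query(sql);
        }
    }

    public static void main(String[] args) {
        System.out.println(runQuery("SELECT 1"));
        try {
            runQuery("");
        } catch (IllegalArgumentException e) {
            System.out.println("query failed: " + e.getMessage());
        }
        System.out.println("open connections: " + OPEN.get());
    }
}
```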

Classpath and Dependency Problems:

- Cause: Incorrect JAR file inclusion or version conflicts.

- Possible Solutions:

- Review the Docker image build process; use dependency management tools like Maven or Gradle.

- Use the latest stable versions where possible. If dependency conflicts remain, separate classloaders can be used, though this is complicated to implement and can cause unexpected problems of its own.

- Maven can be configured to run the same plugin with different JAR files. The <executions> and <execution> tags are used for this.

Advanced Debugging Techniques

For complex issues, employ these advanced debugging methods:

- Remote Debugging:

Enable remote debugging in your Docker container:

ENV JAVA_TOOL_OPTIONS -agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=*:5005

This configures the JVM to start a remote debugging server that listens on port 5005. transport=dt_socket specifies socket transport, server=y means the JVM acts as the debug server, suspend=n means the JVM won't wait for a debugger to connect before starting, and address=*:5005 allows connections from any IP on port 5005.

- JVM Flight Recorder:

JVM Flight Recorder (JFR) is a feature of the Java Virtual Machine (JVM) that provides a comprehensive and low-overhead way to collect detailed performance data and diagnostic information.

docker exec -it <container_id> jcmd <pid> JFR.start

This starts Java Flight Recorder for the JVM process with the given PID, capturing detailed runtime information for performance analysis. You can also specify options like recording duration and file output.

- Distributed Tracing:

- Implement OpenTelemetry for end-to-end request tracing across microservices. To learn more, see the OpenTelemetry documentation.

- Thread Dumps and Heap Analysis:

Generate thread dumps, which are useful for diagnosing thread-related issues such as deadlocks or performance bottlenecks:

docker exec -it <container_id> jcmd <pid> Thread.print

This outputs the state of all threads in the JVM process with the given PID, including their stack traces.

Perform heap analysis using tools like jmap and MAT (Memory Analyzer Tool).
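When running jcmd against the container is not convenient, similar thread information can be collected from inside the application with the standard ThreadMXBean API. This is a rough sketch and not a replacement for jcmd; ThreadDumpSketch and its method names are hypothetical, and the output format is simplified.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;

// Hypothetical helper producing a simplified in-process thread dump.
final class ThreadDumpSketch {

    // Returns each thread's name, state, and stack trace as text.
    static String dump() {
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        StringBuilder out = new StringBuilder();
        // false, false: skip lock details, which not every JVM supports.
        for (ThreadInfo info : threads.dumpAllThreads(false, false)) {
            out.append('"').append(info.getThreadName()).append("\" ")
               .append(info.getThreadState()).append('\n');
            for (StackTraceElement frame : info.getStackTrace()) {
                out.append("    at ").append(frame).append('\n');
            }
        }
        return out.toString();
    }

    // Number of threads currently involved in a deadlock (0 if none).
    static int deadlockedThreadCount() {
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        long[] ids = threads.isSynchronizerUsageSupported()
                ? threads.findDeadlockedThreads()
                : threads.findMonitorDeadlockedThreads();
        return ids == null ? 0 : ids.length;
    }

    public static void main(String[] args) {
        System.out.print(dump());
        System.out.println("deadlocked threads: " + deadlockedThreadCount());
    }
}
```

Logging the deadlock count periodically gives an early signal without requiring shell access to the container.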

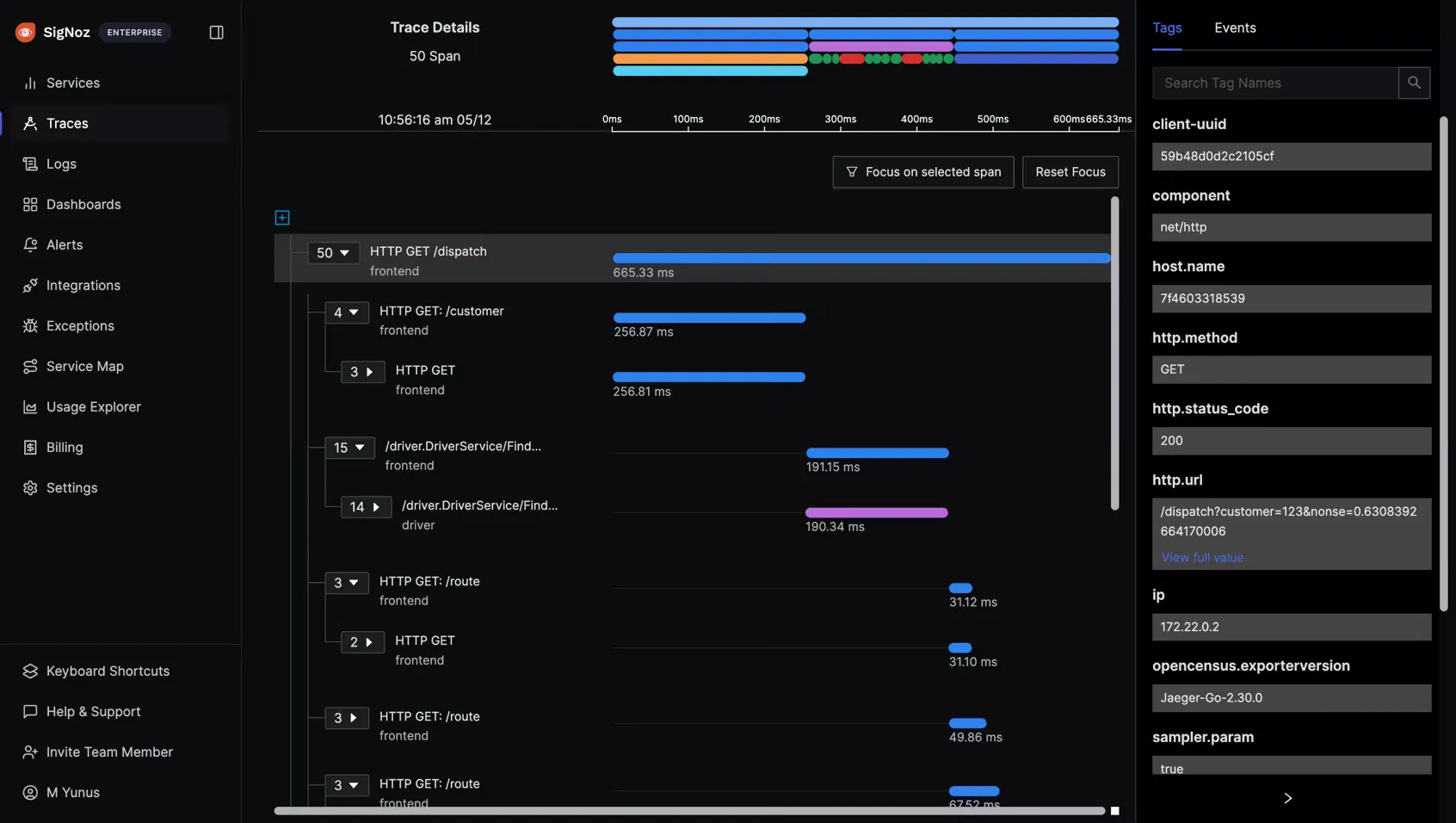

Monitoring Java App with SigNoz

SigNoz provides a comprehensive solution for monitoring Java applications in Docker containers.

Set Up SigNoz

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

Instrument your Java application with OpenTelemetry.

Configure the OpenTelemetry agent to send data to SigNoz.

View metrics, traces, and logs in the SigNoz dashboard.

For detailed instructions, refer to the SigNoz Java monitoring guide.

Best Practices for Java App Monitoring in Docker

Follow these best practices to optimize your Java app monitoring in Docker:

- Implement proper resource limits and requests:

  - Set appropriate CPU and memory limits for your containers.

  - Use resource requests to ensure minimum required resources.

- Optimize JVM settings for containerized environments:

  - Use the -XX:+UseContainerSupport flag for better resource awareness (it is enabled by default since JDK 10).

  - Set appropriate heap size limits based on container memory.

- Design effective logging strategies:

  - Use structured logging formats (e.g., JSON) for easier parsing.

  - Implement log rotation to manage log file sizes.

- Implement health checks and readiness probes:

  - Add Docker health checks to monitor application status.

  - Use Kubernetes readiness probes for proper service discovery.
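As a worked example of sizing the heap from container memory, the sketch below computes the approximate maximum heap you would get when passing a percentage to the JVM with -XX:MaxRAMPercentage (for example, java -XX:MaxRAMPercentage=75.0 -jar your-app.jar). HeapSizing is a hypothetical helper and the 75% figure is only an assumption for illustration; the JVM may round or align the real value, and the remaining memory is still needed for metaspace, thread stacks, and other non-heap use.

```java
// Hypothetical helper: estimates the max heap for a given container memory limit.
final class HeapSizing {

    // Approximate heap size in bytes for a container limit and a MaxRAMPercentage value.
    static long maxHeapBytes(long containerLimitBytes, double maxRamPercentage) {
        if (containerLimitBytes <= 0) {
            throw new IllegalArgumentException("container limit must be positive");
        }
        if (maxRamPercentage <= 0 || maxRamPercentage > 100) {
            throw new IllegalArgumentException("percentage must be in (0, 100]");
        }
        return (long) (containerLimitBytes * (maxRamPercentage / 100.0));
    }

    public static void main(String[] args) {
        long limit = 2L * 1024 * 1024 * 1024; // assumed 2 GiB container memory limit
        long heap = maxHeapBytes(limit, 75.0); // assumed 75% of the limit for the heap
        System.out.println("approximate max heap (MiB): " + heap / (1024 * 1024));
    }
}
```

With a 2 GiB limit and 75%, this leaves roughly 512 MiB of the container's memory for everything outside the heap.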

Key Takeaways

- Monitoring Java apps in Docker requires both container and JVM-specific tools.

- Focus on key metrics like CPU, memory, GC, and container-specific data.

- Troubleshoot by correlating container metrics with Java-specific issues.

- Implement best practices for resource management and JVM optimization.

FAQs

How does Docker affect Java application performance?

Docker can impact Java app performance through resource constraints and isolation. Proper configuration of both Docker and JVM settings is crucial for optimal performance.

What are the best tools for monitoring Java apps in Docker?

A combination of container monitoring tools (e.g., cAdvisor, Prometheus) and Java-specific tools (e.g., JConsole, VisualVM) provides comprehensive monitoring. APM solutions like SigNoz offer integrated monitoring for containerized Java apps.

How can I diagnose memory leaks in a Dockerized Java application?

Use JVM heap analysis tools in conjunction with container memory metrics. Monitor long-term memory usage trends and analyze heap dumps to identify potential leaks.

What JVM settings should I optimize for Java apps running in containers?

Key settings include -XX:+UseContainerSupport, appropriate heap size limits, and garbage collection tuning. Adjust these based on your container's resource limits and application requirements.
