Implementing Observability Architecture - A Practical Guide

Observability architecture is crucial for maintaining and optimizing modern, complex IT systems. As applications grow in scale and complexity, traditional monitoring approaches fall short. This guide will walk you through the process of implementing an effective observability architecture, from understanding its core concepts to practical implementation strategies.

What is Observability Architecture?

Observability architecture is a framework that allows you to understand the internal state of your IT systems based on their external outputs. It goes beyond traditional monitoring by providing deeper insights into system behavior, performance, and health.

The three main pillars of observability are:

- Logs: Detailed records of events within your system.

- Metrics: Quantitative measurements of system performance over time.

- Traces: Records of requests as they flow through distributed systems.

Logs, metrics, and traces are the three main pillars of observability, but they are not the only signals that contribute to a comprehensive observability strategy. Other important signals include:

- Profiles: Profiles provide detailed insights into the performance characteristics of your application, such as CPU and memory usage at the code level. This helps identify performance bottlenecks and optimize resource usage in your system.

- Events: Events capture significant occurrences within your system, such as deployments, configuration changes, or incidents. By correlating these events with logs, metrics, and traces, you can gain a deeper understanding of how specific actions impact your system's behavior and performance.

Unlike traditional monitoring, which focuses on predefined sets of known issues, observability allows you to investigate and resolve unforeseen problems. It provides a holistic view of your system, enabling you to ask new questions and gain insights you didn't anticipate needing.

Key components of an observability architecture include:

- Data Collection Mechanisms: These are the tools and processes used to gather telemetry data (logs, metrics, traces) from various sources such as applications, infrastructure, and external services. Examples include instrumentation libraries like OpenTelemetry, agents like Fluentd, and exporters that collect and forward data to your observability system.

- Data Storage and Processing Systems: Once data is collected, it needs to be stored and processed efficiently. This involves databases and processing engines that can handle large volumes of data while allowing for quick retrieval and analysis. Common choices include Elasticsearch for logs, Prometheus for metrics, and distributed tracing systems like Jaeger or Zipkin.

- Visualization and Alerting Tools: These tools allow you to monitor the collected data in real time and set up alerts for potential issues. Visualization tools like Grafana or Kibana provide dashboards that help you understand trends and patterns in your data. Alerting systems notify you when certain thresholds are breached, enabling rapid response to potential problems.

- Analysis and Correlation Engines: These components are responsible for making sense of the collected data by correlating logs, metrics, and traces. They use algorithms and sometimes AI/ML models to detect anomalies, predict failures, and provide insights that are crucial for troubleshooting and optimizing system performance. SigNoz excels here by offering built-in correlation capabilities that link metrics, logs, and traces, allowing for a more comprehensive analysis of your system’s behavior.
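
To make the idea of correlation concrete, here is a minimal sketch that joins logs to spans through a shared trace ID. The record shapes, field names, and the 500 ms threshold are assumptions chosen for illustration; they are not the schema of SigNoz or any other backend.

```python
from collections import defaultdict

# Hypothetical, simplified telemetry records; real backends use richer schemas.
spans = [
    {"trace_id": "t1", "name": "GET /checkout", "duration_ms": 950},
    {"trace_id": "t2", "name": "GET /cart", "duration_ms": 40},
]
logs = [
    {"trace_id": "t1", "level": "ERROR", "message": "payment gateway timeout"},
    {"trace_id": "t2", "level": "INFO", "message": "cart loaded"},
    {"trace_id": None, "level": "INFO", "message": "background job finished"},
]

def correlate(spans, logs):
    """Attach to each span the log records that share its trace_id."""
    logs_by_trace = defaultdict(list)
    for record in logs:
        if record.get("trace_id"):
            logs_by_trace[record["trace_id"]].append(record)
    return [
        {**span, "logs": logs_by_trace.get(span["trace_id"], [])}
        for span in spans
    ]

def slow_traces_with_errors(correlated, threshold_ms=500):
    """Return names of slow spans that also have at least one ERROR log."""
    return [
        item["name"]
        for item in correlated
        if item["duration_ms"] > threshold_ms
        and any(log["level"] == "ERROR" for log in item["logs"])
    ]

suspects = slow_traces_with_errors(correlate(spans, logs))
```

The join only works if every log line written during a request carries that request's trace ID, which is why consistent instrumentation matters more than the choice of analysis engine.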

Why Implement Observability Architecture?

Implementing an observability architecture offers several significant benefits:

- Improved System Reliability: By providing deep insights into system behavior, observability helps you identify and resolve issues before they impact users.

- Faster Incident Response: With comprehensive data at your fingertips, you can quickly pinpoint the root cause of problems and reduce Mean Time To Resolution (MTTR).

- Enhanced Decision-Making: Data-driven insights enable better decisions about system optimization, capacity planning, and feature development.

- Cost Optimization: By understanding resource usage patterns, you can allocate resources more efficiently and reduce unnecessary expenses.

Designing an Effective Observability Architecture

To design an effective observability architecture, follow these steps:

- Establish Clear Goals: Start by defining what you want to achieve with observability. Consider whether your focus is on improving system performance, enhancing security, optimizing user experience, or something else. Clear goals will guide your decisions and help prioritize your efforts.

- Choose Data Collection Strategies: Decide on the methods you'll use to collect logs, metrics, and traces from your systems. This might involve deploying agents on your servers, integrating SDKs into your applications, or using API-based approaches. Make sure your chosen strategies align with your goals and can capture the necessary data comprehensively.

- Implement Scalable Architecture: As your application grows, so will the volume of telemetry data. Design your observability architecture to scale easily, ensuring it can handle increasing data volumes without compromising performance. This might involve using distributed systems, load balancing, and cloud-native technologies to maintain efficiency as demand increases.

- Ensure Data Security: Observability data often includes sensitive information, so it's crucial to protect it. Implement encryption to secure data in transit and at rest. Use access controls to restrict who can view or manipulate the data. Additionally, ensure your observability practices comply with relevant regulations and industry standards, such as GDPR or HIPAA, to maintain trust and avoid legal issues.
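
One way to reduce the risk of sensitive data reaching your observability backend is to scrub records before they are exported. The sketch below is only an illustration: the key list, the email pattern, and the `redact` helper are assumptions, and a real deployment would usually apply such rules in the collection agent or pipeline.

```python
import re

# Hypothetical list of attribute keys treated as sensitive; adjust to your data.
SENSITIVE_KEYS = {"password", "authorization", "credit_card", "ssn"}
# Deliberately simple email pattern, good enough for a demonstration.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def redact(record):
    """Return a copy of a telemetry record with sensitive values masked."""
    cleaned = {}
    for key, value in record.items():
        if key.lower() in SENSITIVE_KEYS:
            cleaned[key] = "[REDACTED]"
        elif isinstance(value, dict):
            cleaned[key] = redact(value)  # recurse into nested attributes
        elif isinstance(value, str):
            cleaned[key] = EMAIL_PATTERN.sub("[EMAIL]", value)
        else:
            cleaned[key] = value
    return cleaned

safe = redact({
    "status": 200,
    "user": {"password": "hunter2", "note": "mail bob@example.com now"},
})
```

Redaction complements encryption and access controls rather than replacing them: data that is never stored cannot leak.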

Key Design Principles

- Ease of Use: Design user-friendly interfaces and workflows that are easy for everyone to use. This helps teams across the organization quickly get on board and start using the tools without much hassle.

- Automated Correlation: Set up systems that automatically connect and analyze logs, metrics, and traces from different sources. This makes it easier to spot and fix issues quickly, reducing downtime and keeping everything running smoothly.

- Cloud-Native Approach: Leverage cloud services for scalability and flexibility in modern, distributed environments.

- AI/ML Integration: Integrate artificial intelligence and machine learning to provide advanced data analysis. This helps teams identify trends, predict problems before they happen, and make better decisions based on the insights gained.

Building the Observability Pipeline

An effective observability pipeline is essential for collecting, processing, and analyzing telemetry data from your systems. It typically consists of four main stages:

- Data Ingestion: This is the first step where you collect logs, metrics, and traces from various sources within your system, such as applications, servers, and network devices. It's important to use standardized formats and protocols, like OpenTelemetry, to ensure consistency and compatibility across different tools and platforms.

- Data Processing: Once the data is ingested, it needs to be processed to make it more useful. This stage involves filtering out unnecessary data, aggregating information to reduce volume, and enriching the data by adding context (e.g., metadata or tags). For example, you might parse logs to extract key fields, calculate derived metrics from raw data, or add contextual information to traces to make them easier to analyze.

- Data Storage: After processing, the data needs to be stored in a way that allows for efficient retrieval and analysis. Different types of data are best suited to different types of databases. For instance, time-series databases like Prometheus are ideal for storing metrics, as they can handle large volumes of timestamped data. Document stores like Elasticsearch are well-suited for logs, and ClickHouse, used by tools like SigNoz, is effective for storing traces due to its high-performance querying capabilities.

- Data Visualization: The final stage is making the data actionable through visualization and alerting. This involves creating dashboards that provide a clear, real-time view of your system’s health and performance. Tools like Grafana are popular for building these dashboards, but you can also create custom user interfaces tailored to your specific needs. Additionally, set up alerts that notify your team when critical thresholds are breached, enabling quick response to potential issues.
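
The four stages above can be sketched as a chain of small functions. This is a toy model under stated assumptions: the sample log lines are invented, an in-memory list stands in for a real database, and a per-service count stands in for a dashboard query.

```python
import json
from collections import Counter

# Invented sample input; the last line simulates malformed data.
RAW_LINES = [
    '{"service": "checkout", "level": "ERROR", "msg": "timeout"}',
    '{"service": "checkout", "level": "DEBUG", "msg": "cache miss"}',
    '{"service": "cart", "level": "INFO", "msg": "item added"}',
    'not json at all',
]

def ingest(lines):
    """Ingestion: parse raw lines, skipping ones that are not valid JSON."""
    for line in lines:
        try:
            yield json.loads(line)
        except json.JSONDecodeError:
            continue

def process(records, environment="production"):
    """Processing: drop DEBUG noise and enrich each record with an env tag."""
    for record in records:
        if record.get("level") == "DEBUG":
            continue
        yield {**record, "env": environment}

def store(records):
    """Storage: an in-memory list stands in for a real database."""
    return list(records)

def summarize(stored):
    """Visualization input: count records per (service, level) pair."""
    return Counter((r["service"], r["level"]) for r in stored)

stored = store(process(ingest(RAW_LINES)))
summary = summarize(stored)
```

Because each stage is a generator feeding the next, records flow through one at a time, which mirrors how streaming pipelines keep memory use flat as volume grows.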

Implementing Tracing in Your Architecture

Distributed tracing is essential for gaining visibility into the flow of requests across complex, microservices-based systems. Here’s how to implement tracing effectively:

- Understand Key Concepts: Before diving into implementation, it's important to understand the fundamental concepts of distributed tracing. Key terms include:

  - Spans: Individual units of work within a trace, representing specific operations or steps in a request’s journey through the system.

  - Trace IDs: Unique identifiers that link all spans together, forming a complete trace that shows the end-to-end journey of a request.

  - Context Propagation: The process of passing trace information (like trace IDs and span IDs) across service boundaries, ensuring that the entire trace is preserved as a request moves through different components.

- Instrument Applications: To capture traces, you need to add trace points to your application code. This is done by instrumenting your applications with tracing libraries or SDKs. OpenTelemetry is a widely used, vendor-neutral option that supports multiple programming languages and integrates well with various observability backends. By instrumenting your code, you ensure that each service in your system generates and reports spans, contributing to the overall trace.

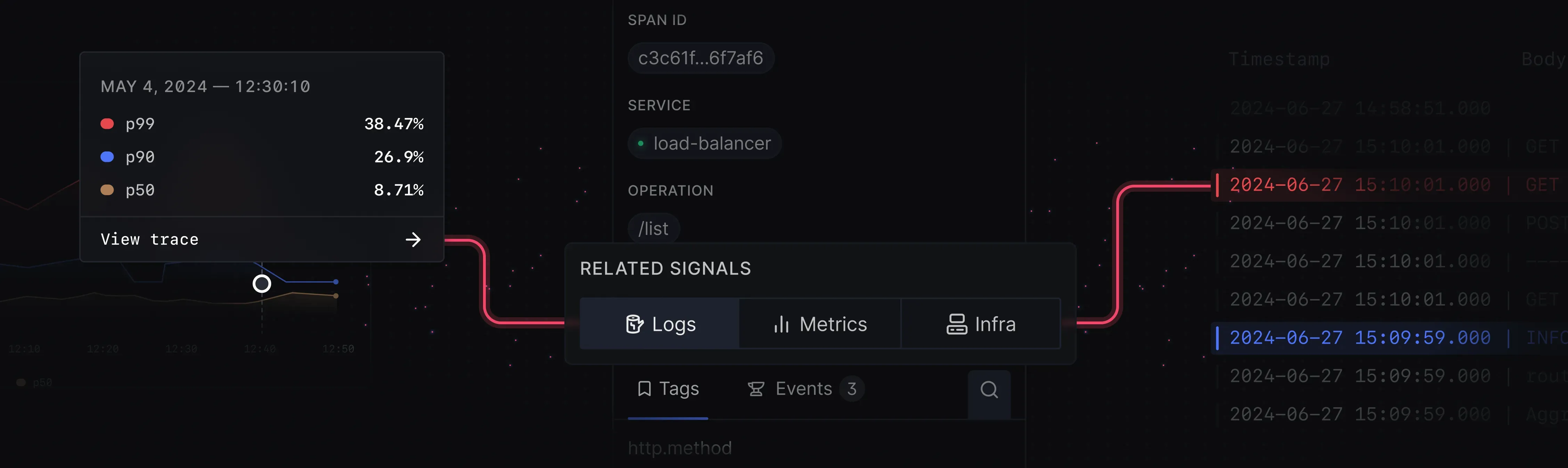

- Correlate with Logs and Metrics: For a comprehensive view of your system’s performance, it’s crucial to link traces with related logs and metrics. This lets you see which logs were generated while a specific trace was executing, or which traces lie behind a change in metrics such as response time. Ensuring that your tracing solution supports this integration will provide deeper insights and make troubleshooting more efficient.

- Implement Sampling: In high-volume systems, capturing every single trace can be overwhelming and resource-intensive. To manage this, implement sampling strategies that reduce the amount of tracing data while still capturing enough information to be useful. Intelligent sampling methods, such as retaining traces with errors or high latency, can help maintain a representative dataset without overloading your storage or processing resources.
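
To illustrate how spans, trace IDs, and context propagation fit together, here is a small standard-library sketch. It is not the OpenTelemetry API: the `Span` class and the `start_span`, `inject`, and `extract` helpers are hypothetical names for this example. The header layout follows the W3C Trace Context `traceparent` format (version, trace ID, parent span ID, flags). In practice a tracing SDK does this work for you.

```python
import secrets
from dataclasses import dataclass
from typing import Optional

@dataclass
class Span:
    trace_id: str             # shared by every span in the trace (32 hex chars)
    span_id: str              # unique to this span (16 hex chars)
    parent_id: Optional[str]  # span_id of the caller, None for the root span
    name: str

def start_span(name, parent=None):
    """Create a root span, or a child that inherits the parent's trace_id."""
    trace_id = parent.trace_id if parent else secrets.token_hex(16)
    parent_id = parent.span_id if parent else None
    return Span(trace_id, secrets.token_hex(8), parent_id, name)

def inject(span, headers):
    """Write the span's context into outgoing request headers."""
    headers["traceparent"] = f"00-{span.trace_id}-{span.span_id}-01"
    return headers

def extract(headers, name):
    """Continue the trace in the receiving service from incoming headers."""
    _version, trace_id, parent_span_id, _flags = headers["traceparent"].split("-")
    return Span(trace_id, secrets.token_hex(8), parent_span_id, name)

# Service A handles a request and calls service B over HTTP.
root = start_span("GET /checkout")
outgoing_headers = inject(root, {})

# Service B receives the headers and continues the same trace.
child = extract(outgoing_headers, "charge-card")
```

Both spans carry the same trace ID, and the child records the root's span ID as its parent, which is what lets a backend rebuild the request's path across services.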

Leveraging Metrics for System Health Insights

Metrics are essential for quantifying your system's performance and overall health. To effectively leverage metrics, follow these steps:

- Identify Key Performance Indicators (KPIs): Start by defining the metrics that are most critical to your business and technical goals. KPIs might include response times, error rates, throughput, and resource utilization (like CPU and memory usage). These metrics should directly reflect the health and performance of your application, helping you focus on what truly matters.

- Implement Custom Metrics: In addition to standard metrics, create custom metrics that are tailored to your application’s specific behavior and business logic. For example, if you run an e-commerce platform, you might track the number of successful checkouts or the average time users spend in the checkout process. Custom metrics give you deeper insights into areas that are unique to your application, enabling more precise monitoring and optimization.

- Set Up Meaningful Alerts: Configure alerts based on the thresholds of your key metrics. For instance, you might set an alert for when CPU usage exceeds 80% or when error rates surpass a certain percentage. These alerts should be actionable, meaning they indicate a situation that requires attention before it escalates into a major issue. Properly tuned alerts help your team respond quickly to potential problems, reducing downtime and maintaining system reliability.

- Use Metrics for Capacity Planning: Regularly analyze your metrics to understand trends and predict future resource needs. For example, if your metrics show a steady increase in traffic, you might need to plan for additional server capacity to handle the load. By using metrics in capacity planning, you can optimize resource allocation, avoid bottlenecks, and ensure that your system scales effectively as demand grows.
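
The alerting and capacity-planning steps above can be sketched in a few lines. The helper names, the "three consecutive samples" rule, and the straight-line forecast are assumptions made for illustration; production alerting systems and forecasting models are considerably more sophisticated.

```python
def error_rate(errors, requests):
    """Fraction of failed requests; 0.0 when there was no traffic."""
    return errors / requests if requests else 0.0

def should_alert(samples, threshold, consecutive=3):
    """Fire only when the last `consecutive` samples all exceed the threshold.

    Requiring a sustained breach avoids paging on a single brief spike.
    """
    if len(samples) < consecutive:
        return False
    return all(value > threshold for value in samples[-consecutive:])

def linear_forecast(values, steps_ahead):
    """Fit a least-squares line to equally spaced samples and extrapolate."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples to fit a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    slope_num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope_den = sum((x - mean_x) ** 2 for x in range(n))
    slope = slope_num / slope_den
    intercept = mean_y - slope * mean_x
    return intercept + slope * (n - 1 + steps_ahead)

# CPU usage (%) sampled once per minute; the values are invented.
cpu_alert = should_alert([70, 85, 90, 95], threshold=80)

# Weekly peak requests per second, projected two weeks ahead.
projected_peak = linear_forecast([100, 110, 120, 130], steps_ahead=2)
```

A linear trend is only a first approximation. Seasonal traffic or step changes after a launch call for a more careful model, but even a rough projection helps start the capacity conversation early.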

Maximizing the Value of Logs

Logs provide detailed context for system events. To extract maximum value from logs:

- Implement Structured Logging: Use a consistent, structured format for your logs, such as JSON. Structured logs are easier to parse, search, and analyze because they follow a predictable pattern. This consistency allows you to automate the extraction of key fields, filter logs based on specific criteria, and perform more advanced queries, making your logs much more useful for troubleshooting and analysis.

- Centralize Log Management: Aggregate logs from all sources into a central repository for unified analysis. Tools like Elasticsearch, Logstash, and Kibana (the ELK stack) can help you achieve unified log management and analysis.

- Use Log Analytics: Utilize log analytics tools to gain insights from your log data. These tools can help you identify patterns, detect anomalies, and correlate events across your system. For example, you can use them to spot trends in error logs, understand the sequence of events leading to an incident, or monitor application behavior over time.

- Balance Retention and Cost: Logs can grow rapidly in volume, leading to significant storage costs. To manage this, implement log retention policies that balance the need to keep historical data for troubleshooting, auditing, or compliance purposes with the costs of storing that data. For instance, you might retain critical logs for a longer period while archiving or discarding less important logs after a shorter time.
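
As an example of structured logging, the sketch below emits JSON log lines using only Python's standard `logging` module. The `JsonFormatter` class, the `build_logger` helper, and the `context` key passed through `extra` are choices made for this illustration, not a standard convention.

```python
import io
import json
import logging

class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record):
        payload = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Fields passed via extra={"context": {...}}; the key name is our choice.
        payload.update(getattr(record, "context", {}))
        return json.dumps(payload)

def build_logger(name, stream):
    """Create a logger that writes JSON lines to the given stream."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    return logger

# An in-memory buffer stands in for stdout or a log file.
buffer = io.StringIO()
log = build_logger("checkout", buffer)
log.info("order placed", extra={"context": {"order_id": "A-1001", "amount": 42.5}})

entry = json.loads(buffer.getvalue())
```

Because every line is valid JSON with the same top-level keys, a central log store can index fields such as `order_id` directly instead of relying on fragile text parsing.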

Integrating SigNoz for Comprehensive Observability

SigNoz is an open-source observability platform that can significantly enhance your observability architecture. Here's how to leverage SigNoz:

- Installation: SigNoz offers both cloud and self-hosted options. Choose the one that best fits your needs.

- Data Collection: Use OpenTelemetry’s SDKs and the OpenTelemetry Collector to gather logs, metrics, and traces from your applications.

- Visualization: Utilize SigNoz's built-in dashboards or create custom views for your specific needs.

- Alerting: Set up alerts based on logs, metrics, or trace data to proactively identify issues.

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Instructions for self-hosting are available in the SigNoz documentation.

Overcoming Common Challenges in Observability Implementation

Implementing an observability architecture requires addressing several key considerations:

- Data Volume: Use intelligent sampling and aggregation to manage high data volumes without losing important insights.

- Cost Management: Optimize data retention policies and leverage cloud-native storage solutions to control costs.

- Data Quality: Implement standardized logging practices and data validation to ensure consistent, high-quality data.

- Cultural Adoption: Foster a culture of observability by providing training and highlighting the benefits to all teams.
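
For the data-volume challenge, one possible sampling policy is to keep every trace that contains an error or is unusually slow, plus a small fixed share of the rest. The sketch below assumes the decision is made after a trace has completed; the field names, the 1000 ms threshold, and the 10% baseline are illustrative values only.

```python
import hashlib

def keep_trace(trace, latency_threshold_ms=1000, baseline_rate=0.1):
    """Decide whether to retain a completed trace."""
    if trace["error"]:
        return True  # always keep failures
    if trace["duration_ms"] >= latency_threshold_ms:
        return True  # always keep slow requests
    # Hash the trace ID so the decision is reproducible for a given trace.
    digest = hashlib.sha256(trace["trace_id"].encode()).digest()
    bucket = int.from_bytes(digest[:8], "big")  # integer in [0, 2**64)
    return bucket < baseline_rate * 2**64

# Invented example traces.
traces = [
    {"trace_id": "a1", "error": True, "duration_ms": 20},
    {"trace_id": "b2", "error": False, "duration_ms": 2500},
    {"trace_id": "c3", "error": False, "duration_ms": 30},
]
retained = [t["trace_id"] for t in traces if keep_trace(t, baseline_rate=0.0)]
```

With the baseline rate set to zero, only the error and slow traces survive. Raising it keeps a share of healthy traffic for comparison, at the cost of more storage.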

Future Trends in Observability Architecture

As technology evolves, so does observability. Keep an eye on these emerging trends:

- AI-Driven Anomaly Detection: Machine learning and AI are becoming more common in observability. These technologies will help automatically detect unusual patterns in your system's data, predicting potential issues before they become major problems. This means less manual monitoring and faster responses to issues.

- Edge Computing Observability: With the rise of edge computing, where data processing happens closer to the source (like IoT devices), observability tools will need to adapt. Observability solutions will become more capable of handling data from distributed, low-latency environments, ensuring that even edge systems are monitored effectively.

- AIOps Integration: AIOps (Artificial Intelligence for IT Operations) is about using AI to automate IT operations. Observability tools will increasingly integrate with AIOps platforms, allowing for automated responses to incidents. This means that instead of just alerting you to a problem, the system might automatically take steps to fix it.

- Advanced Visualization: As systems grow more complex, understanding their behavior becomes more challenging. New techniques in data visualization will make it easier to explore and interpret complex data, helping you quickly grasp what's happening in your system and where attention is needed.

Key Takeaways

- Observability architecture is essential for managing modern, complex IT systems.

- Implement a holistic approach covering logs, metrics, and traces.

- Design your architecture for scalability, flexibility, and ease of use.

- Leverage tools like SigNoz for comprehensive observability solutions.

- Continuously evolve your observability strategy to meet changing needs.

FAQs

What's the difference between monitoring and observability?

Monitoring focuses on tracking predefined sets of metrics and logs to detect known issues. Observability, on the other hand, provides a more comprehensive view of your system, allowing you to investigate and understand unforeseen issues by exploring data from multiple angles.

How do I choose the right observability tools for my organization?

Consider factors such as your tech stack, scale of operations, budget, and specific observability needs. Look for tools that support all three pillars of observability (logs, metrics, and traces), offer good integration capabilities, and provide user-friendly interfaces. Open-source solutions like SigNoz can be a good starting point.

Can observability architecture help with compliance and security?

Yes, observability can significantly enhance compliance and security efforts. By providing comprehensive logs and audit trails, it helps in meeting regulatory requirements. Additionally, observability can help detect security anomalies and provide insights for forensic analysis in case of security incidents.

How do I calculate the ROI of implementing an observability architecture?

To calculate ROI, consider factors such as:

- Reduction in downtime and associated costs

- Improved efficiency in troubleshooting and debugging

- Enhanced customer satisfaction due to better system performance

- Optimized resource allocation and associated cost savings

Compare these benefits against the costs of implementing and maintaining the observability architecture.
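
As a rough worked example, ROI can be expressed as (total benefits - total costs) / total costs. Every figure below is a placeholder invented for illustration, not a benchmark. The hard part in practice is estimating the benefit side, such as what an hour of downtime actually costs your business.

```python
def observability_roi(annual_benefits, annual_costs):
    """Return ROI as a fraction: (benefits - costs) / costs."""
    total_benefit = sum(annual_benefits.values())
    total_cost = sum(annual_costs.values())
    if total_cost == 0:
        raise ValueError("total cost must be greater than zero")
    return (total_benefit - total_cost) / total_cost

# Placeholder annual figures; substitute your own estimates.
benefits = {
    "reduced_downtime": 120_000,
    "faster_troubleshooting": 60_000,
    "resource_savings": 20_000,
}
costs = {
    "tooling_and_storage": 50_000,
    "engineering_time": 50_000,
}

roi = observability_roi(benefits, costs)
print(f"ROI: {roi:.0%}")
```

Benefits such as customer satisfaction are harder to price, so it can help to compute the ROI twice: once with only the measurable items, and once with conservative estimates for the rest.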