Understanding Observability Pipelines - A Practical Guide

Observability pipelines are specialized systems that streamline the collection, processing, and analysis of telemetry data (such as logs, metrics, and traces), enabling organizations to gain deep insights into their applications' performance, security, and behavior. By centralizing data management, improving data quality, and optimizing costs, observability pipelines have become indispensable for modern DevOps and security teams.

This article covers the key components, benefits, challenges, and best practices for implementing observability pipelines, and explores the future trends shaping their evolution.

What is an Observability Pipeline?

An observability pipeline, also known as a telemetry pipeline, manages, optimizes, and analyzes telemetry data (metrics, logs, and traces) from multiple sources, ensuring that the data is processed and sent to the appropriate observability tools.

Observability is the ability to understand and diagnose the behavior and performance of a system by examining its outputs. It involves gathering, monitoring, and analyzing telemetry data to gain a thorough understanding of system behavior, performance, and potential security risks.

Observability pipelines parse and shape data into the correct format, route it to the appropriate observability tools, optimize it by reducing low-value data, and enrich it with additional context. This enables teams to make informed decisions while significantly reducing costs.

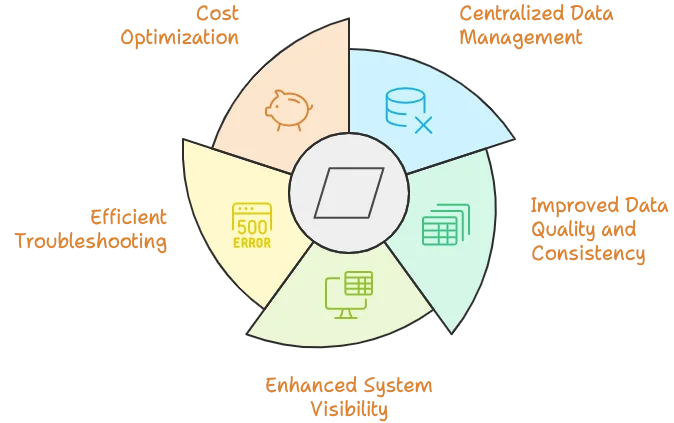

Benefits of Implementing an Observability Pipeline

- Centralized Data Management: Observability pipelines streamline the collection, processing, and storage of observability data, making it easier to manage large volumes of data efficiently.

- Improved Data Quality and Consistency: By filtering and enriching raw data, observability pipelines ensure that only relevant, high-quality data is used for analysis, leading to more meaningful insights.

- Enhanced System Visibility: Observability pipelines correlate data from various sources, providing a comprehensive view of the system’s performance and health.

- Efficient Troubleshooting: With organized and accessible data, observability pipelines enable faster identification and resolution of issues, reducing downtime.

- Cost Optimization: By managing data efficiently, observability pipelines help reduce storage and processing costs, optimizing overall expenses.

Why Observability Pipelines are Crucial for Modern Systems

Modern distributed systems generate massive amounts of telemetry data from various sources. This data explosion presents several challenges:

- Data Volume and Variety: Logs, metrics, and traces are produced at an unprecedented scale, requiring methods to handle and analyze this data efficiently.

- Data Quality: Raw data is often noisy and inconsistent, making it difficult to extract meaningful insights without proper processing.

- System Complexity: Microservices, containerization, and cloud-native architectures introduce numerous components that must be monitored, increasing the complexity of the observability landscape.

- Cost Management: The storage and processing of large volumes of observability data can be prohibitively expensive if not managed efficiently.

How Observability Pipelines Work

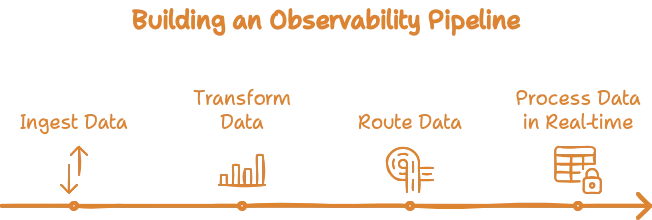

Observability pipelines operate through a series of stages that ensure the efficient handling of data from collection to analysis:

- Data Ingestion: The pipeline begins with data collectors and agents, which gather data from various sources, including application logs, system metrics, and distributed traces.

- Data Transformation: Raw data undergoes transformation, where it is normalized, filtered, and enriched with additional context to make it actionable and relevant.

- Data Routing: Processed data is sent to the appropriate destinations, such as time-series databases for metrics, log management systems for logs, and tracing backends for distributed traces.

- Real-time Processing: Many observability pipelines support stream processing, allowing for immediate analysis and alerting on incoming data. This capability is essential for proactive monitoring and rapid response to issues.
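To make these stages concrete, here is a minimal Python sketch of the transform-and-route flow. It does not reflect how any particular pipeline tool works internally: the `Record` type, the `transform` and `run_pipeline` functions, the level-based filter, the `deployment.environment` enrichment, and the destination names in `ROUTES` are all hypothetical placeholders, and ingestion is simulated by passing in a list.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Severity order used by the (hypothetical) filter stage.
LEVELS = ["DEBUG", "INFO", "WARN", "ERROR"]

# Hypothetical routing table: one destination per telemetry type.
ROUTES = {"metric": "tsdb", "log": "log_store", "trace": "tracing_backend"}


@dataclass
class Record:
    kind: str                                       # "log", "metric", or "trace"
    body: dict                                      # the telemetry payload
    attributes: dict = field(default_factory=dict)  # context added by enrichment


def transform(record: Record, min_level: str = "INFO",
              env: str = "production") -> Optional[Record]:
    """Normalize, filter, and enrich one record in place; return None to drop it."""
    if record.kind == "log":
        # Normalize: upper-case the level so "warn" and "WARN" compare equal.
        level = str(record.body.get("level", "INFO")).upper()
        record.body["level"] = level
        # Filter: drop logs below the minimum level (unknown levels count as INFO).
        rank = LEVELS.index(level) if level in LEVELS else LEVELS.index("INFO")
        if rank < LEVELS.index(min_level):
            return None
    # Enrich: attach deployment context that downstream tools can query on.
    record.attributes.setdefault("deployment.environment", env)
    return record


def run_pipeline(records: List[Record]) -> Dict[str, List[Record]]:
    """Transform each ingested record and group the survivors by destination."""
    outputs: Dict[str, List[Record]] = {dest: [] for dest in ROUTES.values()}
    for record in records:
        processed = transform(record)
        if processed is not None:
            outputs[ROUTES[processed.kind]].append(processed)
    return outputs


batch = [
    Record("log", {"level": "debug", "msg": "cache miss"}),
    Record("log", {"level": "error", "msg": "payment failed"}),
    Record("metric", {"name": "http.requests", "value": 42}),
]
outputs = run_pipeline(batch)
# The debug log is dropped; the error log goes to "log_store", the metric to "tsdb".
```

A real pipeline would run these stages continuously over a stream rather than a batch, but the shape is the same: each stage passes a record on, modifies it, or drops it.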

Key Components of an Observability Pipeline

- Data Collectors and Agents:

Data collectors and agents are essential for gathering observability data from various sources within an infrastructure or application. These include log collectors, metric collectors, and tracing agents. They capture metrics, logs, and traces, and ensure that this data is transmitted to the central processing layer.

Examples: Fluentd, Logstash, Telegraf, OpenTelemetry Collector.

- Central Processing and Aggregation Layer

The central processing and aggregation layer receives data from collectors and agents, then normalizes, filters, and consolidates it to prepare it for storage and analysis.

Examples: Apache Kafka, Apache Flink, AWS Kinesis.

- Data Storage and Retention Mechanisms

Data storage and retention mechanisms are responsible for efficiently storing processed data and managing its lifecycle. This includes defining how long data is retained and handling archiving and purging.

Examples: Time-series databases for metrics (e.g., InfluxDB, Prometheus), document stores for logs (e.g., Elasticsearch), and specialized tracing backends for distributed traces (e.g., Jaeger, Zipkin).

- Integration with Observability Tools and Platforms

Observability tools ensure that the collected and processed data can be effectively visualized, analyzed, and acted upon. This includes creating dashboards, setting up alerts, and providing insights into system performance. Examples: SigNoz, Grafana, Kibana, Datadog.
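The retention side of the storage layer described above usually comes down to an age-based lifecycle policy. Systems such as Prometheus and Elasticsearch configure retention declaratively rather than in application code, so the following Python sketch only illustrates the decision logic; the tier boundaries and action names in the hypothetical `RETENTION_POLICY` are assumptions, not recommended values.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical lifecycle policy: (maximum age, action), checked in order.
RETENTION_POLICY = [
    (timedelta(days=7), "keep_full_resolution"),
    (timedelta(days=30), "downsample"),
    (timedelta(days=365), "archive"),
]


def lifecycle_action(timestamp: datetime, now: datetime) -> str:
    """Return what the storage layer should do with data of this age."""
    age = now - timestamp
    for max_age, action in RETENTION_POLICY:
        if age <= max_age:
            return action
    return "purge"  # older than every tier: delete it


now = datetime.now(timezone.utc)
print(lifecycle_action(now - timedelta(days=10), now))  # downsample
```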

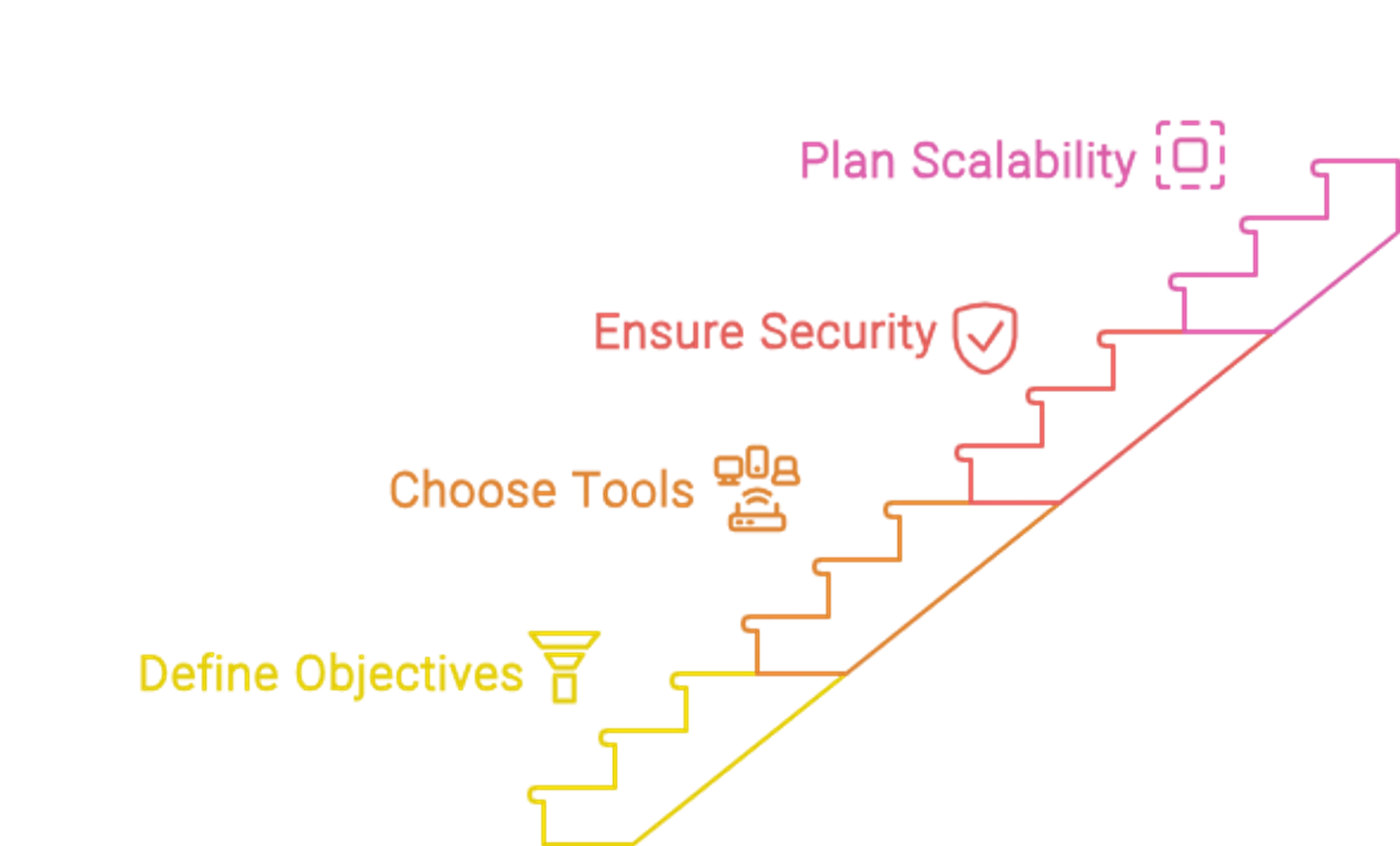

Implementing an Observability Pipeline: Best Practices

Implementing an observability pipeline is a complex task that requires careful planning and execution. Here are some best practices to guide you through the process:

- Define Clear Objectives: Identify what you want to achieve, such as reducing mean time to resolution (MTTR) for incidents or improving application performance. Clear objectives will guide your decisions throughout the implementation process.

- Choose the Right Tools: Select technologies that align with your infrastructure and goals, considering factors like scalability, ease of integration, and community support.

- Ensure Data Security and Compliance: Implement robust security measures to protect sensitive data. This includes using encryption for data in transit and at rest, implementing strict access controls, and ensuring compliance with relevant data protection regulations.

- Plan for Scalability: Design your observability pipeline to handle increasing data volumes as your system grows. Use distributed systems for data processing, implement data sampling techniques, and consider cloud-based solutions for elasticity and scalability.

Common Challenges in Observability Pipeline Implementation

When implementing observability pipelines, you might encounter:

1. Integration Complexity:

Integrating observability tools with existing systems, especially in a microservices architecture, can be complex. Different services may require different monitoring approaches, and ensuring data collection across the entire stack can be difficult.

Solution:

- Standardized Instrumentation: Use standard libraries and frameworks for instrumentation across all services. This ensures consistency in how data is collected and reported.

- Automated Deployment: Integrate observability tools into CI/CD pipelines to automate the deployment and configuration of observability across all services. You can implement this with the following steps:

  - Choose an open-source observability tool (covering monitoring, tracing, and logging), such as SigNoz.

  - Ensure that your application code is instrumented to generate the necessary telemetry data. You can do this by integrating the SDKs or libraries specific to the tools you are using.

  - Use automation tools such as Ansible playbooks to apply instrumentation across all services during the CI/CD process.

  - Integrate observability as a dedicated stage in your CI/CD pipeline, alongside your existing stages. This stage can include:

    - Instrumentation Verification: Ensure that the code is properly instrumented before it is deployed.

    - Monitoring Configuration: Automatically configure monitoring tools to start tracking new or updated services.

    - Logging Setup: Set up centralized logging configurations, such as sending logs to SigNoz Cloud.

  - Add automated tests to verify that observability is correctly configured after deployment. This can include checking that all expected telemetry data is generated and flowing to the correct destinations.
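A post-deployment check like the one in the last step can be as simple as confirming that every expected service has sent each type of signal. The Python sketch below assumes you can fetch a sample of recently received telemetry from your backend as dictionaries with `service` and `signal` keys; that record shape and the `missing_telemetry` helper are hypothetical, and how you query the backend depends on the tool you chose.

```python
from typing import Dict, Iterable, Set

SIGNALS = ("metrics", "logs", "traces")


def missing_telemetry(expected_services: Set[str],
                      received: Iterable[dict]) -> Dict[str, Set[str]]:
    """Return, per signal type, the expected services that sent no data.

    An empty result means every service reported metrics, logs, and traces.
    """
    seen: Dict[str, Set[str]] = {signal: set() for signal in SIGNALS}
    for item in received:
        signal = item.get("signal")
        if signal in seen:
            seen[signal].add(item.get("service"))
    gaps = {signal: expected_services - seen[signal] for signal in SIGNALS}
    # Keep only the signal types that actually have missing services.
    return {signal: missing for signal, missing in gaps.items() if missing}


# Hypothetical sample, e.g. fetched from the backend a few minutes after deploy.
received = [
    {"service": "checkout", "signal": "traces"},
    {"service": "checkout", "signal": "logs"},
    {"service": "payments", "signal": "logs"},
]
print(missing_telemetry({"checkout", "payments"}, received))
```

In a CI job, a non-empty result would fail the stage, for example by exiting with a non-zero status.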

2. Data Overload:

With observability, you collect a large volume of logs, metrics, and traces. Managing and making sense of this vast amount of data can be overwhelming, leading to noise rather than actionable insights.

Solution:

- Prioritize Key Metrics: Collect and analyze metrics directly related to business outcomes and system performance.

- Data Aggregation and Filtering: To reduce noise in your system's logs, use tools that support data aggregation, filtering, and sampling. Setting appropriate log levels lets you focus on capturing the most relevant information instead of analyzing unnecessary detail. For more on filtering and managing logs effectively, consider exploring the strategies discussed in our article on syslog.

- Automated Anomaly Detection: Use machine learning methods to automatically detect anomalies and reduce false positives.
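Production anomaly detection often relies on machine learning models, but a rolling statistical baseline is a simpler stand-in that shows the core idea of a dynamic threshold. The hypothetical `RollingZScoreDetector` below flags a value when it is more than `threshold` standard deviations from the mean of a recent window (a z-score test, not an ML method); the window size, warm-up length, and threshold are assumptions to tune for your data.

```python
from collections import deque
from statistics import mean, pstdev


class RollingZScoreDetector:
    """Flag values far from a rolling baseline (a z-score test, not an ML model)."""

    def __init__(self, window: int = 30, threshold: float = 3.0, warmup: int = 5):
        self.history = deque(maxlen=window)  # recent non-anomalous values
        self.threshold = threshold           # allowed distance in standard deviations
        self.warmup = warmup                 # points needed before judging anything

    def observe(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the current baseline."""
        is_anomaly = False
        if len(self.history) >= self.warmup:
            baseline = mean(self.history)
            spread = pstdev(self.history)
            # The effective threshold moves as the baseline's mean and spread shift.
            if spread > 0 and abs(value - baseline) / spread > self.threshold:
                is_anomaly = True
        # Keep anomalies out of the baseline so one spike does not mask the next.
        if not is_anomaly:
            self.history.append(value)
        return is_anomaly


detector = RollingZScoreDetector()
latencies_ms = [100, 102, 98, 101, 99, 100, 103, 97, 500]
flags = [detector.observe(v) for v in latencies_ms]
print(flags)  # only the final 500 ms spike is flagged
```

Excluding flagged values from the window is a deliberate choice: if the spike were added, it would inflate the standard deviation and make the next spike harder to catch.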

3. Complex Infrastructure:

As systems become more intricate and distributed, traditional monitoring methods become insufficient. In microservice architectures in particular, tracking requests across multiple services and understanding their impact on overall performance can be daunting.

Solution:

- Distributed Tracing: Implement distributed tracing to follow requests as they traverse different services. This helps you understand latency and pinpoint the root cause of issues.

- Automated Correlation: Use tools like SigNoz that automatically correlate logs, metrics, and traces, providing a comprehensive view of an incident or performance issue.
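Distributed tracing works by passing a trace context from service to service, so that every span, and any log line that records the trace ID, can be stitched back together. The Python sketch below builds and parses the W3C Trace Context `traceparent` header (`00-<trace-id>-<parent-id>-<trace-flags>`) by hand to show the mechanism; in practice an OpenTelemetry SDK propagates this header for you. The `handle_request` function is a hypothetical stand-in for a service handler.

```python
import re
import secrets
from typing import Dict, Optional, Tuple

# W3C Trace Context: version "00", 32-hex trace-id, 16-hex parent-id, 2-hex trace-flags.
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def make_traceparent(trace_id: Optional[str] = None, sampled: bool = True) -> str:
    """Build a traceparent header for a new span, starting a new trace if needed."""
    trace_id = trace_id or secrets.token_hex(16)  # 16 random bytes -> 32 hex chars
    span_id = secrets.token_hex(8)                # 8 random bytes -> 16 hex chars
    return f"00-{trace_id}-{span_id}-{'01' if sampled else '00'}"


def parse_traceparent(header: str) -> Tuple[str, str, bool]:
    """Return (trace_id, parent_span_id, sampled) from a traceparent header."""
    match = TRACEPARENT_RE.match(header)
    if match is None:
        raise ValueError(f"invalid traceparent header: {header!r}")
    trace_id, parent_id, flags = match.groups()
    return trace_id, parent_id, (int(flags, 16) & 0x01) == 1


def handle_request(headers: Dict[str, str]) -> Tuple[Dict[str, str], dict]:
    """Hypothetical service handler: continue the caller's trace and tag a log line."""
    trace_id, parent_id, sampled = parse_traceparent(headers["traceparent"])
    # Same trace ID, new span ID: the next service sees this span as its parent.
    outgoing = {"traceparent": make_traceparent(trace_id, sampled)}
    # Recording the trace ID in logs is what lets a backend correlate logs with traces.
    log_line = {"msg": "charging card", "trace_id": trace_id, "parent_span_id": parent_id}
    return outgoing, log_line


root = make_traceparent()  # e.g. created at the API gateway
outgoing, log_line = handle_request({"traceparent": root})
print(root, outgoing["traceparent"], log_line, sep="\n")
```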

4. Security and Compliance:

Collecting and storing telemetry data can raise security and compliance concerns, especially in regulated industries. Sensitive information may inadvertently be captured in logs or traces.

Solution:

- Access Controls: Apply strict access control to observability data, ensuring that only authorized personnel can view or modify it.

- Compliance Auditing: Use tools that provide auditing and reporting capabilities to ensure your observability implementation complies with industry regulations.

Optimizing your Observability Pipeline

To get the most out of your observability pipeline:

- Implement Data Filtering and Sampling: To reduce the volume of data without losing critical information, implement dynamic sampling techniques based on the importance of requests and filter out noisy or redundant data early in the pipeline.

- Develop Effective Data Retention Policies: Balance data availability with storage costs by keeping high-resolution data for short periods and aggregating or downsampling older data.

- Leverage Machine Learning for Anomaly Detection: Use machine learning algorithms to identify unusual patterns in your data. Implement automated baseline creation and set up dynamic thresholds for alerts, enabling proactive monitoring and faster issue resolution.

- Continuously Monitor and Refine: Treat your observability pipeline as a critical system that requires ongoing monitoring and optimization. Regularly review performance metrics and adjust your pipeline based on changing needs and evolving system requirements.
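One way to implement importance-based sampling is to always keep the traces that matter most (errors and slow requests) and keep only a fixed fraction of the rest. The Python sketch below makes that fraction deterministic by hashing the trace ID, so every component that sees the same trace reaches the same decision. `keep_trace`, its parameters, and the 10% and 1000 ms defaults are hypothetical. Note that error status and duration are only known once a trace completes, so a rule like this fits tail-based sampling, where spans are buffered before the decision is made.

```python
import hashlib


def keep_trace(trace_id: str, is_error: bool, duration_ms: float,
               base_rate: float = 0.1, slow_ms: float = 1000.0) -> bool:
    """Keep all error and slow traces; keep roughly `base_rate` of the rest."""
    if is_error or duration_ms >= slow_ms:
        return True  # high-value traces are never sampled away
    # Hash the trace ID so the same trace always gets the same decision.
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") / 2**32  # roughly uniform in [0, 1)
    return bucket < base_rate


kept = sum(keep_trace(f"trace-{i}", is_error=False, duration_ms=20) for i in range(10_000))
print(kept)  # roughly 1,000 of 10,000 fast, successful traces
```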

SigNoz: A Modern Solution for Observability Pipelines

SigNoz is an open-source observability platform that simplifies the setup and maintenance of observability pipelines. It integrates metrics, logs, and traces into a single platform, providing a comprehensive solution for current observability requirements.

For a comprehensive guide to setting up observability with SigNoz and OpenTelemetry, including detailed instructions and best practices, refer to this article: Achieving Observability with SigNoz and OpenTelemetry.

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

Future Trends in Observability Pipelines

The field of observability is rapidly evolving. Here are key trends to watch:

- AI-Driven Analysis: Machine learning will play a larger role in automated root cause analysis, predictive alerting, and anomaly detection. These technologies will help operators identify and resolve issues more quickly and accurately.

- Edge Computing: As more computing moves to the edge, observability pipelines will need to extend to these environments. This shift will enable local data processing, reduce latency for critical alerts, and improve data privacy by keeping sensitive information closer to its source.

- eBPF Integration: Extended Berkeley Packet Filter (eBPF) technology will enhance observability by providing deeper kernel-level insights and more efficient data collection. This technology allows for more granular monitoring and improved performance without the need for intrusive agents.

- Unified Observability Platforms: The trend toward unified observability platforms will continue, with vendors increasingly converging on seamless integration of metrics, logs, and traces. These platforms will reduce cognitive load for operators, simplify tooling, and provide a more holistic view of system performance and health.

Key Takeaways

- Observability pipelines are essential for managing the complexity of modern, distributed systems.

- They provide centralized data collection, processing, and routing capabilities that enhance system visibility and streamline troubleshooting.

- Implementing an observability pipeline can lead to significant improvements in system performance, reduced costs, and faster resolution of issues.

- Continuous optimization and adaptation are crucial for maintaining the effectiveness of your observability pipeline over time.

FAQs

What's the difference between a monitoring pipeline and an observability pipeline?

A monitoring pipeline typically focuses on collecting and analyzing predefined metrics. An observability pipeline, on the other hand, handles a broader range of data types (metrics, logs, and traces) and allows for more flexible querying and analysis of system behavior.

How do observability pipelines help reduce data storage costs?

Observability pipelines can reduce storage costs by:

- Filtering out unnecessary data early in the process, so that only relevant data is stored.

- Implementing intelligent sampling techniques that reduce data volume without sacrificing critical information.

- Aggregating and downsampling data over time, which decreases storage requirements while preserving the ability to analyze trends and historical patterns.

- Routing data to appropriate storage tiers based on its value and access patterns, optimizing resource usage.
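Aggregation and downsampling can be illustrated with a small example: per-second samples collapsed into per-minute rows. This Python sketch keeps the minimum, mean, and maximum for each bucket, since a mean alone would hide short spikes; the `downsample` function and the 60-second bucket size are illustrative assumptions.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple


def downsample(points: List[Tuple[int, float]],
               bucket_seconds: int = 60) -> List[Tuple[int, float, float, float]]:
    """Collapse (unix_ts, value) points into (bucket_start, min, mean, max) rows."""
    buckets: Dict[int, List[float]] = defaultdict(list)
    for ts, value in points:
        buckets[ts - ts % bucket_seconds].append(value)  # align to bucket start
    return [(start, min(vals), mean(vals), max(vals))
            for start, vals in sorted(buckets.items())]


# Two minutes of per-second samples become two per-minute rows.
points = [(t, float(t % 60)) for t in range(120)]
print(downsample(points))  # [(0, 0.0, 29.5, 59.0), (60, 0.0, 29.5, 59.0)]
```

Here 120 stored points shrink to 2 rows, a 60x reduction, while the min and max columns still show the full range each minute covered.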

Can observability pipelines handle both structured and unstructured data?

Yes, observability pipelines are designed to handle diverse data types. They can process structured data like metrics, semi-structured data like JSON logs, and unstructured data like plain text logs. The pipeline’s transformation stage can normalize and enrich the data, making it more useful for analysis and decision-making.
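As an illustration of that normalization step for logs, the Python sketch below maps a JSON log line and a plain-text log line onto the same fields. The plain-text layout (`<timestamp> <LEVEL> <message>`), the JSON key names, and the `normalize` function are assumptions; real pipelines usually configure a parser per source rather than hard-coding one format. Lines that match neither format are kept with a marker instead of being silently dropped.

```python
import json
import re

# Hypothetical plain-text layout: "2024-05-01T12:00:00Z ERROR payment failed"
PLAIN_RE = re.compile(r"^(?P<timestamp>\S+)\s+(?P<level>[A-Z]+)\s+(?P<message>.*)$")


def normalize(raw: str) -> dict:
    """Turn a JSON or plain-text log line into one common schema."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        data = None
    if isinstance(data, dict):  # semi-structured: map known keys onto the schema
        return {
            "timestamp": data.get("ts") or data.get("timestamp"),
            "level": str(data.get("level", "INFO")).upper(),
            "message": data.get("msg") or data.get("message", ""),
            "format": "json",
        }
    match = PLAIN_RE.match(raw.strip())
    if match:  # unstructured: extract fields with the assumed layout
        return {**match.groupdict(), "format": "text"}
    # Fall back to keeping the raw line so nothing is lost.
    return {"timestamp": None, "level": "UNKNOWN", "message": raw, "format": "unparsed"}


print(normalize('{"ts": "2024-05-01T12:00:00Z", "level": "error", "msg": "payment failed"}'))
print(normalize("2024-05-01T12:00:00Z ERROR payment failed"))
```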

What are the security considerations when implementing an observability pipeline?

Key security considerations when implementing an observability pipeline include:

- Encrypting data in transit and at rest to protect against unauthorized access.

- Implementing strong access controls and authentication mechanisms to ensure that only authorized personnel can access sensitive data.

- Ensuring compliance with data protection regulations, such as GDPR or HIPAA, to avoid legal and financial penalties.

- Regularly auditing and monitoring the pipeline itself to detect and address potential security issues.

- Sanitizing sensitive information before it enters the pipeline to prevent accidental exposure or misuse of critical data.
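A minimal sketch of that sanitization step, assuming records arrive as flat dictionaries: keys known to be sensitive are masked outright, and free-text values are scrubbed with regular expressions. The `sanitize` function, `SENSITIVE_KEYS`, and both patterns are hypothetical and deliberately simple. Regex-based redaction will miss formats it was not written for, so it works best as a last line of defense rather than a substitute for not logging sensitive data in the first place.

```python
import re

# Hypothetical patterns; extend them for the data your services actually emit.
REDACTION_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "card": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13 to 16 digits, optional separators
}
SENSITIVE_KEYS = {"password", "authorization", "ssn"}


def sanitize(record: dict) -> dict:
    """Return a copy of `record` with sensitive keys masked and text values scrubbed."""
    clean = {}
    for key, value in record.items():
        if key.lower() in SENSITIVE_KEYS:
            clean[key] = "[REDACTED]"  # drop the value entirely
        elif isinstance(value, str):
            for name, pattern in REDACTION_PATTERNS.items():
                value = pattern.sub(f"[{name.upper()}]", value)
            clean[key] = value
        else:
            clean[key] = value  # non-string values pass through unchanged
    return clean


print(sanitize({
    "msg": "user bob@example.com paid with 4111 1111 1111 1111",
    "password": "hunter2",
    "status": 200,
}))
# {'msg': 'user [EMAIL] paid with [CARD]', 'password': '[REDACTED]', 'status': 200}
```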