Optimizing OpenAI API Performance - Reducing Latency

OpenAI's powerful language models have revolutionized AI-powered applications, but their effectiveness hinges on one critical factor: latency. As a developer, you know that slow API response times can frustrate users and hinder your application's performance. How can you harness the full potential of OpenAI's API while ensuring lightning-fast responses? This guide will walk you through practical strategies to optimize your OpenAI API integration, focusing on reducing latency and improving overall performance.

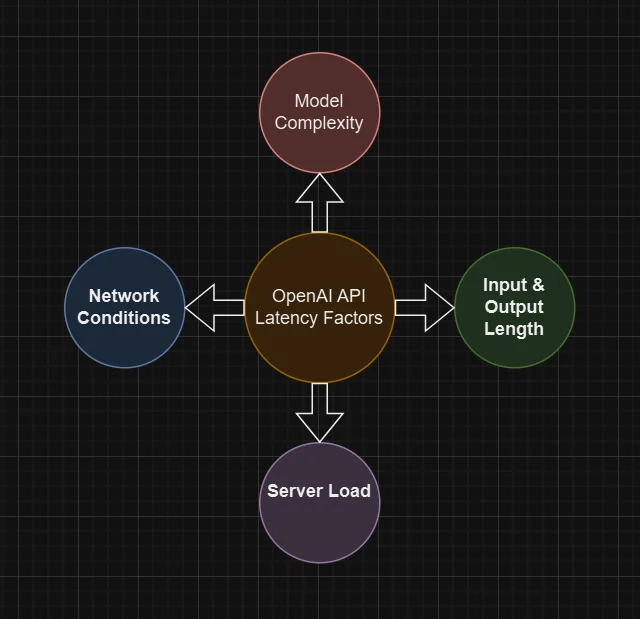

Understanding OpenAI API Latency

API latency refers to the time it takes for the OpenAI API to process your request and return a response. High latency can significantly impact user experience, especially in real-time applications or chatbots. Several factors contribute to OpenAI API response times:

Model complexity: When we talk about latency, the first thing that probably pops into your head is inference speed. This is all about how quickly the language model processes tokens, and it’s typically measured in tokens per minute (TPM) or tokens per second (TPS). One of the biggest factors affecting inference speed is the model size. Generally, smaller models tend to run faster and are more cost-effective. In fact, if you use them wisely, they can sometimes even outperform larger models like GPT-4!

To keep the performance high with these smaller models, here are a few strategies you might consider:

- Train Through Examples in Prompts: Including a few-shot example or two within your prompts helps the model better understand the desired output by providing concrete examples to follow.

- Fine-Tuning or Distillation: Fine-tuning a smaller model on your specific data can really help tailor its responses to your needs. Distillation, on the other hand, is a process where you train a smaller model to mimic the behavior of a larger one, capturing its strengths without the overhead.

Input length: While it’s true that reducing the number of input tokens can lower latency, it’s often not a game changer. For example, trimming your prompt by 50% might only improve latency by around 1-5% (ref). Unless you're dealing with really massive contexts, like lengthy documents or complex images, it might be better to focus your energy on other optimization strategies. But what if you are working with those larger contexts or are determined to squeeze out every last bit of performance after trying everything else?

Here are some techniques to help you reduce input tokens:

- Fine-Tune the Model: This can help eliminate the need for lengthy instructions or examples, making your prompts more concise while still getting great results.

- Filter Context Input: Clean up your context by pruning retrieval-augmented generation (RAG) results or stripping away unnecessary HTML. This can help streamline your inputs significantly.

- Maximize Shared Prompt Prefix: Put changing parts like RAG results or conversation history towards the end of the prompt. This way, the beginning of the prompt can be cached (stored for reuse) by many LLM providers, so the model doesn’t have to repeatedly process the same information. This helps reduce the number of tokens processed in each request, making everything faster.

Output length:

- For Natural Language: If you want more concise responses, try asking the model to keep it brief—something like “under 20 words” or “be concise” can work wonders. You can also use few-shot examples or fine-tune the model to encourage shorter replies.

- For Structured Output: In this case it’s all about minimizing syntax. Shorten function names, omit named arguments, and combine parameters whenever you can.

- Use

max_tokensorstop_tokens: You can set these parameters to cut off generation early, saving both time and tokens.

Note: Every output token you trim is a (milli)second gained!

Server load: When you're dealing with API responses, server load can really play a significant role. High traffic can lead to slower responses, which is something you definitely want to avoid. One effective way to manage this is through load balancing. This technique helps distribute requests evenly across your available servers, preventing any one server from becoming a bottleneck. Placing a load balancer in front of your servers can automatically route incoming requests to the server with the least load. This helps ensure that no single server is overwhelmed.

Network conditions: Your network conditions play a crucial role in how quickly data moves between your application and the API. If you have a strong, stable connection, you’ll enjoy faster response times and smoother interactions. On the flip side, a weak or unstable connection can lead to delays and interruptions, making your experience less enjoyable.

Quick Guide: 5 Steps to Reduce OpenAI API Latency

Optimize input tokens and prompt engineering:

To keep your API responses fast, it’s essential to craft concise and clear prompts, because when it comes to generating a lot of tokens, it can lead to increased latency. Here are some effective strategies to reduce that:

- Lower

max_tokens: If your requests are generating a similar number of tokens, setting a lowermax_tokensparameter can help cut down on latency. It’s all about giving the model less to work with. - Include Stop Sequences: Adding stop sequences can prevent the model from generating unnecessary tokens. For instance, if you want a list with a specific number of items, you could use

11. as a stop sequence. This way, the generation stops once it hits11, giving you just the 10 items you want. - Generate Fewer Completions: Lower the values of

nandbest_ofwhenever possible. Here,nrefers to how many completions you want for each prompt, whilebest_ofis about selecting the one with the highest log probability per token. If both are set to 1 (the default), the total number of generated tokens will be at most equal to yourmax_tokens.

Note: If you set

norbest_ofto greater than 1, each request will produce multiple outputs. In this case, you can think of the total number of generated tokens as[max_tokens * max(n, best_of)].- Lower

Implement caching strategies: One great way to boost your response times is by implementing caching strategies. By storing and reusing responses for frequent or similar requests, you can save yourself from making repeated calls to the API. To make the most of caching, your application should be designed to pull from cached data whenever possible. Remember to invalidate that cache whenever new information comes in. There are different ways through which you could set this up:

- Database Storage: If your application needs to handle larger datasets or requires persistent storage, consider using a database, as it is great for keeping data organized and easily accessible.

- Filesystem Storage: For simpler applications, storing data in the filesystem might be a good fit. This approach is straightforward and can be useful for quick access to static files or logs.

- In-Memory Cache: If speed is your priority, an in-memory cache is a fantastic choice. This method allows for rapid access to frequently used data, significantly reducing response times.

Use efficient API calling patterns: To optimize your API usage, focus on efficient calling patterns. One key strategy is to batch requests and avoid unnecessary calls.

Batching is especially helpful when sending multiple requests to the same endpoint. Instead of making individual requests for each prompt, combine them into a single request, as the prompt parameter can accommodate up to 20 unique prompts. This reduces the number of calls but may increase the total tokens generated, potentially slowing response times.

If instant responses aren’t critical, utilize the Batch API to submit large collections of requests without impacting your synchronous request limits, streamlining processing without sacrificing performance. For quicker responses, keep in mind the OpenAI API’s limits on requests and tokens per minute. If you're reaching the requests-per-minute cap but still have token capacity, batching multiple tasks can boost your throughput, especially with smaller models that manage larger batches effectively. When sending a batch, just pass a list of strings to the prompt parameter, making it a simple yet effective change that can bring significant benefits.

Before we see some examples, let’s see how to set the

API KEYfor Windows PowerShell:$env:OPENAI_API_KEY="your_api_key_here"Case 1: Program without Batching

from openai import OpenAI client = OpenAI() num_stories = 10 prompt = "Once upon a time," # Serial example, with one story completion per request for _ in range(num_stories): response = client.completions.create( model="curie", prompt=prompt, max_tokens=20, ) # Print story print(prompt + response.choices[0].text.strip()) # Use .strip() to remove any leading/trailing whitespace- Execution: Each story is generated one at a time in a loop.

- API Calls: The code makes 10 separate API calls (one for each story).

- Output Handling: The output is printed immediately after each request, which can lead to slower performance due to the overhead of multiple requests.

Possible Output :

Once upon a time, there was a brave knight who lived in a small village. Once upon a time, a little girl found a magical forest behind her house. Once upon a time, a mysterious dragon appeared in the kingdom, causing panic. ...Note that specific output might vary according to model’s response.

Case 2: Program with batching

from openai import OpenAI # API key will be picked up from the environment variable client = OpenAI() num_stories = 10 prompts = ["Once upon a time,"] * num_stories # Batched example, with 10 story completions per request response = client.completions.create( model="curie", prompt=prompts, max_tokens=20, ) # Match completions to prompts by index stories = ["" for _ in prompts] # Use enumerate to get index for index, choice in enumerate(response.choices): stories[index] = prompts[index] + choice.text.strip() # Print stories for story in stories: print(story)- Execution: All story prompts are sent to the API in a single request.

- API Calls: The code makes just one API call to generate all stories at once.

- Output Handling: The output is processed after the API call completes, which can be more efficient and faster for larger numbers of stories.

Choose the right model: It’s all about picking the right model that fits your needs while keeping an eye on performance and speed. OpenAI’s API offers a range of models, each with its own strengths and complexities. If you’re looking for something that can handle intricate and varied responses, the advanced GPT-4 is your go-to. Just keep in mind that it may take a bit longer to process your requests. On the flip side, if you want quicker and more budget-friendly chat completions, you might consider models like GPT-4o-mini. While they’re faster and cheaper, they might not always hit the mark in terms of accuracy or relevance.

Leverage parallel processing: Why not supercharge your API calls by using asynchronous programming to handle multiple requests at once? When working with a large language model (LLM), parallelization can be a game changer, especially if your tasks don’t depend on each other. Think of it this way: just like two shirts take the same time to dry as one, you can split your calls into parallel requests to save time. But what if your steps do need to happen in a certain order? You can still take advantage of speculative execution. This is especially handy for classification tasks where one outcome is more likely than others, like moderation. Let’s see a quick approach:

- Start step 1 (input moderation) and step 2 (story generation) at the same time.

- Check the result of step 1.

- If it’s not what you expected, you can cancel step 2 (and try again if needed).

- If your guess for step 1 is correct, you’ve effectively run it without any added delay!

Scaling: If you're looking to enhance your application's performance, consider scaling it out horizontally. This approach allows you to manage incoming requests from various sources by deploying extra servers or containers to share the workload. Just remember, if you choose this route, your architecture should be prepared to support multiple nodes, and you'll need effective load balancing mechanisms to distribute traffic evenly.

On the other hand, you might think about vertical scaling, which means upgrading a single node's resources. This could involve boosting your server’s capabilities to handle more load. If you go this route, ensure your application is optimized to leverage these enhanced resources effectively. Both methods have their benefits, so pick the one that best fits your needs!

Analyzing OpenAI API Response Times

Understanding typical response times helps set realistic expectations and identify optimization opportunities. Here's an overview of average latencies for different OpenAI models:

- GPT-3.5-turbo: 500ms - 1500ms

- GPT-4: 1000ms - 3000ms

- Davinci: 1500ms - 3500ms

Note: These times can vary based on input complexity and server load. GPT-3.5 models generally offer faster response times compared to GPT-4, making them suitable for latency-sensitive applications.

To measure API latency accurately:

- Use Built-in Timing Functions: Most programming languages offer built-in functions to measure time. For instance, in Python, you can use

time.time()or thetimeitmodule. This allows you to capture the duration of API calls easily, giving you precise insights into how long each request takes. - Implement Server-side Logging: Setting up logging for your API calls helps you capture more than just response times. By logging the request and response details along with timestamps, you can analyze performance over time, identify patterns, and pinpoint any anomalies or delays.

- Utilize API Monitoring Tools: Tools like SigNoz provide a comprehensive way to monitor your API performance. They can track metrics such as response times, error rates, and throughput, allowing you to visualize trends and identify bottlenecks in real-time. This proactive monitoring helps you react quickly to any performance issues that arise. For OpenAI-specific setup, see the OpenAI monitoring guide.

Benchmarking OpenAI API Performance

Setting up a robust latency tracking system is essential for optimizing your API usage. Here's how to get started:

- Create a Testing Suite: Build a suite that simulates various API calls. This means using different scenarios that your application might encounter. Think about edge cases and typical use cases alike.

- Record Response Times: Capture the response times for various input lengths and model types. It’s important to test not just the average responses but also the extremes, like very short or very long inputs, to see how they affect latency.

- Test from Multiple Locations: Run your tests from various geographic locations. Network latency can vary significantly based on where you’re making requests from, so this helps you understand how geography impacts performance.

- Analyze the Data: Once you have your data, dig into it to identify patterns and outliers in API response times. Look for trends over different times of day or specific types of requests. This analysis can be invaluable for understanding how your application will perform under real-world conditions.

Once you’ve gathered and analyzed your benchmarks, you can use this information to:

- Set Performance Baselines: Establish what "normal" performance looks like for your application. This will help you spot any anomalies quickly.

- Identify Areas for Optimization: By understanding where delays occur, you can target specific areas for improvement. This could mean tweaking your code, adjusting how you interact with the API, or even considering different models.

- Make Informed Decisions: Armed with your data, you can make better choices about model selection and API usage. For instance, you might find that a different model provides faster responses for specific tasks, which could enhance your application’s overall performance.

- Identify areas for optimization

- Make informed decisions about model selection and API usage

Advanced Techniques for Latency Optimization

- Implement streaming responses: Consider implementing streaming responses to enhance your application's interactivity. By using the streaming API, you can start processing responses as soon as the model generates tokens, rather than waiting for the entire output. Just set

stream: truein your request, and you’ll begin receiving tokens right away. While this doesn’t reduce the overall time to get all the tokens, it does speed up the arrival of the first token. This is especially useful if you want to display partial progress or if you plan to halt the generation partway through. It can significantly improve user experience, so it’s definitely worth experimenting with streaming to see how it can benefit your application.

for chunk in openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=[{"role": "user", "content": "Tell me a story"}],

stream=True

):

content = chunk["choices"][0].get("delta", {}).get("content")

if content is not None:

print(content, end="")

- Fine-Tune Models: Consider customizing models to fit your specific needs by fine-tuning them with relevant data. This approach can greatly enhance inference times and ensure that the outputs align closely with your expectations, boosting both speed and accuracy.

- Leverage Azure OpenAI: It can provide reduced latency, particularly in certain regions. Azure's robust infrastructure is designed to optimize connectivity and performance, making it an excellent choice for applications that demand low latency. If you use Azure OpenAI, follow the Azure OpenAI monitoring guide for instrumentation steps.

- Optimize Network Connectivity: Take steps to enhance your application's network performance by utilizing a Content Delivery Network (CDN). This will cache responses closer to your users, reducing load times. Additionally, consider deploying your application nearer to OpenAI’s servers to further minimize latency and create a smoother experience for your users.

Best Practices for OpenAI API Integration

- Implement proper error handling and retries: Use exponential backoff for retries to avoid overwhelming the API during issues. With linear backoff, you retry at fixed intervals (e.g., every 2 seconds), which can still put a consistent load on the API, especially if multiple clients are doing the same thing. On the other hand, exponential backoff increases the wait time between retries (e.g., 2 seconds, 4 seconds, 8 seconds), giving the API more breathing room to recover. This gradual increase in delay helps reduce the likelihood of causing a traffic spike, which could further slowdown the service or trigger rate limits.

import time

import openai

def api_call_with_retry(max_retries=3):

for attempt in range(max_retries):

try:

response = openai.Completion.create(

engine="text-davinci-002",

prompt="Translate the following English text to French: 'Hello, world!'",

max_tokens=60

)

return response

except openai.error.RateLimitError as e:

if attempt == max_retries - 1:

raise

time.sleep(2 ** attempt) # Exponential backoff

- Manage rate limits: Implement a queue system to control the rate of API calls and avoid hitting rate limits. Rate limits are like guardrails for OpenAI’s API, setting boundaries on how often a user or client can access the services within a given timeframe. These restrictions help ensure fair usage and maintain the performance and reliability of the system for everyone.

- Balance latency with cost: It’s crucial to weigh the benefits of faster, pricier models against slower, more budget-friendly alternatives. When transitioning your prototype into production, budgeting for operational costs becomes a key challenge. OpenAI uses a pay-as-you-go pricing model, where charges are based on the number of tokens processed—roughly 1,000 tokens are equivalent to about 750 words. To estimate your expenses, you’ll need to project your token usage by considering factors like traffic levels, user interaction frequency, and the volume of data being processed. A helpful way to think about cost management is to view it as a function of the number of tokens and the price per token. There are two main strategies to reduce costs:

- Lowering the Cost Per Token: This can be achieved by using smaller models for certain tasks, which can help you save money.

- Reducing Token Usage: You can decrease the number of tokens needed by using shorter prompts, fine-tuning your models, or caching frequently asked user queries to avoid repetitive processing.

Note: To assist with cost estimation, you can also try OpenAI’s interactive tokenizer tool. Additionally, the API and playground provide token counts in their responses. Once you have your application running smoothly with the most capable model, experiment with other models to see if they can deliver similar results with lower latency and costs.

Monitoring and Improving API Performance with SigNoz

Real-time monitoring is crucial for maintaining optimal OpenAI API performance. SigNoz which is an open-source APM tool, can help you track and analyze your API calls effectively.

Benefits of using SigNoz for OpenAI API monitoring:

- Real-time Latency Tracking: Get immediate insights into how your API performs at any moment, helping you respond quickly to performance issues.

- Detailed Performance Metrics: Access comprehensive metrics, including response times, error rates, and throughput, to understand your API’s behavior in depth.

- Custom Dashboards for API-Specific Insights: Create tailored dashboards that focus on the metrics most relevant to your OpenAI API usage, allowing for easier monitoring and analysis.

- Anomaly Detection and Alerting: Automatically identify unusual patterns in your API performance and receive alerts, so you can take action before issues impact users.

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

Once set up, use SigNoz to:

- Monitor API Call Latencies Over Time: Track how latencies change over days, weeks, or months to identify trends and make informed decisions.

- Identify Performance Bottlenecks: Dive deep into specific API calls to find where delays occur, whether in processing time, network issues, or other factors.

- Set Up Alerts for Abnormal Response Times: Configure alerts to notify you immediately if response times exceed your defined thresholds, enabling proactive management.

- Analyze the Impact of Optimization Efforts: After implementing changes or optimizations, use SigNoz to evaluate their effectiveness and understand how they influence performance.

Future-proofing Your OpenAI API Integration

- Stay Updated: Make it a habit to regularly check OpenAI’s documentation and changelogs for the latest optimization tips and API updates. You never know when a new feature or a response time improvement might come along therefore staying informed allows you to take full advantage of any enhancements that could really benefit your applications.

- Adapt to New Model Versions: OpenAI is always rolling out new models with better capabilities. Don’t hesitate to dive in and test these models as they become available. Each version may come with its own set of trade-offs in performance and accuracy, so it’s key to evaluate how they fit your needs. You might discover that a newer model offers just the efficiency or quality boost you’ve been looking for.

- Implement A/B Testing: Keep refining your approach by comparing different optimization strategies through A/B testing. For instance, try out various models, configurations, or request formats to see which one delivers the best performance or user experience. This data-driven method empowers you to make decisions grounded in real-world results, not just hunches.

- Balance Performance and Accuracy: While it’s important to optimize for speed, don’t lose sight of model accuracy. Sacrificing too much accuracy for faster results can lead to frustrating user experiences or incorrect outputs. Take the time to thoroughly test your application and regularly review performance metrics, ensuring that both latency and accuracy are meeting your expectations.

Key Takeaways

- Efficient prompt engineering and token management significantly impact OpenAI API response times.

- Choosing the right model and implementing caching can drastically reduce latency.

- Continuous monitoring and benchmarking are essential for maintaining optimal performance.

- Balancing latency, cost, and accuracy is key to successful API integration.

FAQs

What is considered a good latency for OpenAI API calls?

A good latency depends on your specific use case, but generally:

- For real-time applications: Aim for < 1000ms

- For non-real-time applications: < 3000ms is often acceptable

How does the choice of OpenAI model affect latency?

Smaller models like GPT-3.5-turbo typically offer faster response times compared to larger models like GPT-4. However, larger models may provide more accurate or complex outputs.

Can fine-tuning models help reduce API latency?

Yes, fine-tuning can potentially reduce latency by creating a more specialized model that requires less processing time for your specific use case.

What are the trade-offs between latency and cost when using OpenAI API?

Faster models or more frequent API calls generally incur higher costs. You'll need to balance the need for speed with your budget constraints. Consider using smaller models or implementing caching strategies to optimize both latency and cost.