How to Fix the "PQ: Could Not Resize Shared Memory" Error

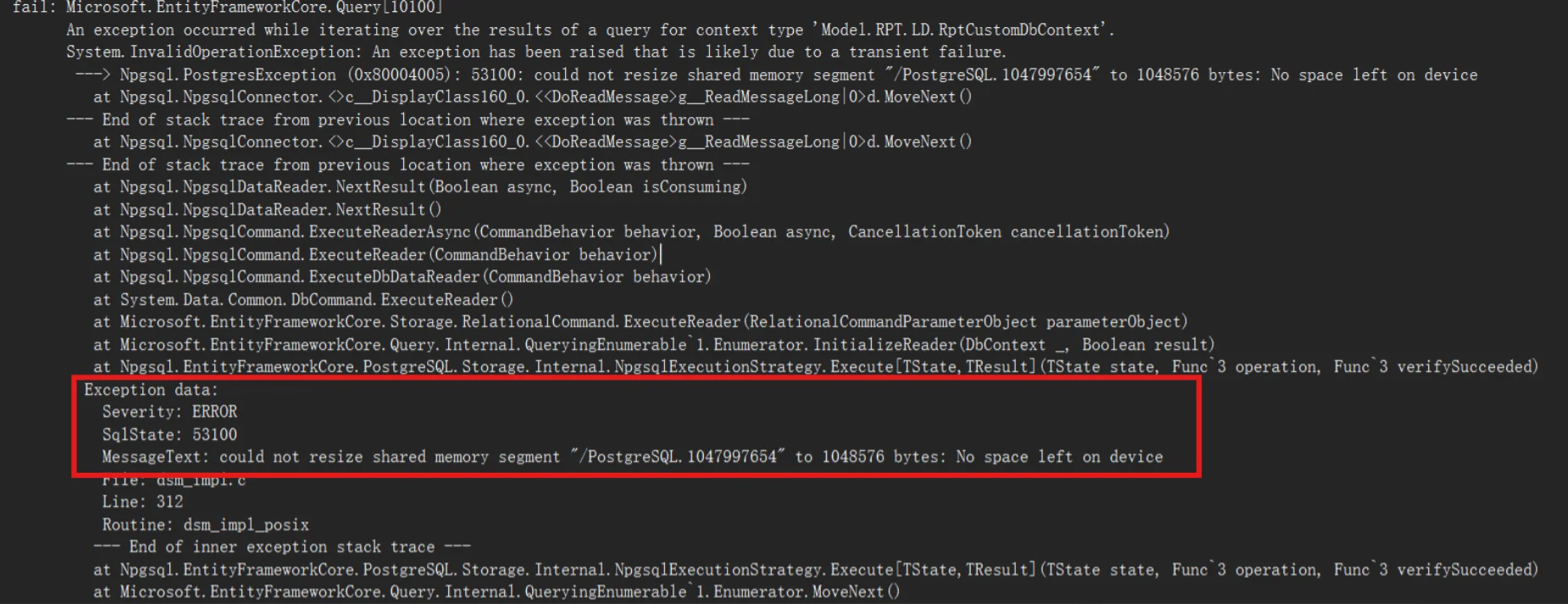

If you’re running PostgreSQL in Docker, you’ve probably come across the dreaded "PQ: Could Not Resize Shared Memory" error. It can stop your database in its tracks and mess with your app’s performance. Understanding why this happens and how to fix it is key to keeping your PostgreSQL setup running smoothly. This guide provides comprehensive strategies to resolve this error, from quick fixes to long-term optimizations.

What Causes the "PQ: Could Not Resize Shared Memory" Error?

PostgreSQL uses shared memory to let its processes access common data. When PostgreSQL can’t resize a shared memory segment, you get hit with an error along the lines of "pq: could not resize shared memory segment ... No space left on device". It can severely impact database operations, preventing new connections and potentially causing inconsistencies. Don’t be fooled by the "No space left on device" part: this usually refers to shared memory, not disk space.

Common Causes of Shared Memory Resizing Issues

Here are some common reasons why you might encounter shared memory resizing issues:

- Docker's Default Shared Memory Limit: Docker containers come with a default shared memory limit of 64MB, which often isn’t enough for PostgreSQL.

- System-wide Shared Memory Restrictions: Your operating system might have strict limits on shared memory allocation.

- Apparent Lack of Disk Space: The error message is misleading here; "No space left on device" usually points to exhausted shared memory (on Linux, typically the /dev/shm mount), not a full disk.

- Misconfigured PostgreSQL Settings: Parameters like shared_buffers might be set too high, leading to excessive memory demands.
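To narrow down which of these causes applies, it can help to check how much shared memory the container actually has. The following is a minimal sketch, assuming a Linux container where shared memory is mounted at /dev/shm; the function name `shm_report` and the 256 MB threshold are illustrative choices, not values taken from PostgreSQL or Docker.

```python
import shutil

MB = 1024 * 1024


def shm_report(path="/dev/shm", min_mb=256):
    """Summarize the filesystem at `path` (assumed to be the shared memory mount).

    `min_mb` is an illustrative threshold, not an official recommendation.
    """
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (in bytes)
    return {
        "path": path,
        "total_mb": usage.total // MB,
        "free_mb": usage.free // MB,
        "meets_threshold": usage.total >= min_mb * MB,
    }

# Example, run inside the container: print(shm_report())
```

In a container started with Docker's default settings, `total_mb` would be expected to come out at about 64.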

Quick Fix: Increasing Docker Shared Memory Size

For Docker users, a quick solution involves increasing the shared memory allocation for the PostgreSQL container. Here's how:

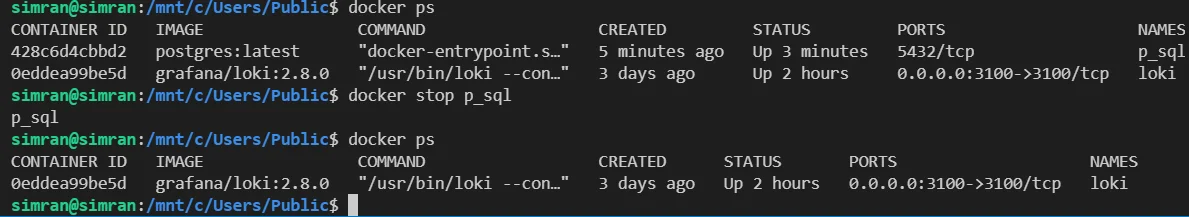

Stop your PostgreSQL container:

docker stop <container_name>

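Stopping PostgreSQL container

Restart the container with increased shared memory. Because docker run creates a new container, remove the stopped one first with docker rm <container_name> (make sure your data lives in a volume) or choose a different name: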

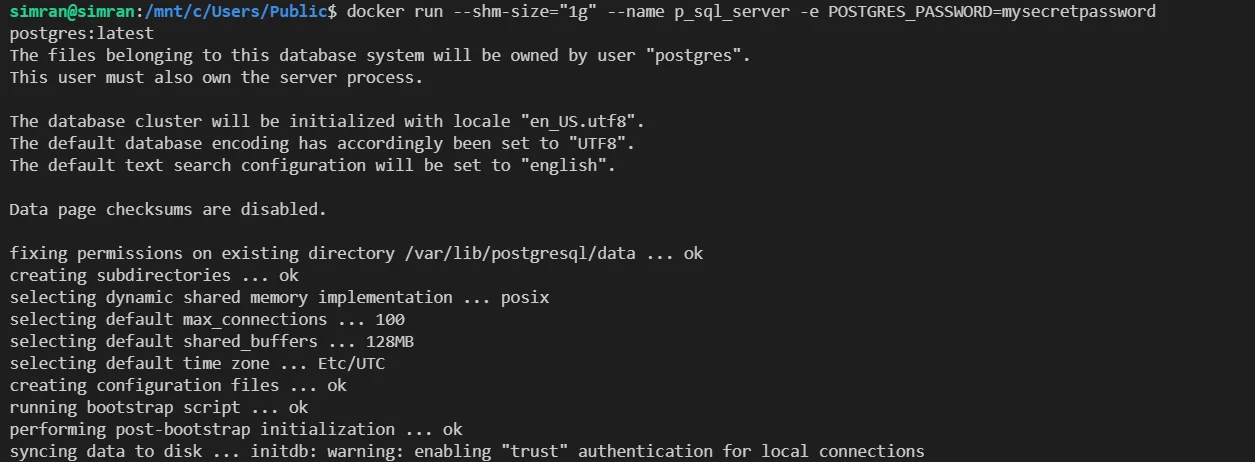

docker run --shm-size="1g" --name <container_name> -e POSTGRES_PASSWORD="<password>" <your_postgres_image>

Restarting PostgreSQL container with increased shared memory

This command allocates 1GB of shared memory to the container. Adjust the value based on your specific needs and system resources.

Considerations:

- Ensure your host system has sufficient memory to accommodate the increase.

- This setting is not remembered across containers; you must pass the --shm-size option each time you create the container with docker run.
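Since the flag has to be supplied whenever the container is created, one option is to build the command in a small script so it cannot be forgotten. This is only a sketch: `build_run_command` and `as_shell` are hypothetical helpers, and the size check is a deliberately conservative assumption (a positive integer with an optional b, k, m, or g unit), not a full description of what Docker accepts.

```python
import re
import shlex

# Conservative size pattern for this sketch, e.g. "512m" or "1g" (an assumption).
_SIZE_RE = re.compile(r"^[1-9]\d*[bkmg]?$", re.IGNORECASE)


def build_run_command(name, image, password, shm_size="1g"):
    """Return the docker run argument list with an explicit shared memory size."""
    if not _SIZE_RE.match(shm_size):
        raise ValueError(f"unexpected shm size: {shm_size!r}")
    return [
        "docker", "run",
        f"--shm-size={shm_size}",
        "--name", name,
        "-e", f"POSTGRES_PASSWORD={password}",
        image,
    ]


def as_shell(cmd):
    """Render the argument list as a single shell-quoted string for review."""
    return shlex.join(cmd)

# To execute it: subprocess.run(build_run_command(...), check=True)
```

Keeping the command as a list rather than a string avoids shell quoting problems if the password contains special characters.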

Long-term Solutions for Shared Memory Management

While the quick fix gets your container back on its feet, you’ll want to address the root causes to prevent future errors. Here’s how to do that.

1. Optimize PostgreSQL Settings

Tweaking PostgreSQL’s memory-related settings can help you avoid running into shared memory limits:

shared_buffers: This parameter defines how much memory PostgreSQL uses for caching data. Aim to set it to about 25% of your system’s total RAM.

shared_buffers = 1GB

work_mem: This controls how much memory is used for operations like sorting. Start with 4MB and adjust based on your system’s load.

work_mem = 4MB

maintenance_work_mem: This setting is for maintenance tasks like VACUUM and CREATE INDEX.

maintenance_work_mem = 64MB

Make sure to test each change carefully to find the sweet spot for your configuration.

Note: To apply these settings, put them in the PostgreSQL configuration file (postgresql.conf) and start the container with that file.
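The arithmetic behind these starting values can be sketched in a few lines. The example below assumes the 25% guideline for shared_buffers and the 4MB and 64MB starting points mentioned above; the function names are hypothetical, and the output is a starting point to test, not a tuned configuration.

```python
def suggest_memory_settings(total_ram_mb, shared_fraction=0.25,
                            work_mem_mb=4, maintenance_work_mem_mb=64):
    """Derive starting values from total RAM using the guide's rules of thumb."""
    shared_buffers_mb = int(total_ram_mb * shared_fraction)
    return {
        "shared_buffers": f"{shared_buffers_mb}MB",
        "work_mem": f"{work_mem_mb}MB",
        "maintenance_work_mem": f"{maintenance_work_mem_mb}MB",
    }


def render_conf(settings):
    """Format the settings as 'name = value' lines for a configuration file."""
    return "\n".join(f"{name} = {value}" for name, value in settings.items())

# Example for a host with 4 GB of RAM:
# print(render_conf(suggest_memory_settings(4096)))
```

For a 4 GB host this yields a shared_buffers value of 1024MB, which matches the 1GB example used in this section.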

2. Manage Resources in Containers

If you’re using Docker or Kubernetes, properly allocating memory to your containers is key.

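In Docker Compose, you can set the shared memory size like this:

services:
  postgres:
    shm_size: '1gb'

In Kubernetes, you’ll want to set resource requests and limits for your pods:

resources:
  requests:
    memory: "1Gi"
  limits:
    memory: "2Gi"

Note that these memory settings do not by themselves enlarge /dev/shm inside the pod; a common approach is to mount an emptyDir volume with medium: Memory at /dev/shm.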

3. Monitor Your Memory Usage

You can avoid running into shared memory errors by keeping an eye on PostgreSQL’s memory usage. Use monitoring tools like SigNoz to track shared memory consumption and get alerts when it’s getting close to the limit.

Troubleshooting Shared Memory Issues in Different Environments

Shared memory problems can manifest differently across various deployment environments:

- Bare-metal installations:

Check system-wide shared memory limits:

sysctl -a | grep kernel.shm

Adjust limits in /etc/sysctl.conf if necessary.

- Virtual machines:

- Ensure the VM is allocated sufficient memory.

- Check hypervisor settings for any memory limitations.

- Kubernetes:

Use resource requests and limits in pod specifications:

resources:
  requests:
    memory: "1Gi"
  limits:
    memory: "2Gi"

Consider using a StorageClass that supports volume expansion for persistent volumes.
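For the bare-metal check, the same kernel.shm values that sysctl prints are exposed on Linux as files under /proc/sys/kernel, so they can also be read from a script. The sketch below assumes a Linux host; `read_shm_limits` is a hypothetical helper name, and it simply skips any value that is not present.

```python
from pathlib import Path


def read_shm_limits(base="/proc/sys/kernel"):
    """Read the System V shared memory limits exposed by the Linux kernel.

    Returns a dict containing whichever of shmmax, shmall, shmmni exist.
    """
    limits = {}
    for name in ("shmmax", "shmall", "shmmni"):
        path = Path(base) / name
        if path.is_file():
            limits[name] = int(path.read_text().strip())
    return limits

# Example, on the database host: print(read_shm_limits())
```

The `base` parameter exists mainly so the function can be pointed at a test directory instead of the live kernel settings.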

Best Practices to Prevent Shared Memory Errors

Shared memory errors in PostgreSQL can severely impact performance and stability. To prevent these errors, it’s essential to follow best practices for managing and configuring shared memory. Here are some effective strategies:

1. Configure Shared Memory Appropriately

Adjust shared_buffers: This setting controls the amount of memory PostgreSQL uses for caching data. Ensure it's set to a value that balances performance and available system memory. For most systems, a common recommendation is to allocate 25% of the total system RAM. A change to shared_buffers takes effect only after a server restart.

ALTER SYSTEM SET shared_buffers = '1GB'; -- Adjust based on your system

2. Monitor Shared Memory Usage

Regular Monitoring: Use views like pg_stat_activity and pg_stat_database to monitor the activity that drives memory usage, such as the number of sessions and how often reads are served from cache, and check for potential issues.

SELECT * FROM pg_stat_activity;
SELECT * FROM pg_stat_database;

Check for Errors: Review PostgreSQL logs for any warnings or errors related to shared memory. Configure your logging to capture detailed information.

SET log_min_messages = 'WARNING'; -- Adjust the logging level as needed
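One figure that can be derived from pg_stat_database is the cache hit ratio, computed from its blks_hit and blks_read columns. The sketch below shows only the arithmetic; fetching the two values from the database is left out, and reading a low ratio as a sign that shared_buffers is too small is a rule of thumb rather than a guarantee.

```python
def cache_hit_ratio(blks_hit, blks_read):
    """Fraction of block requests served from shared buffers.

    Returns None when there has been no activity, to avoid dividing by zero.
    """
    total = blks_hit + blks_read
    if total == 0:
        return None
    return blks_hit / total

# Example with counters read from pg_stat_database:
# ratio = cache_hit_ratio(blks_hit=9_500_000, blks_read=500_000)
```

Because the counters are cumulative, comparing two samples taken some time apart gives a better picture of current behavior than a single reading.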

3. Optimize PostgreSQL Configuration

Tune work_mem: This setting controls the amount of memory allocated for operations like sorting and hashing. Set it appropriately to avoid excessive memory consumption during complex queries.

ALTER SYSTEM SET work_mem = '4MB'; -- Adjust based on query needs

Adjust maintenance_work_mem: This setting determines the amount of memory used for maintenance tasks like vacuuming and indexing. Ensure it’s sufficient to avoid long-running maintenance operations.

ALTER SYSTEM SET maintenance_work_mem = '64MB'; -- Adjust based on workload

4. Proper Resource Allocation

- Avoid Overcommitment: Ensure that PostgreSQL does not overcommit system memory. Properly size your database and its resources to avoid hitting limits.

- Regularly Reevaluate Settings: As your database grows and your workload changes, regularly review and adjust memory settings to align with current needs.
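A rough way to check for overcommitment is to add up the main memory settings and compare the total with what the host or container can provide. The sketch below is a simplified estimate, not PostgreSQL's actual accounting: it assumes every connection uses work_mem a fixed number of times (a single query can use it more than once), and both function names and the 80% headroom are illustrative assumptions.

```python
def estimate_peak_memory_mb(shared_buffers_mb, max_connections, work_mem_mb,
                            maintenance_work_mem_mb, work_mem_uses_per_conn=1):
    """Rough upper estimate of memory demand from the main settings, in MB."""
    per_connection_mb = work_mem_mb * work_mem_uses_per_conn
    return (shared_buffers_mb
            + max_connections * per_connection_mb
            + maintenance_work_mem_mb)


def fits(estimate_mb, available_mb, headroom=0.8):
    """True if the estimate stays within a fraction of the available memory."""
    return estimate_mb <= available_mb * headroom

# Example: 1 GB shared_buffers, 100 connections, 4 MB work_mem, 64 MB maintenance
# estimate_peak_memory_mb(1024, 100, 4, 64)  -> 1488
```

If the estimate does not fit, lowering the connection count (for example through connection pooling) often reduces it more than trimming work_mem.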

5. Perform Routine Maintenance

Regular Vacuuming: Perform regular vacuum operations to prevent bloat and manage memory efficiently.

VACUUM ANALYZE;

Index Management: Regularly review and optimize indexes to improve query performance and memory usage.

6. Plan for Scaling

- Scale Resources: If you consistently hit memory limits, consider scaling up your hardware or adjusting PostgreSQL’s configuration to better handle the load.

- Use Connection Pooling: Implement connection pooling to manage the number of active connections and reduce memory overhead.

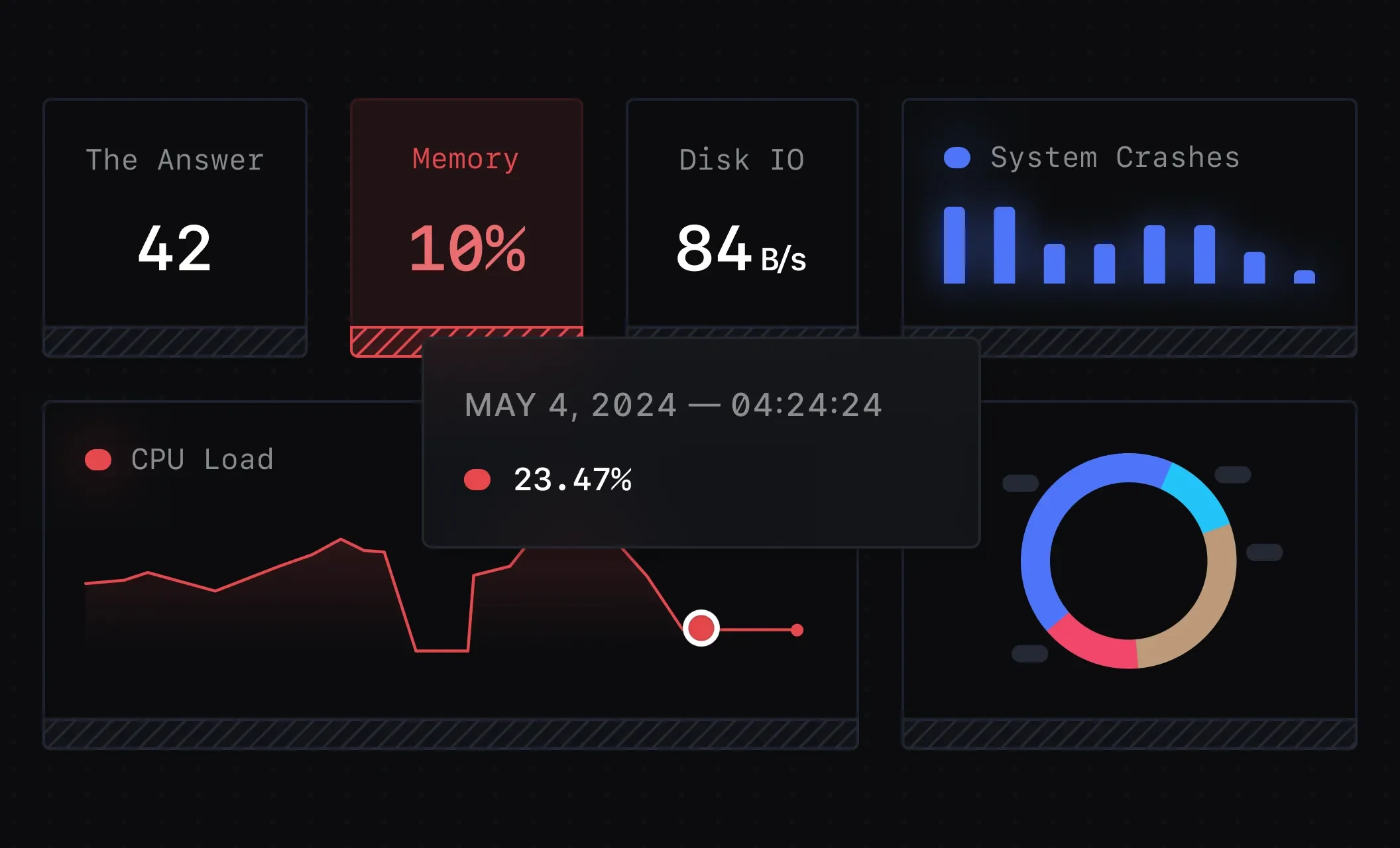

Monitoring PostgreSQL Performance with SigNoz

While optimizing memory settings and following best practices help in preventing issues, it’s equally important to continuously monitor PostgreSQL performance to identify and address potential problems before they impact your database. Monitoring tools provide real-time insights into your database’s health, allowing you to detect anomalies, track resource usage, and make informed decisions.

SigNoz is a powerful tool for monitoring PostgreSQL performance. It offers comprehensive observability features, allowing you to track various metrics and logs, including those related to memory usage, query performance, and overall database health.

How SigNoz Enhances PostgreSQL Monitoring

SigNoz integrates with PostgreSQL to provide:

Real-Time Metrics: Visualize memory usage, query performance, and other critical metrics in real-time dashboards.

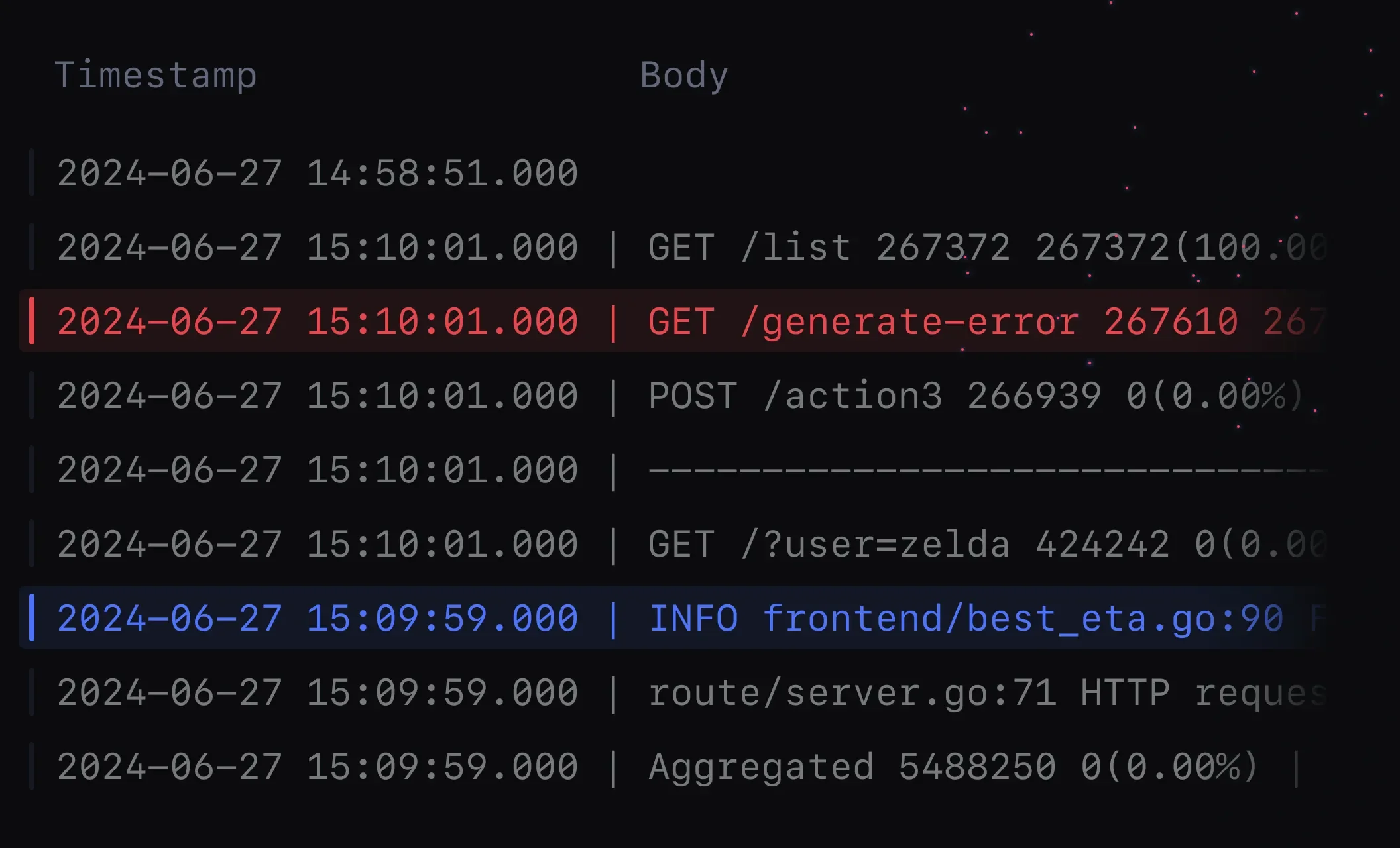

Real-time Monitoring Dashboards in SigNoz

Detailed Logs: Access detailed logs to analyze and troubleshoot issues effectively.

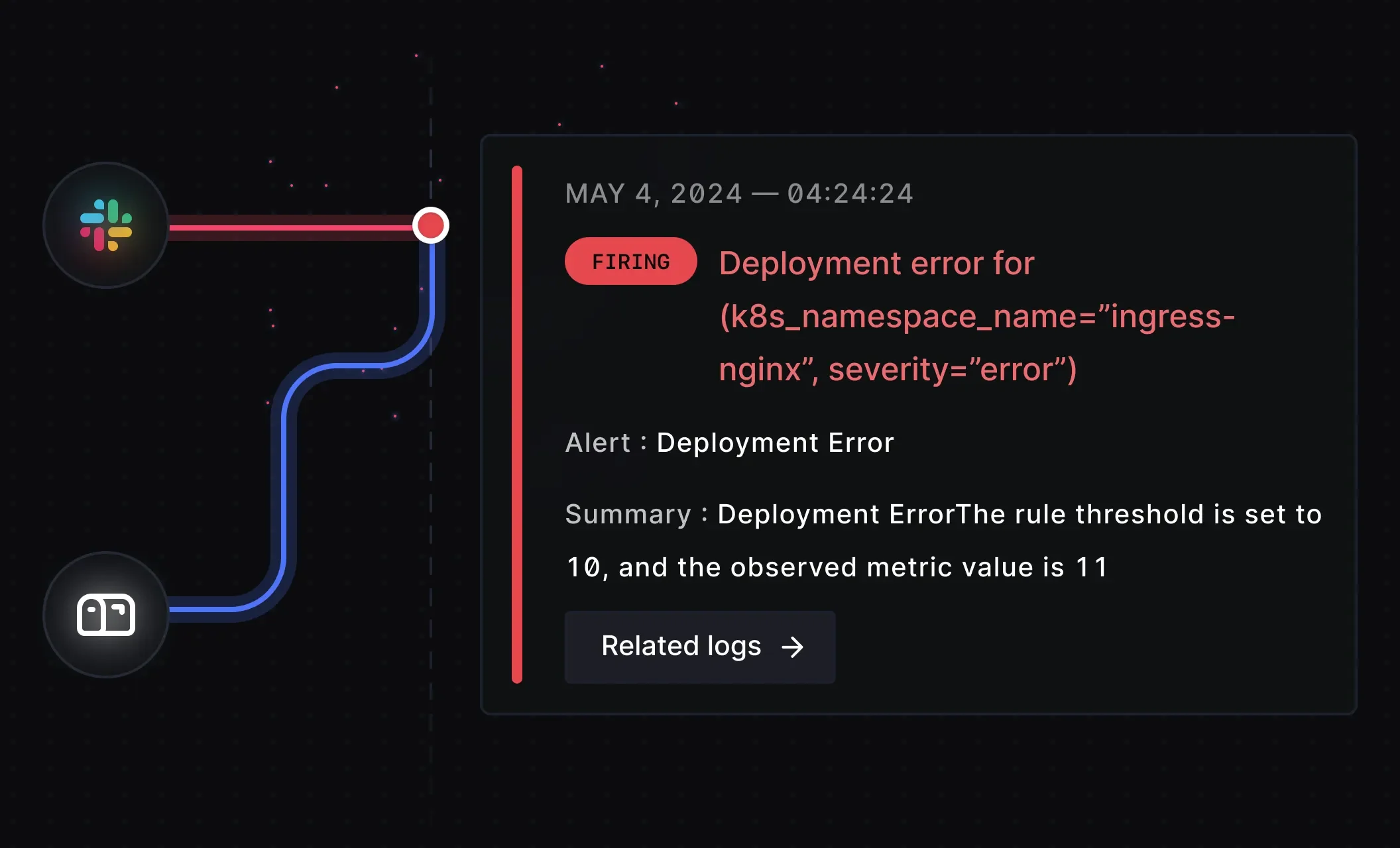

Analyzing logs in SigNoz

Custom Alerts: Set up alerts for specific conditions, such as high memory usage or slow queries, to stay informed about potential issues.

Setting up Custom Alerts and Notifications in SigNoz

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

Key Takeaways

- Shared memory is crucial for PostgreSQL performance; understanding its management is essential.

- Docker containers often require manual adjustment of shared memory allocation.

- Proper configuration of both PostgreSQL and system parameters can prevent most shared memory errors.

- Regular monitoring and proactive management are key to maintaining database stability and performance.

FAQs

What is shared memory in PostgreSQL and why is it important?

Shared memory in PostgreSQL is a segment of RAM used by the database to cache frequently accessed data and facilitate inter-process communication. It's crucial for performance as it significantly reduces disk I/O and speeds up query execution.

How can I check the current shared memory usage in my PostgreSQL instance?

You can check shared memory usage using the PostgreSQL system view pg_shmem_allocations:

SELECT * FROM pg_shmem_allocations;

This query shows all shared memory allocations and their sizes.

The pg_shmem_allocations view was introduced in PostgreSQL 13, so in PostgreSQL 12 and earlier there is no direct way to fetch these details. Instead, you can query the pg_stat_bgwriter view:

SELECT buffers_checkpoint, buffers_clean, buffers_backend, buffers_alloc
FROM pg_stat_bgwriter;

Shared buffers are a key part of shared memory in PostgreSQL. The pg_stat_bgwriter view does not report usage directly, but its counters show how many buffers are being allocated and written out, which indicates how much pressure the shared buffers are under.

Are there any risks associated with increasing shared memory size?

While increasing shared memory can improve performance, it also comes with risks:

- Overconsumption of system memory, potentially impacting other processes

- Increased startup time for PostgreSQL as it allocates larger memory segments

- Potential system instability if shared memory is set too high relative to available RAM

Always test changes thoroughly and increase shared memory gradually.

Can shared memory issues affect PostgreSQL performance even without errors?

Yes, insufficient shared memory can impact performance without triggering explicit errors. Symptoms include:

- Slower query execution times

- Increased disk I/O

- Higher CPU usage

Regular monitoring of PostgreSQL performance metrics can help identify these issues before they become critical.