Prometheus Pushgateway - How to Monitor Short-Lived Batch Jobs

Prometheus uses a pull-based monitoring model: it actively scrapes the metrics endpoints that services expose, at regular intervals (e.g., every 60 seconds). This model breaks down for short-lived, service-level batch jobs, which may finish and exit before Prometheus ever gets a chance to scrape them.

This is where the Prometheus Pushgateway comes in. It acts as a metrics cache (temporary storage): short-lived jobs push their metrics to it before they complete, and it makes those metrics available for Prometheus to scrape later.

The following guide explains what the Prometheus Pushgateway is and how it works. It also includes a hands-on demo that shows how to set up the Prometheus Pushgateway to receive metrics from short-lived production jobs, such as database backup and ETL jobs, and how to visualise the collected metrics using OpenTelemetry and SigNoz.

What is Prometheus Pushgateway?

In simple terms, the Prometheus Pushgateway is a lightweight HTTP server/service that allows short-lived jobs to push their metrics before terminating. It stores those metrics and exposes them for Prometheus to scrape later.

The official Prometheus documentation carries an explicit warning: "We only recommend using the Pushgateway in certain limited cases."

According to the same documentation, the only valid use case is usually capturing the outcome of a service-level batch job.

How does the Prometheus Pushgateway work?

Unlike the standard Prometheus model, where the server "pulls" data, the Pushgateway sits in the middle. It accepts "pushed" metrics from your short-lived processes and acts as a metrics cache, holding that data for Prometheus to scrape.

- The Batch Job Runs: Your backup operation or ephemeral data processing task starts, runs its logic, and calculates metrics (e.g., backup_duration_seconds, records_processed).

- The Push: Before the job exits, it sends an HTTP request (PUT or POST) containing these metric values to the Pushgateway.

- The Holding Phase: The job terminates and disappears. However, the metrics are now stored in the Pushgateway's memory.

- The Scrape: Prometheus wakes up on its defined schedule (e.g., every 15 seconds), connects to the Pushgateway, and scrapes the stored metrics as if they were coming from a running service.
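The push step is plain HTTP. The following is a minimal sketch, using only the Python standard library, of what a push looks like on the wire. It assumes the Pushgateway's documented group URL layout (/metrics/job/<job>/<label>/<value>/...) and a body in the Prometheus text exposition format. The helper names build_push_url, render_gauges, and push are hypothetical. In practice, a client library such as prometheus_client (used later in this guide) handles this for you.

```python
import urllib.parse
import urllib.request


def build_push_url(base_url, job, grouping_key=None):
    """Build a Pushgateway group URL: /metrics/job/<job>/<label>/<value>/..."""
    # Each path segment is percent-encoded. Values containing "/" would need
    # the Pushgateway's base64 form instead, which this sketch does not handle.
    parts = ["metrics", "job", urllib.parse.quote(job, safe="")]
    for name, value in (grouping_key or {}).items():
        parts += [urllib.parse.quote(name, safe=""), urllib.parse.quote(value, safe="")]
    return base_url.rstrip("/") + "/" + "/".join(parts)


def render_gauges(samples):
    """Render {metric_name: value} pairs in the Prometheus text exposition format."""
    lines = []
    for name, value in samples.items():
        lines.append(f"# TYPE {name} gauge")
        lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"  # the body must end with a newline


def push(base_url, job, samples, grouping_key=None):
    """PUT replaces every metric in the group; POST would replace only same-named ones."""
    request = urllib.request.Request(
        build_push_url(base_url, job, grouping_key),
        data=render_gauges(samples).encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "text/plain; version=0.0.4"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

For example, push("http://localhost:9091", "database_backup", {"backup_job_status": 1}, {"instance": "db-primary"}) would PUT a single gauge into the group at /metrics/job/database_backup/instance/db-primary.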

Demo: Setting Up Prometheus Pushgateway for Receiving Metrics from Batch Jobs

In this demo, you will learn how to set up the Prometheus Pushgateway to receive metrics from batch jobs, collect them using the OpenTelemetry Collector (via its Prometheus receiver), and set up a dashboard and alerting using SigNoz.

Prerequisites

- Docker: Required to pull the images and run the containers that simulate batch jobs. You can install it from the Official Docker Documentation.

- SigNoz Cloud Account: You will need it to generate an ingestion API key and to create a dashboard for visualization.

Step 1: Create the folder

Create a folder named prometheus-pushgateway-demo. This will be the main project folder.

```bash
mkdir prometheus-pushgateway-demo && cd prometheus-pushgateway-demo
```

Step 2: Create a Docker Compose file

Create a docker-compose.yml file.

```bash
touch docker-compose.yml
```

Step 3: Add Prometheus Pushgateway Service

Copy the following code into docker-compose.yml.

```yaml
services:
  # Prometheus Pushgateway
  pushgateway:
    image: prom/pushgateway:v1.11.2
    container_name: pushgateway
    restart: unless-stopped
    ports:
      - "9091:9091"
    command:
      - --persistence.file=/data/metrics
      - --persistence.interval=5m
    volumes:
      - pushgateway-data:/data
    healthcheck:
      test: ["CMD", "wget", "-q", "--spider", "http://localhost:9091/-/healthy"]
      interval: 30s
      timeout: 10s
      retries: 3

volumes:
  pushgateway-data:
```

Code Breakdown:

image: Uses the official Prometheus Pushgateway Docker image pinned to version v1.11.2 to ensure consistent and predictable behaviour.

--persistence.file: Persists pushed metrics to /data/metrics so they survive container restarts instead of staying only in memory.

--persistence.interval: Flushes in-memory metrics to disk every 5 minutes to reduce data loss on crashes.

volumes: Mounts the pushgateway-data Docker volume at /data, backing the persistence file with durable storage.

Step 4: Start the service and verify that it’s running

```bash
docker compose up -d
```

Check the logs to confirm that the service is listening on port 9091.

```bash
docker logs pushgateway
```

You should see output similar to the following:

```text
ts=2026-01-14T04:19:07.714Z level=info caller=main.go:82 msg="starting pushgateway" version="(version=1.11.2, branch=HEAD, revision=ace6bf252df95246501059f17ace076f1081144e)"
ts=2026-01-14T04:19:07.714Z level=info caller=main.go:83 msg="Build context" build_context="(go=go1.25.3, platform=linux/arm64, user=root@0ade354853d8, date=20251030-11:51:02, tags=unknown)"
ts=2026-01-14T04:19:07.717Z level=info caller=tls_config.go:354 msg="Listening on" address=[::]:9091
ts=2026-01-14T04:19:07.717Z level=info caller=tls_config.go:357 msg="TLS is disabled." http2=false address=[::]:9091
```
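If you would rather script this check than read logs, you can poll the /-/healthy endpoint, which is the same endpoint the Compose healthcheck uses. This is a minimal sketch. wait_until_healthy and probe_http are hypothetical helpers, and the probe is passed in as a parameter so the retry logic can be exercised without a running Pushgateway.

```python
import time
import urllib.error
import urllib.request


def probe_http(url, timeout=2.0):
    """Return True if GET url answers with HTTP 200, False on any connection or HTTP error."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        return False


def wait_until_healthy(url="http://localhost:9091/-/healthy", attempts=10, delay=1.0, probe=probe_http):
    """Poll the health endpoint; return the attempt number that succeeded, or 0 if none did."""
    for attempt in range(1, attempts + 1):
        if probe(url):
            return attempt
        if attempt < attempts:
            time.sleep(delay)  # back off before the next try
    return 0
```

Calling wait_until_healthy() right after docker compose up -d returns a positive number once the Pushgateway answers, and 0 if it never does within the allotted attempts.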

Step 5: Create the Batch Job

Create a sub-folder for the sample jobs inside prometheus-pushgateway-demo.

```bash
mkdir -p sample-jobs/python && cd sample-jobs/python
```

Add a Python script named database_backup.py. It simulates a database backup batch job of the kind you would run in production.

```bash
touch database_backup.py
```

Copy the following code into it.

```python
#!/usr/bin/env python3
"""
Database Backup Job - Simulated Batch Process
──────────────────────────────────────────────
Key functionality:
• Simulates periodic database backup operations
• Randomly succeeds (95%) or fails
• Measures duration, size, table count
• Logs results in human-readable format
"""

import os
import time
import random
import logging
from datetime import datetime

# Configuration
RUN_INTERVAL = int(os.getenv('RUN_INTERVAL', '120'))  # seconds between runs

# Logging setup
logging.basicConfig(
    level=logging.INFO,
    format='%(asctime)s - %(levelname)s - %(message)s'
)
logger = logging.getLogger(__name__)


def simulate_database_backup():
    """
    Simulates a database backup operation.
    Returns metrics about the backup.
    """
    logger.info("Starting database backup simulation...")

    # Simulate backup duration (30-180 seconds, scaled down for demo)
    duration = random.uniform(2.0, 10.0)
    time.sleep(duration)

    # Simulate success/failure (95% success rate)
    success = random.random() > 0.05

    # Simulate backup size (1GB - 50GB in bytes)
    backup_size = random.randint(1_000_000_000, 50_000_000_000) if success else 0

    # Simulate number of tables
    tables_count = random.randint(50, 200) if success else 0

    return {
        'duration': duration,
        'success': success,
        'size_bytes': backup_size,
        'tables_count': tables_count,
        'database': random.choice(['orders_db', 'users_db', 'inventory_db']),
    }


def main():
    """Main loop - runs backup simulation continuously."""
    logger.info(f"Database Backup Job started")

    while True:
        try:
            # Run backup simulation
            result = simulate_database_backup()

            # Log result
            status = "SUCCESS" if result['success'] else "FAILED"
            logger.info(
                f"Backup {status}: database={result['database']}, "
                f"duration={result['duration']:.2f}s, "
                f"size={result['size_bytes'] / 1_000_000_000:.2f}GB, "
                f"tables={result['tables_count']}"
            )
        except Exception as e:
            logger.error(f"Error in backup job: {e}")

        # Wait for next run
        logger.info(f"Waiting {RUN_INTERVAL}s until next run...")
        time.sleep(RUN_INTERVAL)


if __name__ == '__main__':
    main()
```

Step 6: Configure the Batch Job to send Metrics

To configure the batch job to send the metrics, we will need to update our script with the following changes.

Import the prometheus_client components used to build and push metrics (next to the existing imports):

```python
from prometheus_client import CollectorRegistry, Gauge, Counter, pushadd_to_gateway
```

Add the configuration for pushing metrics to the Pushgateway:

```python
# Configuration
PUSHGATEWAY_URL = os.getenv('PUSHGATEWAY_URL', 'http://localhost:9091')
JOB_NAME = 'database_backup'
INSTANCE_NAME = os.getenv('INSTANCE_NAME', 'db-primary')
```

Add a push_metrics() function. It creates a fresh set of Prometheus Gauge metrics for a single backup run (duration, size, status, last success time, table count), labels them with the database name and instance, and pushes them to the Prometheus Pushgateway for scraping and monitoring.

```python
def push_metrics(backup_result):
    """
    Push backup metrics to Pushgateway.
    """
    registry = CollectorRegistry()

    # Gauge: Duration of backup
    duration_gauge = Gauge(
        'backup_job_duration_seconds',
        'Duration of the database backup operation in seconds',
        ['database', 'instance'],
        registry=registry
    )
    duration_gauge.labels(
        database=backup_result['database'],
        instance=INSTANCE_NAME
    ).set(backup_result['duration'])

    # Gauge: Backup size
    size_gauge = Gauge(
        'backup_job_size_bytes',
        'Size of the backup file in bytes',
        ['database', 'instance'],
        registry=registry
    )
    size_gauge.labels(
        database=backup_result['database'],
        instance=INSTANCE_NAME
    ).set(backup_result['size_bytes'])

    # Gauge: Last success timestamp (important for staleness detection)
    if backup_result['success']:
        last_success_gauge = Gauge(
            'backup_job_last_success_timestamp',
            'Unix timestamp of the last successful backup',
            ['database', 'instance'],
            registry=registry
        )
        last_success_gauge.labels(
            database=backup_result['database'],
            instance=INSTANCE_NAME
        ).set(time.time())

    # Gauge: Status (1=success, 0=failure)
    status_gauge = Gauge(
        'backup_job_status',
        'Status of the last backup (1=success, 0=failure)',
        ['database', 'instance'],
        registry=registry
    )
    status_gauge.labels(
        database=backup_result['database'],
        instance=INSTANCE_NAME
    ).set(1 if backup_result['success'] else 0)

    # Gauge: Tables backed up
    tables_gauge = Gauge(
        'backup_job_tables_backed_up',
        'Number of tables backed up',
        ['database', 'instance'],
        registry=registry
    )
    tables_gauge.labels(
        database=backup_result['database'],
        instance=INSTANCE_NAME
    ).set(backup_result['tables_count'])

    # Push to gateway.
    # pushadd_to_gateway (HTTP POST) replaces only the metrics named in this push,
    # so backup_job_last_success_timestamp from an earlier successful run survives
    # a failed run. push_to_gateway (HTTP PUT) would replace the whole group and drop it.
    try:
        pushadd_to_gateway(
            PUSHGATEWAY_URL,
            job=JOB_NAME,
            registry=registry,
            grouping_key={'instance': INSTANCE_NAME}
        )
        logger.info(f"Metrics pushed to {PUSHGATEWAY_URL}")
    except Exception as e:
        logger.error(f"Failed to push metrics: {e}")
        raise
```

Replace your main() with the code below. It adds a call to push_metrics(result), which passes along the result returned by simulate_database_backup().

```python
def main():
    """Main loop - runs backup simulation continuously."""
    logger.info(f"Database Backup Job started")
    logger.info(f"Pushgateway URL: {PUSHGATEWAY_URL}")
    logger.info(f"Job Name: {JOB_NAME}")
    logger.info(f"Instance: {INSTANCE_NAME}")
    logger.info(f"Run Interval: {RUN_INTERVAL}s")

    while True:
        try:
            # Run backup simulation
            result = simulate_database_backup()

            # Log result
            status = "SUCCESS" if result['success'] else "FAILED"
            logger.info(
                f"Backup {status}: database={result['database']}, "
                f"duration={result['duration']:.2f}s, "
                f"size={result['size_bytes'] / 1_000_000_000:.2f}GB, "
                f"tables={result['tables_count']}"
            )

            # Push metrics
            push_metrics(result)
        except Exception as e:
            logger.error(f"Error in backup job: {e}")

        # Wait for next run
        logger.info(f"Waiting {RUN_INTERVAL}s until next run...")
        time.sleep(RUN_INTERVAL)
```
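The loop above keeps one container alive and pushes every RUN_INTERVAL seconds. That is convenient for a demo, but it is not the short-lived shape the Pushgateway is meant for. The following is a minimal sketch of a run-once variant. It assumes an external scheduler (for example, cron or a Kubernetes CronJob) starts the process. The function runs one backup, pushes the result, and returns an exit code. The name run_once, the exit-code convention, and the fallback "unknown" database label are hypothetical choices. backup_fn and push_fn stand in for simulate_database_backup() and push_metrics(), so the flow can be exercised without a Pushgateway.

```python
import logging

logger = logging.getLogger("database_backup")


def run_once(backup_fn, push_fn):
    """Run one backup, push its metrics, and return a process exit code.

    0 = backup succeeded and metrics were pushed
    1 = backup failed (the failure was still pushed)
    2 = metrics could not be pushed
    """
    try:
        result = backup_fn()
    except Exception as exc:  # the backup itself crashed
        logger.error("Backup raised: %s", exc)
        # Still report a failed run so the outcome is visible in the Pushgateway.
        result = {"success": False, "duration": 0.0, "size_bytes": 0,
                  "tables_count": 0, "database": "unknown"}
    try:
        push_fn(result)
    except Exception as exc:
        logger.error("Push failed: %s", exc)
        return 2
    return 0 if result["success"] else 1
```

In the demo script, the entry point would then become sys.exit(run_once(simulate_database_backup, push_metrics)), with the scheduler, not a sleep loop, deciding when the next run happens.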

Step 7: Create a Dockerfile & requirements file

Create a Dockerfile and a requirements.txt file under prometheus-pushgateway-demo/sample-jobs.

Copy the following code into the Dockerfile.

```dockerfile
FROM python:3.11-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
CMD ["python", "python/database_backup.py"]
```

The Dockerfile builds a lightweight Python 3.11 container, installs dependencies, copies the application code, and runs database_backup.py when the container starts.

Add the following to requirements.txt.

```text
prometheus-client>=0.20.0
requests>=2.31.0
```

Step 8: Add the backup-job service to Docker Compose and start it

Add a backup-job service under services: in docker-compose.yml. This way, the backup job runs as a managed, repeatable container that starts automatically, shares a network with the Pushgateway, and pushes metrics without manual execution.

```yaml
  # Sample Job: Database Backup (runs every 2 minutes for demo)
  backup-job:
    build:
      context: ./sample-jobs
      dockerfile: Dockerfile
    container_name: backup-job
    restart: unless-stopped
    environment:
      - PUSHGATEWAY_URL=http://pushgateway:9091
      - JOB_TYPE=database_backup
      - RUN_INTERVAL=120
    command: ["python", "python/database_backup.py"]
    depends_on:
      pushgateway:
        condition: service_healthy
```

Bring up the service.

```bash
docker compose up backup-job -d
```

To verify that metrics are being sent, open http://localhost:9091 in your browser. The pushed metrics should be listed there.

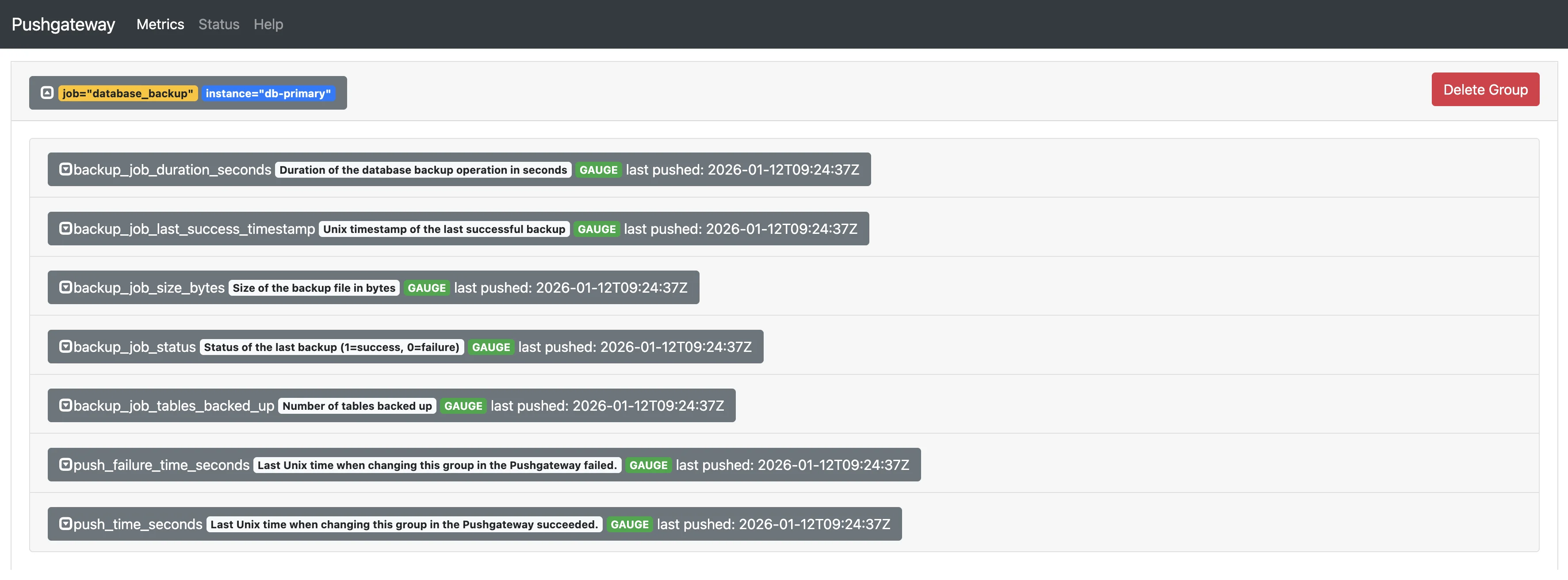

Database backup job metrics sent to Prometheus Pushgateway
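Besides the web UI, you can check the same data from a script. The Pushgateway's /metrics endpoint serves everything it holds in the Prometheus text format. The following is a minimal standard-library sketch, assuming the demo's backup_job_ metric prefix. parse_samples and fetch_metrics are hypothetical helpers. The parser skips comment lines and assumes each sample line ends with its value and carries no explicit timestamp. It is not a full exposition-format parser.

```python
import urllib.request


def parse_samples(text, prefix="backup_job_"):
    """Return (series, value) tuples for sample lines whose metric name starts with prefix."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        series, _, value = line.rpartition(" ")  # the value is the last token
        if series.startswith(prefix):
            samples.append((series, float(value)))
    return samples


def fetch_metrics(url="http://localhost:9091/metrics"):
    """Download the raw text exposition from the Pushgateway."""
    with urllib.request.urlopen(url, timeout=5) as response:
        return response.read().decode("utf-8")
```

For example, parse_samples(fetch_metrics()) lists the current backup_job_* series, and parse_samples(fetch_metrics(), prefix="push_time_seconds") lists the per-group push_time_seconds series the Pushgateway adds.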

Step 9: Configure OpenTelemetry Collector to send metrics to SigNoz

As verified in the previous step, metrics are reaching the Pushgateway. Next, you will configure the OpenTelemetry (OTel) Collector to scrape these metrics from the Pushgateway and send them to SigNoz for visualization and alerting. You can read our OpenTelemetry Collector guide to learn more.

Create a sub-folder named otel-collector under prometheus-pushgateway-demo.

```bash
mkdir otel-collector && cd otel-collector
```

Create a config.yaml file. It holds the configuration for scraping the Pushgateway, plus the endpoint and API key used to send metrics to SigNoz.

```bash
touch config.yaml
```

Copy the following code into it.

```yaml
# This configuration scrapes metrics from Prometheus Pushgateway
# and exports them to SigNoz Cloud.
receivers:
  # Prometheus receiver to scrape Pushgateway
  prometheus:
    config:
      scrape_configs:
        - job_name: 'pushgateway'
          scrape_interval: 15s
          # IMPORTANT: honor_labels must be true to preserve
          # the original job and instance labels from pushed metrics
          honor_labels: true
          static_configs:
            # Use 'pushgateway:9091' when running in docker-compose
            # Use 'host.docker.internal:9091' if pushgateway runs on host
            - targets: ['pushgateway:9091']

  # OTLP receiver for applications sending OTLP directly
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318

processors:
  resourcedetection:
    detectors: ["system"]
  batch:

exporters:
  otlp:
    endpoint: "https://ingest.<Region>.signoz.cloud:443"
    tls:
      insecure: false
    headers:
      "signoz-ingestion-key": "Ingestion API Key"
  debug:
    verbosity: normal

extensions:
  health_check:
  pprof:
  zpages:

service:
  extensions: [health_check, pprof, zpages]
  pipelines:
    metrics:
      receivers: [prometheus, otlp]
      processors: [resourcedetection, batch]
      exporters: [otlp, debug]
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
    logs:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
```

Code Breakdown:

receivers.prometheus: Configures the OpenTelemetry Collector to scrape Prometheus-formatted metrics from the Pushgateway.

honor_labels: true: Preserves the original job and instance labels set by the application when the metrics were pushed.

targets: ['pushgateway:9091']: Points the scraper to the Pushgateway service inside the Docker network.

exporters.otlp: Sends collected telemetry to SigNoz Cloud using the OTLP protocol.

endpoint: Defines the SigNoz Cloud ingestion endpoint. Replace <Region> with your SigNoz Cloud region.

signoz-ingestion-key: Authenticates the collector with SigNoz Cloud. Remember to replace the placeholder with your own key. You can follow Generate Ingestion API Key to create one.

pipelines.metrics: Defines the metrics flow from the Prometheus and OTLP receivers through the processors to SigNoz.

pipelines.traces: Defines how traces received via OTLP are processed and exported.

pipelines.logs: Defines how logs received via OTLP are processed and exported.
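Why honor_labels matters: the scrape attaches its own target labels (here, job="pushgateway" and instance="pushgateway:9091"), which clash with the job and instance labels already on the pushed series. Per the Prometheus scrape_config rules, honor_labels: true keeps the scraped values. With false, the target values win and the scraped ones are renamed with an exported_ prefix (exported_job, exported_instance). The sketch below models only that conflict rule, in simplified form. resolve_labels is a hypothetical helper, and it ignores corner cases such as an exported_ label that already exists.

```python
def resolve_labels(scraped, target, honor_labels):
    """Merge target labels into a scraped series' labels following the honor_labels rule."""
    result = dict(scraped)
    for name, value in target.items():
        if name not in scraped:
            result[name] = value  # no conflict: the target label is simply attached
        elif not honor_labels:
            # Conflict with honor_labels=false: the target value wins and the
            # scraped value is preserved under exported_<name>.
            result["exported_" + name] = scraped[name]
            result[name] = value
        # Conflict with honor_labels=true: keep the scraped value (nothing to do).
    return result
```

With honor_labels: false, every backup metric would show job="pushgateway", and the real job name would be pushed aside into exported_job. That is why the config above sets honor_labels to true.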

Step 10: Add the otel-collector service to Docker Compose

Add an otel-collector service to docker-compose.yml. This way, the OpenTelemetry Collector runs as a managed container that scrapes Pushgateway metrics, receives OTLP data, and exports everything to SigNoz Cloud.

```yaml
  # OpenTelemetry Collector for SigNoz Cloud
  otel-collector:
    image: otel/opentelemetry-collector-contrib:0.143.0
    container_name: otel-collector
    restart: unless-stopped
    command: ["--config=/etc/otel-collector-config.yaml"]
    volumes:
      - ./otel-collector/config.yaml:/etc/otel-collector-config.yaml:ro
    depends_on:
      pushgateway:
        condition: service_healthy
```

YAML Breakdown:

image: Uses the official OpenTelemetry Collector Contrib image pinned to version 0.143.0 for stability and feature consistency.

container_name: Assigns a fixed name (otel-collector) for easier discovery, log access, and debugging.

restart: Keeps the collector running unless it is explicitly stopped.

command: Starts the collector with a custom configuration file path.

volumes: Mounts your local otel-collector/config.yaml into the container as read-only, so the collector runs with exactly the configuration you edited.

depends_on: Delays collector startup until the Pushgateway is healthy, avoiding failed scrapes at boot.

Bring up the service.

```bash
docker compose up otel-collector -d
```

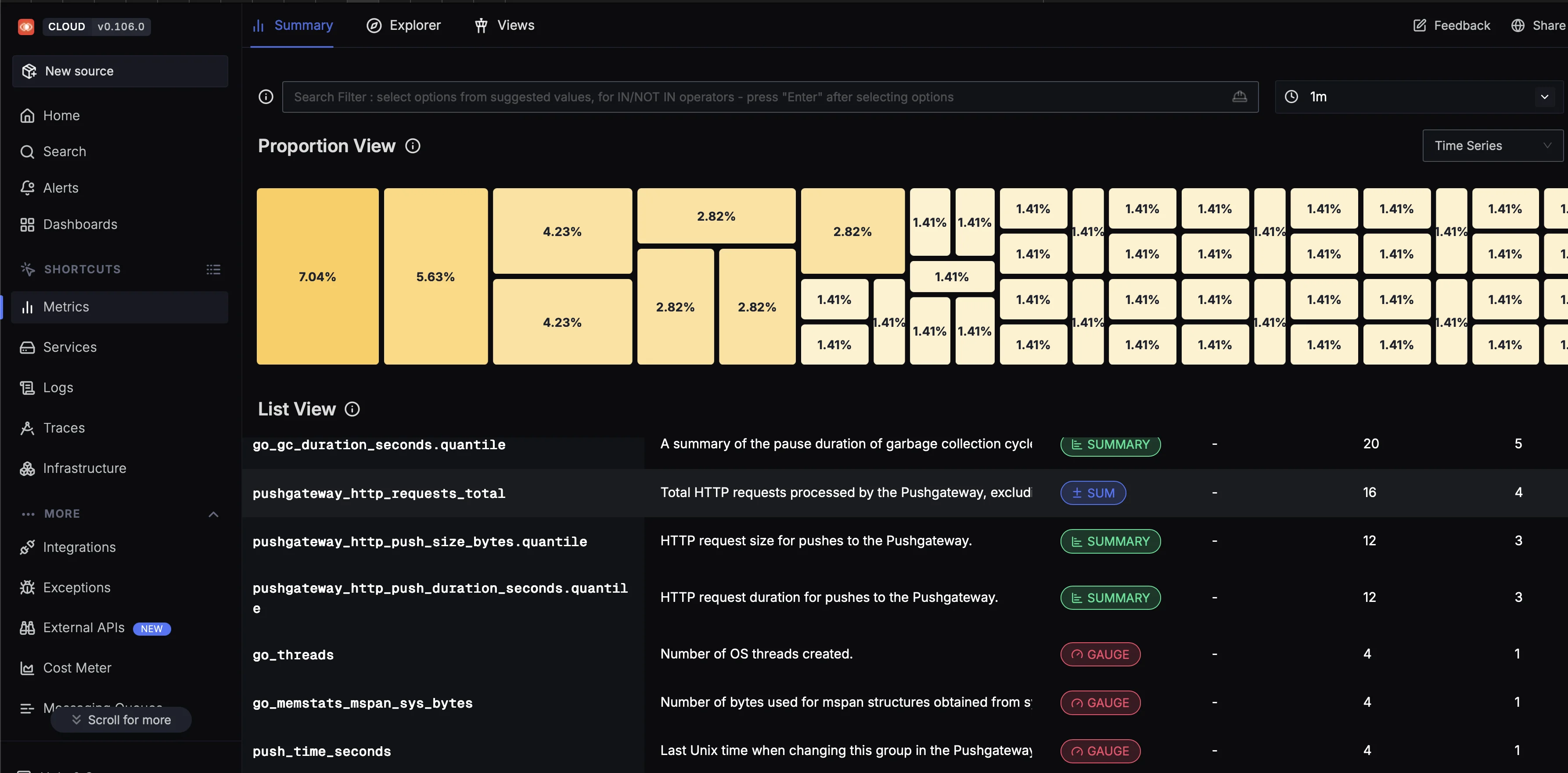

Step 11: Verify that metrics are reaching SigNoz

Log in to your SigNoz Cloud account and open the Metrics tab. Within 2-3 minutes, you should see your metrics flowing in.

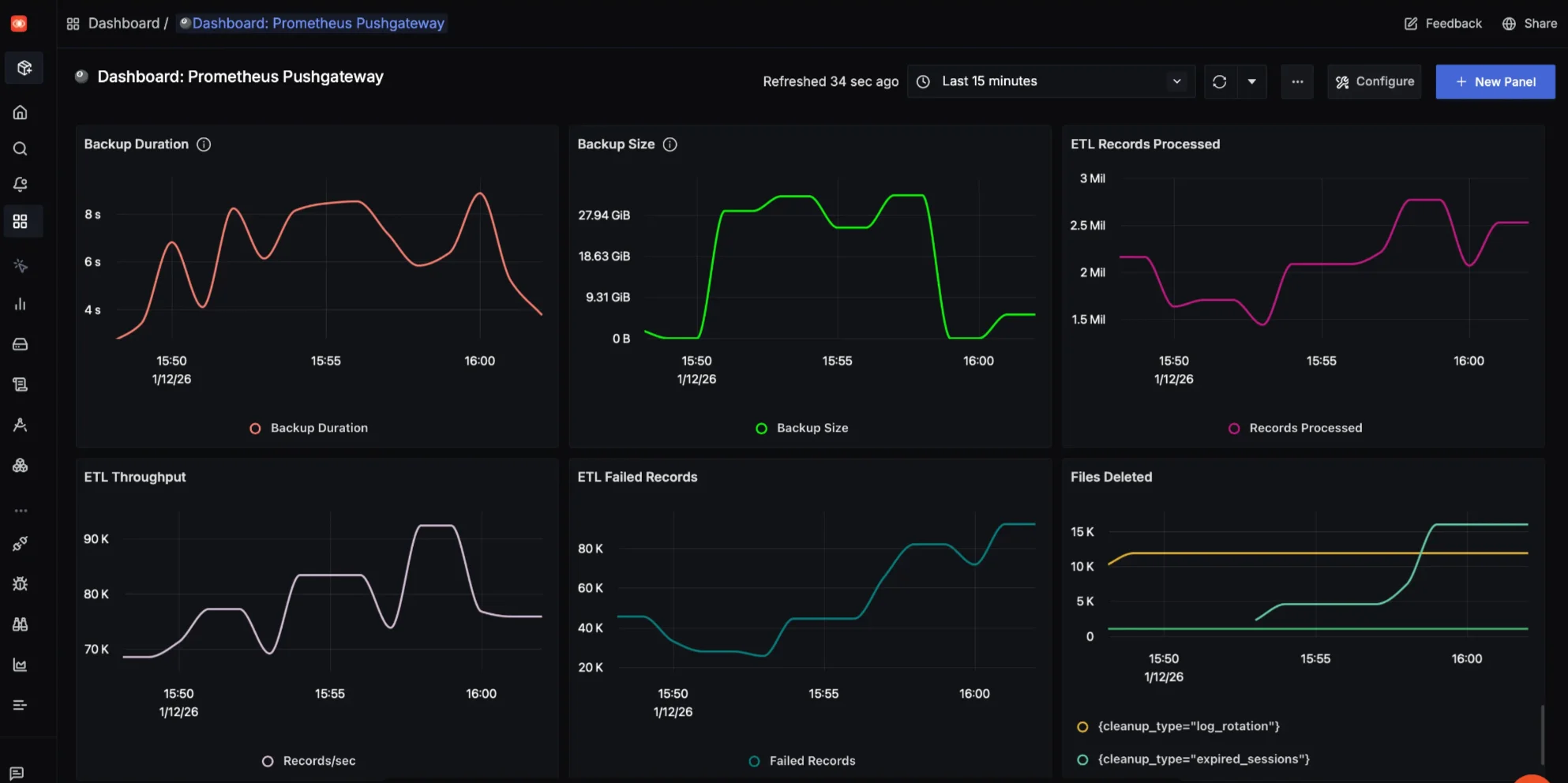

Step 12: Visualization in SigNoz Cloud

You can follow our Create Custom Dashboard docs to build a similar dashboard. Alternatively, download or copy the prometheus-pushgateway.json dashboard configuration and import it while creating the dashboard. See our Import Dashboard in SigNoz docs for detailed steps.

Step 13: Cleanup

Once you are done experimenting, run the following command to stop and remove the containers. The -v flag also removes the Pushgateway data volume.

```bash
docker compose down -v
```

Best Practices for Using Prometheus Pushgateway

The current best practices (as of 2025–2026) for using the Prometheus Pushgateway, based on official Prometheus documentation, community experience, and common production patterns, are as follows:

Use it only for truly short-lived jobs

Pushgateway is designed for batch jobs that start, do work, and exit before Prometheus can scrape them, not for services, daemons, or request-driven workloads.

Always control the metric lifecycle explicitly

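Metrics pushed to the Pushgateway never expire on their own. They stay exposed until they are deleted through the Pushgateway API. Delete a job's group once that job is retired, renamed, or moved, so stale or misleading series don't linger. Deleting right after every run would usually defeat the purpose, because Prometheus may not have scraped the result yet.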
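Deletion is a plain HTTP DELETE on the same group URL the job pushed to, and it removes every metric in that group. The following is a minimal standard-library sketch, assuming the demo's job and instance values. delete_group_request and delete_group are hypothetical helpers. prometheus_client also provides delete_from_gateway(gateway, job=..., grouping_key=...) for the same purpose.

```python
import urllib.parse
import urllib.request


def delete_group_request(base_url, job, grouping_key=None):
    """Build (but do not send) a DELETE request for one Pushgateway group."""
    path = "/metrics/job/" + urllib.parse.quote(job, safe="")
    for name, value in (grouping_key or {}).items():
        path += "/" + urllib.parse.quote(name, safe="") + "/" + urllib.parse.quote(value, safe="")
    return urllib.request.Request(base_url.rstrip("/") + path, method="DELETE")


def delete_group(base_url, job, grouping_key=None):
    """Send the DELETE and return the HTTP status code."""
    request = delete_group_request(base_url, job, grouping_key)
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

For the demo, delete_group("http://localhost:9091", "database_backup", {"instance": "db-primary"}) would remove the backup job's group once that job is decommissioned.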

Avoid high-cardinality labels at all costs

Labels such as timestamps, UUIDs, request IDs, or user IDs permanently increase time-series count and can quickly destabilize your monitoring system.

Use clear and stable grouping labels

Use job plus stable service-level labels (e.g., env, cluster, region, team). Avoid instance/machine labels for Pushgateway’s recommended use case.

Never treat Pushgateway as a source of truth

Pushgateway is a temporary cache, not a metrics database, and its data represents past executions rather than current system state.

Do not use it for real-time alerting

Alerts based on Pushgateway metrics often fire incorrectly because pushed values may be hours or days old and no longer reflect reality.
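A more robust pattern, and the one the demo's backup_job_last_success_timestamp gauge is set up for, is to alert on how long ago the last success happened rather than on the last pushed value. In PromQL, that is typically an expression such as time() - backup_job_last_success_timestamp > <threshold>. The following Python sketch applies the same arithmetic to a value read from /metrics. The helper name and the 6-hour default threshold are illustrative assumptions, not recommendations.

```python
import time


def backup_is_stale(last_success_ts, max_age_seconds=6 * 3600, now=None):
    """True when the last successful backup is older than max_age_seconds.

    Mirrors the PromQL idea: time() - backup_job_last_success_timestamp > max_age.
    A missing timestamp (no success ever pushed) also counts as stale.
    """
    if last_success_ts is None:
        return True
    current = time.time() if now is None else now
    return current - last_success_ts > max_age_seconds
```

Because this check depends only on the timestamp's age, it keeps working when the job silently stops running. In that case, alerting on the last pushed status value would never fire.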

Run it close to the jobs that push metrics

Deploy Pushgateway in the same cluster or network as the batch jobs to reduce push failures and avoid cross-environment metric mixing.

Secure the endpoint aggressively

Pushgateway accepts unauthenticated writes by default, so network policies, authentication, or a reverse proxy are essential in production setups.

Monitor Pushgateway itself

Track the number of exposed metrics and scrape success to detect silent metric buildup or stuck jobs before they cause downstream issues.

Prefer pull-based or OpenTelemetry-native approaches when possible

If a workload can expose metrics long enough to be scraped, or can emit telemetry via OpenTelemetry, those approaches are usually safer and more scalable than pushing.

Troubleshooting Cheat Sheet: Prometheus Pushgateway

Use these quick “if this happens, check this” signals to diagnose missing metrics, label issues, persistence problems, and scrape failures in Pushgateway setups.

| Symptom | Likely Cause | How to Verify | Fix |
|---|---|---|---|
| Metrics disappear after restart | Persistence not enabled | Restart Pushgateway and check metrics | Enable --persistence.file and mount a volume |
| Metrics overwrite each other | Producers share the same grouping key (job and instance labels) | Inspect pushed labels in /metrics | Use a unique grouping key per producer |
| Old metrics never removed | Pushgateway has no TTL | Check push_time_seconds in /metrics | Explicitly delete metrics via the Pushgateway API |
| Push fails with connection error | Wrong Pushgateway URL | curl the /metrics endpoint | Fix hostname/port or Docker network |
| Metrics missing in SigNoz | Collector not scraping Pushgateway | Check otel-collector logs | Verify the Prometheus receiver config |
| High metric cardinality | Dynamic labels (timestamps, IDs) | Inspect label values | Remove high-cardinality labels |
| Metrics present but stale | Job stopped pushing | Check push_time_seconds for the group | Fix the job's schedule, or delete the group if the job was retired |
| Pushgateway unhealthy | Service not responding | Hit the /-/healthy endpoint | Restart the container or check logs |

FAQ

When should I use Pushgateway?

You should only use it in very specific scenarios:

- Service-level batch jobs: Jobs that run, complete, and disappear (e.g., a daily backup script, a cron job that processes data).

- Ephemeral scripts: Processes that run for seconds or minutes, making them impossible for Prometheus to scrape reliably on a standard 15s or 30s interval.

When should I NOT use Pushgateway?

- Standard Services: Web servers, databases, or long-running daemons (e.g., Cassandra, Nginx) should be scraped directly.

- Firewall Traversal: Do not use it solely to extract metrics from a secure network. Use PushProx or specific remote-write configurations instead.

- Converting Pull to Push: If you simply prefer a push architecture, Pushgateway is not the right tool. It becomes a single point of failure and a potential bottleneck.

Does Pushgateway automatically delete old metrics?

No. If a job pushes a metric (e.g., backup_success_timestamp) and then never runs again, Pushgateway will keep exposing that metric indefinitely, or until the gateway restarts without persistence enabled.

It is best practice to manually delete metrics using the API when they are no longer relevant, or design your metrics (like timestamps) so that "stale" data is obvious in your graphs.

What happens if the Pushgateway restarts?

By default, it stores metrics only in memory, so a crash or restart loses all of them. If you enable persistence with the --persistence.file flag, the Pushgateway periodically saves metrics to disk and reloads them on startup. However, pushes received since the last write (controlled by --persistence.interval) can still be lost in a crash.

Can multiple instances of a job push to the same Gateway?

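Yes, but you must be careful with grouping. Metrics are grouped by a grouping key (usually job and instance). If two scripts use the same grouping key, the second push overwrites the first one's metrics. With PUT (used by prometheus_client's push_to_gateway), all of the group's metrics are replaced. With POST (used by pushadd_to_gateway), only the same-named metrics are replaced. If the scripts use different keys (e.g., instance=worker-1 vs. instance=worker-2), both sets of metrics stay in the Gateway until explicitly deleted.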
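To make the overwrite rule concrete, here is a simplified in-memory model of how the Pushgateway treats groups. The key is the job plus grouping labels. PUT replaces the group's whole metric set, POST replaces only the metric names included in the push, and DELETE drops the group. This is a teaching sketch with hypothetical class and method names, not the Pushgateway's implementation. It has no HTTP, no persistence, and no push_time_seconds bookkeeping.

```python
class PushgatewayModel:
    """Toy model of Pushgateway grouping semantics."""

    def __init__(self):
        self.groups = {}  # grouping key (tuple of label pairs) -> {metric_name: value}

    @staticmethod
    def key(job, **labels):
        """Grouping key: the job label plus any extra grouping labels, sorted by name."""
        return (("job", job),) + tuple(sorted(labels.items()))

    def put(self, group_key, metrics):
        # PUT: the pushed metrics replace everything previously stored in the group.
        self.groups[group_key] = dict(metrics)

    def post(self, group_key, metrics):
        # POST: only metrics with the same names are replaced; others are kept.
        self.groups.setdefault(group_key, {}).update(metrics)

    def delete(self, group_key):
        # DELETE: the whole group disappears until something is pushed again.
        self.groups.pop(group_key, None)
```

For example, two workers pushing with instance=worker-1 and instance=worker-2 land in separate groups. A second PUT from worker-1 wipes only worker-1's earlier metrics, including any it did not resend.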

We hope this guide has helped you send metrics from your batch jobs to the Prometheus Pushgateway and visualise them in SigNoz.

You can also subscribe to our newsletter for insights from observability nerds at SigNoz, and get open-source, OpenTelemetry, and devtool-building stories straight to your inbox.