What is OpenTelemetry? [Everything You Need to Know]

Observability used to be a fragmented mess. You had one agent for logs, a different library for metrics, and a proprietary SDK for distributed tracing. If you wanted to switch vendors, you had to rewrite your instrumentation code from scratch.

OpenTelemetry (OTel) fixed this.

It has become the second most active project in the CNCF (Cloud Native Computing Foundation), right behind Kubernetes. By standardizing how applications generate and transmit telemetry data, OpenTelemetry ensures you own your data, not your vendor.

This guide covers what OpenTelemetry is, how its architecture works, and why it is now the default choice for modern infrastructure.

What is OpenTelemetry [in a nutshell]?

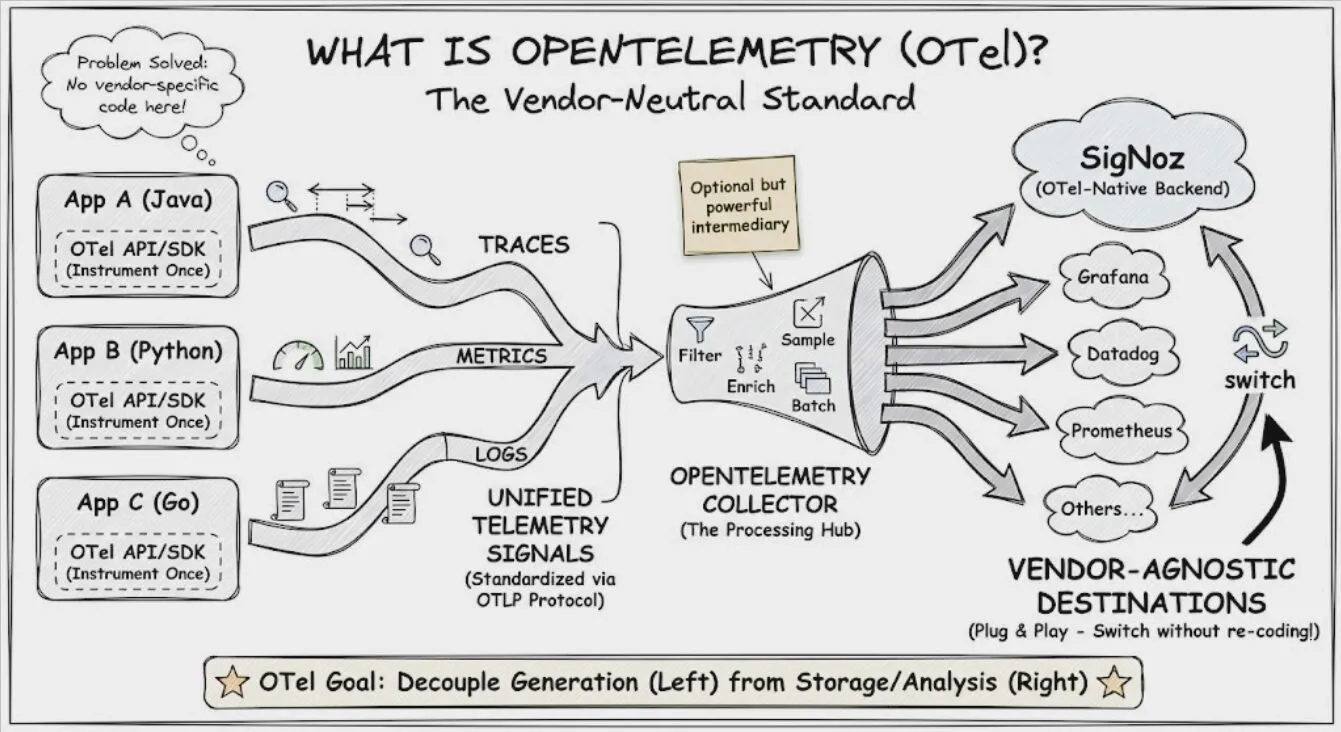

OpenTelemetry is an open-source observability framework that lets you generate, collect, and export telemetry data (traces, metrics, and logs). It is not a storage backend or a visualization tool. Instead, it acts as the universal language and delivery system for your telemetry data.

Think of OpenTelemetry as the plumbing. It gathers data from your applications and infrastructure, processes it, and pipes it to the backend of your choice, whether that's SigNoz, Prometheus, Jaeger, or others.

It formed in 2019 through the merger of two major projects: Google's OpenCensus and the CNCF's OpenTracing.

The goal was to unify the industry on a single standard for instrumentation to enable:

Unified Telemetry

OpenTelemetry unifies the three core pillars of observability into a single data stream.

- Traces: These track the journey of a request as it moves through a distributed system. A trace is made of "spans," where each span represents a specific operation (like a database query or an HTTP request). Traces tell you where the problem is.

- Metrics: These are numerical data points measured over time, such as CPU usage, memory consumption, or request rates. Metrics tell you when a problem occurs.

- Logs: These are timestamped text records of events, often containing error messages or status updates. Logs tell you why the problem happened.

By using OTel for all three, you gain the ability to correlate them automatically. For example, you can look at a specific trace and immediately see the logs generated while it ran, because those logs carry the same trace and span IDs.
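To make the correlation idea concrete, here is a minimal sketch in plain Python. The `Span` and `LogRecord` classes and the `logs_for_trace` helper are hypothetical stand-ins, not OpenTelemetry types; the only assumption is that logs emitted inside a span are stamped with that span's trace ID.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    trace_id: str
    span_id: str
    name: str


@dataclass
class LogRecord:
    timestamp_ms: int
    body: str
    trace_id: Optional[str] = None  # set when the log is emitted inside a span
    span_id: Optional[str] = None


def logs_for_trace(logs, trace_id):
    """Return the log records stamped with trace_id, oldest first."""
    matching = [record for record in logs if record.trace_id == trace_id]
    return sorted(matching, key=lambda record: record.timestamp_ms)


checkout = Span(trace_id="4bf92f3577b34da6a3ce929d0e0e4736",
                span_id="00f067aa0ba902b7", name="POST /checkout")

logs = [
    LogRecord(1700000000200, "card declined", checkout.trace_id, checkout.span_id),
    LogRecord(1700000000050, "cache warmed"),  # background log, no trace context
    LogRecord(1700000000100, "charging card", checkout.trace_id, checkout.span_id),
]

related = [record.body for record in logs_for_trace(logs, checkout.trace_id)]
print(related)
```

The background log is left out because it carries no trace ID, which is the whole trick: correlation is a join on shared identifiers rather than a guess based on timestamps.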

Vendor Agnostic

With OTel, you are not tied to a single vendor. It works much like plug and play: you can plug in the vendor or observability backend of your choice.

Cross-Platform

OTel supports many languages (Java, Python, Go, and more) and platforms, so it adapts to different development environments.

The OpenTelemetry Architecture

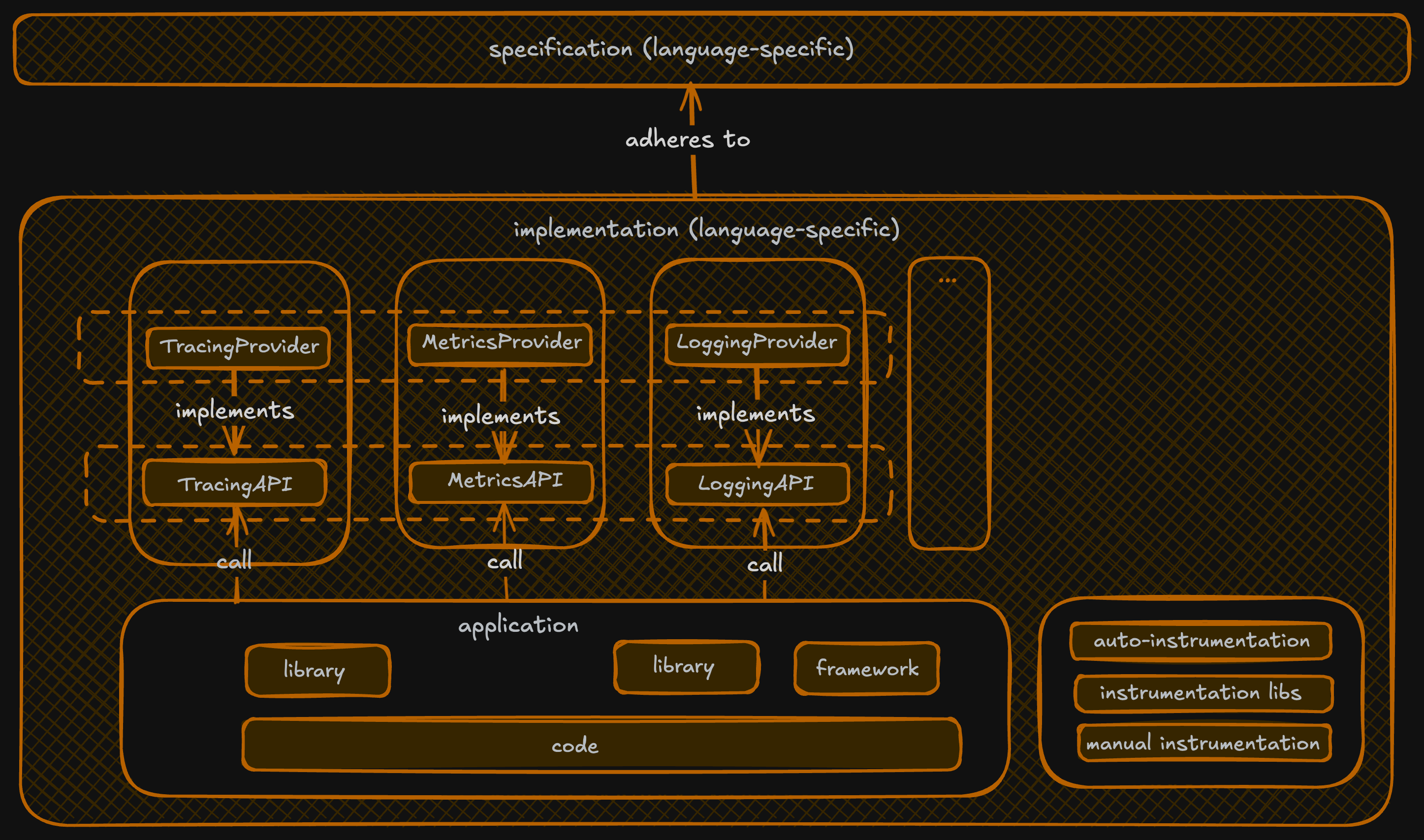

At the heart of OTel is its specification, a formal set of guidelines that defines how telemetry data should be generated, processed, and exported. The specification ensures interoperability across programming languages, tools, and vendors, providing a consistent model for observability data.

The primary purpose of the OTel specification is to create a vendor-neutral standard for telemetry. It ensures that whether you're instrumenting an application in Go, Java, Python, or any other supported language, the data produced follows the same structure, semantics, and protocols.

The OpenTelemetry architecture consists of the following key components:

1. OTel API & OTel SDK

Beginners often confuse these two, but the distinction is vital for stability.

- The API (Interface): This is what you use to instrument your code. It contains the classes and methods to create spans and record metrics. It is purely an interface: if you import the API but don't install an SDK, your code runs but produces no data (a "no-op" implementation). This ensures that instrumentation dependencies don't break your app logic.

- The SDK (Implementation): The SDK plugs into the API to actually handle the data. It applies sampling rules, adds resource attributes (like service.name or k8s.pod.name), batches the data, and sends it to an exporter.
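The API/SDK split is easier to see in a toy model. The sketch below is not the OpenTelemetry API; `NoOpTracer`, `RecordingTracer`, `get_tracer`, and `set_tracer` are hypothetical names that only mimic the pattern: application code talks to an interface, and it keeps working whether or not a real implementation is registered.

```python
from contextlib import contextmanager


class NoOpTracer:
    """Default 'API only' behavior: accepts calls, records nothing."""

    @contextmanager
    def start_span(self, name):
        yield None


class RecordingTracer:
    """Stand-in for an SDK: actually keeps the spans it is given."""

    def __init__(self):
        self.finished = []

    @contextmanager
    def start_span(self, name):
        try:
            yield name
        finally:
            self.finished.append(name)


_tracer = NoOpTracer()


def get_tracer():
    return _tracer


def set_tracer(tracer):
    global _tracer
    _tracer = tracer


def handle_request():
    # Instrumented application code: identical with or without an SDK.
    with get_tracer().start_span("handle_request"):
        return "ok"


result_without_sdk = handle_request()  # runs fine, produces no telemetry

sdk = RecordingTracer()
set_tracer(sdk)
result_with_sdk = handle_request()     # same code, now recorded
print(sdk.finished)
```

Because `handle_request` only depends on the interface, a library can ship with instrumentation built in without forcing a telemetry implementation on its users.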

2. The OpenTelemetry Protocol (OTLP)

OTLP is the native language of OpenTelemetry. It is a highly efficient protocol used to transmit data from the SDK to the Collector, or from the Collector to a backend. It supports both gRPC and HTTP transport. While OTel supports other formats (like Zipkin or Jaeger), OTLP is the recommended default protocol for efficiently transporting telemetry.
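For a feel of what travels over the wire, here is a simplified sketch of an OTLP/JSON trace export body built with the standard library. It covers only a handful of fields and encodes every attribute as a string; the helper names and the `example-instrumentation` scope name are assumptions for illustration. With the HTTP transport, a body like this is typically POSTed to the `/v1/traces` path of an OTLP endpoint.

```python
import json
import secrets


def to_otlp_attributes(attributes):
    """Convert a flat dict into OTLP's list of key/value pairs (strings only here)."""
    return [{"key": key, "value": {"stringValue": str(value)}}
            for key, value in attributes.items()]


def build_trace_request(service_name, span_name, start_ns, end_ns):
    """Build a minimal OTLP/JSON trace export body containing a single span."""
    span = {
        "traceId": secrets.token_hex(16),  # 16 random bytes, hex-encoded
        "spanId": secrets.token_hex(8),    # 8 random bytes, hex-encoded
        "name": span_name,
        "kind": 2,                         # SPAN_KIND_SERVER
        "startTimeUnixNano": str(start_ns),
        "endTimeUnixNano": str(end_ns),
        "attributes": to_otlp_attributes({"http.request.method": "GET"}),
    }
    return {
        "resourceSpans": [{
            # Resource attributes describe the entity that produced the spans.
            "resource": {"attributes": to_otlp_attributes({"service.name": service_name})},
            "scopeSpans": [{
                "scope": {"name": "example-instrumentation"},
                "spans": [span],
            }],
        }]
    }


request_body = build_trace_request(
    "checkout", "GET /cart",
    start_ns=1_700_000_000_000_000_000,
    end_ns=1_700_000_000_050_000_000,
)
encoded = json.dumps(request_body)
print(encoded[:80])
```

In practice the SDK's OTLP exporter builds and sends this for you; the sketch is only meant to show the resource, scope, and span nesting.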

3. The OpenTelemetry Collector

The OTel Collector is a vendor-agnostic proxy that sits between your applications and your backend. While optional, it is highly recommended for production environments.

It performs three main jobs:

- Receive: It accepts data in various formats (OTLP, Jaeger, Prometheus, etc.).

- Process: It cleans and modifies data. You can filter out health-check traces, scrub PII (Personally Identifiable Information), or add infrastructure tags.

- Export: It sends the data to one or more backends simultaneously.

You can deploy the Collector as an Agent (a daemon running on every host) or as a Gateway (a centralized service).
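The receive, process, export flow can be sketched as a chain of plain functions. This is a toy model rather than Collector code: the span dictionaries, the `/healthz` path, the `user.note` attribute, and the two in-memory "backends" are all assumptions made for the example.

```python
import re
from functools import partial

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def drop_health_checks(spans):
    """Filter: discard spans produced by health-check requests."""
    return [span for span in spans if span["attributes"].get("url.path") != "/healthz"]


def scrub_emails(spans):
    """Scrub: replace email addresses in string attributes."""
    cleaned = []
    for span in spans:
        attributes = {
            key: EMAIL.sub("[REDACTED]", value) if isinstance(value, str) else value
            for key, value in span["attributes"].items()
        }
        cleaned.append({**span, "attributes": attributes})
    return cleaned


def add_tags(spans, tags):
    """Enrich: attach infrastructure tags to every span."""
    return [{**span, "attributes": {**span["attributes"], **tags}} for span in spans]


def run_pipeline(received, processors, exporters):
    spans = received
    for process in processors:   # processors run in order
        spans = process(spans)
    for export in exporters:     # every exporter gets the same processed data
        export(spans)
    return spans


received = [
    {"name": "GET /healthz", "attributes": {"url.path": "/healthz"}},
    {"name": "POST /signup", "attributes": {"url.path": "/signup",
                                            "user.note": "contact alice@example.com"}},
]

backend_a, backend_b = [], []
run_pipeline(
    received,
    processors=[drop_health_checks, scrub_emails, partial(add_tags, tags={"host.name": "node-1"})],
    exporters=[backend_a.extend, backend_b.extend],
)
print(backend_a)
```

Processor order matters: filtering first means the later steps do less work, which is also a common ordering choice in real Collector pipelines.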

4. Semantic conventions

These define common attribute names (e.g., http.request.method, db.system) for uniformity across different components and services. This ensures that a database call looks the same whether it comes from a Python app or a Java app.
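As a rough illustration of why shared names matter, the sketch below maps ad-hoc attribute names onto conventional ones. The "legacy" keys (`method`, `http_verb`, `status`, `statusCode`, `database`) are invented for the example; only the target names follow the semantic conventions.

```python
# Hypothetical ad-hoc names on the left, semantic-convention names on the right.
LEGACY_TO_CONVENTION = {
    "method": "http.request.method",
    "http_verb": "http.request.method",
    "status": "http.response.status_code",
    "statusCode": "http.response.status_code",
    "database": "db.system",
}


def normalize(attributes):
    """Rename known ad-hoc keys; leave everything else untouched."""
    return {LEGACY_TO_CONVENTION.get(key, key): value for key, value in attributes.items()}


python_service = {"method": "GET", "status": 200}
java_service = {"http_verb": "GET", "statusCode": 200}

print(normalize(python_service) == normalize(java_service))
```

With OTel instrumentation you rarely need a mapping like this, because the libraries emit the conventional names from the start. That is what lets a backend build one dashboard for every service.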

The specification is what keeps OpenTelemetry modular, extensible, and future-proof. It's what enables you to instrument once and choose (or switch) backends later, whether that's SigNoz, Prometheus, Grafana, Datadog, or any other observability tool that supports OTel.

How does OpenTelemetry work?

We have already seen that OTel lets you collect, process, and export telemetry data. Let's look at how in more detail.

1/ It all begins by instrumenting your code using OpenTelemetry's APIs. This tells the system what to measure (HTTP latencies, DB queries, error events) and how to capture those signals.

2/ The SDK in your application collects the data generated by instrumentation and hands it to an exporter, which transports it for processing.

3/ The data reaches the OTel Collector, which acts as the processing hub. Here, telemetry can be sampled, filtered to reduce noise, or enriched using metadata from other systems. This step adds valuable context to raw signals.

4/ The processed data is then converted, if needed, into formats expected by observability backends and passed to exporters. Exporters are responsible for delivering the data to its destination.

5/ Before data leaves the Collector, it may undergo additional time-based batching and is then routed to one or more backend systems like SigNoz or any other cloud APM service.

Thus, OTel enables a telemetry pipeline that begins inside your application and ends in your observability backend.
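The batching mentioned in step 5 can be sketched as a buffer that flushes when it is full or when its oldest item has waited too long. The `Batcher` class below is a hypothetical simplification: it only checks the timeout when a new item arrives, whereas a production batch processor would also flush on a background timer. The clock is injectable so the example runs without sleeping.

```python
import time


class Batcher:
    """Buffer items and export them in groups, by size or by age."""

    def __init__(self, export, max_size=3, timeout_s=5.0, clock=time.monotonic):
        self._export = export
        self._max_size = max_size
        self._timeout_s = timeout_s
        self._clock = clock
        self._buffer = []
        self._first_at = None  # arrival time of the oldest buffered item

    def add(self, item):
        if not self._buffer:
            self._first_at = self._clock()
        self._buffer.append(item)
        too_big = len(self._buffer) >= self._max_size
        too_old = self._clock() - self._first_at >= self._timeout_s
        if too_big or too_old:
            self.flush()

    def flush(self):
        if self._buffer:
            self._export(list(self._buffer))
            self._buffer.clear()


sent = []
fake_now = [0.0]  # mutable cell so the lambda below sees updates
batcher = Batcher(sent.append, max_size=3, timeout_s=5.0, clock=lambda: fake_now[0])

for name in ["a", "b", "c", "d"]:
    batcher.add(name)   # "c" fills the first batch; "d" starts a new one

fake_now[0] = 6.0       # six seconds pass
batcher.add("e")        # "d" has waited past the timeout, so the batch is flushed

print(sent)
```

Batching trades a little latency for far fewer network calls, which is why it sits near the end of the pipeline, just before data leaves for the backend.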

Now let's break down why betting on OpenTelemetry might be the best observability move you make.

Why Developers Choose OpenTelemetry

The shift to OpenTelemetry isn't just about following a trend; it solves specific engineering pain points.

No More Vendor Lock-in

In the past, instrumenting code meant importing a vendor-specific agent (e.g., the Datadog or New Relic agent). If their pricing changed or you wanted a different tool, you had to rip out that code and re-instrument everything.

With OTel, you instrument once using the standard API. To switch vendors, you simply change a configuration line in your Collector or Exporter. Your application code remains untouched.
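A small sketch of what "change a configuration line" can look like when exporting straight from the SDK. `OTEL_EXPORTER_OTLP_ENDPOINT` is a standard OpenTelemetry environment variable; everything else here (`traces_url`, `make_exporter`, `record_checkout`, and the in-memory `outbox` that stands in for HTTP calls) is hypothetical.

```python
DEFAULT_OTLP_HTTP_ENDPOINT = "http://localhost:4318"


def traces_url(env):
    """Resolve where traces go, based only on configuration."""
    base = env.get("OTEL_EXPORTER_OTLP_ENDPOINT", DEFAULT_OTLP_HTTP_ENDPOINT)
    return base.rstrip("/") + "/v1/traces"


def make_exporter(env, outbox):
    url = traces_url(env)

    def export(span):
        outbox.append((url, span))  # stand-in for an HTTP POST to `url`

    return export


def record_checkout(export):
    # Instrumented application code: it never names a vendor.
    export({"name": "checkout"})


outbox = []
local_env = {}
cloud_env = {"OTEL_EXPORTER_OTLP_ENDPOINT": "https://ingest.us.signoz.cloud:443"}

for env in (local_env, cloud_env):
    record_checkout(make_exporter(env, outbox))

print([url for url, _ in outbox])
```

`record_checkout` is byte-for-byte identical in both runs; only the environment differs.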

Consistent Context Propagation

Debugging microservices is difficult when context gets lost between boundaries. OTel standardizes context propagation. When Service A calls Service B, OTel injects headers (like W3C Trace Context) that carry the trace ID. This ensures that even if the services are written in different languages (e.g., Go calling Python), the trace remains unbroken.
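Here is a minimal sketch of that handoff using the W3C `traceparent` header, which has the shape `version-traceid-parentid-flags`. The `inject` and `extract` helpers are hypothetical and handle only version `00`; OTel's propagators do this for you and cover more edge cases.

```python
import re
import secrets

TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def inject(headers, trace_id, span_id, sampled=True):
    """Service A: write the current trace context into outgoing headers."""
    flags = "01" if sampled else "00"
    headers["traceparent"] = f"00-{trace_id}-{span_id}-{flags}"


def extract(headers):
    """Service B: read trace context from incoming headers, or None if invalid."""
    match = TRACEPARENT.match(headers.get("traceparent", ""))
    if not match:
        return None
    trace_id, parent_span_id, flags = match.groups()
    if trace_id == "0" * 32 or parent_span_id == "0" * 16:
        return None  # all-zero IDs are invalid
    return {
        "trace_id": trace_id,
        "parent_span_id": parent_span_id,
        "sampled": bool(int(flags, 16) & 0x01),
    }


# Service A starts a trace and calls Service B.
trace_id = secrets.token_hex(16)
span_a = secrets.token_hex(8)
outgoing_headers = {}
inject(outgoing_headers, trace_id, span_a)

# Service B continues the same trace with a new span of its own.
context = extract(outgoing_headers)
span_b = {
    "trace_id": context["trace_id"],
    "span_id": secrets.token_hex(8),
    "parent_span_id": context["parent_span_id"],
}
print(outgoing_headers["traceparent"])
```

Since the header is plain text with a fixed layout, it does not matter which language each service is written in, as long as both sides follow the same format.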

Observability as Code

OTel moves observability from a "siloed operational task" to a developer concern. By using open standards, library maintainers are now adding OTel instrumentation natively. For example, many database drivers and HTTP frameworks now come with OTel hooks built-in, meaning you get telemetry simply by using the library.

Challenges and Considerations

While OTel is powerful, it comes with a learning curve.

Configuration Complexity: The Collector is extremely flexible, which means it can be complex to configure correctly. Managing sampling rates and memory limits on the Collector requires attention.

Version Maturity: Tracing is fully stable across most languages. Metrics are stable in major languages but still evolving in others. Logging is the newest signal and has varying levels of maturity depending on the language SDK.
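To give a sense of what a sampling rate means in practice, here is a sketch of ratio-based sampling keyed on the trace ID. It is written in the spirit of trace-ID ratio samplers but is not the exact algorithm of any SDK or Collector processor; `should_sample` is a hypothetical helper.

```python
import secrets


def should_sample(trace_id_hex, ratio):
    """Keep roughly `ratio` of traces, deciding deterministically per trace ID."""
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be between 0 and 1")
    low_64_bits = int(trace_id_hex[-16:], 16)  # treat the last 8 bytes as a number
    return low_64_bits < ratio * 2**64


trace_ids = [secrets.token_hex(16) for _ in range(10_000)]
kept = sum(should_sample(trace_id, 0.10) for trace_id in trace_ids)
kept_fraction = kept / len(trace_ids)
print(f"kept {kept_fraction:.1%} of traces")
```

Deciding from the trace ID rather than a random draw means every service that sees the same trace reaches the same verdict, so you don't end up with half-recorded traces.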

OpenTelemetry vs. Prometheus & Jaeger

A common confusion is how OTel compares to existing tools.

| Feature | OpenTelemetry | Prometheus | Jaeger |
|---|---|---|---|
| Primary Role | Telemetry Generation & Collection | Storage & Querying (Metrics) | Storage & Visualization (Traces) |
| Signals | Traces, Metrics, Logs | Metrics only (mostly) | Traces only |
| Backend? | No | Yes | Yes |

OTel vs. Prometheus: They are complementary. OTel can scrape metrics and export them to Prometheus. Alternatively, OTel can replace the scraping mechanism entirely, sending metrics directly to a backend that supports PromQL (like SigNoz).

OTel vs. Jaeger: Jaeger is a backend for storing and viewing traces. OTel is the pipeline that sends data to Jaeger. Note that the Jaeger client libraries have been deprecated in favor of OpenTelemetry SDKs.

Getting Started With OpenTelemetry: The Workflow

Implementing OpenTelemetry generally follows three steps.

1. Instrumentation

You can instrument your application in two ways:

- Auto-Instrumentation (Zero-code): Ideal for getting started quickly. You attach an agent to your running application (e.g., the Java JAR agent or Python distro). It automatically captures HTTP requests, database queries, and standard metrics without you writing a single line of code.

- Manual Instrumentation: Used when you need custom business data. You import the API and manually create spans to track specific logic, such as process_payment() or calculate_inventory().

2. Collection

Configure the OTel Collector to receive data from your app. A basic config.yaml might look like this:

    receivers:
      otlp:
        protocols:
          grpc:
          http:

    processors:
      batch:

    exporters:
      otlp:
        endpoint: "ingest.us.signoz.cloud:443" # use your region
        headers:
          "signoz-ingestion-key": "${SIGNOZ_INGESTION_KEY}"

    service:
      pipelines:
        traces:
          receivers: [otlp]
          processors: [batch]
          exporters: [otlp]
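A common mistake with this file is listing a component in a pipeline without defining it in its top-level section. The Collector validates this itself at startup; the sketch below only illustrates the rule. It mirrors the config above as a Python dict (to avoid a YAML dependency), and `undefined_components` is a hypothetical helper.

```python
# The same structure as the config above, written as a Python dict.
config = {
    "receivers": {"otlp": {"protocols": {"grpc": None, "http": None}}},
    "processors": {"batch": None},
    "exporters": {
        "otlp": {
            "endpoint": "ingest.us.signoz.cloud:443",
            "headers": {"signoz-ingestion-key": "${SIGNOZ_INGESTION_KEY}"},
        }
    },
    "service": {
        "pipelines": {
            "traces": {
                "receivers": ["otlp"],
                "processors": ["batch"],
                "exporters": ["otlp"],
            }
        }
    },
}


def undefined_components(config):
    """List pipeline entries that reference a component with no definition."""
    problems = []
    for pipeline_name, pipeline in config["service"]["pipelines"].items():
        for kind in ("receivers", "processors", "exporters"):
            defined = config.get(kind) or {}
            for name in pipeline.get(kind, []):
                if name not in defined:
                    problems.append(f"{pipeline_name}: {kind[:-1]} '{name}' is not defined")
    return problems


print(undefined_components(config))
```

Defining a component and enabling it are two separate steps: a receiver, processor, or exporter does nothing until a pipeline under `service` references it.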

3. Visualization

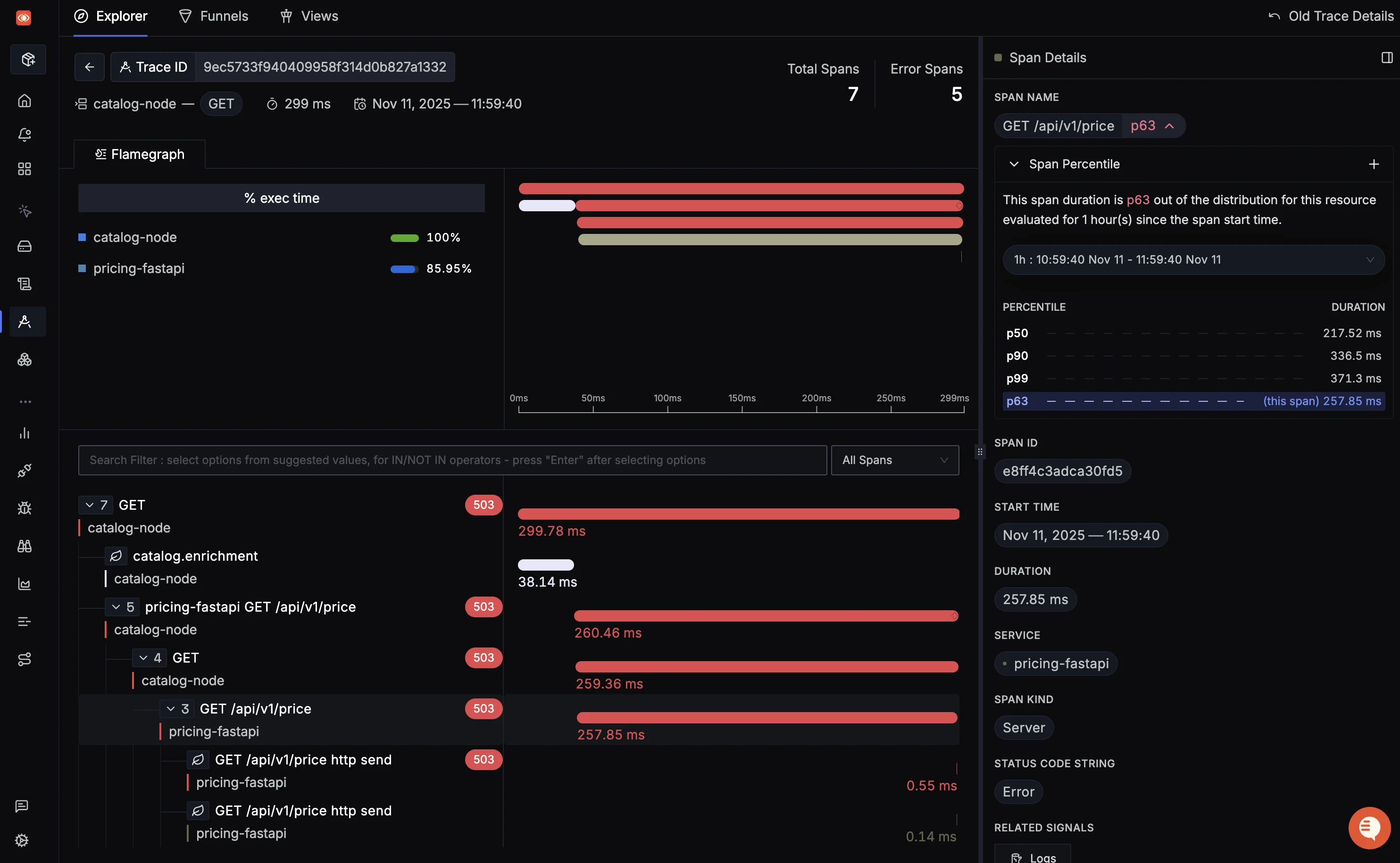

Point the exporter to a backend system like SigNoz to analyze the data.

SigNoz + OpenTelemetry = 🔥

SigNoz is an observability platform built from the ground up with the idea of being OpenTelemetry native. In an OTel-based observability stack, SigNoz is a backend and visualisation layer for your telemetry data. We fully leverage OTel's semantic conventions, providing deeper, out-of-the-box insights.

We've launched some features that double down on our OTel-native approach.

- Trace Funnels: Intelligently sample and analyze traces to focus on what's important.

- External API Monitoring: Gain visibility into the performance of third-party APIs your application depends on.

- Out-of-the-box Messaging Queue Monitoring: Effortlessly monitor popular queuing systems.

SigNoz offers multiple deployment options to suit your needs:

- SigNoz Cloud: A fully-managed, scalable solution for teams that want to focus on their core business without the overhead of managing an observability platform.

- SigNoz Enterprise: For organizations with strict data residency or privacy requirements, we offer a self-hosted enterprise edition (bring-your-own-cloud or on-premise) with dedicated support and advanced security features.

- SigNoz Community: A self-hosted, open-source version that's perfect for getting started and for teams with the capability to manage their own infrastructure.

Next Steps

Now that you've gotten a basic understanding of what OpenTelemetry is, here are a few next steps that you can try.

- Instrumenting your application with OpenTelemetry

- Setting up the OpenTelemetry Demo Application

- Instrumenting your infra with OpenTelemetry

With these next steps, you'll be well on your way to building systems that are easier to observe, debug, and improve. Thank you, OpenTelemetry, for making observability accessible for everyone! ❤️

We hope this answered your questions about what OpenTelemetry is. If you have more, feel free to use the SigNoz AI chatbot or join our Slack community.

You can also subscribe to our newsletter for insights from observability nerds at SigNoz, and get open source, OpenTelemetry, and devtool-building stories straight to your inbox.