30+ Observability User Stories

One of the best ways to learn about a domain is to study how top companies approach it. Here's a compilation of 30+ curated articles on observability, monitoring, and site reliability, drawn mostly from the engineering blogs of companies such as Uber, Netflix, and GitHub.

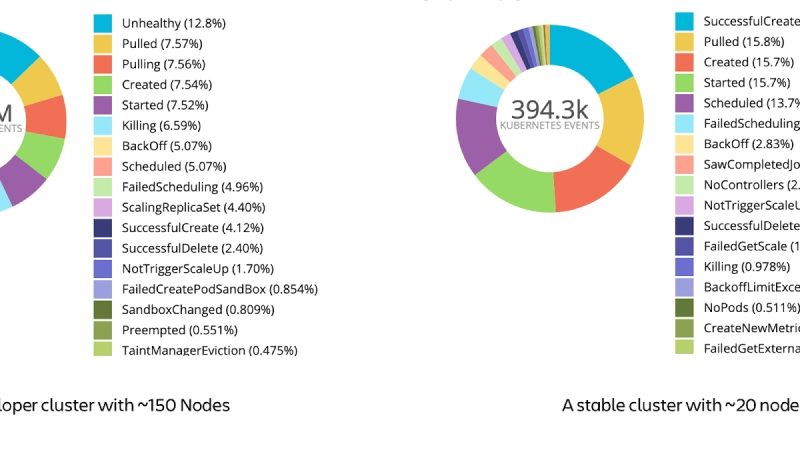

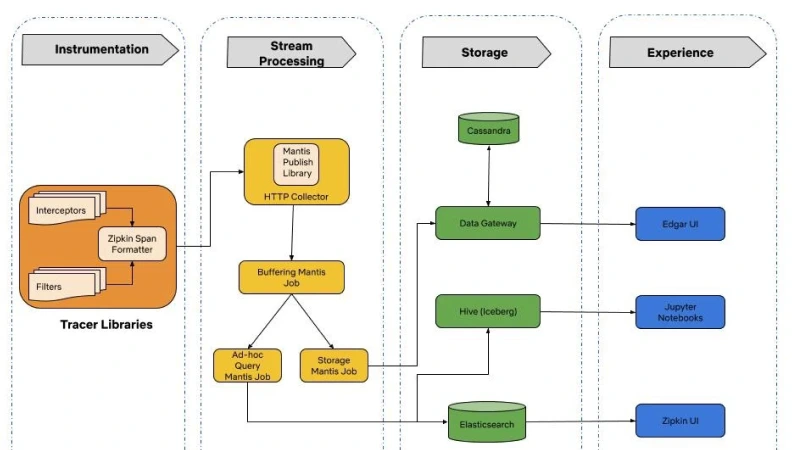

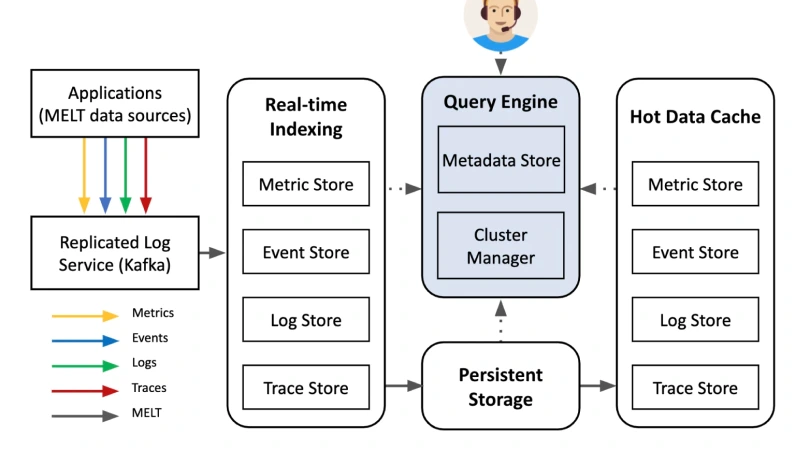

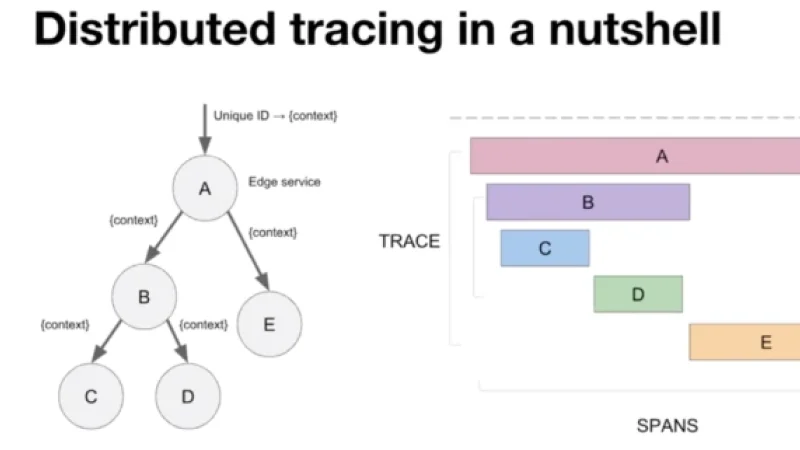

Building Netflix's Distributed Tracing infrastructure

In this blog post, the Netflix engineering team describes how they designed the tracing infrastructure behind Edgar, a tool that helps Netflix troubleshoot distributed systems.

Lessons from Building Observability Tools at Netflix

Five key lessons the Netflix engineering team learned from building observability tools: scaling log ingestion, contextual distributed tracing, metrics analysis, choosing an observability database, and data visualization.

Edgar: Solving Mysteries Faster with Observability

The author describes Edgar, a self-service tool for troubleshooting distributed systems that also pulls in additional context from logs, events, and metadata.

Achieving observability in async workflows

In this article, the Netflix engineering team describes how they built an observability framework for an application that supports content production and relies on asynchronous workflows.

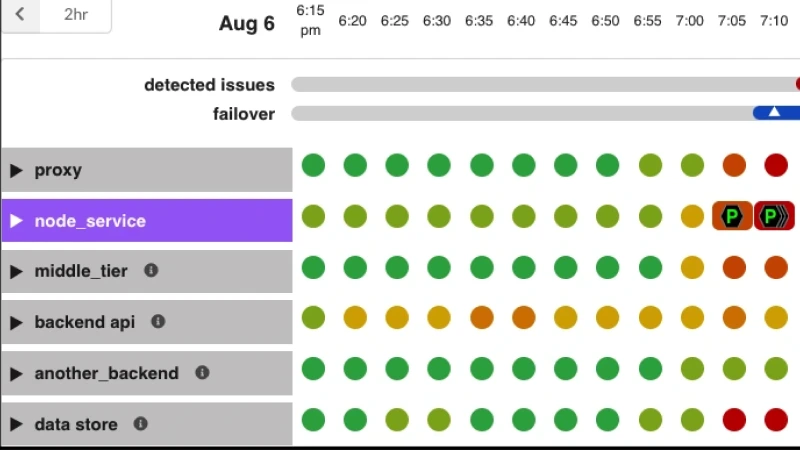

Telltale: Netflix Application Monitoring Simplified

The Netflix team describes Telltale, their application monitoring tool, which uses an intelligent alerting system to monitor the health of over 100 production-facing Netflix applications.

Towards Observability Data Management at Scale

Written by authors from Brown University, MIT, Intel, and Slack, this research paper explores the challenges and opportunities involved in designing and building observability data management systems.

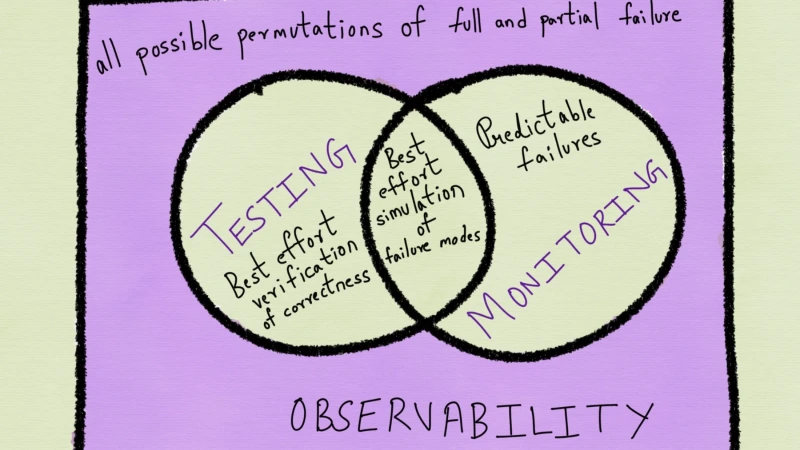

Monitoring and Observability

Cindy Sridharan explains the differences between monitoring and observability, gives a brief overview of observability's origins, and shows how observability complements monitoring.

We Replaced Splunk at 100TB Scale in 120 Days

What do you do when your monitoring vendor becomes financially unviable and you're ingesting 100TB a day? Read on to find out how Groupon replaced Splunk in 120 days despite the scale.

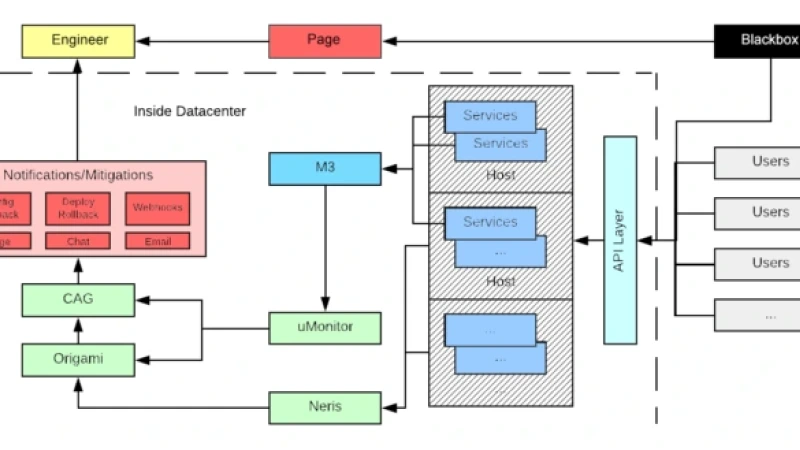

Observability at Scale: Building Uber’s Alerting Ecosystem

Find out how Uber's Observability team built a robust and scalable metrics and alerting pipeline that detects issues with services and notifies engineers as soon as they occur.

Optimizing Observability with Jaeger, M3, and XYS at Uber

Video presentations on Jaeger, an open-source distributed tracing system created at Uber; XYS, an internal sampling service at Uber; and M3, Uber's open-source metrics stack.

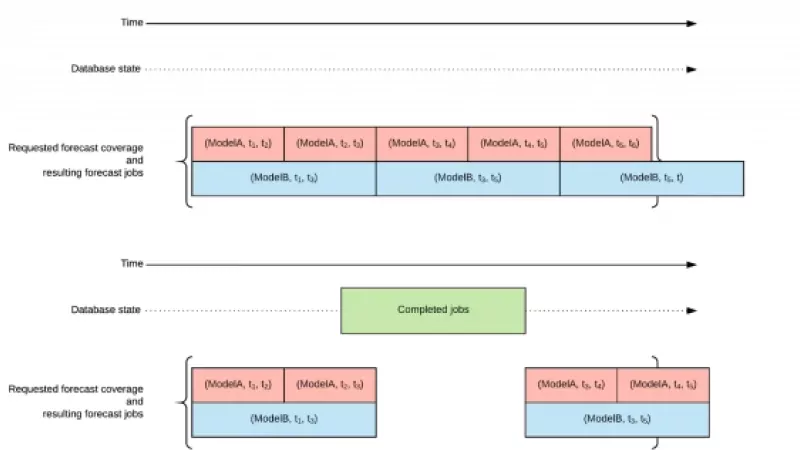

Engineering a Job-based Forecasting Workflow for Observability Anomaly Detection

An interesting read on how Uber's observability team overhauled their anomaly detection platform's workflow by introducing alert backtesting, and how this led to a more intelligent alerting system.

Introducing uGroup: Uber’s Consumer Management Framework

This article introduces Uber's uGroup, a new observability framework for monitoring the state of Kafka consumers, and describes the challenges Uber's team faced with consumer-side Kafka monitoring.

Eats Safety Team On-Call Overview

This article gives a detailed overview of the on-call culture on the Uber Eats Safety team, covering everything from their current processes to how they train new engineers for on-call rotations.

Telemetry and Observability at BlackRock

This blog post gives a detailed overview of the telemetry platform at BlackRock, which is responsible for overseeing the health, performance, and reliability of BlackRock's investment technology.

Our journey into building an Observability platform at Razorpay

A detailed talk on how Razorpay, the Indian fintech unicorn, moved from paid APM tools to open-source observability platforms, with great insights on when it makes sense to build versus buy for observability use cases.

How we monitor application performance at GO-JEK

In this article, the Go-Jek team explains how they built their performance monitoring stack using StatsD, Telegraf, InfluxDB, and Grafana.

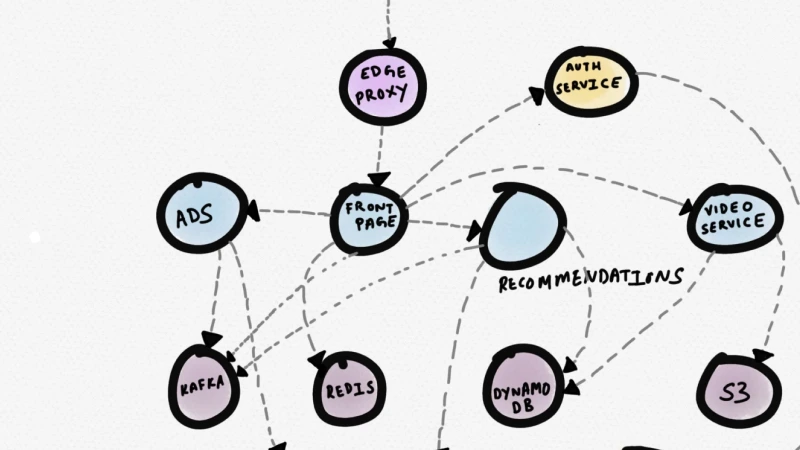

Distributed Tracing — we’ve been doing it wrong

Cindy Sridharan discusses the problems with distributed tracing, specifically with traceviews and spans, and then suggests alternatives to the traceview, detailing service-centric views and service topology graphs.

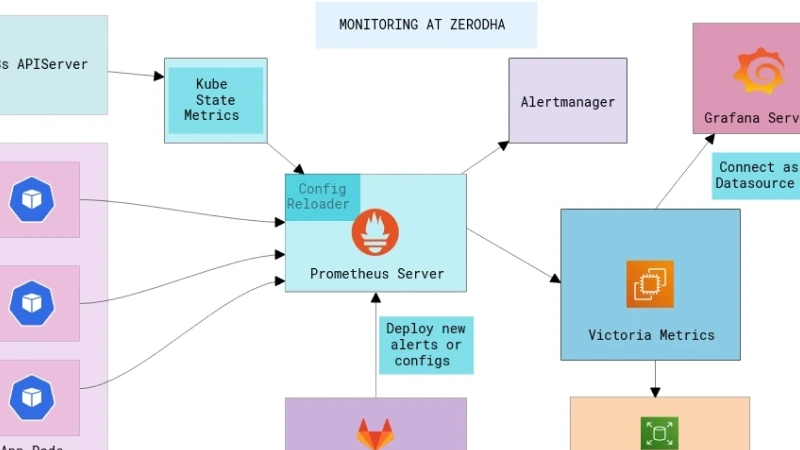

Infrastructure monitoring with Prometheus at Zerodha

This article explains how Zerodha went about setting up infrastructure monitoring with Prometheus. Zerodha handles about 15% of daily retail trading volume across all stock exchanges in India.

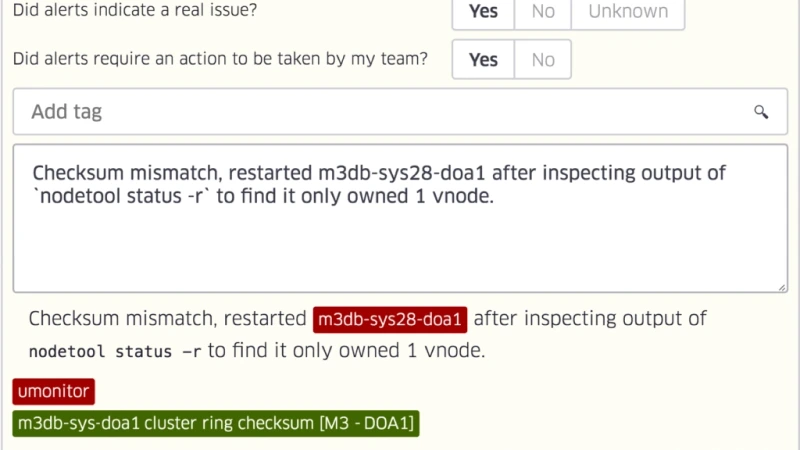

Engineering Uber’s On-Call Dashboard

On-call shifts are stressful for engineers. This article gives an overview of Uber's on-call dashboard and the flagship features that set Uber's on-call teams up for success.

Structured Logging: The Best Friend You’ll Want When Things Go Wrong

An article from a Grab engineer explaining what structured logging is, why it is better, and how the Grab team built a framework that integrates well with their existing Elastic Stack-based logging backend.

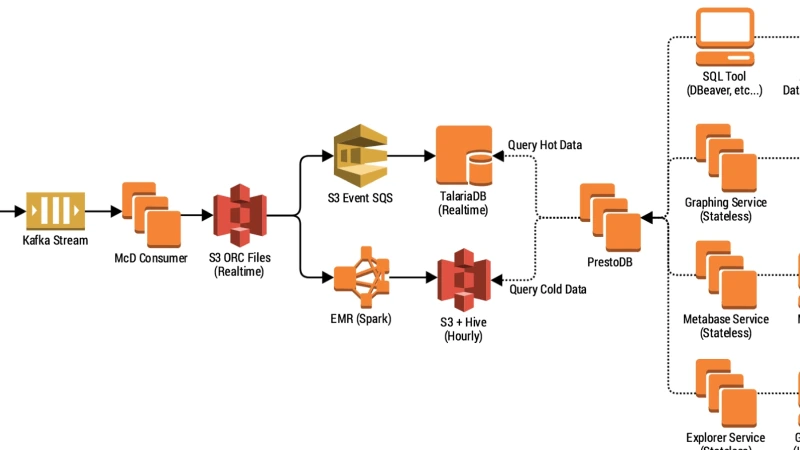

A Lean and Scalable Data Pipeline to Capture Large Scale Events and Support Experimentation Platform

One of the major challenges of observability is data storage. In this article, the Grab engineering team shares the lessons learned in building a system that ingests and processes petabytes of data for analytics.

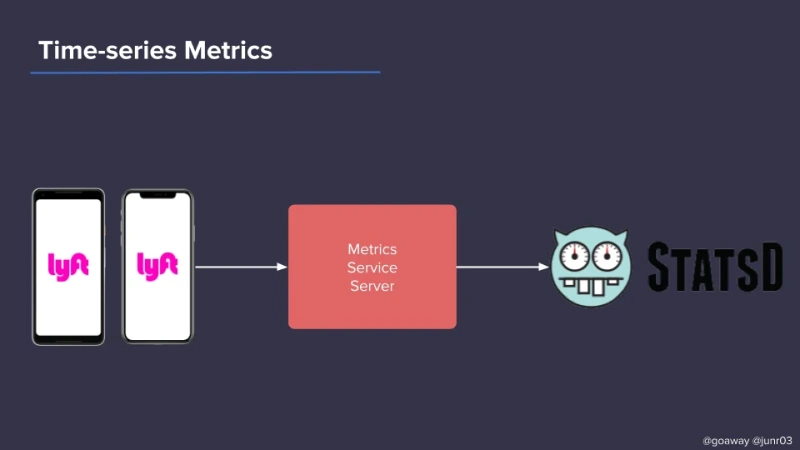

Observability for the Missing Hop

This article shares how the Lyft engineering team expanded their observability efforts to include mobile clients, which run Envoy Mobile, a mobile networking library built on Envoy, to send out time-series data.

Speeding Ahead with a Systematic Approach to Web Performance

In this article, the Lyft engineering team introduces the Hierarchy of Web Performance Needs, a system for identifying the most impactful performance needs of an organization building web applications.

How Hulu Uses InfluxDB and Kafka to Scale to Over 1 Million Metrics a Second

Hulu Tech explains how they used InfluxDB and Kafka to scale to over 1 million metrics a second, with insights into the challenges they encountered and their initial and final architectures.

Why (and how) GitHub is adopting OpenTelemetry

In this blog post, GitHub explains why it is adopting OpenTelemetry for its observability practices. According to GitHub, OpenTelemetry will allow its teams to standardize telemetry usage, making it easier for developers to instrument code.

Service Outage at Algolia due to SSL certificates

In this article, Algolia's engineering team explains how they handled an unusual situation in which two root certificate authorities expired, one of which was cross-signed by the other.

Analysis of recent downtime & what we’re doing to prevent future incidents

The Asana engineering team explains the cause of a recent outage, gives a detailed technical account of what happened, and describes how they intend to prevent this kind of problem in the future.

How Asana uses Asana: Security incident response

In this article, Asana's engineering team explains how they use Asana for security incident response, offering insights into the critical components of a security incident response process.

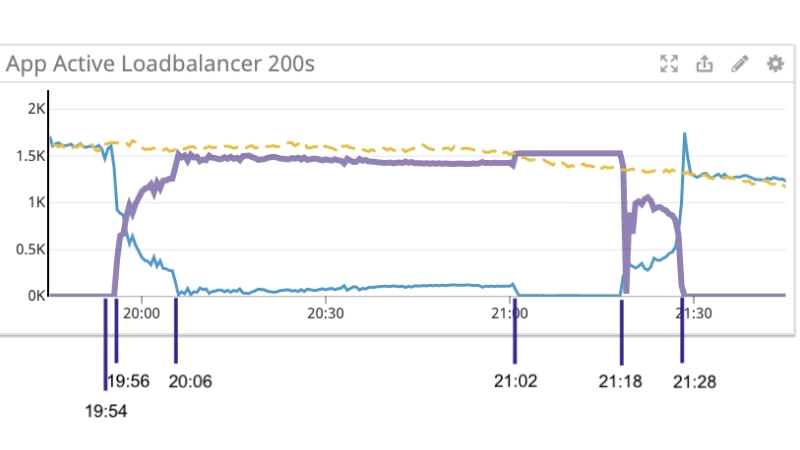

How Asana ships stable web application releases

In this article, the author explains Asana's web application release process, how they roll back broken releases, and what they did to be able to deploy application code three times a day.

Playing the blame-less game

Incident management is a complex process involving many stakeholders. This article offers insights into ASOS's blameless postmortem process for incident management across their distributed teams.

A day in the life of Head of Reliability Engineering

This blog post offers a glimpse into a day in the life of Cat, Head of Reliability Engineering in Technology Operations at ASOS.