OpenTelemetry Events vs Logs - Key Differences Explained

In the world of modern observability, managing and interpreting vast amounts of telemetry data is crucial for ensuring application health and performance. OpenTelemetry has emerged as a leading standard for collecting telemetry data, encompassing events, logs, metrics, and traces. While metrics and traces are often discussed in detail, events and logs play equally vital roles but can sometimes be misunderstood or used interchangeably.

Understanding how OpenTelemetry events and logs differ is essential for designing an effective observability strategy. This article explores their distinct characteristics, use cases, and implementation strategies.

What are OpenTelemetry Events and Logs?

OpenTelemetry is an open-source observability framework designed to help developers collect, process, and export telemetry data from their applications. By standardizing how telemetry is gathered, OpenTelemetry enables seamless integration with various monitoring and observability tools, ensuring better insights into application performance and reliability.

Events in OpenTelemetry

In OpenTelemetry, events are discrete, time-stamped occurrences in an application’s life cycle that provide specific information about what happened at a particular time. These can represent user actions (login attempts, purchases), system state changes (service start/stop), or significant milestones in application processing. Each event typically includes attributes, such as timestamps, severity levels, and additional metadata that describe the event.

Example of an Event

Consider an e-commerce application that generates an event when a user places an order. The event might include:

- Timestamp: When the order was placed.

- Event Type: Order Placed

- Attributes:

  - user_id: Unique identifier of the user.
  - order_id: Unique identifier of the order.
  - total_amount: Total value of the order.
  - payment_status: Status of the payment (e.g., pending, completed).

Logs in OpenTelemetry

Logs in the OpenTelemetry framework are structured or unstructured records of events that capture detailed information about the state and behavior of an application. They can provide insights into errors, warnings, or informational messages that are crucial for debugging and understanding application flow. Logs are typically timestamped and can include various attributes for contextual information.

Example of a Log

Using the same e-commerce application, a log entry might be generated when an error occurs while processing an order:

- Timestamp: When the error occurred.

- Log Level: ERROR

- Message: Failed to process payment for order #12345

- Attributes:

  - order_id: 12345
  - user_id: 67890
  - error_code: PAYMENT_FAILURE
  - error_message: Insufficient funds
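To make the two examples concrete, here is a minimal plain-Python sketch of both records. It does not use the OpenTelemetry API: the `Event` and `LogEntry` classes, the `order.placed` name, the fixed timestamp, and the `total_amount` and `payment_status` values are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict


@dataclass
class Event:
    """A named, point-in-time occurrence with structured attributes."""
    name: str
    timestamp: datetime
    attributes: Dict[str, Any] = field(default_factory=dict)


@dataclass
class LogEntry:
    """A timestamped message with a severity level and optional attributes."""
    level: str
    message: str
    timestamp: datetime
    attributes: Dict[str, Any] = field(default_factory=dict)


# Fixed time so the example is reproducible (illustrative value).
now = datetime(2024, 1, 1, 10, 0, tzinfo=timezone.utc)

order_placed = Event(
    name="order.placed",  # hypothetical event name
    timestamp=now,
    attributes={
        "user_id": "67890",
        "order_id": "12345",
        "total_amount": 99.99,        # illustrative value
        "payment_status": "pending",  # illustrative value
    },
)

payment_error = LogEntry(
    level="ERROR",
    message="Failed to process payment for order #12345",
    timestamp=now,
    attributes={
        "order_id": "12345",
        "user_id": "67890",
        "error_code": "PAYMENT_FAILURE",
        "error_message": "Insufficient funds",
    },
)

# Attribute keys present on both records; these are what a backend could join on.
shared_keys = order_placed.attributes.keys() & payment_error.attributes.keys()
print(sorted(shared_keys))
```

The shared `order_id` and `user_id` keys are what would let a backend relate the error log to the order event.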

Importance of Events and Logs in Application Telemetry

Both events and logs are essential components of application telemetry, offering different perspectives on system behavior and performance:

- Enhanced Context and Detail: Events provide fine-grained details within traces, while logs offer broader application context, allowing engineers to pinpoint specific issues or performance bottlenecks.

- Debugging and Troubleshooting: When integrated with traces and metrics, events and logs can significantly improve debugging by providing both high-level and in-depth perspectives on the application's operations.

- Correlated Insights: By correlating logs, events, and traces, engineers can gain a comprehensive view of the application flow, helping identify dependencies and troubleshoot issues in distributed systems.

Key Differences Between OpenTelemetry Events and Logs

Understanding the distinctions between events and logs is crucial for effective application monitoring. Here’s a summary of their core differences:

| Aspect | OpenTelemetry Events | OpenTelemetry Logs |
|---|---|---|
| Structure | Highly structured data points with predefined fields and formats | Semi-structured or unstructured, allowing flexibility in content |
| Temporal Aspect | Captures specific moments in time (discrete occurrences) | Continuous stream of information, showing chronological activity |
| Granularity | Focused on specific actions or state changes with precise details | Broader system information, ranging from detailed debug messages to system status |
| Query & Analysis | Easily searchable and analyzable due to structured data | Requires complex parsing and analysis tools, especially for unstructured data |
| Use Case Example | User actions, state changes (e.g., user authentication success) | Detailed context (e.g., logs recording authentication methods and debug messages) |

Let’s dive deeper into each key difference.

Structure

Events: In OpenTelemetry, events are highly structured data associated with specific spans, detailing precise actions or states within an application's trace. They include attributes like timestamp, traceId, and spanId, which enable detailed tracking and analysis of events.

Example Event:

    {
      "name": "user.login",
      "timestamp": "2024-01-01T10:00:00Z",
      "attributes": {
        "user_id": "12345",
        "login_method": "oauth",
        "success": true,
        "client_ip": "192.168.1.1",
        "user_agent": "Mozilla/5.0...",
        "authentication_provider": "Google"
      },
      "traceId": "abcdef123456",
      "spanId": "789012",
      "severity": "INFO",
      "dropoff_timestamp": "2024-01-01T10:00:00.123Z"
    }

Logs: Logs, on the other hand, are often semi-structured or even unstructured text records. They capture diverse details, including messages and contextual information like service name, error details, and severity level. Logs are useful for recording general system activity and error messages.

Example Log

a. Unstructured log

    2024-01-01 10:00:00 INFO [AuthService] User 12345 logged in successfully via OAuth

b. Structured log

    {
      "timestamp": "2024-01-01T10:00:00Z",
      "level": "INFO",
      "service": "AuthService",
      "message": "User login successful",
      "context": {
        "user_id": "12345",
        "method": "oauth",
        "ip_address": "192.168.1.1"
      },
      "stack_trace": null
    }

Temporal Aspects

- Events: Events represent discrete, point-in-time occurrences tied to specific spans, marking milestones or notable actions in an application's workflow, such as a user action (e.g., submitting a form, clicking a button) or a system change (e.g., service start or stop). Events provide a snapshot of a state or action at a precise time.

- Logs: Logs serve as a continuous record of system activity, tracking state changes, ongoing conditions, and status reports over time. This makes logs valuable for monitoring application health and identifying recurring issues.

Granularity

- Events: Events are highly granular and focus on specific actions within a trace. They help illuminate individual steps within a larger process, making them ideal for tracing fine details in real-time application operations. For example, an event may log when a particular transaction is initiated, providing detailed attributes about that singular occurrence.

- Logs: Logs provide broader system information, giving context beyond individual actions. They capture general application behavior and are used for understanding overall system status, making them ideal for auditing and identifying larger patterns.

Query and Analysis Capabilities

- Events: Due to their structured nature and association with spans, events are highly searchable and analyzable. They can be filtered and queried with precision, especially when troubleshooting specific trace paths or investigating the flow of a request.

- Logs: Logs are typically less structured and more variable in format, which can make querying and analysis more challenging. However, structured logging in OpenTelemetry improves log filtering and querying capabilities, enhancing their usability for root cause analysis and historical data insights.
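The difference in query effort can be sketched in plain Python. The example below pulls the user ID out of the unstructured and structured log lines shown earlier; the regular expression and the helper names `user_from_text` and `user_from_json` are assumptions made for illustration, not part of OpenTelemetry.

```python
import json
import re
from typing import Optional

unstructured = (
    "2024-01-01 10:00:00 INFO [AuthService] "
    "User 12345 logged in successfully via OAuth"
)
structured = json.dumps({
    "timestamp": "2024-01-01T10:00:00Z",
    "level": "INFO",
    "service": "AuthService",
    "message": "User login successful",
    "context": {"user_id": "12345", "method": "oauth"},
})

# Unstructured text: the query has to encode the exact layout of the message.
LINE_PATTERN = re.compile(
    r"^(?P<ts>\S+ \S+) (?P<level>\w+) \[(?P<service>\w+)\] "
    r"User (?P<user_id>\d+) logged in successfully via (?P<method>\w+)$"
)


def user_from_text(line: str) -> Optional[str]:
    """Return the user ID if the line matches the expected layout, else None."""
    match = LINE_PATTERN.match(line)
    return match.group("user_id") if match else None


def user_from_json(line: str) -> Optional[str]:
    """Return the user ID by key lookup; message wording does not matter."""
    record = json.loads(line)
    return record.get("context", {}).get("user_id")


print(user_from_text(unstructured))
print(user_from_json(structured))
```

If the wording of the unstructured message changes, the pattern silently stops matching, whereas the key lookup keeps working as long as the field name is stable.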

Use Cases for Events vs Logs

Choosing between events and logs depends on your specific monitoring and observability needs. Here are some scenarios where each shines:

Event Use Cases

- User Actions: Tracking specific user interactions like clicks, form submissions, or feature usage. For example, capturing when a user logs in or clicks a "Buy Now" button.

- State Changes: Recording changes in application or system state, such as order status updates or configuration changes. For example, tracking when an order moves from "Pending" to "Shipped."

- Performance Monitoring: Capturing start and end times of critical operations for precise timing analysis. For example, measuring how long it takes for an API call to complete.
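As a rough illustration of the timing use case, the sketch below derives a duration from two timestamped events. The event names, timestamps, and the `duration_ms` helper are hypothetical; in OpenTelemetry itself a span already records its own start and end times, so this only shows the idea of timing from discrete events.

```python
from datetime import datetime

# Hypothetical events for one API call; names and timestamps are illustrative.
events = [
    {"name": "api_call.started", "timestamp": "2024-01-01T10:00:00.000+00:00"},
    {"name": "api_call.completed", "timestamp": "2024-01-01T10:00:00.250+00:00"},
]


def duration_ms(events, start_name, end_name):
    """Return the elapsed time in milliseconds between two named events."""
    by_name = {e["name"]: datetime.fromisoformat(e["timestamp"]) for e in events}
    delta = by_name[end_name] - by_name[start_name]
    return delta.total_seconds() * 1000


elapsed = duration_ms(events, "api_call.started", "api_call.completed")
print(f"API call took {elapsed:.0f} ms")
```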

Log Use Cases

- Debugging: Detailed error messages and stack traces for troubleshooting. For example, logging a stack trace when an exception occurs during order processing.

- Compliance: Maintaining audit trails of system access and data modifications. For example, logging each time a user accesses sensitive data or modifies a critical setting.

- System Health: Ongoing records of resource utilization, uptime, and general system status. For example, logging memory consumption and system load over time to detect potential issues.
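The debugging case can be sketched with Python's standard logging module alone. The `process_order` function and the `orders` logger name are hypothetical, and output is captured in a string buffer only to keep the example self-contained.

```python
import io
import logging

buffer = io.StringIO()  # capture output so the example is self-contained
handler = logging.StreamHandler(buffer)
handler.setFormatter(logging.Formatter("%(levelname)s %(name)s %(message)s"))

logger = logging.getLogger("orders")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False  # keep the record out of the root logger


def process_order(order_id: str, amount: float) -> None:
    """Hypothetical order handler that rejects non-positive amounts."""
    if amount <= 0:
        raise ValueError(f"invalid amount for order {order_id}: {amount}")


try:
    process_order("ORD-123", -5.0)
except ValueError:
    # logger.exception logs at ERROR level and appends the active traceback.
    logger.exception("Order processing failed")

log_output = buffer.getvalue()
print(log_output)
```

The captured text contains the error message followed by the full traceback, which is the kind of detail a single event attribute would not normally carry.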

Complementary Use of Events and Logs for Comprehensive Observability

Combining events and logs provides a full-spectrum view of application behavior:

- Events offer fine-grained insight into specific actions, while logs provide context, helping teams understand why a particular event occurred.

- In error monitoring, events can alert the team to a specific failure (like a payment.failed event), while logs provide the detailed trace needed to debug and resolve the issue.

Below is an example of how events and logs work together to create an integrated observability solution:

First, install the necessary OpenTelemetry packages:

pip install opentelemetry-api opentelemetry-sdk

Then, consider the following Python script that processes a purchase order:

    from opentelemetry import trace
    from opentelemetry.sdk.trace import TracerProvider
    from opentelemetry.sdk.trace.export import ConsoleSpanExporter, BatchSpanProcessor
    import logging

    # Set up basic logging
    logging.basicConfig(level=logging.DEBUG)

    # Set up OpenTelemetry
    provider = TracerProvider()
    processor = BatchSpanProcessor(ConsoleSpanExporter())
    provider.add_span_processor(processor)
    trace.set_tracer_provider(provider)

    # Get a tracer
    tracer = trace.get_tracer(__name__)

    def process_purchase(order_id: str, items: list, amount: float):
        with tracer.start_as_current_span("process_purchase") as span:
            # Add span attributes
            span.set_attributes({
                "order.id": order_id,
                "order.amount": amount,
                "order.items_count": len(items)
            })

            # Add event for purchase completion
            span.add_event("purchase_completed", {
                "order_id": order_id,
                "amount": amount,
                "items": len(items)
            })

            # Log the processing
            logging.info(f"Processing order {order_id}: {len(items)} items, total ${amount:.2f}")
            logging.debug(f"Order {order_id} details: {items}")

    # Example usage
    if __name__ == "__main__":
        # Sample order data
        sample_order = {
            "order_id": "ORD-123",
            "items": ["SKU001", "SKU002", "SKU003"],
            "amount": 99.99
        }

        # Process the purchase
        process_purchase(
            sample_order["order_id"],
            sample_order["items"],
            sample_order["amount"]
        )

This script sets up OpenTelemetry tracing and logging to track the processing of a purchase order, capturing essential details such as order ID, amount, and item count, while exporting the trace information to the console.

When the script is executed, the following output is generated:

    INFO:root:Processing order ORD-123: 3 items, total $99.99
    DEBUG:root:Order ORD-123 details: ['SKU001', 'SKU002', 'SKU003']
    {
        "name": "process_purchase",
        "context": {
            "trace_id": "0x78725386a268c64b5c74cac5abcc1be8",
            "span_id": "0x7ed5611ad0432eea",
            "trace_state": "[]"
        },
        "kind": "SpanKind.INTERNAL",
        "parent_id": null,
        "start_time": "2024-11-01T09:50:01.675075Z",
        "end_time": "2024-11-01T09:50:01.675179Z",
        "status": {
            "status_code": "UNSET"
        },
        "attributes": {
            "order.id": "ORD-123",
            "order.amount": 99.99,
            "order.items_count": 3
        },
        "events": [
            {
                "name": "purchase_completed",
                "timestamp": "2024-11-01T09:50:01.675096Z",
                "attributes": {
                    "order_id": "ORD-123",
                    "amount": 99.99,
                    "items": 3
                }
            }
        ],
        "links": [],
        "resource": {
            "attributes": {
                "telemetry.sdk.language": "python",
                "telemetry.sdk.name": "opentelemetry",
                "telemetry.sdk.version": "1.22.0",
                "service.name": "unknown_service"
            },
            "schema_url": ""
        }
    }

The output logs indicate that the system is processing an order with ID "ORD-123," which contains three items totaling $99.99, and it captures detailed trace information, including the trace ID, span ID, and attributes related to the order. Additionally, it records an event for the purchase completion, showing the timestamp and relevant attributes for tracking within OpenTelemetry.

From the output generated by the provided script, we can see how events and logs work together to create an integrated observability solution in the following ways:

Real-Time Notifications via Events: The event recorded in the output, "name": "purchase_completed", serves as a real-time indicator that a specific action has taken place: the completion of a purchase. This event includes attributes such as order_id, amount, and items, providing immediate insights into the transaction's details. It allows teams to quickly identify that an important milestone in the order processing has been reached, prompting further analysis if needed.

Detailed Context from Logs: The logs provide contextual information that supplements the event. For example, the log entries:

    INFO:root:Processing order ORD-123: 3 items, total $99.99
    DEBUG:root:Order ORD-123 details: ['SKU001', 'SKU002', 'SKU003']

capture not only the action (processing the order) but also specific details about the order, such as the total amount and the items included. This context is crucial for understanding the circumstances surrounding the event and helps teams comprehend the broader operational picture.

Linking Events and Logs for Troubleshooting: If issues arise, such as a payment failure, teams can refer to the recorded event and its associated logs to diagnose the problem. For instance, if the purchase_completed event triggers an alert for an error, the logs will provide information about what happened during processing (e.g., the items involved and the total amount). This linkage enables more effective troubleshooting by allowing teams to follow the trail from the event to its contextual details.

Correlated Data for Enhanced Analysis: The output combines both event and log information, allowing teams to correlate the transaction's success (the event) with the detailed processing steps (the logs). This correlation aids in understanding patterns and behaviors within the application, such as the typical flow of transactions and how different variables (like the number of items) impact outcomes.
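One caveat: the log lines in the output above carry no trace or span ID, so matching them to the span event relies on the order ID and timing. A common way to make the link explicit is to stamp every log record with the active trace context. The sketch below shows the idea with the standard library only; the `current_trace` variable is a hypothetical stand-in for the tracing library's active span context, and the IDs are copied from the sample output.

```python
import io
import logging
from contextvars import ContextVar

# Hypothetical stand-in for the active span context; real IDs would come
# from the tracing library rather than a hand-set variable.
current_trace = ContextVar(
    "current_trace", default={"trace_id": "-", "span_id": "-"}
)


class TraceContextFilter(logging.Filter):
    """Copy the current trace and span IDs onto every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        ids = current_trace.get()
        record.trace_id = ids["trace_id"]
        record.span_id = ids["span_id"]
        return True  # never drop the record, only enrich it


buffer = io.StringIO()
handler = logging.StreamHandler(buffer)
handler.setFormatter(logging.Formatter(
    "%(levelname)s trace_id=%(trace_id)s span_id=%(span_id)s %(message)s"
))
handler.addFilter(TraceContextFilter())

logger = logging.getLogger("purchase")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

# Simulate being inside the process_purchase span from the sample output.
token = current_trace.set({
    "trace_id": "78725386a268c64b5c74cac5abcc1be8",
    "span_id": "7ed5611ad0432eea",
})
logger.info("Processing order ORD-123: 3 items, total $99.99")
current_trace.reset(token)

correlated_line = buffer.getvalue().strip()
print(correlated_line)
```

With the IDs on every line, a backend can join log entries to the span and its events by trace ID instead of guessing from timestamps.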

How OpenTelemetry Handles Events and Logs

OpenTelemetry simplifies telemetry data collection by offering a unified framework for handling events and logs. This standardization improves observability and allows for seamless correlation with traces and metrics, giving developers a holistic view of system behavior.

Handling Events with OpenTelemetry

Events can be managed through two primary mechanisms: span events within the Tracing API and a dedicated Events API.

- Span Events: In OpenTelemetry, events are primarily implemented as span events within the Tracing API. This approach allows events to be recorded as part of spans, which are fundamental units of work that represent operations in your application. By linking events to spans, developers can maintain contextual awareness throughout their tracing, enabling better correlation between different telemetry signals and providing insights into application performance.

- Events API: OpenTelemetry also includes a dedicated Events API, designed to enhance event management capabilities. This API consists of two main components:

  - EventLoggerProvider: This is the entry point of the Events API. It provides access to EventLogger instances, serving as a factory for creating loggers for various components of your application. By centralizing the management of event loggers, the provider simplifies the process of emitting events across different application contexts.

  - EventLogger: The EventLogger is responsible for emitting events. It allows applications to record significant occurrences with structured data, enabling developers to capture and track events such as user interactions, system alerts, or status changes effectively.

It's important to note that the Events API is still under development, so its components and behavior may change; check the current specification and your language SDK before relying on it. While both mechanisms exist in the specification, developers currently work primarily with span events through the Tracing API.

For example:

    from opentelemetry import trace
    from opentelemetry.sdk.trace import TracerProvider
    from opentelemetry.sdk.trace.export import SimpleSpanProcessor, ConsoleSpanExporter

    tracer_provider = TracerProvider()
    tracer = tracer_provider.get_tracer(__name__)

    console_exporter = ConsoleSpanExporter()
    span_processor = SimpleSpanProcessor(console_exporter)
    tracer_provider.add_span_processor(span_processor)

    with tracer.start_as_current_span("user_login") as span:
        # Add attributes to the span itself
        span.set_attribute("event.type", "user_login")
        span.set_attribute("user_id", "12345")
        span.set_attribute("auth_method", "2FA")

        # Add an event with its own attributes
        span.add_event("login_attempt", {
            "status": "success",
            "timestamp": 1679580000000
        })

        # Add another event
        span.add_event("User logged in successfully")

    print("Emitted a user login event")

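In this example, the user_login span carries the user ID and authentication method as span attributes, while the login_attempt span event records the login status in its own attributes. Note that the "timestamp" key here is an ordinary attribute; the event's actual time is assigned when add_event is called. This structure makes events easy to analyze and correlate with other telemetry data.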

Handling Logs with OpenTelemetry

For logs, OpenTelemetry integrates seamlessly with existing logging frameworks like Python's logging module. It enhances these logs by adding consistent metadata and context, allowing developers to correlate logs with traces, metrics, and other telemetry data.

Example of Logging with OpenTelemetry:

import logging

from opentelemetry.instrumentation.logging import LoggingInstrumentor

# Instrument logging to add context and set format

LoggingInstrumentor().instrument(set_logging_format=True)

# Log an informational message with additional context

logging.info("Application started", extra={"app_version": "1.2.3", "env": "production"})

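In this example, the extra argument attaches the application version and environment to the log record, and the instrumentor adds trace context (trace ID, span ID, and service name) to each record. Consistent fields like these make it easier to search, filter, and correlate logs across different systems. LoggingInstrumentor ships in the separate opentelemetry-instrumentation-logging package, which must be installed alongside the API and SDK.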
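Python's built-in formatters only print the fields named in their format string, so values passed through `extra` stay on the record unless a formatter emits them. The following stdlib-only sketch shows one way to surface them as JSON; the `JsonFormatter` class and the `app` logger name are assumptions for illustration and are not part of OpenTelemetry.

```python
import io
import json
import logging

# Attribute names every LogRecord has by default; anything else came from `extra`.
_STANDARD_ATTRS = set(vars(logging.LogRecord("", 0, "", 0, "", (), None))) | {
    "message",
    "asctime",
}


class JsonFormatter(logging.Formatter):
    """Render a record as JSON, including any fields passed via `extra`."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Copy over only the non-standard attributes (the `extra` fields).
        payload.update(
            {k: v for k, v in vars(record).items() if k not in _STANDARD_ATTRS}
        )
        return json.dumps(payload, default=str)


buffer = io.StringIO()  # capture output so the example is self-contained
handler = logging.StreamHandler(buffer)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("app")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

logger.info("Application started", extra={"app_version": "1.2.3", "env": "production"})

entry = json.loads(buffer.getvalue())
print(entry)
```

Because the standard attribute set is computed from a blank record at import time, the formatter keeps working if a newer Python version adds fields to LogRecord.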

Benefits of OpenTelemetry's Unified Approach to Telemetry Data

OpenTelemetry’s unified approach to handling events and logs offers several significant benefits:

- Comprehensive Observability: By combining events, logs, and traces within a single framework, OpenTelemetry enables a holistic view of application behavior and performance. This comprehensive observability helps teams quickly identify issues and understand their root causes.

- Simplified Data Management: A unified data model reduces the complexity of managing different telemetry data types, making it easier for teams to collect, store, and analyze data without needing to juggle multiple systems.

- Improved Correlation: With standardized attributes and context propagation, developers can correlate logs with traces and events, enhancing troubleshooting.

- Flexibility and Extensibility: OpenTelemetry's architecture allows organizations to extend and customize their telemetry implementations, adapting to specific needs without losing the benefits of standardization and integration.

- Standardization of Data Models: OpenTelemetry promotes standardization in data models for both events and logs, ensuring consistency across telemetry data. This uniformity facilitates easier interpretation and integration with analytics tools, leading to more accurate and actionable insights.

- Seamless Integration with Backend Systems: OpenTelemetry is designed to integrate smoothly with various backend systems and analysis tools, enabling teams to leverage existing infrastructure while enhancing their observability capabilities. This integration simplifies the process of gathering insights and reporting on application performance, ensuring that organizations can maintain robust monitoring solutions without significant overhead.

Best Practices for Implementing Events and Logs with OpenTelemetry

To maximize the value of your telemetry data, consider these best practices:

Choose Wisely: Use events for specific, structured occurrences and logs for broader, contextual information.

Consistent Naming: Establish a clear naming convention for events and log messages to ensure consistency across your application.

Structured Data: Even for logs, use structured data where possible to facilitate easier analysis:

    logging.info("User action", extra={"action": "login", "user_id": "12345"})

Avoid Duplication: Don't repeat information in both events and logs. Instead, use them to complement each other.

Performance Considerations: Be mindful of the performance impact, especially when emitting high-frequency events or verbose logs.

Context Propagation: Utilize OpenTelemetry's context propagation to link events and logs with relevant traces and spans.

Regular Review: Periodically review your event and log implementations to ensure they're providing valuable insights without unnecessary noise.
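For the performance point above, one simple way to tame a high-frequency message is to sample it before it reaches the handler. The sketch below uses a standard logging filter that passes the first record and then every Nth one per message template; the `EveryNthFilter` class, the `cache` logger, and the sampling rate are hypothetical choices, and real deployments may prefer sampling in the collector or backend instead.

```python
import io
import logging
from collections import defaultdict


class EveryNthFilter(logging.Filter):
    """Pass the first record, then every Nth record, per message template."""

    def __init__(self, n: int):
        super().__init__()
        self.n = n
        self.counts = defaultdict(int)

    def filter(self, record: logging.LogRecord) -> bool:
        # Key on the unformatted template so differing arguments share a counter.
        key = record.msg
        self.counts[key] += 1
        return (self.counts[key] - 1) % self.n == 0


buffer = io.StringIO()  # capture output so the example is self-contained
handler = logging.StreamHandler(buffer)
handler.addFilter(EveryNthFilter(5))

logger = logging.getLogger("cache")  # hypothetical logger name
logger.setLevel(logging.DEBUG)
logger.addHandler(handler)
logger.propagate = False

for i in range(10):
    logger.debug("cache miss for key %s", f"item-{i}")

emitted = buffer.getvalue().splitlines()
print(emitted)
```

Ten calls produce two lines here, which keeps a record that the condition occurred without paying for every occurrence.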

Leveraging SigNoz for OpenTelemetry Events and Logs

SigNoz is an open-source observability platform that enables developers to monitor their applications effectively. With built-in support for OpenTelemetry, SigNoz facilitates the seamless collection, visualization, and analysis of telemetry data, giving you real-time insights into your application’s performance.

How SigNoz Simplifies the Collection and Analysis of Events and Logs

With SigNoz, users can easily capture a wide range of telemetry data, including traces, metrics, and logs, through OpenTelemetry's robust SDKs. This integration allows for a unified approach to observability, where events and logs are not treated as isolated entities but are instead part of a cohesive telemetry ecosystem. SigNoz provides powerful query capabilities and visualization tools that help teams analyze performance issues and user interactions in real-time, significantly reducing the time to identify and resolve incidents.

Getting Started with SigNoz Cloud

SigNoz Cloud offers a managed observability platform that simplifies the setup process, removing the need to maintain your infrastructure. It provides powerful tools for real-time monitoring and historical analysis, helping you gain insights into your application's health and performance.

Sign Up for SigNoz Cloud: Create your account on SigNoz Cloud to access its powerful observability platform. This managed service eliminates the hassle of infrastructure maintenance, allowing you to focus on deriving insights from your data.

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

Explore the Sample Application: SigNoz comes with a sample application pre-configured to help you explore its capabilities. This application allows you to visualize how telemetry data is captured and displayed, making it easier to understand the features available.

Instrumentation: For detailed guidance on instrumenting your applications, refer to the blog post on Instrumenting Applications with OpenTelemetry.

Data Visualization: Once your application is instrumented and sending data, you can utilize SigNoz’s powerful analytics features. Check guides on SigNoz to learn how to set up dashboards tailored to your specific needs, allowing for effective monitoring of key performance indicators.

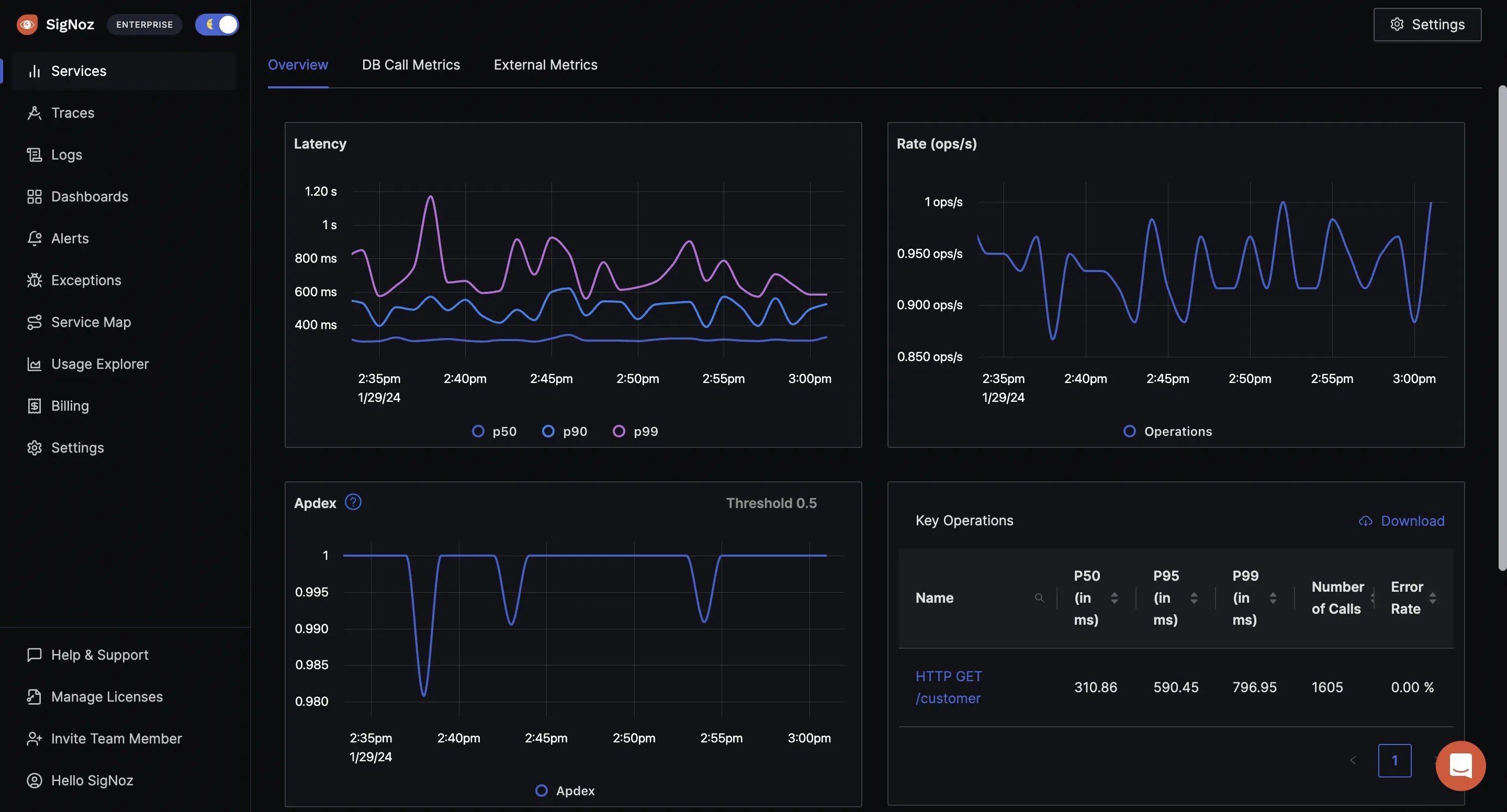

SigNoz Analysis Dashboard


Future Trends in OpenTelemetry Events and Logs

As observability practices evolve, several trends are shaping the future of OpenTelemetry events and logs:

- AI-Driven Analysis: Machine learning algorithms will increasingly be used to analyze events and logs, providing predictive insights and anomaly detection.

- Real-Time Processing: The ability to process and act on events and logs in real-time will become more critical, enabling faster response to issues.

- Enhanced Correlation: Future OpenTelemetry versions may offer tighter integration between events, logs, traces, and metrics, providing a more holistic view of system behavior.

- Automated Instrumentation: Tools that automatically instrument applications for events and logs will become more sophisticated, reducing the manual effort required for comprehensive observability.

- Privacy and Compliance: As data regulations evolve, OpenTelemetry will likely incorporate more advanced features for data anonymization and compliance management in events and logs.

Key Takeaways

- Events capture specific, structured occurrences, while logs provide broader, often less structured context.

- Choose events for precise tracking of actions and state changes; use logs for debugging and continuous monitoring.

- OpenTelemetry offers a standardized approach to both events and logs, simplifying data collection and analysis.

- Effective observability strategies often combine events and logs for comprehensive insights.

- Tools like SigNoz can significantly streamline the management and analysis of OpenTelemetry events and logs.

- Future trends point towards more integrated, AI-driven approaches to event and log analysis in observability practices.

FAQs

What's the main difference between OpenTelemetry events and logs?

Events are structured, point-in-time occurrences, while logs are continuous, often semi-structured records of system activity. Events are typically more specific and easier to query, while logs provide a broader context.

Can I use both events and logs in my OpenTelemetry implementation?

Yes, and it's often beneficial to do so. Events and logs complement each other, providing both specific, structured data points and broader contextual information.

How do OpenTelemetry events relate to distributed tracing?

Events can be associated with specific spans in a distributed trace, providing additional context about what occurred during that part of the transaction. This association helps in understanding the sequence and timing of events within a larger operation.

Are there performance implications when choosing between events and logs?

Yes, though the impact depends mostly on volume. Events are typically emitted less often than logs, so they tend to add less overhead, while verbose logging can have a more significant impact on performance. It's important to strike a balance based on your observability needs and system resources.