OpenTelemetry vs Loki - Choosing the Right Observability Tool

In the ever-evolving landscape of cloud-native applications, observability has become a critical aspect of maintaining and troubleshooting complex systems. As you navigate this terrain, you'll encounter two prominent tools: OpenTelemetry and Loki. But how do they compare, and which one should you choose for your observability needs? This article dives deep into the world of OpenTelemetry and Loki, exploring their features, use cases, and how they stack up against each other.

What are OpenTelemetry and Loki?

OpenTelemetry is an open-source observability framework designed for cloud-native software. It provides a comprehensive approach to collecting, processing, and exporting telemetry data from your applications and infrastructure, including metrics, traces, and logs.

Loki, on the other hand, is a log aggregation system inspired by Prometheus. It's built with efficiency and cost-effectiveness in mind, focusing primarily on log management and analysis.

While OpenTelemetry casts a wide net over the observability landscape, Loki zeroes in on logs. This fundamental difference shapes their features, use cases, and overall approach to observability.

Key Features of OpenTelemetry

OpenTelemetry boasts several features that make it a powerful choice for comprehensive observability:

- Vendor-neutral data collection: You can collect and transmit data without being tied to a specific backend or vendor.

- Multi-language support: OpenTelemetry works with numerous programming languages and frameworks, making it versatile for diverse tech stacks.

- Automatic instrumentation: Many languages offer auto-instrumentation, reducing the manual work required to implement observability.

- Standardized data model: OpenTelemetry uses a consistent data model across different types of telemetry, simplifying data correlation and analysis.

- Extensible SDKs and APIs: OpenTelemetry offers extensible SDKs and APIs, allowing developers to customize how telemetry data is captured and processed.

OpenTelemetry Collector

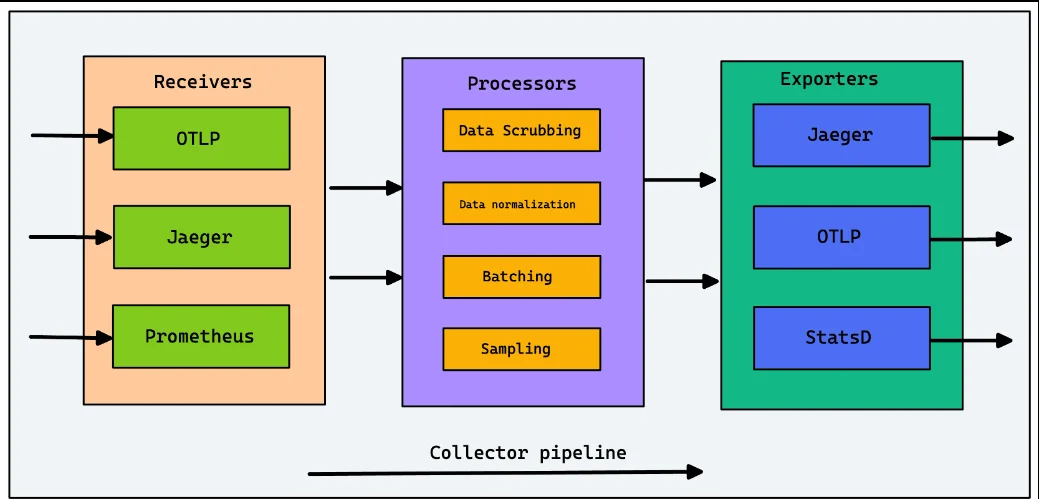

The OpenTelemetry Collector plays a crucial role in the OpenTelemetry ecosystem. It acts as a vendor-agnostic way to receive, process, and export telemetry data.

Here's what makes it special:

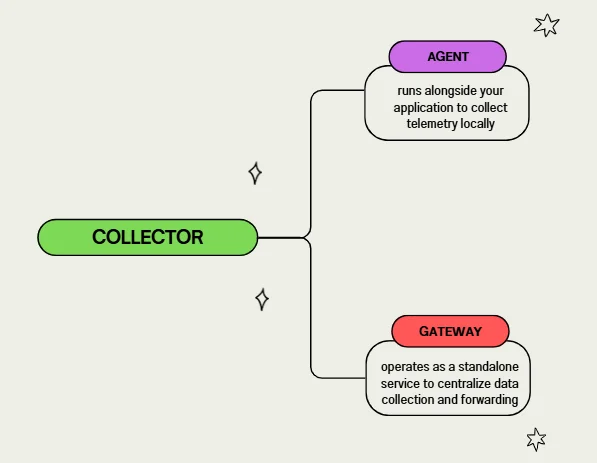

Flexible deployment: The Collector can be deployed in two main modes: as an agent running alongside your application to collect telemetry locally, or as a gateway, operating as a standalone service to centralize data collection and forwarding. This flexibility ensures that the Collector fits seamlessly into various infrastructure setups, whether distributed or centralized.

Advanced data processing: Beyond simply forwarding data, the Collector allows for robust data processing. You can modify, filter, and enrich telemetry data in-flight, ensuring that only relevant, cleaned, and enhanced data is sent to your backend systems. This helps reduce noise and improve the quality of your observability data.

Extensibility: The Collector’s modular design supports a wide array of receivers, processors, and exporters. This means you can easily customize it to meet your specific telemetry needs, adding functionality for specialized data pipelines, or integrating with multiple backends in parallel.

Unified telemetry pipeline: By handling traces, metrics, and logs in a single pipeline, the OpenTelemetry Collector simplifies the complexity of managing multiple telemetry streams. This makes it easier to correlate different types of data and gain a more holistic view of system performance.
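To make the points above more concrete, a Collector running in agent mode might use a configuration along these lines. This is a minimal sketch rather than a recommended production setup: the gateway address `gateway-collector:4317` and the `environment` attribute are hypothetical, and the `attributes` processor is assumed to be available (it ships with the Collector contrib distribution).

```yaml
# Hypothetical agent-mode Collector configuration (illustrative sketch)
receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318

processors:
  # Enrich telemetry in flight with an illustrative attribute
  attributes:
    actions:
      - key: environment
        value: production
        action: insert
  # Group telemetry into batches before export
  batch:

exporters:
  # Forward everything to a gateway Collector (address is an assumption)
  otlp:
    endpoint: gateway-collector:4317
    tls:
      insecure: true

service:
  pipelines:
    # One receiver/processor/exporter chain per signal type
    traces:
      receivers: [otlp]
      processors: [attributes, batch]
      exporters: [otlp]
    metrics:
      receivers: [otlp]
      processors: [attributes, batch]
      exporters: [otlp]
    logs:
      receivers: [otlp]
      processors: [attributes, batch]
      exporters: [otlp]
```

All three signals share the same receiver and exporter here, which is what lets a single Collector act as the unified pipeline described above.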

Loki's Unique Approach to Logging

Loki is a log management solution from Grafana Labs, designed to streamline log storage and retrieval without the heavy indexing used by traditional log systems, making it an efficient choice for teams focused on scalable observability.

It takes a different approach to log management, focusing on efficiency and cost-effectiveness:

Minimal Indexing Architecture: Unlike conventional log management systems that index the full content of logs, Loki uses a minimalist approach by only indexing metadata, such as labels. This means that instead of maintaining massive indexes of all log data, Loki limits its indexing to crucial attributes (like tenant IDs, log labels, and timestamps).

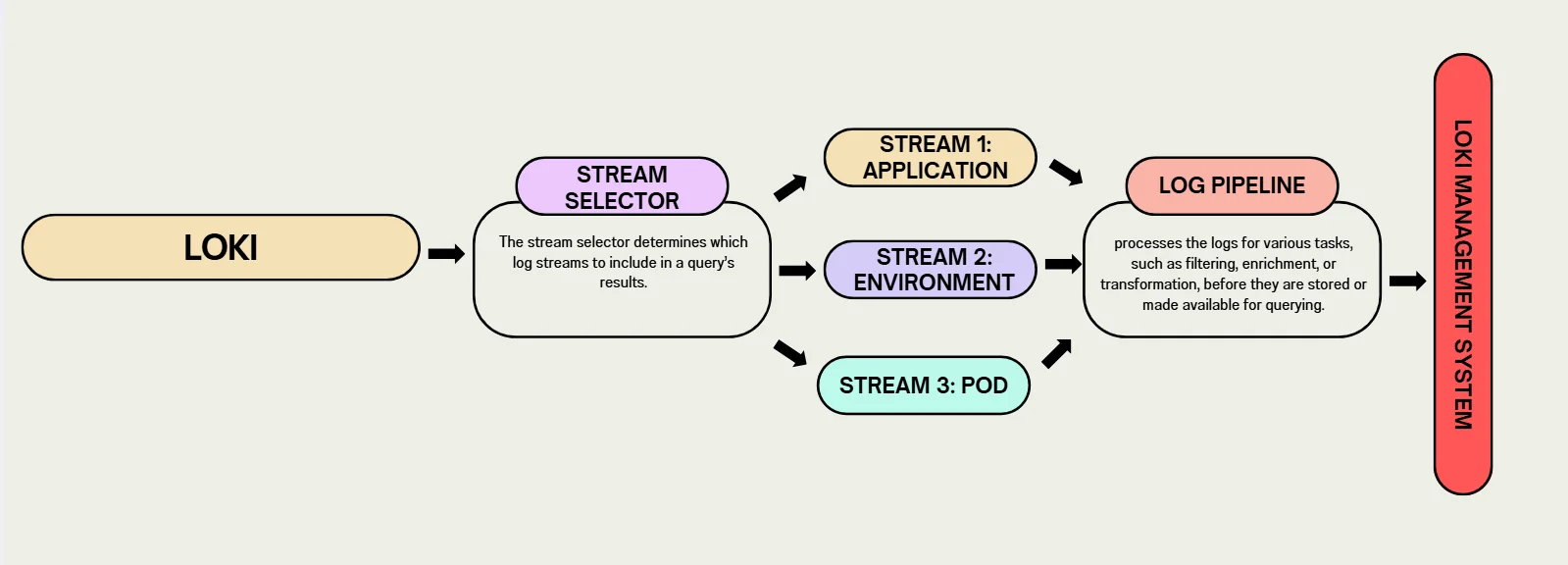

Log Streams and Labels: Similar to the time-series data model in Prometheus, Loki organizes logs into streams, which are groups of logs associated with a unique set of labels. These labels (e.g., `application`, `environment`, or `pod`) are key to filtering, querying, and analyzing logs. By allowing you to select logs based on their labels rather than their content, Loki narrows the search to the relevant streams first, making it faster to retrieve specific logs without sifting through an entire log file. This structure is especially helpful in modern cloud-native environments where logs from distributed microservices need to be efficiently tracked and analyzed.

Multi-Tenancy Support: Loki is designed with multi-tenancy in mind, enabling you to manage logs from multiple sources (different teams, environments, or applications) while ensuring isolation between them. When running in multi-tenant mode, Loki uses the `X-Scope-OrgID` HTTP header to assign a tenant ID to each log stream. For instance, if a company has multiple teams, such as Development and Marketing, each team can send logs with their respective `X-Scope-OrgID` values, like `dev-team` for Development and `marketing-team` for Marketing. This tenant ID not only partitions data in both memory and long-term storage but also ensures that different log streams are kept isolated from each other. This feature is particularly valuable for large organizations or managed services, where different departments or customers need separate logging environments. In scenarios where Loki is not running in multi-tenant mode, the header is ignored, and the system assigns a default tenant ID (`fake`), which will appear in the index and stored chunks.

Cost-Effective Storage: One of the highlights of Loki's design is its use of an object storage backend, a type of storage built to hold large volumes of unstructured data efficiently. Loki supports cloud storage services like Amazon S3, Google Cloud Storage, and Azure Blob Storage, making it both cost-effective and scalable. Using the index shipper approach (introduced in Loki 2.0), Loki keeps index and chunk files as separate objects in object storage, allowing it to handle massive log volumes without significant increases in operational costs while relying on the object store for high availability and resilience.
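The multi-tenancy behavior described above can be sketched with two small configuration fragments. This is an illustration under assumptions: the Loki address `loki.example.internal` is hypothetical, and the tenant name `dev-team` is taken from the example in the text.

```yaml
# Loki configuration fragment: run Loki in multi-tenant mode
auth_enabled: true
---
# Promtail configuration fragment: tag pushed logs with a tenant ID
clients:
  - url: http://loki.example.internal:3100/loki/api/v1/push  # hypothetical address
    tenant_id: dev-team  # sent to Loki as the X-Scope-OrgID header
```

A second Promtail instance run by the Marketing team would use the same fragment with `tenant_id: marketing-team`, and Loki would keep the two sets of streams isolated.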

Indexing Efficiency: Conventional Systems vs. Loki

Conventional log management systems often index a broader set of attributes, including the full content of logs, user IDs, and session data. In contrast, Loki employs a minimalist indexing strategy, focusing solely on crucial metadata such as labels (e.g., tenant IDs, log labels, and timestamps).

| Factors | Conventional Systems | Loki |
|---|---|---|
| Storage Costs | The need to maintain extensive indexes results in higher storage requirements. | By indexing only a smaller subset of attributes, Loki can significantly lower storage costs. |
| CPU Usage | More complex indexing processes demand significant CPU resources, impacting overall performance. | With less data to process, Loki reduces CPU usage, allowing for faster querying and log retrieval. |

Promtail: Loki's Log Shipper

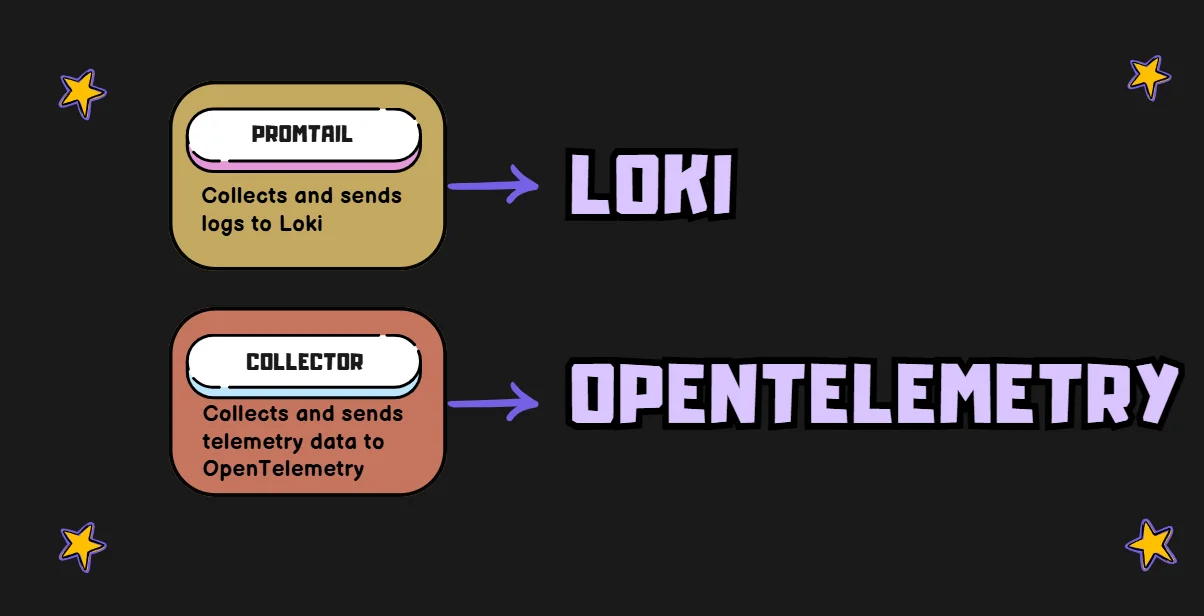

Promtail is to Loki what the Collector is to OpenTelemetry: it gathers logs and sends them to Loki. Its primary sources are local log files and the systemd journal, with additional scrape targets such as Windows event logs. Promtail's main functions include discovering targets, attaching labels to log streams, and pushing them to a Loki instance.

Key features include:

- Log tailing: Promtail can tail logs from various sources, such as Windows events, log files, and `systemd` journals (the Linux logging system that centralizes and organizes logs from system services and applications). This ensures comprehensive log collection across different environments.

- Label addition: It automatically adds labels to your logs based on file paths or custom rules you define, such as application name, environment (like `production` or `development`), or log level (such as `error` or `info`), making it easier to organize and query logs efficiently in Loki.

- Flexible discovery: Similar to Prometheus, Promtail uses scrape configs for discovering log sources. A `scrape config` (scrape configuration) is a set of rules or instructions that tells Promtail where and how to find log sources in your system. It defines the log locations to monitor, how to parse these logs, and any filters to apply. For instance, a config might specify a path like `C:\logs\app.log` to capture logs from a specific application. This flexibility allows it to adapt to different environments and configurations, ensuring it captures logs from all relevant sources.

- Push model: Instead of relying on traditional pull-based systems that periodically retrieve or “pull” log data from sources on a fixed schedule, Promtail uses a push model to send logs to Loki in real-time. This ensures faster ingestion and processing of logs, making it more responsive to real-time issues. Promtail can also be configured to receive logs from another Promtail or any Loki client by exposing the Loki Push API with the `loki_push_api` scrape config. We’ll learn more about this in the next section.

- Support for compressed files: Promtail now has native support for ingesting compressed files. If a discovered target has decompression configured, Promtail will lazily decompress the compressed file and push the parsed data to Loki.
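The label and discovery features listed above could be combined in a scrape config roughly like the following. This is a sketch under assumptions: the job name, the `application` and `environment` label values, and the path `/var/log/myapp/*.log` are all hypothetical, and the regular expression assumes the log level appears as a plain word in each line.

```yaml
scrape_configs:
  - job_name: myapp  # hypothetical job name
    static_configs:
      - targets:
          - localhost
        labels:
          application: myapp  # illustrative static labels
          environment: production
          __path__: /var/log/myapp/*.log  # hypothetical log location
    pipeline_stages:
      # Extract the log level from each line into a named capture group
      - regex:
          expression: '(?P<level>info|warn|error)'
      # Promote the extracted value to a Loki label
      - labels:
          level:
```

Static labels identify where a stream came from, while the `regex` and `labels` stages derive a label from the log line itself. Keeping derived labels to a small, fixed set of values (such as log levels) avoids creating an excessive number of streams.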

Promtail Mock Setup

By configuring the loki_push_api in Promtail, you can set up a Promtail instance to act as an intermediary that receives logs from other Promtail instances or Loki-compatible clients.
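A receiving Promtail instance of this kind might be configured along these lines. This is only a sketch: the job name, label value, and port numbers are illustrative assumptions.

```yaml
scrape_configs:
  - job_name: push_receiver  # hypothetical name
    loki_push_api:
      server:
        http_listen_port: 3500  # port numbers are illustrative
        grpc_listen_port: 3600
      labels:
        pushserver: push_receiver  # label added to every received entry
```

Other Promtail instances would then list this instance in their `clients` section, using a URL of the form `http://<receiver-host>:3500/loki/api/v1/push`, and the receiver forwards what it collects to its own configured Loki clients.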

To set up a Promtail configuration file for sending logs from a standalone host:

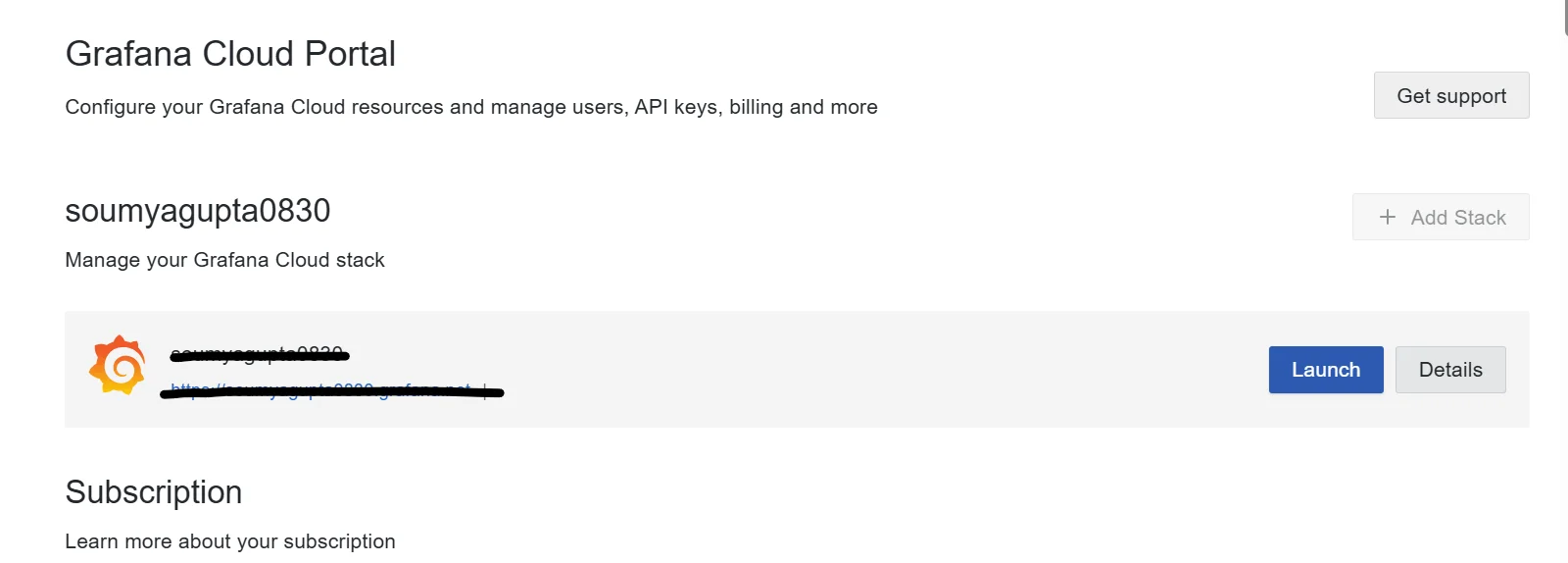

Log into Grafana Cloud: Access your Grafana Cloud account and select Launch.

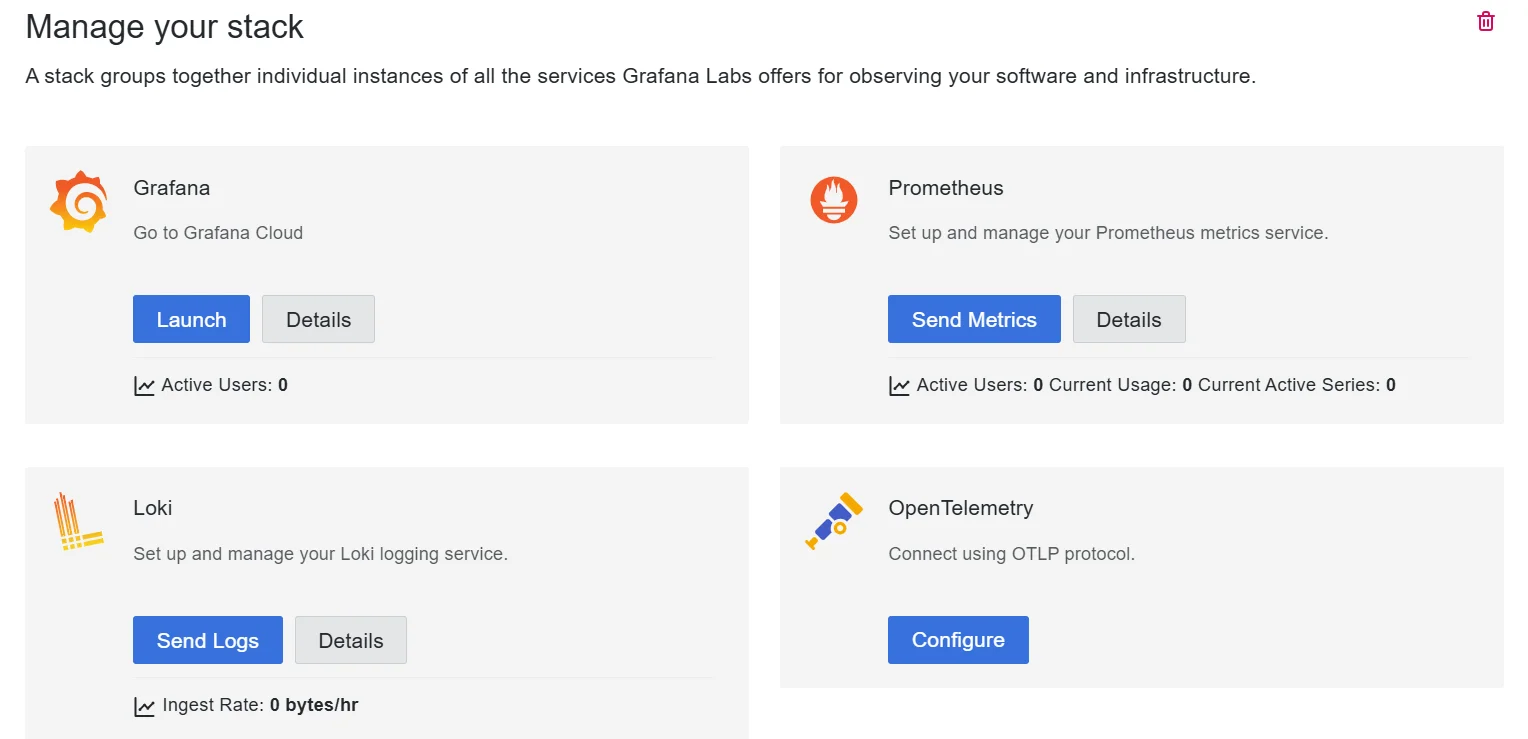

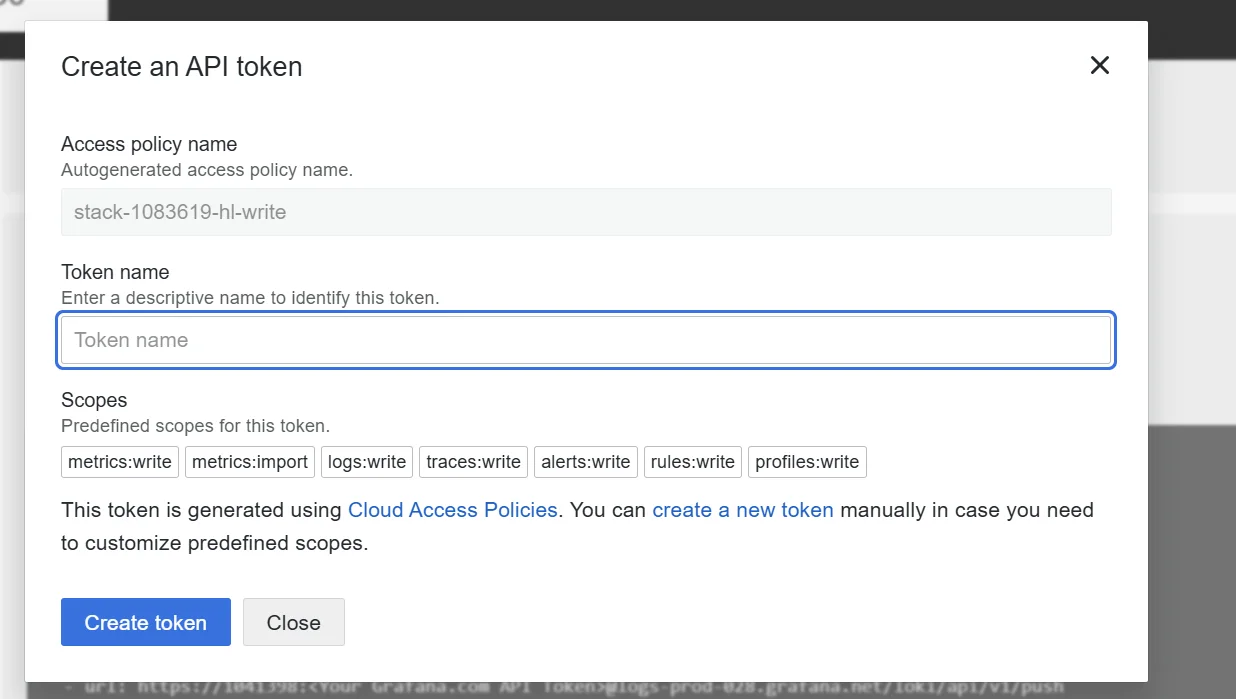

2. Go to the Manage Your Stack Page: Once there, choose Loki and select Send Logs. 3. Select Standalone Host: Choose the standalone host option and assign a name to the token that this integration will use.

3. Select Standalone Host: Choose the standalone host option and assign a name to the token that this integration will use. 4. Review and Save the Configuration File: Scroll down to view the configuration file. Replace

4. Review and Save the Configuration File: Scroll down to view the configuration file. Replace

<GrafanaCloudToken>in the config below with the generated API token and make sure to save it topromtail/config.yaml.

server:
  http_listen_port: 0
  grpc_listen_port: 0

positions:
  filename: /tmp/positions.yaml

clients:
  - url: https://<GrafanaCloudToken>@logs-prod-028.grafana.net/loki/api/v1/push

scrape_configs:
  - job_name: system
    static_configs:
      - targets:
          - localhost
        labels:
          job: varlogs
          __path__: /var/log/*.log

Comparing OpenTelemetry and Loki

Now that we've explored both tools, let's compare them across several dimensions:

| Factors | OpenTelemetry | Loki |
|---|---|---|
| Scope | Covers metrics, traces, and logs for full-stack observability. | Focuses primarily on log management and analysis. |
| Data Model | Uses a unified data model for all telemetry types. | Employs a log stream model with labels, similar to Prometheus. |
| Scalability | Designed for distributed tracing, can handle large-scale systems. | Built for horizontal scaling, efficient for high-volume log ingestion. |
| Ecosystem Integration | Wide support across various observability tools and backends. | Tightly integrated with Grafana and the Prometheus ecosystem. |

Use Cases: When to Choose OpenTelemetry or Loki

Choosing between OpenTelemetry and Loki depends on your specific needs:

OpenTelemetry excels in:

- Distributed systems requiring end-to-end tracing: If you're working with microservices or distributed architectures, OpenTelemetry is ideal for capturing and correlating traces across services to diagnose performance bottlenecks and ensure seamless user experiences.

- Environments needing a unified approach to metrics, traces, and logs: OpenTelemetry offers a one-stop solution for full observability, making it easier to manage all telemetry data types (metrics, traces, and logs) in one place. This is crucial for teams looking for a standardized approach to monitoring and debugging.

- Multi-language applications where consistent observability is crucial: OpenTelemetry supports a wide range of programming languages, ensuring you have consistent telemetry across different components of your stack, no matter what technologies are in play.

Loki shines in:

- Cost-effective log management at scale: For teams focused on logs, Loki’s efficient storage and minimal, label-only index provide a cost-effective solution, especially for high-volume log ingestion without the need for full-text indexing.

- Setups already using Grafana for visualization: If you’re already using Grafana for metrics visualization, integrating Loki for log management is a natural extension. It allows you to view logs alongside metrics in the same dashboard, making it easier to correlate the two and troubleshoot issues faster.

- Scenarios where quick log querying and correlation are primary concerns: With Loki’s label-based querying, you can quickly filter and search through logs to pinpoint relevant data. This makes it ideal for scenarios where rapid log retrieval and analysis are crucial, such as during incident response.

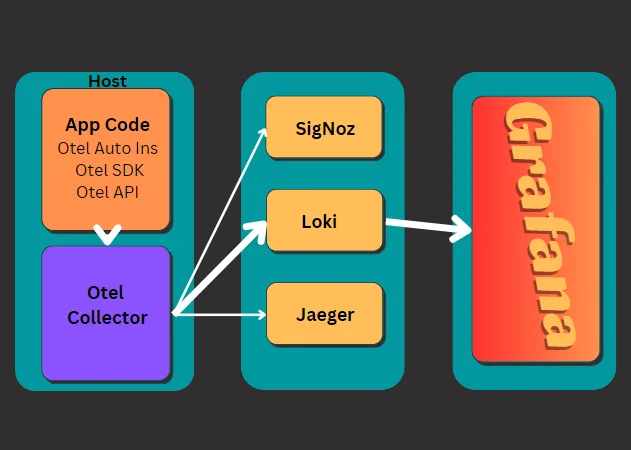

Consider a hybrid approach for teams looking to get the best of both worlds. You can use OpenTelemetry for comprehensive data collection across traces, metrics, and logs, while using Loki as a backend for log storage and querying. This way, you leverage OpenTelemetry’s full observability capabilities while keeping your log management efficient and cost-effective with Loki.

Performance and Scalability Considerations

When evaluating OpenTelemetry and Loki for your observability needs, consider these performance aspects:

| Factors | OpenTelemetry | Loki |
|---|---|---|
| Resource Usage | Can have higher overhead due to its comprehensive approach. | Generally more lightweight, focusing solely on log management. |
| Scaling Strategies | Scale the Collector horizontally for high-volume data processing. | Use a distributed deployment model with separate read and write paths. |
| Query Performance | Trace queries can be complex but powerful for debugging. | Label-based log queries are typically fast because only labels are indexed; filtering on log content scans the matching streams. |
| Performance Tuning | Use sampling in OpenTelemetry to reduce data volume for high-traffic services. | Design your label schemes carefully to balance query flexibility and performance. |
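The sampling tip in the table could be applied in the Collector with a fragment like the one below. This is a sketch under assumptions: it uses the `probabilistic_sampler` processor from the Collector contrib distribution, the 10% rate is an arbitrary illustrative value, and the `otlp` receiver, `batch` processor, and `otlp` exporter are assumed to be defined elsewhere in the same configuration.

```yaml
processors:
  # Keep roughly 10% of traces (illustrative value, tune per service)
  probabilistic_sampler:
    sampling_percentage: 10

service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [probabilistic_sampler, batch]
      exporters: [otlp]
```

Placing the sampler before `batch` means dropped traces never reach the batching or export stages, which is where the volume savings come from.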

Implementing OpenTelemetry and Loki Together

Integrating OpenTelemetry with Loki can provide a powerful observability solution.

When logs are ingested into Loki via the OpenTelemetry protocol (OTLP) ingestion endpoint, part of the data is stored as Structured Metadata. To ensure the logs are accepted, you need to enable allow_structured_metadata in your Loki configuration file. Otherwise, Loki will reject the log payload as malformed.

limits_config:
  allow_structured_metadata: true

Let’s take an in-depth look at how it’s done:

- Use the OpenTelemetry Collector: Set up the OpenTelemetry Collector to gather telemetry data from your applications, including logs, metrics, and traces. The Collector acts as an intermediary, standardizing the way telemetry is collected and processed.

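- Configure an Exporter for Loki: In the Collector configuration, add an exporter that sends logs to Loki. With the OTLP ingestion endpoint described above, this is the Collector's OTLP/HTTP exporter pointed at Loki; older setups use the dedicated Loki exporter from the Collector contrib distribution. Either way, the application logs collected by OpenTelemetry are stored in Loki, where only their labels are indexed.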

- Visualize with Grafana: Leverage Grafana to visualize and query logs stored in Loki, alongside metrics and traces collected from other backends. This unified view allows you to easily correlate logs with performance metrics and traces, making troubleshooting faster and more intuitive.
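The Collector side of these steps can be sketched as follows. This example is an assumption-laden illustration: it sends logs through the `otlphttp` exporter to Loki's OTLP ingestion endpoint (the path discussed earlier in this section) rather than through the dedicated Loki exporter, and the address `loki.example.internal:3100` is hypothetical.

```yaml
receivers:
  # Applications send logs to the Collector over OTLP
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317

processors:
  batch:

exporters:
  # Loki accepts OTLP logs under the /otlp path (host is an assumption)
  otlphttp:
    endpoint: http://loki.example.internal:3100/otlp

service:
  pipelines:
    logs:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlphttp]
```

Traces and metrics would get their own pipelines with exporters for whichever backends store them; only the logs pipeline needs to point at Loki.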

Integrating OpenTelemetry and Loki provides the flexibility to gather and manage all your telemetry data in a standardized way, while ensuring cost-effective and scalable log storage. OpenTelemetry’s broad support for different telemetry types and Loki’s log-centric efficiency make this combination highly effective for modern observability stacks.
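For the Grafana step, Loki can be registered as a data source through a provisioning file. The fragment below is a minimal sketch, assuming Grafana's file-based data source provisioning and a hypothetical Loki address.

```yaml
apiVersion: 1

datasources:
  - name: Loki
    type: loki
    access: proxy
    url: http://loki.example.internal:3100  # hypothetical address
```

Once the data source is in place, logs shipped through the Collector can be queried in Grafana next to dashboards backed by other data sources.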

Future Trends in Observability Tools

The observability landscape is rapidly evolving. Here are some trends to watch:

- OpenTelemetry Adoption: As OpenTelemetry continues to mature, it’s increasingly becoming the industry standard for collecting and correlating telemetry data. More vendors are building native support for OpenTelemetry, streamlining integration into various observability stacks. This widespread adoption will likely lead to greater interoperability between tools and easier onboarding for organizations.

- Loki Evolution: Loki is evolving beyond simple log management. With ongoing updates, it’s adding more advanced features like log compaction, improved query performance, and deeper integrations with tools like Grafana and Prometheus. These enhancements are making Loki an even more attractive option for teams focused on cost-effective and scalable log storage.

- Convergence of Telemetry Types: The traditional separation between logs, metrics, and traces is gradually dissolving. As observability platforms become more sophisticated, they offer integrated analysis across all telemetry types, allowing teams to view logs, metrics, and traces in a single context. This convergence enables faster troubleshooting and a more holistic understanding of system behavior.

- AI in Observability: Artificial intelligence and machine learning are playing an increasingly critical role in observability. Tools that leverage AI can now detect anomalies, predict potential system failures, and even automate root cause analysis. As AI continues to advance, we’ll see more automated troubleshooting, helping teams resolve incidents faster and with less manual effort.

- Unified Observability Dashboards: The trend toward unified dashboards is gaining momentum, with platforms integrating data from logs, metrics, and traces into a single pane of glass. Tools like Grafana are making it easier to visualize data from various sources, providing teams with a more cohesive view of system health and performance.

Enhancing Observability with SigNoz

When considering your observability strategy, SigNoz stands out as an open-source, OpenTelemetry-native Application Performance Monitoring (APM) tool. It seamlessly integrates with OpenTelemetry, making it a great option for teams looking for a full-stack observability solution. Here’s why SigNoz can be a game-changer:

- Full Compatibility with OpenTelemetry: SigNoz is built to work natively with OpenTelemetry, meaning you can collect and analyze traces, metrics, and logs without worrying about complex integrations.

- Unified Dashboard for Telemetry Data: With SigNoz, you get a single dashboard that offers a consolidated view of metrics, traces, and logs. This unified approach eliminates the need to juggle multiple tools and makes it easier to monitor and troubleshoot across your entire stack.

- Advanced Querying and Visualization: SigNoz provides powerful querying features, enabling you to dig deep into telemetry data for more insightful analysis. Its intuitive visualization capabilities help you quickly spot anomalies, identify bottlenecks, and optimize system performance.

- Easy Setup and Deployment: One of SigNoz’s standout features is its straightforward setup process. Whether you’re deploying on-premises or in the cloud, SigNoz offers flexible deployment options that suit various environments and scalability needs.

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

Key Takeaways

- OpenTelemetry provides a comprehensive, vendor-neutral observability framework.

- Loki offers efficient, cost-effective log management with strong Grafana integration.

- Your choice between OpenTelemetry and Loki depends on your specific use cases and existing infrastructure.

- Combining OpenTelemetry and Loki can create a powerful, full-stack observability solution.

- SigNoz offers an OpenTelemetry-native option for unified observability.

FAQs

Can OpenTelemetry and Loki be used together?

Yes, OpenTelemetry and Loki can be used together. You can configure the OpenTelemetry Collector to send logs to Loki, combining OpenTelemetry's data collection capabilities with Loki's efficient log storage and querying.

How does OpenTelemetry handle logs compared to Loki?

OpenTelemetry treats logs as one part of a unified telemetry data model, alongside metrics and traces. Loki, on the other hand, is purpose-built for log management, offering efficient storage and querying specifically for log data.

Is Loki only suitable for Grafana-based setups?

While Loki integrates seamlessly with Grafana, it's not limited to Grafana-based setups. Loki exposes an HTTP API that can be used by other visualization tools or custom applications. However, the Grafana integration is a significant strength of Loki.

What are the main differences in query languages between OpenTelemetry and Loki?

OpenTelemetry doesn't define its own query language; the query language depends on the backend you're using to store and analyze the data. Loki uses LogQL, a query language inspired by PromQL (Prometheus Query Language), which is designed specifically for querying and analyzing log data.