Overview

This document walks through sending Google Cloud Storage logs directly to SigNoz.

Prerequisites

- A Google Cloud account with administrative or Logging Admin privileges.

- Access to a project in GCP

Setup

Get started with Cloud Storage Audit Log Configuration

Enable the Google Cloud Storage Audit Logs using the following steps:

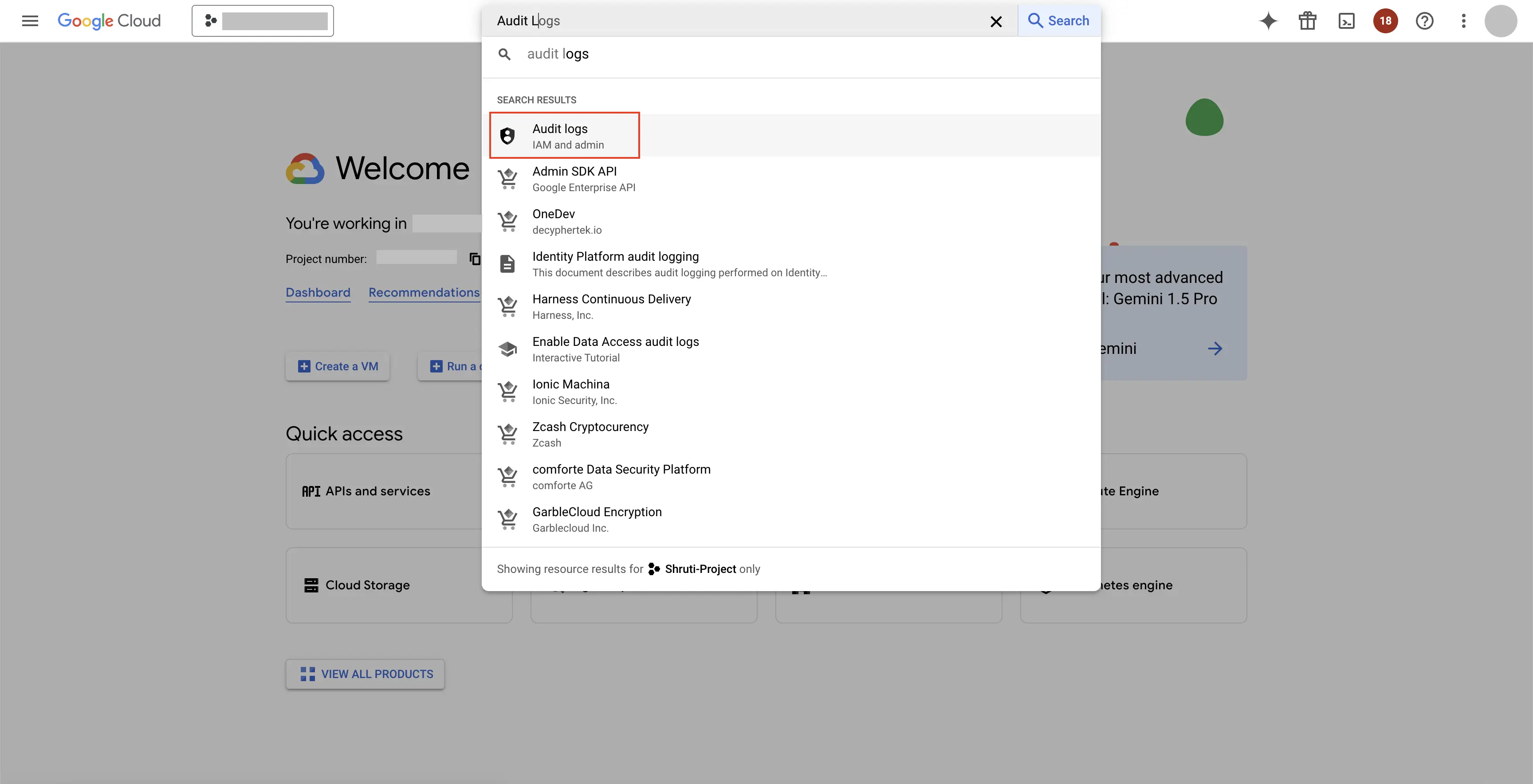

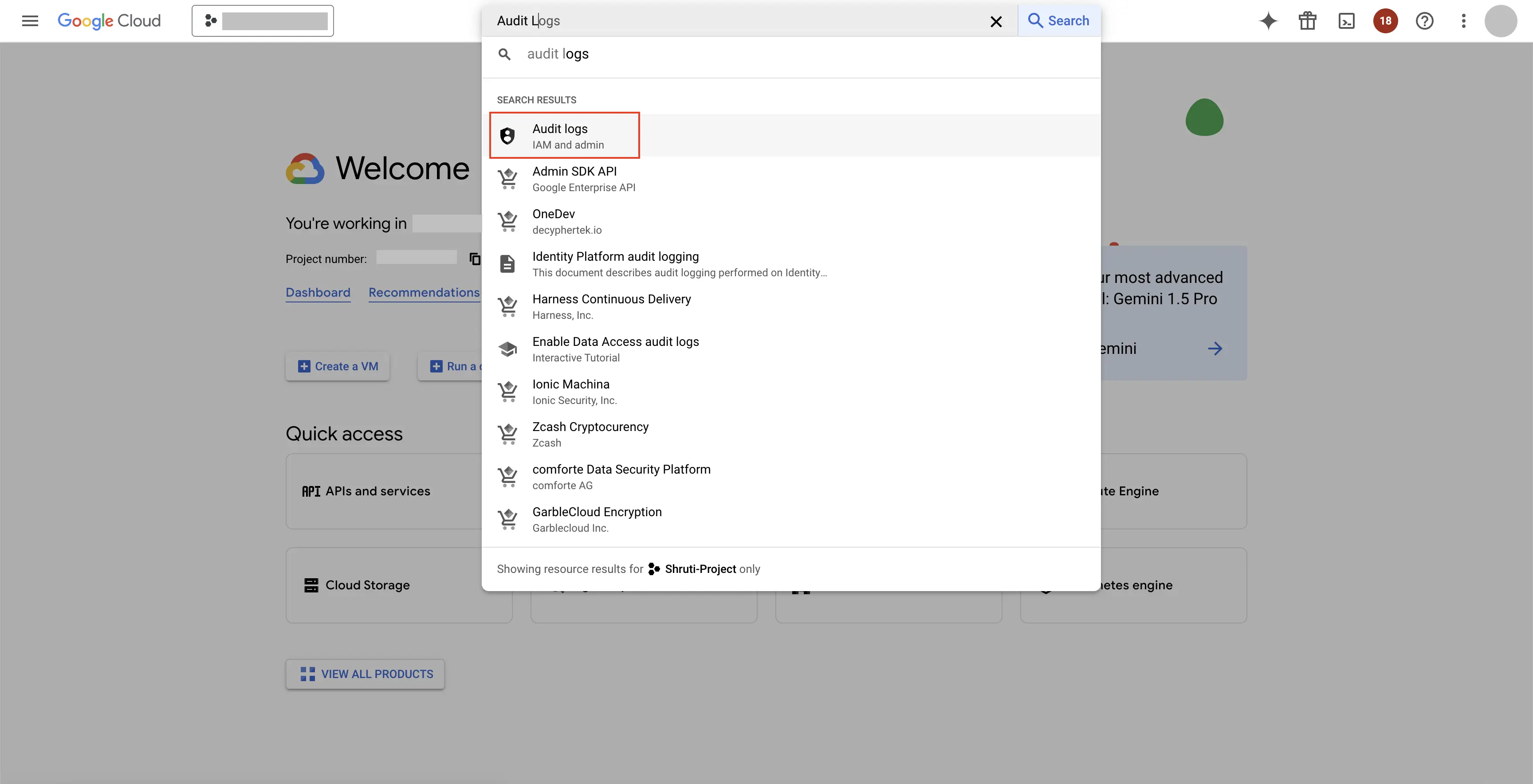

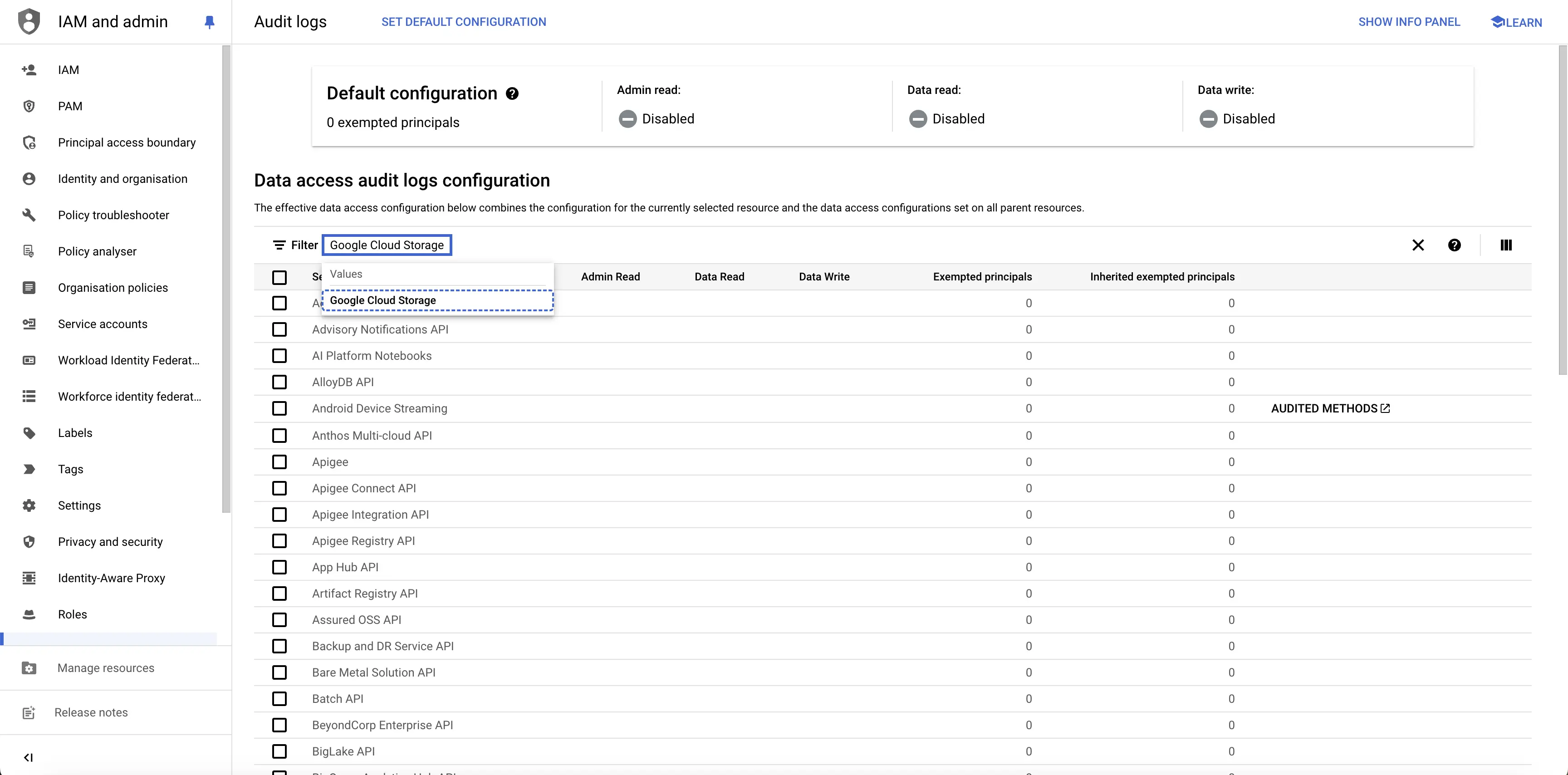

Step 1: In your GCP console, search for Audit Logs and open Audit Logs under the IAM and Admin service.

Go to Audit Logs

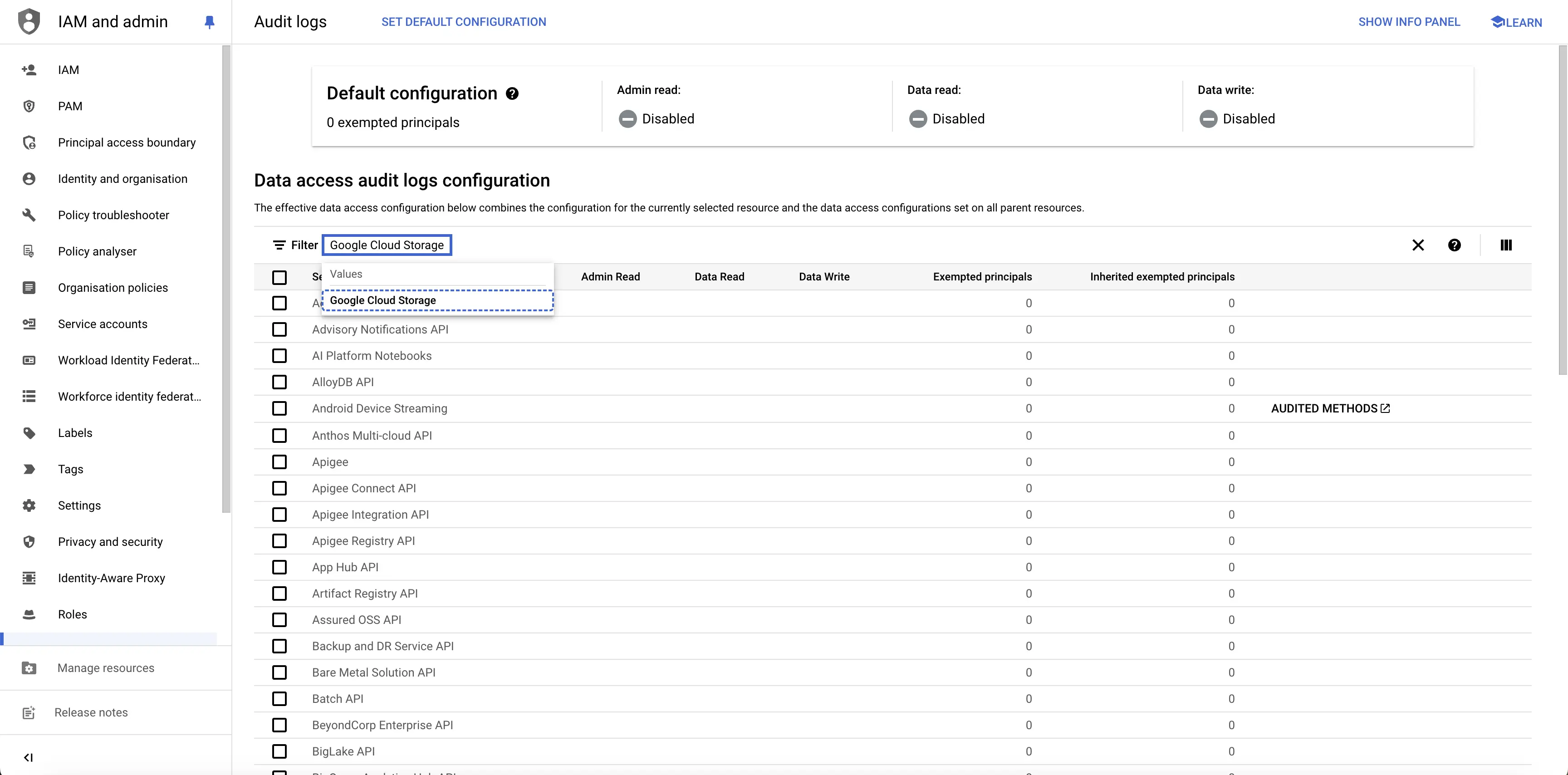

Step 2: In the Data access audit logs configuration table, search for "Google Cloud Storage" in the table's search bar, then click the matching entry.

Search for Google Cloud Storage in Data Access Audit Logs Configuration

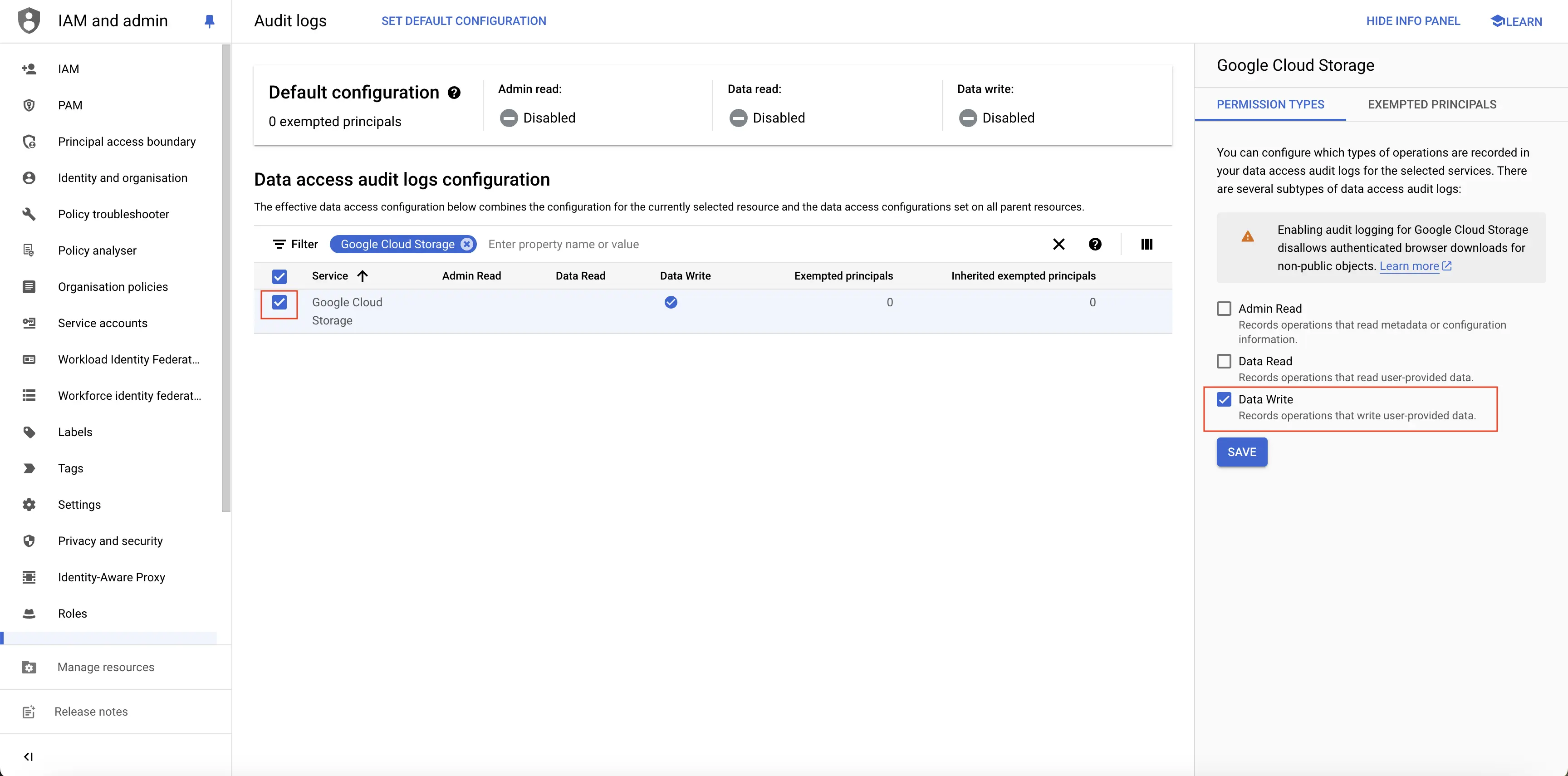

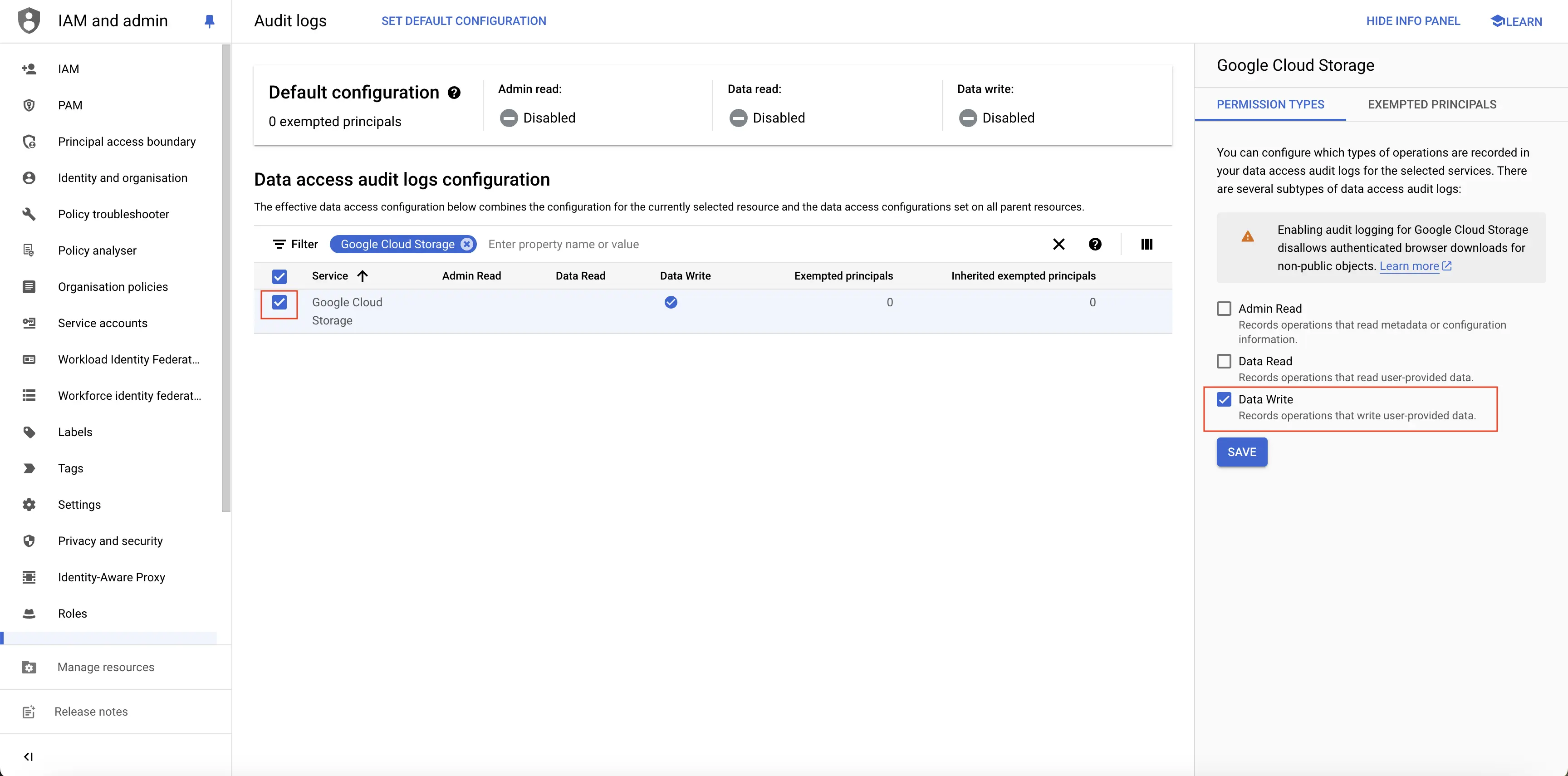

Step 3: Select the Google Cloud Storage record by clicking the check box to its left. This opens the permission settings panel on the right of the screen.

Check the Data Write option and click the SAVE button. This enables Data Write audit logs for the Google Cloud Storage service.

You can also select the Admin Read and Data Read options in the Permission Types panel. Note that these options can generate a very large number of audit logs and therefore increase cost.

Enable Data Write Audit Logs
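If you prefer to confirm the setting from a terminal, the following is a minimal, read-only sketch. It assumes an authenticated gcloud CLI; the project ID is a hypothetical placeholder and the helper name show_audit_configs is introduced here only for illustration.

```shell
# Hypothetical placeholder; replace with your own project ID.
PROJECT_ID="my-gcp-project"

# Print only the auditConfigs section of the project's IAM policy.
# Once Data Write is enabled, the output is expected to list the service
# storage.googleapis.com with a DATA_WRITE entry under auditLogConfigs.
show_audit_configs() {
  gcloud projects get-iam-policy "$1" --format="yaml(auditConfigs)"
}
```

Call it as show_audit_configs "$PROJECT_ID". The function only reads the policy and does not change it.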

Create PubSub Topic

Follow the steps mentioned in the Creating Pub/Sub Topic document to create the Pub/Sub topic.
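As a command-line alternative to the console steps in that document, the sketch below creates a topic and a subscription with the gcloud CLI. All names are hypothetical placeholders, and the commands are wrapped in functions so nothing runs until you call them.

```shell
# Hypothetical placeholder names; replace with your own values.
PROJECT_ID="my-gcp-project"
TOPIC_NAME="gcs-logs-topic"
SUBSCRIPTION_NAME="gcs-logs-subscription"

# Create the topic and a pull subscription attached to it.
# Requires an authenticated gcloud CLI.
create_topic_and_subscription() {
  gcloud pubsub topics create "$TOPIC_NAME" --project="$PROJECT_ID" &&
  gcloud pubsub subscriptions create "$SUBSCRIPTION_NAME" \
    --topic="$TOPIC_NAME" --project="$PROJECT_ID"
}

# Print the fully qualified subscription path in the form used by the
# subscription setting of the googlecloudpubsub receiver.
subscription_path() {
  echo "projects/$1/subscriptions/$2"
}
```

For example, subscription_path "$PROJECT_ID" "$SUBSCRIPTION_NAME" prints the value to use for the receiver's subscription field in the collector configuration.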

Create Log Router to Pub/Sub Topic

Follow the steps mentioned in the Log Router Setup document to create the Log Router.

To route only the Cloud Storage logs, use the following filter condition:

resource.type="gcs_bucket"
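The same filter can be applied when creating the sink from the command line. This is a minimal sketch assuming an authenticated gcloud CLI; the project, topic, and sink names are hypothetical placeholders.

```shell
# Hypothetical placeholder names; replace with your own values.
PROJECT_ID="my-gcp-project"
TOPIC_NAME="gcs-logs-topic"
SINK_NAME="gcs-logs-sink"

# The filter condition from this guide.
LOG_FILTER='resource.type="gcs_bucket"'

# Print the sink destination string for a Pub/Sub topic.
sink_destination() {
  echo "pubsub.googleapis.com/projects/$1/topics/$2"
}

# Create a log sink that routes matching entries to the topic.
# Wrapped in a function so it only runs when you call it.
create_gcs_log_sink() {
  gcloud logging sinks create "$SINK_NAME" \
    "$(sink_destination "$PROJECT_ID" "$TOPIC_NAME")" \
    --log-filter="$LOG_FILTER" --project="$PROJECT_ID"
}
```

After the sink is created, its writer identity typically needs permission to publish to the topic. The Log Router Setup document covers the exact steps.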

Setup OTel Collector

Follow the steps mentioned in the Creating Compute Engine document to create another Compute Engine instance. We will be installing OTel Collector on this instance.

Install OTel Collector as agent

First, set up authentication using the following commands:

- Initialize gcloud:

gcloud init

- Authenticate into GCP:

gcloud auth application-default login

Let us now proceed to the OTel Collector installation:

Step 1: Download otel-collector tar.gz for your architecture

wget https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v0.135.0/otelcol-contrib_0.135.0_linux_amd64.tar.gz

Step 2: Extract otel-collector tar.gz to the otelcol-contrib folder

mkdir otelcol-contrib && tar xvzf otelcol-contrib_0.135.0_linux_amd64.tar.gz -C otelcol-contrib
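The commands above use the linux_amd64 archive. The sketch below shows one way to pick the archive for the current machine. The helper names are hypothetical, and it assumes the release publishes an archive with the same naming pattern for arm64, which you should confirm on the releases page.

```shell
# Collector version used throughout this guide.
OTEL_VERSION="0.135.0"

# Map the output of `uname -m` to the architecture suffix used in the
# release file names. Only two common cases are handled here.
otel_arch() {
  case "$1" in
    x86_64|amd64) echo "amd64" ;;
    aarch64|arm64) echo "arm64" ;;
    *) echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
}

# Build the download URL for the given machine type.
otel_download_url() {
  local arch
  arch="$(otel_arch "$1")" || return 1
  echo "https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v${OTEL_VERSION}/otelcol-contrib_${OTEL_VERSION}_linux_${arch}.tar.gz"
}
```

For example, wget "$(otel_download_url "$(uname -m)")" downloads the archive for the current machine. Adjust the file name in the tar command to match.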

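Step 3: Create config.yaml in the otelcol-contrib folder with the content below. Replace <region> with the appropriate SigNoz Cloud region and <SigNoz-Key> with the ingestion key provided by SigNoz. Replace <gcp-project-id> with your GCP project ID and <pubsub-topic's-subscription> with the name of the subscription on your Pub/Sub topic: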

Note: The googlecloudpubsub receiver’s encoding was updated from the deprecated raw_text to text_encoding. This configuration has been tested and works with otelcol-contrib v0.135.0.

extensions:
  text_encoding:
    encoding: utf-8

receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318
  googlecloudpubsub:
    project: <gcp-project-id>
    subscription: projects/<gcp-project-id>/subscriptions/<pubsub-topic's-subscription>
    encoding: text_encoding

processors:
  batch: {}
  resource/env:
    attributes:
      - key: deployment.environment
        value: prod
        action: upsert

exporters:
  otlp:
    endpoint: "ingest.<region>.signoz.cloud:443"
    tls:
      insecure: false
    headers:
      "signoz-ingestion-key": "<SigNoz-Key>"

service:
  extensions: [text_encoding]
  pipelines:
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
    metrics:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
    logs:
      receivers: [otlp, googlecloudpubsub]
      processors: [batch, resource/env]
      exporters: [otlp]
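Before starting the collector, it can help to confirm that no angle-bracket placeholder was left in config.yaml. The helper below is a hypothetical sketch built on grep and is not part of the collector.

```shell
# Print every line of the given file that still contains a <placeholder>,
# prefixed with its line number. Follows grep's exit status:
# 0 if at least one placeholder was found, non-zero if none remain.
find_placeholders() {
  grep -n '<[^>]*>' "$1"
}
```

For example, find_placeholders ./config.yaml lists lines such as the project or ingestion key entries if they were not filled in. Recent collector builds also provide a validate subcommand (./otelcol-contrib validate --config ./config.yaml) that checks the configuration without starting the pipelines.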

Step 4: With the configuration in place, run the collector from the otelcol-contrib folder:

./otelcol-contrib --config ./config.yaml

Run in background

If you want to run the OTel Collector process in the background:

./otelcol-contrib --config ./config.yaml &> otelcol-output.log & echo "$!" > otel-pid

The above command sends the collector's output to the otelcol-output.log file and writes the process ID of the background OTel Collector process to the otel-pid file.

To follow the output of the background process, run:

tail -f -n 50 otelcol-output.log
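The otel-pid file written by the background command can also be used to check on or stop the collector later. This is a minimal sketch; the function names are hypothetical.

```shell
# Return 0 if the process recorded in the given PID file is still running.
collector_running() {
  [ -f "$1" ] && kill -0 "$(cat "$1")" 2>/dev/null
}

# Send SIGTERM to the recorded process (if it is running) and
# remove the PID file.
stop_collector() {
  if collector_running "$1"; then
    kill "$(cat "$1")"
  fi
  rm -f "$1"
}
```

For example, run stop_collector otel-pid from the otelcol-contrib folder to stop the background collector.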

Invoking Cloud Storage logs

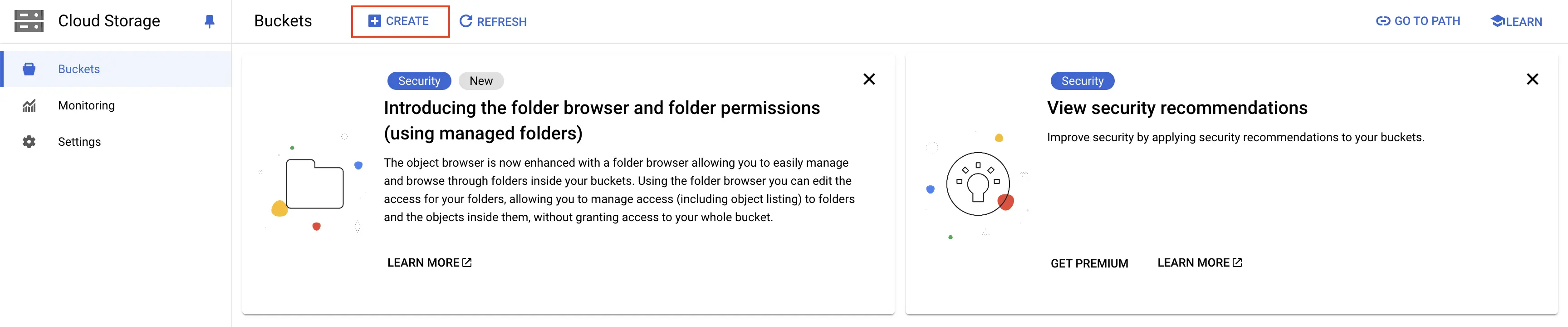

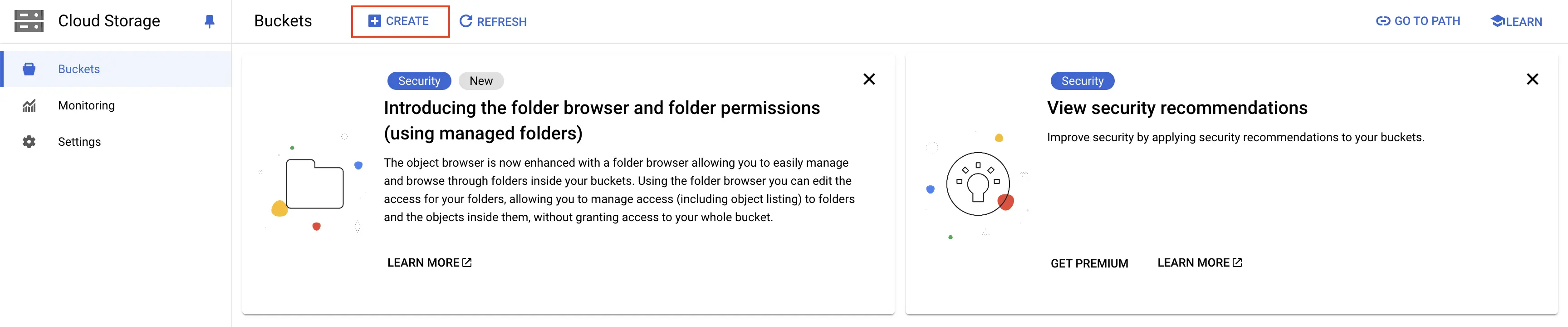

Step 1: In the GCP console, search for Cloud Storage and open the Cloud Storage service.

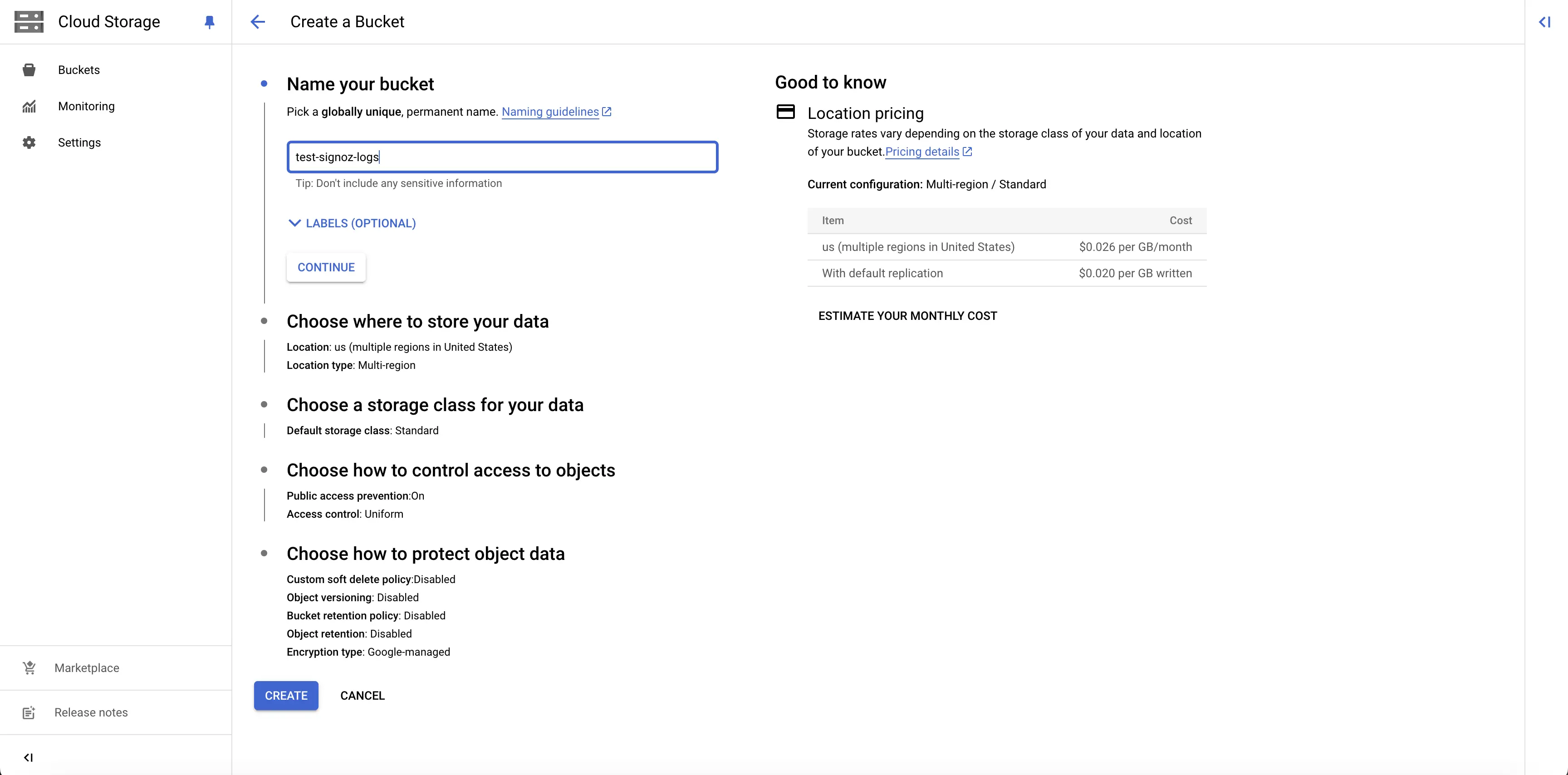

Step 2: Click on the CREATE button at the top of the Cloud Storage page to create a bucket.

Create Cloud Storage Bucket

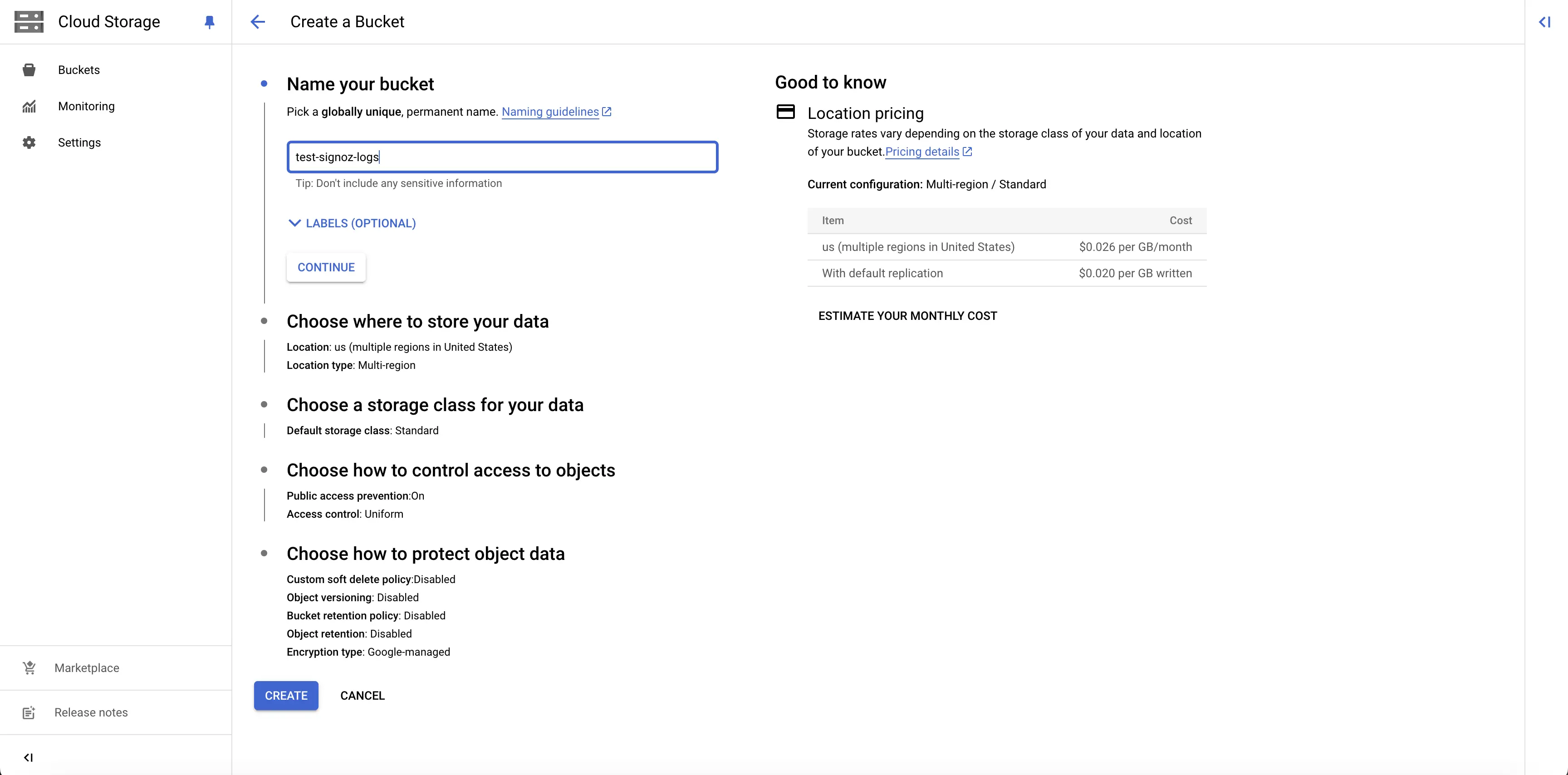

Step 3: On the Create a Bucket page, provide an appropriate name for the bucket in the Name your bucket section, and click on CONTINUE.

In the Choose where to store your data section, choose an appropriate location type, and click on CONTINUE. In the remaining sections, select the options that fit your requirements or leave them at their defaults.

After completing the form, click on the CREATE button at the bottom of the page. This creates the new bucket.

Name the Cloud Storage Bucket

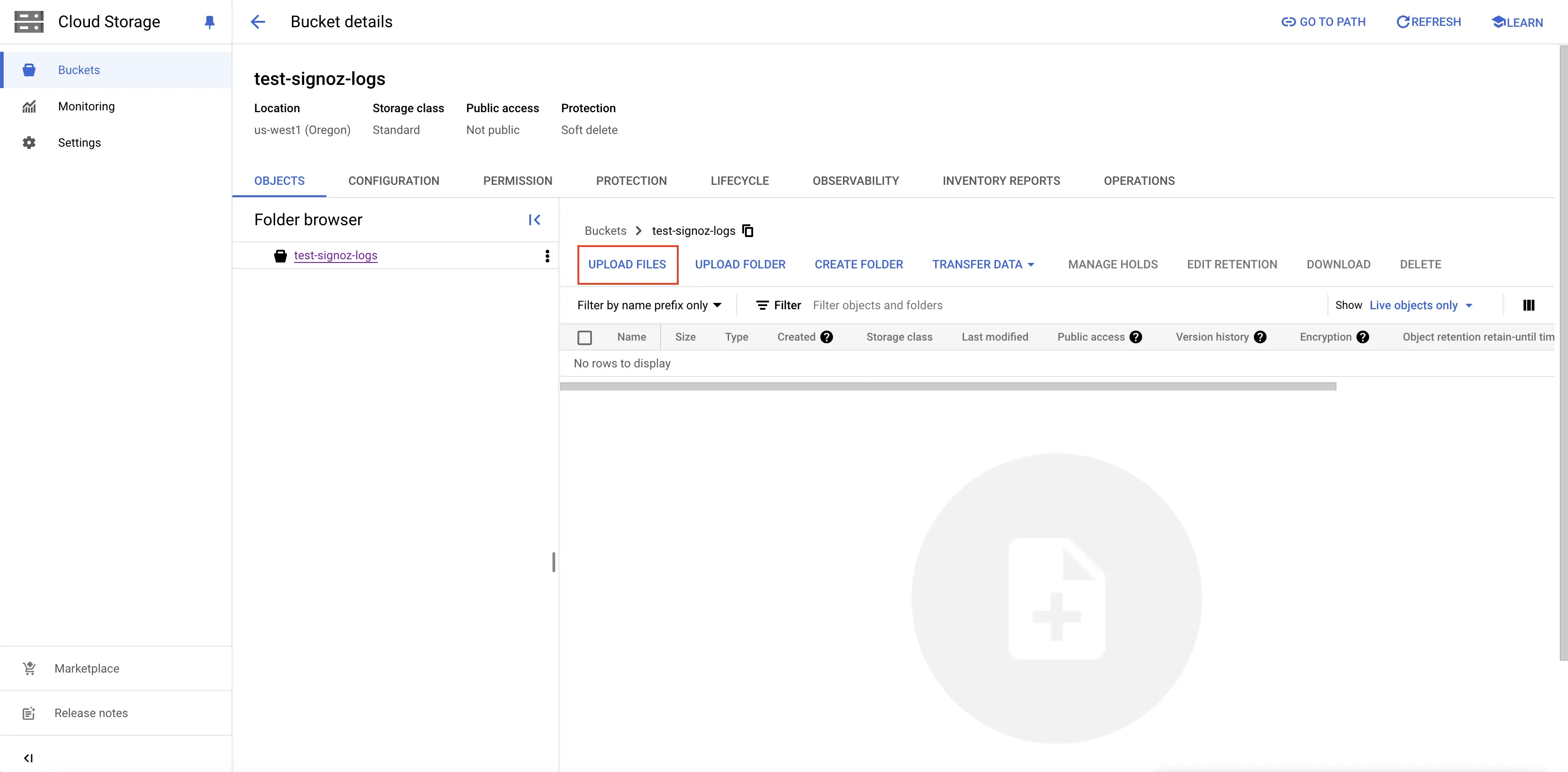

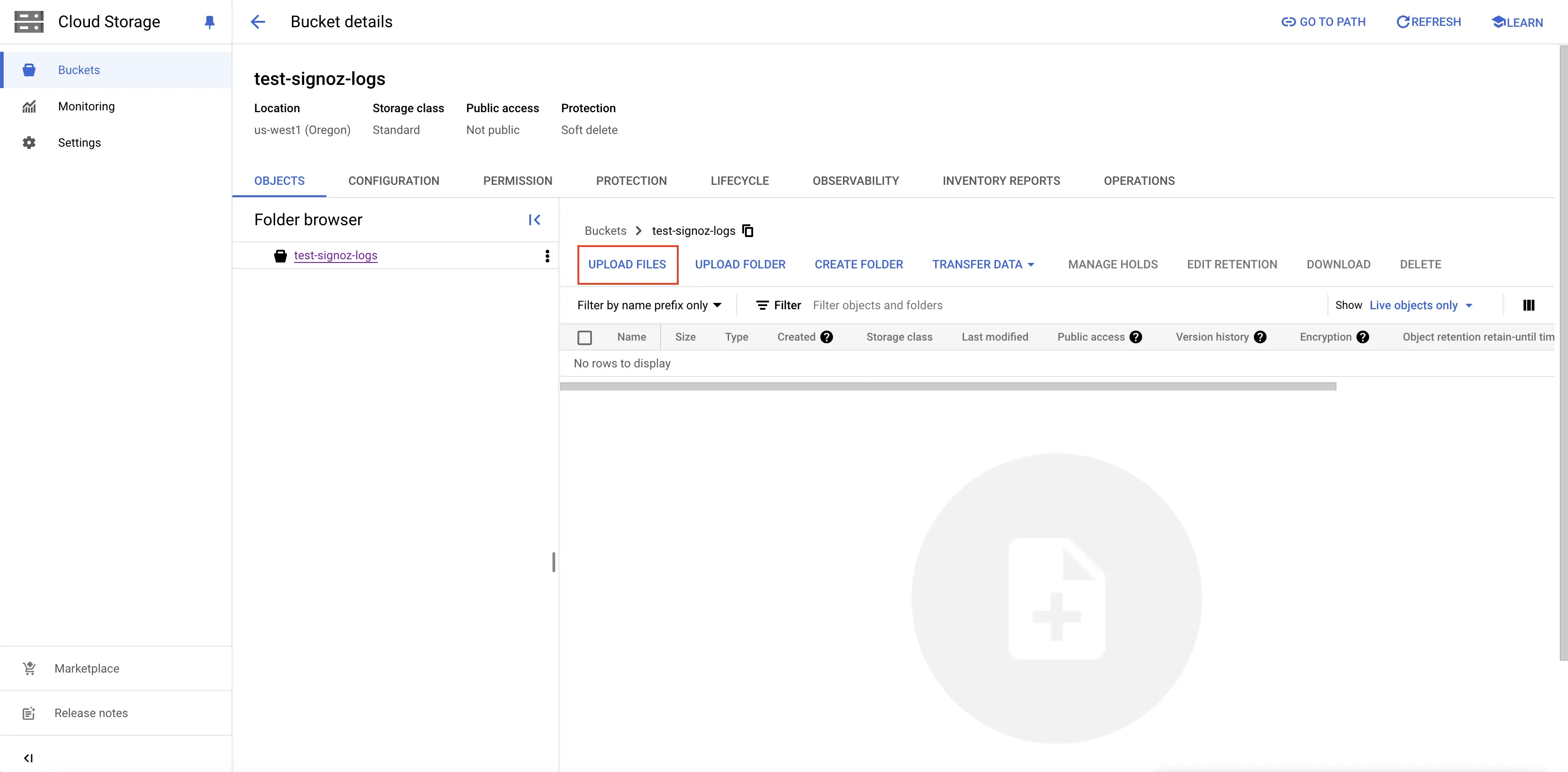

Step 4: Optionally, upload files to the newly created bucket. To do so, click on the UPLOAD FILES button and select the file you want to upload.

Upload File to Cloud Storage Bucket

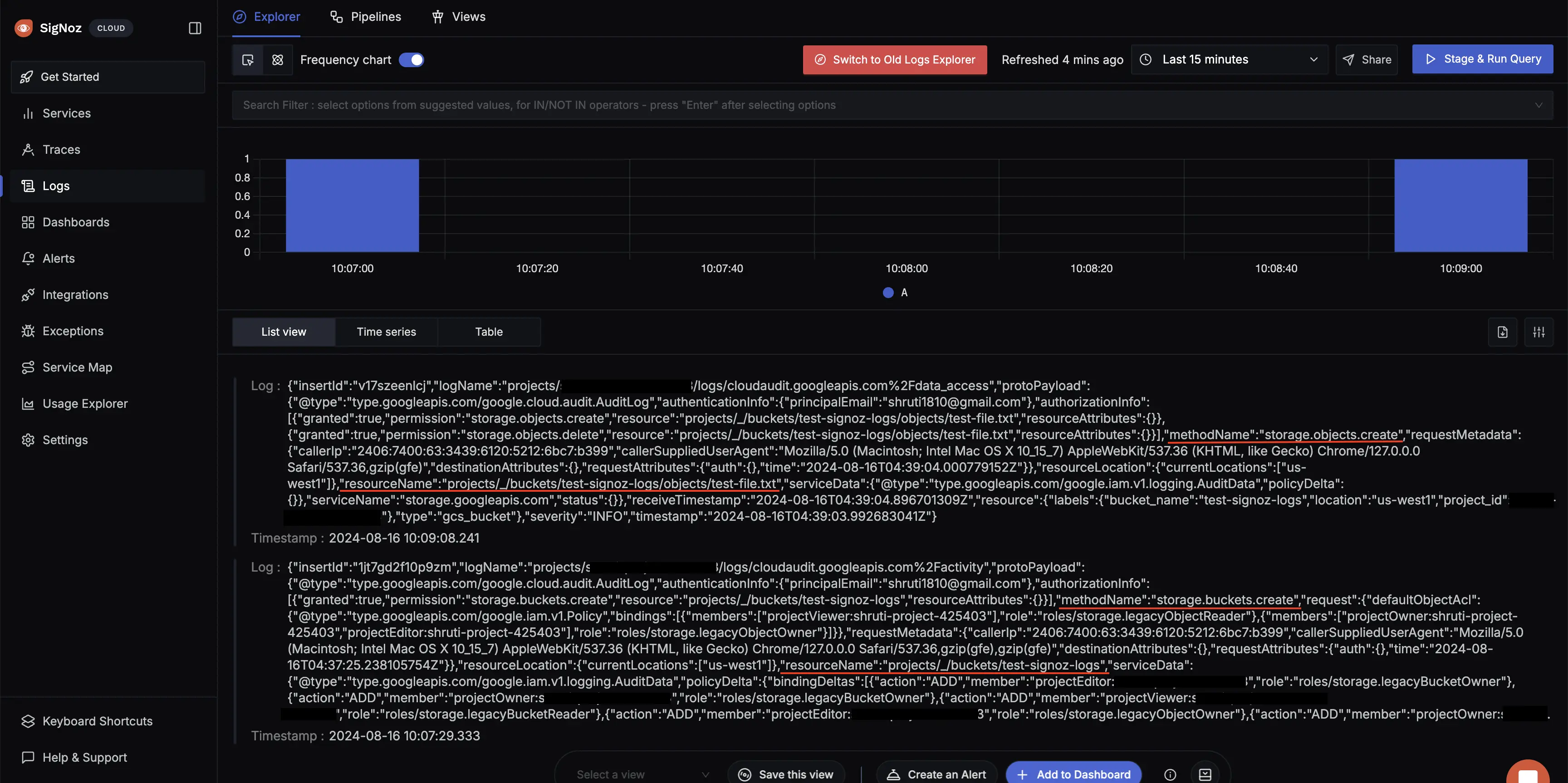

This generates Cloud Storage logs, which you can now view in SigNoz Cloud.

Cloud Storage Logs in SigNoz Cloud
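The bucket creation and upload steps can also be done from a terminal to generate test logs. This is a minimal sketch assuming an authenticated gcloud CLI; the bucket name and location are hypothetical placeholders, and bucket names must be globally unique.

```shell
# Hypothetical placeholders; replace with your own values.
BUCKET_NAME="my-signoz-demo-bucket"
BUCKET_LOCATION="us-central1"

# Print the gs:// URL for a bucket name.
bucket_url() {
  echo "gs://$1"
}

# Create the bucket, then upload the given local file to it.
# Wrapped in a function so it only runs when you call it.
generate_storage_logs() {
  local file="$1"
  gcloud storage buckets create "$(bucket_url "$BUCKET_NAME")" \
    --location="$BUCKET_LOCATION" &&
  gcloud storage cp "$file" "$(bucket_url "$BUCKET_NAME")/"
}
```

For example, generate_storage_logs ./sample.txt creates the bucket and uploads sample.txt, where sample.txt stands for any local file.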

Prerequisites

- A Google Cloud account with administrative or Logging Admin privileges.

- Access to a project in GCP

For more details on configuring Self-Hosted SigNoz for logs, see the official Self-Hosted SigNoz documentation and navigate to the "Send Logs to Self-Hosted SigNoz" section.

Setup

Get started with Cloud Storage Audit Log Configuration

Enable the Google Cloud Storage Audit Logs using the following steps:

Step 1: In your GCP console, search for Audit Logs and open Audit Logs under the IAM and Admin service.

Go to Audit Logs

Step 2: In the Data access audit logs configuration table, search for "Google Cloud Storage" in the table's search bar, then click the matching entry.

Search for Google Cloud Storage in Data Access Audit Logs Configuration

Step 3: Select the Google Cloud Storage record by clicking the check box to its left. This opens the permission settings panel on the right of the screen.

Check the Data Write option and click the SAVE button. This enables Data Write audit logs for the Google Cloud Storage service.

You can also select the Admin Read and Data Read options in the Permission Types panel. Note that these options can generate a very large number of audit logs and therefore increase cost.

Enable Data Write Audit Logs

Create PubSub Topic

Follow the steps mentioned in the Creating Pub/Sub Topic document to create the Pub/Sub topic.

Create Log Router to Pub/Sub Topic

Follow the steps mentioned in the Log Router Setup document to create the Log Router.

To route only the Cloud Storage logs, use the following filter condition:

resource.type="gcs_bucket"

Setup OTel Collector

Follow the steps mentioned in the Creating Compute Engine document to create the Compute Engine instance.

Install OTel Collector as agent

First, set up authentication using the following commands:

- Initialize gcloud:

gcloud init

- Authenticate into GCP:

gcloud auth application-default login

Let us now proceed to the OTel Collector installation:

Step 1: Download otel-collector tar.gz for your architecture

wget https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v0.135.0/otelcol-contrib_0.135.0_linux_amd64.tar.gz

Step 2: Extract otel-collector tar.gz to the otelcol-contrib folder

mkdir otelcol-contrib && tar xvzf otelcol-contrib_0.135.0_linux_amd64.tar.gz -C otelcol-contrib

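Step 3: Create config.yaml in the otelcol-contrib folder with the content below. This configuration uses the clickhouselogsexporter. Replace <gcp-project-id> with your GCP project ID and <pubsub-topic's-subscription> with the name of the subscription on your Pub/Sub topic.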

Note: The googlecloudpubsub receiver’s encoding was updated from the deprecated raw_text to text_encoding. This configuration has been tested and works with otelcol-contrib v0.135.0.

extensions:
  text_encoding:
    encoding: utf-8

receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318
  googlecloudpubsub:
    project: <gcp-project-id>
    subscription: projects/<gcp-project-id>/subscriptions/<pubsub-topic's-subscription>
    encoding: text_encoding

processors:
  batch: {}
  resource/env:
    attributes:
      - key: deployment.environment
        value: prod
        action: upsert

exporters:
  clickhouselogsexporter:
    dsn: tcp://clickhouse:9000/
    timeout: 5s
    sending_queue:
      queue_size: 100
    retry_on_failure:
      enabled: true
      initial_interval: 5s
      max_interval: 30s
      max_elapsed_time: 300s

service:
  extensions: [text_encoding]
  pipelines:
    logs:
      receivers: [otlp, googlecloudpubsub]
      processors: [batch, resource/env]
      exporters: [clickhouselogsexporter]
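The dsn in this configuration points at a host named clickhouse on port 9000. Whether that host name resolves from your Compute Engine instance depends on your setup, so a quick reachability check may save debugging time. The helper below is a hypothetical sketch that relies on bash's /dev/tcp feature and the coreutils timeout command.

```shell
# Return 0 if a TCP connection to host ($1) and port ($2) succeeds
# within 3 seconds, non-zero otherwise.
# /dev/tcp is a bash feature, so the check runs in an explicit bash.
tcp_reachable() {
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}
```

For example, tcp_reachable clickhouse 9000 && echo "reachable" prints reachable only when the connection succeeds. If it fails, replace the host in the dsn with an address that the instance can reach.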

Step 4: With the configuration in place, run the collector from the otelcol-contrib folder:

./otelcol-contrib --config ./config.yaml

Run in background

If you want to run the OTel Collector process in the background:

./otelcol-contrib --config ./config.yaml &> otelcol-output.log & echo "$!" > otel-pid

The above command sends the collector's output to the otelcol-output.log file and writes the process ID of the background OTel Collector process to the otel-pid file.

To follow the output of the background process, run:

tail -f -n 50 otelcol-output.log

Invoking Cloud Storage logs

Step 1: In the GCP console, search for Cloud Storage and open the Cloud Storage service.

Step 2: Click on the CREATE button at the top of the Cloud Storage page to create a bucket.

Create Cloud Storage Bucket

Step 3: On the Create a Bucket page, provide an appropriate name for the bucket in the Name your bucket section, and click on CONTINUE.

In the Choose where to store your data section, choose an appropriate location type, and click on CONTINUE. In the remaining sections, select the options that fit your requirements or leave them at their defaults.

After completing the form, click on the CREATE button at the bottom of the page. This creates the new bucket.

Name the Cloud Storage Bucket

Step 4: Optionally, upload files to the newly created bucket. To do so, click on the UPLOAD FILES button and select the file you want to upload.

Upload File to Cloud Storage Bucket

This generates Cloud Storage logs, which you can now view in SigNoz.