Overview

This guide walks you through migrating metrics from the ELK Stack (specifically Metricbeat) to SigNoz. You will:

- Check your Metricbeat modules and configuration

- Set up metric collection with OpenTelemetry

- Map Metricbeat modules to OpenTelemetry receivers

- Validate metrics are flowing correctly to SigNoz

Key Differences: ELK vs SigNoz

| Aspect | ELK Stack | SigNoz |
|---|---|---|
| Collection | Metricbeat, Elastic Agent | OpenTelemetry Collector |
| Data Model | Elasticsearch Documents | OpenTelemetry Metrics |
| Query Language | KQL, Lucene, TSVB | Query Builder, PromQL, ClickHouse SQL |
| Storage | Elasticsearch (Indices) | ClickHouse (Columnar) |

SigNoz uses the OpenTelemetry Collector for metric collection, which offers a wide range of receivers to replace Metricbeat modules.

Prerequisites

Before starting, ensure you have:

- A SigNoz account (Cloud) or a running SigNoz instance (Self-Hosted)

- Access to your Metricbeat configuration (metricbeat.yml, modules.d/)

- Administrative access to deploy the OpenTelemetry Collector

Step 1: Assess Your Current Metrics

Before migrating, inventory what you're collecting with Metricbeat.

Identify Active Modules

Review your metricbeat.yml and modules.d/ directory to see which modules are enabled. Common modules include:

- system (CPU, memory, disk)

- docker (container stats)

- kubernetes (Kubelet stats)

- prometheus (scraping endpoints)

- nginx, redis, mysql, etc.

Categorize Your Metrics

Group your metrics by source type:

| Metricbeat Module | OTel Receiver | Migration Path |
|---|---|---|
| system | hostmetrics | Use Host Metrics Receiver |
| docker | docker_stats | Use Docker Stats Receiver |
| kubernetes | K8s Infra Chart | Use K8s Infra Chart |
| prometheus | prometheus | Use Prometheus Receiver |
| Other Modules | Various | Check the OTel Registry for the matching receiver and configure it |

Step 2: Set Up the OpenTelemetry Collector

Install the OpenTelemetry Collector if you haven't already, then configure it to send metrics to SigNoz:

- Install the OpenTelemetry Collector in your environment.

- Configure the OTLP exporter for metrics.
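As a minimal sketch of what the exporter setup can look like for SigNoz Cloud: the endpoint format and the signoz-ingestion-key header are the ones this guide's Troubleshooting section refers to, while <region> and <your-ingestion-key> are placeholders you must replace. The OTLP receiver, the batch processor, and the component names are assumptions for illustration. A self-hosted SigNoz instance uses a different endpoint, so check your own instance's ingestion settings.

```yaml
receivers:
  # Assumed: accept OTLP from applications on the default gRPC port
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317

processors:
  # Groups data points into batches before export
  batch: {}

exporters:
  otlp:
    # SigNoz Cloud ingestion endpoint; replace <region> with your account region
    endpoint: "ingest.<region>.signoz.cloud:443"
    tls:
      insecure: false
    headers:
      # Placeholder: your SigNoz ingestion key
      signoz-ingestion-key: "<your-ingestion-key>"

service:
  pipelines:
    metrics:
      # Add each receiver from Step 3 to this list as you migrate it
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
```

Every receiver you configure in Step 3 only produces data once its name appears in the receivers list of this metrics pipeline.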

Step 3: Migrate Each Metric Source

Work through each module from your inventory. For a complete list of supported receivers, see Send Metrics to SigNoz.

From System Metrics

Replace the Metricbeat system module with the hostmetrics receiver.

Metricbeat system:

- module: system
  period: 10s
  metricsets: ['cpu', 'memory', 'network', 'filesystem']

Migration: Follow the Host Metrics Collection Guide to set up the OpenTelemetry Collector with the hostmetrics receiver. This guide provides connection details and configuration examples.
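For orientation, here is a sketch of a receiver roughly equivalent to the Metricbeat module above: each metricset maps to a hostmetrics scraper of the same name, and period maps to collection_interval. It assumes the Collector runs directly on the host; the commented root_path line applies only when the Collector runs in a container with the host filesystem mounted at /hostfs, which is a hypothetical mount point. The Host Metrics Collection Guide remains the reference for the full setup.

```yaml
receivers:
  hostmetrics:
    # Same cadence as Metricbeat's period: 10s
    collection_interval: 10s
    # Only when running in a container with the host root mounted at /hostfs
    # root_path: /hostfs
    scrapers:
      # One scraper per Metricbeat metricset
      cpu: {}
      memory: {}
      network: {}
      filesystem: {}
```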

From Docker Metrics

Replace the Metricbeat docker module with the docker_stats receiver. See Docker Container Metrics for detailed configuration.

Metricbeat docker:

- module: docker
  period: 10s
  hosts: ['unix:///var/run/docker.sock']

OTel docker_stats:

receivers:
  docker_stats:
    endpoint: "unix:///var/run/docker.sock"
    collection_interval: 10s
    timeout: 5s
    metrics:
      container.cpu.utilization:
        enabled: true
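The docker_stats receiver reads from the Docker socket, so a containerized Collector needs that socket mounted. A sketch using Docker Compose, assuming the contrib distribution of the Collector (which includes docker_stats) and a hypothetical local config file name:

```yaml
services:
  otel-collector:
    # The contrib distribution ships the docker_stats receiver; pin a version in production
    image: otel/opentelemetry-collector-contrib:latest
    command: ["--config=/etc/otelcol-contrib/config.yaml"]
    volumes:
      # Hypothetical path to your Collector configuration
      - ./otel-collector-config.yaml:/etc/otelcol-contrib/config.yaml:ro
      # Lets docker_stats reach the Docker Engine API at unix:///var/run/docker.sock
      - /var/run/docker.sock:/var/run/docker.sock
    # The image runs as a non-root user; you may need group_add with your
    # host's docker group ID so it can open the socket
```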

From Kubernetes Metrics

For Kubernetes environments, we recommend using the SigNoz K8s Infra Helm chart.

When installed, it automatically collects all necessary metrics (node, pod, container, volume) without requiring manual receiver configuration.

See Install K8s Infra for setup instructions.
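The chart is configured through a Helm values file. A minimal override sketch for SigNoz Cloud, assuming the key names below (global.clusterName, otelCollectorEndpoint, otelInsecure, signozApiKey) match your chart version's values.yaml; treat them as assumptions and confirm them in Install K8s Infra. The cluster name and the angle-bracket values are placeholders.

```yaml
global:
  # Hypothetical cluster name, used to label metrics from this cluster
  clusterName: my-cluster
# SigNoz Cloud ingestion endpoint; replace <region> with your account region
otelCollectorEndpoint: ingest.<region>.signoz.cloud:443
otelInsecure: false
# Placeholder: your SigNoz ingestion key
signozApiKey: <your-ingestion-key>
```

You would pass a file like this to helm install with the -f flag when installing the chart.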

From Prometheus Endpoints

If you used Metricbeat's prometheus module to scrape endpoints, use the OTel prometheus receiver. See Prometheus Metrics for detailed configuration.

Metricbeat prometheus:

- module: prometheus
  period: 10s
  hosts: ['localhost:9090']
  metrics_path: /metrics

OTel prometheus:

receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: 'my-app'
          scrape_interval: 10s
          static_configs:
            - targets: ['localhost:9090']
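The OTel example leaves metrics_path implicit because /metrics is the Prometheus default. If your Metricbeat config used a custom path or listed several hosts, a sketch of the field-by-field mapping follows; the second target, localhost:9100, is a hypothetical extra host.

```yaml
receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: 'my-app'
          # Metricbeat: period
          scrape_interval: 10s
          # Metricbeat: metrics_path (set explicitly only if it is not /metrics)
          metrics_path: /metrics
          static_configs:
            # Metricbeat: each entry in hosts becomes a target
            - targets: ['localhost:9090', 'localhost:9100']
```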

From Application Custom Metrics

If you were sending custom metrics using Elastic APM agents, migrate to OpenTelemetry Metrics SDKs.

- Initialize the MeterProvider in your application.

- Create instruments (Counters, Gauges, Histograms).

- Record values.

See Send Metrics to SigNoz for language-specific guides.
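Once an application records metrics through an OpenTelemetry SDK, most SDKs can be pointed at the Collector with the standard OTEL_* environment variables when exporter settings aren't hard-coded. A sketch in Docker Compose, assuming the Collector accepts OTLP over gRPC on port 4317 and is reachable under the hypothetical host name otel-collector; the service and image names are also hypothetical.

```yaml
services:
  my-app:
    # Hypothetical application image instrumented with an OpenTelemetry SDK
    image: my-app:latest
    environment:
      # Identifies the service in SigNoz
      OTEL_SERVICE_NAME: my-app
      # Export metrics using OTLP
      OTEL_METRICS_EXPORTER: otlp
      # Collector address and transport (gRPC on the default port)
      OTEL_EXPORTER_OTLP_ENDPOINT: http://otel-collector:4317
      OTEL_EXPORTER_OTLP_PROTOCOL: grpc
```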

From Cloud Integrations

If you were using Metricbeat modules for cloud providers (AWS, Azure, GCP), use SigNoz's native cloud integrations or the OpenTelemetry Collector's cloud receivers.

- AWS: AWS Cloud Integrations

- Azure: Azure Monitoring

- GCP: GCP Monitoring

From Synthetics

If you were using Elastic Uptime (Heartbeat), migrate to SigNoz HTTP Host Metrics.

SigNoz supports monitoring HTTP endpoints for availability and latency. See Monitor HTTP Endpoints.
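As a rough sketch of the Collector side of endpoint monitoring, the contrib httpcheck receiver probes URLs on a fixed interval and reports metrics such as httpcheck.status and httpcheck.duration. The URL and interval below are hypothetical, and the Monitor HTTP Endpoints guide remains the reference for SigNoz's recommended setup.

```yaml
receivers:
  httpcheck:
    # How often each target is probed
    collection_interval: 30s
    targets:
      # Hypothetical health-check URL, similar to a Heartbeat HTTP monitor
      - endpoint: https://example.com/health
        method: GET
```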

Step 4: Configure Processors

To replicate Metricbeat's metadata enrichment (like add_host_metadata, add_kubernetes_metadata), configure OTel processors.

Resource Detection

Replaces add_host_metadata and add_cloud_metadata.

processors:
  resourcedetection:
    detectors: [env, system, ec2, gcp, azure]
    timeout: 2s
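The resourcedetection processor covers host and cloud metadata. For the add_kubernetes_metadata side, the contrib k8sattributes processor is the usual counterpart. A sketch of both wired into a metrics pipeline, assuming an OTLP receiver named otlp (application metrics, where the processor can match the sending pod by its IP) and an exporter named otlp; a processor only runs if it appears in a pipeline's processors list.

```yaml
processors:
  k8sattributes:
    # Query the Kubernetes API using the Collector's ServiceAccount
    auth_type: serviceAccount
    extract:
      metadata:
        - k8s.namespace.name
        - k8s.pod.name
        - k8s.node.name
  batch: {}

service:
  pipelines:
    metrics:
      receivers: [otlp]
      # Enrich first, then batch before export
      processors: [k8sattributes, resourcedetection, batch]
      exporters: [otlp]
```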

Validate

Verify metrics are flowing correctly by comparing against your source inventory.

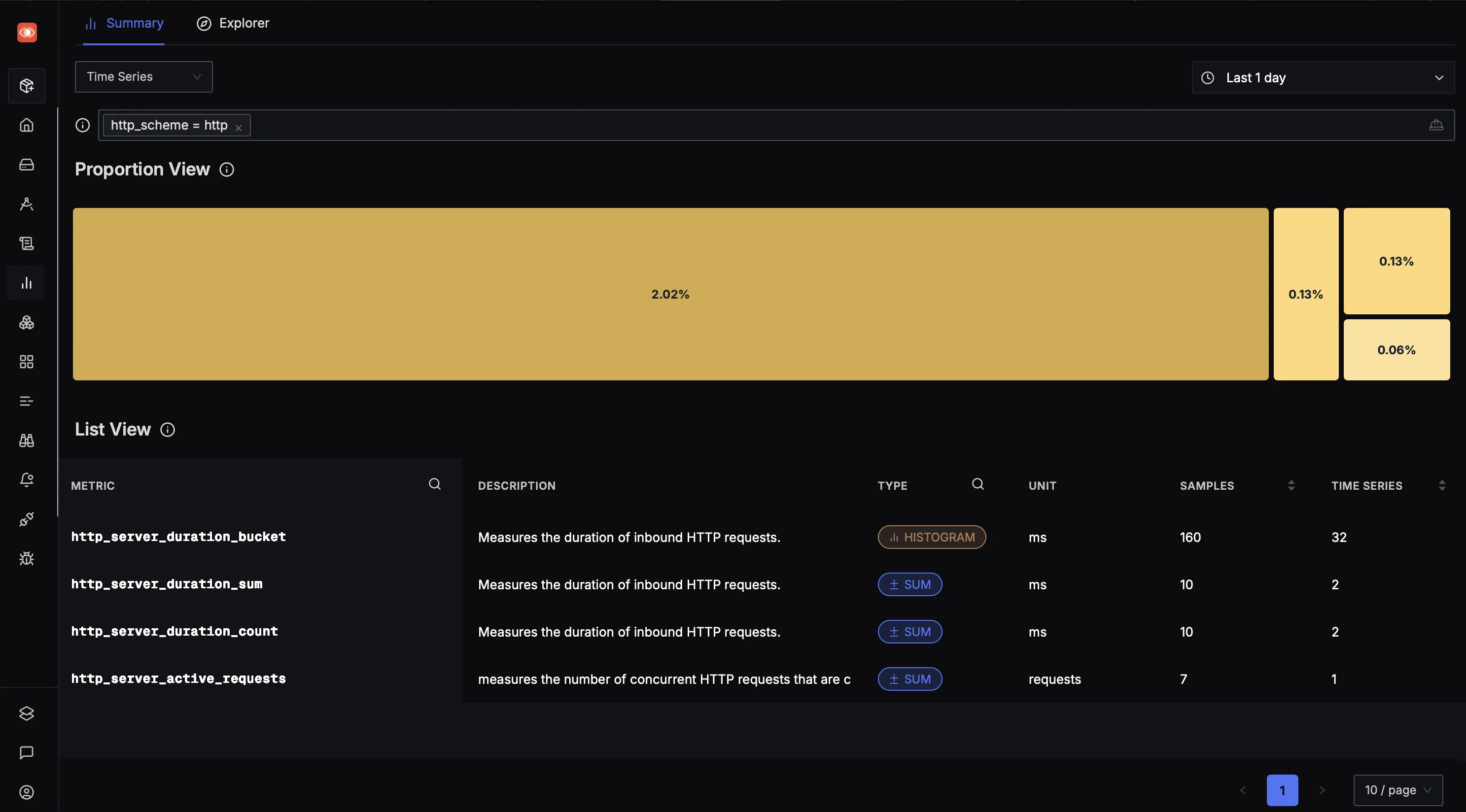

Check Metrics Are Arriving

- In SigNoz, navigate to Metrics in the left sidebar.

- Use the List View to browse available metrics.

- Search for metrics like system_cpu_time, container_cpu_usage_seconds, etc.

Troubleshooting

Metrics not appearing in SigNoz

- Check Collector status: Verify the OpenTelemetry Collector is running.

- Verify endpoint: Confirm that ingest.<region>.signoz.cloud:443 matches your account region.

- Check ingestion key: Ensure the signoz-ingestion-key header is set correctly.

- Test connectivity: Verify that outbound HTTPS (port 443) is allowed.
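To check whether the Collector receives data at all, independent of its connection to SigNoz, you can temporarily add the debug exporter, which prints received telemetry to the Collector's own logs. A sketch, assuming component names hostmetrics, batch, and otlp; older Collector releases provide this as the logging exporter instead.

```yaml
exporters:
  # Writes received metrics to the Collector's log output
  debug:
    verbosity: detailed

service:
  pipelines:
    metrics:
      receivers: [hostmetrics]
      processors: [batch]
      # Keep the SigNoz exporter and add debug alongside it
      exporters: [otlp, debug]
```

If metrics show up in the Collector logs but not in SigNoz, the problem is on the export side (endpoint, key, or network) rather than in the receivers.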

Missing attributes

If metrics appear but lack expected attributes (like host.name or k8s.pod.name):

- Check processors: Ensure the resourcedetection processor is enabled in the pipeline.

- Verify permissions: Ensure the Collector's ServiceAccount has permission to read Kubernetes metadata.
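If you run the k8sattributes processor in a Collector you manage yourself, its ServiceAccount needs read access to the objects it looks up. A minimal RBAC sketch covering pods, namespaces, and replicasets, plus nodes for node metadata; the names otel-collector and observability are hypothetical. The SigNoz K8s Infra chart creates its own RBAC, so this mainly applies to hand-managed Collectors.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: otel-collector
rules:
  # Core objects the processor reads to build k8s.* attributes
  - apiGroups: [""]
    resources: ["pods", "namespaces", "nodes"]
    verbs: ["get", "watch", "list"]
  # Used to resolve a pod's owning Deployment via its ReplicaSet
  - apiGroups: ["apps"]
    resources: ["replicasets"]
    verbs: ["get", "watch", "list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: otel-collector
subjects:
  # Hypothetical ServiceAccount and namespace of the Collector
  - kind: ServiceAccount
    name: otel-collector
    namespace: observability
roleRef:
  kind: ClusterRole
  name: otel-collector
  apiGroup: rbac.authorization.k8s.io
```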

Metric names differ

OpenTelemetry metric names often differ from Metricbeat field names. For example:

- Metricbeat: system.cpu.total.pct

- OTel: system.cpu.utilization

Use the OpenTelemetry Registry to find exact metric names for each receiver.
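Part of the gap is that some OTel metrics are optional and off by default. For example, the hostmetrics receiver emits system.cpu.utilization and system.memory.utilization only when enabled, and these are the closest counterparts to Metricbeat's percentage fields. A sketch, assuming the hostmetrics receiver from Step 3:

```yaml
receivers:
  hostmetrics:
    collection_interval: 10s
    scrapers:
      cpu:
        metrics:
          # Optional metric, disabled by default
          system.cpu.utilization:
            enabled: true
      memory:
        metrics:
          # Optional metric, disabled by default
          system.memory.utilization:
            enabled: true
```

As we understand it, system.cpu.utilization is reported per CPU and per state (user, system, idle, and so on) as a fraction between 0 and 1, so you typically aggregate across those attributes in a query before comparing it with system.cpu.total.pct; check the receiver's documentation for the exact semantics.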

Next Steps

Once your metrics are flowing to SigNoz:

- Create dashboards to visualize metrics

- Set up alerts based on your metrics

- Migrate traces for end-to-end observability