CloudWatch Cost Optimization Playbook [Part 2]

CloudWatch cost optimization is not a one-time cleanup. It is an ongoing loop where you find the driver, make a change, verify the impact, and repeat.

In Part 1, we explored every CloudWatch billing bucket — metrics, logs, alarms, dashboards, API requests, vended logs, and advanced features. This guide assumes you understand those mechanics and are ready to do something about them.

This guide will help you establish a baseline, apply optimizations bucket by bucket, understand why some optimizations fail, and evaluate when CloudWatch's pricing structure itself becomes the obstacle.

Step 1 - Establish Your Baseline

You cannot reduce what you have not measured. Before making any changes, you need a clear picture of where your CloudWatch spend is going.

Using AWS Cost Explorer

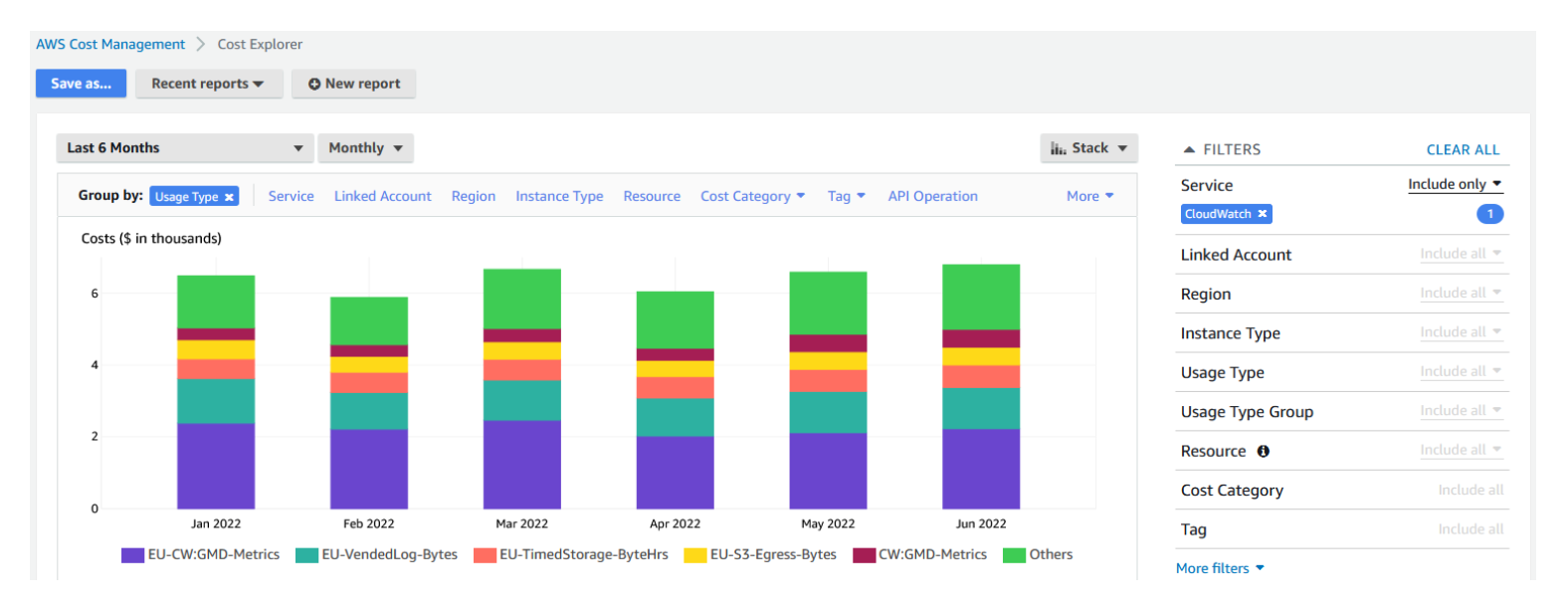

AWS Cost Explorer is the fastest way to see your CloudWatch spend by service and usage type.

- Open Cost Explorer in the AWS Console.

- Filter by Service = CloudWatch.

- Group by Usage Type to break down costs across different billing axes (metrics, logs, alarms, API requests, etc.).

- Set the time range to the last 3 months to identify trends.

The Usage Type dimension is the critical grouping. It separates CW:MetricMonitorUsage and CW:AlarmMonitorUsage from log-related usage types like DataProcessing-Bytes and DataProcessingIA-Bytes (typically region-prefixed in Cost Explorer, e.g., USE1-DataProcessing-Bytes), giving you the bucket-level breakdown that the invoice summary hides.

If Cost Explorer shows that 70% of your CloudWatch spend is log ingestion, you do not need to optimize alarms. Go directly to the log ingestion section.
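You can script the same triage once you export the Cost Explorer breakdown. The sketch below assumes you already have a mapping of usage type to monthly cost, for example parsed from a Cost Explorer CSV export. The bucket table covers only the usage types named above, strips an assumed region prefix such as `USE1-`, and sends everything else to an `other` bucket. The dollar figures are illustrative, not real billing data.

```python
from __future__ import annotations

import re
from collections import defaultdict

# Assumed shape of a Cost Explorer region prefix, e.g. "USE1-" or "EUW2-".
REGION_PREFIX = re.compile(r"^[A-Z]+[0-9]+-")

# Only the usage types discussed in this guide; anything else lands in "other".
BUCKET_BY_USAGE_TYPE = {
    "CW:MetricMonitorUsage": "metrics",
    "CW:AlarmMonitorUsage": "alarms",
    "DataProcessing-Bytes": "log ingestion",
    "DataProcessingIA-Bytes": "log ingestion",
}


def bucket_shares(cost_by_usage_type: dict[str, float]) -> dict[str, float]:
    """Return each bucket's share of total CloudWatch spend (0.0 to 1.0)."""
    totals: dict[str, float] = defaultdict(float)
    for usage_type, cost in cost_by_usage_type.items():
        base = REGION_PREFIX.sub("", usage_type)
        totals[BUCKET_BY_USAGE_TYPE.get(base, "other")] += cost
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {bucket: cost / grand_total for bucket, cost in totals.items()}


def dominant_bucket(cost_by_usage_type: dict[str, float]) -> str:
    """Name of the bucket to optimize first."""
    shares = bucket_shares(cost_by_usage_type)
    return max(shares, key=shares.get)


if __name__ == "__main__":
    # Illustrative monthly costs in USD, not real billing data.
    sample = {
        "USE1-DataProcessing-Bytes": 650.0,
        "USE1-DataProcessingIA-Bytes": 50.0,
        "USE1-CW:MetricMonitorUsage": 200.0,
        "USE1-CW:AlarmMonitorUsage": 100.0,
    }
    for bucket, share in sorted(bucket_shares(sample).items(), key=lambda kv: -kv[1]):
        print(f"{bucket:<14} {share:.0%}")
    print("Start with:", dominant_bucket(sample))
```

With this sample, log ingestion accounts for 70% of spend, so the log ingestion section is where to begin.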

Using CloudWatch Usage Metrics

CloudWatch publishes its own usage metrics in the AWS/Usage namespace. These give you near-real-time visibility into:

- CallCount for API operations (how many PutMetricData and GetMetricData calls you are making).

- ResourceCount for metrics and alarms (how many custom metrics or alarms exist right now).

You can set alarms on these usage metrics to catch runaway growth before it hits your invoice. For example, alarm on ResourceCount for custom metrics with a threshold that represents 120% of your expected count.
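As a sketch of such a guardrail, the function below builds the keyword arguments for boto3's `put_metric_alarm` on the `CallCount` usage metric for one CloudWatch API operation. It sets the threshold at 120% of an expected call volume that you supply. The dimension values (`Service=CloudWatch`, `Type=API`, `Resource=<operation>`, `Class=None`) follow the documented shape of CloudWatch usage metrics, but confirm them in the console for your account. The alarm name, period, and evaluation settings are arbitrary choices. A `ResourceCount` alarm has the same structure once you look up that metric's dimensions.

```python
def build_usage_alarm(
    api_operation: str,
    expected_calls_per_period: float,
    headroom: float = 1.2,
    period_seconds: int = 300,
) -> dict:
    """Keyword arguments for CloudWatch put_metric_alarm on an AWS/Usage CallCount metric."""
    return {
        # Hypothetical naming convention.
        "AlarmName": f"cw-usage-{api_operation}-over-{round(headroom * 100)}pct",
        "Namespace": "AWS/Usage",
        "MetricName": "CallCount",
        "Dimensions": [
            {"Name": "Service", "Value": "CloudWatch"},
            {"Name": "Type", "Value": "API"},
            {"Name": "Resource", "Value": api_operation},
            {"Name": "Class", "Value": "None"},
        ],
        "Statistic": "Sum",
        "Period": period_seconds,
        "EvaluationPeriods": 3,
        # Alarm once usage exceeds the expected volume plus headroom.
        "Threshold": expected_calls_per_period * headroom,
        "ComparisonOperator": "GreaterThanThreshold",
        # A period with no calls is not a runaway-growth signal.
        "TreatMissingData": "notBreaching",
    }


if __name__ == "__main__":
    params = build_usage_alarm("PutMetricData", expected_calls_per_period=1000)
    print(params["AlarmName"], params["Threshold"])
```

To create the alarm, pass the dictionary to `boto3.client("cloudwatch").put_metric_alarm(**params)`. Add `AlarmActions` (for example, an SNS topic ARN) so that someone is actually notified.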

Step 2 - The Optimization Playbook, Bucket by Bucket

With your baseline in hand, you know which buckets are costing you the most. The sections below cover each one — what drives the cost, how to bring it down, and how to confirm it actually dropped.

Metrics: The Dimension Problem

Most metric cost comes from one place: the number of unique metric time series, which is driven by dimensions. As we covered in Part 1, each unique combination of metric name, namespace, and dimension values counts as a separate billable metric.

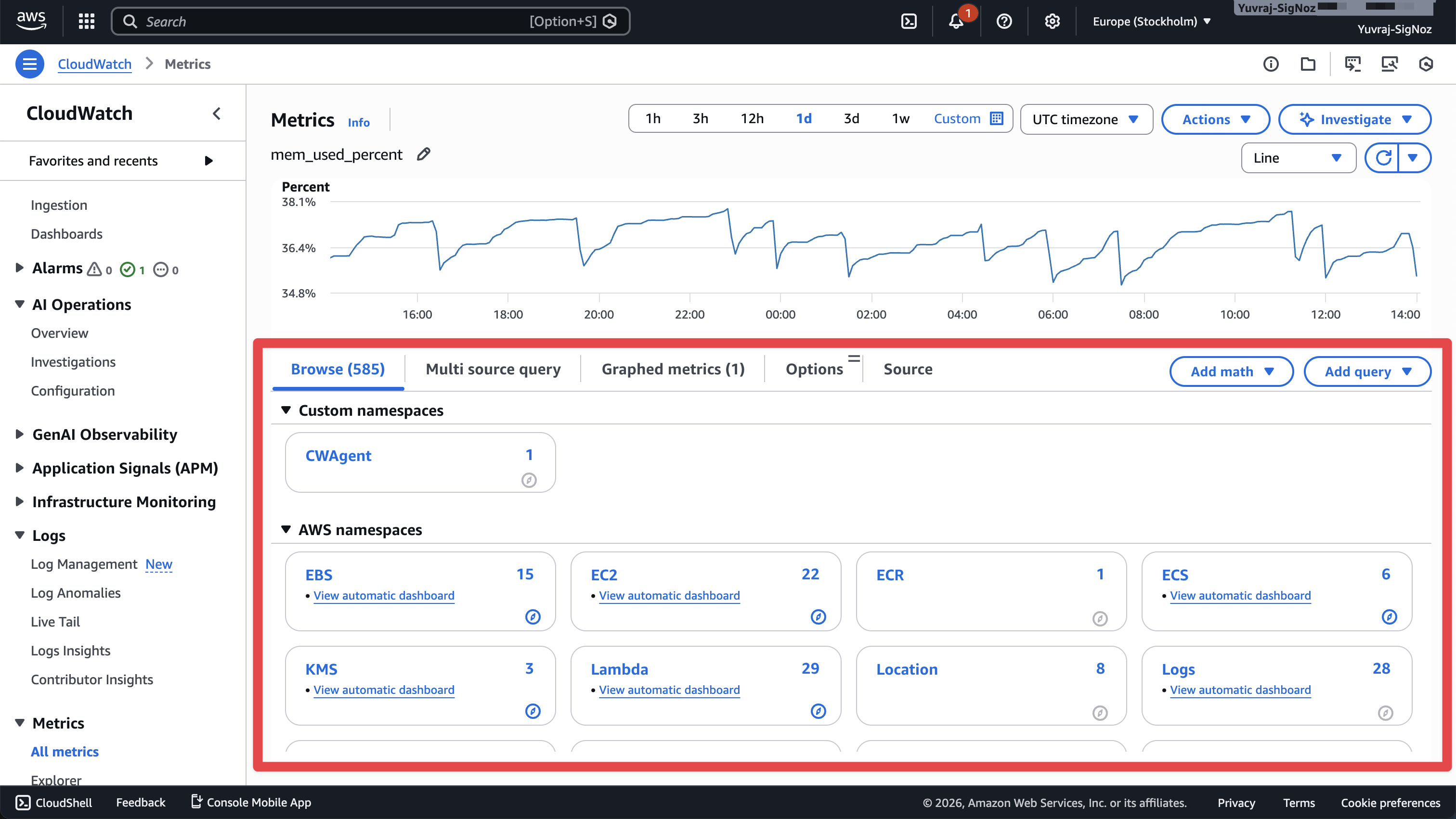

The first thing to check is whether you are paying for metrics nobody uses. Open Metrics → All metrics in the CloudWatch console and look for namespaces with metrics that have no associated alarms or dashboards. You can use the ListMetrics API to enumerate active metrics, then cross-reference with DescribeAlarms and GetDashboard to find metrics not attached to any alarm or dashboard. To check whether a metric is actually being read by your tooling, review CloudTrail for GetMetricData and GetMetricStatistics calls over the past 30 days. Note that ListMetrics only returns metrics that have received data in the past two weeks, so treat it as a view of currently active metrics, not a complete usage-history report.
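You can sketch the cross-referencing step in Python over responses you have already fetched. The function below takes ListMetrics `Metrics` entries, DescribeAlarms `MetricAlarms` entries, and raw GetDashboard `DashboardBody` JSON strings, and compares metrics by namespace, name, and exact dimension set. It skips dashboard rows written with the `"."`/`"..."` shorthand. Treat the output as a list of candidates to review, not as metrics that are safe to stop publishing.

```python
from __future__ import annotations

import json

# (namespace, metric name, frozenset of (dimension name, dimension value))
MetricKey = tuple


def metric_key(namespace: str, name: str, dimensions: list[dict]) -> MetricKey:
    return (namespace, name, frozenset((d["Name"], d["Value"]) for d in dimensions))


def keys_from_alarms(metric_alarms: list[dict]) -> set:
    """Metrics referenced by DescribeAlarms 'MetricAlarms' entries."""
    keys = set()
    for alarm in metric_alarms:
        if "MetricName" in alarm:  # single-metric alarm
            keys.add(metric_key(alarm["Namespace"], alarm["MetricName"],
                                alarm.get("Dimensions", [])))
        for query in alarm.get("Metrics", []):  # metric-math / anomaly alarms
            stat = query.get("MetricStat")
            if stat:
                m = stat["Metric"]
                keys.add(metric_key(m["Namespace"], m["MetricName"],
                                    m.get("Dimensions", [])))
    return keys


def keys_from_dashboard(body_json: str) -> set:
    """Metrics referenced by explicit rows in a dashboard body."""
    keys = set()
    for widget in json.loads(body_json).get("widgets", []):
        for row in widget.get("properties", {}).get("metrics", []):
            # Explicit form: [namespace, name, dim1, val1, ..., {render options}]
            parts = [p for p in row if isinstance(p, str)]
            if len(parts) < 2 or "." in parts or "..." in parts:
                continue  # expressions and shorthand rows are not resolved here
            namespace, name, *dims = parts
            pairs = [{"Name": dims[i], "Value": dims[i + 1]}
                     for i in range(0, len(dims) - 1, 2)]
            keys.add(metric_key(namespace, name, pairs))
    return keys


def unreferenced_metrics(listed: list[dict], alarms: list[dict],
                         dashboard_bodies: list[str]) -> set:
    """Listed metrics that no alarm or dashboard refers to."""
    used = keys_from_alarms(alarms)
    for body in dashboard_bodies:
        used |= keys_from_dashboard(body)
    listed_keys = {metric_key(m["Namespace"], m["MetricName"], m.get("Dimensions", []))
                   for m in listed}
    return listed_keys - used
```

Cross-check anything this returns against the CloudTrail read activity described above before you remove it.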

Next, review your PutMetricData calls for dimension cardinality. If any dimension has more than ~50 unique values (status codes, endpoint names, customer tiers), then evaluate whether you can aggregate at the application level instead. Publishing api.latency with dimensions {endpoint, status_code, region, customer_tier} creates far more billable metrics than aggregating down to {endpoint, status_code} and tracking the rest in your application logs.
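A quick way to see the effect before you change any code is to count the distinct dimension combinations in a sample of the datapoints you publish. The sketch below uses a synthetic sample in which every combination is observed: 20 endpoints, 8 status codes, 4 regions, and 3 customer tiers. It assumes the first pricing tier of $0.30 per metric-month. Real traffic may hit fewer combinations, so the full product is an upper bound.

```python
from itertools import product


def count_series(datapoints: list, dimensions: list) -> int:
    """Distinct dimension-value combinations, i.e. billable time series for one metric name."""
    return len({tuple(dp[d] for d in dimensions) for dp in datapoints})


def monthly_cost(series: int, price_per_metric: float = 0.30) -> float:
    # Assumes every series stays in the first custom-metric pricing tier.
    return series * price_per_metric


def sample_datapoints() -> list:
    # Synthetic api.latency datapoints covering every combination.
    endpoints = [f"/api/resource{i}" for i in range(20)]
    status_codes = ["200", "201", "204", "400", "401", "403", "404", "500"]
    regions = ["us-east-1", "us-west-2", "eu-west-1", "ap-south-1"]
    tiers = ["free", "pro", "enterprise"]
    return [
        {"endpoint": e, "status_code": s, "region": r, "customer_tier": t}
        for e, s, r, t in product(endpoints, status_codes, regions, tiers)
    ]


if __name__ == "__main__":
    points = sample_datapoints()
    full = count_series(points, ["endpoint", "status_code", "region", "customer_tier"])
    reduced = count_series(points, ["endpoint", "status_code"])
    print(f"all four dimensions: {full} series, ${monthly_cost(full):,.2f}/month")
    print(f"endpoint+status:     {reduced} series, ${monthly_cost(reduced):,.2f}/month")
```

With this sample, the four-dimension version produces 1,920 series against 160 for the two-dimension version: roughly $576 versus $48 per month for a single metric name.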

Finally, check whether you are paying for resolution you do not need. For example, EC2 detailed monitoring publishes metrics every 60 seconds and charges custom metric rates. Basic monitoring publishes every 5 minutes for free. For non-critical instances, switching to basic monitoring eliminates those charges entirely.
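To size the saving before you switch, multiply the number of non-critical instances by the number of metrics detailed monitoring publishes for each one. The sketch assumes 7 metrics per instance and the $0.30 first-tier rate. Both are parameters because the per-instance count varies by instance type, so check the `AWS/EC2` namespace for your own fleet. The instance records are hypothetical.

```python
from __future__ import annotations


def basic_monitoring_savings(instances: list[dict],
                             metrics_per_instance: int = 7,
                             price_per_metric: float = 0.30) -> tuple[list[str], float]:
    """Instance IDs to move to basic monitoring, and the monthly saving in USD."""
    # Assumption: each record says whether detailed monitoring is on and
    # whether the instance is critical enough to keep 1-minute metrics.
    candidates = [i["id"] for i in instances if i["detailed"] and not i["critical"]]
    return candidates, len(candidates) * metrics_per_instance * price_per_metric


if __name__ == "__main__":
    fleet = [
        {"id": "i-0aaa", "detailed": True, "critical": True},
        {"id": "i-0bbb", "detailed": True, "critical": False},
        {"id": "i-0ccc", "detailed": True, "critical": False},
        {"id": "i-0ddd", "detailed": False, "critical": False},
    ]
    ids, saving = basic_monitoring_savings(fleet)
    print(ids, f"${saving:.2f}/month")
```

You can then turn off detailed monitoring for the returned IDs with the EC2 `UnmonitorInstances` API (`aws ec2 unmonitor-instances --instance-ids ...`).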

To verify: compare CW:MetricMonitorUsage in Cost Explorer against your baseline after making changes. Since metrics are prorated hourly, reductions show up within the same billing cycle.

Logs: Cut the Ingestion, Not the Visibility

Log ingestion is almost always the largest line item on a CloudWatch bill. At $0.50 per GB, it does not take much volume to generate meaningful spend. The goal is to control what you ingest, how long you keep it, and where it ends up.

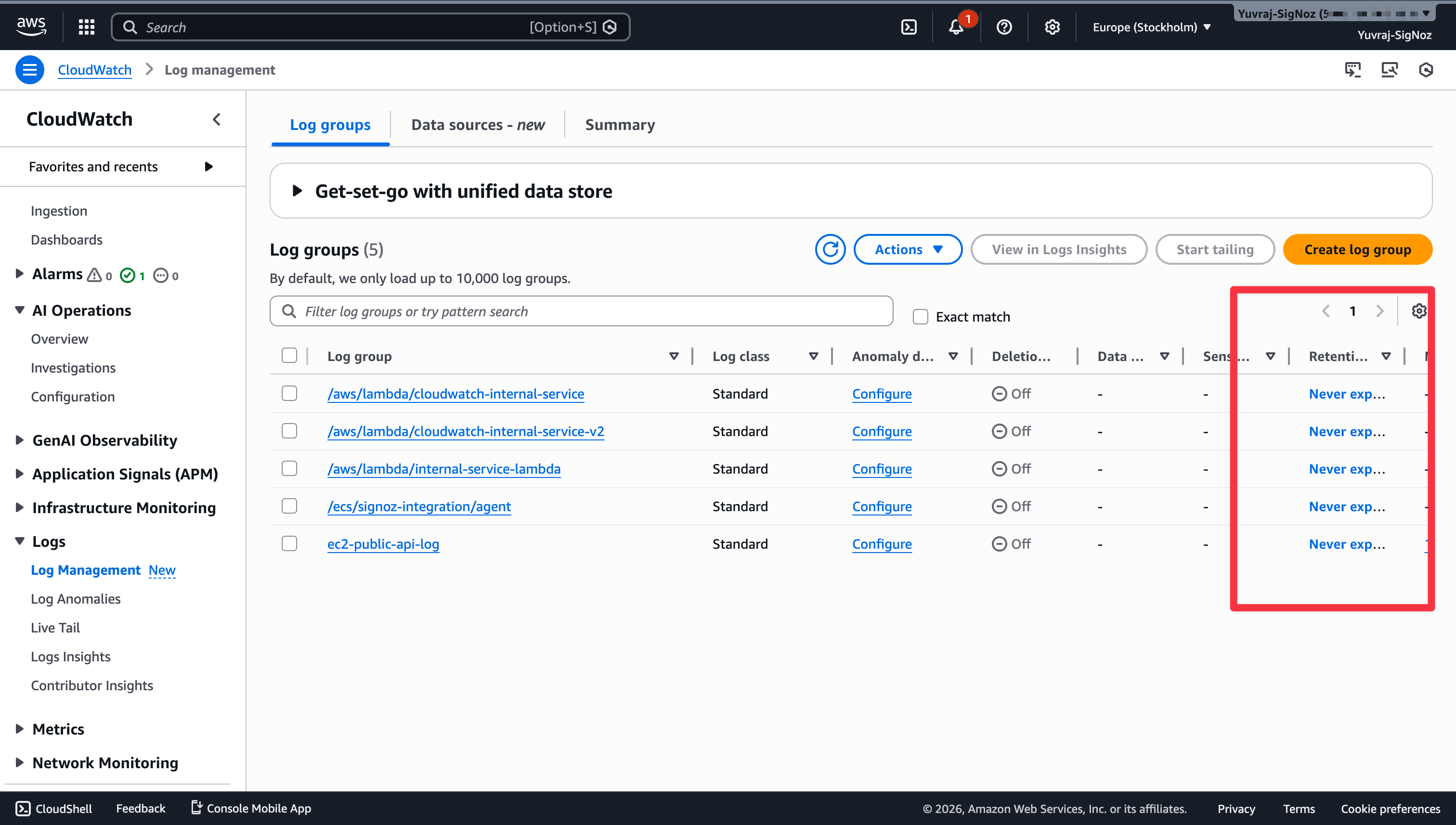

CloudWatch Logs default to indefinite retention, and most teams never change it. Storage at $0.03/GB-month is cheap enough that nobody notices, until a year of logs has accumulated and the storage line item keeps growing with no upper bound.

```bash
# List log groups with no retention set
aws logs describe-log-groups --query 'logGroups[?!retentionInDays].logGroupName'
```

Set retention to the minimum your compliance requires, for example 7 days for debug logs, 30 days for application logs, and 90 days for audit logs. If you need long-term storage, export to S3 first. S3 storage is cheaper than CloudWatch log storage, and lifecycle rules can move older objects to much cheaper archive classes.
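If your log groups follow a naming convention, you can apply this policy mechanically. In the sketch below, the name markers (`debug`, `audit`) and the 30-day default are hypothetical. The tuple of accepted values is the set that PutRetentionPolicy allows. Requested periods are rounded up to the next accepted value, so a compliance minimum is never undercut.

```python
VALID_RETENTION_DAYS = (1, 3, 5, 7, 14, 30, 60, 90, 120, 150, 180, 365, 400,
                        545, 731, 1096, 1827, 2192, 2557, 2922, 3288, 3653)

# Hypothetical naming convention: substring in the log group name -> days.
RETENTION_BY_MARKER = (("debug", 7), ("audit", 90))
DEFAULT_DAYS = 30  # application logs


def retention_for(log_group_name: str, compliance_minimum_days: int = 0) -> int:
    """Pick a retention period that CloudWatch Logs accepts for this log group."""
    days = DEFAULT_DAYS
    for marker, marker_days in RETENTION_BY_MARKER:
        if marker in log_group_name.lower():
            days = marker_days
            break
    days = max(days, compliance_minimum_days)
    # Round up to the nearest accepted value.
    for allowed in VALID_RETENTION_DAYS:
        if allowed >= days:
            return allowed
    raise ValueError(f"{days} days exceeds the longest supported retention")


if __name__ == "__main__":
    for name in ["/app/payments/debug", "/security/audit-trail", "/app/orders"]:
        print(name, retention_for(name))
```

You can then apply each result with `aws logs put-retention-policy --log-group-name <name> --retention-in-days <days>`.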

If you are sending logs to both CloudWatch and another destination, ask whether CloudWatch needs to retain them at all. Use subscription filters to forward logs directly to the final destination and set CloudWatch retention to 1 day. You pay for ingestion either way, but you stop paying for storage.

For log groups that are primarily archival (things you rarely query but need to keep), use the Infrequent Access log class at $0.25/GB, half the standard rate. A log group's class is set when the group is created and cannot be changed afterward, so adopting it means creating new log groups with that class and pointing producers at them. Logs Insights queries still work on this class. What you lose is Live Tail, metric filters, alarming from logs, and data protection, so only use it for log groups where you were never using those features anyway.
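To estimate what the Infrequent Access class would save, separate the log groups that rely on the features it lacks from those that do not. The sketch below uses the rates quoted above ($0.50 and $0.25 per GB) and flags only log groups that use none of the unsupported features. The feature labels and inventory records are hypothetical data that you would assemble yourself.

```python
from __future__ import annotations

STANDARD_PER_GB = 0.50
INFREQUENT_ACCESS_PER_GB = 0.25

# Hypothetical labels for features the Infrequent Access class does not support.
IA_UNSUPPORTED = {"live_tail", "metric_filters", "log_alarms", "data_protection"}


def ia_candidates(log_groups: list[dict]) -> tuple[list[str], float]:
    """Log groups that use none of the unsupported features, and the monthly saving in USD."""
    eligible = [g for g in log_groups if not (set(g["features"]) & IA_UNSUPPORTED)]
    gb = sum(g["gb_per_month"] for g in eligible)
    saving = gb * (STANDARD_PER_GB - INFREQUENT_ACCESS_PER_GB)
    return [g["name"] for g in eligible], saving


if __name__ == "__main__":
    inventory = [
        {"name": "/archive/batch-jobs", "gb_per_month": 400, "features": []},
        {"name": "/app/checkout", "gb_per_month": 100, "features": ["metric_filters"]},
    ]
    print(ia_candidates(inventory))
```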

The highest-leverage move is filtering at the source, because the cheapest log is the one you never ingest. Check whether DEBUG-level logs are enabled in production, whether health check requests are being logged on every hit, and whether duplicate logs are being sent from multiple agents or sidecars — all common sources of unnecessary ingestion volume.
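For applications that use Python's standard `logging` module, a handler-level filter is one way to implement source-side filtering. The sketch drops records below INFO and any message that mentions a health-check path. The paths `/health` and `/healthz` are assumptions, and `ListHandler` is a stand-in for whatever handler actually ships logs to CloudWatch. In production, the simplest fix for DEBUG noise is still setting the logger level to INFO.

```python
import logging


class DropNoiseFilter(logging.Filter):
    """Reject DEBUG records and health-check access logs before they are shipped."""

    def __init__(self, health_paths=("/health", "/healthz")):
        super().__init__()
        self.health_paths = tuple(health_paths)

    def filter(self, record: logging.LogRecord) -> bool:
        if record.levelno < logging.INFO:
            return False
        message = record.getMessage()
        return not any(path in message for path in self.health_paths)


class ListHandler(logging.Handler):
    """Stand-in for a CloudWatch-bound handler: keeps messages in memory."""

    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record: logging.LogRecord) -> None:
        self.messages.append(record.getMessage())


def demo() -> list:
    logger = logging.getLogger("demo.source_filtering")
    logger.setLevel(logging.DEBUG)
    logger.propagate = False
    handler = ListHandler()
    handler.addFilter(DropNoiseFilter())
    logger.addHandler(handler)
    try:
        logger.debug("cache miss key=42")
        logger.info("GET /healthz 200")
        logger.info("GET /orders/7 200")
        logger.warning("slow query took 1.8s")
    finally:
        logger.removeHandler(handler)
    return handler.messages


if __name__ == "__main__":
    print(demo())
```

The filter is attached to the handler rather than the logger, so local console output can stay verbose while the shipped stream stays lean.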

To verify: monitor ingestion volume with the IncomingBytes metric (AWS/Logs namespace) on each log group, or check the DataProcessing-Bytes usage type in Cost Explorer. Changes should be visible within 24-48 hours.

Alarms: Audit the Orphans, Downgrade the Anomaly Detection

Alarm costs are driven by two things: how many you have, and what type they are. The first problem is usually orphaned alarms, the ones targeting metrics or resources that no longer exist.

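Alarms stuck in INSUFFICIENT_DATA for weeks are usually pointing at deleted resources and cost money every month for zero value. Confirm that the underlying metric is not simply sparse (for example, one that is only published when errors occur), then delete them.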
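Here is a minimal sketch for building that list. It assumes you have already collected `MetricAlarms` entries from DescribeAlarms; boto3 returns `StateUpdatedTimestamp` as a timezone-aware datetime. The 14-day cutoff is an arbitrary choice, and the output is a review list, not something to delete automatically.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone


def stale_insufficient_data(metric_alarms: list[dict], now: datetime,
                            min_age: timedelta = timedelta(days=14)) -> list[str]:
    """Names of alarms that have sat in INSUFFICIENT_DATA for at least min_age."""
    return sorted(
        a["AlarmName"]
        for a in metric_alarms
        if a["StateValue"] == "INSUFFICIENT_DATA"
        and now - a["StateUpdatedTimestamp"] >= min_age
    )


if __name__ == "__main__":
    now = datetime(2026, 2, 1, tzinfo=timezone.utc)
    alarms = [
        {"AlarmName": "old-lb-5xx", "StateValue": "INSUFFICIENT_DATA",
         "StateUpdatedTimestamp": now - timedelta(days=40)},
        {"AlarmName": "new-queue-depth", "StateValue": "INSUFFICIENT_DATA",
         "StateUpdatedTimestamp": now - timedelta(days=2)},
        {"AlarmName": "api-cpu", "StateValue": "OK",
         "StateUpdatedTimestamp": now - timedelta(days=90)},
    ]
    print(stale_insufficient_data(alarms, now))
```

In practice, you would feed it the pages from boto3's `describe_alarms` paginator (with `StateValue="INSUFFICIENT_DATA"`) and pass `datetime.now(timezone.utc)` as `now`. `delete_alarms(AlarmNames=[...])` then removes the ones you confirm.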

The second problem is anomaly detection alarms used where static thresholds would work fine. As we covered in Part 1, each anomaly detection alarm uses 3 metrics internally (the metric plus upper and lower bounds), costing $0.30/month at standard resolution (3× the standard rate) or $0.90/month at high resolution. If a metric has stable, well-understood behavior (CPU above 80%, disk above 90%), a static threshold does the same job at a fraction of the cost. Reserve anomaly detection for metrics with genuinely seasonal or unpredictable baselines.

If you have groups of alarms that a human always checks together, consider consolidating their notifications into a single composite alarm ($0.50/month). The underlying metric alarms still exist and are still billed, but the composite alarm can replace redundant notification alarms and reduce operational noise.

To verify: use describe-alarms to count your alarms by type before and after the change. Anomaly detection replacements show up in the next billing cycle.
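Counting by type is easier with a small classifier over the DescribeAlarms response. The sketch treats an alarm as anomaly detection when it has a `ThresholdMetricId` or an `ANOMALY_DETECTION_BAND` expression, and treats periods under 60 seconds as high resolution. It prices alarms at the rates quoted above: $0.10 standard, $0.30 high resolution, 3 metrics per anomaly detection alarm, and $0.50 per composite. It ignores the free tier and assumes one billed metric per static alarm, so it undercounts alarms on multi-metric math expressions.

```python
from __future__ import annotations

from collections import Counter

STANDARD_RATE = 0.10   # USD per alarm metric per month
HIGH_RES_RATE = 0.30
COMPOSITE_RATE = 0.50


def classify(alarm: dict) -> tuple[str, str]:
    """('anomaly' or 'static', 'high' or 'standard') for a MetricAlarms entry."""
    queries = alarm.get("Metrics", [])
    is_anomaly = "ThresholdMetricId" in alarm or any(
        "ANOMALY_DETECTION_BAND" in q.get("Expression", "") for q in queries)
    # Single-metric alarms carry Period at the top level; math alarms per query.
    period = alarm.get("Period") or next(
        (q["MetricStat"]["Period"] for q in queries if "MetricStat" in q), 60)
    return ("anomaly" if is_anomaly else "static",
            "high" if period < 60 else "standard")


def alarm_inventory(response: dict) -> tuple[Counter, float]:
    """Counts by (type, resolution) and estimated monthly cost in USD."""
    counts: Counter = Counter()
    total = 0.0
    for alarm in response.get("MetricAlarms", []):
        kind, resolution = classify(alarm)
        counts[(kind, resolution)] += 1
        rate = HIGH_RES_RATE if resolution == "high" else STANDARD_RATE
        total += rate * (3 if kind == "anomaly" else 1)
    composites = len(response.get("CompositeAlarms", []))
    if composites:
        counts[("composite", "n/a")] = composites
        total += composites * COMPOSITE_RATE
    return counts, total
```

If you run it against the same account before and after a cleanup, the change in `total` approximates the change in alarm spend.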

Dashboards: Consolidate or Delete

Dashboards are usually a small line item ($3.00 each beyond the 3 free), but they accumulate in teams that create one per microservice per environment. If you have 50 dashboards, that is $141/month.

Check whether multiple dashboards can be merged: a single dashboard monitoring 3 related services is cheaper than 3 separate ones. Any dashboard nobody has viewed in 60+ days is probably safe to delete.

API Requests: Watch for Polling Loops

API request costs are low for most teams, but they spike when external tools poll CloudWatch at high frequency. Third-party monitoring tools or custom scripts that call GetMetricData or GetMetricStatistics every few seconds can generate millions of requests per month.

If you have tools scraping CloudWatch to feed a separate system, consider switching to Metric Streams (push-based) instead of polling (pull-based), though note that Metric Streams have their own per-update cost. If polling is the only option, check whether you can reduce the frequency. Changing a 10-second interval to 60 seconds is a 6× reduction in API calls.
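The arithmetic behind polling cost is simple enough to check before you change a scraper's interval. The sketch assumes a 30-day month, ignores the free tier, and uses $0.01 per 1,000 requests for GetMetricStatistics. GetMetricData is billed per metric requested at $0.01 per 1,000, which is what the `metrics_per_call` parameter models.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumed 30-day month


def polling_cost(interval_seconds: int, metrics_per_call: int = 1,
                 price_per_1k: float = 0.01) -> tuple:
    """Monthly API calls and USD cost for one poller at a fixed interval."""
    calls = SECONDS_PER_MONTH // interval_seconds
    billed_units = calls * metrics_per_call  # requests, or metrics for GetMetricData
    return calls, billed_units / 1000 * price_per_1k


if __name__ == "__main__":
    for interval in (10, 60):
        calls, cost = polling_cost(interval, metrics_per_call=100)
        print(f"every {interval:>2}s: {calls:,} calls, ${cost:,.2f}/month")
```

For a poller requesting 100 metrics per GetMetricData call, moving from 10 to 60 seconds takes it from about $259 to about $43 per month.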

Vended Logs: Filter Before Delivery

VPC Flow Logs, Route 53 Resolver query logs, and API Gateway access logs can generate massive volumes. Vended logs are billed per GB delivered, and the effective cost depends on the destination. Pricing tiers and rates can differ by destination type and region, and destinations like S3 or Firehose add their own service charges. Regardless of destination, you can reduce what gets delivered in the first place.

Consider using flow log filtering to capture only rejected traffic or specific subnets, and adjusting the aggregation interval based on your actual analysis needs. Every GB you do not generate is a GB you do not pay to deliver or store.
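As a sketch, the parameters below describe a flow log that records only rejected traffic for specific subnets, shaped for boto3's EC2 `create_flow_logs` call. The subnet IDs, log group name, and IAM role ARN are placeholders. `MaxAggregationInterval` accepts 60 or 600 seconds. The default of 600 produces fewer records, so choose 60 only when you need one-minute granularity.

```python
from __future__ import annotations


def reject_only_flow_log(subnet_ids: list[str], log_group: str, role_arn: str,
                         aggregation_seconds: int = 600) -> dict:
    """Keyword arguments for ec2.create_flow_logs capturing only REJECT traffic."""
    if aggregation_seconds not in (60, 600):
        raise ValueError("MaxAggregationInterval must be 60 or 600 seconds")
    return {
        "ResourceIds": list(subnet_ids),
        "ResourceType": "Subnet",
        "TrafficType": "REJECT",  # skip ACCEPT records entirely
        "LogDestinationType": "cloud-watch-logs",
        "LogGroupName": log_group,
        "DeliverLogsPermissionArn": role_arn,
        "MaxAggregationInterval": aggregation_seconds,
    }


if __name__ == "__main__":
    params = reject_only_flow_log(
        ["subnet-0123456789abcdef0"],                          # placeholder
        "/vpc/flow-logs/rejected",                             # placeholder
        "arn:aws:iam::123456789012:role/flow-logs-delivery",   # placeholder
    )
    print(params)
```

Pass the result as `boto3.client("ec2").create_flow_logs(**params)`.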

If you primarily need long-term storage and occasional batch analysis rather than real-time querying, consider routing vended logs to S3 instead of CloudWatch Logs. You will still incur vended-log delivery charges, and S3 adds its own storage and request costs — but S3 Standard storage runs ~$0.023/GB-month compared to CloudWatch's $0.03/GB-month, and S3 lifecycle policies can transition old logs to Glacier or Deep Archive for even cheaper archival. Compare totals for your destination and region before committing.
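A rough steady-state comparison helps with that decision. The sketch holds a fixed monthly log volume for a fixed retention period and prices the resident data with the rates quoted above. The $0.00099/GB-month Glacier Deep Archive rate is an assumption to verify for your region. Delivery, request, retrieval, and minimum-duration charges are all left out, and the 1 TB/month volume is illustrative.

```python
def steady_state_storage(gb_per_month: float, retention_months: int, rate: float) -> float:
    """Monthly bill once retention_months of data is resident at a flat per-GB rate."""
    return gb_per_month * retention_months * rate


def s3_with_lifecycle(gb_per_month: float, retention_months: int, months_in_standard: int,
                      standard_rate: float = 0.023, archive_rate: float = 0.00099) -> float:
    """Same volume, kept in S3 Standard for a while and then in a colder class."""
    hot = min(months_in_standard, retention_months)
    cold = retention_months - hot
    return gb_per_month * (hot * standard_rate + cold * archive_rate)


if __name__ == "__main__":
    volume, months = 1000, 12  # illustrative: 1 TB/month kept for a year
    print("CloudWatch Logs:", round(steady_state_storage(volume, months, 0.03), 2))
    print("S3 Standard:    ", round(steady_state_storage(volume, months, 0.023), 2))
    print("S3 + lifecycle: ", round(s3_with_lifecycle(volume, months, 1), 2))
```

Under these assumptions, a year of 1 TB/month costs about $360/month to hold in CloudWatch Logs, $276 in S3 Standard, and roughly $34 with one month in Standard followed by Deep Archive.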

Step 3 - Why Optimization Sometimes Fails

You run through the playbook, cut metrics, set retention policies, remove orphan alarms — and then three months later, the bill is back where it started (or higher). This is frustrating, and it happens because some cost growth is structural rather than operational.

Your infrastructure is growing

More services, more instances, more log volume. If your application fleet grows 20% quarter-over-quarter, your CloudWatch costs grow by roughly 20% as well, even if your per-unit costs stay the same. Optimization buys you headroom, but it does not change the growth curve.
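A quick calculation shows why a one-time cut rarely holds. Assuming spend scales linearly with fleet size, the sketch counts how many quarters of compound growth it takes to return to the pre-optimization bill. With a 30% cut and 20% quarterly growth (illustrative numbers), the answer is two quarters.

```python
def quarters_to_regain(cut_fraction: float, quarterly_growth: float) -> int:
    """Quarters until a bill reduced by cut_fraction grows back to its old level."""
    if not 0 <= cut_fraction < 1 or quarterly_growth <= 0:
        raise ValueError("need 0 <= cut_fraction < 1 and positive growth")
    relative_cost, quarters = 1.0 - cut_fraction, 0
    while relative_cost < 1.0:
        relative_cost *= 1.0 + quarterly_growth  # compound growth per quarter
        quarters += 1
    return quarters


if __name__ == "__main__":
    print(quarters_to_regain(0.30, 0.20))
```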

New features get enabled without cost awareness

A teammate enables Container Insights on a new cluster, or a deployment pipeline starts enabling detailed monitoring on every instance. Each of these decisions is individually reasonable, but they compound. Without a process that reviews CloudWatch cost impact before enabling features, optimization becomes a recurring cleanup job rather than a permanent fix.

Cardinality creeps back in

You clean up metric dimensions today, but a new service deployment next month adds a high-cardinality tag. Without guardrails (linting PutMetricData calls, enforcing allowlists for dimensions), the problem returns.
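One way to add that guardrail is a check that runs in CI, or inside a thin wrapper around your metrics client, and rejects PutMetricData payloads whose dimensions are not on an allowlist. The allowlist contents and the `lint_metric_data` helper are hypothetical. The payload shape matches the `MetricData` entries that PutMetricData accepts.

```python
from __future__ import annotations

# Hypothetical per-metric allowlist of dimension names.
ALLOWED_DIMENSIONS: dict[str, set[str]] = {
    "api.latency": {"endpoint", "status_code"},
    "queue.depth": {"queue_name"},
}


def lint_metric_data(metric_data: list[dict],
                     allowlist: dict[str, set[str]] = ALLOWED_DIMENSIONS) -> list[str]:
    """Return one message per metric or dimension that is not allowlisted."""
    problems = []
    for datum in metric_data:
        allowed = allowlist.get(datum["MetricName"])
        if allowed is None:
            problems.append(f"{datum['MetricName']}: metric is not allowlisted")
            continue
        for dim in datum.get("Dimensions", []):
            if dim["Name"] not in allowed:
                problems.append(f"{datum['MetricName']}: dimension '{dim['Name']}' not allowed")
    return problems


if __name__ == "__main__":
    payload = [{
        "MetricName": "api.latency",
        "Dimensions": [{"Name": "endpoint", "Value": "/orders"},
                       {"Name": "customer_id", "Value": "c-8812"}],
        "Value": 123.0,
        "Unit": "Milliseconds",
    }]
    for problem in lint_metric_data(payload):
        print(problem)
```

A non-empty result can fail the build or block the publish call, which keeps a new high-cardinality dimension from reaching your bill unnoticed.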

The optimization ceiling exists

There is a floor below which CloudWatch costs cannot go for a given workload. If you run 50 services that each need metrics, logs, and alarms, the base cost of monitoring those 50 services in CloudWatch is fixed by the pricing structure. You can optimize the usage, but you cannot change the rates.

When you hit this ceiling, meaning you have already removed waste, consolidated alarms, filtered logs, and reduced dimensions, the remaining cost is the structural price of using CloudWatch as your observability platform. At this point, the question changes from "how do I optimize my CloudWatch usage?" to "is CloudWatch the right pricing model for my workload?"

When Optimization Is Not Enough - Evaluating the Platform

If you have done the work in this playbook and your CloudWatch costs are still above your budget, the issue may not be your usage patterns. CloudWatch's pricing model bills across 7+ independent axes, each with its own unit, rate, and free tier, and the total grows with every new service you monitor.

This is where evaluating an alternative platform becomes a practical decision. Teams that have gone through this process typically report three pain points that optimization alone cannot fix:

Pricing unpredictability: CloudWatch's multi-dimensional billing makes it hard to forecast costs. Leaving DEBUG-level or verbose INFO-level logging enabled in production can produce large daily ingest bills. Adding a single high-cardinality dimension to custom metrics can multiply costs overnight. Teams report spending more time understanding and predicting their CloudWatch bill than actually reducing it.

Incident-time UX friction: During an outage, you need logs, metrics, traces, and service health in one place. CloudWatch spreads these across separate consoles with different query languages and navigation patterns. Trace-to-log correlation often requires manually copying IDs between interfaces. The context-switching tax is highest exactly when speed matters most.

Per-query billing during investigations: Logs Insights charges per GB scanned, and API polling adds incremental costs. During incidents, when engineers run dozens of exploratory queries against large log groups, the billing model works against the urgency. Teams report hesitating before running broad queries because of cost awareness, which slows down debugging.
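To make that concrete: Logs Insights is priced at $0.005 per GB scanned in US East (N. Virginia). The sketch below multiplies an assumed number of incident queries by the data each one scans. The 40 queries over 500 GB are illustrative, not measured.

```python
def insights_scan_cost(queries: int, gb_scanned_per_query: float,
                       price_per_gb: float = 0.005) -> float:
    """USD cost of a batch of Logs Insights queries."""
    return queries * gb_scanned_per_query * price_per_gb


if __name__ == "__main__":
    print(f"${insights_scan_cost(40, 500):,.2f}")
```

Forty broad queries over 500 GB each come to about $100 for a single investigation. Narrowing the time range or the set of log groups reduces the GB scanned per query and therefore the cost.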

SigNoz as a practical alternative

SigNoz is a unified observability platform built natively on OpenTelemetry that combines metrics, traces, and logs in a single interface with usage-based pricing:

- Logs and traces: $0.3 per GB ingested. Pricing is ingestion-based with configurable hot/cold retention — there is no per-query scan charge like CloudWatch Logs Insights.

- Metrics: $0.1 per million samples. Dashboards and alerts are included, not billed as additional line items.

- No per-host or per-user pricing: Your bill scales with data volume, not infrastructure size or team headcount.

Since SigNoz is built on OpenTelemetry, if you are already using OTel collectors or SDKs, the instrumentation stays the same. You point your exporter to SigNoz instead of CloudWatch.

Getting AWS data into SigNoz

The most common adoption path teams follow is to keep CloudWatch as a telemetry source for AWS-managed services while making SigNoz the primary investigation and alerting layer:

- CloudWatch Metric Streams push AWS infrastructure metrics to SigNoz via the OTel Collector.

- CloudWatch Log subscriptions forward logs to SigNoz.

- Application-level OTel instrumentation sends traces and metrics directly to SigNoz, bypassing CloudWatch entirely.

SigNoz offers a one-click AWS integration that uses CloudFormation and Firehose to set up the data pipeline automatically. For teams that want tighter cost control, a manual OTel Collector setup lets you choose exactly what gets collected and forwarded.

Start by sending a copy of your data to SigNoz while keeping CloudWatch active. Verify that SigNoz covers your observability needs, then gradually shift your primary monitoring over. This avoids a risky all-at-once migration.

Exporting data via Metric Streams or log subscriptions adds AWS charges. For Metric Streams, you pay the per-update fee ($0.003/1K updates). For log forwarding via subscription filters, you still pay CloudWatch Logs ingestion ($0.50/GB) plus whatever the downstream destination charges. These AWS-side costs go away only when you stop routing data through CloudWatch and instrument directly with OTel. The one-click integration path incurs additional AWS charges compared to the manual OTel Collector setup, so factor this into your migration plan.
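Here is a back-of-the-envelope estimate of those AWS-side charges, using the rates above. The sketch assumes each streamed metric produces one update per minute over a 30-day month, and it leaves out Firehose and any destination charges. The metric count and log volume are illustrative.

```python
from __future__ import annotations

MINUTES_PER_MONTH = 30 * 24 * 60  # assumed 30-day month


def export_cost(streamed_metrics: int, forwarded_log_gb: float,
                price_per_1k_updates: float = 0.003,
                ingestion_per_gb: float = 0.50) -> dict[str, float]:
    """Monthly AWS-side USD cost of Metric Streams plus log ingestion before forwarding."""
    updates = streamed_metrics * MINUTES_PER_MONTH  # assumption: one update per metric per minute
    streams = updates / 1000 * price_per_1k_updates
    logs = forwarded_log_gb * ingestion_per_gb
    return {"metric_streams": streams, "log_ingestion": logs, "total": streams + logs}


if __name__ == "__main__":
    print(export_cost(streamed_metrics=1000, forwarded_log_gb=200))
```

Under these assumptions, streaming 1,000 metrics costs about $130/month, and forwarding 200 GB of logs adds $100 of ingestion before any SigNoz charges.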

Get Started with SigNoz

You can choose between various deployment options in SigNoz. The easiest way to get started is SigNoz Cloud. We offer a 30-day free trial account with access to all features.

Those who have data privacy concerns and can't send their data outside their infrastructure can sign up for either the enterprise self-hosted or the BYOC offering.

Those who have the expertise to manage SigNoz themselves or just want to start with a free self-hosted option can use our community edition.

Conclusion

CloudWatch cost optimization follows a loop:

- Measure — Use Cost Explorer and Usage Metrics to find the biggest buckets.

- Reduce — Apply bucket-specific moves: dimension cleanup, retention policies, alarm audit, log filtering.

- Verify — Check that costs actually dropped using the same measurement tools.

- Repeat — New deploys, new features, and infrastructure growth reintroduce costs. Optimization is ongoing.

When the loop stops producing results, because you have cut the waste and the remaining cost is the structural price of CloudWatch's billing model, that is when you evaluate whether a platform with a different pricing structure is the more sustainable path.

You've reached the end of the series!

Congratulations on completing "CloudWatch Pricing & Cost Optimization Series".

Note: All prices are for the US East (N. Virginia) region as of February 2026. Verify against official pricing pages for current rates.