Full-Stack Observability Essentials - A Comprehensive Guide

Full-stack observability is a crucial aspect of modern IT environments. It provides a holistic view of your entire technology stack, enabling you to identify and resolve issues quickly. This comprehensive guide will walk you through the essentials of full-stack observability, its key components, and how to implement it effectively in your organization.

What is Full-Stack Observability?

Imagine you work at a tech company using an Application Performance Monitoring (APM) tool to track application performance, a separate tool for infrastructure monitoring, and another for network monitoring. When a latency issue arises, the APM tool indicates that the application is running slow, but it doesn't reveal whether the problem lies with the database, the network, or the server resources. This forces you to manually sift through logs and metrics from different sources to identify the root cause of the problem.

A better way to approach this scenario is to use Full-Stack Observability (FSO).

Once configured with your application, you can simply open its dashboard. The FSO tool will show you that the database queries are taking longer than usual and highlight increased network latency due to a recent configuration change. With these insights, you can quickly identify the root cause and address the issues, whether it's optimizing the database queries or reverting the network configuration change.

FSO helps reduce mean time to resolution (MTTR) and minimize downtime, which in turn lowers the cost of outages.

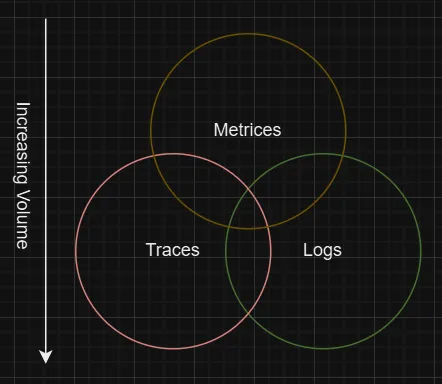

The three pillars of full-stack observability are:

- Logs: Detailed records of events and actions within your system.

- Metrics: Quantitative measurements of system performance and behavior.

- Traces: Records of how requests flow through your application.

Installing an FSO tool on your organization's infrastructure and configuring your application to send it logs, metrics, and traces provides a holistic view of the system. Once the tool is integrated with the system, you can also create custom dashboards to monitor critical metrics and set up alerts for any anomalies.

Key Components of Full-Stack Observability

Let's dive deeper into the three key components of full-stack observability:

Logs: These are time-stamped records of events in your system. Logs provide context for what's happening in your application. They include details such as:

- Error messages

- User actions

- System events

Example log entry:

2023-05-12 14:30:15 [INFO] User 'john_doe' logged in successfully

Logs frequently contain intricate details about how an application processes requests, so parsing them can also reveal valuable insights into the application's performance. An effective observability solution should support log analysis and make it easy to capture log data and correlate it with metric and trace data, especially for security-related incidents. Good logging practice is also vital to powering an observability platform across your system, which is why log management covers log generation, collection, storage, and retention. It's often recommended to use a machine-learning-based optimization tool to ensure that you aren't paying more for your logs than you need to. One of the best practices that enhances the role of logs in observability is log aggregation.

Imagine facing an outage where you’re trying to SSH into multiple servers to manually pinpoint the root cause and piece together the issue. On the other hand, imagine having a system where all your logs are systematically collated and centralized in a log management service, allowing for a swift, comprehensive overview and efficient troubleshooting.

Log aggregation is a powerful way to cut through the “noise” that a complex, distributed system produces. It brings scattered logs into a centralized repository for easier access, monitoring, and analysis.

A prerequisite of log aggregation is that the system generates functional, structured logs. The following list outlines what log aggregation requires:

- Log sources: Identify the log sources you need to aggregate (application logs, database logs, security logs, etc.) to ensure complete visibility.

- Log management solution: Determine the destinations and methods for sending logs collected from different sources.

- Log collection: Devise an aggregation strategy that collects and transports data automatically. Construct an automated logging pipeline for collecting, parsing, and shipping the logs, or configure your application's logging framework to transmit logs.

- Parsing, filtering, and transmitting the logs: Logs are often sent to different systems through a pipeline, and distinct systems might follow different logging standards. It is therefore necessary to convert logs into a common format so that analysis and correlation are possible once the data is centralized. Defining parsing rules, filtering out irrelevant or sensitive data, standardizing key attributes, deduplicating or sampling logs, normalizing field names and attributes, and structuring the data in a consistent format all help achieve that goal.

- Log centralization: Once logs are collected and processed, they can be monitored, analyzed, and used for alerting. To reduce data loss and maintain data integrity if aggregation is interrupted, consider employing a queuing mechanism.
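
The parsing, filtering, and normalizing step can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: it assumes the line format of the example log entry shown earlier, and the `parse_line` helper, the `source` field, and the rule that drops DEBUG lines are all hypothetical choices made for the example.

```python
import json
import re

# Assumed line format, taken from the example log entry earlier in this guide:
# "<date> <time> [<LEVEL>] <message>"
LOG_PATTERN = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) "
    r"\[(?P<level>[A-Z]+)\] (?P<message>.*)$"
)

# Hypothetical filter rule: drop low-value levels before shipping.
DROPPED_LEVELS = {"DEBUG"}


def parse_line(line, source):
    """Parse one raw log line into a normalized record.

    Returns None when the line does not match the pattern or is filtered out.
    """
    match = LOG_PATTERN.match(line.strip())
    if match is None:
        return None
    record = match.groupdict()
    if record["level"] in DROPPED_LEVELS:
        return None
    # Standardize key attributes so every source uses the same field names.
    record["level"] = record["level"].lower()
    record["source"] = source
    return record


raw = "2023-05-12 14:30:15 [INFO] User 'john_doe' logged in successfully"
print(json.dumps(parse_line(raw, source="auth-service")))
```

Emitting one JSON object per line keeps the output easy to ship to most log management services, though the exact format your backend expects may differ.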

Metrics: These are numerical measurements of your system's performance over time. Metrics help you understand trends and patterns in your system's behavior. Common metrics include:

- CPU usage

- Memory consumption

- Request latency

- Response time

- Error Rates

The metrics you collect depend on your systems, configurations, and needs. However, four metrics, often called the four golden signals, are considered essential for observability:

- Latency: The total time it takes for a request to be processed.

- Saturation: How heavily resources such as CPU or memory are being used.

- Traffic: The volume and flow of requests in the system.

- Errors: The rate of errors and exceptions occurring in the system.
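
As a rough illustration, the four signals listed above can be derived from raw request records for a single time window. The sketch below is an assumption-laden example: the `Request` type, the `golden_signals` function, the choice of the 95th percentile for latency, and the use of CPU as the saturation resource are all hypothetical, and it expects at least one request in the window.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    duration_ms: float
    status: int  # HTTP status code


def golden_signals(requests, window_seconds, cpu_used, cpu_capacity):
    """Summarize latency, traffic, errors, and saturation for one window.

    Assumes `requests` is non-empty.
    """
    durations = sorted(r.duration_ms for r in requests)
    # Nearest-rank 95th percentile of request duration.
    rank = math.ceil(0.95 * len(durations))
    # Treat 5xx responses as errors (an assumption for this sketch).
    errors = sum(1 for r in requests if r.status >= 500)
    return {
        "latency_p95_ms": durations[rank - 1],
        "traffic_rps": len(requests) / window_seconds,
        "error_rate": errors / len(requests),
        "saturation": cpu_used / cpu_capacity,
    }


window = [Request(10, 200), Request(20, 200), Request(30, 500), Request(40, 200)]
print(golden_signals(window, window_seconds=2, cpu_used=3, cpu_capacity=4))
```

In practice a metrics backend computes these aggregates for you; the point of the sketch is only to show what each signal measures.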

Example metric:

http_requests_total{method="GET", endpoint="/api/users"} 1234

Metrics help you quickly identify the scope and impact of an incident. After the issue is resolved, a detailed analysis of the same metrics can help reveal the root cause. They are useful for performance monitoring in full-stack observability for several reasons:

- They are instrumental in performance optimization and tuning. By tracking latency and throughput, they pinpoint performance bottlenecks within an application's subsystems, helping you make informed decisions about where to allocate resources for maximum impact.

- Querying a time-series database is relatively faster than querying log data, which makes metrics a more efficient basis for generating alerts.

- Metrics can also support business intelligence, since metrics related to user engagement, feature usage, and similar activity give valuable insights into customer preferences.

Traces: These track the journey of a request through your system, especially in distributed architectures. Traces help you understand:

- The path of a request

- Time spent in each component

- Bottlenecks in your system

- The sequence of services involved

In environments characterized by distributed architectures, tracing is critical to understanding and optimizing complex software systems.

Let's consider a scenario in which you'd like to see the impact of cache hits and misses. You can sample a fraction of user HTTP requests and use tracing to analyze how much time is spent interacting with underlying systems like databases and caches.

Tracing provides insights into the operational dynamics of an application without overwhelming the system with data, because it considers only specific functions or requests of interest and tracks their execution time. This lets you understand the intricate paths requests take, including interactions with databases and caches, and how these interactions influence overall response times. While metrics analysis focuses on overall system health and efficiency, distributed tracing dives deeper into interactions between services within a distributed architecture. It uses unique IDs for requests across remote procedure calls (RPCs), gathering trace data from various processes and servers. This allows for tracking how requests move through different parts of the system, providing a comprehensive view of their journey across service boundaries. This approach enhances visibility and simplifies monitoring and troubleshooting in complex, distributed environments and microservice architectures, where requests travel through multiple loosely coupled services.
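
One common way to carry a unique request ID across service boundaries is the W3C Trace Context `traceparent` HTTP header, which has the form `00-<32 hex trace ID>-<16 hex span ID>-<2 hex flags>`. The guide does not prescribe a particular propagation format, so treat the following as a simplified sketch under that assumption. The helper names are hypothetical, and only version `00` headers are handled.

```python
import re
import secrets

# Version 00 of the traceparent header: version, trace ID, parent span ID, flags.
TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent():
    """Start a new trace: fresh trace ID, fresh span ID, sampled flag set."""
    trace_id = secrets.token_hex(16)  # 16 random bytes -> 32 hex characters
    span_id = secrets.token_hex(8)    # 8 random bytes -> 16 hex characters
    return f"00-{trace_id}-{span_id}-01"


def child_traceparent(incoming):
    """Build the header for an outgoing call made while handling `incoming`.

    The trace ID is preserved so all hops share it; the span ID is new.
    If the incoming header is missing or malformed, a new trace is started.
    """
    match = TRACEPARENT.match(incoming or "")
    if match is None:
        return new_traceparent()
    trace_id, _parent_span_id, flags = match.groups()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"


root = new_traceparent()
print(root)
print(child_traceparent(root))
```

Instrumentation libraries normally handle this propagation for you; the sketch only shows why every hop of a request can be tied back to one trace.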

Note: A distributed trace consists of several spans, each representing a fundamental unit that captures a specific operation within a distributed system. These operations can range from an HTTP request to a database call or the processing of a message from a queue.

Example trace visualization:

[Frontend] (10ms) --> [API Gateway] (20ms) --> [User Service] (50ms) --> [Database] (30ms)
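
To make the example concrete, the same trace can be modeled as a list of spans and inspected in code. This is an illustrative sketch only: the `Span` type is hypothetical, and it assumes each duration in the example is the time spent inside that component, excluding its downstream calls.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    name: str
    parent: Optional[str]  # name of the calling component, None for the root
    self_time_ms: int      # time spent in this component only (assumption)


trace = [
    Span("Frontend", None, 10),
    Span("API Gateway", "Frontend", 20),
    Span("User Service", "API Gateway", 50),
    Span("Database", "User Service", 30),
]


def bottleneck(spans):
    """Return the span where the request spent the most time."""
    return max(spans, key=lambda s: s.self_time_ms)


def total_time_ms(spans):
    """End-to-end time for a sequential call chain of self times."""
    return sum(s.self_time_ms for s in spans)


print(bottleneck(trace).name, total_time_ms(trace))
```

Here the User Service accounts for the largest share of the request, which is the kind of observation a trace view makes visible at a glance.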

By correlating these components, you gain comprehensive insights into your system's behavior and performance.

These components play complementary roles. For instance, you can detect a problem through metrics, diagnose it with logs, and use tracing to understand the flow of requests that led to the issue. Metrics are ideal for recording event occurrences and reporting the status of resources (CPU and memory), logs are ideal for capturing detailed narratives of events, and traces are useful for visualizing the journey of a request as it moves through various services in a microservices setup, which requires a unique identifier for each trace to ensure traceability.
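
The following sketch shows one way this correlation can work when logs and spans share a trace identifier. The record shapes, the `trace_id` field name, and the `explain_errors` helper are assumptions made for illustration, not the schema of any particular tool.

```python
# Hypothetical log and span records that carry the same trace identifier.
logs = [
    {"level": "info", "trace_id": "a1", "message": "order created"},
    {"level": "error", "trace_id": "b2", "message": "payment timeout"},
]
spans = [
    {"trace_id": "a1", "service": "orders", "duration_ms": 12},
    {"trace_id": "b2", "service": "orders", "duration_ms": 15},
    {"trace_id": "b2", "service": "payments", "duration_ms": 3000},
]


def explain_errors(logs, spans):
    """For each error log, find the slowest service in the same trace."""
    by_trace = {}
    for span in spans:
        by_trace.setdefault(span["trace_id"], []).append(span)

    findings = []
    for log in logs:
        if log["level"] != "error":
            continue
        related = by_trace.get(log["trace_id"], [])
        slowest = max(related, key=lambda s: s["duration_ms"], default=None)
        findings.append((log["message"], slowest["service"] if slowest else None))
    return findings


print(explain_errors(logs, spans))
```

The join only works if every signal carries the identifier, which is why consistent instrumentation matters more than any single tool.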

Why Full-Stack Observability is Essential

Modern software architectures are increasingly complex. Microservices, serverless functions, and distributed systems have made traditional monitoring approaches insufficient.

Today, detailed, structured data about your applications is essential for keeping them operating at optimal performance. More companies are shifting to microservice architectures, which allow granular scaling of individual components, and to distributed systems, which spread workloads, reduce the impact of single points of failure, and scale elastically. Each microservice produces its own set of logs, which increases data volume. Running multiple containers on top of every virtual machine (VM) adds to that volume as well: containers carry multiple labels and change frequently, and each change adds new metrics to the data. This growing volume of data makes the system complex and filled with “noise”.

A full-stack observability platform addresses this by providing a comprehensive view into all your systems and helping you get the most value out of the large amount of data collected and stored.

Full-stack observability addresses these challenges by:

- Improving system reliability: You can quickly identify and resolve issues before they impact users.

- Enhancing performance: Detailed insights allow you to optimize your system for better performance.

- Reducing Mean Time to Resolution (MTTR): With comprehensive data, you can pinpoint and fix problems faster.

- Supporting DevOps practices: Observability fosters collaboration between development and operations teams.

For example, imagine an e-commerce platform experiencing slow response times. With full-stack observability, you can:

- Check metrics to identify high CPU usage on specific services: Using tools like SigNoz, you can gather and visualize metrics from various sources and configure alerts for specific thresholds. When an alert is triggered, you can investigate the metrics to identify which services are experiencing high CPU usage by examining historical trends, per-service CPU usage graphs, or per-instance and per-container CPU usage.

- Analyze traces to find slow database queries: As discussed earlier, traces provide a detailed view of the execution path of requests through the system. They help identify bottlenecks by showing the time spent in each component, such as API calls, database queries, and external service interactions.

- Review logs to spot any error messages or unusual patterns: First aggregate logs from various sources and make sure they're indexed for efficient filtering and searching. Then review them on a regular basis, either by setting up alerts for critical-level errors or by using ML algorithms to detect anomalies in log patterns.

Full Stack Observability enables you to quickly diagnose and resolve the issue, minimizing downtime as well as improving user experience.

Imagine you're operating a web application, and you encounter an error that causes the site to go down. The time between the issue occurring and its resolution is the time to resolution for that incident. Averaged over all incidents, it gives the Mean Time to Resolution (MTTR).
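
MTTR is typically reported as an average over a set of incidents. As a small worked example, the sketch below computes it from three made-up incidents. The timestamps and the `mean_time_to_resolution` helper are purely illustrative.

```python
from datetime import datetime, timedelta

# Hypothetical incidents as (detected, resolved) pairs.
incidents = [
    (datetime(2024, 1, 3, 10, 0), datetime(2024, 1, 3, 10, 45)),   # 45 minutes
    (datetime(2024, 1, 9, 22, 15), datetime(2024, 1, 9, 23, 30)),  # 75 minutes
    (datetime(2024, 1, 20, 6, 0), datetime(2024, 1, 20, 6, 30)),   # 30 minutes
]


def mean_time_to_resolution(incidents):
    """Average the resolution time across incidents (assumes a non-empty list)."""
    total = sum((resolved - detected for detected, resolved in incidents), timedelta())
    return total / len(incidents)


print(mean_time_to_resolution(incidents))
```

With these three incidents the total downtime is 150 minutes, so the MTTR is 50 minutes.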

Every organization aims to minimize MTTR, as it reflects the overall quality and stability of your application. With today's complex, distributed applications, troubleshooting such an error while keeping MTTR low can be quite a challenge, especially if the applications run in multiple hosting environments and are distributed around the world, with each request routed to a different instance. Tracking down specific requests without knowing what is happening inside the architecture or where the issue lies can prove nearly impossible without FSO tools.

How to Implement Full-Stack Observability

Implementing full-stack observability requires a strategic approach. Here's a step-by-step guide to get you started:

- Assess your current monitoring capabilities:

- Identify gaps in your existing monitoring setup: Depending on your organization's needs, one monitoring system may address all of your use cases, or you may want to use a combination of systems such as:

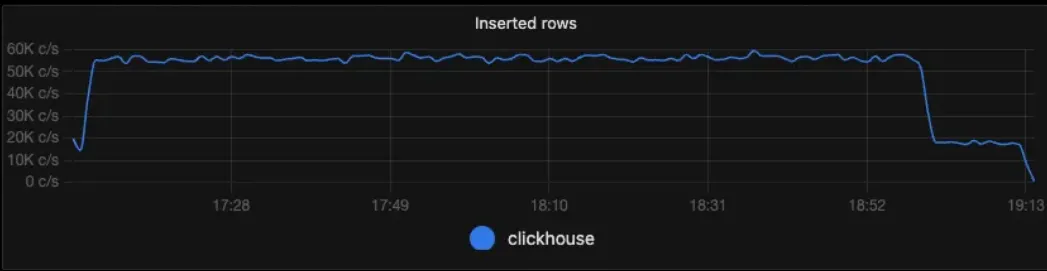

Speed: For an organization, the speed and freshness of data, as well as the speed of data retrieval, are crucial factors. Freshness impacts your Mean Time to Resolution (MTTR), as outdated data might lead to incorrect decisions. If there is a long delay between an action (the cause) and seeing its impact in your monitoring (the effect), you might mistakenly think a change had no effect or draw false correlations between cause and effect. When dealing with large amounts of data, faster retrieval keeps dashboards and queries responsive.

Figure: SigNoz insertion speed (count/sec)

For example, speeding up slower graphs is easier if the monitoring system can create and store new time series based on incoming data, allowing it to precompute answers to common queries.

Alerts: Classifying alerts according to their severity allows for proportional responses and helps avoid unnecessary noise that can distract on-call engineers. For example, if all nodes are experiencing the same high error rate, you can send a single alert for the global error rate instead of individual alerts for each node.
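
The grouping rule described above can be sketched as follows. The threshold, severity labels, and the `build_alerts` function are hypothetical. Real alerting systems usually express this kind of grouping as configuration rather than code.

```python
def build_alerts(error_rates, threshold=0.05):
    """Turn per-node error rates into alerts.

    If every node breaches the threshold, emit a single global alert;
    otherwise emit one alert per breaching node.
    """
    breaching = {node: rate for node, rate in error_rates.items() if rate > threshold}
    if error_rates and len(breaching) == len(error_rates):
        return [{"scope": "global", "severity": "critical", "nodes": sorted(breaching)}]
    return [
        {"scope": node, "severity": "warning", "nodes": [node]}
        for node in sorted(breaching)
    ]


# All nodes are failing: one alert instead of three.
print(build_alerts({"node-a": 0.20, "node-b": 0.18, "node-c": 0.22}))
# Only one node is failing: a targeted, lower-severity alert.
print(build_alerts({"node-a": 0.01, "node-b": 0.18, "node-c": 0.02}))
```

Collapsing correlated alerts this way keeps the on-call engineer focused on one problem rather than a page per node.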

Interfaces: A robust monitoring system should display time-series data in graphs and structure data in tables or various chart styles. Different audiences, such as management and SREs, require different views of the same data. You may need to graph metrics across different aggregations (like machine type, server version, or request type) in real time. Your team should be comfortable performing ad hoc drilldowns to identify correlations and patterns in the data.

- Determine which components of your stack lack visibility: By instrumenting services with OpenTelemetry and visualizing the resulting trace data, you can map dependencies and highlight areas with missing traces or incomplete monitoring. Application Performance Monitoring (APM) tools provide a holistic view of your stack's performance and help identify areas lacking visibility. Additionally, collaborating with SREs, developers, and operations teams helps gather insights on known visibility issues.


- Select appropriate observability tools:

- Consider open-source options like SigNoz for metrics, which provide quantifiable data points commonly used to monitor cloud infrastructure and signal when and where problems occur, and Jaeger for tracing, which visualizes the chain of events in microservice interactions and helps isolate issues when something goes wrong. Jaeger, also known as Jaeger Tracing, follows the path of a request through a series of microservice interactions.

- Evaluate commercial platforms that offer integrated solutions, such as O11y, SigNoz, Datadog, etc. Useful criteria include whether the platform helps someone troubleshoot quickly without detailed knowledge of the codebase, and whether it helps you identify an error before the customer does.

- Instrument your applications and infrastructure:

- Use logging libraries to generate structured logs, which make log records easier to maintain and parse when diagnosing issues. You can structure your logs using handlers, formatters, or by using the structlog library.

- Implement metrics collection in your code and infrastructure, since metrics help you understand trends, identify anomalies, and make informed decisions to optimize performance.

- Add tracing to track requests across your distributed system. This improves visibility and makes it easier to monitor and troubleshoot complex, distributed setups and microservice architectures, where requests traverse multiple loosely coupled services.

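
As one possible starting point for structured logs, the sketch below uses a custom formatter with Python's standard `logging` module to emit JSON. The `JsonFormatter` class, the `make_logger` helper, and the `user` field are hypothetical names chosen for the example. The structlog library mentioned above is an alternative route to the same result.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record):
        payload = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Fields passed through `extra=` become attributes on the record.
        if hasattr(record, "user"):
            payload["user"] = record.user
        return json.dumps(payload)


def make_logger(name, stream):
    """Create a logger that writes JSON lines to the given stream."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    return logger


buffer = io.StringIO()  # stands in for stdout or a log shipper
log = make_logger("auth", buffer)
log.info("login succeeded", extra={"user": "john_doe"})
print(buffer.getvalue().strip())
```

Because every entry is a JSON object with the same keys, a log backend can index fields such as `level` and `user` without custom parsing rules.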

- Create a culture of observability:

- Train your team on the importance of observability. The journey to full-stack observability begins with building internal support for IT teams. Communicating the value of full-stack observability and the benefits for cybersecurity, compliance, and cloud cost optimization to business and technical stakeholders is encouraged. Use informative content and informal meetings to help them understand the benefits and secure budget support for these initiatives.

- Encourage developers to add meaningful logs and metrics. A data-centric approach that relies on contextual information can help organizations to "connect the dots" and make informed decisions. Poor data quality leads to incorrect conclusions and decision-making, affecting business outcomes and network performance. Therefore, it is essential to prioritize data quality and maintain it by using proper tools and processes to validate and verify data accuracy, consistency, and completeness.

- Integrate observability throughout the development lifecycle to ensure end-to-end visibility by incorporating instrumentation in the design, planning, testing, deployment, and maintenance phases. By embedding observability into the development process, organizations can identify and address issues before they escalate. It also enhances system performance by pinpointing bottlenecks and optimization opportunities, improves security by detecting anomalous behavior and vulnerabilities, and enables teams to make informed decisions based on real-time data and analytics, leading to more efficient and effective development practices.

Overcoming Common Challenges

As you implement full-stack observability, you may encounter some challenges:

Data silos: Logs, metrics, and traces frequently originate from different sources and systems, resulting in data silos. Aggregating and correlating this disparate data to achieve a unified view can be challenging. This fragmentation can lead to reduced visibility and collaboration across organizations, delay incident response times, and diminish the overall effectiveness of observability practices.

To address this, opt for cross-functional collaboration between teams responsible for network infrastructure, application development, and operations to enhance understanding of network performance and expedite problem resolution. Additionally, integrate observability tools to provide a cohesive view of your system.

Data volume: As discussed earlier, in distributed cloud-based systems each microservice might produce its own set of logs, which can lead to a higher volume of data and delayed responses. You can address this by implementing sampling, filtering, aggregation, and retention policies, as well as advanced querying, to manage large volumes of data without losing important information.
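
Sampling is one of the simpler ways to reduce volume. The sketch below shows a deterministic, hash-based approach that keeps a fixed fraction of traces while always keeping those that contain errors. The `keep_trace` function and the always-keep-errors rule are assumptions for illustration, and production samplers often use more elaborate policies.

```python
import hashlib


def keep_trace(trace_id, sample_rate, is_error=False):
    """Decide whether to keep a trace.

    The decision depends only on the trace ID, so every service that sees
    the same ID makes the same choice. Traces with errors are always kept.
    """
    if is_error:
        return True
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < sample_rate


ids = [f"trace-{i}" for i in range(10_000)]
kept = sum(keep_trace(trace_id, sample_rate=0.1) for trace_id in ids)
print(f"kept {kept} of {len(ids)} traces")
```

Hashing the identifier rather than drawing a random number per service avoids keeping only fragments of a trace.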

Effective visualization: Design dashboards to deliver clear, actionable insights at a glance. Effective data visualization and interpretation require integrating anomaly detection. Start by identifying anomalies through log observability, and once your logs are aggregated, use machine learning tools like Flow Anomaly or Error Volume Anomaly to detect deviations from the norm. To manage micro-releases on customer-facing platforms, incorporate Benchmark Reports and Version Tags to trace each system change, assess its impact, and visualize the outcomes.

Privacy and security: Ensure that your observability practices comply with data protection regulations and security best practices. Implement strong data governance practices, including data anonymization, compliance audits, secure data storage, and transport mechanisms, to reduce the risk of sensitive data being exposed to unauthorized parties during observability processes. Establish clear data usage policies and access rights to ensure adherence to international standards and compliance regulations, such as GDPR and HIPAA.

Leveraging OpenTelemetry for Full-Stack Observability

OpenTelemetry is an open-source project that provides a standardized approach to observability. It aims to address the fragmentation and complexity of how telemetry data is collected and processed in distributed systems. It seeks to replace the myriad proprietary agents and formats with a unified, vendor-neutral, open-source standard for instrumenting applications, collecting signals (traces, metrics, and logs), and exporting them to various analysis backends. This enables seamless integration with multiple monitoring and observability platforms.

Note: OpenTelemetry focuses solely on collecting and delivering telemetry data, leaving the generation of actionable insights to dedicated analysis tools and platforms.

Here the term ‘telemetry data’ means the information gathered from various deployments of software applications, systems, and devices for monitoring and analysis purposes.

The most common types of telemetry data include:

- Metrics: numerical measurements that quantify system health or performance, such as CPU usage, memory consumption, request latency, and error rates.

- Traces: records of the path taken by a request as it moves through a distributed system, highlighting dependencies and bottlenecks.

- Logs: records of actions, errors, and other relevant information that help with understanding system behavior and troubleshooting issues.

- Events: structured records that contain contextual information about what it took to complete a unit of work in an application (commonly a single HTTP transaction).

- Profiles: insights into resource usage (CPU, memory, etc.) within the context of code execution.

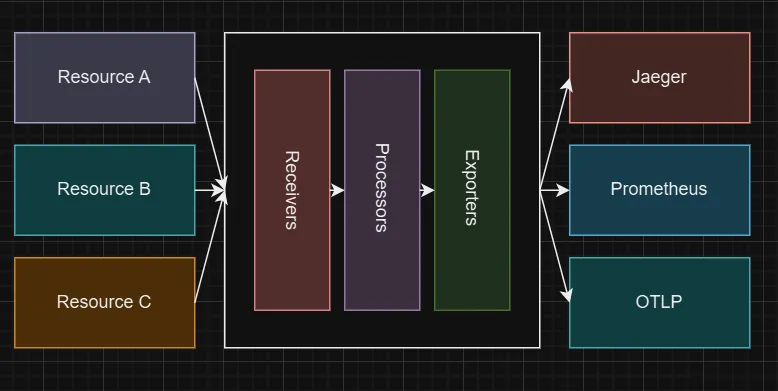

The framework is centered around several key components: the OpenTelemetry API, SDK, and Collector. These elements work together to generate, collect, and export telemetry data to various backends.

It offers:

A unified SDK, API, and data specification for instrumenting your code. The API outlines the data types and programming interfaces for creating and manipulating telemetry data across various languages to ensure consistency in data generation and handling. The SDK implements the OpenTelemetry API in various languages, handling tasks like sampling, context propagation, processing, and exporting telemetry data. It also supports automatic instrumentation via integrations and agents, which minimizes the manual coding needed to capture metrics and traces. The data specification includes a vendor-agnostic protocol (OTLP) for transmitting telemetry data within the OpenTelemetry ecosystem. It defines data formats, semantic conventions, and communication mechanisms for telemetry signals, ensuring consistency and simplifying data analysis and correlation.

A Collector for processing and exporting telemetry data, serving as an intermediary between your instrumented applications and the backend systems where you analyze and visualize your data. It operates as a standalone binary process (which can function as a proxy or sidecar) that receives telemetry data in various formats, processes and filters it, and then sends it to one or more configured observability backends.

The OpenTelemetry Collector's receiver is used to get data into the OTel Collector. If you're sending out instrumentation data from your application code, you would use the native OpenTelemetry Protocol (OTLP) format, which means that you'll be using the OTLP receiver.

If you're receiving telemetry data from your infrastructure in a different format (e.g. StatsD, Jaeger, Prometheus), you'll need to use a receiver that ingests that format and converts it to OTLP. The OTel Collector sends data to multiple back-ends by way of exporters, which translate OTLP to a vendor-specific back-end format. The Collector is built from the following components:

- Receivers: ingest telemetry data in different formats from various sources.

- Processors: filter, aggregate, enrich, or transform the ingested telemetry data.

- Exporters: deliver the processed data to one or more observability backends in the desired format.

- Connectors: bridge different pipelines within the Collector, facilitating seamless data flow and transformation between them. They function as both exporters for one pipeline and receivers for another.

- Extensions: provide additional functionality such as health checks, profiling, and configuration management. They do not require direct access to telemetry data.
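
To build intuition for how receivers, processors, and exporters fit together, here is a toy model of a single pipeline in plain Python. It is not the Collector's implementation or its configuration format. Every name in it is hypothetical, and the record shape is invented for the example. The only real-world detail is the StatsD line layout `<name>:<value>|<type>`.

```python
def statsd_receiver(line):
    """Receiver: convert a StatsD-style line such as 'api.requests:1|c'
    into a common internal record."""
    name, rest = line.split(":", 1)
    value, kind = rest.split("|", 1)
    return {"name": name, "value": float(value), "type": kind, "attributes": {}}


def drop_debug_metrics(record):
    """Processor: filter out records that should not be exported."""
    return None if record["name"].startswith("debug.") else record


def add_environment(record):
    """Processor: enrich each record with an extra attribute."""
    record["attributes"]["environment"] = "staging"
    return record


class MemoryExporter:
    """Exporter: stands in for a backend by collecting records in a list."""

    def __init__(self):
        self.sent = []

    def export(self, record):
        self.sent.append(record)


def run_pipeline(lines, receiver, processors, exporters):
    """Push each input through receiver -> processors -> every exporter."""
    for line in lines:
        record = receiver(line)
        for process in processors:
            record = process(record)
            if record is None:  # a processor dropped the record
                break
        if record is None:
            continue
        for exporter in exporters:
            exporter.export(record)


backend_a, backend_b = MemoryExporter(), MemoryExporter()
run_pipeline(
    ["api.requests:1|c", "debug.noise:5|g"],
    statsd_receiver,
    [drop_debug_metrics, add_environment],
    [backend_a, backend_b],
)
print(backend_a.sent)
```

The same record reaches both exporters, which mirrors how one Collector pipeline can feed several backends at once.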

Vendor-agnostic instrumentation allows you to switch between different observability backends. In other words, the code you write using the OpenTelemetry SDK and API is compatible with any backend supported by OpenTelemetry, such as Jaeger, Prometheus, or Datadog.

The OpenTelemetry Protocol (OTLP) is a vendor-neutral, open-source specification for encoding, transporting, and delivering telemetry data between different components within the OpenTelemetry ecosystem.

It facilitates seamless communication across various parts of your observability stack, regardless of the specific tools or platforms you use. This flexibility helps avoid vendor lock-in and allows you to select the tools that best meet your needs.

Note: OpenTelemetry supports ingesting data in other protocols through appropriate receivers, and it can convert data from one format to another to simplify integration with different backends.

To implement OpenTelemetry:

- Install the OpenTelemetry SDK in your application

- Instrument your code using the OpenTelemetry API

- Configure the OpenTelemetry Collector to receive and export your telemetry data

- Choose an observability backend to store and visualize your data

Refer to our previous articles for detailed step-by-step instructions on how to implement OpenTelemetry in your system:

JS - https://signoz.io/opentelemetry/nodejs-tutorial-overview/

Golang - https://signoz.io/opentelemetry/go/

Java - https://signoz.io/opentelemetry/java-agent/

By standardizing data formats and transmission, OpenTelemetry facilitates seamless integration with various monitoring and observability platforms. This ensures that organizations are not tied to a specific vendor or proprietary solution and can choose the best tools for their needs. OpenTelemetry collects telemetry data from diverse sources and exports it to multiple platforms with minimal configuration changes, so you can switch to the backend you want and get insights using the tools and formats you prefer, thereby avoiding vendor lock-in. Its flexibility in aggregating, enriching, filtering, and processing telemetry data before export also optimizes data flow and minimizes the resource impact on instrumented applications.

A Better Way to Think About Observability

We talked about logs, metrics, and traces as the three pillars of observability. However, thinking of them as isolated pillars can create a false dichotomy. SigNoz believes that they are interconnected: log data can be aggregated to build meaningful metrics, and trace data can be used to generate granular, attribute-based metrics or to derive application metrics from spans. At SigNoz, we envision observability as a mesh or network rather than a set of pillars (metrics, traces, and logs). This interconnected approach allows for more powerful correlations and faster problem-solving.

Correlating different signals helps in troubleshooting complex systems and in reducing MTTR, which is what observability should be: a unified system that integrates various signals to help solve customer problems more quickly. A single data store, like a columnar database, can efficiently handle aggregation queries and perform detailed analysis. Imagine you encounter an error in the system. With SigNoz, you can detect the problem through metrics, diagnose it with logs, and use tracing to understand the flow of requests that led to the issue. Ultimately, observability tools should evolve from basic dashboards to smart systems that answer specific questions about your infrastructure and applications, offering a more integrated and powerful way to identify and resolve issues swiftly.

Best Practices for Maximizing Full-Stack Observability

To get the most out of your full-stack observability implementation:

- Implement effective tagging: Use consistent tags across logs, metrics, and traces to correlate data easily. This also simplifies filtering and searching through large datasets for the purpose of finding relevant information, especially for complex and distributed systems, which oftentimes generate an increasing amount of data.

- Design meaningful dashboards: Create dashboards that provide actionable insights at a glance. Determine which metrics are most important for your system’s performance and health. Common metrics include CPU usage, memory usage, error rates, request latency, and throughput. Customize a mix of visualizations (graphs, charts, tables) to highlight important trends and anomalies.

- Integrate observability into your CI/CD pipeline: Automatically update your observability configuration as your system evolves. You can use infrastructure-as-code (IaC) tools to manage and update observability configurations automatically. You can also store your observability configurations in a version control system like Git to track changes and enable rollbacks if needed, and implement automated tests to validate that the configurations are correctly applied. Together, these practices allow your observability setup to adapt quickly to changes in your application.

- Continuously refine your approach: Regularly review and update your observability strategy to ensure it meets your evolving needs. Observability is not a set-and-forget process. It requires ongoing refinement to adapt to the evolving needs of your system and business requirements. Establish feedback loops with stakeholders (developers, ops teams, business users) to gather insights and feedback on your observability setup. Continuously update and optimize your observability tools, configurations, and practices based on the feedback and evolving requirements. Invest in training and educating your team on best practices and new tools in the observability space.

By following these best practices, you'll be well-equipped to harness the power of full-stack observability and maintain high-performing, reliable systems.

Key Takeaways

- Full-stack observability provides a comprehensive view of your entire technology stack

- Logs, metrics, and traces are the three pillars of observability

- Implementing full-stack observability improves system reliability and performance

- OpenTelemetry offers a standardized approach to observability implementation

- Continuous refinement is key to long-term observability success

FAQs

What's the difference between monitoring and full-stack observability?

Monitoring typically focuses on predefined metrics and thresholds, while full-stack observability provides a more comprehensive view of your system's behavior, allowing you to ask and answer complex questions about your system's performance and health.

How does full-stack observability improve incident response times?

Full-stack observability provides detailed insights into your system's behavior, allowing you to quickly identify the root cause of issues and implement solutions, thus reducing Mean Time to Resolution (MTTR).

Can full-stack observability help with cost optimization in cloud environments?

Yes, full-stack observability can help identify underutilized resources, performance bottlenecks, and inefficient processes, enabling you to optimize your cloud resource usage and reduce costs.

What are the key considerations when choosing observability tools?

Consider factors such as ease of integration, scalability, data retention capabilities, visualization features, and support for your technology stack when selecting observability tools.