How to Fix Duplicate Log Messages in Python Logging

Python's logging module is a powerful tool for tracking events in your applications. However, developers often encounter a frustrating issue: log messages appear twice. This duplication can clutter your logs, making debugging more challenging and potentially impacting application performance. Let's dive into the causes of this problem and explore effective solutions to ensure your logs are clean, accurate, and helpful.

Understanding the Duplicate Log Message Problem

Duplicate log messages occur when the same log entry is recorded more than once. This redundancy is not just annoying—it can lead to confusion during debugging and unnecessarily bloat your log files. The primary factors behind this issue are:

- Multiple handlers attached to the same logger: A frequent cause of duplicate log messages is having several log handlers configured. In Python's logging system, handlers determine where the log messages go, such as to a file, console, or network. When multiple handlers are set up to handle the same messages, each one processes the message, leading to duplicates in your output.

- Multiple Loggers: If multiple loggers are used for the same events, you might end up with duplicates. This usually happens when different parts of the code use different loggers or if the loggers aren't set up to share the same handler. Each logger might generate its own log entry, leading to redundant messages.

- Improper configuration of logger hierarchies: If you attach a handler to a logger and also to one or more of its ancestor loggers, you might see the same log message appear multiple times. This happens because loggers are organized in a hierarchy, and if handlers aren’t managed properly across parent and child loggers, you could end up with duplicates. To avoid this, make sure handlers are attached only to the specific loggers you want and not to their ancestor loggers.

- Unintended logger inheritance in multi-module applications: In larger applications with multiple modules, you might run into issues where log entries show up more than once. This happens because if a logger in one module inherits handlers from a parent module, the same log message can get processed multiple times if both the parent and child loggers have their own handlers. To prevent this from happening, it's a good idea to set up and manage loggers for each module separately.

We'll revisit these points for a more detailed discussion later in the article.
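To make the first cause concrete, here is a minimal sketch that reproduces a duplicate on purpose. The logger names demo_dup and demo_dup.worker are hypothetical, and the output is captured in an in-memory buffer (rather than the console) only so that the copies can be counted.

```python
import io
import logging

# Capture output in memory so the duplicate is easy to count.
stream = io.StringIO()

# Hypothetical logger names, chosen only for this demo.
parent = logging.getLogger("demo_dup")
child = logging.getLogger("demo_dup.worker")
parent.setLevel(logging.INFO)
parent.propagate = False  # keep the demo isolated from the real root logger

# The same destination is attached at two levels of the hierarchy.
parent.addHandler(logging.StreamHandler(stream))
child.addHandler(logging.StreamHandler(stream))

child.info("job started")

lines = stream.getvalue().splitlines()
print(lines)  # ['job started', 'job started']
```

The record is emitted once by the child's handler and once more by the parent's handler after it propagates upward, which is the pattern behind most duplicate-log reports.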

Root Logger vs. Child Logger Behavior

Understanding the hierarchy of loggers in Python is crucial for preventing duplicates. The logging module uses a tree-like structure:

The root logger sits at the top of the hierarchy. It is the default logger that Python's logging module creates automatically, and it receives every log record that propagates up from other loggers unless propagation is switched off. Its default level is WARNING, and it can be accessed by calling logging.getLogger() without any arguments. It can be customized with handlers and formatters.

Child loggers are created explicitly using dot notation, which forms a hierarchy. For example, the name A.B.C implies that A is a child of the root logger, A.B is a child of A, and A.B.C is a child of A.B.

    Root Logger (implicitly '')
    |
    ├── A (Logger 'A')
    |   |
    |   ├── A.B (Logger 'A.B')
    |   |   |
    |   |   └── A.B.C (Logger 'A.B.C')

Child loggers inherit configuration from their parent loggers: a child with no level of its own uses the level of its nearest configured ancestor, and its records are passed on to its ancestors' handlers. Child loggers can also have their own handlers, formatters, and levels. This allows for fine-grained control over logging output at different parts of an application.
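The parent/child relationships and level inheritance described above can be inspected directly. This is a small sketch using hypothetical logger names (tree_demo and its descendants); parent and getEffectiveLevel() are standard attributes of logging.Logger.

```python
import logging

# Hypothetical names for illustration only.
a = logging.getLogger("tree_demo")
b = logging.getLogger("tree_demo.b")
c = logging.getLogger("tree_demo.b.c")

a.setLevel(logging.ERROR)  # only 'tree_demo' gets an explicit level

print(c.parent.name)                    # tree_demo.b
print(b.parent.name)                    # tree_demo
print(a.parent is logging.getLogger())  # True: the root logger is the top-level parent
print(c.level == logging.NOTSET)        # True: no level of its own
print(logging.getLevelName(c.getEffectiveLevel()))  # ERROR, inherited from 'tree_demo'
```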

Quick Guide: Fixing Duplicate Logs in Python

Before diving into detailed solutions, here's a quick checklist to address common causes of duplicate logs:

Check for multiple handlers: Ensure you're not adding redundant handlers to your root logger.

The root logger sits at the top of the logger hierarchy. If an extra handler that you don't need is attached to it, messages can be duplicated, and it's a good idea to remove it.

First, let's list all handlers attached to the root logger:

    import logging

    # Setup root logger with multiple handlers
    root_logger = logging.getLogger()
    root_logger.setLevel(logging.DEBUG)

    # Adding multiple handlers for demonstration
    handler1 = logging.StreamHandler()
    handler2 = logging.StreamHandler()
    root_logger.addHandler(handler1)
    root_logger.addHandler(handler2)

    # Check the handlers
    for handler in root_logger.handlers:
        print(handler)

Output:

    <StreamHandler <stderr> (NOTSET)>
    <StreamHandler <stderr> (NOTSET)>

This indicates that two StreamHandler instances are attached to the root logger. We can then remove the redundant handler:

    if len(root_logger.handlers) > 1:
        # Remove the first handler, keeping one in place
        root_logger.removeHandler(root_logger.handlers[0])

Now, let's verify that it has been removed.

    for handler in root_logger.handlers:
        print(handler)

Output:

    <StreamHandler <stderr> (NOTSET)>

Change logger.propagate settings for loggers:

Centralized logging with logger.propagate = True: If you have several loggers in your application, each with its own handler, it can lead to duplicate log messages in your output. This can be solved by attaching a single handler to the root logger and letting log messages propagate up to it. This approach consolidates logging and prevents duplication.

Isolated logger handling with logger.propagate = False: If you have a custom logger for a specific module with its own handler to isolate that component in your application, leaving propagation enabled (propagate = True, the default) could lead to duplicate log entries. This happens because the custom logger's handler processes the log messages, and the same messages are then propagated to the root logger's handler, resulting in redundancy. To avoid this duplication, set propagate = False on the custom logger, so that its log messages are not forwarded to the root logger.

    import logging

    # Setup root logger (implicitly named '')
    root_logger = logging.getLogger()
    root_handler = logging.StreamHandler()
    root_handler.setLevel(logging.DEBUG)
    root_logger.addHandler(root_handler)
    root_logger.setLevel(logging.DEBUG)

    # Child Logger 1: Has its own handler
    child_logger1 = logging.getLogger('A')  # This logger is a child of the root logger
    child_handler1 = logging.StreamHandler()
    child_handler1.setLevel(logging.DEBUG)
    child_logger1.addHandler(child_handler1)
    child_logger1.setLevel(logging.DEBUG)
    child_logger1.propagate = False  # Prevents messages from being sent to parent loggers

    # Child Logger 2: No custom handler
    child_logger2 = logging.getLogger('A.B')  # This logger is a child of 'A'
    child_logger2.setLevel(logging.DEBUG)
    # No handler added to child_logger2
    child_logger2.propagate = True  # Messages will be sent to parent loggers

    # Child Logger 3: No custom handler
    child_logger3 = logging.getLogger('A.B.C')  # This logger is a child of 'A.B'
    child_logger3.setLevel(logging.DEBUG)
    # No handler added to child_logger3
    child_logger3.propagate = True  # Messages will be sent to parent loggers

    # Logging messages
    root_logger.debug('Debug message from root logger.')
    child_logger1.debug('Debug message from child_logger1.')  # Handled only by child_logger1's handler
    child_logger2.debug('Debug message from child_logger2.')  # Propagates up to 'A' and is handled there; 'A' does not propagate further
    child_logger3.debug('Debug message from child_logger3.')  # Propagates up to 'A.B', then 'A', where it is handled and stops

In the above code, child_logger1 has its own handler, so we set propagate = False on it. child_logger2 and child_logger3 need no handler of their own, so they keep propagate = True (the default) and their messages travel up the hierarchy until they reach a handler. In this setup they stop at logger A, whose handler prints them; they never reach the root logger because A does not propagate. Each message is therefore printed exactly once.

Output:

    Debug message from root logger.
    Debug message from child_logger1.
    Debug message from child_logger2.
    Debug message from child_logger3.
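When it is unclear how far a message travels, the propagation walk can be written out by hand. The helper below, handlers_reached, is a hypothetical name for a sketch that mirrors the walk the logging module performs (it ignores handler levels and filters), and the logger names are made up for the demo.

```python
import logging

def handlers_reached(logger):
    """Return (logger name, handler) pairs a record from `logger` would be offered to."""
    reached = []
    current = logger
    while current is not None:
        for handler in current.handlers:
            reached.append((current.name, handler))
        if not current.propagate:
            break  # propagation stops here, ancestors are not consulted
        current = current.parent
    return reached

# Hypothetical names for illustration.
top = logging.getLogger("walk_demo")
leaf = logging.getLogger("walk_demo.leaf")
top.addHandler(logging.NullHandler())
leaf.addHandler(logging.NullHandler())
top.propagate = False  # stop below the root so the demo output is predictable

both = [name for name, _ in handlers_reached(leaf)]
print(both)  # ['walk_demo.leaf', 'walk_demo']

leaf.propagate = False
only_leaf = [name for name, _ in handlers_reached(leaf)]
print(only_leaf)  # ['walk_demo.leaf']
```

If the list contains two handlers that write to the same destination, that logger will produce duplicates.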

Centralize logger configuration: Use a consistent configuration approach across your application. Take a look at how loggers are used in your codebase and ensure that the same logger is used consistently throughout the application. If you find that different loggers are being used for the same events, try consolidating them into a single logger. This will help prevent duplicate log entries and keep your logging clean and manageable. Let's take an example:

    import logging

    # Setup root logger (implicitly named '')
    root_logger = logging.getLogger()
    root_handler = logging.StreamHandler()
    root_handler.setLevel(logging.DEBUG)
    root_logger.addHandler(root_handler)
    root_logger.setLevel(logging.DEBUG)

    # Setup first logger
    logger1 = logging.getLogger('logger1')
    logger1.setLevel(logging.DEBUG)
    # No handler added to logger1, so it will propagate up to root_logger

    # Setup second logger
    logger2 = logging.getLogger('logger2')
    logger2.setLevel(logging.DEBUG)
    # No handler added to logger2, so it will propagate up to root_logger

    # Log a message with both loggers
    logger1.debug('This is a debug message.')
    logger2.debug('This is a debug message.')

Output:

    This is a debug message.
    This is a debug message.

As you can see, the same log entry appears twice in the output, because two different loggers recorded the same event and both messages propagated to the root logger's handler. To avoid this duplication, consolidate such loggers into one:

    # Create a single logger
    consolidated_logger = logging.getLogger('consolidated_logger')
    consolidated_logger.setLevel(logging.DEBUG)
    # Avoid a second copy through the root handler configured above
    consolidated_logger.propagate = False

    # Add a handler to the consolidated logger
    stream_handler = logging.StreamHandler()
    stream_handler.setLevel(logging.DEBUG)
    consolidated_logger.addHandler(stream_handler)

    # Log messages with the consolidated logger
    consolidated_logger.debug('This is a debug message.')

Output:

    This is a debug message.

Identifying Sources of Duplicate Logging

To effectively tackle duplicate logs, you need to identify their source. Here are some strategies:

Examine configuration files: Review your logging configuration files (e.g., logging.conf or log_config.yaml) for any inconsistencies or redundant handlers. In larger applications, especially those with multiple modules, it's important to be deliberate about how each module's logger is configured. This helps ensure that loggers don't unintentionally inherit or duplicate configurations from other modules, keeping your logging setup clean and effective.

Use debug statements: Temporarily add debug prints to trace the flow of log messages through your application. To make sure handlers aren't triggered multiple times for the same log entry, name each handler explicitly in your configuration so you can see which loggers reference it. Additionally, you might temporarily insert DEBUG-level logs to pinpoint exactly where log messages are being generated and processed. This can help you spot any redundant log processing paths and clean up your logging setup.

Analyze handler setup: Check each module for logger and handler configurations. Check for instances where handlers might be added multiple times. Make sure that each handler is added only once to each logger. Adding handlers to both parent and child loggers can lead to messages being processed more than once. To prevent this, remove handlers from parent loggers if you want to avoid duplication in child loggers.

    # In the parent logger
    logger_a = logging.getLogger('app')
    for handler in logger_a.handlers[:]:
        logger_a.removeHandler(handler)
    # Add handlers only to specific loggers

Inspect multi-module interactions: In complex applications, loggers from different modules may interact unexpectedly. Pay special attention to imported modules and their logging setups. Let's take an example:

    # module_a.py
    import logging

    # Module A configures a logger with a file handler
    logger = logging.getLogger('app')
    file_handler = logging.FileHandler('module_a.log')
    file_handler.setFormatter(logging.Formatter('%(name)s - %(levelname)s - %(message)s'))
    logger.addHandler(file_handler)
    # Set logger level to DEBUG
    logger.setLevel(logging.DEBUG)

    # module_b.py
    import logging

    # Module B reconfigures the same logger name with a console handler
    logger = logging.getLogger('app')  # Same logger name as module_a
    console_handler = logging.StreamHandler()
    console_handler.setFormatter(logging.Formatter('%(levelname)s: %(message)s'))
    logger.addHandler(console_handler)
    # Overwrites previous logging level to ERROR
    logger.setLevel(logging.ERROR)

    # main.py
    import logging
    from module_a import logger as logger_a
    from module_b import logger as logger_b

    # Ensuring basic config in main
    # This can interfere with module settings
    logging.basicConfig(level=logging.DEBUG)

    # Intended for file, will not appear because the level is now ERROR
    logger_a.debug('Debug message from module_a')
    # Intended for console
    logger_b.error('Error message from module_b')

Output:

    ERROR: Error message from module_b
    ERROR:app:Error message from module_b

Both modules call logging.getLogger('app'), so they configure the same logger object. module_b does not replace module_a's setup; it adds to it. The logger ends up with two handlers (the file handler and the console handler), and its level is whatever was set last, in this case ERROR. As a result, the debug message is dropped, while the error message is written to module_a.log, printed by module_b's console handler (ERROR: Error message from module_b), and printed a second time by the root logger's handler (ERROR:app:Error message from module_b).

Trace Imports and Initializations: Check that modules aren't initializing or configuring logging in ways that might cause unexpected interactions. The logging.basicConfig(level=logging.DEBUG) call in main.py configures the root logger. Here it attaches a console handler to the root logger, and because the app logger propagates, that handler prints the second copy of the error message. If basicConfig had been given different handlers or a different format, the duplicate would have shown up in a different place or shape.

Consistency in Logger Naming: Ensure logger names are used consistently across modules. Inconsistent naming can lead to confusion, accidental overlap, or misconfiguration. Both module_a and module_b use the same logger name, app. The output shows that using the same name across modules leads to overlapping configurations: the console_handler from module_b is stacked on top of the file_handler from module_a, and the level set in module_b silently replaces the one set in module_a.

Module-Specific Configurations: Verify that modules with unique logging needs have their configurations properly isolated. This helps prevent unintended interactions with other modules. The logger in module_b reconfigures the same logger name, app, that module_a configured. Because module_b sets the level to ERROR, module_a's debug messages are never recorded. Configurations meant for different modules interfere with each other because they're not isolated, causing logs to be handled in a manner that wasn't intended.
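A quick way to find overlapping setups like the ones discussed in this section is to list every logger that currently has handlers attached. The sketch below uses a hypothetical helper name, describe_loggers, and relies on logging.root.manager.loggerDict, the registry the logging module keeps of named loggers; the audit_demo logger exists only for the demonstration.

```python
import logging

def describe_loggers():
    """Return {logger name: (propagate, [handler class names])} for loggers with handlers."""
    report = {}
    candidates = [logging.getLogger()] + [
        obj for obj in logging.root.manager.loggerDict.values()
        if isinstance(obj, logging.Logger)  # skip placeholder entries
    ]
    for logger in candidates:
        if logger.handlers:
            names = [type(h).__name__ for h in logger.handlers]
            report[logger.name] = (logger.propagate, names)
    return report

# Hypothetical logger, created only to have something to report.
logging.getLogger("audit_demo").addHandler(logging.NullHandler())

report = describe_loggers()
print(report["audit_demo"])  # (True, ['NullHandler'])
```

A logger that appears in the report with propagate set to True, while one of its ancestors also appears with a handler for the same destination, is a likely source of duplicates.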

Step-by-Step Solutions for Common Scenarios

Let's address some specific situations where duplicate logs often occur:

Fixing duplicate logs in a single module

Use logging.getLogger(__name__) to create module-specific loggers. Loggers come with various attributes and methods, but you should never instantiate them directly. Always use the module-level function logging.getLogger(name) to get a logger. Multiple calls to getLogger() with the same name return a reference to the same Logger object, so any handler you add through one reference is also present on the other. To avoid confusion, make sure you don't configure the same logger name in more than one place. It's best to initialize your logger with the module's own name, like this:

    import logging

    logger = logging.getLogger(__name__)
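The point about getLogger() returning the same object can be checked in a few lines. The name shared_demo is hypothetical, and NullHandler is used so that nothing is actually printed.

```python
import logging

first = logging.getLogger("shared_demo")   # hypothetical name
second = logging.getLogger("shared_demo")
print(first is second)  # True

# Because both names refer to one object, setup code that runs twice stacks handlers.
first.addHandler(logging.NullHandler())
second.addHandler(logging.NullHandler())
print(len(first.handlers))  # 2
```

With real output handlers instead of NullHandler, every message logged through this logger would now be written twice.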

Resolving issues in multi-module applications

- Create a Central Logging Configuration: Set up a central logging configuration function or file to maintain a single source of truth for your logging setup. This ensures consistency across various modules and simplifies updates to logging settings.

- Import and Use the Central Configuration: Import this configuration into all your modules. This way, you avoid discrepancies and ensure that every part of your application follows the same logging setup.

- Avoid Adding Handlers in Individual Modules: Unless absolutely necessary, don’t add handlers in individual modules. This helps prevent duplicate log entries. Instead, rely on the central configuration to handle the setup of handlers.

Here’s an example:

    # In logging_config.py
    import logging

    def configure_logging():
        logging.basicConfig(level=logging.INFO)
        logger = logging.getLogger('app')
        logger.propagate = False
        # Attach the handler only the first time this function runs
        if not logger.handlers:
            handler = logging.StreamHandler()
            logger.addHandler(handler)

    # In other modules
    import logging
    from logging_config import configure_logging

    configure_logging()
    logger = logging.getLogger('app.module_name')

In the example, we import configure_logging and get our loggers from the central configuration. By calling configure_logging() in each module, we apply the central logging setup without adding extra handlers in the individual modules. The check on logger.handlers matters here: without it, every call to configure_logging() would attach another StreamHandler to the app logger and reintroduce the duplicates. Alternatively, call configure_logging() once at the application's entry point. This approach helps avoid duplicate log entries and ensures a consistent logging configuration across the application.

Addressing duplicates in web frameworks (e.g., Django)

Web frameworks often have their own logging configurations. To prevent duplicates:

- Review the framework's default logging setup. Frameworks like Django typically have predefined handlers and loggers which might conflict with your custom configuration if not properly managed.

- Customize the configuration to suit your needs without adding redundant handlers.

- Use the framework's recommended logging practices.

- Address Duplicate Logs Due to Django's Auto-Reloading Feature. If you're using Django's development server (runserver) with DEBUG set to True, you might notice that log messages are output twice. This happens because the auto-reloader process initializes the application a second time. To avoid this issue, you can run the server with the --noreload option:

python manage.py runserver --noreload

This prevents the auto-reloader from running and avoids the duplication of log messages. The underlying reason is that, with the auto-reloader enabled, the runserver command's run method is called in two processes: the one you started and a child process that the auto-reloader spawns to actually run the server.

If you need to address this at a more granular level, you can modify the server command to prevent initialization code from running twice. Here’s an example of how you can detect if the process is running in the auto-reloader and skip certain initialization code:

    import os

    from django.contrib.staticfiles.management.commands.runserver import Command as RunserverCommand

    class Command(RunserverCommand):
        def run(self, *args, **options):
            if os.environ.get('RUN_MAIN') != 'true':
                self.stdout.write('About to start running on ' + self.addr)
            super(Command, self).run(*args, **options)

In this advanced solution:

- Environment Variable Check: The code checks for the RUN_MAIN environment variable, which Django sets to 'true' in the process spawned by the auto-reloader.

- Conditional Execution: It ensures that specific initialization code runs only in the original process and not again in the process spawned by the auto-reloader.

For Django, you might configure logging in settings.py:

    LOGGING = {
        'version': 1,
        'disable_existing_loggers': False,
        'handlers': {
            'console': {
                'class': 'logging.StreamHandler',
            },
        },
        'root': {
            'handlers': ['console'],
            'level': 'INFO',
        },
    }
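The same dictionary schema can be tried outside Django with logging.config.dictConfig, which makes it easy to check a configuration for duplicates before putting it in settings.py. The sketch below is an illustration rather than a recommended Django setup: the logger names shop and shop.views are hypothetical, and the handler is redirected to an in-memory buffer only so the output can be counted.

```python
import io
import logging
import logging.config

LOGGING = {
    "version": 1,
    "disable_existing_loggers": False,
    "handlers": {
        "console": {"class": "logging.StreamHandler"},
    },
    "loggers": {
        # 'shop' is a hypothetical app logger with its own handler.
        # propagate=False keeps its records from also reaching root handlers.
        "shop": {"handlers": ["console"], "level": "INFO", "propagate": False},
    },
}

logging.config.dictConfig(LOGGING)

# Redirect the handler to a buffer so the number of copies can be checked.
buffer = io.StringIO()
console = logging.getLogger("shop").handlers[0]
console.setStream(buffer)

logging.getLogger("shop.views").info("order created")
print(buffer.getvalue().splitlines())  # ['order created']
```

The record from shop.views travels up to shop, is written once by its handler, and stops there.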

Advanced Techniques for Complex Applications

For more sophisticated logging needs, consider these advanced techniques:

Using filter objects to control log output

Filters provide fine-grained control over which log records are output. This lets us meet specific logging needs, such as filtering out duplicate messages or focusing on particular types of log entries.

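    import logging

    class DuplicateFilter(logging.Filter):
        def __init__(self):
            super().__init__()
            self.msgs = set()  # messages that have already been logged

        def filter(self, record):
            rv = record.msg not in self.msgs
            self.msgs.add(record.msg)
            return rv

    # Show INFO records with a timestamp
    logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s')

    logger = logging.getLogger()
    logger.addFilter(DuplicateFilter())

    # Example usage
    logger.info("This message will be logged only once.")
    logger.info("This message will be logged only once.")

Output:

    2024-09-03 12:47:22,197 - INFO - This message will be logged only once.

This filter ensures that each unique message is logged only once. The DuplicateFilter class achieves this by using a set to track messages that have already been logged. The filter method checks if the message is already in the set; if it isn't, the message is logged and then added to the set. This approach helps prevent redundant logs and keeps the logging output clear and concise, especially in complex applications. Two caveats apply: a filter attached to a logger only sees records logged directly through that logger, so attach it to a handler if records from child loggers should be deduplicated too, and the set keeps growing for as long as the process runs.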
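For long-running services, a variation on this idea is to suppress a repeated message only for a limited time and to forget old entries. The following is a sketch under stated assumptions, not part of the logging module: WindowedDuplicateFilter and its window parameter are hypothetical names, and the filter is attached to a handler so that it applies to every logger routed through that handler.

```python
import io
import logging

class WindowedDuplicateFilter(logging.Filter):
    """Drop a record if the same (level, message) was emitted within `window` seconds."""

    def __init__(self, window=5.0):
        super().__init__()
        self.window = window
        self._last_seen = {}  # (levelno, message) -> creation time of the last emitted record

    def filter(self, record):
        # Forget entries older than the window so the table cannot grow forever.
        cutoff = record.created - self.window
        self._last_seen = {k: t for k, t in self._last_seen.items() if t > cutoff}

        key = (record.levelno, record.getMessage())
        if key in self._last_seen:
            return False  # same message seen recently: suppress it
        self._last_seen[key] = record.created
        return True

stream = io.StringIO()  # in-memory destination so the result can be inspected
handler = logging.StreamHandler(stream)
handler.addFilter(WindowedDuplicateFilter(window=5.0))

log = logging.getLogger("filter_demo")  # hypothetical logger name
log.setLevel(logging.INFO)
log.propagate = False
log.addHandler(handler)

log.info("cache miss")
log.info("cache miss")                   # suppressed: same text within the window
log.warning("cache miss")                # kept: different level
log.info("cache miss for %s", "user-1")  # kept: different rendered message

emitted = stream.getvalue().splitlines()
print(emitted)  # ['cache miss', 'cache miss', 'cache miss for user-1']
```

Comparing on record.getMessage() rather than record.msg means that messages built from the same template but different arguments are treated as distinct.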

Implementing custom handlers for specific logging needs

Custom handlers allow you to route logs to specific destinations or process them uniquely:

    import logging

    # Define a custom handler to prevent duplicates
    class DeduplicationHandler(logging.Handler):
        def __init__(self):
            super().__init__()
            self.logged_messages = set()  # (level, message) pairs already logged

        def emit(self, record):
            # Compare on level and message text rather than the formatted line,
            # because the formatted line contains a timestamp that changes
            key = (record.levelno, record.getMessage())
            if key not in self.logged_messages:
                self.logged_messages.add(key)  # Remember the message
                # Replace this with logic to route the log, e.g., writing to a file or database
                print(self.format(record))

    # Create a logger
    logger = logging.getLogger(__name__)
    logger.setLevel(logging.DEBUG)

    # Add the custom deduplication handler to the logger
    dedup_handler = DeduplicationHandler()
    dedup_handler.setFormatter(logging.Formatter('%(asctime)s - %(levelname)s - %(message)s'))
    logger.addHandler(dedup_handler)

    # Example log messages
    logger.info("This is a test log message.")
    logger.info("This is a test log message.")  # This duplicate message will be ignored
    logger.error("This is an error message.")
    logger.error("This is an error message.")  # This duplicate message will be ignored

Output:

    2024-09-03 12:48:57,625 - INFO - This is a test log message.
    2024-09-03 12:48:57,625 - ERROR - This is an error message.

In the code, we use a DeduplicationHandler that extends logging.Handler and overrides the emit method. This handler uses a logged_messages set to track and prevent duplicate messages. When a log message is emitted, the emit method checks if the message is already in the set. If it's new, it logs the message and adds it to the set; if it's a duplicate, it's ignored. We add this handler to the logger and set the logger to the desired log level.

Strategies for logging in multi-threaded environments

In multi-threaded applications, use thread-safe logging techniques to ensure that log messages from multiple threads are handled correctly and consistently:

Use logging.getLogger() to get logger instances: The logging module is thread-safe, so it handles concurrency issues internally. A logger returned by logging.getLogger(name) can be shared between threads, because the logging module manages internal locks to ensure that log records are processed correctly even when multiple threads generate logs simultaneously. Let's take an example:

    import logging

    # Get a thread-safe logger instance
    logger = logging.getLogger('my_logger')
    logger.setLevel(logging.DEBUG)

Here, the logger instance returned by getLogger() is thread-safe. You can use this logger in different threads without worrying about race conditions or data corruption.

Consider using QueueHandler and QueueListener for asynchronous logging: For applications with high logging volume, using QueueHandler and QueueListener can help manage logging asynchronously and reduce contention between threads. QueueHandler sends log records to a queue, which QueueListener processes in a separate thread.

    import logging
    import logging.handlers
    import queue
    import threading

    # Create a queue for log records
    log_queue = queue.Queue()

    # Define a QueueHandler to put log records into the queue
    queue_handler = logging.handlers.QueueHandler(log_queue)

    # Create a logger and add the QueueHandler
    logger = logging.getLogger('my_async_logger')
    logger.setLevel(logging.DEBUG)
    logger.addHandler(queue_handler)

    # Define a handler to process log records from the queue
    console_handler = logging.StreamHandler()
    console_handler.setFormatter(logging.Formatter('%(asctime)s - %(message)s'))

    # Define a QueueListener to process log records
    queue_listener = logging.handlers.QueueListener(log_queue, console_handler)
    queue_listener.start()

    # Function to log messages from multiple threads
    def worker():
        logger.info('Log message from worker thread')

    # Start threads
    threads = [threading.Thread(target=worker) for _ in range(5)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

    # Stop the QueueListener when done
    queue_listener.stop()

This setup allows multiple threads to log messages concurrently without blocking each other, as the QueueListener handles log processing asynchronously. After all threads finish logging, the QueueListener is stopped to ensure all messages are processed.

Implement locks if you're creating custom handlers that aren't inherently thread-safe: If you create custom log handlers or perform logging in complex multi-threaded environments, you might need to ensure that these custom components are thread-safe. For instance, if your custom handler writes to a shared file or database, you should use threading locks to prevent concurrent write operations from causing issues.

    import logging
    import threading

    class ThreadSafeHandler(logging.Handler):
        # One lock shared by every instance, because they all write to the same file.
        # It is deliberately not stored in self.lock: logging.Handler keeps its own
        # lock there and holds it while emit() runs.
        _file_lock = threading.Lock()

        def emit(self, record):
            with self._file_lock:
                # Example: Write log message to a file
                with open('logfile.log', 'a') as f:
                    f.write(self.format(record) + '\n')

    # Configure logger to use the custom thread-safe handler
    logger = logging.getLogger('thread_safe_logger')
    handler = ThreadSafeHandler()
    handler.setFormatter(logging.Formatter('%(asctime)s - %(message)s'))
    logger.addHandler(handler)
    logger.setLevel(logging.DEBUG)

In this example, ThreadSafeHandler uses a lock to synchronize access to the file, ensuring that log messages from different threads are written safely. The lock is kept separate from the handler's built-in self.lock, which the base class already acquires around every emit call; replacing self.lock with a plain threading.Lock and acquiring it again inside emit would block forever.

Best Practices for Python Logging Configuration

To maintain clean and efficient logging:

- Centralize logging configuration: Use a single configuration file or function to set up logging across your application.

- Utilize configuration files: Use YAML or JSON files for logging configuration, making it easy to adjust settings without code changes.

    # logging_config.yaml
    version: 1
    disable_existing_loggers: False
    handlers:
      console:
        class: logging.StreamHandler
        level: INFO
    root:
      level: INFO
      handlers: [console]

This YAML file sets up a simple logging configuration with a console handler that logs messages at the INFO level and above.

- Implement environment-specific logging: Adjust logging behavior based on the application's environment (development, staging, production). This ensures that you get the right level of detail depending on where the application is running.

    import os
    import yaml
    import logging.config

    env = os.getenv('ENVIRONMENT', 'development')
    with open(f'logging_config_{env}.yaml', 'r') as f:
        config = yaml.safe_load(f.read())

    logging.config.dictConfig(config)

This code dynamically loads a configuration file based on the ENVIRONMENT variable. For instance, logging_config_development.yaml might include debug-level logging, while logging_config_production.yaml would only include critical errors.

- Regularly audit logging behavior: Periodically review your logs to ensure they're providing the necessary information without redundancy and ensure that log outputs align with your application's needs.

Debugging Techniques for Persistent Duplicate Logs

If you're still seeing duplicates after applying the above solutions:

- Temporary log isolation: Disable all but one logger to identify the source of duplication. By disabling loggers one by one, you can isolate which logger is causing the duplicates. This helps in narrowing down the root cause of the issue.

logging.getLogger('suspected_logger').disabled = True

Leverage logging.Logger.disabled: Temporarily disable loggers to isolate the issue. Set logger.disabled = True for specific loggers that you suspect might be causing the duplicates. This approach helps you confirm whether a particular logger is involved in generating duplicate messages.

    import logging

    # Disable the logger that might be causing duplicates
    logger = logging.getLogger('app.module')
    logger.disabled = True

    # Perform your tests or operations here

    # Re-enable the logger after testing
    logger.disabled = False

This method allows you to control which loggers are active and helps determine if disabling certain loggers resolves the duplication problem.

Use log analysis tools: Tools like log_analyzer can help you detect patterns, anomalies, or redundancy in your log messages. They often provide features like filtering, sorting, and visualizing log data to make it easier to spot issues.

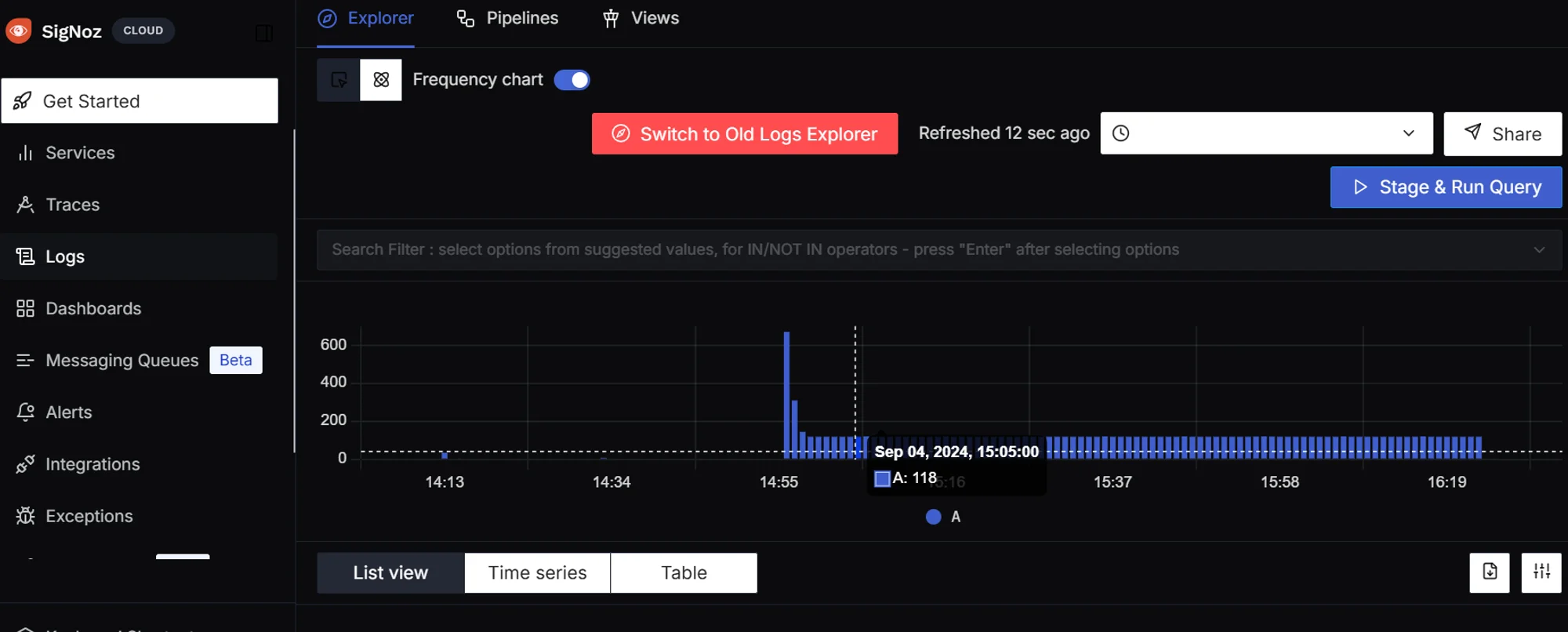
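When no dedicated tool is at hand, a few lines of Python can give a first impression of how much redundancy a log contains. This is a rough sketch with a hypothetical helper name, repeated_lines; it compares whole lines, so for real logs any timestamp prefix would need to be stripped first.

```python
from collections import Counter

def repeated_lines(lines, min_count=2):
    """Return (line, count) pairs for lines seen at least `min_count` times, most frequent first."""
    counts = Counter(line.rstrip("\n") for line in lines)
    return [(line, n) for line, n in counts.most_common() if n >= min_count]

# Made-up sample data standing in for the contents of a log file.
sample = [
    "INFO job started\n",
    "INFO job started\n",
    "ERROR disk full\n",
]
print(repeated_lines(sample))  # [('INFO job started', 2)]
```

The same function accepts an open file object, since iterating over a file yields its lines.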

Enhancing Logging with SigNoz for Distributed Systems

For complex, distributed applications, consider using advanced observability tools like SigNoz. SigNoz offers:

- Distributed tracing to correlate logs across services. By correlating logs with traces, you can see the end-to-end journey of requests through your system, making it easier to identify performance bottlenecks or errors.

- Performance metrics alongside logs for comprehensive debugging and to give you a broader view of system behavior, helping you understand the context of logs and troubleshoot issues more effectively.

- Custom dashboards for visualizing log data and performance metrics in a way that suits your needs, facilitating better monitoring and analysis.

Get started with SigNoz by integrating SigNoz with your Python application:

Install the required packages:

pip install flask opentelemetry-api opentelemetry-sdk opentelemetry-exporter-otlp

We can then set up a sample Flask app that sends its logs to SigNoz using OpenTelemetry:

    import logging

    from flask import Flask
    from opentelemetry import trace
    from opentelemetry.sdk.resources import Resource
    from opentelemetry.sdk.trace import TracerProvider
    from opentelemetry.sdk.trace.export import BatchSpanProcessor
    from opentelemetry.exporter.otlp.proto.grpc.trace_exporter import OTLPSpanExporter
    from opentelemetry.sdk._logs import LoggerProvider, LoggingHandler
    from opentelemetry.sdk._logs.export import BatchLogRecordProcessor
    from opentelemetry.exporter.otlp.proto.grpc._log_exporter import OTLPLogExporter

    # Configure OpenTelemetry resources
    resource = Resource(attributes={"service.name": "flask-app"})

    # Trace configuration
    trace.set_tracer_provider(TracerProvider(resource=resource))
    tracer = trace.get_tracer(__name__)
    otlp_span_exporter = OTLPSpanExporter(
        endpoint="http://localhost:4317",  # Local SigNoz endpoint
        insecure=True  # Set to True if using HTTP
    )
    span_processor = BatchSpanProcessor(otlp_span_exporter)
    trace.get_tracer_provider().add_span_processor(span_processor)

    # Log configuration
    logger_provider = LoggerProvider(resource=resource)
    otlp_log_exporter = OTLPLogExporter(
        endpoint="http://localhost:4317",  # Local SigNoz endpoint
        insecure=True  # Set to True if using HTTP
    )
    logger_provider.add_log_record_processor(BatchLogRecordProcessor(otlp_log_exporter))
    otel_handler = LoggingHandler(level=logging.INFO, logger_provider=logger_provider)

    # Configure root logger
    root_logger = logging.getLogger()
    root_logger.setLevel(logging.INFO)
    root_logger.addHandler(otel_handler)

    # Initialize Flask app
    app = Flask(__name__)

    @app.route('/')
    def hello():
        with tracer.start_as_current_span("main"):
            root_logger.info("This log will be correlated with the trace")
            return "Hello, SigNoz!"

    if __name__ == '__main__':
        app.run(host='0.0.0.0', port=5000)

To verify, navigate to the SigNoz Logs Dashboard and check whether the logs have been received.

By correlating logs with traces, SigNoz provides a powerful tool for debugging complex systems and identifying the root cause of issues, including duplicate logs.

Key Takeaways

- Always check for multiple handlers and logger propagation

- Use propagate=False on child loggers that have their own handlers to prevent duplication

- Centralize logging configuration for consistency

- Regularly audit and test logging behavior across modules

- Consider advanced tools like SigNoz for complex, distributed systems

FAQs

Why do my Python logs appear twice even with a simple setup?

This often occurs when you have handlers attached to both the root logger and a custom logger. Ensure you're not adding redundant handlers and consider setting propagate=False on your custom logger.

How can I prevent log duplication in Django applications?

Review Django's default logging configuration in settings.py. Customize it to suit your needs without adding extra handlers. Use Django's LOGGING dictionary to configure loggers, handlers, and formatters consistently.

What's the difference between StreamHandler and FileHandler in Python logging?

StreamHandler outputs logs to a stream (usually sys.stdout or sys.stderr), which typically displays in the console. FileHandler writes log messages to a file. Choose based on where you want your logs to appear.

Can log duplication affect application performance?

Yes, especially in high-volume logging scenarios. Duplicate logs can increase I/O operations, consume more storage, and make log analysis more time-consuming. It's crucial to eliminate duplicates for both clarity and performance reasons.