OpenTelemetry Collector vs Exporter - Key Differences Explained

When implementing OpenTelemetry in your applications, understanding its data pipeline components is essential. Among these, collectors and exporters often cause confusion due to their complementary but distinct roles. The decision to use a collector, an exporter, or both significantly influences the efficiency, scalability, and flexibility of your observability pipeline. Let’s explore how these components fit into the broader telemetry ecosystem and why the right choice matters.

Quick Guide: Collector vs Exporter

Collectors and Exporters both play critical roles in an OpenTelemetry pipeline, but they serve different purposes. Here's a quick comparison:

| Aspect | Collector | Exporter |
|---|---|---|
| Purpose | Aggregates, processes, and routes telemetry data from multiple sources to multiple destinations. | Sends telemetry data to a specific backend or observability platform. |
| Functionality | Acts as a central hub, performing operations like batching, filtering, sampling, and transformation of data. | Simply transfers data from the application or collector to the designated backend system. |
| Deployment | Runs as a standalone service, typically deployed independently of the instrumented application. | Embedded within the application, Collector, or SDK to communicate with the backend. |
| Flexibility | Supports complex pipelines, enabling data from various sources to be routed to multiple destinations. | Limited to handling a single destination per instance. |
| Integration | Works with multiple protocols, receivers, and exporters to support diverse data sources and backends. | Specific to the backend being used (e.g., Prometheus, Elasticsearch, Jaeger). |
| Use Case | Ideal for centralizing telemetry data collection and processing in distributed systems. | Used for direct communication between the application or collector and a backend system. |
| Examples | OpenTelemetry Collector, Fluent Bit. | Prometheus Exporter, OTLP Exporter, Jaeger Exporter. |

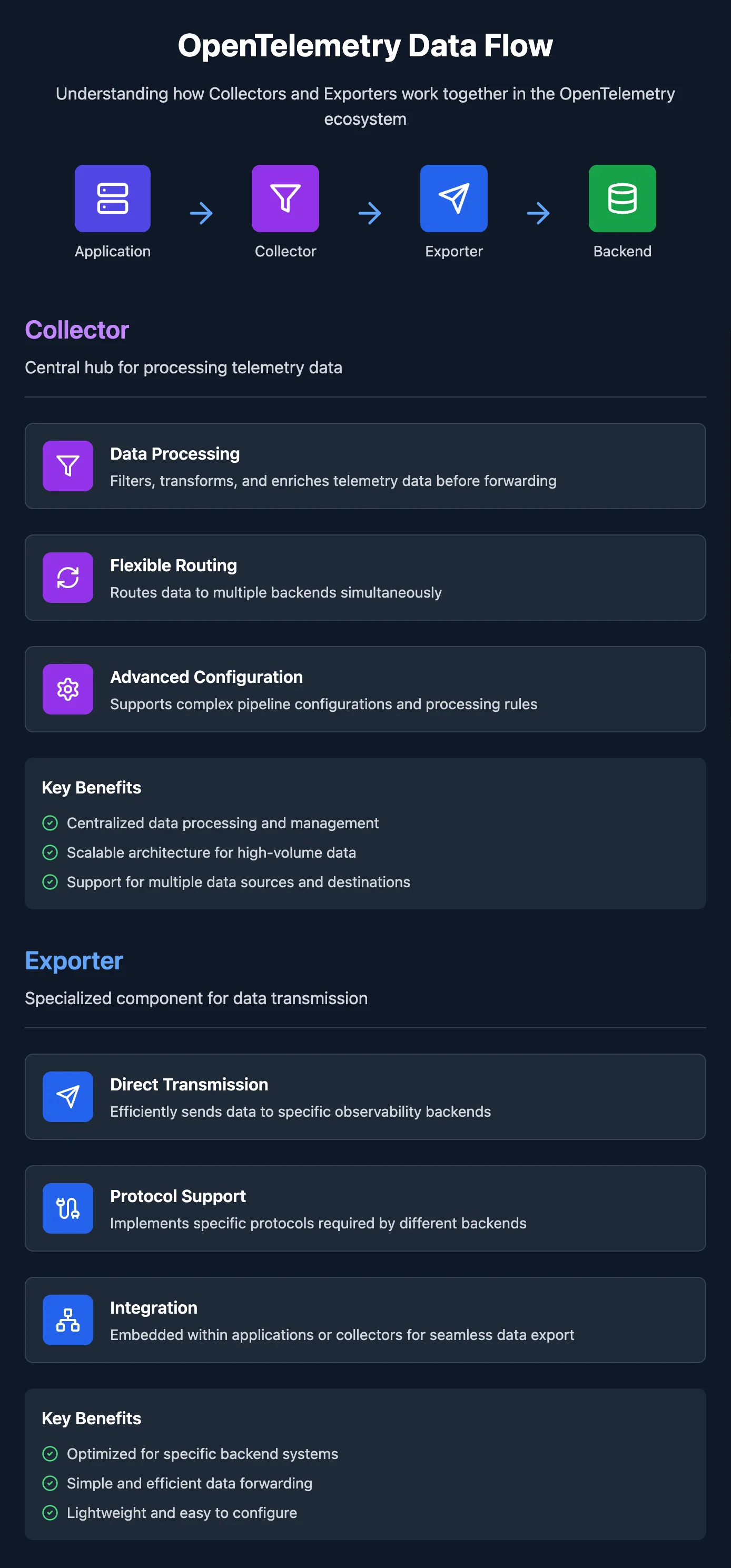

As shown in the infographic above, Collectors act as a central processing hub that can handle multiple data sources and destinations, while Exporters specialize in efficiently transmitting data to specific backends. Together, these components form a flexible and scalable foundation for modern observability pipelines.

Understanding OpenTelemetry Components

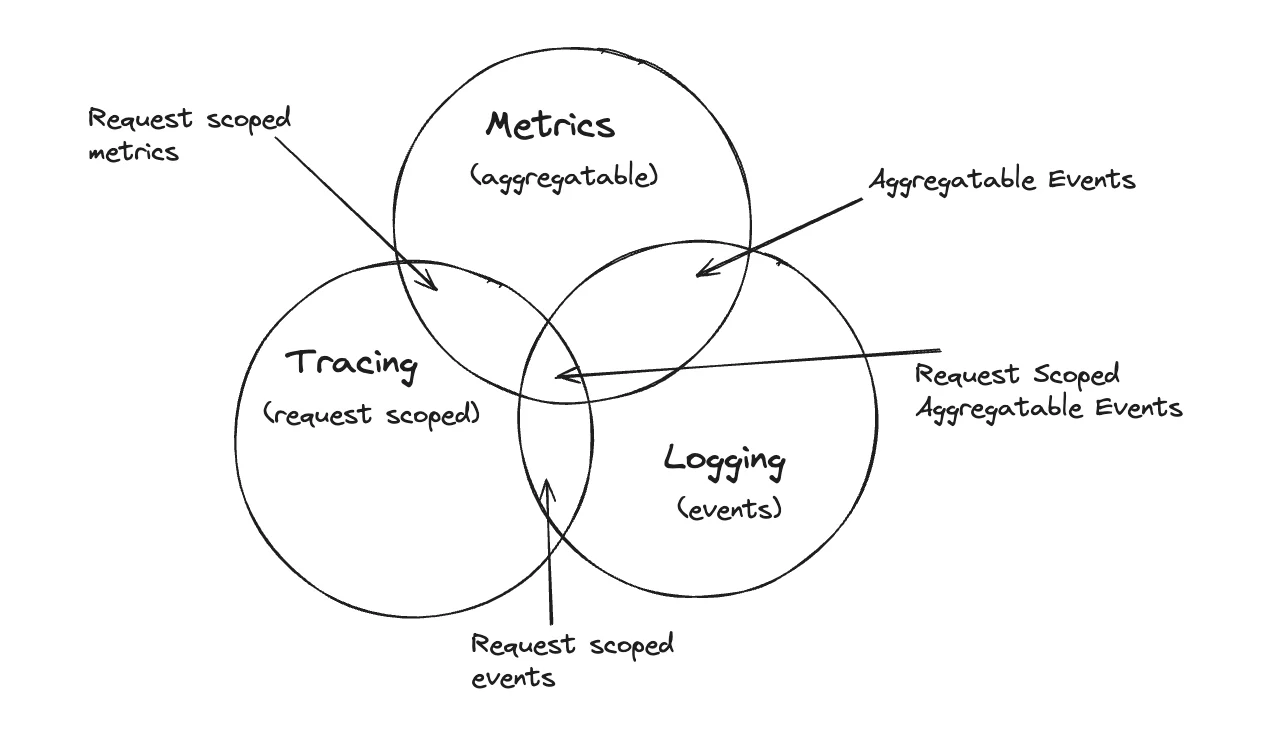

Telemetry data refers to the collection of data points that provide insights into the performance, behavior, and health of software systems. In the context of observability, telemetry data typically falls into three categories:

- Metrics: Metrics are quantitative measurements that represent different aspects of an application's performance numerically. They provide a high-level overview of the functionality and state of the system. Common metrics include resource utilization (CPU, memory, and disk usage), error rates (how frequently errors occur), and response times (how long the application takes to respond to a request).

- Traces: Traces provide detailed records of transactions as they flow through an application. They track the path of individual requests and show how they interact with various system components. Traces can show how a user request moves through different services, databases, and third-party APIs. They highlight the duration of each step, helping to identify slow or failing components.

- Logs: Logs are text-based records of events and errors within an application. They provide a chronological record of what has happened within the system. Logs can include error messages, debug information, and audit trails. They record events such as user logins, database queries, and application errors.
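To make the description of traces more concrete, here is a minimal Python sketch that models spans as plain records and finds the slowest step in one request. The `Span` class, its field names, and the sample service names are illustrative assumptions, not the OpenTelemetry SDK's data model.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Span:
    """Simplified, hypothetical span: one timed step of a single request."""
    trace_id: str             # shared by every span in the same request
    span_id: str              # unique within the trace
    parent_id: Optional[str]  # None marks the root span
    name: str
    duration_ms: float


def slowest_child(spans: List[Span]) -> Span:
    """Return the slowest non-root span, i.e. the step most worth investigating."""
    children = [s for s in spans if s.parent_id is not None]
    return max(children, key=lambda s: s.duration_ms)


# One request that passes through an auth service and a database query.
trace = [
    Span("t1", "a", None, "GET /checkout", 120.0),
    Span("t1", "b", "a", "auth-service", 15.0),
    Span("t1", "c", "a", "db.query", 90.0),
]
bottleneck = slowest_child(trace)
print(bottleneck.name, bottleneck.duration_ms)
```

Because every span carries the trace ID and its parent's span ID, a backend can rebuild the full request path and compare step durations in this way.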

Why Is Telemetry Data Important?

Telemetry data plays a crucial role in modern software systems, offering insights into how applications and infrastructure perform. Here's why it matters:

- Enhanced Visibility: Telemetry data provides a continuous and real-time overview of a system's behavior and health. By monitoring this data, teams can detect potential problems before they escalate, ensuring systems remain stable and reliable.

- Root Cause Analysis: In complex distributed systems, identifying the cause of a failure or performance issue can be challenging. Telemetry data, such as logs and traces, helps pinpoint where and why a problem occurred, making it faster and easier to resolve issues.

- Optimized Performance: With access to detailed metrics and trends, organizations can make informed decisions to optimize system performance. This might include reallocating resources, fine-tuning application behavior, or improving user experiences.

- Compliance and Auditing: Logs and metrics serve as verifiable records for security, compliance, and regulatory needs. They ensure systems are operating within defined guidelines and provide a transparent trail for audits when needed.

Overview of OpenTelemetry’s Architecture

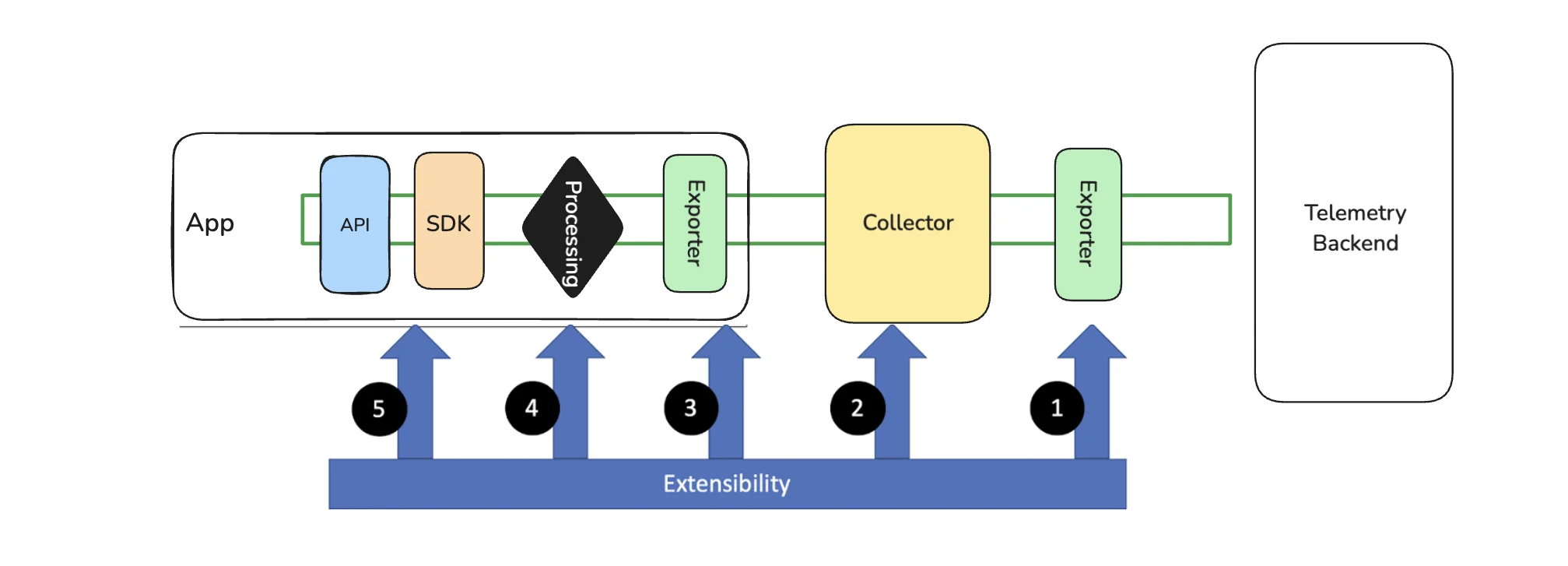

OpenTelemetry is an open-source framework designed to standardize the collection, processing, and export of telemetry data such as metrics, logs, and traces. It simplifies observability by offering a unified approach to capturing system insights while allowing seamless integration with various observability backends like Prometheus, Jaeger, SigNoz and Elasticsearch.

OpenTelemetry's modular architecture is built to be flexible and extensible, ensuring it can fit into diverse observability pipelines. The core components of its architecture include:

- Instrumentation Libraries: These are libraries added to applications to generate telemetry data such as traces, metrics, and logs. They can be integrated manually or automatically using agents. OpenTelemetry SDKs provide the tools needed for this instrumentation process, making it easier to capture and monitor application behavior.

- SDKs & API: OpenTelemetry provides SDKs and APIs that work together to collect, process, and export telemetry data. SDKs are libraries that manage data collection and send it to a backend or a Collector, while the API offers a consistent way to instrument applications across different programming languages.

- Collector: The OpenTelemetry Collector is an independent service designed to receive telemetry data from multiple sources, process it, and export it to one or more observability platforms. It acts as a centralized hub for managing telemetry pipelines.

- Exporters: Exporters are components that send telemetry data from applications or the Collector to specific observability backends, such as Prometheus, Jaeger, or Datadog. They ensure that data is formatted and transmitted in a way the backend can understand.

- OpenTelemetry Protocol (OTLP): OTLP is an open-source, vendor-neutral protocol used to transfer telemetry data such as traces, metrics, and logs. It defines how data is structured, encoded, and transmitted across networks. The OTLP exporter is responsible for sending this data to backends for further analysis and visualization.

The modular design ensures interoperability and scalability, making OpenTelemetry adaptable to various environments.

Role of Different Components in the Data Pipeline

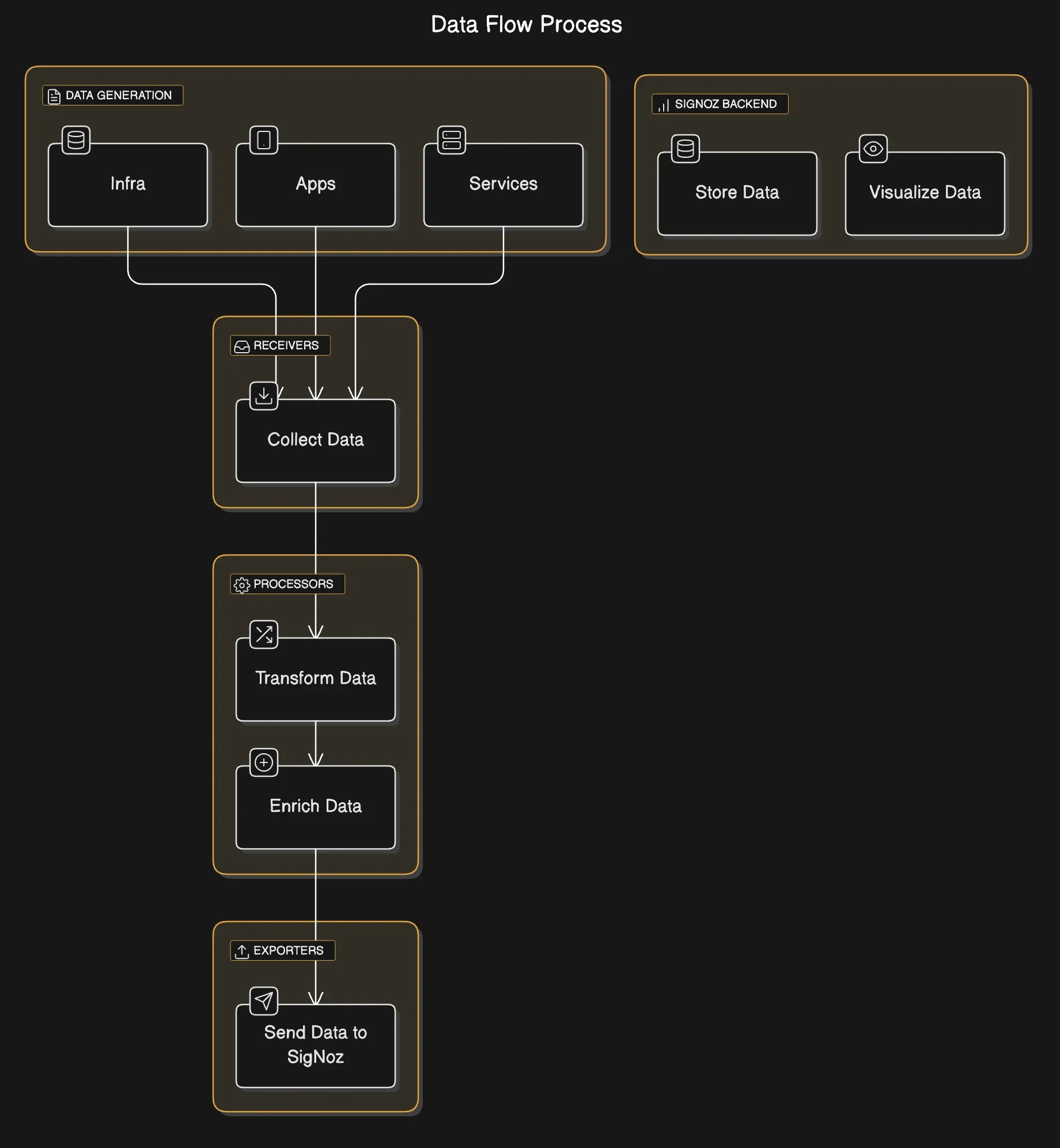

OpenTelemetry simplifies telemetry management by breaking it into distinct roles within the pipeline:

- Receivers: The entry point for telemetry data, responsible for collecting it from sources such as applications, agents, or other Collectors. Examples include the OTLP receiver (which accepts data over gRPC and HTTP) and the Kafka receiver.

- Processors: Intermediate components that modify or enrich the telemetry data. For example, processors may filter sensitive data, aggregate metrics, or apply sampling rules for traces.

- Exporters: Responsible for sending telemetry data to a specific backend or storage system. Each backend (e.g., Prometheus, Elasticsearch) typically requires its corresponding Exporter.

- Collector: Functions as a central hub that combines the above components. It allows for complex pipelines, enabling data from various sources to be processed and routed to multiple destinations.

This separation of responsibilities ensures that the telemetry pipeline remains efficient, modular, and easy to manage.
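The division of roles above can be sketched in a few lines of Python. This is a conceptual model only: the function names (`drop_debug`, `add_environment`, `run_pipeline`) and the record shape are assumptions made for illustration, and the real Collector is a Go service configured through YAML rather than code like this.

```python
from typing import Callable, Dict, Iterable, List

Record = Dict[str, object]
Processor = Callable[[List[Record]], List[Record]]
Exporter = Callable[[List[Record]], None]


def drop_debug(records: List[Record]) -> List[Record]:
    """Processor: filter out low-value records."""
    return [r for r in records if r.get("severity") != "DEBUG"]


def add_environment(records: List[Record]) -> List[Record]:
    """Processor: enrich every record with an extra attribute."""
    return [{**r, "env": "staging"} for r in records]


def run_pipeline(received: Iterable[Record],
                 processors: List[Processor],
                 exporters: List[Exporter]) -> List[Record]:
    """Receive -> process in order -> hand the result to every exporter."""
    data = list(received)
    for process in processors:
        data = process(data)
    for export in exporters:
        export(data)
    return data


# Two in-memory lists stand in for two separate backends.
backend_a: List[Record] = []
backend_b: List[Record] = []
result = run_pipeline(
    received=[
        {"body": "started", "severity": "INFO"},
        {"body": "cache miss", "severity": "DEBUG"},
    ],
    processors=[drop_debug, add_environment],
    exporters=[backend_a.extend, backend_b.extend],
)
```

The order of the processor list matters, and each exporter receives the same processed data, which is what makes fan-out to several backends straightforward.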

Common Misconceptions About Collectors and Exporters

Despite their distinct roles, collectors and exporters are often misunderstood. Here are a few common misconceptions:

- Misconception: Collectors are mandatory for OpenTelemetry setups.

- Reality: While Collectors are powerful and versatile, they are not required in simple setups. Applications can use Exporters directly to send telemetry data to backends.

- Misconception: Exporters can process and enrich telemetry data.

- Reality: Exporters are designed for data forwarding only. Processing tasks, such as filtering or enriching data, are handled by Collectors or application code.

- Misconception: Collectors and Exporters are interchangeable.

- Reality: Collectors and Exporters complement each other. The Collector acts as a centralized processing hub, while Exporters serve as the final step to deliver data to specific backends.

What is an OpenTelemetry Collector?

The OpenTelemetry Collector is a core component of the OpenTelemetry ecosystem that simplifies and enhances observability. It is a vendor-agnostic, flexible tool that acts as a central hub for collecting, processing, and exporting telemetry data such as metrics, logs, and traces. Think of it as a smart mail sorting facility: it gathers data from multiple sources, processes it based on your requirements, and then routes it to one or more observability backends.

Core Functionality and Purpose

The primary role of the OpenTelemetry Collector is to simplify and optimize the telemetry pipeline. It decouples telemetry data generation from backend-specific integrations, providing flexibility and scalability in managing observability data.

Key responsibilities include:

- Receiving Data: Ingests telemetry data from various sources using different protocols (e.g., OTLP, Jaeger, Zipkin).

- Processing Data: Applies transformations, filtering, or enrichment to ensure the data is clean, optimized, and ready for export.

- Exporting Data: Forwards telemetry data to one or more backends, including Prometheus, Elasticsearch, Jaeger, Datadog, and others.

By offloading processing and export tasks from applications, the Collector reduces overhead on your services while providing a centralized point of control for telemetry pipelines.

Key Features of Collectors

The OpenTelemetry Collector is designed with modularity and flexibility in mind. Key features include:

- Receivers:

Receivers are the starting point of the OpenTelemetry Collector’s pipeline. They are responsible for ingesting telemetry data from various sources, such as applications, agents, or other collectors. With support for multiple protocols like gRPC, HTTP, Kafka, and OTLP, receivers enable the Collector to integrate with diverse systems. This flexibility ensures that the Collector can accommodate a wide range of telemetry formats and seamlessly connect with distributed applications.

Example:

An OTLP receiver to collect trace data:

    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: 0.0.0.0:4317
          http:
            endpoint: 0.0.0.0:4318

- Processors

Processors enhance and optimize telemetry data after it is ingested. They perform tasks like batching, filtering, sampling, and data enrichment. For instance, the Batch Processor groups telemetry data into manageable chunks to improve export efficiency, while the Attributes Processor adds or removes metadata, such as custom tags, to align the data with business needs. Processors play a crucial role in improving the quality and usability of telemetry data before it reaches its destination.

Example:

Batching telemetry data for efficiency:

    processors:
      batch:
        timeout: 1s
        send_batch_size: 512
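To illustrate how a size limit and a timeout interact, the following Python sketch flushes a batch either when it reaches `send_batch_size` items or when the oldest buffered item has waited for the timeout. The `Batcher` class, its `tick` method, and the injectable clock are hypothetical names chosen for this example; the Collector's actual batch processor is implemented differently.

```python
import time
from typing import Callable, List, Optional


class Batcher:
    """Hypothetical batcher: flush on size or on age, whichever comes first."""

    def __init__(self, export: Callable[[List[object]], None],
                 send_batch_size: int = 512, timeout_s: float = 1.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._export = export
        self._size = send_batch_size
        self._timeout = timeout_s
        self._clock = clock
        self._items: List[object] = []
        self._started: Optional[float] = None  # arrival time of the oldest item

    def add(self, item: object) -> None:
        if not self._items:
            self._started = self._clock()
        self._items.append(item)
        if len(self._items) >= self._size:
            self.flush()

    def tick(self) -> None:
        """Call periodically; flushes a partial batch once it is old enough."""
        if self._items and self._clock() - self._started >= self._timeout:
            self.flush()

    def flush(self) -> None:
        if self._items:
            batch, self._items = self._items, []
            self._export(batch)


# Demo with a fake clock so the behaviour is deterministic.
now = [0.0]
sent: List[List[object]] = []
batcher = Batcher(sent.append, send_batch_size=3, timeout_s=1.0,
                  clock=lambda: now[0])
for i in range(4):
    batcher.add(i)   # the first three items are flushed on size
now[0] = 1.5
batcher.tick()       # the leftover item is flushed on timeout
```

A small timeout keeps latency low for quiet services, while the size limit bounds memory use and payload size for busy ones.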

- Exporters

Exporters handle the delivery of processed telemetry data to observability backends. They support a wide array of systems, including Prometheus, Jaeger, Elasticsearch, and OTLP, ensuring compatibility with popular monitoring and analysis tools. Exporters format the data as required by the backend, manage network communication, and enhance reliability through features like batching and retries.

Example:

An OTLP exporter configured to send data to a backend:

    exporters:
      otlp:
        endpoint: backend-service:4317

- Pipelines

Pipelines are the workflows that link receivers, processors, and exporters to handle specific types of telemetry data, such as metrics, traces, or logs. Each pipeline is highly configurable, allowing you to tailor the data flow according to your observability requirements. This modular approach enables the Collector to process and route telemetry data efficiently, regardless of the complexity of the system.

Example:

Basic pipeline configuration example for handling metrics, logs and traces:

    service:
      pipelines:
        metrics:
          receivers: [otlp]
          processors: [batch]
          exporters: [otlp]
        traces:
          receivers: [otlp]
          processors: [batch]
          exporters: [otlp]
        logs:
          receivers: [otlp]
          processors: [batch]
          exporters: [otlp]
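Each name listed in a pipeline must refer to a component defined in the corresponding top-level section. As a rough illustration of that relationship, the Python sketch below checks a configuration (already loaded into a dictionary) for pipeline entries that point at undefined components. The `undefined_components` helper and the deliberately missing `debug` exporter entry are assumptions for this example; the Collector performs its own validation at startup.

```python
from typing import List


def undefined_components(config: dict) -> List[str]:
    """List pipeline entries that reference a component with no definition."""
    problems = []
    for signal, pipeline in config["service"]["pipelines"].items():
        for kind in ("receivers", "processors", "exporters"):
            defined = config.get(kind, {})
            for name in pipeline.get(kind, []):
                if name not in defined:
                    problems.append(
                        f"{signal}: {kind[:-1]} '{name}' is not defined")
    return problems


config = {
    "receivers": {"otlp": {"protocols": {"grpc": {"endpoint": "0.0.0.0:4317"}}}},
    "processors": {"batch": {"timeout": "1s", "send_batch_size": 512}},
    "exporters": {"otlp": {"endpoint": "backend-service:4317"}},
    "service": {
        "pipelines": {
            "traces": {"receivers": ["otlp"], "processors": ["batch"],
                       "exporters": ["otlp"]},
            # This pipeline names an exporter that is missing from "exporters".
            "logs": {"receivers": ["otlp"], "processors": ["batch"],
                     "exporters": ["debug"]},
        }
    },
}
problems = undefined_components(config)
```

Defining a component without listing it in any pipeline is the opposite mistake: the component exists in the file but never handles data.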

To learn more about the OpenTelemetry Collector, check out our OpenTelemetry Collector: Architecture and Configuration Guide.

Deployment Models: Agent vs Gateway

The OpenTelemetry Collector can be deployed in two primary ways, depending on your system’s needs: Agent Deployment or Gateway Deployment.

Agent Deployment:

In the agent deployment model, the Collector runs alongside applications, typically as a sidecar container in environments like Kubernetes or as a local daemon on standalone systems. The primary purpose of this deployment is to act as a lightweight, localized telemetry collector that captures data generated by the application it accompanies. This model is ideal for scenarios where minimal latency is crucial, such as collecting telemetry data from a single microservice within a Kubernetes pod. By being colocated with the application, it simplifies telemetry collection and minimizes the overhead associated with data transmission.

Gateway Deployment:

In the gateway deployment model, the Collector operates as a centralized service that aggregates telemetry data from multiple sources across the infrastructure. Its purpose is to process, transform, and manage data from a range of applications and services before forwarding it to one or more observability backends. This deployment is particularly useful in distributed environments, such as Kubernetes clusters, where centralizing telemetry management simplifies data flow and improves scalability. For example, a gateway Collector can aggregate telemetry from an entire cluster and export it efficiently to platforms like Prometheus or Jaeger.

Choosing a Model

Deciding between agent and gateway deployment depends on the scale and complexity of your system. The agent deployment model is well-suited for lightweight setups or single-node applications, where the focus is on reducing resource usage and keeping telemetry collection close to the source. Conversely, the gateway deployment model is ideal for distributed systems, offering centralized processing and the flexibility to manage and route telemetry data to multiple destinations efficiently.

Benefits of Using Collectors

The OpenTelemetry Collector offers several benefits that enhance the flexibility, scalability, and efficiency of your observability pipeline, making it a cornerstone for modern monitoring strategies. They include:

- Vendor Neutrality: The OpenTelemetry Collector enables a vendor-agnostic observability pipeline by decoupling telemetry data collection from specific backends. This means you can seamlessly switch or integrate with multiple observability tools, such as Prometheus, Jaeger, or Elasticsearch, without altering your instrumentation. This flexibility empowers organizations to adapt their observability strategy as requirements evolve.

- Centralized Processing: By consolidating data collection, processing, and exporting in one component, the Collector simplifies telemetry management. Instead of configuring multiple agents or exporters for different applications, the Collector acts as a single hub to manage telemetry pipelines. This centralization not only reduces complexity but also ensures consistent processing rules across all data sources.

- Scalability: The Collector is built to handle large volumes of telemetry data efficiently. Its modular pipelines and batching capabilities allow it to scale with your infrastructure, accommodating growing workloads without compromising performance. Whether you're monitoring a single application or a distributed system with thousands of services, the Collector can be configured to manage the data effectively.

- Flexibility: The Collector is highly configurable, supporting a variety of protocols (e.g., OTLP, HTTP, gRPC) and backend systems. It also allows for custom transformations and enrichment of telemetry data through processors, enabling you to tailor the pipeline to meet specific organizational or operational needs. This adaptability makes it a versatile tool for diverse observability setups.

- Reduced Overhead on Applications: By offloading the processing and exporting of telemetry data to the Collector, applications are freed from the burden of managing these tasks. This improves application performance and ensures that telemetry responsibilities do not interfere with critical business processes. Developers can focus on building features, knowing that the Collector is handling the telemetry pipeline efficiently.

By leveraging these benefits, the OpenTelemetry Collector becomes a cornerstone of scalable and robust observability practices, suitable for both small-scale setups and complex, distributed systems.

What is an OpenTelemetry Exporter?

An OpenTelemetry Exporter is a critical component in the observability pipeline that sends telemetry data—logs, metrics, and traces—from OpenTelemetry SDKs or Collectors to backend systems for storage, analysis, and visualization. Acting as a bridge between OpenTelemetry components (like SDKs and Collectors) and observability platforms, it ensures data compatibility, efficient transmission, and reliable delivery.

The Exporter’s main role is to:

- Format Data: Exporters convert telemetry data into the required format (e.g., OTLP, Prometheus, or Jaeger) to ensure it is compatible with the backend.

- Transmit Data: They handle communication protocols (e.g., gRPC, HTTP) to send data efficiently over the network.

- Ensure Reliability: Many exporters implement batching, retries, and buffering to improve performance and prevent data loss.
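The split between formatting and transmitting can be sketched as a small class. This is a conceptual example, not a real OpenTelemetry exporter: `JsonLinesExporter`, the JSON-lines wire format, and the `send` callable are all assumptions chosen to keep the example self-contained.

```python
import json
from typing import Callable, Dict, List


class JsonLinesExporter:
    """Hypothetical exporter: formats records, then hands them to a transport."""

    def __init__(self, send: Callable[[bytes], bool]) -> None:
        # `send` stands in for the network call (gRPC, HTTP, ...) and
        # returns True when the backend accepted the payload.
        self._send = send

    def export(self, records: List[Dict[str, object]]) -> bool:
        # Format: one JSON document per line, in the shape the backend expects.
        payload = "\n".join(
            json.dumps(r, sort_keys=True) for r in records
        ).encode("utf-8")
        # Transmit: delegate delivery and report success or failure.
        return self._send(payload)


received: List[bytes] = []


def fake_send(payload: bytes) -> bool:
    """In-memory transport used in place of a real backend."""
    received.append(payload)
    return True


exporter = JsonLinesExporter(fake_send)
ok = exporter.export([{"name": "db.query", "duration_ms": 90}])
```

Keeping the transport behind a single callable makes it easy to wrap with batching or retries without touching the formatting logic.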

Types of Available Exporters

OTLP Exporters

The OpenTelemetry Protocol (OTLP) is the native protocol for OpenTelemetry, designed for maximum compatibility and performance. OTLP exporters are:

- Supported by most observability platforms.

- Capable of handling logs, metrics, and traces seamlessly.

- Ideal for modern setups prioritizing interoperability and scalability.

Vendor-Specific Exporters

These exporters are tailored to specific backend systems provided by observability vendors. They are:

- Optimized for the unique features of the vendor’s platform.

- Often provided as part of the vendor’s OpenTelemetry SDK distribution.

- Examples include AWS X-Ray, Datadog, and New Relic exporters.

Standard Protocol Exporters

For legacy or established systems, exporters supporting protocols like Jaeger and Zipkin are widely used. These:

- Ensure compatibility with existing observability setups.

- Are suitable for organizations transitioning to OpenTelemetry while retaining legacy systems.

Configuration Options

Configuring an exporter involves specifying the target backend, customizing connection settings, and enabling performance features like batching.

Below is an example configuration in Python:

    # Example Python exporter configuration
    from opentelemetry import trace
    from opentelemetry.exporter.otlp.proto.grpc.trace_exporter import OTLPSpanExporter
    from opentelemetry.sdk.trace import TracerProvider
    from opentelemetry.sdk.trace.export import BatchSpanProcessor

    # Set up the tracer provider
    tracer_provider = TracerProvider()

    # Configure the OTLP exporter
    otlp_exporter = OTLPSpanExporter(
        endpoint="http://collector:4317",  # Replace with your collector's endpoint
    )

    # Attach a batch span processor for better performance
    span_processor = BatchSpanProcessor(otlp_exporter)
    tracer_provider.add_span_processor(span_processor)

    # Register the provider globally so tracers created by the application use it
    trace.set_tracer_provider(tracer_provider)

Key Points for Beginners:

- The endpoint specifies where the telemetry data will be sent (e.g., a collector or backend).

- The BatchSpanProcessor improves performance by grouping telemetry data into batches before export.

Batching and Performance Considerations

Batching is an essential aspect of exporter configuration, designed to improve efficiency and reliability in telemetry data transmission. By grouping multiple telemetry items into a single payload, batching minimizes the overhead associated with frequent small transmissions. This not only optimizes resource usage but also enhances network efficiency and reduces costs, making it a critical feature for high-volume telemetry pipelines.

- Batch Size Configuration: Adjust the batch size based on the telemetry volume and backend capacity. Larger batches enhance throughput but may increase memory usage, while smaller batches lower memory consumption but lead to more frequent transmissions.

- Timeout Settings: Configure appropriate timeouts to ensure the exporter does not hang if the backend is unresponsive. Moderate timeout values strike a balance between responsiveness and system reliability.

- Retry Logic: Enable retry mechanisms to avoid data loss during temporary failures. Implementing exponential backoff strategies ensures that backend systems are not overwhelmed during outages, maintaining a steady flow of telemetry data.
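As a rough sketch of retrying with exponential backoff, the Python function below doubles the wait after each failed attempt, up to a cap. The function name, parameters, and default values are assumptions for illustration; real exporters expose their own retry settings, and production code would usually add random jitter so that many clients do not retry in lockstep.

```python
import time
from typing import Callable, List


def export_with_retry(send: Callable[[bytes], bool], payload: bytes,
                      max_attempts: int = 5, initial_delay_s: float = 0.5,
                      max_delay_s: float = 30.0,
                      sleep: Callable[[float], None] = time.sleep) -> bool:
    """Try to send; on failure wait, double the delay, and try again."""
    delay = initial_delay_s
    for attempt in range(1, max_attempts + 1):
        if send(payload):
            return True
        if attempt == max_attempts:
            break  # out of attempts: do not sleep after the final failure
        sleep(delay)
        delay = min(delay * 2, max_delay_s)
    return False


# Demo: the backend rejects the first two attempts, then accepts the third.
outcomes = iter([False, False, True])
waits: List[float] = []
delivered = export_with_retry(lambda payload: next(outcomes), b"spans",
                              sleep=waits.append)
```

Passing `sleep` as a parameter lets the demo record the waits instead of actually pausing, which also makes the behaviour easy to test.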

To learn more about OpenTelemetry exporters in detail, check out the blog OpenTelemetry Exporters - Types and Configuration Steps.

Key Differences Between OpenTelemetry Collectors and Exporters

While both collectors and exporters are essential components of an OpenTelemetry pipeline, they serve distinct purposes and play different roles in managing and transmitting telemetry data. Understanding their differences is crucial for designing an efficient observability setup.

- Architectural Placement and Responsibility

Collectors

- Act as an intermediary between telemetry data sources (applications or SDKs) and backends.

- Serve as a standalone service capable of receiving, processing, and forwarding telemetry data.

- Can be deployed in various modes (agent or gateway) to suit specific environments.

Responsibility: Collectors provide a flexible, centralized system for receiving, processing, and routing telemetry data across various sources and backends.

Exporters

- Embedded within SDKs or collectors to send telemetry data directly to a specific backend.

- Handle the formatting and transmission of data to match the requirements of the destination.

Responsibility: Exporters are dedicated to delivering telemetry data to the final observability platform or storage system.

Example: In a typical setup, an application SDK generates telemetry data, which is then sent to a collector. The collector processes the data and uses an exporter to forward it to a backend like Prometheus or Jaeger.

- Data Handling Capabilities

Collectors

- Data Processing: Collectors can filter, transform, and enrich data before forwarding it. For example, they can add metadata (like environment tags) to all telemetry items.

- Aggregation: Useful for reducing telemetry data volume by summarizing metrics or combining similar traces.

- Data Routing: Collectors can send data to multiple backends simultaneously, making them ideal for multi-platform observability.

Example Use Case: A collector could receive traces from various microservices, filter out non-critical traces, enrich the remaining ones with deployment details, and route them to both a tracing platform and a logging backend.

Exporters

- Direct Data Transfer: Exporters are optimized for efficiently transmitting data to a single backend.

- No Processing: Unlike collectors, exporters do not modify or enrich telemetry data. They simply package it in the format required by the target system.

Example Use Case: An OTLP exporter sends unmodified telemetry data from a collector to an OpenTelemetry-compatible backend.

- Scalability Aspects

Collectors

- Designed for scalability, especially in distributed systems.

- Can handle high volumes of telemetry data from multiple sources, making them ideal for large-scale environments.

- Provide buffering and retry mechanisms to ensure data is not lost during temporary outages.

Scalability Tip: In a Kubernetes environment, deploy collectors as a DaemonSet (agent mode) for host-level monitoring or as a Deployment (gateway mode) for centralized telemetry processing.

Exporters

- While efficient, exporters are limited in scalability due to their direct data transfer nature.

- Often rely on collectors to manage high-volume data and handle complex processing tasks before export.

Scalability Tip: Use collectors to aggregate and process telemetry data, reducing the burden on exporters and enabling smoother backend integration.

- When to Use Which Component

Use Collectors When

- You need to process or transform telemetry data (e.g., filtering, sampling, or enrichment).

- Your setup involves routing data to multiple backends.

- You want a centralized point for telemetry data collection and processing in a distributed system.

Example: In a multi-cloud environment, a collector can route metrics to Prometheus, traces to Jaeger, and logs to a cloud-native logging solution.
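Routing by signal type can be pictured as a lookup from signal to a list of destinations. The Python sketch below is a conceptual model only: the `ROUTES` table, the destination names, and the `route` function are hypothetical, and in a real Collector this mapping is expressed through separate pipelines in the configuration.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical routing table: each signal type maps to one or more backends.
ROUTES: Dict[str, List[str]] = {
    "metrics": ["prometheus"],
    "traces": ["jaeger", "archive"],
    "logs": ["cloud-logging"],
}


def route(items: List[dict], routes: Dict[str, List[str]]) -> Dict[str, List[dict]]:
    """Group telemetry items by the destinations configured for their signal."""
    outbound = defaultdict(list)
    for item in items:
        for destination in routes.get(item["signal"], []):
            outbound[destination].append(item)
    return dict(outbound)


telemetry = [
    {"signal": "metrics", "name": "http.server.duration"},
    {"signal": "traces", "name": "GET /checkout"},
    {"signal": "logs", "body": "payment accepted"},
]
outbound = route(telemetry, ROUTES)
```

Here the single trace is delivered to two destinations, while the metric and the log each go to one, without the application knowing about any of the backends.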

Use Exporters When

- You need to send telemetry data directly from an application or collector to a single backend.

- Data processing or routing is not required.

- You are working in a small or simple environment where minimal components are preferred.

Example: A Python application with the OTLP exporter configured in its SDK directly sends traces to an OpenTelemetry-compatible backend for analysis.

Implementing Telemetry with SigNoz

To effectively monitor your applications and systems, leveraging an advanced observability platform like SigNoz can significantly enhance your monitoring strategy. SigNoz is an open-source observability tool that offers end-to-end monitoring, troubleshooting, and alerting capabilities across your entire application stack.

Built on OpenTelemetry, SigNoz unifies telemetry data by seamlessly integrating Prometheus and Grafana metrics with traces and logs. This powerful integration provides a complete, end-to-end view of system performance, offering deeper insights and surpassing traditional monitoring setups.

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

Benefits of SigNoz

Let us now look at some of the benefits of SigNoz.

- OpenTelemetry Compatibility: SigNoz supports OpenTelemetry natively, allowing seamless integration with OpenTelemetry’s SDK and collector.

- Full-Stack Observability: SigNoz includes logging, metrics, and visual dashboards alongside distributed tracing, giving teams a comprehensive view of system health.

- Self-Hosting Options: SigNoz can be hosted on-premises or in private cloud environments, allowing for secure data control and compliance with internal data governance policies.

- Cost-Effective Scaling: SigNoz offers a straightforward pricing model that can become more budget-friendly over time, particularly for high-traffic systems.

For more information on implementing Telemetry with SigNoz, check out the Implementing OpenTelemetry in Spring Boot - A Practical Guide.

Cloud vs OSS options

To effectively monitor modern applications and systems, it’s essential to choose the right observability solution that aligns with your organization’s goals and capabilities. Observability tools generally fall into two categories: Cloud-Based Solutions and Open-Source Software (OSS) Options. Each has unique advantages and trade-offs, making it important to understand their differences to make an informed decision.

| Aspect | Cloud-Based Solutions | Open-Source Software (OSS) Options |
|---|---|---|
| Setup and Maintenance | Minimal setup; managed by the provider, including upgrades and scaling. | Requires manual setup, configuration, and ongoing maintenance by the team. |
| Scalability | Automatically scales to handle large data volumes. | Scalability depends on the team’s ability to manage infrastructure resources. |
| Cost | Subscription-based; costs depend on usage, such as data volume or retention. | Free to use, but requires investment in infrastructure, time, and expertise. |
| Flexibility | Limited to the features and customization options provided by the vendor. | Fully customizable; can be tailored to meet specific requirements. |
| Vendor Lock-In | Data and workflows are tied to the service provider. | No vendor lock-in; full control over the pipeline and data. |
| Features | Offers advanced features like AI-driven insights, integrated dashboards, and automated alerts. | Features depend on the specific OSS tools used (e.g., Prometheus, Grafana). |
| Skill Requirements | Low; suitable for teams with little expertise in observability tools. | High; requires in-depth knowledge of observability tools and infrastructure. |
| Examples | Datadog, New Relic, AWS CloudWatch, Microsoft Azure Monitor. | OpenTelemetry, SigNoz, Prometheus, Grafana. |

Best Practices

Implementing OpenTelemetry effectively requires careful consideration of deployment strategies, configuration optimization, and performance tuning. By following these best practices, you can ensure a scalable, efficient, and reliable observability pipeline for your applications.

Collector Deployment Strategies

Deploying the OpenTelemetry Collector correctly is essential for ensuring the smooth collection and processing of telemetry data. Choose the deployment model that best fits your environment:

- Agent Deployment: For lightweight setups, deploy the collector as a sidecar or daemon on each host to monitor local applications. This setup reduces latency and simplifies telemetry collection in single-host or containerized environments like Kubernetes.

- Gateway Deployment: For centralized data processing, use the collector as a gateway to aggregate telemetry data from multiple sources or services. This approach is ideal for distributed systems or large-scale environments like microservices architectures.

- Secure Data Transmission: Always use TLS (Transport Layer Security) to protect telemetry data from interception in transit. Using certificates issued by a trusted certificate authority simplifies configuration and ensures secure communication.

Exporter Configuration Optimization

Exporters play a crucial role in sending telemetry data to observability backends. Proper configuration of exporters ensures efficient data transmission and reliable delivery.

- Batching Configuration: Configure batch sizes to balance throughput and memory usage. Larger batches are more efficient but require more memory, while smaller batches reduce memory usage but may result in more frequent transmissions.

- Timeout Settings: Set reasonable timeouts for data export operations to prevent long delays caused by unresponsive backends. Moderate timeouts strike a balance between reliability and responsiveness.

- Enable Retry Logic: Enable retries for transient failures, such as network disruptions or backend unavailability. Implementing exponential backoff prevents overwhelming the backend during temporary outages.

Data Sampling Considerations

Sampling helps control the volume of telemetry data without sacrificing visibility. It’s particularly useful in high-traffic environments to manage resource consumption and costs.

- Sampling Strategy: Choose between head-based sampling, which decides at the start of a trace (typically keeping a fixed percentage) and is predictable, and tail-based sampling, which decides after a trace completes so that significant traces such as errors can be kept for more relevant insights.

- Centralized Sampling: Apply sampling in the collector if your applications generate high volumes of telemetry. This centralizes the logic and reduces overhead on individual applications, improving overall efficiency.
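The difference between the two sampling strategies can be sketched in Python. Both functions are simplified illustrations under stated assumptions: hashing the trace ID with SHA-256 is a stand-in for the ratio-based samplers that real SDKs provide, and the span dictionaries, the `error` flag, and the 500 ms threshold are hypothetical.

```python
import hashlib
from typing import List


def head_sample(trace_id: str, ratio: float) -> bool:
    """Head-based: decide up front from the trace ID alone.

    The same trace ID always yields the same decision, so every service
    that sees the trace can agree without coordinating.
    """
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # value in [0, 1)
    return bucket < ratio


def tail_sample(spans: List[dict], slow_ms: float = 500.0) -> bool:
    """Tail-based: decide after the trace is complete.

    Keep the trace if any span failed or was slower than the threshold.
    """
    return any(s.get("error") or s["duration_ms"] >= slow_ms for s in spans)


keep_head = head_sample("4bf92f3577b34da6a3ce929d0e0e4736", ratio=0.10)
keep_failed = tail_sample([{"duration_ms": 20.0, "error": True}])
keep_fast_ok = tail_sample([{"duration_ms": 20.0}])
```

Head-based sampling needs no buffering, whereas tail-based sampling has to hold all spans of a trace until it finishes, which is one reason it is usually done in a collector rather than in each application.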

Performance Tuning Tips

Optimizing performance is crucial for maintaining a responsive and scalable observability pipeline.

- Monitor Resource Usage: Regularly monitor the CPU and memory usage of collectors to avoid resource exhaustion. Use monitoring tools like Prometheus to track metrics such as CPU usage, memory consumption, and queue sizes.

- Adjust Batch Sizes Dynamically: Adjust batch sizes based on the telemetry load. During peak traffic, increase batch sizes for better throughput, and reduce them during off-peak hours to save resources.

- Use Sampling in High-Traffic Environments: Combine sampling with batch size adjustments in environments with very high telemetry volumes. This helps prevent resource overload while maintaining important data for analysis.

By following these best practices for collector deployment, exporter configuration, data sampling, and performance tuning, you can ensure that your OpenTelemetry setup remains efficient, scalable, and reliable across different environments.

FAQs

What's the main difference between collector and exporter?

A collector is a standalone service designed to receive, process, and route telemetry data from multiple sources. In contrast, an exporter is a library component responsible for sending telemetry data to a specific backend system.

Can I use exporters without a collector?

Yes, you can use exporters to send data directly to backend systems. However, incorporating collectors offers additional advantages, such as data transformation, filtering, and routing flexibility, which are not typically available with standalone exporters.

How do collectors improve telemetry data quality?

Collectors enhance telemetry data quality by providing capabilities such as filtering out irrelevant data, transforming it into the desired format, and enriching it with additional metadata. Additionally, they ensure reliable data transmission through buffering and retry mechanisms in case of transient network issues.

Which exporters are most commonly used?

The OpenTelemetry Protocol (OTLP) exporters are widely adopted due to their compatibility with modern observability platforms. Other commonly used exporters include Jaeger, for distributed tracing, and Prometheus, for metrics collection and monitoring in specific scenarios.