How to Reduce Prometheus High Memory Usage

Prometheus, a powerful open-source monitoring and alerting toolkit, has become a cornerstone of modern observability stacks. However, many users run into the same problem: high memory consumption, which leads to resource constraints and higher operational costs. This article explains why Prometheus consumes so much memory, provides strategies for reducing it, and introduces alternative solutions for efficient monitoring at scale.

Understanding Prometheus Memory Consumption

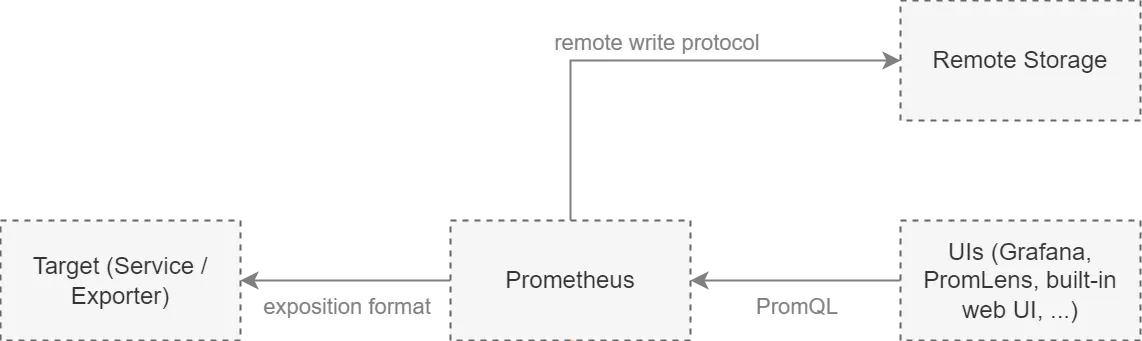

Prometheus scrapes metrics from targets (applications or systems) at regular intervals and stores them in a time-series database (TSDB). Its label-based data model supports multi-dimensional data and real-time insights. However, this flexibility comes at the cost of high memory and storage usage, especially in large environments.

Overview of Prometheus' Data Storage Model

Prometheus stores data in chunks and indexes each time series for fast querying. When metrics are scraped from targets, they are first held in memory, in what is known as the head block, before being flushed to disk. This model ensures low-latency access to recent metrics, but it also contributes to high memory usage.

Prometheus' time-series data can be very granular, with many labels attached to each metric. Each unique combination of metric name and label values creates a separate time series, which increases memory consumption.

The relationship between time series data and memory usage is direct: more time series means more memory consumption. This correlation is crucial to understanding Prometheus' resource requirements.
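To make this relationship concrete, the sketch below estimates the worst-case number of series a single metric can produce as the product of the distinct values of each label. The label names and counts are invented for illustration, and real metrics rarely hit every combination, so treat the result as an upper bound rather than a prediction.

```python
from math import prod
from typing import Dict


def max_series(label_value_counts: Dict[str, int]) -> int:
    """Upper bound on series for one metric: product of distinct values per label."""
    return prod(label_value_counts.values())


# Hypothetical label cardinalities for an HTTP request counter.
labels = {"method": 5, "status": 8, "endpoint": 50}
print(max_series(labels))  # 2000

# Adding a per-user label multiplies the bound by the number of users.
labels_with_user = {**labels, "user_id": 10_000}
print(max_series(labels_with_user))  # 20000000
```

A single unbounded label such as a user or request ID is usually what turns a manageable metric into millions of series.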

Why Does Prometheus Use So Much RAM?

Prometheus' memory consumption is often estimated with a rule of thumb: approximately 3 kilobytes (kB) of memory per time series. This rule helps users anticipate the resource requirements of their monitoring setup, especially as the number of monitored time series grows.

Breakdown of the 3 kB rule:

- Memory components:

  - Time series data: Each time series stores a series of timestamps and corresponding values, which contributes to the overall memory usage.

  - Metadata storage: Prometheus maintains metadata for each time series, including labels (key-value pairs). The overhead from these labels can be significant, especially when numerous labels are used, as each label adds to the memory footprint.

  - Indexing and internal structures: Prometheus employs various data structures for indexing and querying time series data, which also contribute to memory consumption.

- Scaling impact:

  - When scaling from hundreds to thousands or millions of time series, the memory requirement can escalate quickly. For example, if you have 1,000 time series, the memory usage might be around 3 MB. However, with 1 million time series, it can balloon to approximately 3 GB, demonstrating how crucial it is to manage the number of series and their associated labels.

- Strategies for mitigation:

  - To optimize memory usage, consider minimizing the number of labels and configuring retention policies to reduce the amount of historical data retained.
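The arithmetic behind the rule can be sketched in a few lines. The 3 kB constant is the rule-of-thumb figure quoted above, not a measured value, and the function names are made up for this example; actual usage depends on label sizes, churn, and query load.

```python
BYTES_PER_SERIES = 3_000  # rule-of-thumb figure from the text (3 kB), an assumption


def estimate_memory_bytes(series_count: int, bytes_per_series: int = BYTES_PER_SERIES) -> int:
    """Rough memory estimate: number of series times a per-series cost."""
    return series_count * bytes_per_series


def format_bytes(n: float) -> str:
    """Render a byte count using decimal units (1 kB = 1000 B)."""
    for unit in ("B", "kB", "MB", "GB", "TB"):
        if n < 1000 or unit == "TB":
            return f"{n:.1f} {unit}"
        n /= 1000


for count in (1_000, 100_000, 1_000_000):
    print(f"{count:>9,} series -> {format_bytes(estimate_memory_bytes(count))}")
```

Running it reproduces the figures in the example: about 3 MB for 1,000 series and about 3 GB for 1 million.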

Here's a breakdown of why Prometheus's memory usage can be significant:

- Cardinality: Each unique combination of metric name and label values creates a new time series. High cardinality, meaning a large number of distinct label combinations, dramatically increases memory usage.

- In-memory storage: Recent data is kept in memory for fast access, contributing to high RAM usage.

- Query processing: Complex PromQL queries can cause temporary spikes in memory consumption during execution.

- Scrape frequency: More frequent data collection leads to more data points in memory at any given time.

Factors Contributing to High Memory Usage in Prometheus

Several factors can exacerbate Prometheus' memory consumption:

- Excessive time series: High cardinality due to poorly designed labels or overly granular metrics can lead to an explosion in the number of time series.

- Frequent scraping: While shorter scrape intervals provide more up-to-date data, they also increase the volume of data in memory.

- Large number of targets: More targets mean more metrics to collect and store, directly impacting memory usage.

- Complex queries: Resource-intensive PromQL queries can cause temporary but significant memory spikes.
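As a rough illustration of the scraping factor, the sketch below compares ingestion rate and the number of recent samples held for two scrape intervals. The series count and the two-hour window are assumptions chosen for the example. Prometheus compresses samples inside chunks, so memory does not grow in strict proportion to sample count; the series count usually matters more.

```python
def samples_per_second(series_count: int, scrape_interval_s: int) -> float:
    """Ingestion rate: every series yields one sample per scrape interval."""
    return series_count / scrape_interval_s


def samples_in_window(series_count: int, scrape_interval_s: int, window_s: int) -> int:
    """Samples accumulated across all series during a time window."""
    return series_count * (window_s // scrape_interval_s)


SERIES = 1_000_000   # hypothetical number of active series
WINDOW_S = 2 * 3600  # assumed span of recent data held in memory

for interval in (15, 60):
    rate = samples_per_second(SERIES, interval)
    held = samples_in_window(SERIES, interval, WINDOW_S)
    print(f"{interval:>2}s interval: {rate:,.0f} samples/s, {held:,} samples in window")
```

Moving from a 15-second to a 60-second interval cuts both numbers by a factor of four in this model.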

Strategies for Reducing Prometheus Memory Consumption

To optimize Prometheus' memory usage, consider implementing these strategies:

- Effective label management:

  - Limit the number of label values, especially for high-cardinality dimensions.

  - Use relabeling to drop unnecessary labels or metrics.

- Optimize scrape intervals:

  - Balance data freshness with resource usage by adjusting scrape frequencies.

  - Consider longer intervals for stable metrics or less critical targets.

- Implement federation:

  - Use Prometheus Federation for large-scale deployments to distribute the load.

  - Set up hierarchical Prometheus instances, with higher-level instances aggregating data from lower levels.

- Utilize retention policies and downsampling:

  - Set appropriate retention periods to limit the amount of historical data Prometheus stores. Retention mainly bounds disk usage, but it also limits how much old data a query can pull into memory.

  - Implement downsampling for long-term storage to reduce data resolution over time. Prometheus does not downsample on its own, so this requires a companion tool such as Thanos.
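To show why label management tends to have the largest effect, here is a small plain-Python model of what happens to the series count when a high-cardinality label is removed. It is a conceptual sketch, not Prometheus' relabeling engine, and the metric and label names are hypothetical. In a real setup, dropping a label so that several series collapse into one needs care, because the remaining label sets must still be unique; removing the label at instrumentation time is often the safer route.

```python
def count_series(samples, drop_labels=frozenset()):
    """Count distinct label sets after removing the given label names."""
    unique = set()
    for labels in samples:
        kept = tuple(sorted((k, v) for k, v in labels.items() if k not in drop_labels))
        unique.add(kept)
    return len(unique)


# Hypothetical scrape: 3 endpoints x 100 request IDs for a single metric.
scrape = [
    {"__name__": "http_requests_total", "endpoint": ep, "request_id": str(i)}
    for ep in ("/login", "/cart", "/checkout")
    for i in range(100)
]

print(count_series(scrape))                              # 300
print(count_series(scrape, drop_labels={"request_id"}))  # 3
```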

Fine-tuning Prometheus Configuration

Adjusting Prometheus configuration can significantly impact memory usage:

- TSDB settings:

  - Tune `storage.tsdb.retention.time` and `storage.tsdb.retention.size` to control data retention.

  - Adjust `storage.tsdb.wal-compression` to enable write-ahead log compression, reducing disk I/O at the cost of some CPU usage. See the Prometheus documentation for other TSDB settings.

- Recording rules:

  - Implement recording rules to pre-compute expensive queries, reducing query-time memory spikes. Check out the documentation to explore recording rules in Prometheus.

- External storage:

  - Offloading older or less frequently accessed data to an external storage system can free up memory and disk space. Read more about external storage integrations in Prometheus.

Monitoring and Analyzing Prometheus' Resource Usage

Prometheus can monitor itself, providing valuable insights into its performance:

Key metrics to watch:

Monitoring specific metrics will help you understand where memory is being consumed and whether adjustments are necessary. Here are some of the most important ones:

prometheus_tsdb_head_series: Number of time series in the head block.

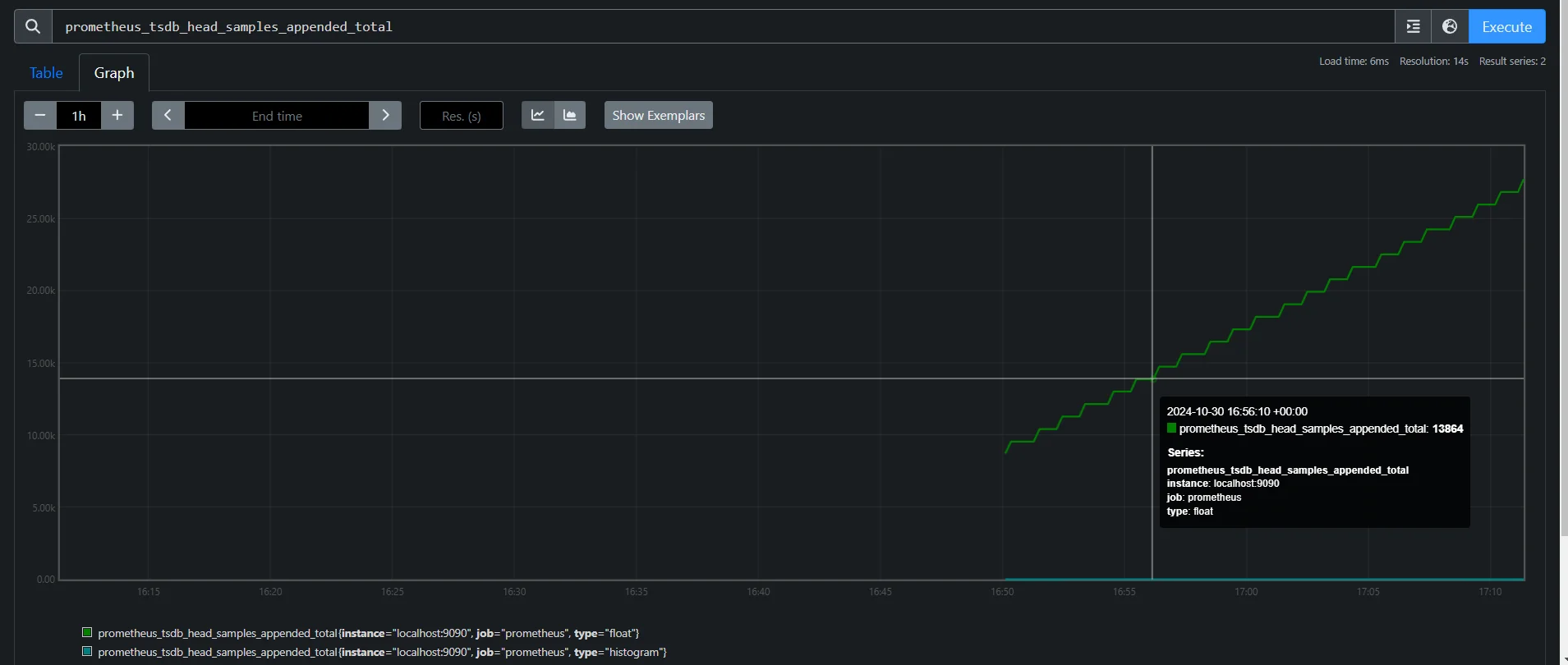

Metric prometheus_tsdb_head_series prometheus_tsdb_head_samples_appended_total: Total number of appended samples.

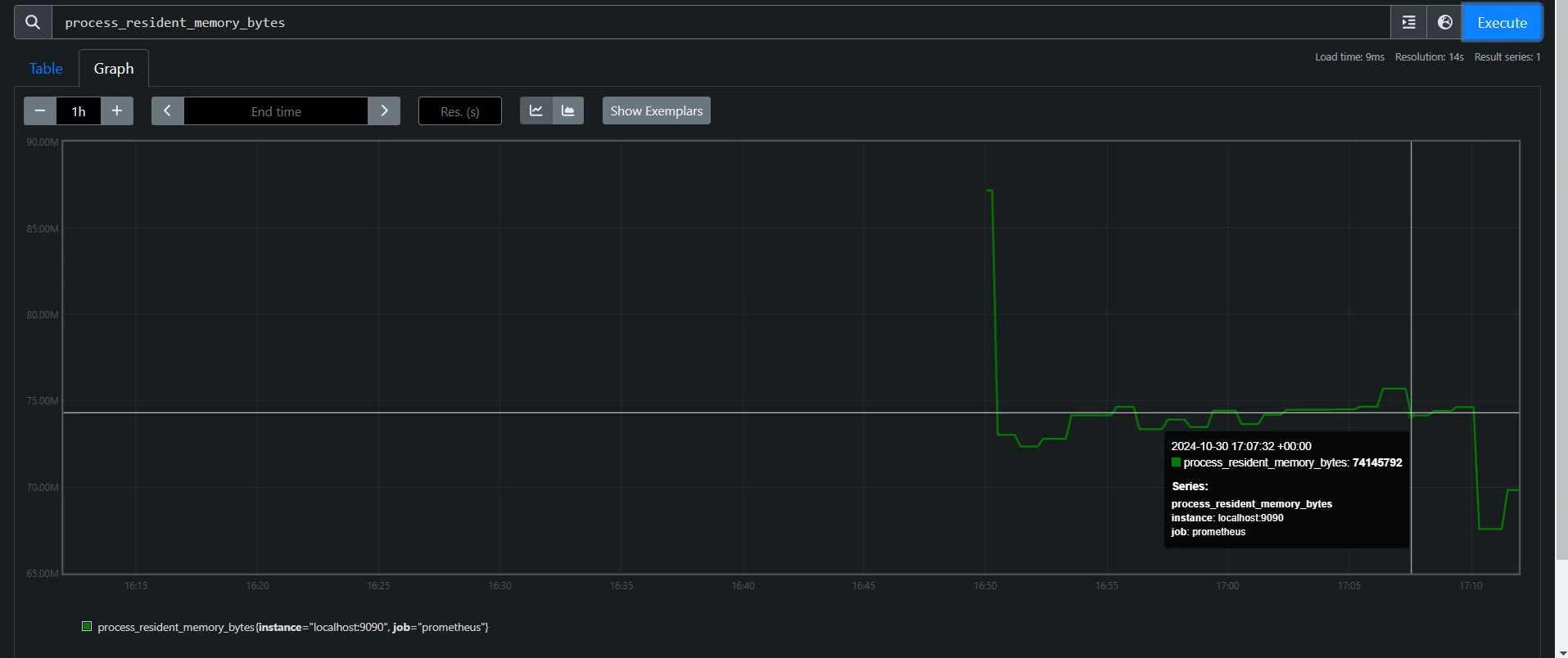

Metric prometheus_tsdb_head_samples_appended_total process_resident_memory_bytes: Prometheus process memory usage.

Metric process_resident_memory_bytes
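Two of these metrics can be combined into a quick sanity check against the 3 kB rule of thumb: divide resident memory by the number of head series. The readings below are hypothetical values, and the result is only approximate because resident memory also covers query execution and other overhead.

```python
def bytes_per_series(resident_memory_bytes: float, head_series: float) -> float:
    """Approximate memory cost per series from two self-monitoring metrics."""
    if head_series <= 0:
        raise ValueError("head_series must be positive")
    return resident_memory_bytes / head_series


# Hypothetical readings of process_resident_memory_bytes and
# prometheus_tsdb_head_series taken at the same moment.
rss = 4_200_000_000
series = 1_200_000

print(f"{bytes_per_series(rss, series):.0f} bytes per series")  # 3500 bytes per series
```

A value far above the rule of thumb can be a hint that large label values, series churn, or heavy queries deserve a closer look.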

Dashboards and alerts:

- Setting up a dedicated Prometheus self-monitoring dashboard in a visualization tool (like Grafana) allows you to easily track these metrics.

- Commonly monitored areas on a Prometheus health dashboard include memory usage, CPU load, query duration, and the number of active time series.

Query analysis:

Some queries or targets might contribute more heavily to memory usage than others. Here’s how you can identify and address these issues:

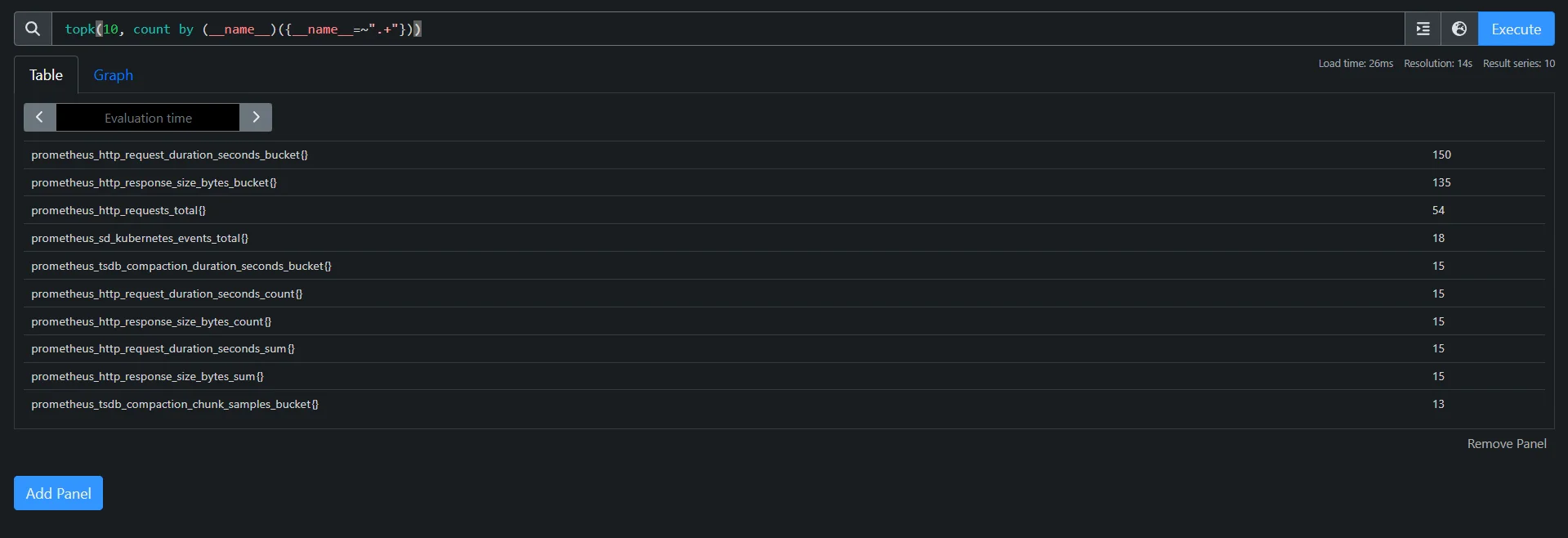

Identify high-cardinality metrics: Run

topk(10, count by (__name__)({__name__=~".+"}))to find the top 10 metrics with the most series and detect high-cardinality metrics.

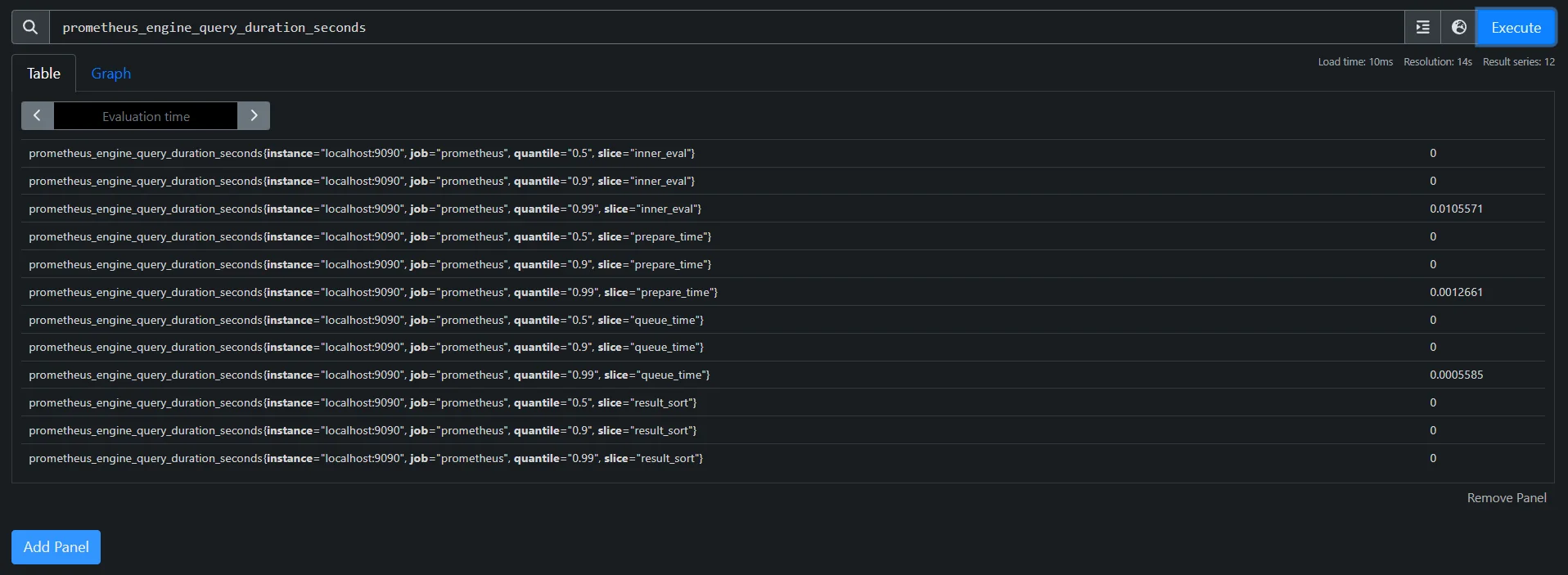

Identify High-Cardinality Metrics Monitor query load: Check

prometheus_engine_query_duration_secondsto spot long-running or resource-intensive queries. Use recording rules for complex queries.

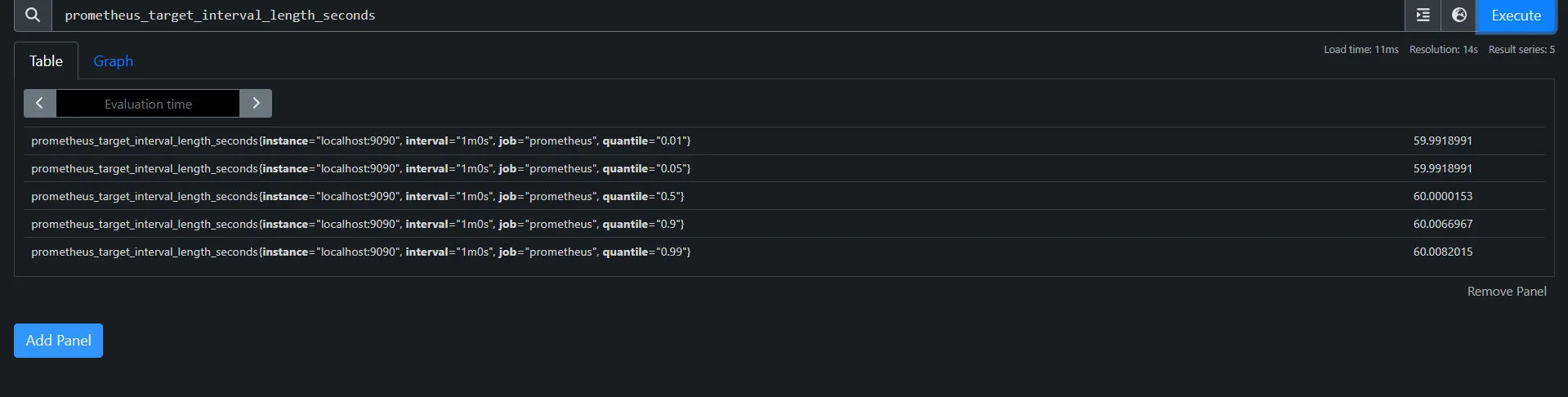

Monitor Query Load Analyze target metrics: Use

prometheus_target_interval_length_secondsto review scrape intervals and identify any targets with high scrape frequencies that may be overloading memory.

Identify targets with high scrape frequencies
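The cardinality check can also be scripted against the Prometheus HTTP API. The sketch below builds an instant-query URL for the `/api/v1/query` endpoint and parses a response in the instant-vector format. The base URL and the sample response body are assumptions for illustration, and the HTTP call itself is left out so the example runs without a server.

```python
import json
from urllib.parse import urlencode

TOP_METRICS_QUERY = 'topk(10, count by (__name__)({__name__=~".+"}))'


def build_query_url(base_url: str, query: str) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    params = urlencode({"query": query})
    return f"{base_url.rstrip('/')}/api/v1/query?{params}"


def parse_series_counts(response_text: str) -> list:
    """Return (metric name, series count) pairs, largest first."""
    payload = json.loads(response_text)
    if payload.get("status") != "success":
        raise ValueError(f"query failed: {payload.get('error', 'unknown error')}")
    rows = [
        (item["metric"].get("__name__", ""), int(float(item["value"][1])))
        for item in payload["data"]["result"]
    ]
    return sorted(rows, key=lambda row: row[1], reverse=True)


# Hypothetical response body; a real one would come from an HTTP GET on the URL.
sample_response = json.dumps({
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"__name__": "http_requests_total"}, "value": [1700000000, "1200"]},
            {"metric": {"__name__": "node_cpu_seconds_total"}, "value": [1700000000, "4800"]},
        ],
    },
})

# Assumed local address; adjust to wherever your Prometheus server listens.
print(build_query_url("http://localhost:9090", TOP_METRICS_QUERY))
for name, count in parse_series_counts(sample_response):
    print(f"{name}: {count} series")
```

Running a script like this on a schedule is one way to carry out the periodic cardinality reviews that keep series counts in check.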

Alternatives and Complementary Tools for Large-Scale Monitoring

For scenarios where Prometheus alone struggles with resource constraints, consider these alternatives:

- Thanos: Thanos adds horizontal scaling, long-term storage, and downsampling capabilities to Prometheus, making it a good option for large-scale deployments.

- VictoriaMetrics: VictoriaMetrics is a high-performance time-series database that is compatible with Prometheus but optimized for large-scale environments and efficient resource usage.

- Grafana Mimir: Mimir is another scalable solution that works well for multi-tenant environments and offers horizontal scaling similar to Thanos.

Exploring SigNoz for Efficient Monitoring

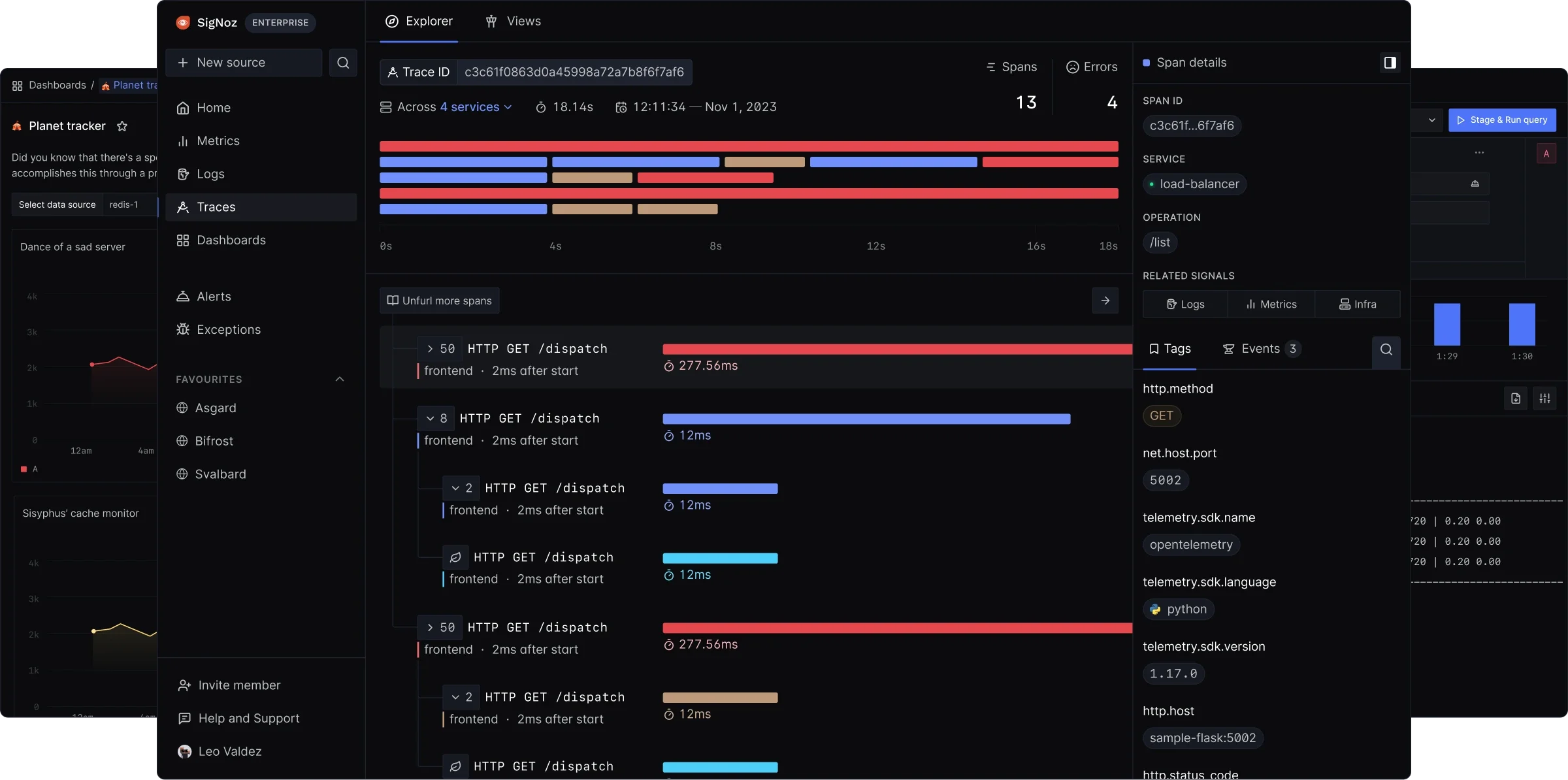

SigNoz offers a modern approach to observability, combining metrics, traces, and logs within a unified platform that provides a streamlined and resource-efficient alternative to traditional Prometheus stacks. SigNoz can be used either alongside or as a replacement for Prometheus in scenarios where efficiency and integrated monitoring are essential.

Key benefits of using SigNoz:

- Optimized for high-cardinality data: SigNoz efficiently handles metrics with high cardinality, reducing memory strain.

- Efficient resource usage: SigNoz is designed to handle high-cardinality data more efficiently than Prometheus.

- Integrated tracing: Combines metrics and distributed tracing, providing deeper insights into application performance.

- User-friendly interface: Offers an intuitive UI for querying and visualizing data without the need for separate visualization tools.

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

SigNoz can complement or replace Prometheus in scenarios where resource efficiency and integrated observability are priorities.

Best Practices for Prometheus Memory Optimization

To maintain an optimized Prometheus setup:

- Regular Cardinality Audits: Periodically review your metrics to identify and reduce unnecessary labels and high-cardinality time series.

- Gradual Rollouts: When introducing new metrics, roll them out gradually to monitor the impact on memory usage.

- Service Discovery: Use dynamic service discovery to automatically adjust scrape targets and avoid over-scraping.

- Continuous Tuning: Regularly monitor and adjust Prometheus configurations to adapt to changing workloads and environments.

Key Takeaways

- Prometheus memory usage is primarily driven by time-series cardinality.

- Effective label management and optimizing scrape intervals are crucial for reducing memory consumption.

- Regular monitoring and tuning of Prometheus itself are essential to maintaining performance.

- For large-scale deployments, consider using complementary tools like Thanos or alternatives like SigNoz for more efficient resource management.

FAQs

How much RAM does Prometheus typically require?

Prometheus' RAM requirements vary based on the number of time series, scrape frequency, and query complexity. A general estimate is 3 kB per time series, so an environment with 1 million series might need around 3 GB of RAM for data storage alone, plus additional memory for query processing and operating system overhead.

Can I reduce Prometheus memory usage without losing data?

Yes, you can optimize memory usage without data loss by:

- Implementing effective label management to reduce cardinality.

- Adjusting scrape intervals for less critical metrics.

- Using recording rules to pre-compute expensive queries.

- Implementing proper retention policies and leveraging long-term storage solutions.

What's the impact of increasing scrape interval on memory consumption?

Increasing the scrape interval reduces the frequency of data collection, which can lower memory usage. However, this comes at the cost of reduced data granularity. A balance must be struck between resource efficiency and monitoring resolution based on your specific requirements.

How does SigNoz compare to Prometheus in terms of resource usage?

SigNoz is designed to handle high-cardinality data more efficiently than Prometheus, which can lead to lower resource usage in environments with many unique time series. Its integrated approach to metrics, logs, and traces helps reduce complexity and overall resource requirements. SigNoz also uses ClickHouse, a columnar database known for efficient query performance, as its storage backend. This makes SigNoz particularly useful in high-cardinality scenarios, where it can potentially offer a more lightweight observability solution overall.