Datadog is a popular observability platform, but understanding its pricing structure can be challenging. Whether you're just starting with Datadog or are receiving hefty bills, this guide will help you understand its pricing model, estimate your potential costs, and find ways to optimize them.

Estimate Your Datadog Bill

Use our interactive tool to estimate your Datadog bill based on your expected usage:

[Interactive Datadog Pricing Calculator: enter your log volume in GB to get a total estimated cost per month.]

Note that the above calculator assumes the following:

- 15 day retention for logs

- No additional span ingestion

- No container, custom metric, and custom event usage

- No on-demand usage

To get detailed pricing information for each Datadog product, refer to the Product-Wise Pricing of Datadog section.

Questions answered in this article:

- How does Datadog pricing work?

- What are the different pricing models used by Datadog?

- How can you optimize your Datadog bill?

- Will switching to a more affordable solution impact the features available to you?

- Will switching to a more affordable solution impact the performance of your observability setup?

If you are new to Datadog, we highly recommend that you first understand the fundamentals of Datadog pricing discussed in the next section.

Who Should Care About Datadog Pricing?

Datadog's pricing can be a critical consideration for many organizations, particularly if you are:

- Experiencing unexpected cost spikes and need to understand the root causes.

- Planning infrastructure expansion and want to forecast associated expenses accurately.

- Considering Datadog and need to evaluate its cost-effectiveness for your specific use case.

Given the variability in pricing depending on usage patterns, many users have shared their frustration on platforms like Reddit and Twitter about unpredictable costs. We at SigNoz understand these concerns, and this guide aims to provide clarity and help you make informed decisions.

Datadog Pricing 101

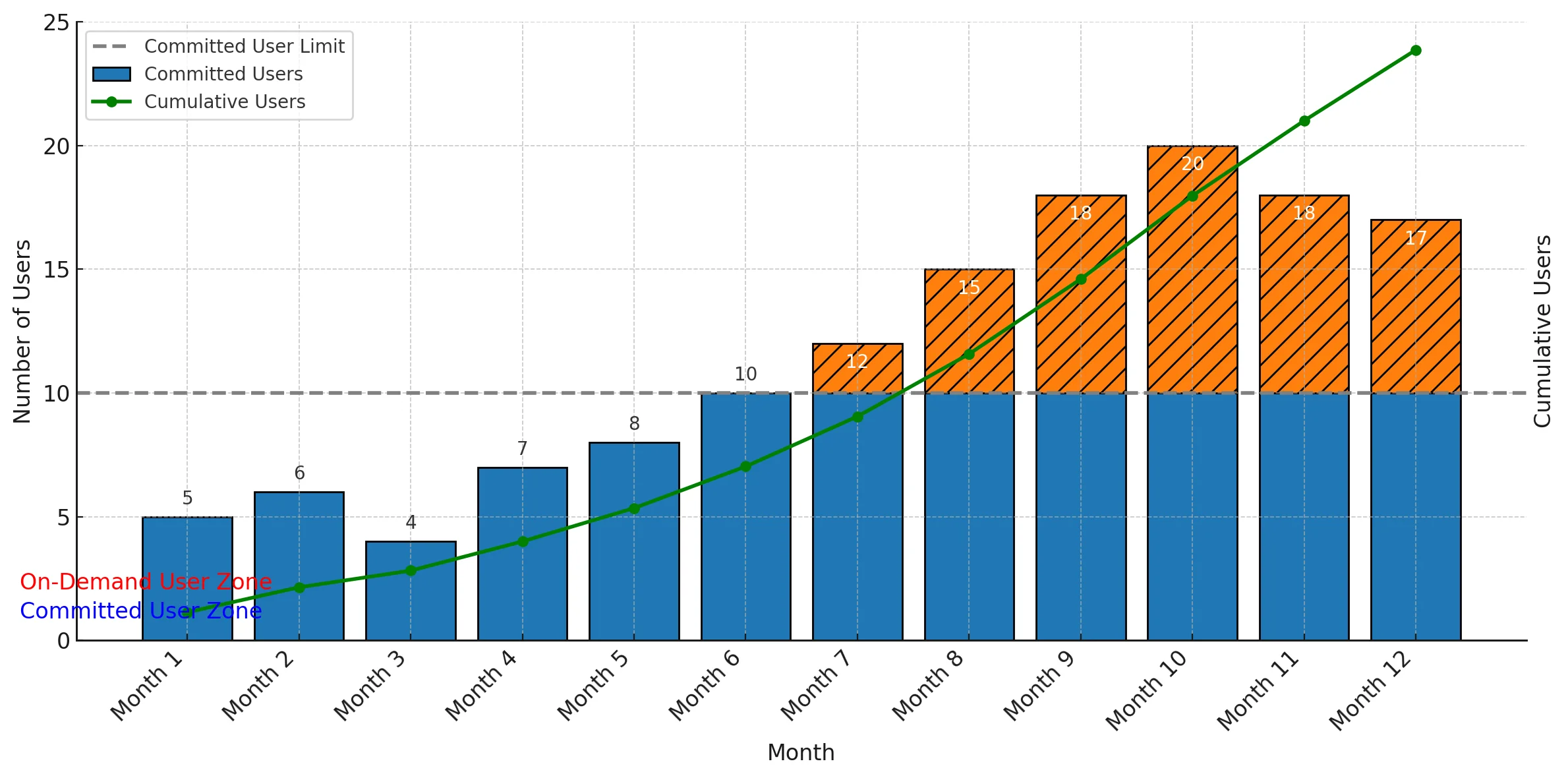

Datadog pricing involves two main models: committed use pricing and on-demand pricing.

Committed use offers lower rates but requires a forecast of your usage in advance, while on-demand pricing is more flexible but generally more expensive.

Different Pricing Models

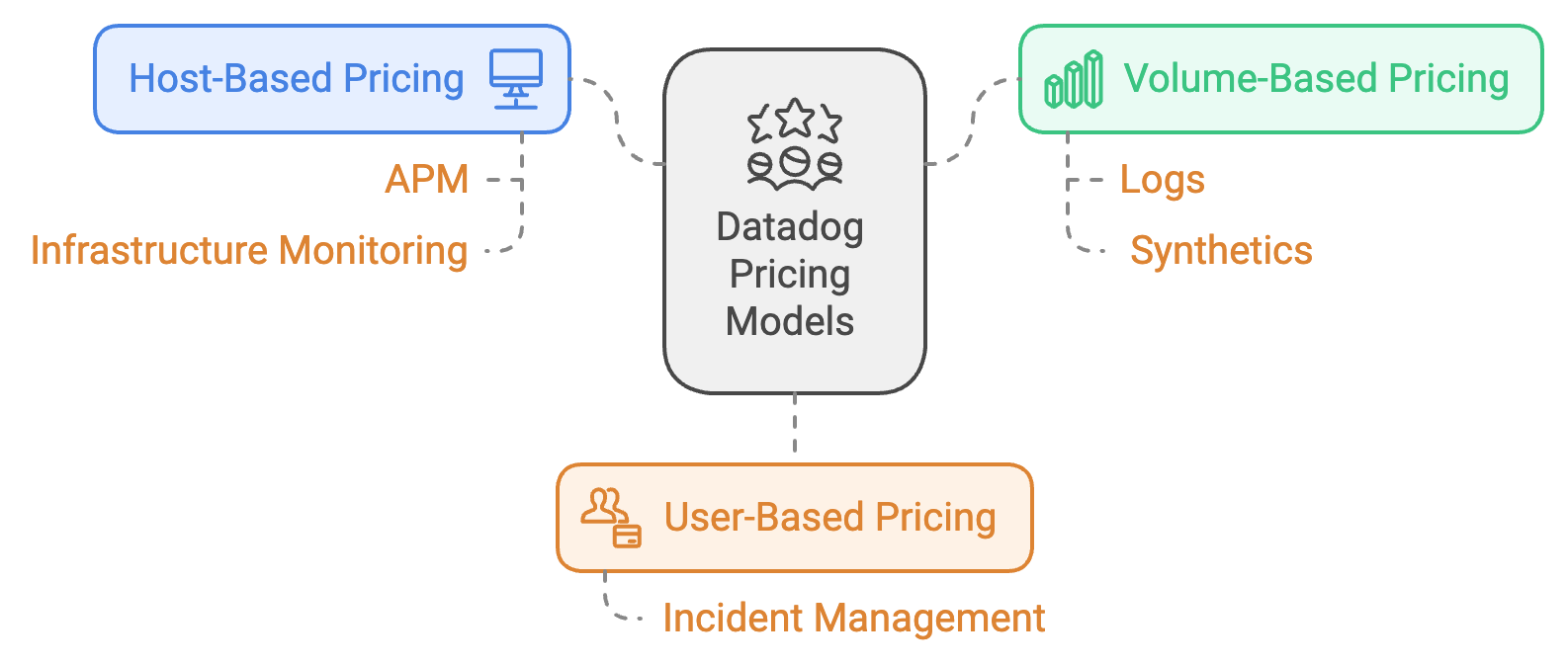

Datadog's pricing structure is built around three main models, each tailored to different products and specific monitoring requirements:

🖥️ Host-Based Pricing: Used for products like APM and Infrastructure Monitoring, where you're charged per host monitored.

📊 Volume-Based Pricing: Applicable for products like logs and synthetics, where you pay based on the volume of data ingested.

👥 User-Based Pricing: Active user pricing is applied to products like Incident Management, where the costs depend on the number of active users.

We will cover each of these models in detail later in the article. First, let's look at what makes Datadog pricing difficult to understand and predict.

Why Datadog Pricing Is Difficult to Understand & Predict

Datadog has a huge fleet of products and sub-products, each with its unique pricing model, which makes it challenging to get a clear understanding of the overall cost. Factors like autoscaling and dynamic workloads further complicate cost predictability.

👉 As your business grows and infrastructure scales, your Datadog bill scales as well, often making it hard to forecast costs.

Imagine scaling up a new feature only to realize that it drastically increased the volume of metrics collected. A sudden spike from $200 per month to $2500 due to unanticipated data ingestion can be a wake-up call to optimize and monitor your observability setup more closely.

👉 Developers may not always be aware of the full cost implications, especially when infrastructure changes, making budgeting difficult.

For instance, a developer might enable detailed logging for troubleshooting but forget to disable it after the issue is resolved, leading to an unexpected spike in log volume and causing costs to soar from $500 to $5000 per month.

For many developers, cost management isn't just about usage—it's about understanding the broader implications of how and when resources are being used. This can lead to unexpected surprises during billing, particularly when infrastructure changes occur rapidly.

Understanding Different Datadog Pricing Models

Let's dive deep into each of the pricing models used by Datadog for their various products.

Host-Based Pricing

Host-Based Pricing is used for products like APM and Infrastructure Monitoring. Below, we break down the details of how it works, the challenges it presents, and ways to optimize it effectively:

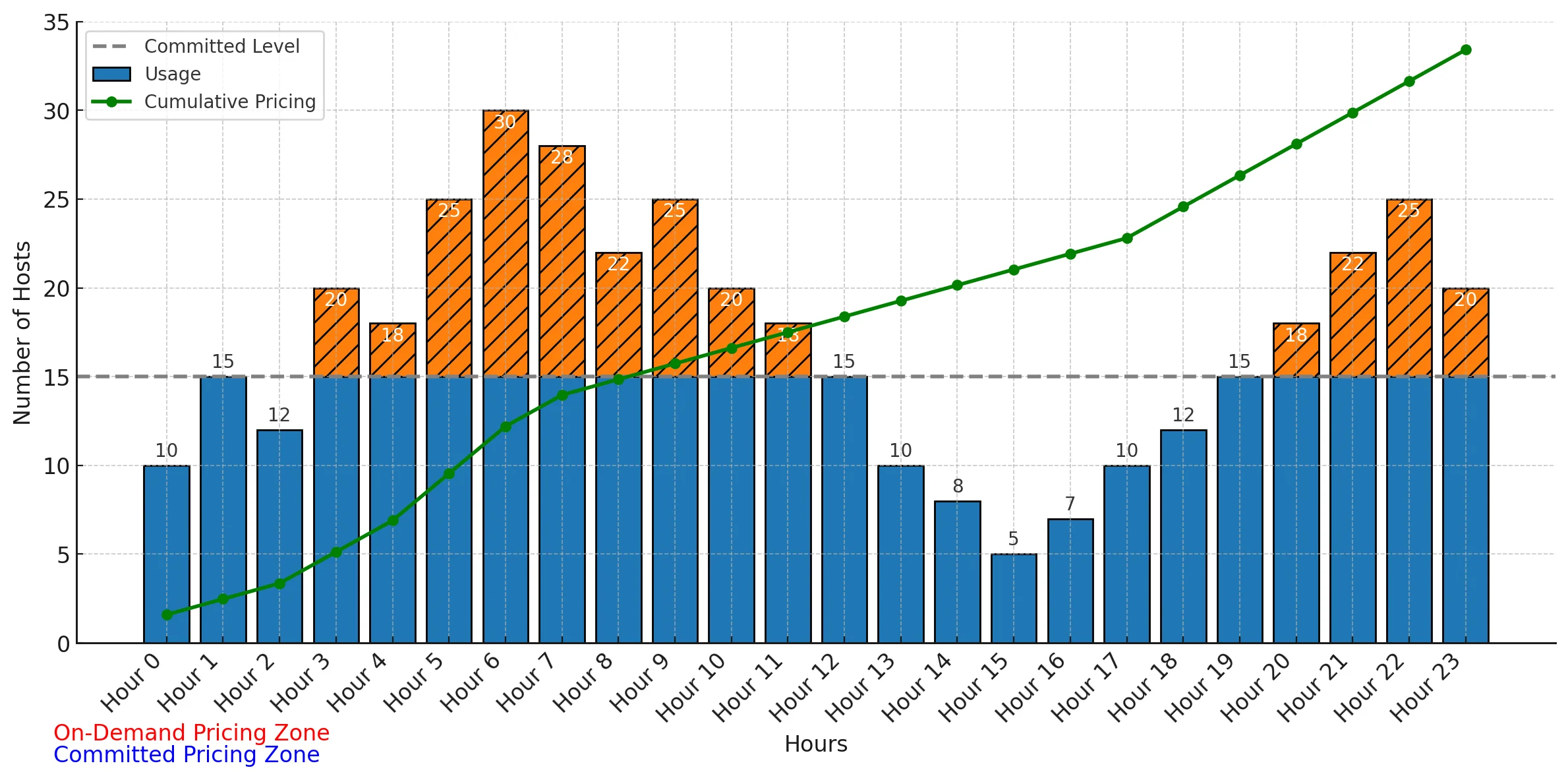

Costs are calculated based on the number of active hosts being monitored at any given time. For example, if you are monitoring 10 hosts, you will be billed for those hosts on an hourly basis throughout the month. If you autoscale during peak periods to 20 hosts, your bill will reflect this higher usage.

When You Commit (Hybrid Monthly/Hourly Plan - MHP): On a hybrid monthly/hourly plan, Datadog charges a minimum monthly commitment for a set number of hosts. If the number of hosts exceeds this commitment, Datadog charges an additional hourly rate for those extra hosts. This plan can help you save costs if you have predictable host usage with occasional spikes.

When You Don't Commit (High Watermark Plan - HWMP): On a high watermark plan, the billable count of hosts is calculated at the end of the month using the maximum count (high-water mark) of the lower 99 percent of usage for those hours. Datadog excludes the top 1 percent to reduce the impact of spikes in usage on your bill. This plan can be more flexible but tends to be more expensive if you have frequent and unpredictable spikes in usage.
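To make the two plans concrete, here is a minimal Python sketch of both calculations. It is an illustration under stated assumptions, not Datadog's billing code: the function names are hypothetical, the top 1% of hours is rounded down to a whole number of hours, and the $15 monthly rate and $0.03 hourly overage rate are placeholder values you should replace with the rates in your own contract.

```python
def high_watermark_hosts(hourly_host_counts):
    """Billable hosts on a high watermark plan: drop the top 1% of hours, take the max of the rest."""
    ordered = sorted(hourly_host_counts)
    dropped = len(ordered) // 100  # hours in the top 1%, rounded down (assumption)
    kept = ordered[: len(ordered) - dropped]
    return kept[-1]


def hybrid_plan_cost(hourly_host_counts, committed_hosts, monthly_rate, hourly_rate):
    """Monthly commitment plus an hourly charge for every host-hour above the commitment."""
    overage_host_hours = sum(max(0, h - committed_hosts) for h in hourly_host_counts)
    return round(committed_hosts * monthly_rate + overage_host_hours * hourly_rate, 2)


# A 30-day month (720 hours): 10 hosts normally, 20 hosts during a 7-hour spike.
hourly = [10] * 713 + [20] * 7

print(high_watermark_hosts(hourly))  # the 7 spike hours fall inside the excluded top 1%
print(hybrid_plan_cost(hourly, committed_hosts=10, monthly_rate=15.0, hourly_rate=0.03))
```

In this example the spike lasts 7 hours, so the high watermark plan ignores it entirely. If the spike lasted 8 hours or more, the billable count for the whole month would jump to 20 hosts, which is why frequent spikes make this plan expensive.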

One of the major challenges with host-based pricing is the unpredictability caused by autoscaling. For instance, if you autoscale to meet a traffic spike, your number of active hosts can increase substantially, resulting in a higher bill. This makes it difficult to stick to a budget when your infrastructure is elastic.

To mitigate costs, commit to the minimum number of hosts you need under normal operations and use dynamic optimization tools to manage autoscaling efficiently. Another helpful approach is to track and analyze peak usage patterns to better anticipate and plan for cost increases.

Volume-Based Pricing

Volume-Based Pricing is used for products such as Logs, Synthetics, APM Ingestion, Database Monitoring, and Observability Pipelines.

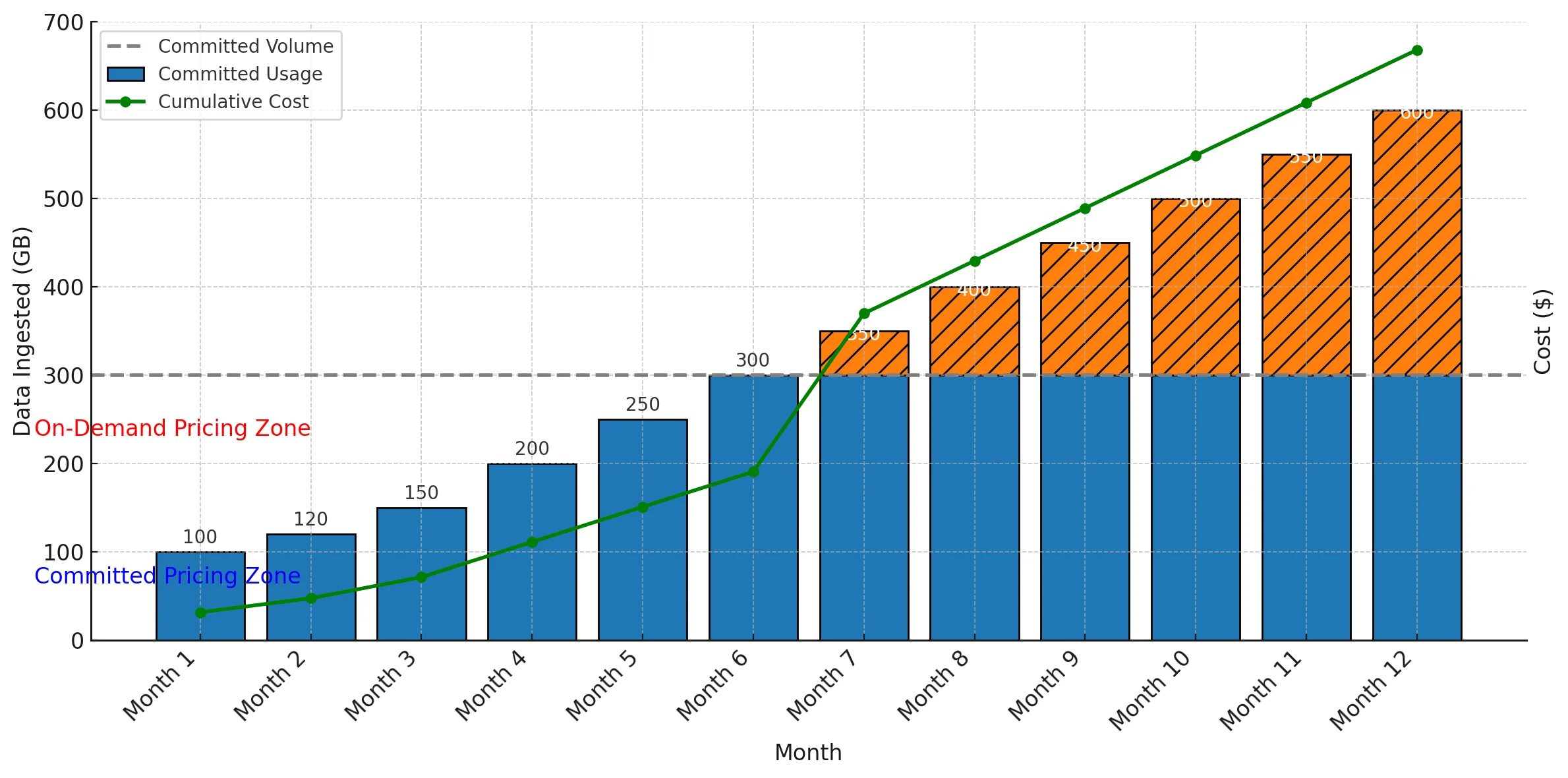

In volume-based pricing, you are billed based on the amount of data ingested, indexed, and stored in Datadog. This model is often used for data-heavy environments where significant volumes of logs and metrics are produced.

For example, if your application generates 300 GB of log data, you will incur charges for every gigabyte ingested, indexed, and stored. The more data you process, the higher the cost.

Commit to Volume: Datadog offers the ability to commit to a specific data volume to secure a reduced rate. However, if your usage exceeds the committed volume, any additional data will be billed at the on-demand rate, which is generally higher.
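The commit-versus-overage arithmetic can be sketched in a few lines of Python. This is a simplified model, assuming that you pay for the full commitment even if you use less of it; the function name is hypothetical, and the rates are the standard log indexing rates quoted later in this article ($1.70 committed and $2.55 on-demand per million events).

```python
def volume_cost(usage, committed, committed_rate, on_demand_rate):
    """Pay for the full commitment, then the on-demand rate for anything above it."""
    overage = max(0.0, usage - committed)
    return round(committed * committed_rate + overage * on_demand_rate, 2)


# Units are millions of indexed log events per month.
rates = {"committed_rate": 1.70, "on_demand_rate": 2.55}

print(volume_cost(usage=130, committed=100, **rates))  # commitment plus 30M of overage
print(volume_cost(usage=130, committed=0, **rates))    # everything on-demand
print(volume_cost(usage=60, committed=100, **rates))   # over-committed: paying for unused volume
```

The last call shows the other side of the trade-off: if actual usage falls well below the commitment, the committed plan can cost more than paying on-demand.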

Volume-based pricing can lead to unpredictable costs, especially in dynamic environments where log volumes fluctuate. Enabling verbose logging during incidents, for instance, can significantly increase the data ingested, resulting in higher expenses. Developers may unintentionally drive up costs by adding new logs or increasing synthetic API tests without considering the implications.

- Commit Strategically: Committing to a predictable data volume can save up to 50% compared to on-demand pricing. If you can reasonably forecast your needs, committing is a smart way to lower costs.

- Log Filtering and Sampling: Reduce data volume by filtering out unnecessary logs or using sampling techniques to ingest only the most relevant logs.

- Quotas and Alerts: Set quotas on data ingestion and alerts to warn of unusually high log volumes, preventing budget overruns due to unexpected spikes.
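As one way to apply log sampling on the application side, the sketch below uses Python's standard logging module to keep every warning and error while forwarding only a fraction of lower-severity records. The SamplingFilter class, the 10% sample rate, and the "checkout" logger name are illustrative assumptions, not part of any Datadog library.

```python
import logging
import random


class SamplingFilter(logging.Filter):
    """Keep every WARNING-or-higher record; keep only a fraction of lower-severity ones."""

    def __init__(self, sample_rate, rng=None):
        super().__init__()
        self.sample_rate = sample_rate
        self.rng = rng or random.Random()

    def filter(self, record):
        if record.levelno >= logging.WARNING:
            return True
        return self.rng.random() < self.sample_rate


logger = logging.getLogger("checkout")  # hypothetical service logger
handler = logging.StreamHandler()
handler.addFilter(SamplingFilter(sample_rate=0.1))
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.info("cart updated")     # emitted roughly 10% of the time
logger.error("payment failed")  # always emitted
```

Filtering at the handler means the dropped records never leave the process, so they are never ingested or indexed. The cost is that sampled-out INFO lines are gone for good, so this suits high-volume, low-value logs rather than audit trails.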

User-Based Pricing

User-Based Pricing is used for products like Incident Management.

This is probably the easiest Datadog pricing model to understand, yet it can increase your bill quickly if you work in a large organization where many people need access to the product. Here's how it works in more detail:

This model charges based on the number of active users interacting with the product. For example, if your team has five active users managing incidents through Datadog, you will be billed according to the number of users who have access to and interact with the system each month.

Costs can quickly rise if more users need access, especially during incidents when cross-functional teams might need to log in simultaneously.

- Limit access to only those who absolutely need it.

- Consider creating shared accounts for certain roles (with careful management) to avoid incurring high costs.

- Review user activity regularly and remove inactive users.

Understanding Datadog Additional Products Pricing

Another important aspect of Datadog pricing is the add-ons that you can use to enhance your observability stack. Let's go through a detailed breakdown of the pricing structure for Datadog's add-on services, helping you evaluate their value and manage associated costs effectively.

Custom Metrics Pricing

Custom Metrics Pricing is used for adding specific metrics unique to your business across multiple Datadog products.

This pricing model can quickly become complex due to the potential for high cardinality in custom metrics. Here's a detailed breakdown:

Custom metrics are billed based on the number of unique metric-series (cardinality) and tags used. Each unique combination of a metric and its associated tags counts as a separate billable entity.

For example, if you track response times for different endpoints and add tags like region and environment, the unique combinations can quickly add up, increasing costs.

🤔 What is a tag?

A tag is a label or metadata that you attach to your metrics, logs, or traces to provide additional context and make them easier to organize, filter, and search.

Tags help you understand the source and meaning of your data. For example, if you are collecting metrics from different servers or services, you can use tags to distinguish between them. By tagging your data, you can group or filter it based on meaningful attributes, such as environment, service, or region.

Here are some common examples of tags:

- Environment: env:production, env:staging

- Service: service:web-app, service:payment-service

- Region: region:us-east-1, region:eu-west-1

- Instance Type: instance-type:t2.medium

- Custom Tags: You can also create custom tags like team:frontend, feature:experiment-a

Tags are very useful because they help you answer questions like:

- What is the CPU usage of all servers in the production environment?

- How many requests are hitting a particular service in the us-east-1 region?

- Are error rates higher on a specific instance type?

However, tags with high cardinality, meaning tags that can have an extremely large number of unique values (e.g., user_id or session_id), can become problematic. This is because they can lead to a significant increase in the amount of data being stored and processed, leading to higher costs and performance issues.

The main issue is the unpredictable nature of high cardinality. Each unique tag combination adds a new metric series, leading to a rapid increase in costs. This makes it challenging to control expenses, especially in complex environments with extensive monitoring needs.

Additionally, distribution/histogram custom metrics are billed at least 5 times higher than Gauges/Counters, further complicating cost management.

Play with Custom Metrics

This example shows the impact of high cardinality on your custom metric costs.

Metric: order_processing_time

Tags and their number of unique values:

- region: 5

- product_category: 10

- payment_method: 6

Monthly Cost ($)

Full Cardinality (all three tags)

Cost = (Regions * Products * Payments) * $0.05 = (5 * 10 * 6) * $0.05 = $15.00

Examples:

- North America, electronics, credit card: 48.3 seconds

- Europe, clothing, credit card: 49.7 seconds

- Asia, electronics, PayPal: 51.2 seconds

- North America, clothing, credit card: 39.9 seconds

- North America, home goods, credit card: 42.1 seconds

Optimized (payment_method tag dropped)

Cost = (Regions * Products) * $0.05 = (5 * 10) * $0.05 = $2.50

Examples:

- North America, electronics: 47.3 seconds

- Europe, electronics: 49.5 seconds

- Asia, electronics: 52.8 seconds

- North America, clothing: 39.6 seconds

- North America, home goods: 43.7 seconds

(Both costs assume additional custom metrics are charged at $0.05 per metric per month.)

To control costs, limit the use of high-cardinality tags such as user IDs or session IDs. Instead, focus on aggregating data that is meaningful for long-term trends. Regularly audit and remove unused custom metrics that do not add significant value.
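The cardinality arithmetic is easy to reproduce yourself before you add a tag. The Python sketch below multiplies the number of unique values per tag to get a worst-case series count, then applies the $0.05 per series rate used in the example above. The function names are hypothetical, the user_id tag with 10,000 values is an invented illustration, and the calculation assumes every tag combination actually occurs.

```python
from math import prod

RATE_PER_SERIES = 0.05  # dollars per series per month, the rate used in the example above


def series_count(tag_cardinalities):
    """Worst-case number of unique series: the product of unique values per tag."""
    return prod(tag_cardinalities.values())


def monthly_cost(tag_cardinalities, rate=RATE_PER_SERIES):
    return round(series_count(tag_cardinalities) * rate, 2)


full = {"region": 5, "product_category": 10, "payment_method": 6}
optimized = {"region": 5, "product_category": 10}
with_user_id = {**full, "user_id": 10_000}  # hypothetical high-cardinality tag

for name, tags in [("full", full), ("optimized", optimized), ("with user_id", with_user_id)]:
    print(name, series_count(tags), monthly_cost(tags))
```

Because the counts multiply, a single unbounded tag dominates everything else: adding user_id here turns 300 series into 3 million.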

Container Monitoring Pricing

Container Monitoring in Datadog offers comprehensive insights into containerized workloads, enabling teams to proactively manage performance, troubleshoot issues, and ensure optimal resource utilization across dynamic environments.

Datadog charges based on the number of unique containers monitored. Each container, regardless of its lifespan, contributes to the bill. For instance, if you have 100 containers running across multiple clusters, the billing is based on the total number of monitored containers, irrespective of their lifecycle.

The main challenge lies in the high dynamicity of container environments, especially in Kubernetes. Containers can be rapidly spun up and down based on load, leading to fluctuating costs. Even ephemeral containers that last only seconds incur costs, which can accumulate over time.

To manage costs, monitor only critical containers and set policies to exclude transient or non-essential containers. Using tools like resource quotas and limits can help ensure that container usage remains within a predictable range. Alternatively, consider open-source monitoring tools like Prometheus for less critical workloads, as they can offer more predictable costs.

How to Optimize Datadog Pricing

Now let's discuss some strategies that can significantly reduce your Datadog bill without compromising on observability.

#1: Use Committed Pricing

Analyze your usage and commit to a specific number of hosts or data volume to save up to 50% compared to on-demand rates.

#2: Use Cost Monitoring Tools

Transition from end-of-month billing surprises to daily cost monitoring. Set alerts for spikes in log volume or host count.

The earlier you catch anomalies and cost spikes, the earlier you can address their root cause, and the more predictable your end-of-month bill will be.

Reasons for cost spikes could be:

- 🏷️ Unknowingly adding a high cardinality tag to a custom metric.

- 🚀 Spinning up a testing environment and forgetting it.

- 🆕 Releasing a new feature to production whose usage increase you didn't foresee.

#3: Reduce Waste and Idle Monitoring

Many engineers, especially newer ones, don't realize observability costs money. They think adding a log or metric is just writing code, which is free.

Try to make your team aware that observability has a price tag. Help them understand that every new log or metric they add will impact costs.

- Turn off debug logs in production. Only log what is necessary.

- Use Datadog's log-based metrics to reduce the volume of logs you need to index.

- Remove custom metrics that are not used. A good rule is to remove any custom metric that does not appear in any dashboard.

- Make sure that your Synthetic tests are actually used and alerted on by at least one active Monitor and scheduled at reasonable intervals.

- Ensure that you only monitor hosts you care about and not all your fleet by default.

Consider using cheaper open-source tools for "general monitoring", and use the full extent of the Datadog platform for your most valuable hosts and services.

#4: Migrate to Alternatives

If the cost of Datadog is becoming unsustainable, consider switching to a commercial or open-source alternative. Several options are available, each with its unique strengths:

SigNoz: SigNoz is an open-source alternative built on top of OpenTelemetry, providing cost-effective observability. It offers a full-stack observability platform similar to Datadog, without the high costs associated with proprietary solutions. SigNoz also has a transparent pricing model with no hidden costs, which helps you better predict your monitoring expenses.

Prometheus + Grafana: For those who prefer open-source tools and have the infrastructure capability, Prometheus and Grafana are a powerful combination. Prometheus handles metrics collection efficiently, while Grafana offers powerful visualization tools. Although it requires more setup and management, it can significantly reduce costs if self-hosted.

New Relic: New Relic is a strong commercial alternative that offers a range of observability tools. While its pricing model is still usage and user based, it provides competitive features and is worth evaluating if you need a fully managed solution with similar functionality to Datadog.

Dynatrace: Dynatrace offers advanced observability features, including AI-powered insights. Its pricing can be complex, but it may provide more value for enterprises needing highly automated and intelligent monitoring capabilities.

You can find a comprehensive list of Datadog alternatives here.

When comparing these options, keep in mind your organization's specific needs, technical expertise, and budget. SigNoz is especially well-suited for companies that want a straightforward, predictable pricing model and are comfortable with open-source technology.

For more details on SigNoz's offerings, you can explore:

- How SigNoz approaches Application Performance Monitoring

- How SigNoz manages Logs

- Working with Metrics and Dashboards in SigNoz

Trade-Offs of Moving Away from Datadog Due to Pricing

Pricing vs Features

Will switching to a more affordable solution impact the features available to you?

Switching to a more affordable solution may impact the features available to you, depending on your observability needs. SigNoz offers most of the core observability features, such as metrics, traces, and logs in a single pane at a significantly lower price compared to Datadog.

However, it's essential to consider which specific features you use the most. For example, SigNoz provides excellent support for metrics and tracing with OpenTelemetry integration, but some advanced features of Datadog, like network performance monitoring or AI-based anomaly detection, may not be available in the same capacity.

Alternatives like Prometheus + Grafana offer comprehensive monitoring and visualization capabilities, but they require more manual setup and maintenance, which can be a trade-off if your team is limited in engineering resources. New Relic and Dynatrace also provide robust observability tools but can be costly depending on the feature set you require.

Pricing vs Performance

Will switching to a more affordable solution impact the performance of your observability setup?

When considering performance, it's crucial to evaluate if lower-cost alternatives like SigNoz can meet the demands of your specific workloads. SigNoz is designed to handle high-throughput environments and provides real-time metrics and traces, making it suitable for most applications. However, if you need advanced analytics or AI-powered insights, Dynatrace or New Relic might be more appropriate, albeit at a higher cost.

For many users, performance differences between Datadog and alternatives like SigNoz are negligible, especially for standard observability tasks.

It's important to match the solution to your performance requirements and consider whether the extra features justify the additional cost, or if a simpler, more affordable tool like SigNoz can meet your needs without the overhead.

Product-Wise Pricing of Datadog

Now that we understand the fundamental pricing models used by Datadog, let's dive into the product-wise pricing of Datadog. We will discuss the pricing of core Datadog products, the value they provide, the challenges you might face, and the alternatives in each case.

Log Monitoring

Volume-based pricing, charged per GB of ingested, indexed, and stored logs.

Log Billing Breakdown:

- Ingestion: Starting at $0.10 per ingested or scanned GB per month (billed annually) or $0.10 on-demand. Ingest, process, enrich, live tail, and archive all your logs with out-of-the-box parsing for 200+ log sources.

- Standard Indexing: $1.70 per million log events per month (billed annually) or $2.55 on-demand. Offers 15-day retention for real-time exploration, alerting, and dashboards with mission-critical logs.

- Flex Storage: Starting at $0.05 per million events stored per month (billed annually) or $0.075 on-demand. Flexible long-term retention up to 15 months for historical investigations or security, audit, and compliance use cases.

- Flex Logs Starter: Starting at $0.60 per million events stored per month (billed annually) or $0.90 on-demand. Retention options of 6, 12, or 15 months for long-term log retention without the need to rehydrate.

- Log Forwarding to Custom Destinations: Starting at $0.25 per GB outbound per destination per month for centralizing log processing, enrichment, and routing to multiple destinations.

- Rehydration: Logs stored beyond the initial retention period can be rehydrated if needed at $0.10 per compressed GB scanned, plus the indexing cost for the selected retention period.

Important Note: Costs are incurred for logs even if they are not actively used, as long as they are processed, stored, or indexed by Datadog.

Understanding "Million Events": In Datadog's pricing model, "million events" refers to the number of individual log entries or records processed. While ingestion is measured in GB, indexing and storage are often priced per million events. This allows for more granular pricing based on the actual number of log entries, rather than just their raw data size. It's important to consider both the volume (GB) and the number of events when estimating costs.
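To see how GB and event counts interact, here is a rough Python estimator. It is a sketch under assumptions: the function name is hypothetical, 1 GB is treated as 10^9 bytes, the average event size is something you would measure for your own logs, and only ingestion plus standard 15-day indexing at the annual rates listed above are included.

```python
INGEST_PER_GB = 0.10      # dollars per ingested GB
INDEX_PER_MILLION = 1.70  # dollars per million indexed events (15-day retention, billed annually)


def log_cost(ingested_gb, avg_event_bytes, indexed_fraction=1.0):
    """Return (ingestion, indexing, total) in dollars per month."""
    events = ingested_gb * 1_000_000_000 / avg_event_bytes
    indexed_millions = events * indexed_fraction / 1_000_000
    ingestion = ingested_gb * INGEST_PER_GB
    indexing = indexed_millions * INDEX_PER_MILLION
    return round(ingestion, 2), round(indexing, 2), round(ingestion + indexing, 2)


print(log_cost(300, avg_event_bytes=1000))                       # 300 GB of ~1 KB events
print(log_cost(300, avg_event_bytes=250))                        # same volume, many small events
print(log_cost(300, avg_event_bytes=1000, indexed_fraction=0.2)) # index only 20% of events
```

The same 300 GB costs four times as much to index when the average event is 250 bytes instead of 1,000, which is why event count matters as much as raw volume. Indexing only a fraction of what you ingest is usually the biggest lever.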

APM (Application Performance Monitoring)

- Host-based pricing, starting at $31 per host per month (billed annually) or $36 on-demand

- Various plans available:

- APM: $31 per host per month, includes end-to-end distributed traces, service health metrics, and 15-day historical search & analytics.

- APM Pro: $35 per host per month, includes everything in APM plus Data Streams Monitoring.

- APM Enterprise: $40 per host per month, includes APM Pro features along with Continuous Profiler for deeper code-level insights

- APM DevSecOps: $36 per host per month, adds OSS vulnerability detection with Software Composition Analysis (SCA)

- APM DevSecOps Pro: $40 per host per month, builds on the respective plans with SCA for enhanced security monitoring

- APM DevSecOps Enterprise: $45 per host per month.

- Additional span ingestion: $0.10/GB beyond the included 150GB per APM host

- Note: A span represents a unit of work or operation within a distributed system. It's a fundamental component of distributed tracing, capturing timing and contextual information about a specific operation. For example, a span might represent a single database query, an HTTP request, or a function call. The 150GB per APM host typically covers millions of spans, but high-volume applications may exceed this, leading to additional costs.
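A quick way to check whether span ingestion will add to your APM bill is to compare your monthly span volume against the included allotment. The Python sketch below uses the base APM numbers above ($31 per host, 150 GB included per host, $0.10 per extra GB). The function name is hypothetical, and it assumes the allotment is pooled across all APM hosts, which you should confirm against your own contract.

```python
def apm_monthly_cost(hosts, ingested_span_gb, host_rate=31.0,
                     included_gb_per_host=150, extra_gb_rate=0.10):
    """Host charge plus any span ingestion beyond the pooled allotment (assumed pooling)."""
    included_gb = hosts * included_gb_per_host
    extra_gb = max(0, ingested_span_gb - included_gb)
    return round(hosts * host_rate + extra_gb * extra_gb_rate, 2)


print(apm_monthly_cost(hosts=10, ingested_span_gb=1200))  # within the 1,500 GB allotment
print(apm_monthly_cost(hosts=10, ingested_span_gb=4000))  # 2,500 GB over the allotment
```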

Infrastructure Monitoring

Host-based, starting at $15 per host per month (billed annually) or $18 on-demand. Three pricing tiers are available:

- Free: Includes core collection and visualization features for up to 5 hosts, with 1-day metric retention.

- Pro: Starting at $15 per host per month. Offers centralized monitoring of systems, services, and serverless functions, including full-resolution data retention for 15 months, alerts, container monitoring, custom metrics, SSO with SAML, and outlier detection.

- Enterprise: Starting at $23 per host per month (billed annually). Adds advanced administrative features, automated insights with Watchdog, anomaly detection, forecast monitoring, live processes, and more.

Additional Pricing Details:

- Container monitoring: 5-10 free containers per host license. Additional containers at $0.002 per container per hour or $1 per container per month prepaid.

- Custom metrics: 100 for Pro, 200 for Enterprise per host. Additional at $1 per 100 metrics per month.

- Custom events: 500 for Pro, 1000 for Enterprise per host. Additional at $2 per 100,000 events (annual) or $3 (on-demand).

Database Monitoring

Starting at $70 per database host per month (billed annually) or $84 on-demand.

- Track normalized query performance trends using database-generated metrics.

- Correlate query performance with database infrastructure metrics.

- Extract valuable data without compromising database security.

- Access insights for database hosts, clusters, and applications.

Real User Monitoring (RUM)

Session-based, charged per 1,000 sessions. Starting at $1.50 per 1,000 sessions per month (billed annually) or $2.20 on-demand.

RUM & Session Replay: Starting at $1.80 per 1,000 sessions per month (billed annually) or $2.60 on-demand. Includes video-like replays of user sessions and heatmaps.

Synthetic Monitoring

Volume-based, charged per synthetic check. Starting at $5 per 10,000 API test runs per month (billed annually) or $7.20 on-demand. Browser testing starts at $12 per 1,000 test runs per month. Mobile App Testing starts at $50 per 100 test runs per month.
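Test frequency and location count drive synthetic costs more than the number of tests does. The Python sketch below estimates monthly API test runs and their cost at the $5 per 10,000 runs rate above. The function names are hypothetical, and it assumes each execution from each location counts as one billable run over a 30-day month.

```python
def api_test_runs_per_month(interval_minutes, locations, days=30):
    """Runs per month for one test, assuming one billable run per location per execution."""
    runs_per_day = (24 * 60) // interval_minutes
    return runs_per_day * locations * days


def api_test_cost(runs, rate_per_10k=5.0):
    return round(runs / 10_000 * rate_per_10k, 2)


every_minute = api_test_runs_per_month(interval_minutes=1, locations=3)
every_five = api_test_runs_per_month(interval_minutes=5, locations=3)

print(every_minute, api_test_cost(every_minute))
print(every_five, api_test_cost(every_five))
```

Moving a single test from a 1-minute to a 5-minute interval cuts its run count by a factor of five, so reviewing intervals is often the quickest saving.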

Cloud Security Management

Host-based pricing depending on the services monitored. Starting at $10 per host per month for Pro (billed annually) or $12 on-demand, and $25 per host per month for Enterprise (billed annually) or $30 on-demand.

Cloud Workload Security (stand-alone): Starting at $15 per host per month (billed annually) or $18 on-demand.

| Product | Pricing Model | Key Features | Challenges | Solutions | Alternatives |
|---|---|---|---|---|---|
| Log Monitoring 📜 | Volume-based | Real-time log analysis, alerts | High retention costs ⚠️ | Log filtering, retention policies 💡 | Elastic Stack, Splunk, SigNoz |
| APM 🚀 | Host-based | Distributed tracing, service maps | High cardinality, autoscaling costs ⚠️ | Optimize tags, commit rates 💡 | New Relic, Dynatrace, SigNoz |
| Infrastructure Monitoring 🏗️ | Host-based | Server/container monitoring | Unpredictable container costs ⚠️ | Monitor critical infra only 💡 | Prometheus, Zabbix |
| Database Monitoring 📊 | Host-based | Query performance, anomaly alerts | High activity monitoring costs ⚠️ | Optimize queries, thresholds 💡 | SigNoz, Percona, SolarWinds |
| Real User Monitoring 👥 | Session-based | User journey tracking | High session costs ⚠️ | Sampling, focus key interactions 💡 | Google Analytics |
| Synthetic Monitoring 🤖 | Volume-based | API/browser tests | High frequency check costs ⚠️ | Prioritize key tests 💡 | Checkly, Pingdom |
| Cloud Security Management 🔒 | Host/Volume-based | Threat detection, compliance | High monitoring costs ⚠️ | Monitor critical services only 💡 | AWS Security Hub, Azure Security |
| Application Security 🛡️ | Service-based | Vulnerability scanning | High costs with microservices ⚠️ | Focus on critical applications 💡 | Snyk, Checkmarx |
| Cloud SIEM 🛠️ | Volume-based | Log management, threat detection | High ingestion costs ⚠️ | Targeted log collection 💡 | Splunk, Graylog |
| CI Pipeline Visibility 🔄 | User/Activity-based | Build/test insights | High activity costs ⚠️ | Optimize build frequency 💡 | CircleCI, GitLab CI/CD |
| Incident Management 🚨 | User-based | Incident tracking, RCA | High user access costs ⚠️ | Restrict incident access 💡 | PagerDuty, Opsgenie |

Datadog Pricing Case Studies

Several organizations have shared their experiences with Datadog's pricing challenges and their efforts to optimize or move away from the platform to reduce costs and improve observability management.

Case Study #1: Coinbase's $65M Datadog Bill

In 2021, Coinbase faced an enormous $65 million bill from Datadog, largely due to the scaling demands and high observability costs during a boom period for the crypto industry. The high costs prompted Coinbase to evaluate alternatives, leading to the establishment of an in-house observability team. They explored switching from Datadog to a more cost-effective solution using Prometheus, Grafana, and Clickhouse. Despite initial plans to move away from Datadog, Coinbase eventually renegotiated a more favorable deal with Datadog, reducing their future observability expenses significantly while maintaining Datadog's advanced feature set. You can read the full story on the Pragmatic Engineer blog.

Case Study #2: Why Bands Went with an Open Source Solution for Their Observability Setup

Bands, a platform aimed at helping musicians manage their careers, initially used Mixpanel for tracking but found it inadequate for backend observability. As their platform scaled, the need for a robust, cost-effective observability tool became crucial. Shiv, Bands' CTO and an ex-Coinbase engineer, had firsthand experience with the months spent optimizing data sent to Datadog at Coinbase, which he saw as a waste of precious engineering bandwidth.

After evaluating various options, Bands chose SigNoz for its predictable billing and comprehensive features. SigNoz's ability to monitor over 50 third-party integrations efficiently, combined with its seamless support and ease of integration, made it the ideal choice for Bands. You can read the full case study here.

Is Datadog Right for My Organization?

Datadog can be a powerful tool for observability, but it's important to understand whether it aligns with your organization's stage and needs. Here’s a breakdown to help you decide:

Small Startups

Datadog's free tier could be a good starting point if you're just getting off the ground. It provides essential features to help you get visibility into your infrastructure without an initial financial commitment. However, as your infrastructure and traffic grow, you may find that costs escalate quickly, making it challenging to maintain cost efficiency. For small-scale usage, Datadog's ease of setup and feature set can be ideal, but careful monitoring of your usage is crucial. Alternatively, using open-source solutions like SigNoz, which integrates seamlessly with OpenTelemetry, can be more future proof and can provide more predictable costs and flexibility.

Medium-Sized Businesses

If your organization is scaling, Datadog's committed pricing can help you manage costs, but scaling brings complexity. The costs tied to increasing host counts, log volume, and additional features like custom metrics can quickly get out of control. You need to be strategic about which metrics to track and which hosts to monitor, as overuse can result in unexpectedly high bills. This stage is where many businesses struggle with the unpredictability of Datadog's pricing. Open-source solutions like SigNoz, with its predictable pricing and powerful observability features, can be a good alternative to maintain control over costs.

Enterprise-Level Organizations

For larger enterprises, Datadog offers custom pricing tiers and volume discounts, which can be attractive. However, enterprise-level needs often require advanced features such as AI-based anomaly detection, network performance monitoring, and multi-cloud observability, which can drive up costs even with discounts. At this scale, it's worth evaluating whether the features provided by Datadog justify the cost compared to building an in-house observability solution or considering alternatives like open-source platforms. SigNoz, in combination with OpenTelemetry, offers an enterprise-grade observability stack with transparent costs, making it a viable option for large-scale operations.

General Guidance

- If You’re Just Starting Out: Datadog might be a good option for its ease of use and feature-rich platform. The initial free tier is helpful for startups to get off the ground without immediate costs. SigNoz can also be considered for its open-source benefits and cost transparency.

- As You Scale: Costs can become unpredictable and harder to manage. Consider ways to optimize, such as using committed pricing, reducing the number of tracked metrics, or focusing on core functionalities. Alternatively, evaluate open-source options like SigNoz to help keep costs under control.

- When Complexity Grows: At larger scales, it may be worth exploring alternatives, whether it’s negotiating custom contracts with Datadog, using more targeted monitoring solutions, or switching to open-source platforms like Prometheus, Grafana, and SigNoz for better cost control and customization.

Final Thoughts

Datadog's pricing is scalable and flexible, but it can become costly without proper management. Regular audits, usage monitoring, and a clear understanding of its pricing models can help keep your costs in check. If you're looking for a more cost-effective solution, consider exploring open-source alternatives like SigNoz.

Key Takeaways

- Understand Your Needs: Before choosing Datadog, assess whether its features align with your organization's stage and observability needs.

- Monitor Costs Actively: Regular audits and cost monitoring can help prevent unexpected billing surprises.

- Optimize Usage: Committed pricing, cost control tools, and optimization techniques can help manage Datadog's expenses.

- Consider Alternatives: Open-source options like SigNoz offer similar features with more predictable and lower costs.

Ready to Make a Change?

Explore how SigNoz can help you save on observability costs while providing similar features.
