AWS CloudWatch vs Azure Monitor: Features, Costs, and Best Fit

AWS CloudWatch and Azure Monitor are the default observability tools for their respective clouds. They cover metrics, logs, traces, alerting, and dashboards, and work well when your workloads live entirely within one provider. The real differences surface when you look at how each tool handles investigation workflows, cost behavior at scale, alerting hygiene, and portability to other environments.

This comparison evaluates CloudWatch and Azure Monitor across six aspects that matter most during actual operations: ecosystem fit, telemetry coverage, query and investigation experience, alerting, OpenTelemetry support, and cost governance. The goal is to help you decide which tool fits your cloud footprint and operating model, not to declare a universal winner.

Fit and Ecosystem

The strongest argument for either tool is the same: it works best inside its own cloud. The question is how deep that integration goes, and where it stops.

CloudWatch

CloudWatch is built into the AWS control plane, and most AWS services (EC2, Lambda, RDS, ECS, S3, and others) publish metrics to it automatically. You do not need to install agents or configure exporters to get baseline infrastructure visibility for managed services.

The integrations go beyond metrics collection. CloudWatch alarms can trigger Auto Scaling policies, Lambda functions, EC2 actions, and SNS notifications directly. EventBridge routes CloudWatch alarm state changes into broader event-driven workflows. IAM controls who can view metrics, query logs, and modify alarms. CloudWatch supports cross-account observability (commonly used with AWS Organizations, but also available for individually linked accounts) so a monitoring account can view metrics, logs, and traces from linked source accounts.

CloudWatch is weaker outside AWS because it is not designed as a cloud-neutral control plane. If you run workloads across AWS and another provider, CloudWatch can ingest custom metrics from external sources, but you lose the zero-setup automatic collection that AWS services get. External metrics require you to publish data through the CloudWatch API or agent, configure custom namespaces, and manage the ingestion pipeline yourself. There are no pre-built dashboards, no automatic alarm recommendations, and no native service map integration for non-AWS resources. Teams with genuine multi-cloud footprints typically need an additional layer to unify their monitoring workflows.

Azure Monitor

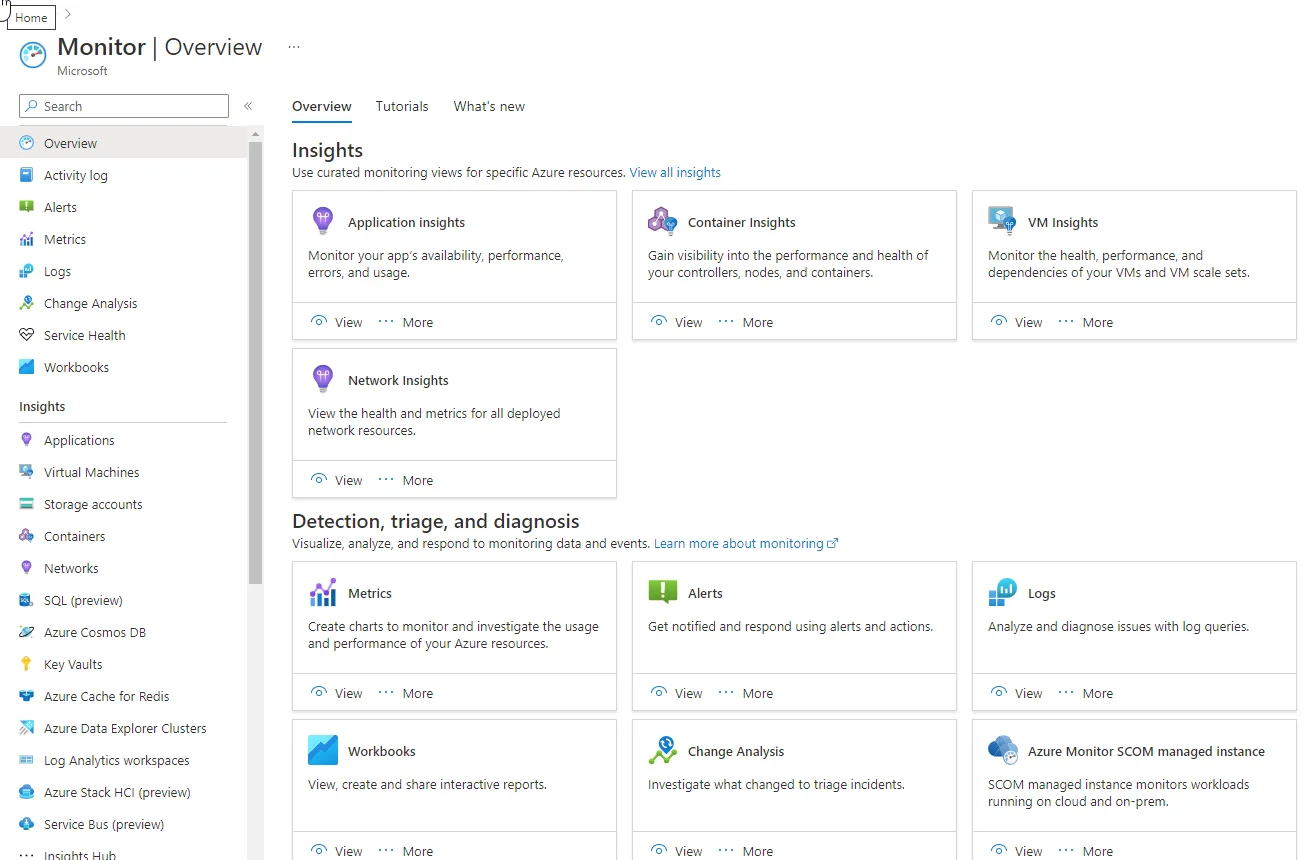

Azure Monitor is the observability platform for Azure resources, applications, and hybrid environments. It integrates tightly with the Azure resource model. Platform logs from Azure services are routed via diagnostic settings, while agent-based collection (for example, VM guest telemetry via Azure Monitor Agent) is governed by Data Collection Rules (DCRs).

Azure Monitor respects Azure RBAC (Role-Based Access Control), subscription boundaries, and resource group structures. This makes it easier to enforce access policies, scope monitoring to specific teams, and align observability with existing Azure governance hierarchies.

Azure Monitor can also monitor on-premises and other cloud workloads. In practice, the experience is deepest for Azure-native resources. Non-Azure workload monitoring is possible through agents, OpenTelemetry, and custom ingestion paths, but the setup effort and data fidelity vary depending on what you are onboarding.

Telemetry and APM Coverage

Both tools cover metrics, logs, and application traces. The differences are in how each organizes telemetry collection, what ships by default, and how much setup work remains after initial onboarding.

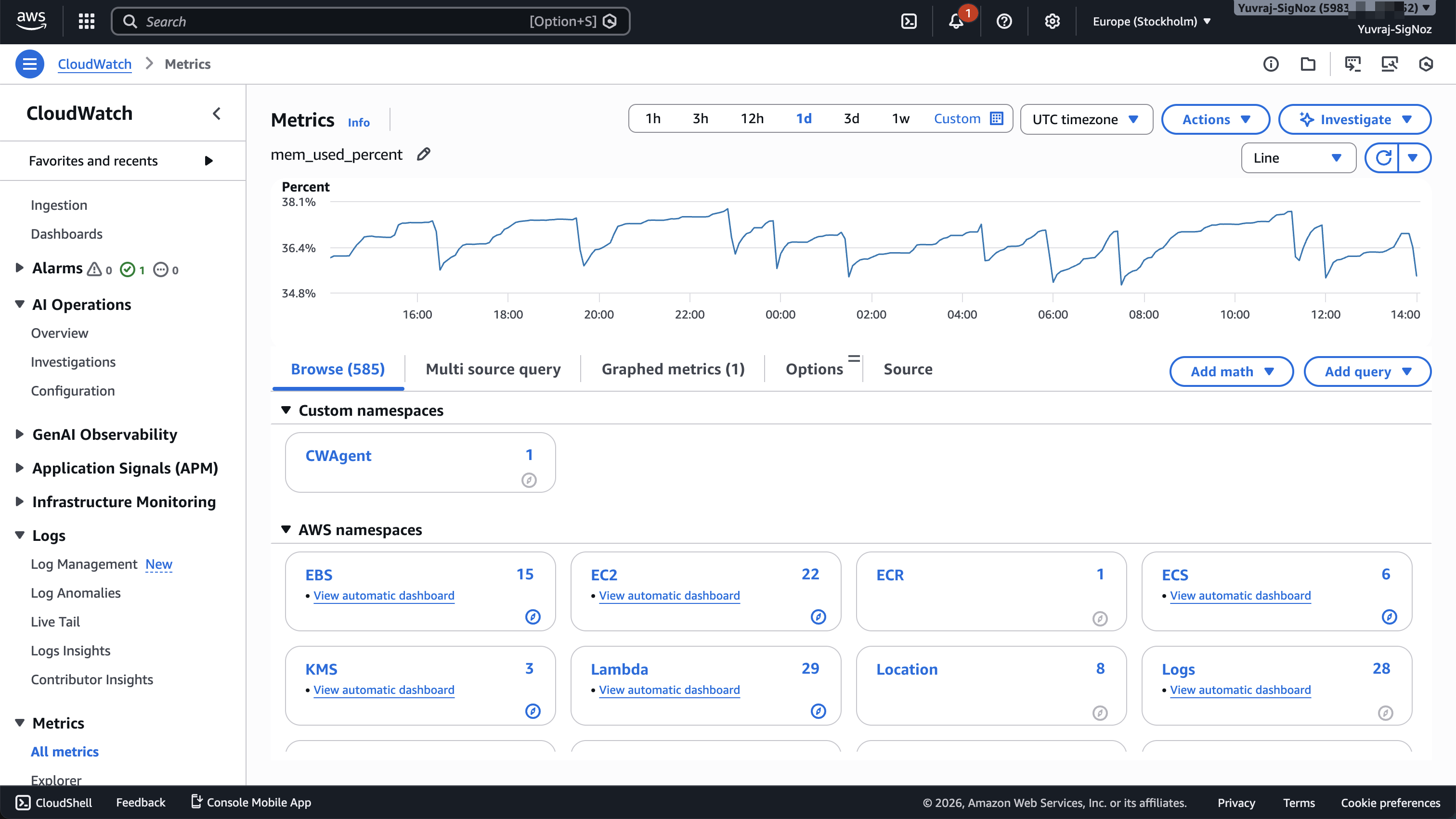

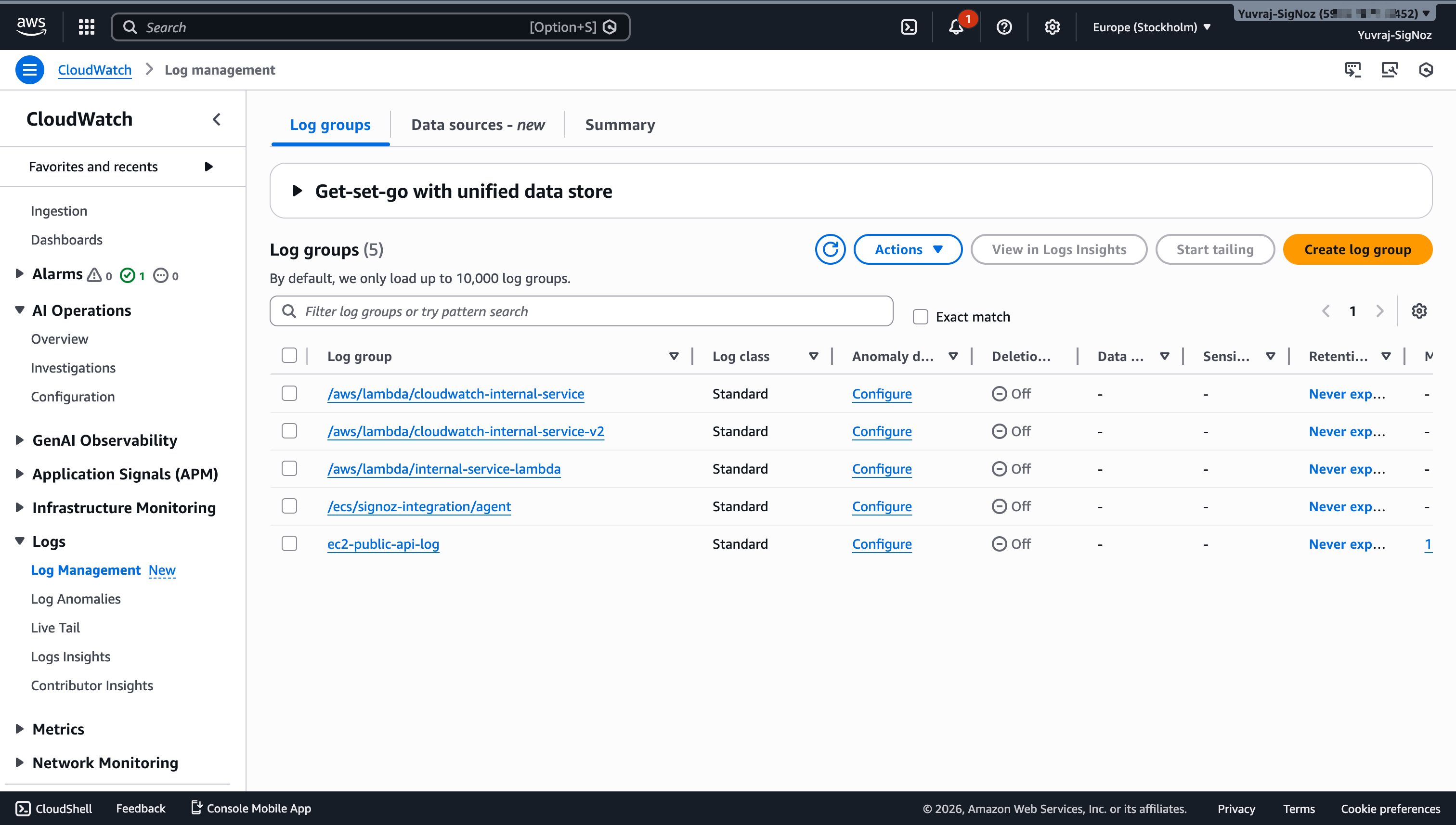

CloudWatch

Metrics: AWS services publish metrics to CloudWatch automatically under service-specific namespaces (AWS/EC2, AWS/Lambda, AWS/RDS, etc.). Custom metrics require explicit publishing through the CloudWatch API or the CloudWatch agent. Metric retention follows a tiered model by granularity: 1-minute data points are retained for 15 days, 5-minute data points for 63 days, and 1-hour data points for 455 days (about 15 months).
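To make the tiered retention concrete, here is a minimal sketch that maps the age of a data point to the finest granularity still available. It only encodes the three tiers listed above; the function name `finest_available_period` is hypothetical and not part of any AWS SDK.

```python
from datetime import timedelta
from typing import Optional

# Retention tiers as described above: (data point period, how long it is kept).
RETENTION_TIERS = [
    (timedelta(minutes=1), timedelta(days=15)),
    (timedelta(minutes=5), timedelta(days=63)),
    (timedelta(hours=1), timedelta(days=455)),
]


def finest_available_period(age: timedelta) -> Optional[timedelta]:
    """Return the smallest period still retained for data of the given age."""
    for period, kept_for in RETENTION_TIERS:
        if age <= kept_for:
            return period
    return None  # older than 455 days: nothing is retained


# A chart covering data from 30 days ago can show 5-minute points at best.
thirty_days_old = finest_available_period(timedelta(days=30))
```

The practical consequence is that a dashboard looking back more than 15 days cannot show 1-minute resolution, which matters when you compare a current incident against an older one.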

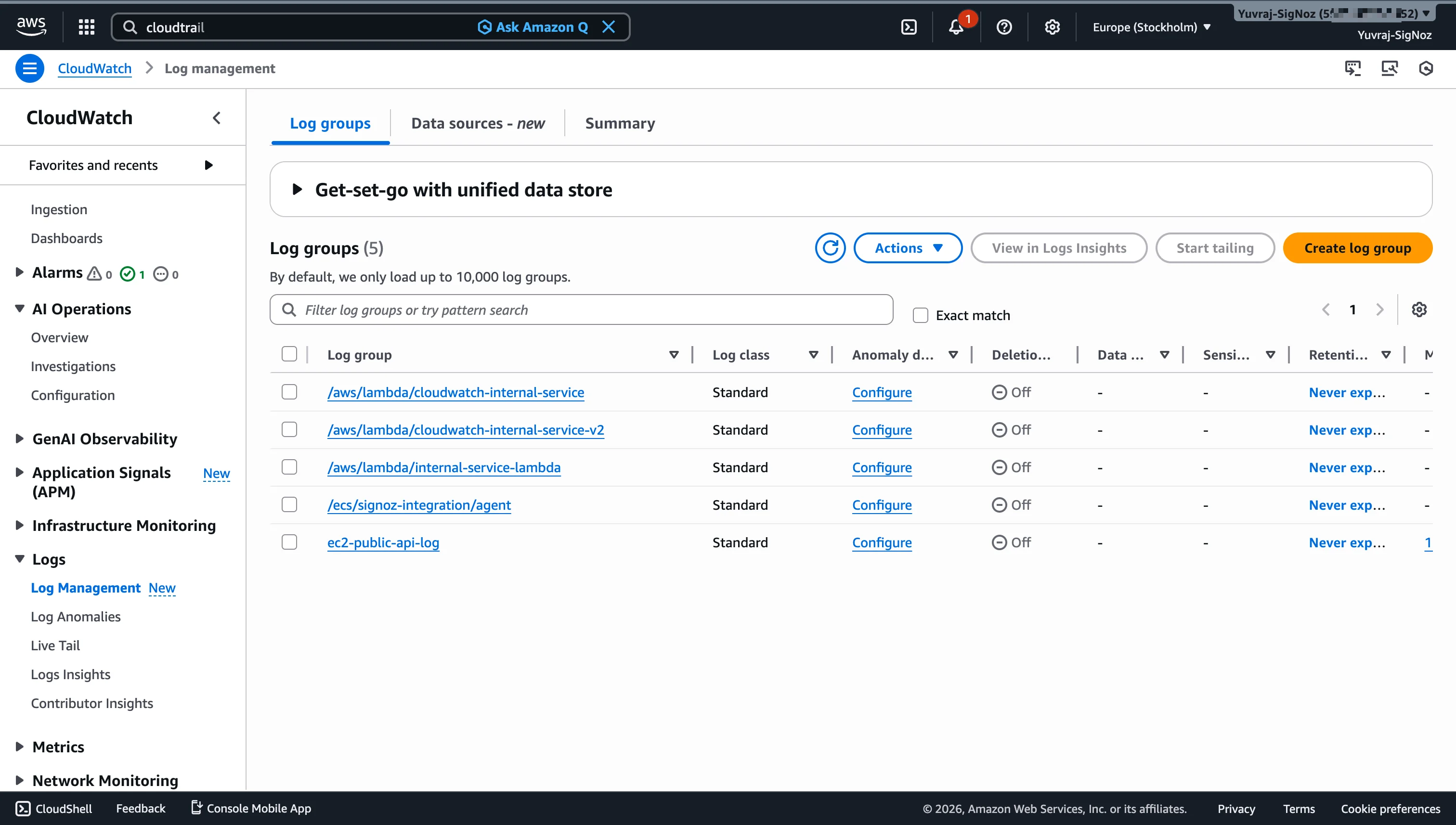

Logs: CloudWatch Logs collects log data through log groups and log streams. Lambda function logs flow automatically. For EC2 instances and on-premises servers, you install the CloudWatch agent and configure which log files to ship. Log data can be routed via subscription filters to Firehose, Lambda, Kinesis Data Streams, or OpenSearch Service (and from there to S3 through Firehose or Lambda).

Tracing and APM: CloudWatch Application Signals and AWS X-Ray provide application-level observability. Application Signals, a newer addition, surfaces service-level health dashboards built on trace and metric data. X-Ray collects distributed traces across AWS services. Both require instrumentation, either through the AWS Distro for OpenTelemetry (ADOT) or AWS-specific SDKs. The APM depth is solid for AWS-native workloads, but the quality of your trace data depends on instrumentation completeness. Note that CloudWatch handles operational monitoring (performance, errors, resource health), while AWS CloudTrail handles audit logging (who did what, when). If you are evaluating both, see CloudWatch vs CloudTrail for a detailed breakdown of where each fits.

Azure Monitor

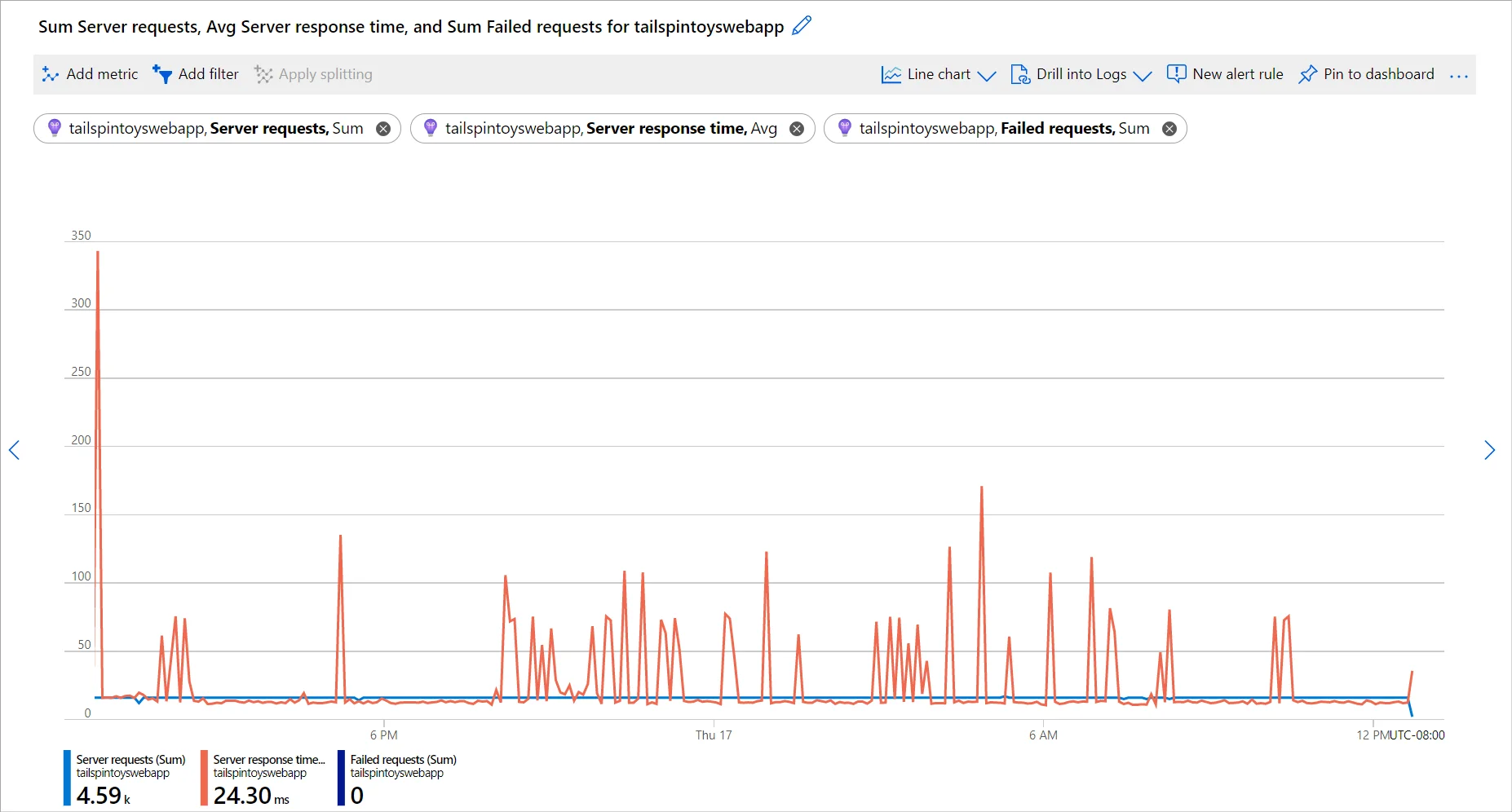

Metrics: Azure Monitor Metrics is a time-series database for Azure platform metrics and custom metrics. Platform metrics from Azure services (VMs, App Services, SQL Database, etc.) are collected automatically. Retention for platform metrics is 93 days. Custom metrics have a separate retention behavior and can be sent via the Azure Monitor ingestion API or OpenTelemetry.

Logs: Azure Monitor Logs, powered by Log Analytics workspaces, handles diagnostic logs, activity logs, and custom log data. You configure what data to collect and where to send it through Data Collection Rules (DCRs), which give you granular control over ingestion. DCRs matter for both observability quality and cost control, since they determine what gets ingested into your workspace.

Tracing and APM: Application Insights, part of Azure Monitor, handles request telemetry, dependency tracking, failure analysis, and performance monitoring. You can instrument applications using the Azure Monitor OpenTelemetry Distro (supporting .NET, Java, Node.js, and Python) or the classic Application Insights SDKs. Application Insights gives you an application-centric view with service maps, transaction search, and failure diagnostics.

Query and Investigation Experience

During an incident, the speed at which you can move from an alert to a root cause depends on your query tools and how smoothly you can correlate across signals. CloudWatch and Azure Monitor take different approaches here.

CloudWatch

CloudWatch provides two distinct query interfaces for different data types.

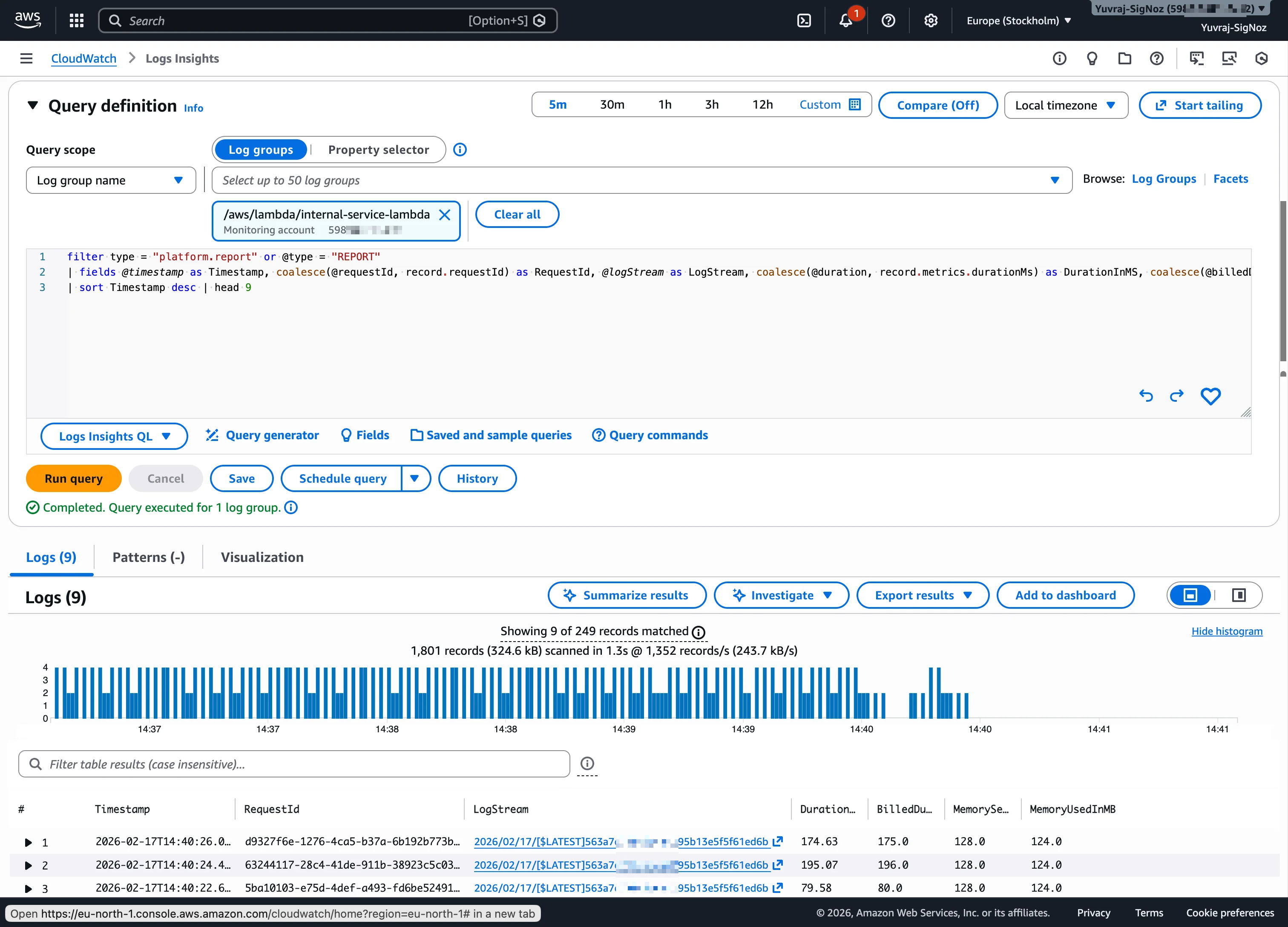

CloudWatch Logs Insights is the primary tool for log investigation. It supports filtering, parsing, aggregation, and visualization of log data. You write queries using a purpose-built syntax with commands like fields, filter, stats, sort, and parse. It is effective for answering questions like "which Lambda function threw the most errors in the last hour" or "show me all log events matching a specific request ID."
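As an illustration of the request-ID case, the sketch below builds a Logs Insights query string and the keyword arguments you would typically pass to boto3's `logs.start_query`. It does not call AWS. The helper names, the log group name, and the assumption that the request ID is a plain token without quotes are all illustrative.

```python
import time


def build_request_id_query(request_id: str, limit: int = 50) -> str:
    """Build a Logs Insights query that finds events containing a request ID."""
    if '"' in request_id:
        # Keep the sketch simple: refuse IDs that would break the string literal.
        raise ValueError("request_id must not contain double quotes")
    return (
        "fields @timestamp, @message, @logStream\n"
        f'| filter @message like "{request_id}"\n'
        "| sort @timestamp desc\n"
        f"| limit {limit}"
    )


def build_start_query_params(log_group: str, query: str, lookback_seconds: int = 3600) -> dict:
    """Assemble keyword arguments in the shape logs.start_query accepts."""
    now = int(time.time())
    return {
        "logGroupName": log_group,
        "startTime": now - lookback_seconds,  # epoch seconds
        "endTime": now,
        "queryString": query,
    }


query = build_request_id_query("abc-123", limit=5)
params = build_start_query_params("/aws/lambda/demo", query, lookback_seconds=600)
```

Narrowing the time window in `params` is also the simplest way to limit how much data a query scans.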

CloudWatch Metrics Insights supports a SQL-like syntax for querying metrics across namespaces. It is useful for high-cardinality metric analysis, like finding the top 10 EC2 instances by CPU utilization or comparing latency across Lambda functions.
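The "top 10 EC2 instances by CPU" case can be sketched as a Metrics Insights query wrapped in the entry shape that `GetMetricData` accepts under `MetricDataQueries`. This only builds the data structure; the helper name, the query `Id`, and the 300-second period are assumptions chosen for illustration.

```python
def top_instances_by_cpu(limit: int = 10, period_seconds: int = 300) -> dict:
    """Return one MetricDataQueries entry holding a Metrics Insights query."""
    expression = (
        "SELECT AVG(CPUUtilization) "
        'FROM SCHEMA("AWS/EC2", InstanceId) '
        "GROUP BY InstanceId "
        "ORDER BY AVG() DESC "
        f"LIMIT {limit}"
    )
    return {
        "Id": "topcpu",  # hypothetical identifier for this query
        "Expression": expression,
        "Period": period_seconds,
    }


entry = top_instances_by_cpu()
```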

The investigation gap is context switching. Metrics, logs, and traces live in separate views within the CloudWatch console. During incident triage, you may find yourself jumping between Logs Insights, the Metrics explorer, and X-Ray trace views to build a complete picture. Each tool works well individually, but correlating signals across them takes manual navigation.

Azure Monitor

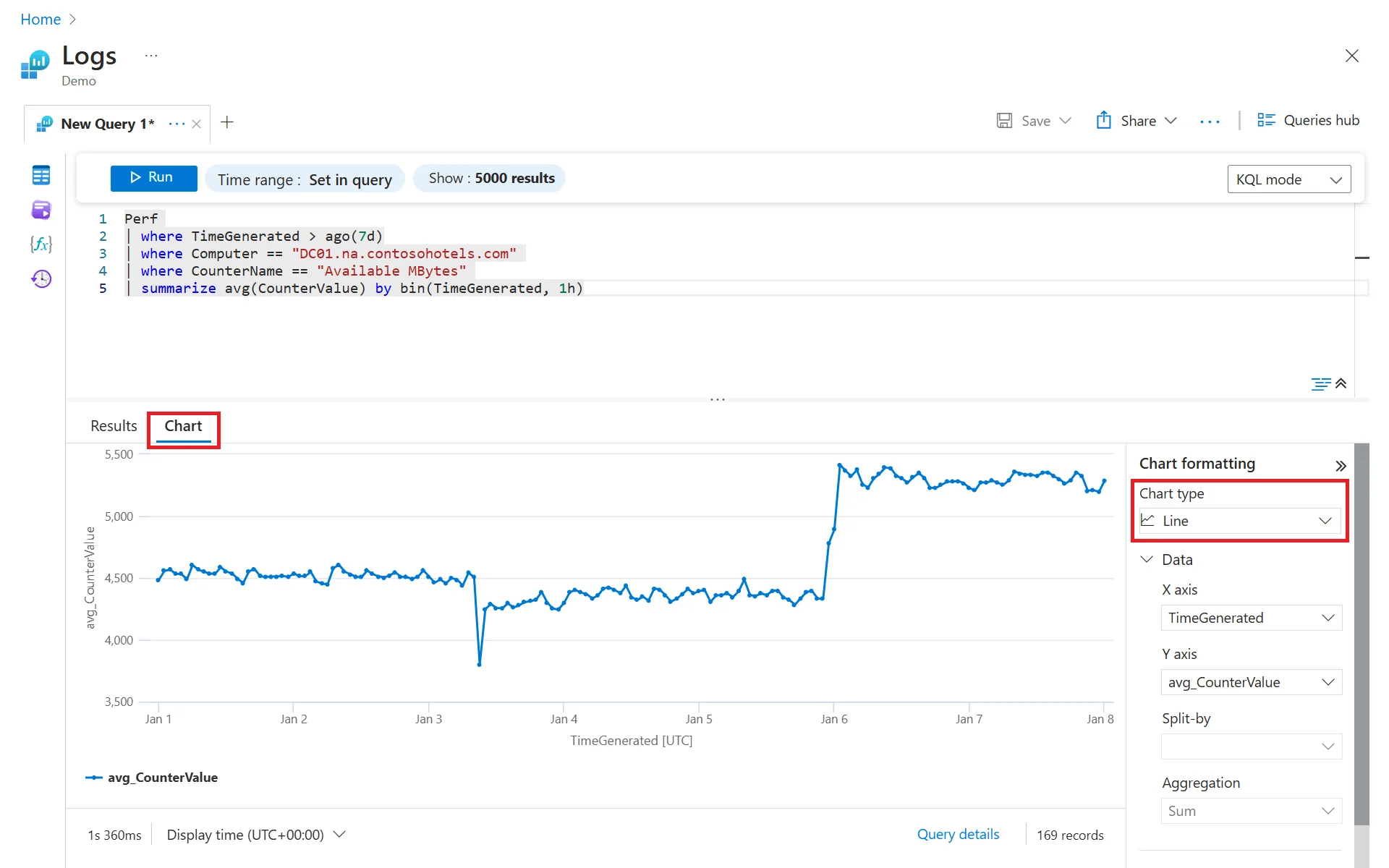

Azure Monitor's investigation experience is centered on Kusto Query Language (KQL), a powerful query language used across Log Analytics workspaces.

KQL supports rich operations: filtering, aggregation, joins, time-series analysis, rendering charts, and pattern detection. If your team already works with KQL (common in organizations using Microsoft Sentinel or Azure Data Explorer), the ramp-up time is minimal. If not, the learning curve is real, but KQL's expressiveness pays off once your team is comfortable with it.
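For a sense of what KQL looks like, here is a small sketch that assembles a query for the operations with the most failed requests. It assumes a workspace-based Application Insights resource where request telemetry lands in the `AppRequests` table with `TimeGenerated`, `Success`, and `Name` columns; verify the table and column names against your own workspace schema. The Python wrapper is only a convenient way to parameterize the query text.

```python
def failed_requests_query(lookback: str = "1h", top_n: int = 10) -> str:
    """Build a KQL query listing the operations with the most failures."""
    return "\n".join([
        "AppRequests",
        f"| where TimeGenerated > ago({lookback})",
        "| where Success == false",
        "| summarize failures = count() by Name",
        f"| top {top_n} by failures desc",
    ])


kql = failed_requests_query(lookback="30m", top_n=5)
```

Each `|` stage feeds the next, which is why KQL queries tend to read top to bottom like a pipeline.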

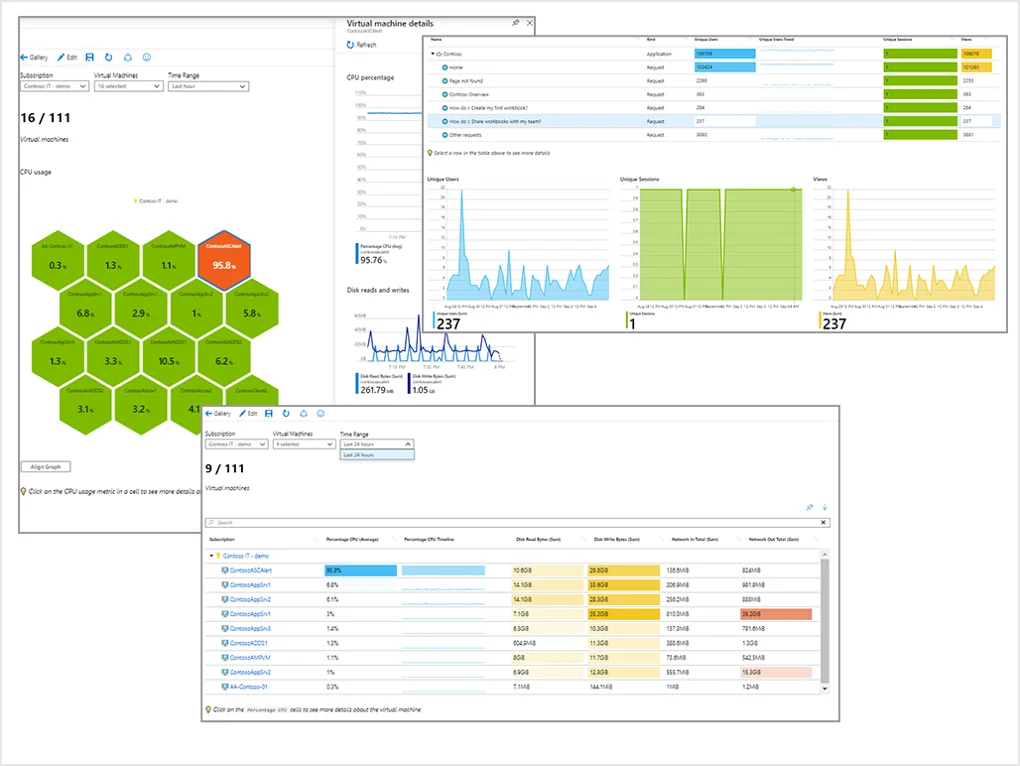

Azure Monitor can also run KQL queries across multiple Log Analytics workspaces and subscriptions. Cross-tenant querying is also possible when you have delegated access through Azure Lighthouse and appropriate RBAC permissions. This is valuable for organizations running centralized operations teams or managed-service providers that need visibility across different business units or customer environments.
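A cross-workspace query typically combines the `union` operator with the `workspace()` expression. The sketch below generates such a query. The workspace names are hypothetical, and it assumes the caller already has read access to every workspace listed.

```python
from typing import Sequence


def cross_workspace_query(table: str, workspaces: Sequence[str], pipeline: str = "") -> str:
    """Union one table across several workspaces, then apply an optional pipeline."""
    if not workspaces:
        raise ValueError("at least one workspace is required")
    sources = ", ".join(f'workspace("{name}").{table}' for name in workspaces)
    query = f"union {sources}"
    return f"{query}\n{pipeline}" if pipeline else query


kql = cross_workspace_query(
    "Heartbeat",
    ["ws-prod", "ws-staging"],  # hypothetical workspace names
    "| summarize count() by Computer",
)
```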

Azure Monitor Workbooks add another layer, providing shareable visual reports that combine metrics, logs, and parameter-driven queries into a single view. They serve as investigation templates that teams can reuse during incidents.

The tradeoff is that KQL's power comes with workspace design discipline. Query performance and cost depend on how you structure your workspaces, what data you ingest, and how you write your queries. Poorly designed workspace architectures can make investigation slower and more expensive.

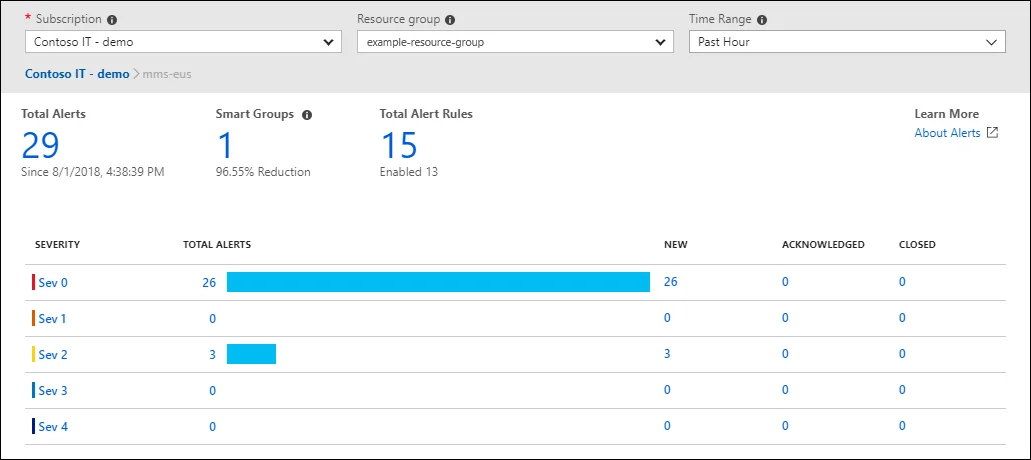

Alerting and Incident Response

Alerting is where monitoring turns into operations. Both tools provide mature alerting capabilities for their ecosystems, but the operational patterns differ.

CloudWatch

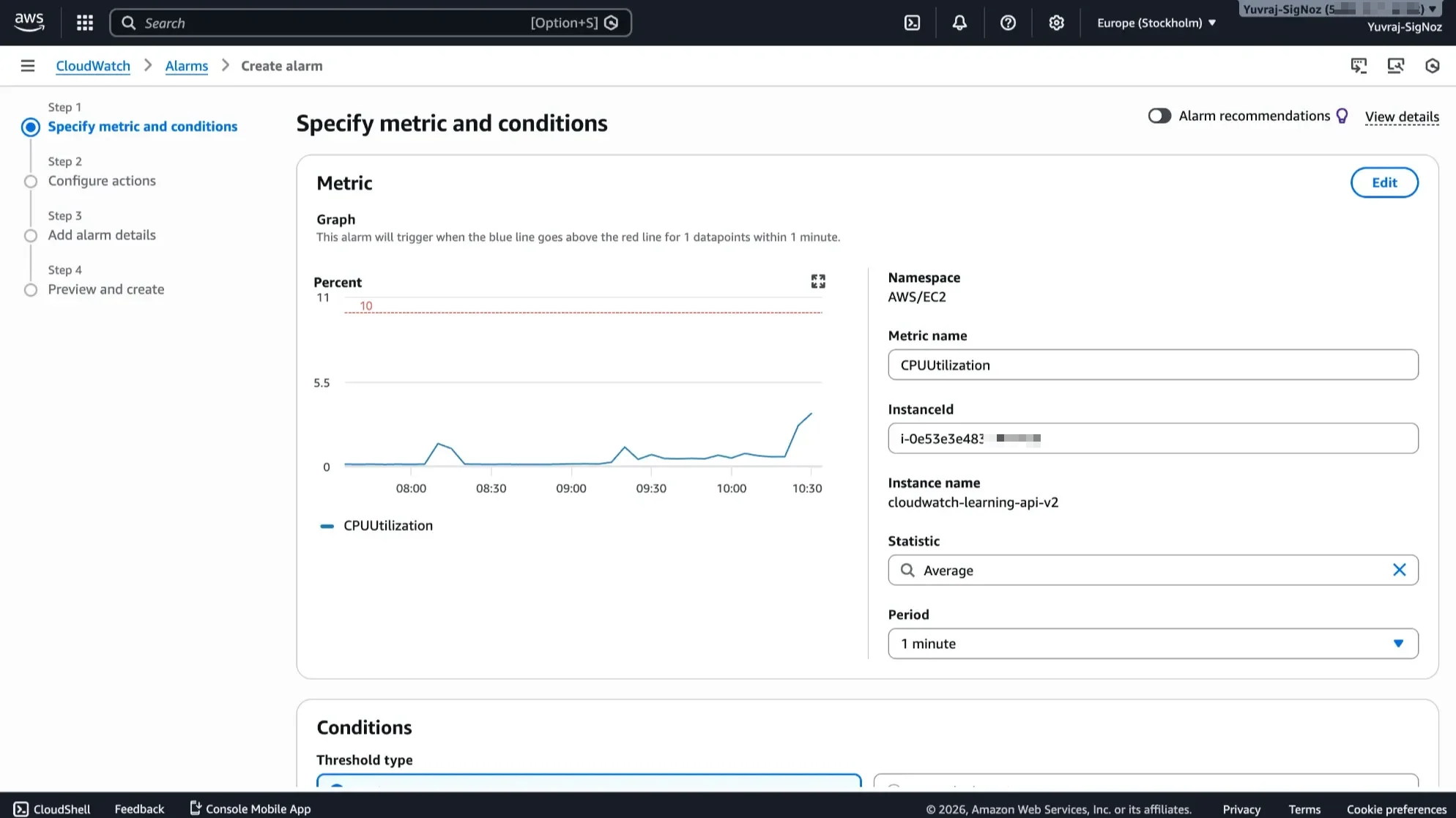

CloudWatch alarms evaluate metric conditions and trigger actions when thresholds are breached.

The alarm types cover common use cases:

- Metric alarms fire when a metric crosses a threshold for a specified number of evaluation periods.

- Composite alarms combine multiple alarm states using boolean logic (AND, OR, NOT), helping reduce noise by requiring multiple conditions before triggering.

- Anomaly detection alarms use machine learning models to set dynamic baselines, so you do not need to manually define thresholds for metrics with variable patterns.
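The first two alarm types can be sketched in a few lines. This is a simplified model, not CloudWatch's actual evaluation logic: it requires every one of the last N data points to breach, and it ignores "M out of N" settings and missing-data handling. The alarm names in the comment are hypothetical.

```python
from typing import Sequence


def metric_alarm_state(datapoints: Sequence[float], threshold: float, evaluation_periods: int) -> str:
    """Return "ALARM" when the last N data points all exceed the threshold."""
    if len(datapoints) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    recent = datapoints[-evaluation_periods:]
    return "ALARM" if all(value > threshold for value in recent) else "OK"


def composite_alarm_state(cpu_state: str, latency_state: str) -> str:
    """Mimic a rule such as: ALARM("high-cpu") AND ALARM("high-latency")."""
    return "ALARM" if cpu_state == "ALARM" and latency_state == "ALARM" else "OK"


cpu = metric_alarm_state([40, 85, 90, 95], threshold=80, evaluation_periods=3)
latency = metric_alarm_state([120, 480, 510], threshold=500, evaluation_periods=2)
page = composite_alarm_state(cpu, latency)
```

Here the CPU alarm fires but latency has only one breaching point in its last two periods, so the composite alarm stays quiet. That is the noise reduction composite alarms are meant to provide.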

Alarm actions integrate directly with AWS services: SNS for notifications, Auto Scaling for capacity adjustments, EC2 actions (stop, terminate, reboot), and Lambda for custom remediation. This makes CloudWatch alarms a natural fit for automated response workflows within AWS.

The operational challenge at scale is alarm hygiene. Teams that create alarms reactively, without regular cleanup, end up with stale or orphaned alarms that add noise and cost. Composite alarms help reduce noise, but they require upfront design work. Community discussions on Reddit frequently mention surprise alarm costs and the difficulty of tracking which alarms are still useful in large environments.

Azure Monitor

Azure Monitor Alerts provides a unified alerting surface across Azure signals, including platform metrics, log query results, activity log events, and Application Insights data.

Alert rules define the condition, and action groups define what happens when the condition is met. Action groups can route notifications through email, SMS, webhook, Azure Functions, Logic Apps, ITSM connectors, and more. This separation between detection (alert rules) and response (action groups) makes it easier to reuse notification routing across different alert types.
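The detection and response split can be pictured as two separate objects, where many rules point at one shared action group. The classes below are a conceptual model only and do not mirror the Azure SDK or ARM schema. All names and receivers are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class ActionGroup:
    name: str
    receivers: Tuple[str, ...]  # e.g. email addresses or webhook labels


@dataclass(frozen=True)
class AlertRule:
    name: str
    signal: str  # "metric", "log", "activity-log", ...
    condition: str
    action_group: ActionGroup


def notifications_for(rule: AlertRule) -> List[str]:
    """List who gets notified when the rule fires."""
    return [f"{receiver} <- {rule.name}" for receiver in rule.action_group.receivers]


on_call = ActionGroup("platform-on-call", ("oncall@example.com", "webhook:pager"))
cpu_rule = AlertRule("vm-high-cpu", "metric", "avg CPU > 90% for 5m", on_call)
error_rule = AlertRule("api-5xx-spike", "log", "5xx count > 50 in 5m", on_call)
```

Changing the receivers on `on_call` would update routing for both rules at once, which is the reuse benefit described above.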

Alert rules inherit Azure RBAC, so you can scope who creates and manages alerts by resource group or subscription. Alert processing rules let you suppress or redirect alerts during maintenance windows or based on specific conditions.

Similar to CloudWatch, the operational challenge is tuning. Broad signal coverage means you can alert on almost anything, but without disciplined rule design and lifecycle management, alert noise grows. Teams running Azure Monitor at scale invest time in alert rule governance, suppression policies, and regular review of alert quality.

OpenTelemetry and Portability

OpenTelemetry (OTel) is the CNCF standard for vendor-neutral telemetry collection, covering traces, metrics, and logs. Both CloudWatch and Azure Monitor support OpenTelemetry, but the portability outcome depends on how deeply your operational workflows depend on each platform's native constructs.

CloudWatch

AWS maintains the AWS Distro for OpenTelemetry (ADOT), an AWS-supported distribution of the OpenTelemetry Collector and SDKs. ADOT lets you instrument applications using standard OTel APIs and route telemetry to CloudWatch, X-Ray, or other backends.

If your instrumentation stays OTel-native (using OTel SDKs and semantic conventions rather than AWS-specific SDK calls), your application code remains portable. You can switch backends by changing the collector configuration without re-instrumenting your services.

The lock-in risk lives in the operations layer. If your team builds runbooks, dashboards, alarm pipelines, and investigation workflows around CloudWatch-specific features (Logs Insights syntax, CloudWatch alarm actions, CloudWatch dashboards), those workflows do not transfer to another platform. The application telemetry may be portable, but the operational muscle memory is not.

Azure Monitor

Azure Monitor offers the Azure Monitor OpenTelemetry Distro, which provides auto-instrumentation and manual instrumentation support for .NET, Java, Node.js, and Python applications. The distro routes OTel telemetry into Application Insights and Log Analytics.

The portability story is similar to CloudWatch. OTel-native instrumentation keeps your application code vendor-neutral. But your KQL queries, Workbook templates, alert rule configurations, and workspace architecture are Azure-specific. If you decide to move to a different backend, you carry over the telemetry pipeline but rebuild the operational layer.

For teams evaluating portability, the practical question is: how much of your total observability investment is in instrumentation versus operations? The more your team invests in platform-specific query patterns, dashboards, and alert workflows, the higher the switching cost, regardless of which cloud you are on.

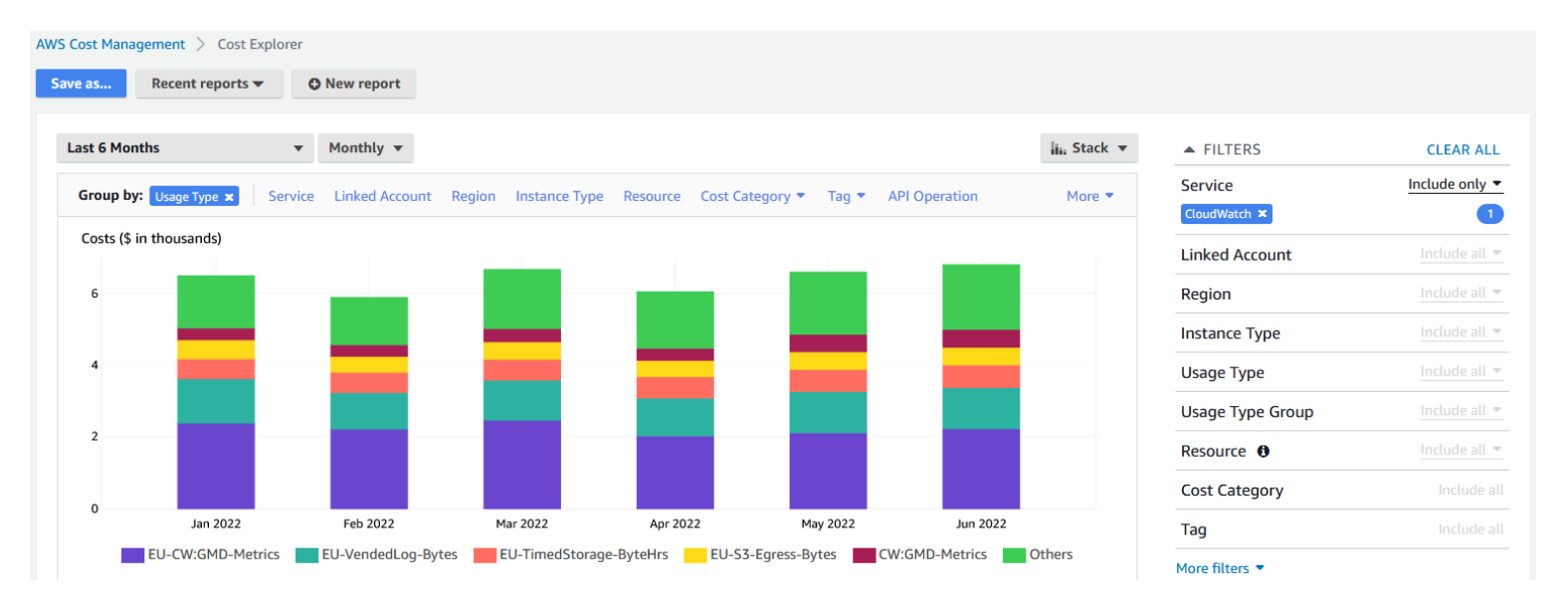

Cost and Governance

Cost predictability is one of the most common pain points in practitioner discussions about both tools. Neither platform has a simple pricing model, and both can produce surprise bills if you do not manage usage proactively.

CloudWatch

CloudWatch pricing is multi-dimensional. You are billed across several independent axes.

The prices below are for US East (N. Virginia) as of February 2026. CloudWatch pricing is region-specific, so rates differ across AWS regions. Always verify against the official AWS CloudWatch pricing page for your region.

- Custom metrics: $0.30 per metric per month (first 10,000), decreasing at higher volumes. Most basic monitoring metrics sent by AWS services by default are free, but detailed monitoring and custom metrics are billed.

- Logs ingestion: Starts at $0.50 per GB after the first 5 GB/month free (rates and tiering vary by log type and region).

- Logs storage: $0.03 per GB-month for CloudWatch Logs storage (pricing can vary by log class and region). Log groups default to a "never expire" retention setting, so stored logs accumulate costs indefinitely unless you set a retention policy.

- Logs Insights queries: $0.005 per GB of data scanned.

- Alarms: $0.10 per standard-resolution metric alarm per month, $0.50 per composite alarm. Anomaly detection alarms cost $0.30 per alarm (standard-resolution) or $0.90 (high-resolution) because each one creates three underlying metrics (the original plus upper and lower bounds).

- Dashboards: $3.00 per dashboard per month beyond the first 3 free.

- API calls: GetMetricData, PutMetricData, and other API calls have per-request pricing.
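To see how these axes add up, here is a rough estimator that uses only the first-tier US East rates quoted in the list above. It is a sketch under stated assumptions: it ignores API call charges, volume discounts above 10,000 custom metrics, and any free-tier allowances not mentioned in the list. The function name and the example workload are hypothetical.

```python
def estimate_monthly_cost(custom_metrics=0, ingested_gb=0.0, stored_gb=0.0,
                          scanned_gb=0.0, standard_alarms=0, composite_alarms=0,
                          anomaly_alarms=0, dashboards=0) -> dict:
    """Return a per-axis cost breakdown in USD using the rates listed above."""
    if custom_metrics > 10_000:
        raise ValueError("rates above the first 10,000 metrics are not modeled")
    breakdown = {
        "custom_metrics": custom_metrics * 0.30,
        "log_ingestion": max(0.0, ingested_gb - 5) * 0.50,  # first 5 GB free
        "log_storage": stored_gb * 0.03,
        "insights_queries": scanned_gb * 0.005,
        "standard_alarms": standard_alarms * 0.10,
        "composite_alarms": composite_alarms * 0.50,
        "anomaly_alarms": anomaly_alarms * 0.30,  # standard resolution
        "dashboards": max(0, dashboards - 3) * 3.00,  # first 3 free
    }
    breakdown = {axis: round(cost, 2) for axis, cost in breakdown.items()}
    breakdown["total"] = round(sum(breakdown.values()), 2)
    return breakdown


estimate = estimate_monthly_cost(
    custom_metrics=200, ingested_gb=105, stored_gb=500, scanned_gb=2000,
    standard_alarms=50, composite_alarms=4, anomaly_alarms=10, dashboards=5,
)
```

For this example workload, custom metrics and log ingestion dominate the total, while alarms and dashboards are comparatively small. Your own ratio will differ, so treat the output as a starting point for a region-specific estimate.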

One thing to watch: enabling advanced features like Container Insights or CloudWatch Synthetics generates charges across multiple buckets simultaneously (logs, metrics, Lambda invocations, S3 storage). Estimating the cost of a feature without accounting for its downstream impact can materially undercount the real spend. For a detailed breakdown of every pricing axis and how they compound, see the complete CloudWatch pricing guide.

The common cost surprises at scale come from three areas. First, log groups without retention policies accumulate storage costs indefinitely. Second, high-cardinality custom metrics (many unique dimension combinations) multiply metric counts faster than expected. Third, heavy Logs Insights querying during incidents can scan large volumes and spike query costs.
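The cardinality effect is simple multiplication. The sketch below computes a worst-case metric count, assuming every combination of dimension values is actually published, and prices it at the $0.30 first-tier rate quoted earlier. The dimension names and counts are hypothetical.

```python
from math import prod
from typing import Mapping


def worst_case_metric_count(dimension_cardinalities: Mapping[str, int]) -> int:
    """Each unique combination of dimension values counts as a separate metric."""
    return prod(dimension_cardinalities.values())


base = {"service": 20, "endpoint": 15, "status_code": 5}
with_region = {**base, "region": 4}

base_count = worst_case_metric_count(base)           # 20 * 15 * 5
region_count = worst_case_metric_count(with_region)  # ... * 4
base_cost = round(base_count * 0.30, 2)
region_cost = round(region_count * 0.30, 2)
```

Adding a single four-value dimension quadruples the worst-case bill, which is why dimension limits belong in code review rather than in a post-invoice cleanup.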

Governance practices that help: enforce retention policies on every log group, control metric cardinality through naming conventions and dimension limits, audit alarms regularly for staleness, and monitor CloudWatch spend as its own cost category in AWS Cost Explorer. For a practical walkthrough of cost-reduction strategies, see CloudWatch cost optimization techniques.
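A retention audit can start from the output of `describe_log_groups`. The sketch below works on data shaped like that response and flags log groups with no `retentionInDays` value, which is how a "never expire" group typically appears. The sample data is made up, and the function does not call AWS.

```python
from typing import Iterable, List, Mapping


def log_groups_without_retention(log_groups: Iterable[Mapping]) -> List[str]:
    """Return the names of log groups that have no retention policy set."""
    return [
        group["logGroupName"]
        for group in log_groups
        if group.get("retentionInDays") is None
    ]


# Hypothetical data in the shape of a describe_log_groups response.
sample_response = {
    "logGroups": [
        {"logGroupName": "/aws/lambda/checkout", "retentionInDays": 30},
        {"logGroupName": "/aws/lambda/legacy-report"},
        {"logGroupName": "/ecs/payments", "retentionInDays": 90},
    ]
}

unbounded = log_groups_without_retention(sample_response["logGroups"])
```

In a real script you would page through all log groups and then apply a policy to each flagged name, for example with boto3's `put_retention_policy`.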

Azure Monitor

Azure Monitor pricing centers on Log Analytics workspace usage, with additional costs for alerting and other platform features.

Like CloudWatch, Azure Monitor pricing is region-specific. The prices below are approximate pay-as-you-go rates as of February 2026. Always verify against the official Azure Monitor pricing page and use the region filter for your workspace location.

The major cost components are:

- Log ingestion (Analytics Logs): Billed per GB ingested, with the first 5 GB per billing account per month free. Per-GB rates vary by region, and commitment tiers (starting at 100 GB/day) lower the effective rate.

- Log retention: Analytics Logs include 31 days of retention by default (up to 90 days included when Microsoft Sentinel is enabled, depending on plan and tier). Beyond that, interactive retention (up to 2 years) and long-term retention (up to 12 years) are both billed per GB-month at rates that vary by region. Check the Azure pricing calculator for current rates in your workspace region.

- Log queries: Query cost is included for Analytics Logs. Queries on Basic and Auxiliary Logs are billed per GB scanned, and querying older or archived data can involve additional restore and search-job charges.

- Alerts: Metric alert rules are priced per monitored time-series. Log search alert rules are priced per evaluation frequency.

- Data export: Exporting data from Log Analytics to Storage or Event Hub has per-GB costs.

Workspace architecture directly affects cost. A single centralized workspace simplifies querying but can concentrate ingestion costs. Multiple workspaces give you finer cost allocation but add query complexity when you need cross-workspace analysis. DCRs help by letting you control what data gets ingested, filtering out low-value logs before they reach the workspace.

Teams running Azure Monitor at scale should baseline expected log volume and query patterns before large rollouts. Costs can vary significantly depending on how much data you ingest, how long you retain it, and how frequently you query it.
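A baseline can be as simple as the arithmetic below. It assumes steady daily ingestion, the 5 GB monthly free allowance and 31 days of included retention mentioned above, and pay-as-you-go billing without commitment tiers. The per-GB rates in the example are placeholders, not real Azure prices; substitute the rates for your workspace region.

```python
def estimate_log_analytics_cost(daily_ingest_gb: float, retention_days: int,
                                ingest_rate_per_gb: float,
                                retention_rate_per_gb_month: float,
                                days_in_month: int = 30) -> dict:
    """Rough monthly estimate for Analytics Logs ingestion and extra retention."""
    ingested_gb = daily_ingest_gb * days_in_month
    billable_gb = max(0.0, ingested_gb - 5)  # first 5 GB per month free
    extra_days = max(0, retention_days - 31)  # 31 days included by default
    # At steady state, this much data sits beyond the included window.
    retained_gb = daily_ingest_gb * extra_days
    ingestion = round(billable_gb * ingest_rate_per_gb, 2)
    retention = round(retained_gb * retention_rate_per_gb_month, 2)
    return {"ingestion": ingestion, "retention": retention,
            "total": round(ingestion + retention, 2)}


# Placeholder rates for illustration only; look up your region's actual prices.
baseline = estimate_log_analytics_cost(
    daily_ingest_gb=20, retention_days=90,
    ingest_rate_per_gb=2.50, retention_rate_per_gb_month=0.10,
)
```

Running this with a few ingestion volumes before a rollout shows quickly whether ingestion or retention is the larger lever for your workload.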

CloudWatch vs Azure Monitor at a Glance

| Aspect | AWS CloudWatch | Azure Monitor |
|---|---|---|
| Best fit | AWS-first environments | Azure-first environments |
| Metrics | Auto-published for AWS services, custom via API/agent. Tiered retention (15d/63d/455d by granularity). | Platform metrics auto-collected, 93-day retention. Custom metrics via ingestion API or OTel. |
| Logs | CloudWatch Logs with log groups/streams. Logs Insights for querying. | Log Analytics workspaces with KQL. DCRs for ingestion control. |
| APM/Traces | Application Signals + X-Ray. Requires ADOT or AWS SDK instrumentation. | Application Insights. Supports Azure Monitor OTel Distro or classic SDKs. |
| Query language | Logs Insights (custom syntax) + Metrics Insights (SQL-like) | KQL (Kusto Query Language), cross-workspace capable |
| Alerting | Metric alarms, composite alarms, anomaly detection. Actions via SNS/Lambda/Auto Scaling. | Alert rules on metrics + logs. Action groups with email/webhook/Functions/Logic Apps routing. |
| OpenTelemetry | AWS Distro for OpenTelemetry (ADOT) | Azure Monitor OpenTelemetry Distro (.NET, Java, Node.js, Python) |
| Cost model | Multi-axis: metrics, logs ingest/store, queries, alarms, dashboards, API calls | Centered on Log Analytics ingestion/retention, with alerting and export costs |
| Cross-account/cross-resource | Cross-account observability for AWS Organizations | Cross-workspace and cross-subscription KQL queries (cross-tenant via Azure Lighthouse) |
| Governance | IAM-based access, per-resource alarm management | Azure RBAC, DCR-based collection governance, alert processing rules |

Choose CloudWatch if your primary workloads run on AWS, your team is already familiar with AWS operational patterns, and you need tight integration with AWS automation services (Auto Scaling, Lambda, EventBridge).

Choose Azure Monitor if your primary workloads run on Azure, your team is comfortable with KQL or willing to invest in learning it, and you need native governance alignment with Azure RBAC and subscription structures.

If your environment is genuinely multi-cloud, standardize your application instrumentation on OpenTelemetry. Keep native cloud monitoring for infrastructure-level signals where it is strongest, and evaluate whether a unified observability layer can reduce the context switching and operational duplication of running two parallel monitoring stacks.

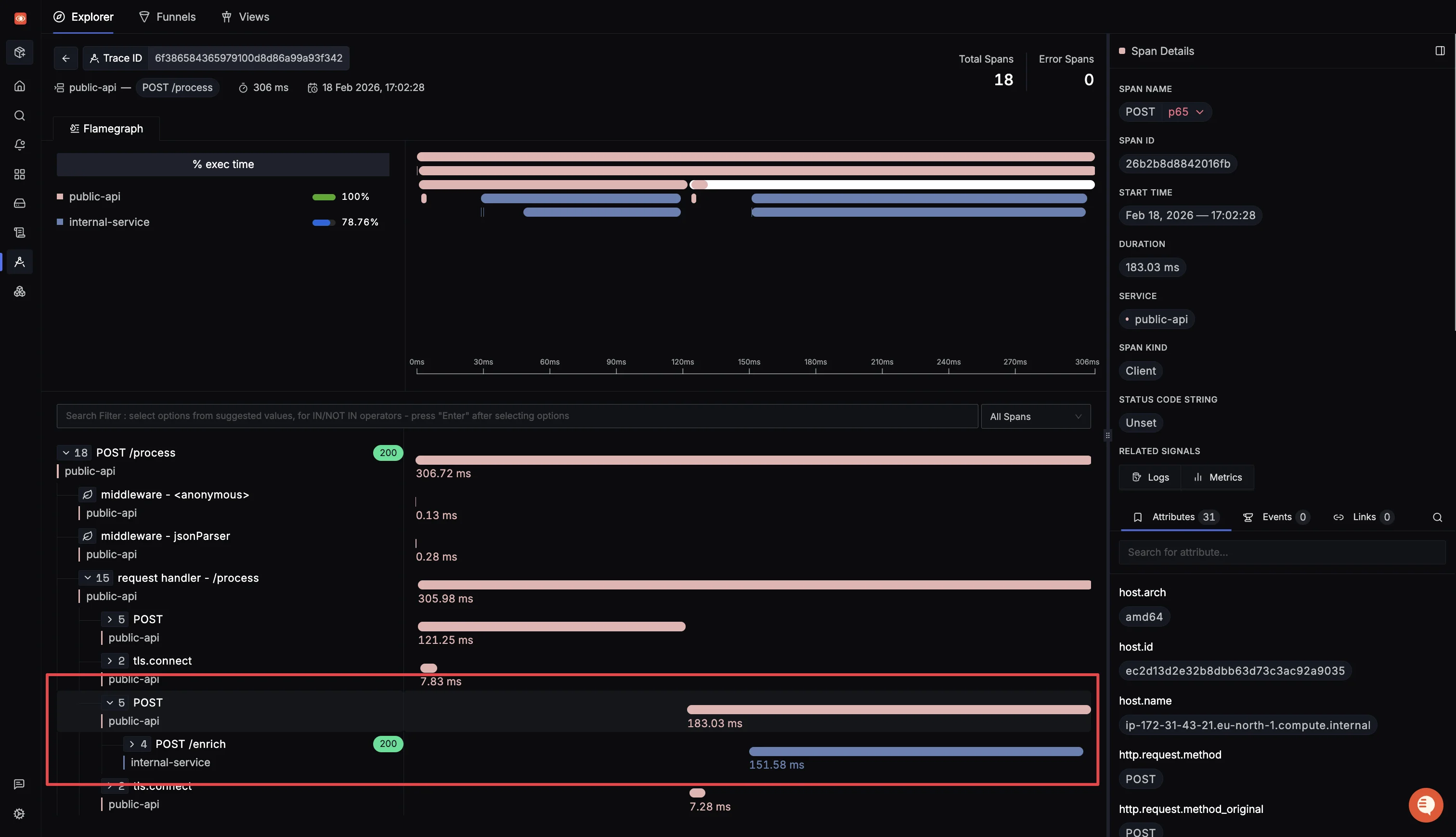

Where SigNoz Fits (If Native Tooling Becomes a Bottleneck)

If your team is AWS-first or Azure-first, native monitoring is usually the fastest starting point. But as environments grow, teams often hit friction points that native tools were not designed to solve.

The three patterns that commonly push teams toward an additional observability layer:

- Investigation context switching. During incidents, jumping between CloudWatch Logs Insights, Metrics Explorer, and X-Ray (or between Azure Monitor Logs, Metrics Explorer, and Application Insights) slows down root-cause analysis. A unified platform that correlates metrics, logs, and traces in a single view reduces the clicks between discovery and resolution.

- Portability needs. If you operate across AWS and Azure (or plan to), maintaining parallel monitoring stacks with different query languages, alert configurations, and dashboards doubles your operational overhead. An OpenTelemetry-native backend lets you standardize instrumentation and route telemetry from any cloud to one place.

- Cost-governance predictability. Both CloudWatch and Azure Monitor have multi-dimensional pricing that can produce surprise bills at scale. Teams looking for simpler, usage-based pricing often evaluate alternatives that charge per GB of ingested data without separate axes for metrics, alarms, dashboards, and API calls.

SigNoz is an OpenTelemetry-native observability platform that unifies metrics, traces, and logs in a single application. It supports both cloud and self-hosted deployments.

For AWS-first teams, SigNoz provides one-click AWS integrations to collect CloudWatch metrics and logs. You can also use manual OpenTelemetry collection paths for finer control over what gets forwarded. For Azure-first teams, SigNoz supports Azure monitoring workflows including centralized ingestion through Event Hub and OTel collectors.

One important caveat: cloud-native forwarding paths (like one-click integrations) still incur provider-side charges for CloudWatch API calls, log delivery, and similar usage. Teams that optimize heavily for cost control usually evaluate the manual collection approach alongside one-click setup.

Get Started with SigNoz

You can choose between various deployment options in SigNoz. The easiest way to get started with SigNoz is SigNoz cloud. We offer a 30-day free trial account with access to all features.

Teams with data privacy concerns that cannot send data outside their infrastructure can sign up for either the enterprise self-hosted or the BYOC offering.

Those who have the expertise to manage SigNoz themselves or just want to start with a free self-hosted option can use our community edition.

Conclusion

The choice between CloudWatch and Azure Monitor is driven primarily by your cloud footprint. If your workloads are on AWS, CloudWatch gives you the fastest path to baseline visibility with the deepest native integration. If your workloads are on Azure, Azure Monitor delivers the same advantage within the Azure ecosystem, with added strength in KQL-based analysis and governance alignment.

Where both tools require careful attention is cost governance and operational hygiene. Both can produce surprise bills without proactive retention policies, cardinality management, and alert lifecycle reviews. And both introduce switching costs through platform-specific queries, dashboards, and automation workflows, even when your application instrumentation uses OpenTelemetry.

If you are evaluating both tools, run a time-boxed pilot (2-4 weeks) against real workloads. Measure three things: how quickly your team can go from alert to root cause, how predictable the costs are at your expected log and metric volume, and how much operational overhead the tool adds to your incident response process. Those three signals will tell you more than any feature comparison matrix.

We hope this answered your questions about AWS CloudWatch vs Azure Monitor. If you have more, feel free to use the SigNoz AI chatbot or join our Slack community.

You can also subscribe to our newsletter for insights from observability nerds at SigNoz, and get open source, OpenTelemetry, and devtool-building stories straight to your inbox.