This guide shows you how to instrument your Go application with OpenTelemetry to send metrics to SigNoz. You will learn how to collect runtime metrics, auto-instrument libraries, and create custom metrics.

Prerequisites

- Go 1.21 or later

- A SigNoz Cloud account or self-hosted SigNoz instance

- Your application code

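Send metrics to SigNoz

Step 1 is shown for four environments: a Linux/macOS shell, Kubernetes, Windows PowerShell, and Docker. Follow only the variant that matches how you run your application.

Step 1. Set environment variables (Linux/macOS)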

Set the following environment variables to configure the OpenTelemetry exporter:

```bash
export OTEL_EXPORTER_OTLP_METRICS_ENDPOINT="https://ingest.<region>.signoz.cloud:443"
export OTEL_EXPORTER_OTLP_METRICS_HEADERS="signoz-ingestion-key=<your-ingestion-key>"
export OTEL_RESOURCE_ATTRIBUTES="service.name=<service-name>"
```

Replace the placeholders:

- `<region>`: Your SigNoz Cloud region
- `<your-ingestion-key>`: Your SigNoz ingestion key
- `<service-name>`: A descriptive name for your service (e.g., `payment-service`)

Step 1. Set environment variables (Kubernetes)

Add these environment variables to your deployment manifest:

```yaml
env:
  - name: OTEL_EXPORTER_OTLP_METRICS_ENDPOINT
    value: 'https://ingest.<region>.signoz.cloud:443'
  - name: OTEL_EXPORTER_OTLP_METRICS_HEADERS
    value: 'signoz-ingestion-key=<your-ingestion-key>'
  - name: OTEL_RESOURCE_ATTRIBUTES
    value: 'service.name=<service-name>'
```

Replace the placeholders:

- `<region>`: Your SigNoz Cloud region
- `<your-ingestion-key>`: Your SigNoz ingestion key
- `<service-name>`: A descriptive name for your service (e.g., `payment-service`)

Step 1. Set environment variables (PowerShell)

```powershell
$env:OTEL_EXPORTER_OTLP_METRICS_ENDPOINT = "https://ingest.<region>.signoz.cloud:443"
$env:OTEL_EXPORTER_OTLP_METRICS_HEADERS = "signoz-ingestion-key=<your-ingestion-key>"
$env:OTEL_RESOURCE_ATTRIBUTES = "service.name=<service-name>"
```

Replace the placeholders:

- `<region>`: Your SigNoz Cloud region
- `<your-ingestion-key>`: Your SigNoz ingestion key
- `<service-name>`: A descriptive name for your service

Step 1. Set environment variables in Dockerfile

Add environment variables to your Dockerfile:

```dockerfile
# ... build stages ...

# Set OpenTelemetry environment variables
ENV OTEL_EXPORTER_OTLP_METRICS_ENDPOINT="https://ingest.<region>.signoz.cloud:443"
ENV OTEL_EXPORTER_OTLP_METRICS_HEADERS="signoz-ingestion-key=<your-ingestion-key>"
ENV OTEL_RESOURCE_ATTRIBUTES="service.name=<service-name>"

CMD ["./main"]
```

Or pass them at runtime using docker run:

```bash
docker run -e OTEL_EXPORTER_OTLP_METRICS_ENDPOINT="https://ingest.<region>.signoz.cloud:443" \
  -e OTEL_EXPORTER_OTLP_METRICS_HEADERS="signoz-ingestion-key=<your-ingestion-key>" \
  -e OTEL_RESOURCE_ATTRIBUTES="service.name=<service-name>" \
  your-image:latest
```

Replace the placeholders:

- `<region>`: Your SigNoz Cloud region
- `<your-ingestion-key>`: Your SigNoz ingestion key
- `<service-name>`: A descriptive name for your service (e.g., `payment-service`)

Step 2. Install OpenTelemetry packages

Run the following command in your project directory to install the necessary packages for metrics and OTLP export:

```bash
go get \
  go.opentelemetry.io/otel \
  go.opentelemetry.io/otel/attribute \
  go.opentelemetry.io/otel/metric \
  go.opentelemetry.io/otel/sdk/metric \
  go.opentelemetry.io/otel/sdk/resource \
  go.opentelemetry.io/otel/exporters/otlp/otlpmetric/otlpmetricgrpc
```

Step 3. Initialize the Meter Provider

Create a helper function to configure the OpenTelemetry Meter Provider. This provider is responsible for creating meters and exporting metrics. It automatically uses the environment variables configured in Step 1.

```go
package main

import (
	"context"
	"fmt"
	"time"

	"go.opentelemetry.io/otel"
	"go.opentelemetry.io/otel/exporters/otlp/otlpmetric/otlpmetricgrpc"
	"go.opentelemetry.io/otel/sdk/metric"
	"go.opentelemetry.io/otel/sdk/resource"
)

func initMeterProvider(ctx context.Context) (func(context.Context) error, error) {
	// Create the OTLP exporter.
	// It automatically uses OTEL_EXPORTER_OTLP_METRICS_ENDPOINT and
	// OTEL_EXPORTER_OTLP_METRICS_HEADERS from the environment.
	exporter, err := otlpmetricgrpc.New(ctx)
	if err != nil {
		return nil, fmt.Errorf("new otlp metric grpc exporter failed: %w", err)
	}

	// Create the resource with attributes from the environment (OTEL_RESOURCE_ATTRIBUTES)
	// plus host, process, and OS attributes
	res, err := resource.New(ctx,
		resource.WithFromEnv(),
		resource.WithHost(),
		resource.WithProcess(),
		resource.WithOS(),
	)
	if err != nil {
		return nil, fmt.Errorf("new resource failed: %w", err)
	}

	// Create the MeterProvider with the exporter and resource.
	// A periodic reader exports metrics every 10 seconds.
	mp := metric.NewMeterProvider(
		metric.WithResource(res),
		metric.WithReader(metric.NewPeriodicReader(exporter, metric.WithInterval(10*time.Second))),
	)

	// Set the global MeterProvider
	otel.SetMeterProvider(mp)

	return mp.Shutdown, nil
}
```

Step 4. Instrument your application

Here's a complete example that tracks HTTP requests with a counter metric. It calls `initMeterProvider` from Step 3, so keep both files in the same `main` package:

```go
package main

import (
	"context"
	"log"
	"net/http"
	"time"

	"go.opentelemetry.io/otel"
	"go.opentelemetry.io/otel/attribute"
	"go.opentelemetry.io/otel/metric"
)

func main() {
	ctx := context.Background()

	// Initialize Meter Provider
	shutdown, err := initMeterProvider(ctx)
	if err != nil {
		log.Fatalf("Failed to initialize meter provider: %v", err)
	}
	defer func() {
		if err := shutdown(context.Background()); err != nil {
			log.Printf("Error shutting down meter provider: %v", err)
		}
	}()

	// Get a meter from the global provider
	meter := otel.Meter("my-app-meter")

	// Define a counter metric
	reqCounter, err := meter.Int64Counter(
		"http.requests",
		metric.WithDescription("Total request count"),
	)
	if err != nil {
		log.Fatal(err)
	}

	// Handler with Instrumentation
	http.HandleFunc("/hello", func(w http.ResponseWriter, r *http.Request) {
		start := time.Now()

		// Simulate work
		time.Sleep(50 * time.Millisecond)
		w.Write([]byte("ok"))

		// Record Count, using the request's context
		reqCounter.Add(r.Context(), 1, metric.WithAttributes(
			attribute.String("method", r.Method),
			attribute.String("route", "/hello"),
			attribute.Int("status", 200),
		))

		log.Printf("Request completed in %v", time.Since(start))
	})

	log.Println("Server listening on :8080...")
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```

Library instrumentation

You can use OpenTelemetry's auto-instrumentation packages and middleware for popular Go libraries to automatically collect metrics, or create custom metrics manually.

Go runtime metrics

The runtime package provides auto-instrumentation for Go runtime metrics such as memory usage, goroutine count, and garbage collection (GC) statistics.

Install

```bash
go get go.opentelemetry.io/contrib/instrumentation/runtime
```

Usage

```go
package main

import (
	"context"
	"log"
	"os"
	"os/signal"
	"syscall"

	"go.opentelemetry.io/contrib/instrumentation/runtime"
	"go.opentelemetry.io/otel"
)

func main() {
	ctx := context.Background()

	// Initialize Meter Provider (uses env vars from Step 1)
	shutdown, err := initMeterProvider(ctx)
	if err != nil {
		log.Fatalf("Failed to initialize meter provider: %v", err)
	}
	defer func() {
		if err := shutdown(context.Background()); err != nil {
			log.Printf("Error shutting down meter provider: %v", err)
		}
	}()

	// Auto Go runtime metrics (go.memory.used, go.goroutine.count, ...)
	if err := runtime.Start(runtime.WithMeterProvider(otel.GetMeterProvider())); err != nil {
		log.Fatal(err)
	}

	log.Println("Runtime metrics collection started...")

	// Block until Ctrl+C or SIGTERM so the deferred shutdown runs and
	// flushes the last batch of metrics before the process exits.
	sigCtx, stop := signal.NotifyContext(ctx, os.Interrupt, syscall.SIGTERM)
	defer stop()
	<-sigCtx.Done()
}
```

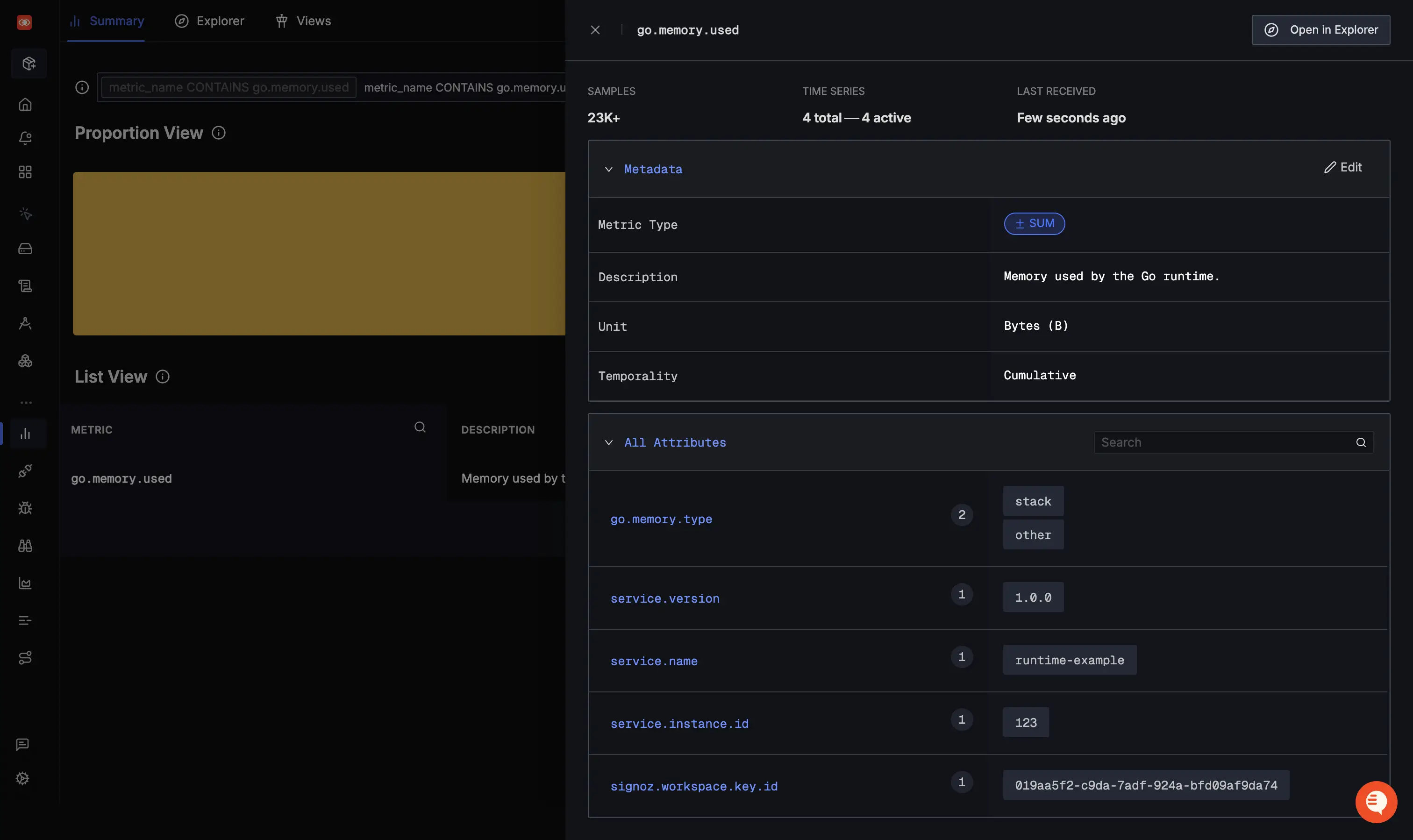

You can also use the pre-configured SigNoz dashboard to monitor Go runtime metrics.

Exported Metrics

The runtime package automatically exports the following Go runtime metrics:

Memory Metrics:

- `go.memory.used` (UpDownCounter) - Memory used by the Go runtime
- `go.memory.limit` (UpDownCounter) - Go runtime memory limit configured by the user, if a limit exists
- `go.memory.allocated` (Counter) - Memory allocated to the heap by the application
- `go.memory.allocations` (Counter) - Count of allocations to the heap by the application
- `go.memory.gc.goal` (UpDownCounter) - Heap size target for the end of the GC cycle

Concurrency Metrics:

- `go.goroutine.count` (UpDownCounter) - Count of live goroutines
- `go.processor.limit` (UpDownCounter) - The number of OS threads that can execute user-level Go code simultaneously

Configuration Metrics:

- `go.config.gogc` (UpDownCounter) - Heap size target percentage configured by the user (default: 100)

Scheduling Metrics:

- `go.schedule.duration` (Histogram) - Time goroutines spent in the scheduler in a runnable state before running

Metric names and types follow the OpenTelemetry semantic conventions for Go runtime metrics. For the complete specification, see the Go runtime semantic conventions.

HTTP server metrics (otelhttp)

The otelhttp package provides middleware that automatically collects metrics for HTTP requests, such as request count, duration, and size.

Install

```bash
go get go.opentelemetry.io/contrib/instrumentation/net/http/otelhttp
```

Usage

```go
package main

import (
	"context"
	"log"
	"net/http"

	"go.opentelemetry.io/contrib/instrumentation/net/http/otelhttp"
)

func main() {
	ctx := context.Background()

	// Initialize Meter Provider (uses env vars from Step 1)
	shutdown, err := initMeterProvider(ctx)
	if err != nil {
		log.Fatalf("Failed to initialize meter provider: %v", err)
	}
	defer func() {
		if err := shutdown(context.Background()); err != nil {
			log.Printf("Error shutting down meter provider: %v", err)
		}
	}()

	hello := http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.Write([]byte("hi"))
	})

	// Auto HTTP server metrics via otelhttp middleware
	h := otelhttp.NewHandler(hello, "hello-handler")

	log.Println("Server listening on :8080...")
	log.Fatal(http.ListenAndServe(":8080", h))
}
```

Exported Metrics

The otelhttp package automatically exports the following HTTP server metrics:

- `http.server.request.duration` - Duration of HTTP server requests (histogram)
- `http.server.active_requests` - Number of active HTTP server requests (up/down counter)
- `http.server.request.body.size` - Size of HTTP server request bodies (histogram)
- `http.server.response.body.size` - Size of HTTP server response bodies (histogram)

Metrics are tagged with attributes such as:

- `http.request.method` - HTTP request method (GET, POST, etc.)
- `http.response.status_code` - HTTP response status code
- `http.route` - The matched route pattern
- `url.scheme` - The URI scheme (http, https)

Metric names and attributes follow the OpenTelemetry semantic conventions. For the complete list and latest updates, see the HTTP semantic conventions.

Custom metrics

Create custom metrics to track business logic. OpenTelemetry offers four metric types: Counter, UpDownCounter, Histogram, and Gauge.

Metric Types

- Counter: A value that only goes up (e.g., total requests)

- UpDownCounter: A value that can go up or down (e.g., queue size)

- Histogram: A distribution of values (e.g., request duration)

- Gauge: A current value (e.g., temperature)

Usage

```go
package main

import (
	"context"
	"log"
	"net/http"
	"time"

	"go.opentelemetry.io/otel"
	"go.opentelemetry.io/otel/attribute"
	"go.opentelemetry.io/otel/metric"
)

func main() {
	ctx := context.Background()

	// Initialize Meter Provider (uses env vars from Step 1)
	shutdown, err := initMeterProvider(ctx)
	if err != nil {
		log.Fatalf("Failed to initialize meter provider: %v", err)
	}
	defer func() {
		if err := shutdown(context.Background()); err != nil {
			log.Printf("Error shutting down meter provider: %v", err)
		}
	}()

	// Get a meter from the global provider
	meter := otel.Meter("manual-http")

	// Define the instruments (Counter, Histogram, UpDownCounter)
	reqCounter, err := meter.Int64Counter("http.requests",
		metric.WithDescription("Total request count"))
	if err != nil {
		log.Fatal(err)
	}
	reqDuration, err := meter.Float64Histogram("http.duration_ms",
		metric.WithDescription("Request latency"), metric.WithUnit("ms"))
	if err != nil {
		log.Fatal(err)
	}
	activeReqs, err := meter.Int64UpDownCounter("http.active_requests",
		metric.WithDescription("Requests currently in flight"))
	if err != nil {
		log.Fatal(err)
	}

	// Handler with Instrumentation
	http.HandleFunc("/hello", func(w http.ResponseWriter, r *http.Request) {
		start := time.Now()
		reqCtx := r.Context()

		// Track Active Requests (UpDownCounter)
		activeReqs.Add(reqCtx, 1)
		defer activeReqs.Add(reqCtx, -1)

		// Simulate work
		time.Sleep(50 * time.Millisecond)
		w.Write([]byte("ok"))

		// Record Histogram in milliseconds, keeping sub-millisecond precision
		reqDuration.Record(reqCtx, float64(time.Since(start))/float64(time.Millisecond))

		// Record Counter
		reqCounter.Add(reqCtx, 1, metric.WithAttributes(
			attribute.String("method", r.Method),
			attribute.String("route", "/hello"),
			attribute.Int("status", 200),
		))
	})

	log.Println("Server listening on :8080...")
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```

Exported Metrics

The custom instrumentation example above exports the following metrics:

- `http.requests` (Counter) - Total count of HTTP requests, with attributes for method, route, and status code
- `http.duration_ms` (Histogram) - Distribution of HTTP request latencies in milliseconds
- `http.active_requests` (UpDownCounter) - Current number of requests being processed

You can create additional metrics based on your application's needs by using the meter to create counters, histograms, up/down counters, and gauges to track business metrics, performance indicators, or any other relevant data.

Validate

Once your application is sending metrics to SigNoz, open the Metrics Explorer to find and visualize them. Because the periodic reader in Step 3 exports every 10 seconds, allow at least one export interval before expecting new data.

Troubleshooting

Metrics not appearing?

- Check environment variables: Ensure `OTEL_EXPORTER_OTLP_METRICS_ENDPOINT` is set correctly:
  - For gRPC (default in this guide): `https://ingest.<region>.signoz.cloud:443`
  - For HTTP (if using `otlpmetrichttp`): `https://ingest.<region>.signoz.cloud:443/v1/metrics`
- Check the exporter protocol: This guide uses `otlpmetricgrpc`. If you are behind a proxy that only supports HTTP/1.1, switch to `otlpmetrichttp`.
- Check console errors: The OpenTelemetry SDK prints errors to stderr by default. Check your application logs for connection-refused or authentication errors.
- Check resource attributes: Ensure `service.name` is set. This lets you filter metrics by service in SigNoz.

Authentication errors

If you see errors like "Unauthorized" or "403 Forbidden":

- Verify that the ingestion key in `OTEL_EXPORTER_OTLP_METRICS_HEADERS` is correct.
- Ensure the header format is exactly `signoz-ingestion-key=<your-key>` (no extra spaces).
- Check that your ingestion key is active in the SigNoz Cloud dashboard.

"Connection Refused" errors

- If running locally and sending to SigNoz Cloud, check your internet connection and firewall.

- If sending to a self-hosted collector, ensure the collector is running and listening on port 4317 (gRPC) or 4318 (HTTP).

Set up the OpenTelemetry Collector (Optional)

What is the OpenTelemetry Collector?

Think of the OTel Collector as a middleman between your app and SigNoz. Instead of your application sending data directly to SigNoz, it sends everything to the Collector first, which then forwards it along.

Why use it?

- Cleaning up data — Filter out noisy traces you don't care about, or remove sensitive info before it leaves your servers.

- Keeping your app lightweight — Let the Collector handle batching, retries, and compression instead of your application code.

- Adding context automatically — The Collector can tag your data with useful info like which Kubernetes pod or cloud region it came from.

- Future flexibility — Want to send data to multiple backends later? The Collector makes that easy without changing your app.

See Switch from direct export to Collector for step-by-step instructions to convert your setup.

For more details, see Why use the OpenTelemetry Collector? and the Collector configuration guide.

Next Steps

- Create Dashboards to visualize your metrics.

- Set up Alerts on your metrics.

- Instrument your Go application with traces to correlate metrics with traces for better observability.