Observability Engineering - A Practical Guide for Modern DevOps

Observability engineering has become a critical practice in modern software development and operations. As systems grow more complex and distributed, traditional monitoring approaches fall short. Observability engineering provides the tools and techniques to gain deep insights into system behavior, enabling teams to quickly identify and resolve issues. This guide explores the principles, tools, and best practices of observability engineering, helping you improve system reliability and performance in your DevOps workflows.

What is Observability Engineering?

Observability engineering is the practice of designing and implementing systems so that you can understand their internal state from their external outputs. It covers discovering issues within a service, understanding their nature, and working out the best way to resolve them.

As organizations transition from legacy systems to complex cloud-native setups, traditional monitoring methods often fall short. They can only handle known unknowns, like tracking predefined metrics such as application throughput or error rates, and they don’t adapt well to the complexities of microservices and distributed systems.

Observability doesn’t replace monitoring; it just means monitoring is one piece of the puzzle. Think of it like this: Observability is your overall approach to understanding your complex system, whereas monitoring is one of the actions you take to achieve that understanding.

Pillars of Observability

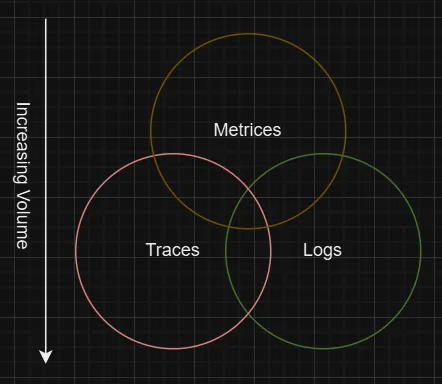

The three pillars of observability are:

- Logs: Detailed records of events within your system.

- Metrics: Quantitative measurements of system performance and behavior.

- Traces: End-to-end records of requests as they flow through your system.

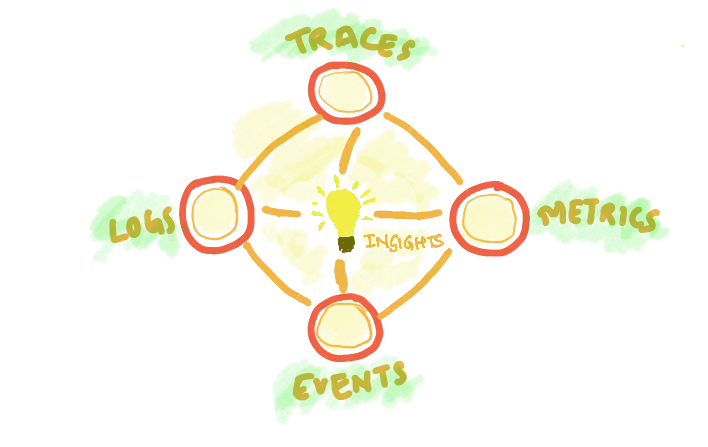

Redefining Observability

At SigNoz, we envision observability as a mesh or network rather than a set of pillars since these signals alone don’t constitute observability, nor does simply analyzing them mean you’re truly practicing observability. Instead, you need to integrate these signals into a broader strategy that includes continuous profiling, business metrics, CI/CD pipeline performance, and customer feedback. Only by combining these elements can you effectively execute a comprehensive observability practice.

Why Observability Engineering Matters for DevOps

Observability engineering is crucial for modern DevOps practices for several reasons:

- Improved incident response: Observability tools provide real-time insights, reducing Mean Time to Resolution (MTTR) for incidents.

- Enhanced system reliability: By understanding system behavior, you can proactively address potential issues before they impact users. In today's complex, distributed systems, that behavior is hard to grasp without the right tools. Observability simplifies the process, making it easier to maintain and improve system reliability.

- Better collaboration: Observability tools offer a shared set of data and insights that different teams in an organization can use to understand how the system behaves. It creates a shared understanding of system performance across development and operations teams.

- Data-driven decision making: Observability helps businesses collect data from various sources on system performance and errors. This information is crucial for making informed decisions, enhancing system reliability and improving user experience.

- Improved security: Observability tools help DevOps teams monitor system behavior, detect vulnerabilities, and receive instant alerts for quick response. This makes observability essential for security monitoring, threat detection, and compliance with industry regulations.

- Optimized scalability and system performance: Observability data enables DevOps teams to scale applications and infrastructure more effectively, in line with customer needs. Engineers can achieve cost-effective scalability by planning for future capacity, optimizing resource allocation, and adjusting deployments based on workload trends and resource usage patterns.

Core Principles of Observability Engineering

To implement effective observability, follow these core principles:

Instrument applications comprehensively: Add logging, metrics, and tracing to your code to capture relevant data at key points. Ensure that all your microservices are instrumented to capture traces for both inbound and outbound calls. This guarantees complete visibility and comprehensive coverage across your entire system, helping you track and diagnose issues more effectively.
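As a rough illustration of instrumenting a key point in code, the sketch below wraps a function so that every call records a count, a latency sample, and a structured log line. It uses only the Python standard library. The in-memory dictionaries stand in for a real metrics backend, and the names `instrumented`, `price_lookup`, and `PRICES` are hypothetical, chosen for this example only.

```python
import functools
import json
import logging
import time
from collections import defaultdict

logger = logging.getLogger("checkout")  # hypothetical service name

# In-memory stand-ins for a real metrics backend (assumption for this sketch).
REQUEST_COUNT = defaultdict(int)
LATENCY_SECONDS = defaultdict(list)

PRICES = {"A1": 9.99}  # hypothetical data


def instrumented(operation):
    """Record a count, a latency sample, and a structured log line per call."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            outcome = "ok"
            try:
                return func(*args, **kwargs)
            except Exception:
                outcome = "error"
                raise
            finally:
                # Runs on both success and failure, so no call goes unrecorded.
                elapsed = time.perf_counter() - start
                REQUEST_COUNT[(operation, outcome)] += 1
                LATENCY_SECONDS[operation].append(elapsed)
                logger.info(json.dumps({
                    "operation": operation,
                    "outcome": outcome,
                    "duration_ms": round(elapsed * 1000, 3),
                }))
        return wrapper
    return decorator


@instrumented("price_lookup")
def price_lookup(sku):
    """Return the price for a SKU; raises KeyError for unknown SKUs."""
    return PRICES[sku]
```

In practice you would likely replace the dictionaries and the logger call with an instrumentation library such as OpenTelemetry, but the shape stays the same: measure at the boundary, and record failures as well as successes.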

Implement distributed tracing: Use unique identifiers to track requests across services, enabling end-to-end visibility into system behavior. Distributed tracing visualizes the entire request journey, helping developers pinpoint performance issues and bottlenecks in complex microservices for easier troubleshooting.

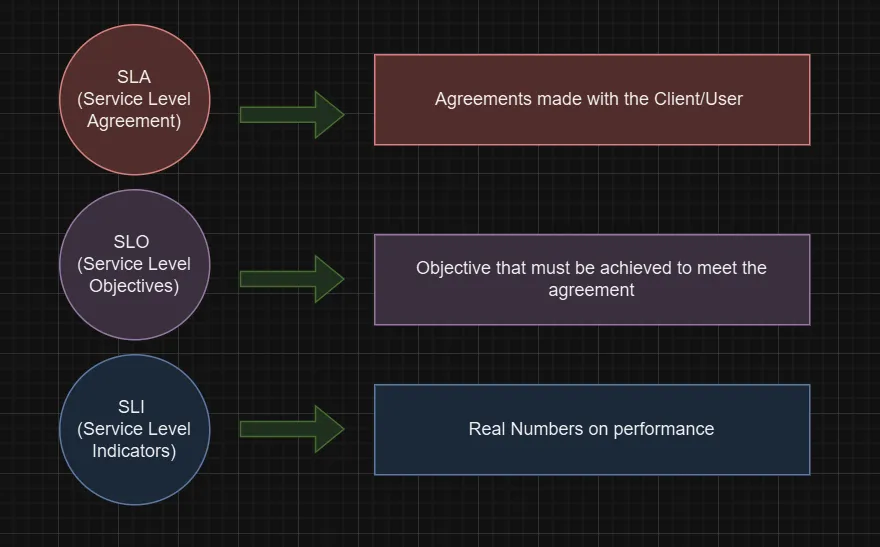
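To make the idea of unique identifiers concrete, here is a minimal sketch of trace context propagation between two services. It assumes the W3C Trace Context `traceparent` header layout (version, 32-hex trace ID, 16-hex parent span ID, 2-hex flags). The helper names `start_span` and `outgoing_headers` are hypothetical, and header lookup is simplified to an exact lowercase key. A tracing library would normally handle this for you.

```python
import re
import secrets

# version "00", 32-hex trace id, 16-hex parent span id, 2-hex flags
TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def start_span(incoming_headers):
    """Continue the caller's trace if a valid header is present, else start one."""
    match = TRACEPARENT.match(incoming_headers.get("traceparent", ""))
    if match:
        trace_id, parent_span_id = match.group(1), match.group(2)
    else:
        trace_id, parent_span_id = secrets.token_hex(16), None
    return {
        "trace_id": trace_id,
        "span_id": secrets.token_hex(8),
        "parent_span_id": parent_span_id,
    }


def outgoing_headers(span):
    """Build the header to attach to downstream calls made within this span."""
    return {"traceparent": f"00-{span['trace_id']}-{span['span_id']}-01"}


# Service A receives a request with no trace context, then calls service B.
span_a = start_span({})
span_b = start_span(outgoing_headers(span_a))
```

Both spans share one trace ID, and `span_b` records `span_a` as its parent. That parent link is what lets a tracing backend reassemble the full request journey.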

Design effective SLIs, SLOs, and SLAs: Define measurable indicators that reflect user experience and set realistic targets for system performance. The goal is to ensure that everyone, including vendors and clients, is aligned on system performance expectations. This includes how often your systems will be available, how quickly you'll respond to issues, and what speed and functionality promises you're making.
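One common way to turn an availability target into something actionable is an error budget. The sketch below is an assumption-laden example, not a prescribed method: it treats the SLI as the fraction of successful requests, and the function name `error_budget` and the sample numbers are hypothetical.

```python
def error_budget(slo_target, total_requests, failed_requests):
    """Return the measured SLI, allowed failures, and fraction of budget left."""
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be between 0 and 1 (exclusive)")
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    sli = (total_requests - failed_requests) / total_requests
    allowed_failures = (1 - slo_target) * total_requests
    # Negative values mean the budget for this period is overspent.
    budget_remaining = 1 - failed_requests / allowed_failures
    return {
        "sli": sli,
        "allowed_failures": allowed_failures,
        "budget_remaining": budget_remaining,
    }


# Hypothetical month: 99.9% target, one million requests, 400 failures.
report = error_budget(slo_target=0.999, total_requests=1_000_000, failed_requests=400)
```

With these numbers the target allows about 1,000 failed requests, so 400 failures leave roughly 60% of the budget unspent. A team might slow down risky releases as that figure approaches zero.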

Adopt a culture of observability: Encourage your team to embrace observability by integrating it into the development workflow. This means educating the team, choosing the right tools, setting clear metrics, and fostering collaboration. Observability is about understanding and troubleshooting any system state in real time, without having to define your debugging needs in advance.

Essential Tools and Technologies for Observability

A wide range of tools is available to support observability engineering:

- Open-source solutions: SigNoz, Prometheus, Grafana, Jaeger, and OpenTelemetry provide powerful, customizable observability capabilities.

- Commercial platforms: Datadog, New Relic, and Dynatrace offer comprehensive observability solutions with advanced features and integrations.

- Cloud-native options: AWS CloudWatch, Google Cloud Operations, and Azure Monitor provide observability tools that are tightly integrated with cloud platforms.

When choosing observability tools, consider factors such as:

- Integration with your existing tech stack: Configuring and maintaining data collection tools like OpenTelemetry and Fluentd can be challenging, especially in production. Instrumenting services for logs, metrics, and traces is often complex and time-consuming. To simplify this, invest in observability tools with automated service instrumentation, service discovery, and data collection. These features save time and streamline the setup process, making observability more efficient.

- Scalability and performance: Choose tools that can handle growing data volumes and complexity as your system scales. Opt for solutions with elastic scaling, efficient data processing, and high availability to maintain performance. Ensure the tool manages data traffic spikes and keeps response times fast as your infrastructure expands.

- Cost and licensing models: Observability costs can quickly spiral if you're paying to store and analyze vast amounts of useless or noisy data. Invest in observability tools with automated storage and data optimization features to help manage data volumes and control expenses. This ensures you only pay for the data that truly supports your observability needs.

- Ease of use and learning curve: Instead of spending time creating your own observability dashboards from scratch, choose tools that offer pre-built dashboards you can customize to fit your needs.

- Community support and documentation: Choose tools that have strong community backing and comprehensive documentation. A vibrant community means access to shared knowledge, troubleshooting help, and best practices from other users. Good documentation ensures you can quickly understand the tool’s features, integration processes, and troubleshooting steps.

- Anomaly detection capabilities: For organizations scaling their systems, automated anomaly detection is essential. With data pouring in from numerous components, AI and ML-powered detection can identify issues that manual review would miss. Training these algorithms on extensive datasets of normal behavior helps them spot anomalies reliably.
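To give a feel for what automated anomaly detection does, here is a deliberately simple statistical baseline: a rolling z-score check. It is not an ML model and is not how any particular tool implements detection. The function name `detect_anomalies`, the window size, the threshold, and the sample latencies are all assumptions for this sketch.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(values, window=5, threshold=3.0):
    """Return indices of values far from the mean of the preceding window.

    A value is flagged when it lies more than `threshold` standard
    deviations from the mean of the previous `window` values.
    """
    recent = deque(maxlen=window)
    anomalies = []
    for index, value in enumerate(values):
        if len(recent) == window:
            mu, sigma = mean(recent), pstdev(recent)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                anomalies.append(index)
        recent.append(value)
    return anomalies


# Hypothetical latency samples in milliseconds, with one obvious spike.
latencies_ms = [100, 102, 98, 101, 99, 100, 103, 450, 101, 99]
flagged = detect_anomalies(latencies_ms)
```

Here only the 450 ms sample (index 7) is flagged. Note one limitation of this baseline: the spike then enters the window and inflates the standard deviation, which can mask follow-up anomalies. Handling that kind of drift is part of what more sophisticated detectors are for.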

Observability integrates monitoring, visibility, and automation to assess system state and surface actionable insights quickly, work that would be time-consuming to do manually. AI enhances this by adding real-time, adaptive analysis for better decision-making. With AISecOps, organizations can analyze large volumes of data to understand system activity and its causes. Converging observability and cybersecurity into a single tool also reduces data silos and tool sprawl, offering fuller context without significant added cost.

Implementing Observability in Your DevOps Pipeline

Follow these steps to introduce observability practices into your DevOps workflow:

- Assess your current state: Evaluate your existing monitoring and troubleshooting capabilities to identify gaps.

- Choose your tools: Select observability tools that align with your technology stack and team skills.

- Instrument your applications: Add logging, metrics, and tracing to your code using standardized libraries or agents.

- Configure data collection: Set up your chosen tools to collect and store observability data from your applications and infrastructure.

- Create dashboards and alerts: Design visualizations and alerting rules to surface important insights and anomalies.

- Train your team: Ensure all team members understand how to use observability tools and interpret the data they provide.

- Iterate and improve: Continuously refine your observability practices based on feedback and changing system requirements.
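For the alerting step above, a frequent source of noise is a rule that fires on a single bad sample. The sketch below shows one hedged way to express "alert only when the condition persists". The function name `should_alert`, the 5% threshold, and the three-sample requirement are hypothetical defaults, not recommendations.

```python
def should_alert(error_rates, threshold=0.05, consecutive=3):
    """Fire only if the most recent `consecutive` samples all exceed `threshold`."""
    if len(error_rates) < consecutive:
        return False
    return all(rate > threshold for rate in error_rates[-consecutive:])


# A single spike does not fire; a sustained breach does.
spike = should_alert([0.01, 0.09, 0.02])
sustained = should_alert([0.01, 0.06, 0.07, 0.08])
```

Most alerting systems offer an equivalent setting for how long a condition must hold before notifying anyone, so you would usually configure this rather than code it yourself.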

Leveraging SigNoz for Comprehensive Observability

SigNoz is an open-source observability platform that provides a powerful alternative to commercial solutions. Key features of SigNoz include:

- Distributed tracing with OpenTelemetry

- Custom dashboards and alerting

- Log management and analysis

- Infrastructure monitoring

SigNoz Cloud is the easiest way to run SigNoz. Sign up for a free account and get 30 days of unlimited access to all features.

You can also install and self-host SigNoz yourself since it is open-source. With 24,000+ GitHub stars, open-source SigNoz is loved by developers. Find the instructions to self-host SigNoz.

Optimizing Observability

The following best practices help any organization get more value from observability engineering:

- Set your goals: Ask yourself what you want to achieve and how you’ll measure success. Identify specific objectives and key performance indicators (KPIs) that align with your business aims to guide your practice and track progress.

- Map observability goals with business objectives: Use the data you collect to boost application performance, manage resources better, and speed up time-to-market, making sure your goals fit with the bigger picture of your business.

- Embed observability into the entire SDLC: From the start, make observability a part of every stage (development, testing, and deployment) to ensure you have full visibility and transparency throughout the process.

- Monitor and review: Keep your metrics meaningful by customizing dashboards, setting sensible alert thresholds, and using tracing, logs, and user feedback. Adjust your metrics as needed to stay aligned with your goals. Make sure the data updates in real time and regularly tweak your dashboards based on feedback to keep them effective for decision-making.

- Team Training: Make sure your team is up to date with observability tools and practices. Ongoing training and sharing knowledge are crucial, as the field of observability is always evolving.

Challenges in Observability

While implementing observability in DevOps, we often find that with great visibility come great challenges, such as:

- CI/CD Performance Issues: A poorly implemented CI/CD pipeline can lead to issues like slow load times, delayed server responses, and inefficient memory use, all harming application performance. This can increase bounce rates and decrease user engagement. To improve UX, use tools that offer real-time web performance testing and grade performance based on key metrics.

- Version Control in Test Automation: DevOps relies on version control to maintain stability, but unexpected updates or changes can break the pipeline due to compatibility issues, particularly during automated updates. Additionally, inadequate automated tests can disrupt Continuous Automation. To prevent issues, consider halting auto-updates to ensure that unstable versions aren’t integrated without manual review.

- Security Issues: Security vulnerabilities in the DevOps pipeline can lead to cyber-attacks and compromise sensitive data. Implement a robust monitoring system for quick threat detection and resolution. Lock parts of the pipeline where irregularities are found and mitigate risks by limiting sensitive information in code and using code analysis tools.

- Scalability of Test Infrastructure: A key challenge in Continuous Testing is scaling operations to handle growing data volumes and diverse devices. To tackle this, use scalable testing frameworks and tools that manage increased data and device variety. Cloud-based testing environments and automation can further enhance efficiency and expand capabilities.

Advanced Observability Techniques

As you mature your observability practices, consider these advanced techniques:

- Chaos engineering: Deliberately introduce failures to test and improve system resilience. By simulating various types of disruptions, such as network outages, server crashes, or high-load scenarios, you can identify weaknesses and ensure that your system can handle unexpected conditions.

- Machine learning for anomaly detection: AI algorithms analyze large volumes of observability data and identify anomalies that might not be evident through traditional methods. Algorithms such as Recurrent Convolutional Neural Networks (RCNN) and Long Short-Term Memory (LSTM) can detect unusual patterns, predict potential issues before they occur, and automatically alert users to emerging problems.

- Continuous verification: Incorporate observability checks into your CI/CD pipeline to catch issues before deployment. You can also integrate automated monitoring and logging during the build and deployment stages for detecting anomalies and performance regressions before they reach production. This practice not only improves the reliability of deployments but also accelerates the feedback loop, allowing for quicker iterations and higher-quality software releases.

- Business KPI correlation: Link technical metrics like response times and error rates to business outcomes such as customer satisfaction and revenue. This helps you understand how technical issues affect your bottom line, prioritize improvements based on business impact, and align technical operations with strategic goals. For example, if high error rates correlate with lower user conversion rates, focus on resolving those errors to boost user experience and drive better results.

- OpenTelemetry: This open-source framework provides a standard way to instrument your code and collect observability data across diverse systems and services. OpenTelemetry simplifies integration by standardizing data collection, improves consistency, and enhances the ability to correlate technical metrics with business KPIs, ultimately providing more actionable insights into system performance and impact.
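The business KPI correlation idea above can be sketched with a plain Pearson correlation between a technical metric and a business metric. The data below is invented purely for illustration, and the function name `pearson` is this example's own. Keep in mind that a strong correlation suggests where to look but does not prove cause.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return covariance / sqrt(var_x * var_y)


# Hypothetical daily figures: error rate (%) and checkout conversion (%).
error_rate_pct = [0.5, 1.0, 2.0, 4.0, 8.0]
conversion_pct = [3.2, 3.1, 2.9, 2.4, 1.6]
r = pearson(error_rate_pct, conversion_pct)
```

For this made-up data `r` is strongly negative, which is the kind of signal that would justify prioritizing error reduction over other work.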

Measuring the Success of Your Observability Strategy

To ensure your observability efforts are effective, track these key performance indicators (KPIs):

- Reduction in Mean Time to Detection (MTTD) and Mean Time to Resolution (MTTR) for incidents

- Improvement in system uptime and reliability

- Decrease in customer-reported issues

- Increase in proactively identified and resolved problems

- Developer productivity gains in debugging and troubleshooting

Regularly assess your observability maturity using frameworks like the Observability Maturity Model to identify areas for improvement. By systematically reviewing data collection, analysis, and incident response, you can pinpoint gaps, track progress over time, and adjust strategies to enhance overall effectiveness.
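Tracking MTTD and MTTR only requires three timestamps per incident. The sketch below assumes MTTR is measured from the start of the incident to its resolution (some teams measure from detection instead). The incident records and the helper name `mean_duration` are hypothetical.

```python
from datetime import datetime, timedelta


def mean_duration(incidents, start_key, end_key):
    """Average time between two timestamps across a list of incidents."""
    if not incidents:
        raise ValueError("no incidents to average")
    durations = [incident[end_key] - incident[start_key] for incident in incidents]
    return sum(durations, timedelta()) / len(durations)


# Hypothetical incident log.
incidents = [
    {
        "started": datetime(2024, 1, 5, 10, 0),
        "detected": datetime(2024, 1, 5, 10, 12),
        "resolved": datetime(2024, 1, 5, 11, 0),
    },
    {
        "started": datetime(2024, 2, 9, 14, 0),
        "detected": datetime(2024, 2, 9, 14, 4),
        "resolved": datetime(2024, 2, 9, 14, 30),
    },
]

mttd = mean_duration(incidents, "started", "detected")
mttr = mean_duration(incidents, "started", "resolved")
```

For these two incidents MTTD works out to 8 minutes and MTTR to 45 minutes. Comparing the same figures quarter over quarter is what shows whether your observability investment is paying off.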

Key Takeaways

- Observability engineering is essential for understanding and optimizing complex, distributed systems.

- The three pillars of observability (logs, metrics, and traces) provide a comprehensive view of system behavior.

- Implementing observability requires a combination of cultural shifts, tooling, and best practices.

- Effective observability leads to improved system reliability, faster incident resolution, and data-driven decision-making.

- Continuous improvement and adaptation of observability practices are crucial in the ever-evolving DevOps landscape.

FAQs

What's the difference between monitoring and observability?

Monitoring focuses on tracking predefined metrics and alerting on known issues. Observability goes beyond monitoring by allowing you to explore and understand system behavior without having to define every question in advance.

How does observability engineering improve DevOps practices?

Observability engineering enhances DevOps by providing deeper insights into system behavior, enabling faster troubleshooting, promoting collaboration between development and operations teams, and supporting data-driven decision-making.

What are the main challenges in implementing observability?

Common challenges include:

- Dealing with data volume and storage costs

- Ensuring consistent instrumentation across services

- Managing tool complexity and integration

- Fostering a culture of observability across the organization

How can small teams get started with observability engineering?

Small teams can start by:

- Focusing on one pillar of observability (e.g., logging or metrics)

- Using open-source tools to minimize costs

- Instrumenting critical services first

- Gradually expanding observability practices as the team gains experience